BeyondFederated - truly decentralised learning at the edge #7254

Comments

|

ToDo: register https://mare.ewi.tudelft.nl/project Latest work by TUDelft: MoDeST: Bridging the Gap between Federated and Decentralized Learning with Decentralized Sampling |

|

To create a suggestion model with neural hashes using metadata as input to find songs in Creative Commons BitTorrent swarms:

Optionally, improve the model over time: track which songs users actually download or listen to, and use this data to train the model to improve its suggestions. |

|

Proposal: dedicate a sprint to implementing a basic search engine.

|

|

https://colab.research.google.com/drive/1j_voFtr6j0gEStsMfcafi9FV5XJOLxjj?usp=sharing

query: ['electronic'] similarity score: 0.3406708597897247 |

|

|

APK including:

wetransfer link (118mb) https://we.tl/t-pnugzyNiRV |

|

Question: how impressed/intimidated/confused are you about recent ML/LLM/Diffusion explosion?

|

|

I have not found any resources that do not rely on a central server. Do any exist?

{ |

|

|

Ideal sprint outcome for 15 Aug: an operational PeerAI .APK with a minimal viable "TFLite Searcher model" with PandaCD and FMA. Focus: genre similarity. Adding new items, fine-tuning, and exchanging models are still out of scope. Let's get this operational first! |

|

Modelling the "TFLite Searcher model" on a subset of the dataset, using only: Title, Artist, Genre/Tag, Album.

Training goal: create vectors such that items with similar attributes have smaller distances.
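To make the training goal concrete, here is a minimal sketch of "similar attributes give smaller distances". It is not the TFLite Searcher model: the bag-of-words `embed` function is a toy stand-in for a learned embedder, and the three tracks are invented purely for illustration.

```python
import math
from collections import Counter

# Metadata fields named in the text above.
FIELDS = ("title", "artist", "genre", "album")


def embed(item):
    """Toy bag-of-words vector; a stand-in (assumption) for a learned embedder."""
    tokens = []
    for field in FIELDS:
        tokens.extend(item.get(field, "").lower().split())
    return Counter(tokens)


def cosine_distance(a, b):
    """1 - cosine similarity between two sparse token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


# Hypothetical tracks, invented for this example only.
track_a = {"title": "Night Drive", "artist": "Alpha", "genre": "electronic", "album": "City Lights"}
track_b = {"title": "Neon Rain", "artist": "Beta", "genre": "electronic", "album": "City Lights"}
track_c = {"title": "Barn Dance", "artist": "Gamma", "genre": "folk", "album": "Harvest"}

d_ab = cosine_distance(embed(track_a), embed(track_b))  # shared genre and album
d_ac = cosine_distance(embed(track_a), embed(track_c))  # nothing shared
```

A trained embedder would replace `embed`; the distance comparison stays the same.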

Porting everything into Kotlin.

|

|

|

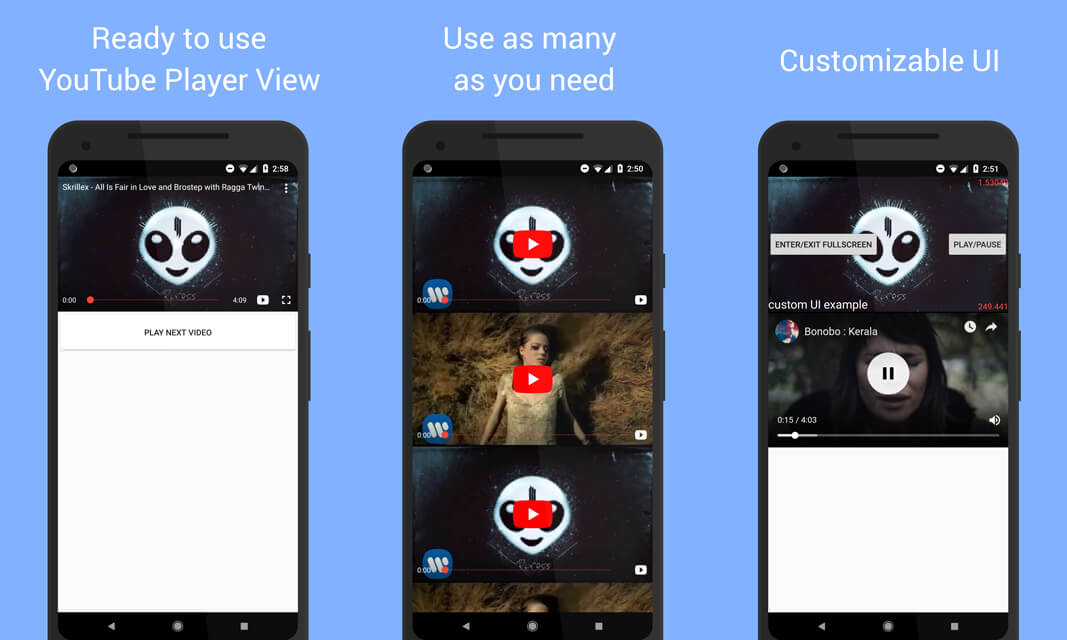

Please make sure to cite this work in your thesis: AI Benchmark: All About Deep Learning on Smartphones in 2019. The website of ETH-Z on-device AI benchmarking includes S23 results. UPDATE: YouTube contains more content than FMA and PandaCD. Great datasets exist. See the YouTube player: you could connect your thesis focus of BeyondFederated content search to actual playable content. |

|

Neural Instant Search for Music and Podcast. Finished the model design: the final .tflite model will consist of an embedder model and a ScaNN layer.

The model output consists of the closest neighbors, including all their metadata from the dataset. However, I am currently stuck implementing the TFLite Model Maker library for on-device ML applications while creating the first version of the model. Goal: self-learned semantic network with 100k items. Todo: mention this research in the paper: https://arxiv.org/abs/1908.10396 |

|

TFLite Model Maker: was not able to build tflite_model_maker because many dependencies were conflicting. Resolved with a custom Dockerfile with manual build steps including other libraries. (also repo update since 2 weeks) Model Image

Key decision on learning: determine what exactly is learned by connected clients (a rebuilt custom index vs. clicklog gradients/recommendations within search, i.e. items ranked higher based on the clicklog, a.k.a. popular audio ranked higher). Decentralized learning todo;

|

|

Goal for upcoming days: Scale Scalable Nearest Neighbors.

|

|

Realizing this will be a big contribution towards Tflite-support

fat Android build for multiple architectures: x86,x86_64,arm64-v8a,armeabi-v7a |

|

|

Extending TFLite Support with custom API calls (On-Device Scann C++).

Currently focusing on training ScaNN. A single-layer K-Means tree is used to partition the database (index), which I am now able to modify. The model is trained to form partition centroids (as a way to reduce the search space). In the current setup, new entries are pushed into the vector space, but determining which partition they should land in (the one with the closest centroid) is hard. Job to be done: rebuilding partitions. INDEX_FILE For a dataset of N items there should be around SQRT(N) partitions to optimize performance. No train method is exposed in the current model setup, so another API call needs to be exposed; Either
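The partitioning step can be sketched in plain Python. This is only an illustration of the idea (k-means centroids, with roughly sqrt(N) partitions), not ScaNN's actual implementation or API; the function names and the random 2-D vectors are assumptions made for the example.

```python
import math
import random


def num_partitions(n_items):
    """Rule of thumb from the text: roughly sqrt(N) partitions."""
    return max(1, round(math.sqrt(n_items)))


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(centroids, vector):
    """Index of the centroid closest to the vector."""
    return min(range(len(centroids)), key=lambda i: squared_distance(centroids[i], vector))


def train_centroids(vectors, k, iterations=10, seed=0):
    """Plain Lloyd's k-means; returns k partition centroids."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iterations):
        buckets = [[] for _ in range(k)]
        for v in vectors:
            buckets[nearest(centroids, v)].append(v)
        for i, bucket in enumerate(buckets):
            if bucket:  # keep the old centroid if a partition ends up empty
                centroids[i] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids


# Hypothetical data: 400 random 2-D "embeddings".
rng = random.Random(42)
vectors = [[rng.random(), rng.random()] for _ in range(400)]
k = num_partitions(len(vectors))
centroids = train_centroids(vectors, k)
```

Rebuilding partitions on-device amounts to re-running something like `train_centroids` over all stored embeddings, which is the step the text identifies as expensive.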

Non-perfect insert works until approximately 100k items, where new embeddings are inserted into the partition with the closest centroid. Older nearest neighbor paper by Google. In case of an on-device limitation on recreating the whole index including the new partitions and centroids, an interesting research direction is Fast Distributed k-Means with a Small Number of Rounds.

Research question shifts towards: "How can the efficiency and effectiveness of ScaNN be enhanced through novel strategies for dynamically adding entries, specifically focusing on the adaptive generation of K-Means tree partitions to accommodate evolving datasets while maintaining optimal search performance?" This research question addresses the challenge of adapting ScaNN, a scalable nearest neighbors search algorithm, to handle dynamic datasets. The focus is on developing innovative approaches for adding new entries in a way that optimizes the generation of K-Means tree partitions, ensuring efficient search operations as the dataset evolves. "Evolving datasets" are key in a fully decentralized (on-device) vector space: there is no central entity to re-calculate all the necessary partitioning/indexing.

TODO for next sprint: focus on frozen centroids and imperfect inserts. Keep it simple!

Also implement a recommendation model. The main objective of this model is to efficiently weed out all candidates that the user is not interested in. In TensorFlow Recommenders, both components can be packaged into a single exportable model, giving us a model that takes the raw user id and returns the titles of top entries for that user. For searching, querying the vector space with a given query will retrieve the top-k results. We then train our loss function based on: {Query, Youtube-clicked-URL, Youtube-clicked-title, Youtube-clicked-views, Youtube-NOT-clicked-URL, date, shadow-signature} |

update: idea for experimental results. Show exactly how insert/lookup starts to degrade as you insert 100k or 10 million items. Do clusters become unbalanced, too big, too distorted from their centroid? |
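The "frozen centroids and imperfect inserts" idea can be sketched as follows. This is a simplified illustration, not the ScaNN or TFLite Support code: the class name `FrozenCentroidIndex`, its methods, and the 2-D vectors are all hypothetical.

```python
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


class FrozenCentroidIndex:
    """Partitioned index whose centroids are fixed after construction."""

    def __init__(self, centroids):
        self.centroids = [tuple(c) for c in centroids]  # never re-trained
        self.partitions = [[] for _ in self.centroids]

    def _ranked_partitions(self, vector):
        # Partition ids ordered by centroid distance, closest first.
        return sorted(range(len(self.centroids)),
                      key=lambda i: sq_dist(self.centroids[i], vector))

    def insert(self, item_id, vector):
        """Imperfect insert: append to the closest partition, no rebalancing."""
        pid = self._ranked_partitions(vector)[0]
        self.partitions[pid].append((item_id, tuple(vector)))
        return pid

    def search(self, query, top_k=5, partitions_to_probe=1):
        """Scan only the closest partitions, then rank candidates exactly."""
        candidates = []
        for pid in self._ranked_partitions(query)[:partitions_to_probe]:
            candidates.extend(self.partitions[pid])
        candidates.sort(key=lambda entry: sq_dist(entry[1], query))
        return [item_id for item_id, _ in candidates[:top_k]]


index = FrozenCentroidIndex([(0.0, 0.0), (10.0, 10.0)])
pid_a = index.insert("a", (1.0, 1.0))
pid_b = index.insert("b", (9.0, 9.0))
pid_c = index.insert("c", (0.5, 0.0))
results = index.search((0.0, 0.0), top_k=2)
```

Because the centroids never move, inserts stay cheap, but partitions can drift away from the data they hold as more items arrive; that drift is the degradation the planned experiments would need to measure.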
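One possible way to quantify this degradation is to track partition sizes and the mean distance to the centroid as items are inserted. The sketch below is an assumption about how such a measurement could look; the `partition_report` helper and the sample vectors are hypothetical and not part of any existing library.

```python
import math


def partition_report(centroids, vectors):
    """Assign each vector to its closest centroid and summarise the partitions."""
    sizes = [0] * len(centroids)
    total_dist = [0.0] * len(centroids)
    for v in vectors:
        dists = [math.dist(c, v) for c in centroids]
        pid = dists.index(min(dists))
        sizes[pid] += 1
        total_dist[pid] += dists[pid]
    mean_size = len(vectors) / len(centroids)
    return {
        "sizes": sizes,
        # 1.0 means perfectly balanced; larger means one partition dominates.
        "imbalance": max(sizes) / mean_size if vectors else 0.0,
        # Average distance from members to their centroid, per partition.
        "mean_distance": [t / s if s else 0.0 for t, s in zip(total_dist, sizes)],
    }


# Hypothetical skewed inserts: most new items land near the first centroid.
report = partition_report(
    centroids=[(0.0, 0.0), (10.0, 10.0)],
    vectors=[(0.0, 1.0), (1.0, 0.0), (0.0, 0.0), (10.0, 10.0)],
)
```

Plotting `imbalance` and `mean_distance` against the number of inserted items would show where lookups start to suffer.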

Goal:

Progressing slowly due to complexity (it is not just appending a new item to the partition array), plus C++ and a tough development environment. YouTube: iterate through the music category. Analysis of a dataset of millions of songs (150 MB? -> device ready!) |

|

|

Target of past weeks (including some time off on holiday): non-perfect insert

Only 2.4 MB for a trained cluster config of 20K items!! Seeing valuable possibilities here, such as sharing configs with peers?? Dynamic/sharable vector spaces in a distributed context. Self-learned, or also keep sharing configs.

- [x] Searching still works under the new custom-built library.
- [x] Gossip of new items/clicklog is also possible.

Different encoder layers are possible within the on-device model. The current implementation uses embeddings based on the Universal Sentence Encoder, meaning encodings are distributed based on semantics but not typos! Meaning |

|

Potential extended gossip design: JSON gossip replaced by gossiped C++ vectors/embeddings?? Experiment on a large TikTok dataset -> https://developers.tiktok.com/products/research-api/ |

|

Results of top 5 items:

|

update: fun fact, Deepmind also uses the library you use 😄 Improving language models by retrieving from trillions of tokens |

|

|

|

|

|

|

Search term -> Query:

|

|

Started full-time thesis around April/May 2023.

Track DST, Q3/4 start. Still "seminar course" ToDo. Has superapp/MusicDAO experience. Discussed topics as diverse as the digital Euro and a Web3 search engine (unsupervised learning, online learning, adversarial, byzantine, decentralised, personalised, local-first AI, edge-devices only, low-power hardware accelerated, and self-governance).

Done the Machine Learning I class. (Background: Samsung solution, ONE (On-device Neural Engine): a high-performance, on-device neural network inference framework.) Recommendation or semantic search? Alternative direction. Some overlap with the G-Rank follow-up project. Essential problem to solve: learning valid Creative Commons BitTorrent swarms.

Second sprint (strictly exploratory):

Doing the Information Retrieval MSc course to prepare for this thesis

Literature survey initial idea: "nobody is doing autonomous AI" {unsupervised learning, online learning, adversarial, byzantine, decentralised, personalised, local-first AI, edge-devices only, low-power hardware accelerated, and self-governance}.
