Documentation

The following API documentation of OSPRay can also be found as a pdf document.

For a deeper explanation of the concepts, design, features and performance of OSPRay also have a look at the IEEE Vis 2016 paper “OSPRay – A CPU Ray Tracing Framework for Scientific Visualization” (49MB, or get the smaller version 1.8MB). The slides of the talk (5.2MB) are also available.

OSPRay API

To access the OSPRay API you first need to include the OSPRay header

    #include "ospray/ospray.h"

where the API is compatible with C99 and C++.

Initialization and Shutdown

To use the API, OSPRay must be initialized with a “device”. A device

is the object which implements the API. Creating and initializing a

device can be done in either of two ways: command line arguments using

ospInit or manually instantiating a device and setting

parameters on it.

Command Line Arguments

The first method is to pass OSPRay the command line from main() by calling

    OSPError ospInit(int *argc, const char **argv);

OSPRay parses (and removes) its known command line parameters from your application’s main function. For an example see the tutorial. For possible error codes see section Error Handling and Status Messages. It is important to note that the arguments passed to ospInit are processed in the order they are listed.

The following parameters (which are prefixed by convention with

“--osp:”) are understood:

| Parameter | Description |
|---|---|
| --osp:debug | enables various extra checks and debug output, and disables multi-threading |
| --osp:num-threads=<n> | use n threads instead of the default of using all detected hardware threads |
| --osp:log-level=<str> | set logging level; valid values (in order of severity) are none, error, warning, info, and debug |
| --osp:warn-as-error | send warning and error messages through the error callback, otherwise send warning messages through the message callback; must have sufficient logLevel to enable warnings |
| --osp:verbose | shortcut for --osp:log-level=info and enable debug output on cout, error output on cerr |
| --osp:vv | shortcut for --osp:log-level=debug and enable debug output on cout, error output on cerr |
| --osp:load-modules=<name>[,...] | load one or more modules during initialization; equivalent to calling ospLoadModule(name) |
| --osp:log-output=<dst> | convenience for setting where status messages go; valid values for dst are cerr and cout |
| --osp:error-output=<dst> | convenience for setting where error messages go; valid values for dst are cerr and cout |
| --osp:device=<name> | use name as the type of device for OSPRay to create; e.g., --osp:device=cpu gives you the default cpu device; note that if the device to be used is defined in a module, remember to pass --osp:load-modules=<name> first |
| --osp:set-affinity=<n> | if 1, bind software threads to hardware threads; 0 disables binding; default is 0 |
| --osp:device-params=<param>:<value>[,...] | set one or more other device parameters; equivalent to calling ospDeviceSet*(param, value) |
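The "parses (and removes)" behavior described for ospInit can be pictured with a small, self-contained sketch. The helper stripOspArgs below is hypothetical and not part of OSPRay. It also simplifies matters by removing every argument that starts with "--osp:", whereas OSPRay only removes the parameters it knows.

```cpp
#include <cassert>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical helper (NOT an OSPRay function): moves every argument that
// starts with "--osp:" out of argv, compacts the remaining arguments in
// place, and updates *argc. Returns the removed arguments in their
// original order.
std::vector<std::string> stripOspArgs(int *argc, const char **argv)
{
  static const char prefix[] = "--osp:";
  std::vector<std::string> removed;
  int kept = (*argc > 0) ? 1 : 0; // argv[0] (program name) is always kept
  for (int i = 1; i < *argc; ++i) {
    if (std::strncmp(argv[i], prefix, sizeof(prefix) - 1) == 0)
      removed.push_back(argv[i]); // consumed by the "library"
    else
      argv[kept++] = argv[i]; // left for the application
  }
  *argc = kept;
  return removed;
}
```

After such a call the application sees only its own arguments, which is why ospInit takes argc by pointer.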

Manual Device Instantiation

The second method of initialization is to explicitly create the device and possibly set parameters. This method looks almost identical to how other objects are created and used by OSPRay (described in later sections). The first step is to create the device with

    OSPDevice ospNewDevice(const char *type);

where the type string maps to a specific device implementation. OSPRay always provides the “cpu” device, which maps to a fast, local CPU implementation. Other devices can also be added through additional modules, such as distributed MPI device implementations. See the next chapter for details.

Once a device is created, you can call

    void ospDeviceSetParam(OSPObject, const char *id, OSPDataType type, const void *mem);

to set parameters on the device. The semantics of setting parameters are exactly the same as for ospSetParam, which is documented below in the parameters section. The following parameters can be set on all devices:

| Type | Name | Description |
|---|---|---|
| int | numThreads | number of threads which OSPRay should use |
| bool | disableMipMapGeneration | disable the default generation of MIP maps for textures (e.g., to save the additional memory needed) |
| uint | logLevel | logging level; valid values (in order of severity) are OSP_LOG_NONE, OSP_LOG_ERROR, OSP_LOG_WARNING, OSP_LOG_INFO, and OSP_LOG_DEBUG |
| string | logOutput | convenience for setting where status messages go; valid values are cerr and cout |
| string | errorOutput | convenience for setting where error messages go; valid values are cerr and cout |
| bool | debug | set debug mode; equivalent to logLevel=debug and numThreads=1 |
| bool | warnAsError | send warning and error messages through the error callback, otherwise send warning messages through the message callback; must have sufficient logLevel to enable warnings |
| bool | setAffinity | bind software threads to hardware threads if set to 1; 0 disables binding; omitting the parameter will let OSPRay choose |

Once parameters are set on the created device, the device must be committed with

    void ospDeviceCommit(OSPDevice);

To use the newly committed device, you must call

    void ospSetCurrentDevice(OSPDevice);

This then sets the given device as the object which will respond to all other OSPRay API calls.

Device handle lifetimes are managed with two calls, the first of which increments the internal reference count of the given OSPDevice

    void ospDeviceRetain(OSPDevice);

and the second of which decrements the reference count

    void ospDeviceRelease(OSPDevice);

Users can change parameters on the device after initialization (from either method above), by calling

    OSPDevice ospGetCurrentDevice();

This function returns the handle to the device currently used to respond to OSPRay API calls, where users can set/change parameters and recommit the device. If changes are made to the device that is already set as the current device, it does not need to be set as current again. Note that this API call will increment the reference count of the returned device handle, so applications must use ospDeviceRelease when finished using the handle to avoid leaking the underlying device object. If there is no current device set, this will return an invalid NULL handle.

When a device is created, its reference count is initially 1. When a device is set as the current device, its reference count is incremented internally. Note that ospDeviceRetain and ospDeviceRelease should only be used with reference counts that the application tracks: removing the reference held by the currently set device should be handled by ospShutdown. Thus, ospDeviceRelease should only decrement the reference counts that come from ospNewDevice, ospGetCurrentDevice, and the number of explicit calls to ospDeviceRetain.

OSPRay allows applications to query runtime properties of a device in order to do enhanced validation of what device was loaded at runtime. The following function can be used to get these device-specific properties (attributes about the device, not parameter values)

    int64_t ospDeviceGetProperty(OSPDevice, OSPDeviceProperty);

It returns an integer value of the queried property. The following properties can be provided as parameter:

- OSP_DEVICE_VERSION
- OSP_DEVICE_VERSION_MAJOR
- OSP_DEVICE_VERSION_MINOR
- OSP_DEVICE_VERSION_PATCH
- OSP_DEVICE_SO_VERSION

Environment Variables

OSPRay’s generic device parameters can be overridden via environment

variables for easy changes to OSPRay’s behavior without needing to

change the application (variables are prefixed by convention with

“OSPRAY_”):

| Variable | Description |
|---|---|
| OSPRAY_NUM_THREADS | equivalent to --osp:num-threads |
| OSPRAY_LOG_LEVEL | equivalent to --osp:log-level |
| OSPRAY_LOG_OUTPUT | equivalent to --osp:log-output |
| OSPRAY_ERROR_OUTPUT | equivalent to --osp:error-output |
| OSPRAY_DEBUG | equivalent to --osp:debug |
| OSPRAY_WARN_AS_ERROR | equivalent to --osp:warn-as-error |
| OSPRAY_SET_AFFINITY | equivalent to --osp:set-affinity |
| OSPRAY_LOAD_MODULES | equivalent to --osp:load-modules, can be a comma separated list of modules which will be loaded in order |
| OSPRAY_DEVICE | equivalent to --osp:device |

Note that these environment variables take precedence over values

specified through ospInit or manually set device

parameters.

Error Handling and Status Messages

The following errors are currently used by OSPRay:

| Name | Description |

|---|---|

| OSP_NO_ERROR | no error occurred |

| OSP_UNKNOWN_ERROR | an unknown error occurred |

| OSP_INVALID_ARGUMENT | an invalid argument was specified |

| OSP_INVALID_OPERATION | the operation is not allowed for the specified object |

| OSP_OUT_OF_MEMORY | there is not enough memory to execute the command |

| OSP_UNSUPPORTED_CPU | the CPU is not supported (minimum ISA is SSE4.1 on x86_64 and NEON on ARM64) |

| OSP_VERSION_MISMATCH | a module could not be loaded due to mismatching version |

These error codes are either directly returned by some API functions, or are recorded to be later queried by the application via

    OSPError ospDeviceGetLastErrorCode(OSPDevice);

A more descriptive error message can be queried by calling

    const char* ospDeviceGetLastErrorMsg(OSPDevice);

Alternatively, the application can also register a callback function of type

    typedef void (*OSPErrorCallback)(void *userData, OSPError, const char* errorDetails);

via

    void ospDeviceSetErrorCallback(OSPDevice, OSPErrorCallback, void *userData);

to get notified when errors occur.

Applications may be interested in messages which OSPRay emits, whether for debugging or logging events. Applications can call

    void ospDeviceSetStatusCallback(OSPDevice, OSPStatusCallback, void *userData);

in order to register a callback function of type

    typedef void (*OSPStatusCallback)(void *userData, const char* messageText);

which OSPRay will use to emit status messages. By default, OSPRay uses a callback which does nothing, so any output desired by an application will require that a callback is provided. Note that callbacks for C++ std::cout and std::cerr can alternatively be set through ospInit or the OSPRAY_LOG_OUTPUT environment variable.

Applications can clear either callback by passing NULL

instead of an actual function pointer.

Loading OSPRay Extensions at Runtime

OSPRay’s functionality can be extended via plugins (which we call “modules”), which are implemented in shared libraries. To load module name from libospray_module_<name>.so (on Linux and Mac OS X) or ospray_module_<name>.dll (on Windows) use

    OSPError ospLoadModule(const char *name);

Modules are searched in OS-dependent paths. ospLoadModule returns OSP_NO_ERROR if the plugin could be successfully loaded.

Shutting Down OSPRay

When the application is finished using OSPRay (typically on application exit), the OSPRay API should be finalized with

    void ospShutdown();

This API call ensures that the current device is cleaned up appropriately. Due to static object allocation having non-deterministic ordering, it is recommended that applications call ospShutdown before the calling application process terminates.

Objects

All entities of OSPRay (the renderer, volumes, geometries, lights, cameras, …) are a logical specialization of OSPObject and share a common mechanism to deal with parameters and lifetime.

An important aspect of object parameters is that parameters do not get passed to objects immediately. Instead, parameters are not visible at all to objects until they get explicitly committed to a given object via a call to

    void ospCommit(OSPObject);

at which time all previous additions or changes to parameters become visible at the same time. If a user wants to change the state of an existing object (e.g., to change the origin of an already existing camera) it is perfectly valid to do so, as long as the changed parameters are recommitted.

The commit semantics allow for batching up multiple small changes, and specify exactly when changes to objects will occur. This can impact performance and consistency for devices crossing a PCI bus or across a network.
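The staged-then-committed behavior can be illustrated with a toy model. The class StagedObject below is purely hypothetical: it is not OSPRay code and says nothing about how OSPRay stores parameters internally. It only mimics the visibility rule that parameters take effect at commit time.

```cpp
#include <cassert>
#include <map>
#include <string>

// Toy model of commit semantics (an illustration, NOT OSPRay's
// implementation): parameters are staged first and only become visible
// to the object once commit() is called.
class StagedObject
{
 public:
  // Stage a parameter; the object does not see it yet.
  void setParam(const std::string &id, int value)
  {
    staged_[id] = value;
  }

  // Make all staged additions and changes visible at the same time.
  void commit()
  {
    committed_ = staged_;
  }

  // Value the object currently "sees"; 'fallback' stands in for a default.
  int visible(const std::string &id, int fallback) const
  {
    const auto it = committed_.find(id);
    return it == committed_.end() ? fallback : it->second;
  }

 private:
  std::map<std::string, int> staged_;
  std::map<std::string, int> committed_;
};
```

In this model several setParam calls followed by a single commit change the visible state once, which mirrors the batching benefit mentioned above.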

Note that OSPRay uses reference counting to manage the lifetime of all objects, so one cannot explicitly “delete” any object. Instead, to indicate that the application does not need and does not access the given object anymore, call

    void ospRelease(OSPObject);

This decreases its reference count and if the count reaches 0 the object will automatically get deleted. Passing NULL is not an error. Note that every handle returned via the API needs to be released when the object is no longer needed, to avoid memory leaks.

Sometimes applications may want to have more than one reference to an object, where it is desirable for the application to increment the reference count of an object. This is done with

    void ospRetain(OSPObject);

It is important to note that this is only necessary if the application wants to call ospRelease on an object more than once: objects which contain other objects as parameters increment/decrement reference counts internally, so the application should not do this explicitly.

Parameters

Parameters allow configuring the behavior of objects and passing data to them. However, objects do not have an explicit interface for reasons of high flexibility and a more stable compile-time API. Instead, parameters are passed separately to objects in an arbitrary order, and unknown parameters will simply be ignored (though a warning message will be posted). The following function allows adding various types of parameters with name id to a given object:

    void ospSetParam(OSPObject, const char *id, OSPDataType type, const void *mem);

The valid parameter names for all OSPObjects and what types are valid are discussed in future sections.

Note that mem must always be a pointer to the object, otherwise accidental type casting can occur. This is especially true for pointer types (OSP_VOID_PTR and OSPObject handles), as they will implicitly cast to void *, but be incorrectly interpreted. To help with some of these issues, there also exist variants of ospSetParam for specific types, such as ospSetInt and ospSetVec3f in the OSPRay utility library (found in ospray_util.h). Note that half precision float parameters OSP_HALF, OSP_VEC[234]H are not supported.

Users can also remove parameters that have been explicitly set with ospSetParam. Any parameters which have been removed will go back to their default value during the next commit unless a new parameter was set after the parameter was removed. To remove a parameter, use

    void ospRemoveParam(OSPObject, const char *id);

Data

OSPRay consumes data arrays from the application using a specific

object type, OSPData. There are several components to

describing a data array: element type, 1/2/3 dimensional striding, and

whether the array is shared with the application or copied into opaque,

OSPRay-owned memory.

Shared data arrays require that the application’s array memory outlives the lifetime of the created OSPData, as OSPRay is referring to application memory. Where this is not preferable, applications use opaque arrays to allow the OSPData to own the lifetime of the array memory. However, opaque arrays incur the cost of copying data into them, which should be kept in mind.

Thus, the most efficient way to specify a data array from the application is to create a shared data array, which is done with

    OSPData ospNewSharedData(const void *sharedData,
        OSPDataType,
        uint64_t numItems1,
        int64_t byteStride1 = 0,
        uint64_t numItems2 = 1,
        int64_t byteStride2 = 0,
        uint64_t numItems3 = 1,
        int64_t byteStride3 = 0,
        OSPDeleterCallback = NULL,
        void *userData = NULL);

The call returns an OSPData handle to the created array.

The calling program guarantees that the sharedData pointer

will remain valid for the duration that this data array is being used.

The number of elements numItems must be positive (there

cannot be an empty data object). The data is arranged in three

dimensions, with specializations to two or one dimension (if some

numItems are 1). The distance between consecutive elements

(per dimension) is given in bytes with byteStride and can

also be negative. If byteStride is zero it will be

determined automatically (e.g., as sizeof(type)). Strides

do not need to be ordered, i.e., byteStride2 can be smaller

than byteStride1, which is equivalent to a transpose.

However, if the stride should be calculated, then an ordering in

dimensions is assumed to disambiguate, i.e.,

byteStride1 < byteStride2 < byteStride3.
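To make the stride rules concrete, here is a minimal sketch of how zero strides could be resolved and how an element's byte offset follows from the three strides. The names Strides, resolveStrides and elementOffset are hypothetical, and the defaulting rule (compact layout with dimension 1 varying fastest) is an assumption that matches the ordering described above; it is not taken from OSPRay's source.

```cpp
#include <cassert>
#include <cstdint>

// Byte strides per dimension (hypothetical helper type).
struct Strides
{
  int64_t s1;
  int64_t s2;
  int64_t s3;
};

// Replace zero strides by compact defaults, assuming the ordering
// byteStride1 < byteStride2 < byteStride3 (dimension 1 varies fastest).
Strides resolveStrides(int64_t elemSize,
    uint64_t numItems1,
    uint64_t numItems2,
    int64_t byteStride1 = 0,
    int64_t byteStride2 = 0,
    int64_t byteStride3 = 0)
{
  assert(elemSize > 0 && numItems1 > 0 && numItems2 > 0);
  Strides s;
  s.s1 = byteStride1 != 0 ? byteStride1 : elemSize;
  s.s2 = byteStride2 != 0 ? byteStride2
                          : s.s1 * static_cast<int64_t>(numItems1);
  s.s3 = byteStride3 != 0 ? byteStride3
                          : s.s2 * static_cast<int64_t>(numItems2);
  return s;
}

// Byte offset of element (i1, i2, i3) relative to the sharedData pointer.
int64_t elementOffset(const Strides &s, uint64_t i1, uint64_t i2, uint64_t i3)
{
  return s.s1 * static_cast<int64_t>(i1) + s.s2 * static_cast<int64_t>(i2)
      + s.s3 * static_cast<int64_t>(i3);
}
```

For a compact 4×3×2 array of 4-byte elements this gives strides of 4, 16 and 48 bytes. A transposed layout breaks the assumed ordering, so in that case all strides would have to be passed explicitly rather than left at zero.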

An application can pass ownership of shared data to OSPRay (for example, when it temporarily created a modified version of its data only to make it compatible with OSPRay) by providing a deleter function that OSPRay will call whenever the time comes to deallocate the shared buffer. The deleter function has the following signature:

    typedef void (*OSPDeleterCallback)(const void *userData, const void *sharedData);

where sharedData will receive the address of the buffer and userData will receive whatever additional state the function needs to perform the deletion (both provided to ospNewSharedData when sharing the data with OSPRay).

The enum type OSPDataType describes the different

element types that can be represented in OSPRay; valid constants are

listed in the table below.

| Type / Name | Description |

|---|---|

| OSP_DEVICE | API device object reference |

| OSP_DATA | data reference |

| OSP_OBJECT | generic object reference |

| OSP_CAMERA | camera object reference |

| OSP_FRAMEBUFFER | framebuffer object reference |

| OSP_FUTURE | future object reference |

| OSP_LIGHT | light object reference |

| OSP_MATERIAL | material object reference |

| OSP_TEXTURE | texture object reference |

| OSP_RENDERER | renderer object reference |

| OSP_WORLD | world object reference |

| OSP_GROUP | group object reference |

| OSP_INSTANCE | instance object reference |

| OSP_GEOMETRY | geometry object reference |

| OSP_GEOMETRIC_MODEL | geometric model object reference |

| OSP_VOLUME | volume object reference |

| OSP_VOLUMETRIC_MODEL | volumetric model object reference |

| OSP_TRANSFER_FUNCTION | transfer function object reference |

| OSP_IMAGE_OPERATION | image operation object reference |

| OSP_STRING | C-style zero-terminated character string |

| OSP_BOOL | 8 bit boolean |

| OSP_CHAR, OSP_VEC[234]C | 8 bit signed character scalar and [234]-element vector |

| OSP_UCHAR, OSP_VEC[234]UC | 8 bit unsigned character scalar and [234]-element vector |

| OSP_SHORT, OSP_VEC[234]S | 16 bit signed integer scalar and [234]-element vector |

| OSP_USHORT, OSP_VEC[234]US | 16 bit unsigned integer scalar and [234]-element vector |

| OSP_INT, OSP_VEC[234]I | 32 bit signed integer scalar and [234]-element vector |

| OSP_UINT, OSP_VEC[234]UI | 32 bit unsigned integer scalar and [234]-element vector |

| OSP_LONG, OSP_VEC[234]L | 64 bit signed integer scalar and [234]-element vector |

| OSP_ULONG, OSP_VEC[234]UL | 64 bit unsigned integer scalar and [234]-element vector |

| OSP_HALF, OSP_VEC[234]H | 16 bit half precision floating-point scalar and [234]-element vector (IEEE 754 binary16) |

| OSP_FLOAT, OSP_VEC[234]F | 32 bit single precision floating-point scalar and [234]-element vector |

| OSP_DOUBLE, OSP_VEC[234]D | 64 bit double precision floating-point scalar and [234]-element vector |

| OSP_BOX[1234]I | 32 bit integer box (lower + upper bounds) |

| OSP_BOX[1234]F | 32 bit single precision floating-point box (lower + upper bounds) |

| OSP_LINEAR[23]F | 32 bit single precision floating-point linear transform ([23] vectors) |

| OSP_AFFINE[23]F | 32 bit single precision floating-point affine transform (linear transform plus translation) |

| OSP_QUATF | 32 bit single precision floating-point quaternion, in (i,j,k,w) layout |

| OSP_VOID_PTR | raw memory address (only found in module extensions) |

If the elements of the array are handles to objects, then their reference counter is incremented.

An opaque OSPData with memory allocated by OSPRay is created with

    OSPData ospNewData(OSPDataType,
        uint64_t numItems1,
        uint64_t numItems2 = 1,
        uint64_t numItems3 = 1);

To allow for (partial) copies or updates of data arrays use

    void ospCopyData(const OSPData source,
        OSPData destination,
        uint64_t destinationIndex1 = 0,
        uint64_t destinationIndex2 = 0,
        uint64_t destinationIndex3 = 0);

which will copy the whole¹ content of the source array into destination at the given location destinationIndex. The OSPDataTypes of the data objects must match. The region to be copied must be valid inside the destination, i.e., in all dimensions, destinationIndex + sourceSize <= destinationSize. The affected region [destinationIndex, destinationIndex + sourceSize) is marked as dirty, which may be used by OSPRay to only process or update that sub-region (e.g., updating an acceleration structure). If the destination array is shared with OSPData by the application (created with ospNewSharedData), then

- the source array must be shared as well (thus ospCopyData cannot be used to read opaque data)
- if source and destination memory overlaps (aliasing), then behavior is undefined
- except if source and destination regions are identical (including matching strides), which can be used by the application to mark that region as dirty (instead of the whole OSPData)
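The validity condition for the copied region, destinationIndex + sourceSize <= destinationSize in all dimensions, can be checked before calling ospCopyData. The sketch below is a standalone illustration; Extent3 and copyRegionFits are hypothetical names, and the sizes are assumed to be known to the application.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Number of items (or an index) per dimension; hypothetical helper type.
using Extent3 = std::array<uint64_t, 3>;

// Returns true if, in every dimension,
//   destinationIndex + sourceSize <= destinationSize.
// The comparison is rearranged so that the unsigned arithmetic cannot
// overflow or wrap around.
bool copyRegionFits(const Extent3 &sourceSize,
    const Extent3 &destinationSize,
    const Extent3 &destinationIndex)
{
  for (std::size_t d = 0; d < 3; ++d) {
    if (destinationIndex[d] > destinationSize[d])
      return false;
    if (sourceSize[d] > destinationSize[d] - destinationIndex[d])
      return false;
  }
  return true;
}
```

For example, a 4×4×1 source fits into an 8×8×1 destination at index (4,4,0), but not at index (5,4,0).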

To add a data array as a parameter named id to another object, you can also use

    void ospSetObject(OSPObject, const char *id, OSPData);

Volumes

Volumes are volumetric data sets with discretely sampled values in 3D space, typically a 3D scalar field. To create a new volume object of given type type use

    OSPVolume ospNewVolume(const char *type);

Note that OSPRay’s implementation forwards type directly to Open VKL, allowing new Open VKL volume types to be usable within OSPRay without the need to change (or even recompile) OSPRay.

Structured Regular Volume

Structured volumes only need to store the values of the samples, because their addresses in memory can be easily computed from a 3D position. A common type of structured volumes are regular grids.

Structured regular volumes are created by passing the type string

“structuredRegular” to ospNewVolume.

Structured volumes are represented through an OSPData 3D

array data (which may or may not be shared with the

application). The voxel data must be laid out in xyz-order² and

can be compact (best for performance) or can have a stride between

voxels, specified through the byteStride1 parameter when

creating the OSPData. Only 1D strides are supported,

additional strides between scanlines (2D, byteStride2) and

slices (3D, byteStride3) are not.

The parameters understood by structured volumes are summarized in the table below.

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f | gridOrigin | (0,0,0) | origin of the grid in object-space |
| vec3f | gridSpacing | (1,1,1) | size of the grid cells in object-space |
| OSPData | data | | the actual voxel 3D data |
| bool | cellCentered | false | whether the data is provided per cell (as opposed to per vertex) |
| uint | filter | OSP_VOLUME_FILTER_LINEAR | filter used for reconstructing the field, also allowed are OSP_VOLUME_FILTER_NEAREST and OSP_VOLUME_FILTER_CUBIC |
| uint | gradientFilter | same as filter | filter used during gradient computations |
| float | background | NaN | value that is used when sampling an undefined region outside the volume domain |

The size of the volume is inferred from the size of the 3D array

data, as is the type of the voxel values (currently

supported are: OSP_UCHAR, OSP_SHORT,

OSP_USHORT, OSP_HALF, OSP_FLOAT,

and OSP_DOUBLE). Data can be provided either per cell or

per vertex (the default), selectable via the cellCentered

parameter (which will also affect the computed bounding box).

Structured Spherical Volume

Structured spherical volumes are also supported, which are created by

passing a type string of “structuredSpherical” to

ospNewVolume. The grid dimensions and parameters are

defined in terms of radial distance r, inclination angle θ, and azimuthal angle ϕ, conforming with the ISO

convention for spherical coordinate systems. The coordinate system and

parameters understood by structured spherical volumes are summarized

below.

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f | gridOrigin | (0,0,0) | origin of the grid in units of (r,θ,ϕ); angles in degrees |
| vec3f | gridSpacing | (1,180/dim.y,360/dim.z) | size of the grid cells in units of (r,θ,ϕ), per default covering the full sphere; angles in degrees |
| OSPData | data | | the actual voxel 3D data |
| uint | filter | OSP_VOLUME_FILTER_LINEAR | filter used for reconstructing the field, also allowed is OSP_VOLUME_FILTER_NEAREST |
| uint | gradientFilter | same as filter | filter used during gradient computations |
| float | background | NaN | value that is used when sampling an undefined region outside the volume domain |

The dimensions (r,θ,ϕ) of the

volume are inferred from the size of the 3D array data, as

is the type of the voxel values (currently supported are:

OSP_UCHAR, OSP_SHORT, OSP_USHORT,

OSP_HALF, OSP_FLOAT, and

OSP_DOUBLE).

These grid parameters support flexible specification of spheres,

hemispheres, spherical shells, spherical wedges, and so forth. The grid

extents (computed as

[gridOrigin, gridOrigin + (dimensions - 1) * gridSpacing])

however must be constrained such that:

- r ≥ 0

- 0 ≤ θ ≤ 180

- 0 ≤ ϕ ≤ 360
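The extent formula and the three constraints above translate directly into a small validation routine. The following is a sketch under the assumption that both ends of the extent must satisfy the constraints; Vec3f, Vec3i, upperExtent and sphericalExtentsValid are hypothetical names, and components are ordered (r, θ, ϕ) with angles in degrees.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

using Vec3f = std::array<float, 3>; // (r, theta, phi), angles in degrees
using Vec3i = std::array<int, 3>;   // number of samples per dimension

// Upper end of the grid extents:
//   gridOrigin + (dimensions - 1) * gridSpacing
Vec3f upperExtent(
    const Vec3f &gridOrigin, const Vec3f &gridSpacing, const Vec3i &dimensions)
{
  Vec3f upper;
  for (std::size_t d = 0; d < 3; ++d)
    upper[d] = gridOrigin[d]
        + static_cast<float>(dimensions[d] - 1) * gridSpacing[d];
  return upper;
}

bool inRange(float v, float lo, float hi)
{
  return v >= lo && v <= hi;
}

// Checks r >= 0, 0 <= theta <= 180 and 0 <= phi <= 360 at both ends
// of the extents.
bool sphericalExtentsValid(
    const Vec3f &gridOrigin, const Vec3f &gridSpacing, const Vec3i &dimensions)
{
  const Vec3f lo = gridOrigin;
  const Vec3f hi = upperExtent(gridOrigin, gridSpacing, dimensions);
  const bool rOk = lo[0] >= 0.f && hi[0] >= 0.f;
  const bool thetaOk =
      inRange(lo[1], 0.f, 180.f) && inRange(hi[1], 0.f, 180.f);
  const bool phiOk = inRange(lo[2], 0.f, 360.f) && inRange(hi[2], 0.f, 360.f);
  return rOk && thetaOk && phiOk;
}
```

With 19 samples in θ and 37 in ϕ at a spacing of 10 degrees, the extents end exactly at 180 and 360 degrees and describe a full sphere; a θ spacing of 11 degrees with the same dimensions would exceed 180 degrees and be rejected.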

Adaptive Mesh Refinement (AMR) Volume

OSPRay currently supports block-structured (Berger-Colella) AMR volumes. Volumes are specified as a list of blocks, which exist at levels of refinement in potentially overlapping regions. Blocks exist in a tree structure, with coarser refinement level blocks containing finer blocks. The cell width is equal for all blocks at the same refinement level, though blocks at a coarser level have a larger cell width than finer levels.

There can be any number of refinement levels and any number of blocks

at any level of refinement. An AMR volume type is created by passing the

type string “amr” to ospNewVolume.

Blocks are defined by three parameters: their bounds, the refinement level in which they reside, and the scalar data contained within each block.

Note that cell widths are defined per refinement level, not per block.

| Type | Name | Default | Description |
|---|---|---|---|
| uint | method | OSP_AMR_CURRENT | OSPAMRMethod sampling method. Supported methods are: OSP_AMR_CURRENT, OSP_AMR_FINEST, OSP_AMR_OCTANT |
| float[] | cellWidth | NULL | array of each level’s cell width |
| box3i[] | block.bounds | NULL | data array of grid sizes (in voxels) for each AMR block |
| int[] | block.level | NULL | array of each block’s refinement level |
| OSPData[] | block.data | NULL | data array of OSPData containing the actual scalar voxel data, only OSP_FLOAT is supported as OSPDataType |
| vec3f | gridOrigin | (0,0,0) | origin of the grid |
| vec3f | gridSpacing | (1,1,1) | size of the grid cells |
| float | background | NaN | value that is used when sampling an undefined region outside the volume domain |

Lastly, note that the gridOrigin and

gridSpacing parameters act just like the structured volume

equivalent, but they only modify the root (coarsest level) of

refinement.

In particular, OSPRay’s / Open VKL’s AMR implementation was designed

to cover Berger-Colella [1] and Chombo [2] AMR data. The

method parameter above determines the interpolation method

used when sampling the volume.

- OSP_AMR_CURRENT: finds the finest refinement level at that cell and interpolates through this “current” level
- OSP_AMR_FINEST: will interpolate at the closest existing cell in the volume-wide finest refinement level regardless of the sample cell’s level
- OSP_AMR_OCTANT: interpolates through all available refinement levels at that cell. This method avoids discontinuities at refinement level boundaries at the cost of performance

Details and more information can be found in the publication for the implementation [3].

1. M.J. Berger and P. Colella, “Local adaptive mesh refinement for shock hydrodynamics.” Journal of Computational Physics 82.1 (1989): 64-84. DOI: 10.1016/0021-9991(89)90035-1
2. M. Adams, P. Colella, D.T. Graves, J.N. Johnson, N.D. Keen, T.J. Ligocki, D.F. Martin, P.W. McCorquodale, D. Modiano, P.O. Schwartz, T.D. Sternberg, and B. Van Straalen, “Chombo Software Package for AMR Applications – Design Document”, Lawrence Berkeley National Laboratory Technical Report LBNL-6616E.
3. I. Wald, C. Brownlee, W. Usher, and A. Knoll, “CPU volume rendering of adaptive mesh refinement data”. SIGGRAPH Asia 2017 Symposium on Visualization – SA ’17, 18(8), 1–8. DOI: 10.1145/3139295.3139305

Unstructured Volume

Unstructured volumes can have their topology and geometry freely

defined. Geometry can be composed of tetrahedral, hexahedral, wedge or

pyramid cell types. The data format used is compatible with VTK and

consists of multiple arrays: vertex positions and values, vertex

indices, cell start indices, cell types, and cell values. An

unstructured volume type is created by passing the type string

“unstructured” to ospNewVolume.

Sampled cell values can be specified either per-vertex

(vertex.data) or per-cell (cell.data). If both

arrays are set, cell.data takes precedence.

Similar to a mesh, each cell is formed by a group of indices into the

vertices. For each vertex, the corresponding (by array index) data value

will be used for sampling when rendering, if specified. The index order

for a tetrahedron is the same as VTK_TETRA: bottom triangle

counterclockwise, then the top vertex.

For hexahedral cells, each hexahedron is formed by a group of eight

indices into the vertices and data values. Vertex ordering is the same

as VTK_HEXAHEDRON: four bottom vertices counterclockwise,

then top four counterclockwise.

For wedge cells, each wedge is formed by a group of six indices into

the vertices and data values. Vertex ordering is the same as

VTK_WEDGE: three bottom vertices counterclockwise, then top

three counterclockwise.

For pyramid cells, each cell is formed by a group of five indices

into the vertices and data values. Vertex ordering is the same as

VTK_PYRAMID: four bottom vertices counterclockwise, then

the top vertex.

To maintain VTK data compatibility, the index array may

be specified with cell sizes interleaved with vertex indices in the

following format: n, id1, ..., idn, m, id1, ..., idm.

This alternative index array layout can be enabled through

the indexPrefixed flag (in which case, the

cell.type parameter must be omitted).
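The relationship between the prefixed layout (n, id1, ..., idn, m, id1, ..., idm) and the separate index / cell.index arrays can be sketched as a conversion routine. This is an illustrative, standalone example: CellLayout and splitPrefixedIndex are hypothetical names, and the routine produces the non-prefixed layout, in which cell.index holds the position of each cell's first vertex index.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Non-prefixed layout (hypothetical helper type).
struct CellLayout
{
  std::vector<uint32_t> index;     // vertex indices, size prefixes removed
  std::vector<uint32_t> cellIndex; // position in 'index' where each cell starts
};

// Splits "n, id1, ..., idn, m, id1, ..., idm" into the two arrays above.
// Throws if the last cell announces more indices than are present.
CellLayout splitPrefixedIndex(const std::vector<uint32_t> &prefixed)
{
  CellLayout out;
  std::size_t pos = 0;
  while (pos < prefixed.size()) {
    const uint32_t n = prefixed[pos++]; // number of vertices of this cell
    if (n > prefixed.size() - pos)
      throw std::invalid_argument("truncated cell in prefixed index array");
    out.cellIndex.push_back(static_cast<uint32_t>(out.index.size()));
    const auto first = prefixed.begin() + static_cast<std::ptrdiff_t>(pos);
    out.index.insert(out.index.end(), first, first + n);
    pos += n;
  }
  return out;
}
```

For a tetrahedron followed by a pyramid, the input 4, 0, 1, 2, 3, 5, 1, 2, 3, 4, 5 yields nine vertex indices and the cell start positions 0 and 4.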

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f[] | vertex.position | | data array of vertex positions |
| float[] | vertex.data | | data array of vertex data values to be sampled |
| uint32[] / uint64[] | index | | data array of indices (into the vertex array(s)) that form cells |
| bool | indexPrefixed | false | indicates that the index array is compatible with VTK, where the indices of each cell are prefixed with the number of vertices |
| uint32[] / uint64[] | cell.index | | data array of locations (into the index array), specifying the first index of each cell |
| float[] | cell.data | | data array of cell data values to be sampled |
| uint8[] | cell.type | | data array of cell types (VTK compatible), only set if indexPrefixed = false. Supported types are: OSP_TETRAHEDRON, OSP_HEXAHEDRON, OSP_WEDGE, OSP_PYRAMID |
| bool | hexIterative | false | hexahedron interpolation method, defaults to the fast non-iterative version, which may have rendering inaccuracies if a hexahedron is not a parallelepiped |
| bool | precomputedNormals | false | whether to accelerate by precomputing, at a cost of 12 bytes/face |
| float | background | NaN | value that is used when sampling an undefined region outside the volume domain |

VDB Volume

VDB volumes implement a data structure that is very similar to the data structure outlined in Museth [1]. They are created by passing the type string “vdb” to ospNewVolume.

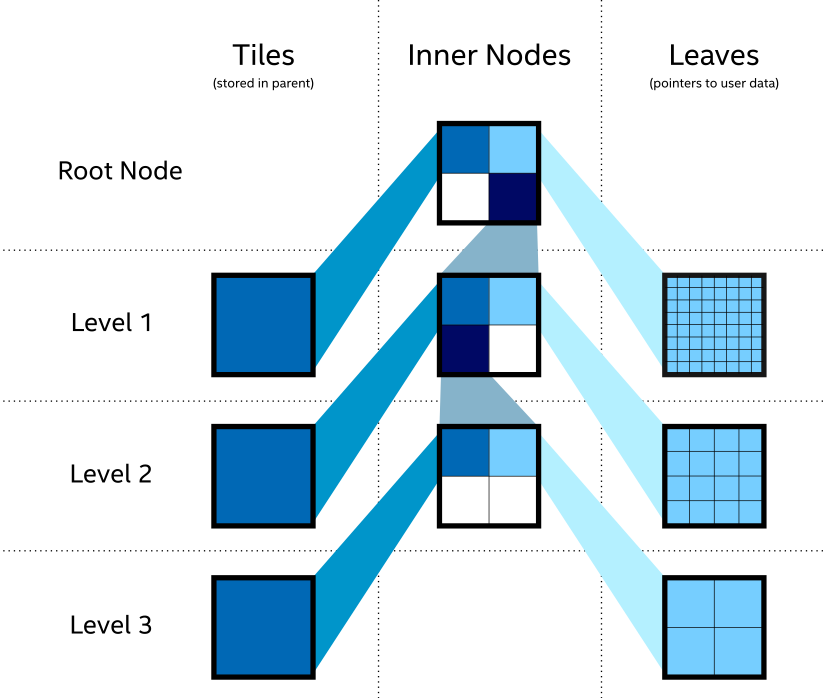

The data structure is a hierarchical regular grid at its core: Nodes are regular grids, and each grid cell may either store a constant value (this is called a tile), or child pointers. Nodes in VDB trees are wide: Nodes on the first level have a resolution of 32³ voxels, on the next level 16³, and on the leaf level 8³ voxels. All nodes on a given level have the same resolution. This makes it easy to find the node containing a coordinate using shift operations (see [1]). VDB leaf nodes are implicit in OSPRay / Open VKL: they are stored as pointers to user-provided data.
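The shift-based lookup mentioned above can be illustrated with a small sketch. It assumes that the per-axis resolutions 32, 16 and 8 of levels 1, 2 and 3 count child cells, so that a leaf spans 8 voxels per axis, a level-2 node 16·8 and a level-1 node 32·16·8. The names `kLogRes`, `nodeShift`, `nodeOrigin` and `childIndex` are hypothetical, and only non-negative coordinates are considered.

```cpp
#include <cstdint>

// Assumed log2 of the per-axis resolution on levels 1, 2 and 3
// (32, 16 and 8 cells); entry 0 is unused.
const int kLogRes[4] = {0, 5, 4, 3};

// Number of low-order coordinate bits covered by one node on `level` (1..3).
inline int nodeShift(int level)
{
  int shift = 0;
  for (int l = level; l <= 3; ++l)
    shift += kLogRes[l];
  return shift;
}

// Per-axis origin of the node on `level` that contains voxel coordinate `c`:
// clearing the low bits snaps the coordinate to the node's lower corner.
inline int32_t nodeOrigin(int32_t c, int level)
{
  return c & ~((int32_t(1) << nodeShift(level)) - 1);
}

// Per-axis index of the cell inside that node which contains `c`.
inline int32_t childIndex(int32_t c, int level)
{
  const int childShift = nodeShift(level) - kLogRes[level];
  return (c >> childShift) & ((int32_t(1) << kLogRes[level]) - 1);
}
```

Because every node on a level has the same power-of-two resolution, no search is needed: the node origin and the cell within the node follow directly from the bits of the coordinate.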

VDB volumes interpret input data as constant cells (which are then

potentially filtered). This is in contrast to

structuredRegular volumes, which have a vertex-centered

interpretation.

The VDB implementation in OSPRay / Open VKL pursues the following goals:

- Efficient data structure traversal on vector architectures.

- Enable the use of industry-standard .vdb files created through the OpenVDB library.

- Compatibility with OpenVDB on a leaf data level, so that .vdb files may be loaded with minimal overhead.

VDB volumes have the following parameters:

| Type | Name | Description |
|---|---|---|
| int | maxSamplingDepth | do not descend further than to this depth during sampling, the maximum value and the default is 3 |
| uint32[] | node.level | level on which each input node exists, may be 1, 2 or 3 (levels are counted from the root level = 0 down) |
| vec3i[] | node.origin | the node origin index (per input node) |
| OSPData[] | node.data | data arrays with the node data (per input node). Nodes that are tiles are expected to have single-item arrays. Leaf nodes with grid data are expected to have compact 3D arrays in zyx layout (z changes most quickly) with the correct number of voxels for the level. Only OSP_FLOAT is supported as field OSPDataType. |
| OSPData | nodesPackedDense | optionally provided instead of node.data, a single array of all dense node data in a contiguous zyx layout, provided in the same order as the corresponding node.* parameters |
| OSPData | nodesPackedTile | optionally provided instead of node.data, a single array of all tile node data in a contiguous layout, provided in the same order as the corresponding node.* parameters |
| uint32[] | node.format | for each input node, whether it is of format OSP_VOLUME_FORMAT_DENSE_ZYX (and thus stored in nodesPackedDense), or OSP_VOLUME_FORMAT_TILE (stored in nodesPackedTile) |
| uint | filter | filter used for reconstructing the field, default is OSP_VOLUME_FILTER_LINEAR, alternatively OSP_VOLUME_FILTER_NEAREST, or OSP_VOLUME_FILTER_CUBIC. |
| uint | gradientFilter | filter used for reconstructing the field during gradient computations, default same as filter |
| float | background | value that is used when sampling an undefined region outside the volume domain, default NaN |

The nodesPackedDense and nodesPackedTile

together with node.format parameters may be provided

instead of node.data; this packed data layout may provide

better performance.
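As a small illustration of the zyx layout used for dense node data (z changes most quickly), the linear offset of a voxel inside one node could be computed as below. The function name `zyxOffset` is hypothetical, and `res` stands for the per-axis voxel count of the node.

```cpp
#include <cstddef>

// Linear offset of voxel (x, y, z) inside a dense node with `res` voxels per
// axis in zyx layout: z is the fastest-changing coordinate, x the slowest.
std::size_t zyxOffset(
    std::size_t x, std::size_t y, std::size_t z, std::size_t res)
{
  return (x * res + y) * res + z;
}
```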

[1] Museth, K. VDB: High-Resolution Sparse Volumes with Dynamic Topology. ACM Transactions on Graphics 32(3), 2013. DOI: 10.1145/2487228.2487235

Particle Volume

Particle volumes consist of a set of points in space. Each point has

a position, a radius, and a weight typically associated with an

attribute. Particle volumes are created by passing the type string

“particle” to ospNewVolume.

A radial basis function defines the contribution of that particle. Currently, we use the Gaussian radial basis function $$\phi(P) = w \exp\left(-\frac{(P - p)^2}{2 r^2}\right),$$ where P is the particle position, p is the sample position, r is the radius and w is the weight. At each sample, the scalar field value is then computed as the sum of each radial basis function ϕ, for each particle that overlaps it.

The OSPRay / Open VKL implementation is similar to direct evaluation of samples in Reda et al. [2]. It uses an Embree-built BVH with a custom traversal, similar to the method in [1].
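The field definition can be made concrete with a brute-force sketch that sums the Gaussian kernels directly, without the BVH used by the actual implementation. The types `Vec3` and `Particle` and the functions `gaussianRbf` and `sampleField` are hypothetical; truncating each kernel at `radius * supportFactor` is an assumption based on the `radiusSupportFactor` parameter of this volume type.

```cpp
#include <cmath>
#include <vector>

struct Vec3
{
  float x, y, z;
};

// One particle: center, radius r (one standard deviation of the Gaussian)
// and weight w (height of the kernel).
struct Particle
{
  Vec3 position;
  float radius;
  float weight;
};

// Squared distance between two points.
float distance2(const Vec3 &a, const Vec3 &b)
{
  const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
  return dx * dx + dy * dy + dz * dz;
}

// Gaussian radial basis function w * exp(-|P - p|^2 / (2 r^2)).
float gaussianRbf(const Particle &pt, const Vec3 &sample)
{
  return pt.weight
      * std::exp(-distance2(pt.position, sample)
          / (2.f * pt.radius * pt.radius));
}

// Scalar field at `sample`: sum of the kernels of all particles whose
// (assumed) support sphere of radius r * supportFactor contains the sample.
float sampleField(const std::vector<Particle> &particles,
    const Vec3 &sample,
    float supportFactor = 3.f)
{
  float sum = 0.f;
  for (const Particle &pt : particles) {
    const float support = pt.radius * supportFactor;
    if (distance2(pt.position, sample) <= support * support)
      sum += gaussianRbf(pt, sample);
  }
  return sum;
}
```

The loop is linear in the number of particles per sample, which is what a spatial acceleration structure avoids.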

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f[] | particle.position | | data array of particle positions |
| float[] | particle.radius | | data array of particle radii |
| float[] | particle.weight | NULL | optional data array of particle weights, specifying the height of the kernel. |
| float | radiusSupportFactor | 3.0 | The multiplier of the particle radius required for support. Larger radii ensure smooth results at the cost of performance. In the Gaussian kernel, the radius is one standard deviation (σ), so a value of 3 corresponds to 3σ. |
| float | clampMaxCumulativeValue | 0 | The maximum cumulative value possible, set by user. All cumulative values will be clamped to this, and further traversal (RBF summation) of particle contributions will halt when this value is reached. A value of zero or less turns this off. |
| bool | estimateValueRanges | true | Enable heuristic estimation of value ranges which are used in internal acceleration structures as well as for determining the volume’s overall value range. When set to false, the user must specify clampMaxCumulativeValue, and all value ranges will be assumed [0–clampMaxCumulativeValue]. Disabling this switch may improve volume commit time, but will make volume rendering less efficient. |

[1] A. Knoll, I. Wald, P. Navratil, A. Bowen, K. Reda, M. E. Papka, and K. Gaither, “RBF Volume Ray Casting on Multicore and Manycore CPUs”, 2014, Computer Graphics Forum, 33: 71–80. doi:10.1111/cgf.12363

[2] K. Reda, A. Knoll, K. Nomura, M. E. Papka, A. E. Johnson and J. Leigh, “Visualizing large-scale atomistic simulations in ultra-resolution immersive environments”, 2013 IEEE Symposium on Large-Scale Data Analysis and Visualization (LDAV), Atlanta, GA, 2013, pp. 59–65.

Transfer Function

Transfer functions map the scalar values of volumes to color and opacity and thus they can be used to visually emphasize certain features of the volume. To create a new transfer function of given type type use

    OSPTransferFunction ospNewTransferFunction(const char *type);

The returned handle can be assigned to a volumetric model (described below) as parameter “transferFunction” using ospSetObject.

One type of transfer function that is supported by OSPRay is the linear transfer function, which interpolates between given equidistant colors and opacities. It is created by passing the string “piecewiseLinear” to ospNewTransferFunction and it is controlled by these parameters:

| Type | Name | Description |

|---|---|---|

| vec3f[] | color | data array of colors (linear RGB) |

| float[] | opacity | data array of opacities |

| box1f | value | domain (scalar range) this function maps from |

The arrays color and opacity can be of

different length.

VolumetricModels

Volumes in OSPRay are given volume rendering appearance information through VolumetricModels. This decouples the physical representation of the volume (and possible acceleration structures it contains) from rendering-specific parameters (where more than one set may exist concurrently). To create a volumetric model, call

    OSPVolumetricModel ospNewVolumetricModel(OSPVolume);

The passed volume can be NULL as long as the volume to be used is passed as a parameter. If a volume is specified both on object creation and as a parameter, the parameter value is used. If the parameter value is later removed, the volume object passed on object creation is again used.

| Type | Name | Default | Description |
|---|---|---|---|
| OSPVolume | volume | | optional volume object this model references |
| OSPTransferFunction | transferFunction | | transfer function to use |
| float | densityScale | 1.0 | makes volumes uniformly thinner or thicker |
| float | anisotropy | 0.0 | anisotropy of the (Henyey-Greenstein) phase function in [-1–1] (path tracer only), default to isotropic scattering |
| uint32 | id | -1u | optional user ID, for framebuffer channel OSP_FB_ID_OBJECT |

Geometries

Geometries in OSPRay are objects that describe intersectable surfaces. To create a new geometry object of given type type use

    OSPGeometry ospNewGeometry(const char *type);

Note that in the current implementation geometries are limited to a maximum of 2³² primitives.

Mesh

A mesh consisting of either triangles or quads is created by calling

ospNewGeometry with type string “mesh”. Once

created, a mesh recognizes the following parameters:

| Type | Name | Description |
|---|---|---|
| vec3f[] | vertex.position | data array of vertex positions, overridden by motion.* arrays |
| vec3f[] | normal | data array of face-varying normals, overridden by motion.* arrays |
| vec3f[] | vertex.normal | data array of vertex-varying normals, overridden by motion.* arrays |
| vec4f[] / vec3f[] | color | data array of face-varying colors (linear RGBA/RGB) |
| vec4f[] / vec3f[] | vertex.color | data array of vertex-varying colors (linear RGBA/RGB) |
| vec2f[] | texcoord | data array of face-varying texture coordinates |
| vec2f[] | vertex.texcoord | data array of vertex-varying texture coordinates |
| vec3ui[] / vec4ui[] | index | data array of (either triangle or quad) indices (into the vertex array(s)) |
| bool | quadSoup | when no explicit index is given, indicates whether to assume a ‘soup’ of quads instead of triangles, default false |
| vec3f[][] | motion.vertex.position | data array of vertex position arrays (uniformly distributed keys for deformation motion blur) |
| vec3f[][] | motion.normal | data array of face-varying normal arrays (uniformly distributed keys for deformation motion blur) |
| vec3f[][] | motion.vertex.normal | data array of vertex-varying normal arrays (uniformly distributed keys for deformation motion blur) |
| box1f | time | time associated with first and last key in motion.* arrays (for deformation motion blur), default [0, 1] |

The data type of the index array determines the underlying geometry: triangles are used for an index with vec3ui type and quads for vec4ui type. Quads are internally handled as a pair of two triangles, thus mixing triangles and quads is supported by encoding a triangle as a quad with the last two vertex indices being identical (w=z).
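A minimal sketch of this encoding, with `Vec4ui` standing in for the `vec4ui` element type (an assumption, as are the helper names `triangleAsQuad` and `isTriangle`):

```cpp
#include <array>
#include <cstdint>

using Vec4ui = std::array<uint32_t, 4>;

// A triangle (a, b, c) stored in a quad index array: the last two indices
// are identical (w = z), so the quad degenerates to a single triangle.
Vec4ui triangleAsQuad(uint32_t a, uint32_t b, uint32_t c)
{
  return Vec4ui{{a, b, c, c}};
}

// True if a quad index entry encodes a triangle in the above sense.
bool isTriangle(const Vec4ui &q)
{
  return q[2] == q[3];
}
```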

The vertex.position array is mandatory to create a valid

mesh.

The index array is optional. If none is provided, a ‘triangle soup’ is assumed, i.e., each three consecutive vertices form one triangle, unless the boolean quadSoup is set to true, in which case a ‘quad soup’ is assumed, i.e., each four consecutive vertices form one quad. If the size of the vertex.position array is not a multiple of three for triangles or four for quads, the remaining vertices are ignored.

Face-varying attributes (normal,

motion.normal, color, texcoord)

map unique values to each vertex of a primitive/face (triangle or quad),

thus attributes can be different for the same vertex that is shared by

multiple primitives. Essentially, face-varying attributes are a

‘attribute soup’ and behave similar to the implicit index, the size of

the array must be at least three times the number of triangles or four

times the number of quads, respectively. Face-varying attributes take

precedence over the respective vertex attributes

(vertex.normal, motion.vertex.normal,

vertex.color, vertex.texcoord) when both

arrays of the same attribute are present.
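The size rules for soups and face-varying attributes reduce to simple arithmetic, sketched below with hypothetical helper names. The position of a corner's attribute within a face-varying array (`primID * cornersPerPrimitive + corner`) is an assumption derived from the statement that these arrays behave like the implicit index.

```cpp
#include <cstddef>

// Number of primitives formed from an implicit 'soup' of vertices; vertices
// that do not fill a complete triangle or quad are ignored.
std::size_t soupPrimitiveCount(std::size_t numVertices, bool quadSoup)
{
  return numVertices / (quadSoup ? 4 : 3);
}

// Minimum length of a face-varying attribute array for a mesh of
// `numPrimitives` triangles (or quads).
std::size_t minFaceVaryingSize(std::size_t numPrimitives, bool quads)
{
  return numPrimitives * (quads ? 4 : 3);
}

// Assumed slot of corner `corner` of primitive `primID` in such an array.
std::size_t faceVaryingSlot(std::size_t primID, std::size_t corner, bool quads)
{
  return primID * (quads ? 4 : 3) + corner;
}
```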

Subdivision

A mesh consisting of subdivision surfaces is created by specifying a geometry of type “subdivision”. Once created, a subdivision recognizes the following parameters:

| Type | Name | Description |
|---|---|---|
| vec3f[] | vertex.position | data array of vertex positions |
| vec4f[] | color | optional data array of face-varying colors (linear RGBA) |
| vec4f[] | vertex.color | optional data array of vertex-varying colors (linear RGBA) |
| vec2f[] | texcoord | optional data array of face-varying texture coordinates |
| vec2f[] | vertex.texcoord | optional data array of vertex-varying texture coordinates |
| float | level | global level of tessellation, default 5 |
| uint[] | index | data array of indices (into the vertex array(s)) |
| float[] | index.level | optional data array of per-edge levels of tessellation, overrides global level |
| uint[] | face | optional data array holding the number of indices/edges (3 to 15) per face, defaults to 4 (a pure quad mesh) |
| vec2i[] | edgeCrease.index | optional data array of edge crease indices |
| float[] | edgeCrease.weight | optional data array of edge crease weights |
| uint[] | vertexCrease.index | optional data array of vertex crease indices |
| float[] | vertexCrease.weight | optional data array of vertex crease weights |
| uint | mode | OSPSubdivisionMode subdivision edge boundary mode, supported modes are: OSP_SUBDIVISION_NO_BOUNDARY, OSP_SUBDIVISION_SMOOTH_BOUNDARY (default), OSP_SUBDIVISION_PIN_CORNERS, OSP_SUBDIVISION_PIN_BOUNDARY, OSP_SUBDIVISION_PIN_ALL |

The vertex and index arrays are mandatory

to create a valid subdivision surface. If no face array is

present then a pure quad mesh is assumed (the number of indices must be

a multiple of 4). Optionally supported are edge and vertex creases.

Spheres

A geometry consisting of individual spheres, each of which can have its own radius, is created by calling ospNewGeometry with type string “sphere”. The spheres will not be tessellated but rendered procedurally and are thus perfectly round. To allow a variety of sphere representations in the application, this geometry allows a flexible way of specifying the data of center position and radius within a data array:

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f[] | sphere.position | | data array of center positions |
| float[] | sphere.radius | NULL | optional data array of the per-sphere radius |
| vec3f[] | sphere.normal | NULL | optional data array of normals (only for “oriented disc”) |
| vec2f[] | sphere.texcoord | NULL | optional data array of texture coordinates (constant per sphere) |
| float | radius | 0.01 | default radius for all spheres (if sphere.radius is not set) |
| uint | type | | OSPSphereType for rendering the sphere. Supported types are: OSP_SPHERE (default), OSP_DISC, OSP_ORIENTED_DISC |

Curves

A geometry consisting of multiple curves is created by calling

ospNewGeometry with type string “curve”. The

parameters defining this geometry are listed in the table below.

| Type | Name | Description |
|---|---|---|
| vec4f[] | vertex.position_radius | data array of vertex position and per-vertex radius |
| vec2f[] | vertex.texcoord | data array of per-vertex texture coordinates |
| vec4f[] | vertex.color | data array of corresponding vertex colors (linear RGBA) |
| vec3f[] | vertex.normal | data array of curve normals (only for “ribbon” curves) |
| vec4f[] | vertex.tangent | data array of curve tangents (only for “hermite” curves) |
| uint32[] | index | data array of indices to the first vertex or tangent of a curve segment |
| uint | type | OSPCurveType for rendering the curve. Supported types are: OSP_FLAT, OSP_ROUND, OSP_RIBBON, OSP_DISJOINT |
| uint | basis | OSPCurveBasis for defining the curve. Supported bases are: OSP_LINEAR, OSP_BEZIER, OSP_BSPLINE, OSP_HERMITE, OSP_CATMULL_ROM |

The positions in the vertex.position_radius parameter support per-vertex varying radii with data type vec4f[]; Embree curves are instantiated internally for the relevant type/basis mapping.

The following section describes the properties of the different curve bases and how they use the data provided in data buffers:

- OSP_LINEAR

- The indices point to the first of 2 consecutive control points in the vertex buffer. The first control point is the start and the second control point the end of the line segment. The curve goes through all control points listed in the vertex buffer.

- OSP_BEZIER

- The indices point to the first of 4 consecutive control points in the vertex buffer. The first control point represents the start point of the curve, and the 4th control point the end point of the curve. The Bézier basis is interpolating, thus the curve does go exactly through the first and fourth control vertex.

- OSP_BSPLINE

- The indices point to the first of 4 consecutive control points in the vertex buffer. This basis is not interpolating, thus the curve does in general not go through any of the control points directly. Using this basis, 3 control points can be shared for two continuous neighboring curve segments, e.g., the curves (p0,p1,p2,p3) and (p1,p2,p3,p4) are C1 continuous. This feature makes this basis a good choice to construct continuous multi-segment curves, as memory consumption can be kept minimal.

- OSP_HERMITE

- It is necessary to have both vertex buffer and tangent buffer for using this basis. The indices point to the first of 2 consecutive points in the vertex buffer, and the first of 2 consecutive tangents in the tangent buffer. This basis is interpolating, thus does exactly go through the first and second control point, and the first order derivative at the begin and end matches exactly the value specified in the tangent buffer. When connecting two segments continuously, the end point and tangent of the previous segment can be shared.

- OSP_CATMULL_ROM

- The indices point to the first of 4 consecutive control points in the vertex buffer. If (p0,p1,p2,p3) represent the points then this basis goes through p1 and p2, with tangents as (p2−p0)/2 and (p3−p1)/2.

The following section describes the properties of the different curve types and how they define the geometry of a curve:

- OSP_FLAT

- This type enables faster rendering as the curve is rendered as a connected sequence of ray facing quads.

- OSP_ROUND

- This type enables rendering a real geometric surface for the curve which allows closeup views. This mode renders a sweep surface by sweeping a varying radius circle tangential along the curve.

- OSP_RIBBON

- This type enables normal orientation of the curve and requires that a normal buffer be specified along with the vertex buffer. The curve is rendered as a flat band whose center approximately follows the provided vertex buffer and whose normal orientation approximately follows the provided normal buffer. Not supported for basis OSP_LINEAR.

- OSP_DISJOINT

- Only supported for basis OSP_LINEAR; the segments are open and not connected at the joints, i.e., the curve segments are either individual cones or cylinders.

Boxes

OSPRay can directly render axis-aligned bounding boxes without the

need to convert them to quads or triangles. To do so create a boxes

geometry by calling ospNewGeometry with type string

“box”.

| Type | Name | Description |

|---|---|---|

| box3f[] | box | data array of boxes |

Planes

OSPRay can directly render planes defined by plane equation

coefficients in its implicit form ax + by + cz + d = 0.

By default planes are infinite but their extents can be limited by

defining optional bounding boxes. A planes geometry can be created by

calling ospNewGeometry with type string

“plane”.

| Type | Name | Description |

|---|---|---|

| vec4f[] | plane.coefficients | data array of plane coefficients (a,b,c,d) |

| box3f[] | plane.bounds | optional data array of bounding boxes |
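Applications often describe a plane by a point and a normal rather than by coefficients. The following sketch shows the conversion to (a,b,c,d) for the implicit form ax + by + cz + d = 0; the type aliases and function names are hypothetical.

```cpp
#include <array>

using Vec3 = std::array<float, 3>;
using Vec4 = std::array<float, 4>;

// Coefficients (a, b, c, d) of the plane through `point` with normal
// `normal`: the normal supplies (a, b, c) and d = -dot(normal, point).
Vec4 planeCoefficients(const Vec3 &point, const Vec3 &normal)
{
  const float d = -(normal[0] * point[0] + normal[1] * point[1]
      + normal[2] * point[2]);
  return Vec4{{normal[0], normal[1], normal[2], d}};
}

// Left-hand side of the implicit equation; zero for points on the plane.
float evaluatePlane(const Vec4 &pl, const Vec3 &p)
{
  return pl[0] * p[0] + pl[1] * p[1] + pl[2] * p[2] + pl[3];
}
```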

Isosurfaces

OSPRay can directly render multiple isosurfaces of a volume without

first tessellating them. To do so create an isosurfaces geometry by

calling ospNewGeometry with type string

“isosurface”. The appearance information of the surfaces is

set through the Geometric Model. Per-isosurface colors can be set by

passing per-primitive colors to the Geometric Model, in order of the

isosurface array.

| Type | Name | Description |

|---|---|---|

| float | isovalue | single isovalue |

| float[] | isovalue | data array of isovalues |

| OSPVolume | volume | handle of the Volume to be isosurfaced |

GeometricModels

Geometries are matched with surface appearance information through GeometricModels. These take a geometry, which defines the surface representation, and apply either full-object or per-primitive color and material information. To create a geometric model, call

    OSPGeometricModel ospNewGeometricModel(OSPGeometry);

The passed geometry can be NULL as long as the geometry to be used is passed as a parameter. If a geometry is specified both on object creation and as a parameter, the parameter value is used. If the parameter value is later removed, the geometry object passed on object creation is again used.

Color and material are fetched with the primitive ID of the hit (clamped to the valid range, thus a single color or material is fine), or mapped first via the index array (if present). All parameters are optional, however, some renderers (notably the path tracer) require a material to be set. Materials are either handles of OSPMaterial, or indices into the material array on the renderer, which allows building a world that can be used by different types of renderers.
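The lookup rule for per-primitive colors and materials can be sketched as below. The function `resolveEntry` is hypothetical; clamping the primitive ID into the index array and clamping the final result are assumptions about the order of operations, and `numEntries` is assumed to be at least 1.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns which entry of a color or material array applies to a hit on
// primitive `primID`. `index` is the optional per-primitive index array
// (empty if not set); `numEntries` is the length of the color or material
// array and must be >= 1.
std::size_t resolveEntry(std::size_t primID,
    const std::vector<uint8_t> &index,
    std::size_t numEntries)
{
  std::size_t i = primID;
  if (!index.empty())
    i = index[std::min(primID, index.size() - 1)]; // optional indirection
  return std::min(i, numEntries - 1); // clamp to the valid range
}
```

With a single color or material (`numEntries == 1`) every primitive resolves to entry 0, which matches the remark that a single value is sufficient.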

An invertNormals flag allows inverting the (shading) normal vectors of the rendered geometry. This is particularly useful for clipping: by changing the orientation of the normal vectors one can control whether the inside or the outside of the clipping geometry is removed. For example, a clipping geometry with normals oriented outside clips everything that is inside.

| Type | Name | Description |
|---|---|---|
| OSPGeometry | geometry | optional geometry object this model references |
| OSPMaterial / OSPMaterial[] / uint32 / uint32[] | material | optional (data array of per-primitive) material, may be an index into the material parameter on the renderer (if it exists) |
| vec4f / vec4f[] | color | optional (data array of per-primitive) color assigned to the geometry (linear RGBA) |
| uint8[] | index | optional data array of per-primitive indices into color and material |
| bool | invertNormals | inverts all shading normals (Ns), default false |
| uint32 | id | optional user ID, for framebuffer channel OSP_FB_ID_OBJECT, default -1u |

Lights

To create a new light source of given type type use

    OSPLight ospNewLight(const char *type);

All light sources accept the following parameters:

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f | color | white | color of the light (linear RGB) |
| float | intensity | 1 | intensity of the light (a factor) |
| uint | intensityQuantity | | OSPIntensityQuantity to set the radiometric quantity represented by intensity. The default value depends on the light source. |
| bool | visible | true | whether the light can be directly seen |

In OSPRay the intensity parameter of a light source can

correspond to different types of radiometric quantities. The type of the

value represented by a light’s intensity parameter is set

using intensityQuantity, which accepts values from the enum

type OSPIntensityQuantity. The supported types of

OSPIntensityQuantity differ between the different light

sources (see documentation of each specific light source).

| Name | Description |
|---|---|
| OSP_INTENSITY_QUANTITY_POWER | the overall amount of light energy emitted by the light source into the scene, unit is W |
| OSP_INTENSITY_QUANTITY_INTENSITY | the overall amount of light emitted by the light in a given direction, unit is W/sr |
| OSP_INTENSITY_QUANTITY_RADIANCE | the amount of light emitted by a point on the light source in a given direction, unit is W/sr/m² |
| OSP_INTENSITY_QUANTITY_IRRADIANCE | the amount of light arriving at a surface point, assuming the light is oriented towards the surface, unit is W/m² |
| OSP_INTENSITY_QUANTITY_SCALE | a linear scaling factor for light sources with a built-in quantity (e.g., HDRI, or sunSky, or when using intensityDistribution). |

Photometric Lights

Measured light sources (IES, EULUMDAT, …) are supported by the sphere, spot, and quad lights when setting an intensityDistribution data array to modulate the intensity per direction. The mapping uses the C-γ coordinate system (see also the figure below): the values of the first (or only) dimension of intensityDistribution are uniformly mapped to γ in [0–π]; the first intensity value to 0, the last value to π, thus at least two values need to be present.

If the array has a second dimension then the intensities are not rotationally symmetric around the main direction (where angle γ is zero), but are accordingly mapped to the C-halfplanes in [0–2π]; the first “row” of values to 0 and 2π, the other rows such that they have uniform distance to their neighbors. The orientation of the C0-plane is specified via c0.

| Type | Name | Description |
|---|---|---|
| float[] | intensityDistribution | luminous intensity distribution for photometric lights; can be 2D for asymmetric illumination; values are assumed to be uniformly distributed |
| vec3f | c0 | orientation, i.e., direction of the C0-(half)plane (only needed if illumination via intensityDistribution is asymmetric) |

When using an intensityDistribution then the default and

only valid value for intensityQuantity is

OSP_INTENSITY_QUANTITY_SCALE.

The following light types are supported by most OSPRay renderers.

Directional Light / Distant Light

The distant light (or traditionally the directional light) is thought

to be far away (outside of the scene), thus its light arrives (almost)

as parallel rays. It is created by passing the type string

“distant” to ospNewLight. The distant light

supports OSP_INTENSITY_QUANTITY_RADIANCE and

OSP_INTENSITY_QUANTITY_IRRADIANCE (default) as

intensityQuantity parameter value. In addition to the general parameters understood by all lights the

distant light supports the following special parameters:

| Type | Name | Default | Description |

|---|---|---|---|

| vec3f | direction | (0,0,1) | main emission direction of the distant light |

| float | angularDiameter | 0 | apparent size (angle in degree) of the light |

Setting the angular diameter to a value greater than zero will result in soft shadows when the renderer uses stochastic sampling (like the path tracer). For instance, the apparent size of the sun is about 0.53°.

Point Light / Sphere Light

The sphere light (or the special case point light) is a light

emitting uniformly in all directions from the surface toward the

outside. It does not emit any light toward the inside of the sphere. It

is created by passing the type string “sphere” to

ospNewLight. The point light supports only

OSP_INTENSITY_QUANTITY_SCALE when

intensityDistribution is set, or otherwise

OSP_INTENSITY_QUANTITY_POWER,

OSP_INTENSITY_QUANTITY_INTENSITY (then default) and

OSP_INTENSITY_QUANTITY_RADIANCE as

intensityQuantity parameter value. In addition to the general parameters understood by all lights and the

photometric parameters the sphere

light supports the following special parameters:

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f | position | (0,0,0) | the center of the sphere light |
| float | radius | 0 | the size of the sphere light |
| vec3f | direction | (0,0,1) | main orientation of intensityDistribution |

Setting the radius to a value greater than zero will result in soft shadows when the renderer uses stochastic sampling (like the path tracer).

Spotlight / Ring Light

The spotlight is a light emitting into a cone of directions. It is

created by passing the type string “spot” to

ospNewLight. The spotlight supports only

OSP_INTENSITY_QUANTITY_SCALE when

intensityDistribution is set, or otherwise

OSP_INTENSITY_QUANTITY_POWER,

OSP_INTENSITY_QUANTITY_INTENSITY (then default) and

OSP_INTENSITY_QUANTITY_RADIANCE as

intensityQuantity parameter value. In addition to the general parameters understood by all lights and the

photometric parameters the spotlight

supports the special parameters listed in the table.

| Type | Name | Default | Description |
|---|---|---|---|
| vec3f | position | (0,0,0) | the center of the spotlight |
| vec3f | direction | (0,0,1) | main emission direction of the spot |
| float | openingAngle | 180 | full opening angle (in degree) of the spot; outside of this cone is no illumination |
| float | penumbraAngle | 5 | size (angle in degree) of the “penumbra”, the region between the rim (of the illumination cone) and full intensity of the spot; should be smaller than half of openingAngle |
| float | radius | 0 | the size of the spotlight, the radius of a disk with normal direction |
| float | innerRadius | 0 | in combination with radius turns the disk into a ring |

Setting the radius to a value greater than zero will result in soft shadows when the renderer uses stochastic sampling (like the path tracer). Additionally setting the inner radius will result in a ring instead of a disk emitting the light.

Quad Light

The quad light is a planar, procedural area

light source emitting uniformly on one side into the half-space. It is

created by passing the type string “quad” to

ospNewLight. The quad light supports only

OSP_INTENSITY_QUANTITY_SCALE when

intensityDistribution is set, or otherwise

OSP_INTENSITY_QUANTITY_POWER,

OSP_INTENSITY_QUANTITY_INTENSITY and

OSP_INTENSITY_QUANTITY_RADIANCE (then default) as

intensityQuantity parameter. In addition to the general parameters understood by all lights and the

photometric parameters the quad light

supports the following special parameters:

| Type | Name | Default | Description |

|---|---|---|---|

| vec3f | position | (0,0,0) | position of one vertex of the quad light |

| vec3f | edge1 | (1,0,0) | vector to one adjacent vertex |

| vec3f | edge2 | (0,1,0) | vector to the other adjacent vertex |

The emission side is determined by the cross product of edge1×edge2, which is also the main emission direction for intensityDistribution. Note that only renderers that use stochastic sampling (like the path tracer) will compute soft shadows from the quad light. Other renderers will just sample the center of the quad light, which results in hard shadows.
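The geometry of a quad light follows directly from its three parameters. The sketch below derives the (unnormalized) emission direction and the center point; the helper names are hypothetical, and taking the center as position + (edge1 + edge2)/2 assumes that the two edges span the light from the vertex given by position.

```cpp
#include <array>

using Vec3 = std::array<float, 3>;

// Cross product a x b.
Vec3 cross(const Vec3 &a, const Vec3 &b)
{
  return Vec3{{a[1] * b[2] - a[2] * b[1],
      a[2] * b[0] - a[0] * b[2],
      a[0] * b[1] - a[1] * b[0]}};
}

// Unnormalized emission direction of the quad light: edge1 x edge2.
Vec3 quadEmissionDirection(const Vec3 &edge1, const Vec3 &edge2)
{
  return cross(edge1, edge2);
}

// Center of the quad spanned by edge1 and edge2 starting at `position`.
Vec3 quadCenter(const Vec3 &position, const Vec3 &edge1, const Vec3 &edge2)
{
  Vec3 c{};
  for (int i = 0; i < 3; ++i)
    c[i] = position[i] + 0.5f * (edge1[i] + edge2[i]);
  return c;
}
```

With the default parameters (edge1 = (1,0,0), edge2 = (0,1,0)) the light emits towards +z; swapping the two edges flips the emission side.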

Cylinder Light

The cylinder light is a cylindrical, procedural area light source

emitting uniformly outwardly into the space beyond the boundary. It is

created by passing the type string “cylinder” to

ospNewLight. The cylinder light supports

OSP_INTENSITY_QUANTITY_POWER,

OSP_INTENSITY_QUANTITY_INTENSITY and

OSP_INTENSITY_QUANTITY_RADIANCE (default) as

intensityQuantity parameter. In addition to the general parameters understood by all lights the

cylinder light supports the following special parameters:

| Type | Name | Default | Description |

|---|---|---|---|

| vec3f | position0 | (0,0,0) | position of the start of the cylinder |

| vec3f | position1 | (0,0,1) | position of the end of the cylinder |

| float | radius | 1 | radius of the cylinder |

Note that only renderers that use stochastic sampling (like the path tracer) will compute soft shadows from the cylinder light. Other renderers will just sample the closest point on the cylinder light, which results in hard shadows.

HDRI Light

The HDRI light is a textured light source surrounding the scene and

illuminating it from infinity. It is created by passing the type string

“hdri” to ospNewLight. The values of the HDRI

correspond to radiance and therefore the HDRI light only accepts

OSP_INTENSITY_QUANTITY_SCALE as

intensityQuantity parameter value. In addition to the general parameters the HDRI light supports the

following special parameters:

| Type | Name | Default | Description |

|---|---|---|---|

| vec3f | up | (0,1,0) | up direction of the light |

| vec3f | direction | (0,0,1) | direction to which the center of the texture will be mapped (analogous to the panoramic camera) |

| OSPTexture | map | | environment map in latitude / longitude format |

Note that the SciVis renderer only shows the HDRI light in the background (like an environment map) without computing illumination of the scene.

Ambient Light

The ambient light surrounds the scene and illuminates it from

infinity with constant radiance (determined by combining the parameters color and

intensity). It is created by passing the type string

“ambient” to ospNewLight. The ambient light

supports OSP_INTENSITY_QUANTITY_RADIANCE (default) and

OSP_INTENSITY_QUANTITY_IRRADIANCE as

intensityQuantity parameter value.

Note that the SciVis renderer uses ambient lights to control the color and intensity of the computed ambient occlusion (AO).

Sun-Sky Light

The sun-sky light is a combination of a distant light

for the sun and a procedural HDRI light for the sky. It is

created by passing the type string “sunSky” to

ospNewLight. The sun-sky light surrounds the scene and

illuminates it from infinity and can be used for rendering outdoor

scenes. The radiance values are calculated using the Hošek-Wilkie sky

model and solar radiance function. The underlying model of the sun-sky

light returns radiance values and therefore the light only accepts

OSP_INTENSITY_QUANTITY_SCALE as

intensityQuantity parameter value. To rescale the returned

radiance of the sky model the default value for the

intensity parameter is set to 0.025. In

addition to the general parameters the following

special parameters are supported:

| Type | Name | Default | Description |

|---|---|---|---|

| vec3f | up | (0,1,0) | zenith of sky |

| vec3f | direction | (0,−1,0) | main emission direction of the sun |

| float | turbidity | 3 | atmospheric turbidity due to particles, in [1–10] |

| float | albedo | 0.3 | ground reflectance, in [0–1] |

| float | horizonExtension | 0.01 | extend the sky dome by stretching the horizon, fraction of the lower hemisphere to cover, in [0–1] |

The lowest elevation for the sun is restricted to the horizon.

Note that the SciVis renderer only computes illumination from the sun (yet the sky is still shown in the background, like an environment map).

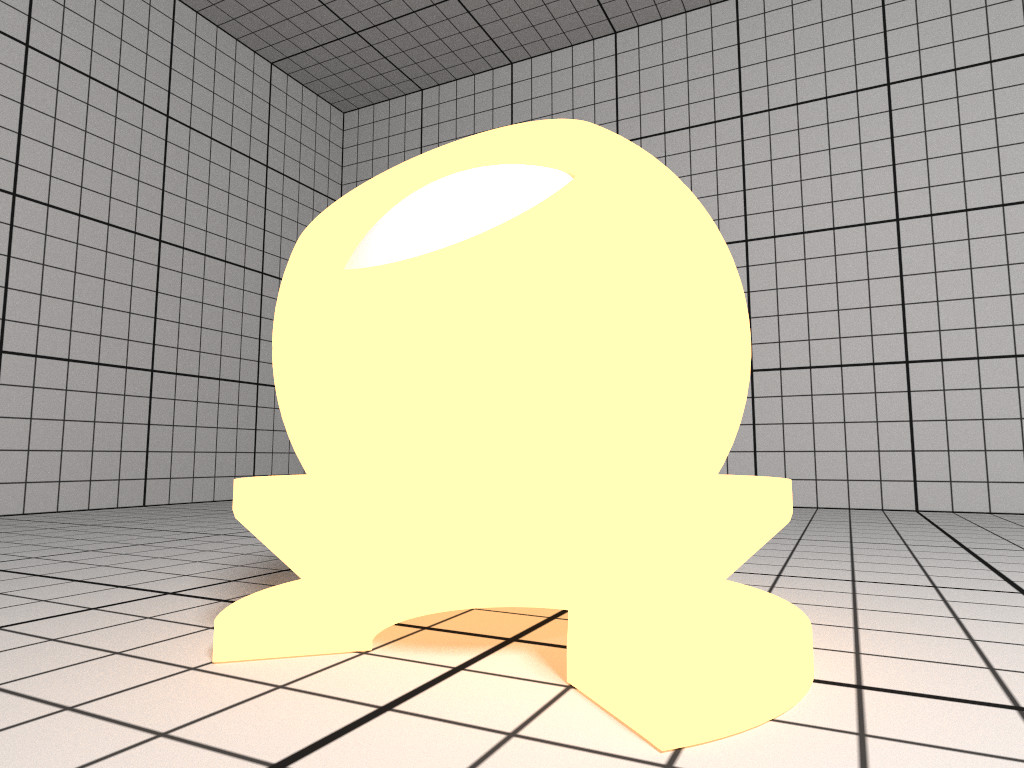

Emissive Objects

The path tracer will consider illumination by geometries which have a light emitting material assigned (for example the Luminous or Principled material).

Materials

Materials describe how light interacts with surfaces; they give

objects their distinctive look. To create a new material of given type

type call

OSPMaterial ospNewMaterial(const char *material_type);

The returned handle can then be used to assign the material to a given geometry with

void ospSetObject(OSPGeometricModel, "material", OSPMaterial);

OBJ Material

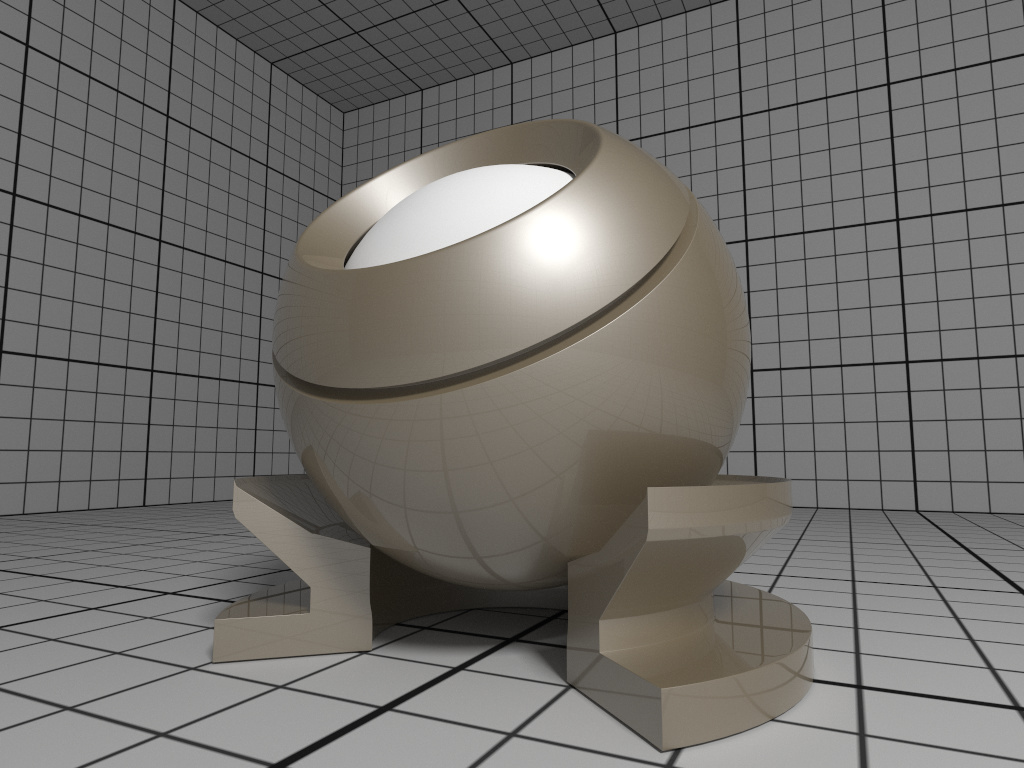

The OBJ material is the workhorse material supported by both the SciVis renderer and the path tracer (the Ambient Occlusion renderer only

uses the kd and d parameters). It offers widely

used common properties like diffuse and specular reflection and is based

on the MTL material

format of Wavefront’s OBJ scene files. To create an OBJ material

pass the type string “obj” to ospNewMaterial.

Its main parameters are

| Type | Name | Default | Description |

|---|---|---|---|

| vec3f | kd | white 0.8 | diffuse color (linear RGB) |

| vec3f | ks | black | specular color (linear RGB) |

| float | ns | 10 | shininess (Phong exponent), usually in [2–10⁴] |

| float | d | opaque | opacity |

| vec3f | tf | black | transparency filter color (linear RGB) |

| OSPTexture | map_bump | NULL | normal map |

In particular when using the path tracer it is important to adhere to

the principle of energy conservation, i.e., that the amount of light

reflected by a surface is not larger than the light arriving. Therefore

the path tracer issues a warning and renormalizes the color parameters

if the sum of kd, ks, and tf is

larger than one in any color channel. Similarly important to mention is

that almost all materials of the real world reflect at most only about

80% of the incoming light. So even for a white sheet of paper or white

wall paint it is better not to set kd larger than 0.8; otherwise

rendering times are unnecessarily long and the contrast in the final

images is low (for example, the corners of a white room would hardly be

discernible, as can be seen in the figure below).
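The energy conservation check can be pictured with the following C sketch. The text does not specify how the path tracer rescales the colors, so the uniform scaling by the largest per-channel sum used here is an assumption, and `rgb`, `scale_rgb` and `renormalize_obj_colors` are hypothetical names rather than OSPRay API.

```c
#include <assert.h>

/* Linear RGB triple, standing in for a vec3f color parameter. */
typedef struct { float r, g, b; } rgb;

/* Scale all three channels of a color by s. */
void scale_rgb(rgb *c, float s)
{
  c->r *= s;
  c->g *= s;
  c->b *= s;
}

/* Returns 1 if kd, ks and tf had to be rescaled, 0 if they were left alone. */
int renormalize_obj_colors(rgb *kd, rgb *ks, rgb *tf)
{
  assert(kd && ks && tf);

  /* per-channel sum of diffuse, specular and transmitted energy */
  float sum_r = kd->r + ks->r + tf->r;
  float sum_g = kd->g + ks->g + tf->g;
  float sum_b = kd->b + ks->b + tf->b;

  float max_sum = sum_r;
  if (sum_g > max_sum) max_sum = sum_g;
  if (sum_b > max_sum) max_sum = sum_b;

  if (max_sum <= 1.0f)
    return 0; /* no channel exceeds one, nothing to do */

  /* ASSUMPTION: one common scale factor for all colors, which keeps the hues */
  float s = 1.0f / max_sum;
  scale_rgb(kd, s);
  scale_rgb(ks, s);
  scale_rgb(tf, s);
  return 1;
}
```

For example, kd = 0.8 and ks = 0.4 sum to 1.2 per channel, so under this assumption both would be scaled by 1/1.2, giving roughly 0.67 and 0.33.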

If present, the color component of geometries is also used for the diffuse color

kd and the alpha component is also used for the opacity

d.

Normal mapping can simulate small geometric features via the texture

map_bump. The normals n in the normal map are with respect

to the local tangential shading coordinate system and are encoded as

½(n+1), thus a texel (0.5,0.5,1)

represents the unperturbed shading normal (0,0,1). Because of this encoding an sRGB

gamma texture format is ignored and normals are

always fetched as linear from a normal map. Note that the orientation of

normal maps is important for a visually consistent look: by convention

OSPRay uses a coordinate system with the origin in the lower left

corner; thus a convexity will look green toward the top of the texture

image (see also the example image of a normal map). If this is not the

case flip the normal map vertically or invert its green channel.
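The ½(n+1) encoding and the green channel inversion can be sketched in a few lines of C. The `vec3` type and the three functions are hypothetical helpers written for this illustration, assuming texel values already converted to floats in [0,1]; they are not OSPRay functions.

```c
#include <assert.h>

/* Minimal 3-component vector, standing in for a texel or a normal. */
typedef struct { float x, y, z; } vec3;

/* Encode a tangent-space normal n in [-1,1]^3 as a texel t = ½(n+1). */
vec3 encode_normal(vec3 n)
{
  vec3 t = { 0.5f * (n.x + 1.0f), 0.5f * (n.y + 1.0f), 0.5f * (n.z + 1.0f) };
  return t;
}

/* Decode a texel t in [0,1]^3 back to the normal n = 2t - 1. */
vec3 decode_normal(vec3 t)
{
  assert(t.x >= 0.0f && t.x <= 1.0f);
  assert(t.y >= 0.0f && t.y <= 1.0f);
  assert(t.z >= 0.0f && t.z <= 1.0f);
  vec3 n = { 2.0f * t.x - 1.0f, 2.0f * t.y - 1.0f, 2.0f * t.z - 1.0f };
  return n;
}

/* Invert the green channel of a texel, which negates the decoded y component;
   useful for normal maps authored with the origin in the upper left corner. */
vec3 flip_green(vec3 t)
{
  t.y = 1.0f - t.y;
  return t;
}
```

Decoding the texel (0.5,0.5,1) yields the unperturbed normal (0,0,1), and applying `flip_green` to every texel has the same effect on shading as flipping the decoded normals in y.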

Note that tf colored transparency is implemented in the

SciVis renderer and the path tracer, but normal mapping with map_bump

is currently supported in the path tracer only.

All parameters (except tf) can be textured by passing a

texture handle, prefixed with

“map_”. The fetched texels are multiplied by the respective

parameter value. If only the texture is given (but not the corresponding

parameter), only the texture is used (the default value of the parameter

is not multiplied). The color textures map_kd and

map_ks are typically in one of the sRGB gamma encoded

formats, whereas textures map_ns and map_d are

usually in a linear format (and only the first component is used).

Additionally, all textures support texture

transformations.
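The rule for combining a parameter with its “map_” texture can be summarized in a short C sketch for a scalar parameter such as d or ns. The `scalar_param` struct and `resolve_param` function are hypothetical and only model the behavior described above; they do not mirror OSPRay's internal data structures.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical description of one scalar material parameter. */
typedef struct {
  float default_value; /* built-in default, used when nothing is set */
  bool  has_value;     /* the application set the parameter itself */
  float value;         /* only meaningful if has_value is true */
  bool  has_map;       /* the application set the matching "map_" texture */
} scalar_param;

/* Effective value at a shading point, given the texel fetched from the
   texture (ignored when no texture is set). */
float resolve_param(const scalar_param *p, float texel)
{
  assert(p);
  if (p->has_map) {
    if (!p->has_value)
      return texel;          /* texture only: the default is not multiplied in */
    return texel * p->value; /* texture and parameter: multiply */
  }
  return p->has_value ? p->value : p->default_value;
}
```

For instance, a texel of 0.5 combined with an explicitly set parameter of 0.5 gives 0.25, whereas the same texel without the parameter gives 0.5 regardless of the default.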

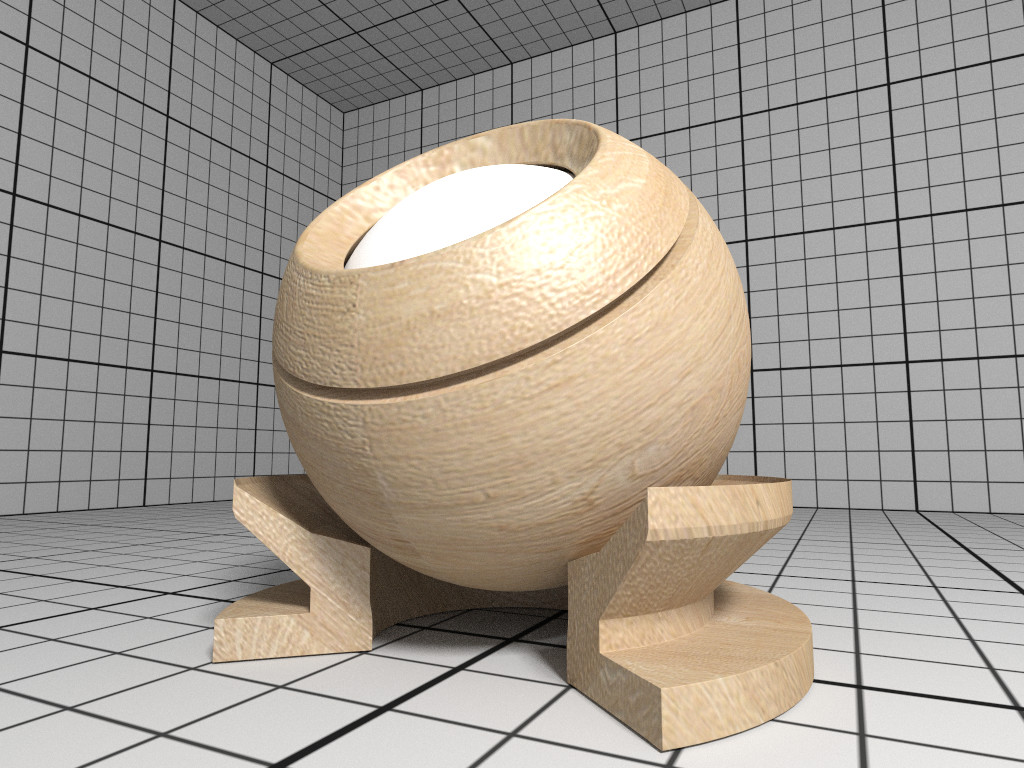

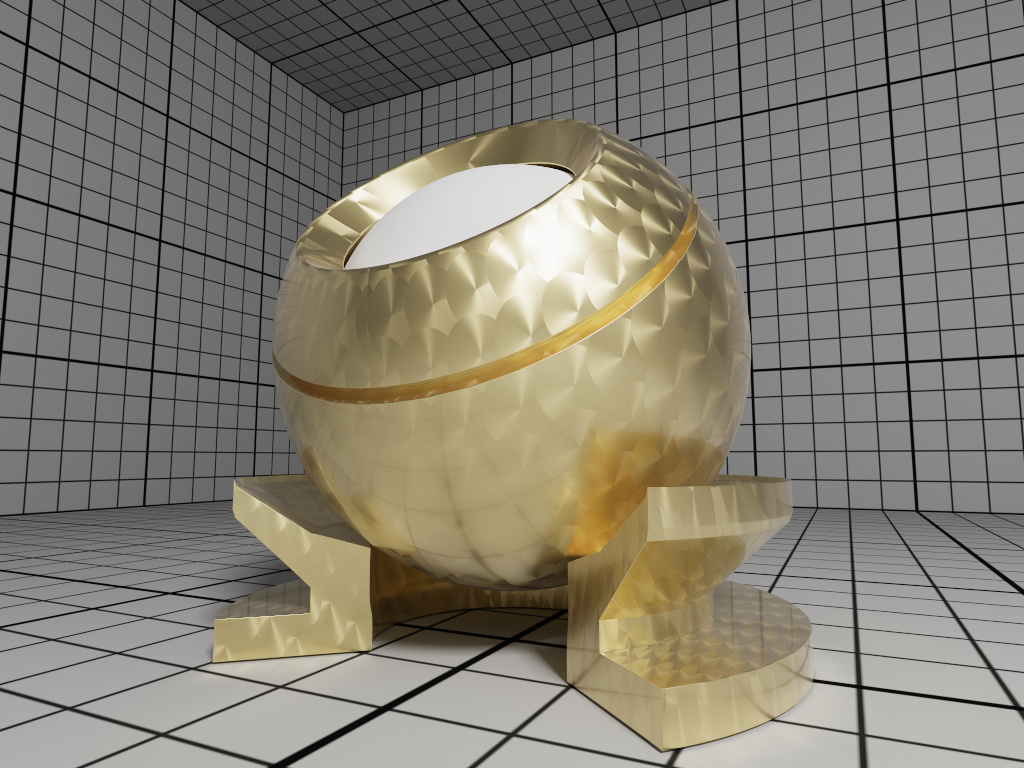

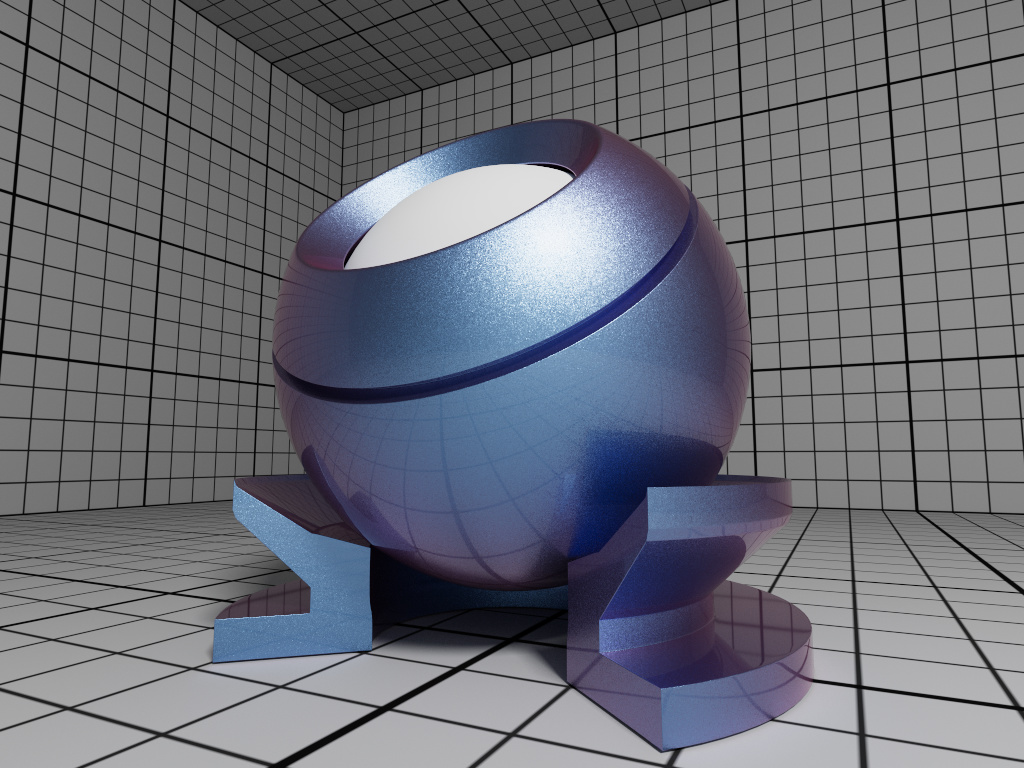

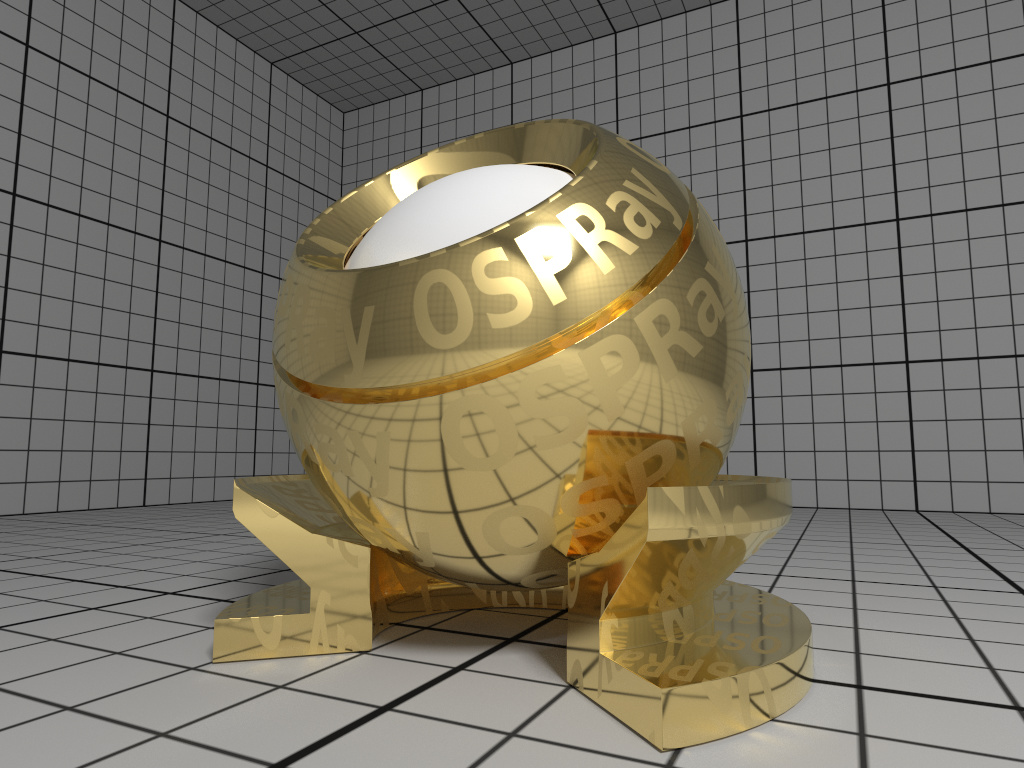

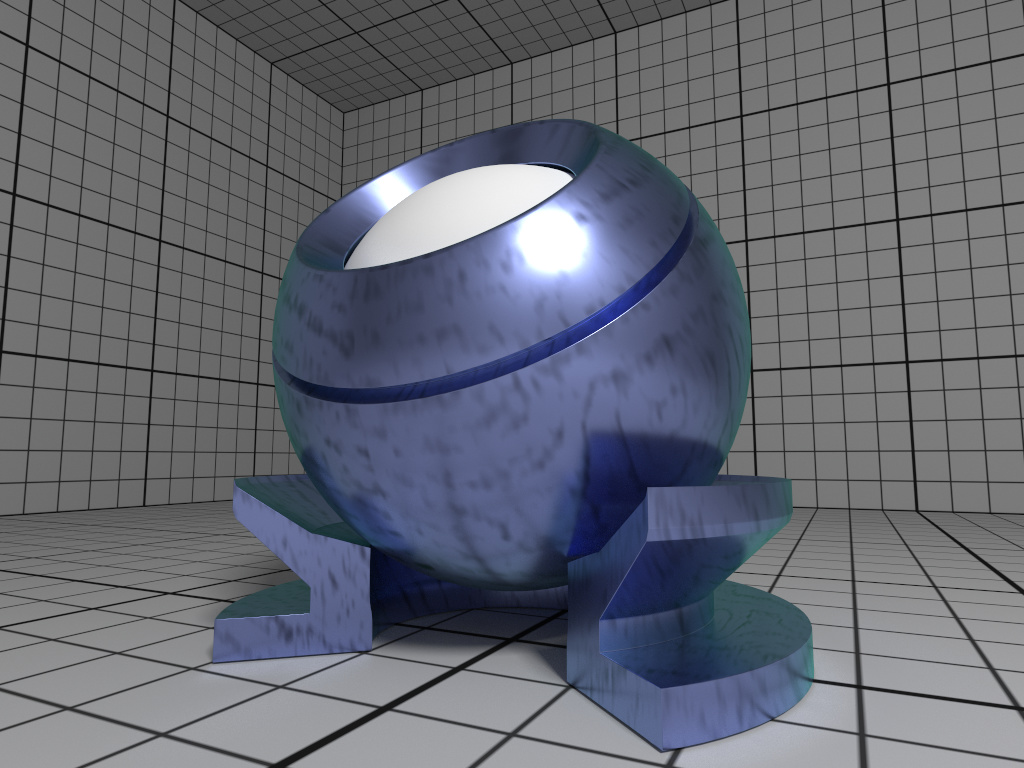

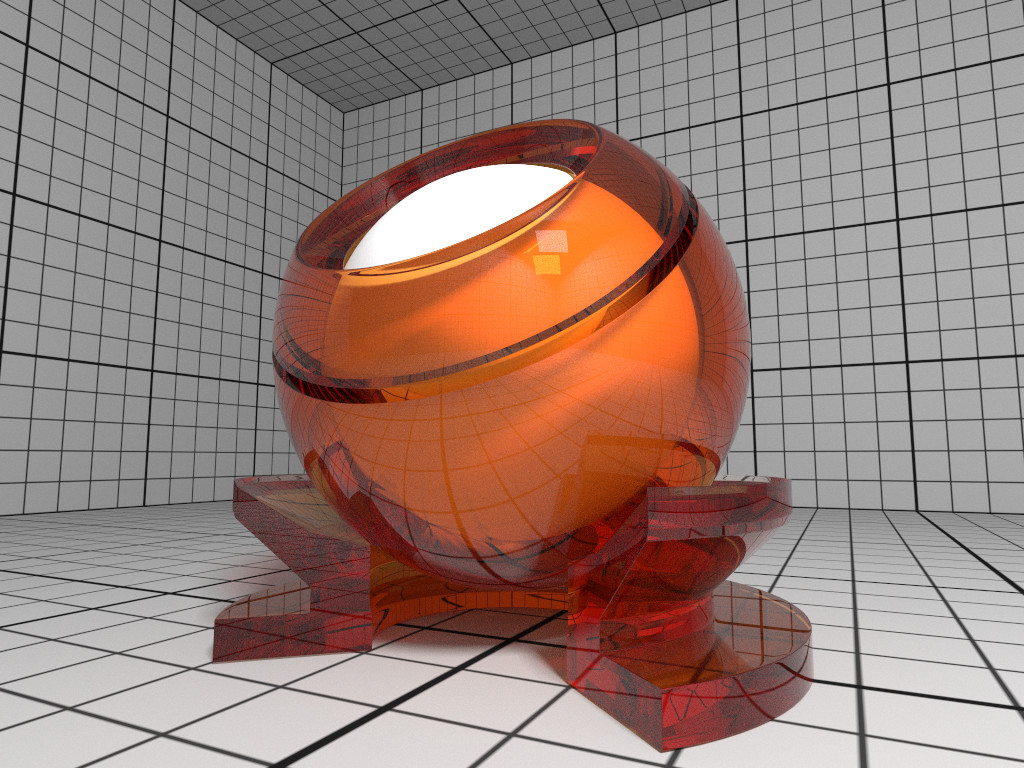

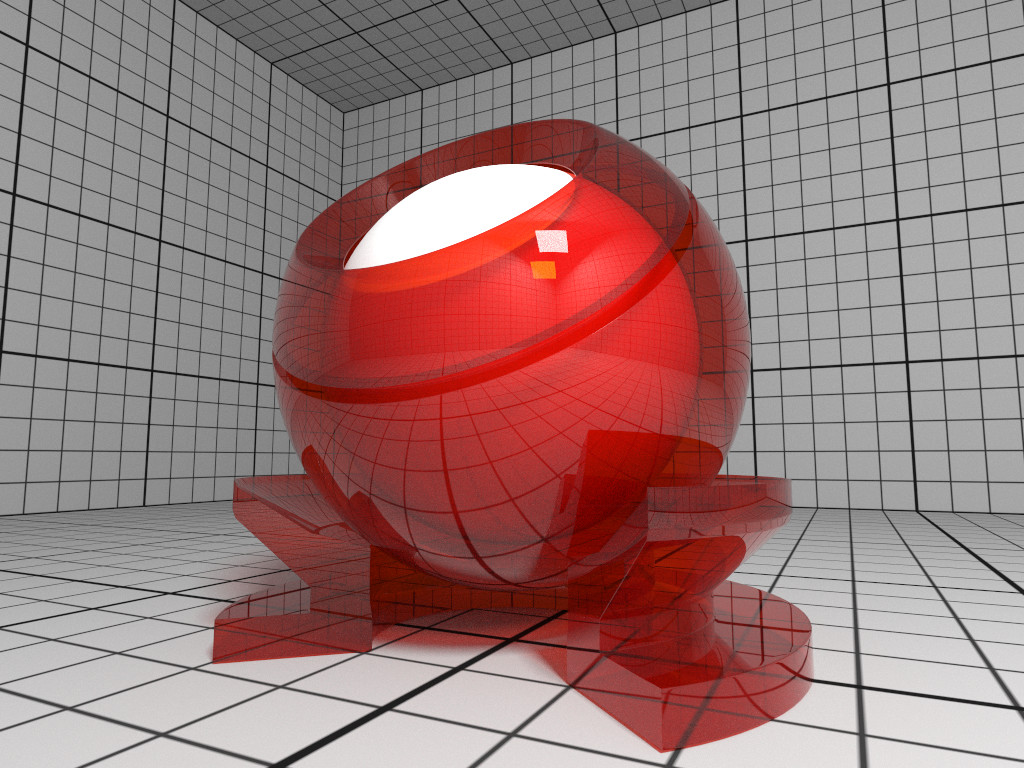

Principled

The Principled material is the most complex material offered by the

path tracer, which is

capable of producing a wide variety of materials (e.g., plastic, metal,

wood, glass) by combining multiple different layers and lobes. It uses

the GGX microfacet distribution with approximate multiple scattering for

dielectrics and metals, uses the Oren-Nayar model for diffuse

reflection, and is energy conserving. To create a Principled material,

pass the type string “principled” to

ospNewMaterial. Its parameters are listed in the table

below.

| Type | Name | Default | Description |

|---|---|---|---|

| vec3f | baseColor | white 0.8 | base reflectivity (diffuse and/or metallic, linear RGB) |

| vec3f | edgeColor | white | edge tint (metallic only, linear RGB) |

| float | metallic | 0 | mix between dielectric (diffuse and/or specular) and metallic (specular only with complex IOR) in [0–1] |

| float | diffuse | 1 | diffuse reflection weight in [0–1] |

| float | specular | 1 | specular reflection/transmission weight in [0–1] |

| float | ior | 1 | dielectric index of refraction |

| float | transmission | 0 | specular transmission weight in [0–1] |

| vec3f | transmissionColor | white | attenuated color due to transmission (Beer’s law, linear RGB) |

| float | transmissionDepth | 1 | distance at which color attenuation is equal to transmissionColor |

| float | roughness | 0 | diffuse and specular roughness in [0–1], 0 is perfectly smooth |

| float | anisotropy | 0 | amount of specular anisotropy in [0–1] |

| float | rotation | 0 | rotation of the direction of anisotropy in [0–1], 1 is going full circle |

| float | normal | 1 | default normal map/scale for all layers |

| float | baseNormal | 1 | base normal map/scale (overrides default normal) |

| bool | thin | false | flag specifying whether the material is thin or solid |

| float | thickness | 1 | thickness of the material (thin only), affects the amount of color attenuation due to specular transmission |

| float | backlight | 0 | amount of diffuse transmission (thin only) in [0–2], 1 is 50% reflection and 50% transmission, 2 is transmission only |

| float | coat | 0 | clear coat layer weight in [0–1] |

| float | coatIor | 1.5 | clear coat index of refraction |

| vec3f | coatColor | white | clear coat color tint (linear RGB) |

| float | coatThickness | 1 | clear coat thickness, affects the amount of color attenuation |

| float | coatRoughness | 0 | clear coat roughness in [0–1], 0 is perfectly smooth |

| float | coatNormal | 1 | clear coat normal map/scale (overrides default normal) |

| float | sheen | 0 | sheen layer weight in [0–1] |

| vec3f | sheenColor | white | sheen color tint (linear RGB) |

| float | sheenTint | 0 | how much sheen is tinted from sheenColor toward baseColor |

| float | sheenRoughness | 0.2 | sheen roughness in [0–1], 0 is perfectly smooth |

| float | opacity | 1 | cut-out opacity/transparency, 1 is fully opaque |

| vec3f | emissiveColor | black | color (and intensity) of the emitted light |

All parameters can be textured by passing a texture handle, prefixed with “map_”

(e.g., “map_baseColor”). Texture

transformations are supported as well.
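The description of transmissionDepth can be read as a per-channel Beer's law. As a sketch, assuming plain exponential attenuation (the implementation may differ in details), let c denote transmissionColor, D transmissionDepth, d the distance traveled inside the material, and σ an absorption coefficient introduced only for this derivation:

```latex
T(d) = e^{-\sigma d}, \qquad T(D) = c
\;\Longrightarrow\; \sigma = -\frac{\ln c}{D}
\;\Longrightarrow\; T(d) = c^{\,d/D}
```

Under this assumption, light that travels a distance of twice transmissionDepth is attenuated to c² per channel, and halving transmissionDepth has the same effect as doubling the distance.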

CarPaint

The CarPaint material is a specialized version of the Principled

material for rendering different types of car paints. To create a

CarPaint material, pass the type string “carPaint” to

ospNewMaterial. Its parameters are listed in the table

below.

| Type | Name | Default | Description |