WO2021031454A1 - Digital twin system and method, and computer device - Google Patents

Digital twin system and method, and computer device

- Publication number

- WO2021031454A1 (application PCT/CN2019/123194)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- virtual

- real

- source data

- scene

- dimensional


Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/122—Improving the 3D impression of stereoscopic images by modifying image signal contents, e.g. by filtering or adding monoscopic depth cues

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T15/00—3D [Three Dimensional] image rendering

- G06T15/04—Texture mapping

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION

- H04L65/00—Network arrangements, protocols or services for supporting real-time applications in data packet communication

- H04L65/10—Architectures or entities

- H04L65/1045—Proxies, e.g. for session initiation protocol [SIP]

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION

- H04L65/00—Network arrangements, protocols or services for supporting real-time applications in data packet communication

- H04L65/60—Network streaming of media packets

- H04L65/75—Media network packet handling

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/161—Encoding, multiplexing or demultiplexing different image signal components

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/167—Synchronising or controlling image signals

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/20—Image signal generators

- H04N13/261—Image signal generators with monoscopic-to-stereoscopic image conversion

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/20—Image signal generators

- H04N13/296—Synchronisation thereof; Control thereof

Definitions

- The embodiments of the present application relate to the field of computer technology, and in particular to a digital twin system, a digital twin method, and a computer device.

- The digital wave represented by new technologies such as the Internet of Things, big data, and artificial intelligence is sweeping the world.

- The physical world and its corresponding digital world are forming two systems that develop in parallel and interact with each other.

- The digital world exists to serve the physical world, and the physical world becomes more efficient and orderly through the digital world. Digital twin technology has emerged in response to this need. It has gradually expanded from manufacturing to urban space and is profoundly affecting urban planning, construction, and development.

- The urban information model based on multi-source data fusion is the core, and the intelligent facilities and perception systems deployed across the city are the prerequisite.

- An intelligent private network that supports the efficient operation of the twin city is the guarantee.

- Digital twin cities require a relatively high level of digitalization and an operating mechanism that supports modeling, achieves collaborative optimization of virtual and real space, and provides multi-dimensional intelligent decision support.

- However, existing digital twin systems only display a three-dimensional model in a three-dimensional display interface and cannot control on-site equipment based on real-time detection.

- The embodiments of the application provide a digital twin system, method, and computer device. While constructing and displaying data scenes, they can also control on-site equipment based on real-time detection, so that scene elements become knowable, measurable, and controllable.

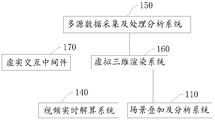

- The embodiments of the present application provide a digital twin system that includes a scene overlay and analysis system, a video real-time solution system, a multi-source data acquisition and processing analysis system, a virtual 3D rendering system, and a virtual-real interaction middleware.

- The scene overlay and analysis system stores the on-site three-dimensional scene and uses it as the base map.

- The video real-time solution system performs real-time solution (decoding) on the received video stream to obtain video frames.

- The multi-source data acquisition and processing analysis system receives and stores multi-source data, performs basic analysis and processing on it, and converts it into a three-dimensional representation format.

- The virtual three-dimensional rendering system uses the three-dimensional scene as the base map and performs the following tasks:
  - It maps and fuses the video frames into the scene.
  - It performs position matching and fusion of the multi-source data in the scene.
  - It maps the virtual-real interaction middleware into the scene.
  - It renders the fused scene and supports interaction with it.
  - In response to an interactive operation on the virtual-real interaction middleware, it generates an interaction instruction and sends that instruction to the virtual-real interaction middleware.

- The virtual-real interaction middleware sends out the interaction instructions issued by the virtual 3D rendering system.

- The video stream is generated by a multi-channel image acquisition system that captures images at multiple on-site locations. It is returned through a multi-channel image real-time return control system.

- The system further includes a data synchronization system that synchronizes the video streams returned by the multi-channel image real-time return control system.

- The data synchronization is specifically time synchronization, so that video streams returned in the same batch fall within the same time slice space.

- The video real-time solution system includes a video frame extraction module and a hardware decoder, where:

- the video frame extraction module uses the FFmpeg library to extract frame data from the video stream;

- the hardware decoder decodes the frame data to obtain video frames.

- The multi-source data acquisition and processing analysis system includes a multi-source data acquisition system and a multi-source data analysis system, where:

- the multi-source data acquisition system receives and stores multi-source data returned by multi-source sensors;

- the multi-source data analysis system performs basic analysis and processing on the multi-source data and converts it into a three-dimensional representation format.

- The virtual-real interaction middleware includes an instruction receiving module and an instruction transmission module, where:

- the instruction receiving module receives interaction instructions issued by the virtual 3D rendering system;

- the instruction transmission module transmits each interaction instruction to the entity control system it points to.

- The rendering position of the multi-source data in the three-dimensional scene corresponds to the position of the virtual-real interaction middleware.

- The virtual three-dimensional rendering system also changes the rendering state of the virtual-real interaction middleware in the three-dimensional scene in response to changes in the multi-source data.

- An embodiment of the present application provides a digital twin method that includes the following steps:

- the scene overlay and analysis system stores the on-site three-dimensional scene and uses it as the base map;

- the video real-time solution system performs real-time solution on the received video stream to obtain video frames;

- the multi-source data acquisition and processing analysis system receives and stores multi-source data, performs basic analysis and processing, and converts the multi-source data into a three-dimensional representation format;

- the virtual three-dimensional rendering system uses the three-dimensional scene as the base map, then:
  - it maps and fuses the video frames into the scene;
  - it matches and fuses the multi-source data in the scene;
  - it maps the virtual-real interaction middleware into the scene;
  - it renders the fused three-dimensional scene and supports interaction with it;

- the virtual three-dimensional rendering system generates interaction instructions in response to interactive operations on the virtual-real interaction middleware and sends them to the virtual-real interaction middleware;

- the virtual-real interaction middleware sends out the interaction instructions issued by the virtual 3D rendering system.
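The six method steps can be pictured as one processing loop. The sketch below is minimal and hypothetical. Each subsystem is modeled as a plain callable. The names `TwinPipeline`, `ingest`, and `on_operation` are illustrative and do not come from the patent, and "fusion" is reduced to collecting frames and converted data next to the stored base map.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TwinPipeline:
    """Hypothetical orchestration of the method steps; each subsystem is injected as a callable."""
    base_map: Dict[str, object]                   # step 1: stored on-site 3D scene
    solve_video: Callable[[bytes], List[object]]  # step 2: video real-time solution
    analyze: Callable[[dict], dict]               # step 3: multi-source analysis and conversion
    send_instruction: Callable[[dict], None]      # step 6: virtual-real interaction middleware
    fused: Dict[str, list] = field(default_factory=lambda: {"frames": [], "data": []})

    def ingest(self, packet: bytes, reading: dict) -> None:
        # Steps 2-4: decode video, convert sensor data, and collect both for rendering on the base map.
        self.fused["frames"].extend(self.solve_video(packet))
        self.fused["data"].append(self.analyze(reading))

    def on_operation(self, target: str, action: str) -> dict:
        # Step 5: an operation on a middleware object in the scene yields an interaction instruction...
        instruction = {"target": target, "action": action}
        # Step 6: ...which the middleware sends out toward the entity control system.
        self.send_instruction(instruction)
        return instruction
```

Injecting callables keeps the sketch testable. A real system would replace them with the decoder, the analysis service, and a network link to the control side.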

- An embodiment of the present application provides a computer device that includes a display screen, an input device, a memory, and one or more processors;

- the display screen displays the virtual-real interaction interface;

- the input device receives interactive operations;

- the memory stores one or more programs;

- when the one or more programs are executed by the one or more processors, the one or more processors implement the digital twin method described in the second aspect.

- An embodiment of the present application provides a storage medium containing computer-executable instructions. When a computer executes these instructions, they perform the digital twin method described in the second aspect.

- In the embodiments of the application, a multi-channel image acquisition system captures images at multiple on-site locations. The multi-channel image real-time return control system returns the video streams in real time, and the streams are then time-synchronized.

- The video real-time solution system processes the synchronized video streams in real time to obtain video frames.

- Multi-source sensors monitor the on-site environment and generate the corresponding multi-source data. This data is transmitted to the multi-source data acquisition and processing analysis system, which analyzes and processes it and converts it into a three-dimensional representation format that can be displayed in the three-dimensional scene. Then, with the on-site three-dimensional scene as the base map, the virtual three-dimensional rendering system matches, maps, and fuses the video frames and multi-source data into the three-dimensional scene and renders the fused scene. The fused scene can then be visualized and displayed intuitively, and it can also be interacted with through the virtual three-dimensional rendering system.

- The interaction instructions generated by interactive operations are sent to the entity control system through the virtual-real interaction middleware, and the entity control system controls the field equipment in response.

- The digital twin system maps, fuses, and visualizes the three-dimensional scene, real-time video frames, and on-site multi-source data, which makes the three-dimensional display interface more realistic and comprehensive.

- The virtual three-dimensional rendering system realizes the integration of the virtual and the real. The virtual-real interaction middleware sends interaction instructions to the entity control system that controls the field equipment. The field equipment is thereby controlled, and the scene becomes truly knowable, measurable, and controllable.

- FIG. 1 is a schematic structural diagram of a digital twin system provided by an embodiment of the present application.

- FIG. 2 is a schematic structural diagram of another digital twin system provided by an embodiment of the present application.

- FIG. 3 is a schematic structural diagram of another digital twin system provided by an embodiment of the present application.

- FIG. 4 is a schematic flowchart of a digital twin method provided by an embodiment of the present application.

- FIG. 5 is a schematic structural diagram of a computer device provided by an embodiment of the present application.

- FIG. 1 shows a schematic structural diagram of a digital twin system provided by an embodiment of the present application.

- The digital twin system includes a scene overlay and analysis system 110, a video real-time solution system 140, a multi-source data acquisition and processing analysis system 150, a virtual three-dimensional rendering system 160, and a virtual-real interaction middleware 170, where:

- The scene overlay and analysis system 110 stores the on-site three-dimensional scene and uses it as the base map.

- The 3D scene can be imported from an external server or obtained through local 3D modeling. Once obtained, it is saved locally. It serves as the base map onto which the other data of interest are fused, and as the starting point for basic analysis.

- The video real-time solution system 140 performs real-time solution on the received video stream to obtain video frames.

- The multi-source data acquisition and processing analysis system 150 receives and stores multi-source data, performs basic analysis and processing, and converts the multi-source data into a three-dimensional representation format.

- The virtual three-dimensional rendering system 160 uses the three-dimensional scene as the base map and performs the following tasks:
  - It maps and fuses video frames into the scene.
  - It performs position matching and fusion of multi-source data in the scene.
  - It maps the virtual-real interaction middleware 170 into the scene.
  - It renders the fused scene and supports interaction with it.
  - In response to an interactive operation on the virtual-real interaction middleware 170, it generates an interaction instruction and sends that instruction to the virtual-real interaction middleware 170.

- When the virtual three-dimensional rendering system 160 maps and fuses video frames into the three-dimensional scene, it first determines the mapping relationship between pixels in a video frame and three-dimensional points in the scene. It then performs texture mapping of the video frame in the scene according to this relationship. Finally, it applies smooth transition processing to the overlapping regions of the texture maps, which fuses the video frame into the three-dimensional scene.
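The patent does not say how the pixel-to-3D mapping relationship is obtained. One common choice, assumed here, is a calibrated pinhole camera with rotation `R`, translation `trans`, focal lengths `fx`/`fy`, and principal point `cx`/`cy`. Under that model, each scene vertex projects to a pixel, and the normalized pixel position becomes the vertex's texture coordinate. The function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project_point(p: Vec3, R: Sequence[Sequence[float]], trans: Vec3,
                  fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pixel (u, v) that sees scene point p under an assumed pinhole model, or None if p is behind the camera."""
    # Scene (world) -> camera coordinates: X_c = R @ p + trans
    xc, yc, zc = (sum(R[i][j] * p[j] for j in range(3)) + trans[i] for i in range(3))
    if zc <= 0:
        return None
    # Perspective division, then focal lengths and principal point
    return fx * xc / zc + cx, fy * yc / zc + cy


def texture_coord(p: Vec3, R: Sequence[Sequence[float]], trans: Vec3,
                  fx: float, fy: float, cx: float, cy: float,
                  width: int, height: int) -> Optional[Tuple[float, float]]:
    """Normalized texture coordinate of a scene vertex in a width x height frame, or None if unseen."""
    uv = project_point(p, R, trans, fx, fy, cx, cy)
    if uv is None:
        return None
    u, v = uv
    if not (0 <= u < width and 0 <= v < height):
        return None  # vertex projects outside the frame, so this camera cannot texture it
    return u / width, v / height
```

This sketch ignores occlusion. A production renderer would typically add a depth test so that a camera does not paint surfaces hidden behind nearer geometry.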

- When the virtual 3D rendering system 160 performs position matching and fusion of multi-source data in the 3D scene, it relies on the position correspondence between the multi-source sensors and the virtual-real interaction middleware 170. It uses the position information or the device identification number carried in the multi-source data to determine the position in the 3D scene to which the data corresponds. It then maps the data into the 3D scene in the target expression form. The rendering position of the multi-source data therefore corresponds to the position of the virtual-real interaction middleware 170, which completes the position matching and fusion of multi-source data in the 3D scene.
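A minimal sketch of the position lookup described above, with three assumptions. First, each record is a dict. Second, explicit position information takes precedence over the device identification number (the patent says only "or"). Third, a hypothetical registry maps device IDs to the scene positions of their middleware objects.

```python
from typing import Dict, Optional, Tuple

Position = Tuple[float, float, float]

# Hypothetical registry: device ID -> position of the matching
# virtual-real interaction middleware object in the 3D scene.
MIDDLEWARE_POSITIONS: Dict[str, Position] = {
    "pump-01": (12.0, 3.5, 0.0),
    "valve-07": (40.2, 8.0, 1.2),
}


def locate(record: dict, registry: Dict[str, Position] = MIDDLEWARE_POSITIONS) -> Optional[Position]:
    """Scene position at which a multi-source data record should be rendered, or None if unknown."""
    # Prefer explicit position information carried in the record (assumed precedence).
    if "position" in record:
        x, y, z = record["position"]
        return float(x), float(y), float(z)
    # Otherwise fall back to the device identification number.
    return registry.get(record.get("device_id"))
```

Returning `None` for unknown devices lets the caller decide whether to log, queue, or drop a record it cannot place.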

- The virtual-real interaction middleware 170 sends out the interaction instructions issued by the virtual 3D rendering system 160.

- An interaction instruction points to the equipment to be controlled and instructs the field equipment to perform the corresponding action.

- The video real-time solution system 140 performs real-time solution on the received video stream to obtain video frames. Meanwhile, the multi-source data acquisition and processing analysis system 150 processes the received multi-source data and converts it into a three-dimensional representation format. The virtual 3D rendering system 160 then maps, fuses, and visualizes the video frames, multi-source data, and 3D scene for intuitive display, and the rendered 3D scene supports interactive operations.

- The interaction instructions generated by these operations are sent out through the virtual-real interaction middleware 170. The field device can execute the corresponding actions in response, which realizes control of the field device.

- FIG. 2 shows a schematic structural diagram of another digital twin system provided by an embodiment of the present application.

- The digital twin system includes a scene overlay and analysis system 110, a video real-time solution system 140, a multi-source data acquisition and processing analysis system 150, a virtual three-dimensional rendering system 160, and a virtual-real interaction middleware 170, where:

- The scene overlay and analysis system 110 stores the on-site three-dimensional scene and uses it as the base map.

- The video real-time solution system 140 performs real-time solution on the received video stream to obtain video frames.

- The video real-time solution system 140 includes a video frame extraction module 141 and a hardware decoder 142, where:

- the video frame extraction module 141 uses the FFmpeg library to extract frame data from the video stream.

- FFmpeg is a set of open-source computer programs that can record and convert digital audio and video and turn them into streams, which meets the frame-extraction requirement of this embodiment.

- The hardware decoder 142 decodes the frame data to obtain video frames.

- The hardware decoder 142 is an independent video decoding module built into an NVIDIA graphics card.

- The video stream is generated by the multi-channel image acquisition system 190 (consisting of multi-channel video acquisition devices), which captures images at multiple on-site locations.

- The multi-source data acquisition and processing analysis system 150 receives and stores multi-source data, performs basic analysis and processing, and converts the multi-source data into a three-dimensional representation format.

- The multi-source data acquisition and processing analysis system 150 includes a multi-source data acquisition system 151 and a multi-source data analysis system 152, where:

- the multi-source data acquisition system 151 receives and stores multi-source data returned by multi-source sensors.

- The multi-source sensors are selected according to the targets, equipment, and actual conditions of the site, and are installed at the corresponding positions.

- A multi-source data access switch is installed on site. It accesses and aggregates the monitoring data from the multi-source sensors and transmits it over a wired or wireless link to the multi-source data access switch on the side of the multi-source data acquisition system 151.

- That access switch forwards the received multi-source data to the multi-source data acquisition system 151, which in turn sends it to the multi-source data analysis system 152.

- The multi-source data analysis system 152 performs basic analysis and processing on the multi-source data and converts it into a three-dimensional representation format.

- The multi-source data analysis system 152 receives multi-source data as required and performs basic analysis and processing on it, such as A/D conversion, threshold analysis, trend analysis, early-warning analysis, numerical range, and working status. The three-dimensional representation format should be understood as the format corresponding to the target expression form in the virtual three-dimensional rendering system 160. The target expression form may be one or a combination of the following: the real-time value of the monitored data, its real-time status, a data table, a color, or similar forms.

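As an illustration of two of the listed analyses, the sketch below performs A/D conversion followed by threshold analysis and maps the result onto a target expression form made of a value, a status, and a color. The 12-bit resolution, the 3.3 V reference, the thresholds, and the color names are all assumptions, not values from the patent.

```python
from dataclasses import dataclass


@dataclass
class Expression:
    value: float  # real-time value of the monitored quantity (volts here)
    status: str   # real-time status
    color: str    # color the renderer would apply to the middleware object


def adc_to_value(raw: int, bits: int = 12, vref: float = 3.3) -> float:
    """A/D conversion: scale a raw ADC count to volts (12-bit converter, 3.3 V reference assumed)."""
    full_scale = (1 << bits) - 1
    if not 0 <= raw <= full_scale:
        raise ValueError(f"raw count {raw} outside 0..{full_scale}")
    return raw * vref / full_scale


def to_expression(raw: int, warn: float, alarm: float) -> Expression:
    """Threshold analysis mapped onto a target expression form (hypothetical thresholds and colors)."""
    value = adc_to_value(raw)
    if value >= alarm:
        return Expression(value, "alarm", "red")
    if value >= warn:
        return Expression(value, "warning", "yellow")
    return Expression(value, "normal", "green")
```

A change in `status` or `color` is the kind of data change that could drive the renderer to alter the middleware object's rendering state.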

- The virtual three-dimensional rendering system 160 uses the three-dimensional scene as the base map and performs the following tasks:
  - It maps and fuses video frames into the scene.
  - It performs position matching and fusion of multi-source data in the scene.
  - It maps the virtual-real interaction middleware 170 into the scene.
  - It renders the fused scene and supports interaction with it.
  - In response to an interactive operation on the virtual-real interaction middleware 170, it generates an interaction instruction and sends that instruction to the virtual-real interaction middleware 170.

- The virtual-real interaction middleware 170 sends out the interaction instructions issued by the virtual 3D rendering system 160.

- The virtual-real interaction middleware 170 includes an instruction receiving module 171 and an instruction transmission module 172.

- The instruction receiving module 171 receives interaction instructions issued by the virtual 3D rendering system 160. The instruction transmission module 172 transmits each interaction instruction to the device it points to.
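A minimal sketch of how modules 171 and 172 might divide the work. Received instructions are queued, and on transmission each one is routed to a channel registered for its target. The class and method names and the in-process callables standing in for entity control systems are hypothetical. The patent does not specify the transport.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict


@dataclass(frozen=True)
class Instruction:
    target: str  # device / entity control system the instruction points to
    action: str  # e.g. "open", "close"


class InteractionMiddleware:
    """Sketch of an instruction receiving module (171) and transmission module (172)."""

    def __init__(self) -> None:
        self._controllers: Dict[str, Callable[[Instruction], None]] = {}
        self._inbox: Deque[Instruction] = deque()

    def register(self, target: str, send: Callable[[Instruction], None]) -> None:
        # Bind a target ID to the channel of its entity control system.
        self._controllers[target] = send

    def receive(self, instruction: Instruction) -> None:
        # Instruction receiving module: accept an instruction issued by the renderer.
        self._inbox.append(instruction)

    def transmit(self) -> int:
        # Instruction transmission module: route queued instructions to their targets, in order.
        sent = 0
        while self._inbox:
            instr = self._inbox[0]
            controller = self._controllers.get(instr.target)
            if controller is None:
                # Leave the unroutable instruction queued so that it is not silently lost.
                raise KeyError(f"no entity control system registered for {instr.target!r}")
            controller(instr)
            self._inbox.popleft()
            sent += 1
        return sent
```

Splitting `receive` from `transmit` mirrors the two modules. It also lets the rendering side return immediately while delivery happens on its own schedule.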

- The video frame extraction module 141 and the hardware decoder 142 process the received video stream in real time to obtain video frames.

- The multi-source data acquisition system 151 receives the multi-source data, which the multi-source data analysis system 152 processes and converts into a three-dimensional representation format. The virtual three-dimensional rendering system 160 then maps, fuses, and visualizes the video frames, multi-source data, and three-dimensional scene for intuitive display. Interactive operations can be performed on the rendered scene, and the interaction instructions they generate are sent out.

- The field device can perform the corresponding actions in response to the interaction instructions, which realizes control of the field device.

- FIG. 3 shows a schematic structural diagram of another digital twin system provided by an embodiment of the present application.

- The digital twin system includes a scene overlay and analysis system 110, a data synchronization system 120, a video real-time solution system 140, a multi-source data acquisition and processing analysis system 150, a virtual 3D rendering system 160, and a virtual-real interaction middleware 170.

- The data synchronization system 120 is connected to the multi-channel image real-time return control system 130;

- the multi-channel image real-time return control system 130 is connected to the multi-channel image acquisition system 190;

- the virtual-real interaction middleware 170 is connected to the entity control system 180.

- The scene overlay and analysis system 110 stores the on-site three-dimensional scene and uses it as the base map.

- The 3D scene can be imported from an external server or obtained through local 3D modeling. Once obtained, it is saved locally. It serves as the base map onto which the other data of interest are fused, and as the starting point for basic analysis.

- The scene overlay and analysis system 110 divides the three-dimensional data of the scene into blocks. When the on-site scene is updated, the system receives a three-dimensional update data package for the corresponding block, and this package contains the three-dimensional data of the updated block.

- The scene overlay and analysis system 110 replaces the three-dimensional data of that block with the data in the update package, which keeps the three-dimensional scene current.
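The block-wise update can be sketched as follows: the scene is stored as a map from block ID to that block's 3D data, and an update package replaces exactly one entry. The `version` field, used to ignore stale or duplicate packages, is an added assumption. The patent only says the block's data is replaced. The class names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Hashable


@dataclass
class UpdatePackage:
    block_id: Hashable  # which block of the scene changed
    data: bytes         # full 3D data of the updated block
    version: int        # hypothetical counter used to drop stale packages


class BlockedScene:
    """3D scene stored as independently replaceable blocks."""

    def __init__(self, blocks: Dict[Hashable, bytes]) -> None:
        self.blocks = dict(blocks)
        self.versions: Dict[Hashable, int] = {k: 0 for k in blocks}

    def apply(self, pkg: UpdatePackage) -> bool:
        """Replace one block's 3D data; all other blocks are left untouched."""
        if pkg.block_id not in self.blocks:
            raise KeyError(f"unknown block {pkg.block_id!r}")
        if pkg.version <= self.versions[pkg.block_id]:
            return False  # stale or duplicate package
        self.blocks[pkg.block_id] = pkg.data
        self.versions[pkg.block_id] = pkg.version
        return True
```

Because only the affected block is transferred and swapped, an update costs roughly the size of that block rather than the whole scene.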

- The multi-channel image acquisition system 190 includes multi-channel video acquisition devices, which capture images from multiple on-site locations and generate video streams.

- The multi-channel video acquisition devices should support a maximum of no fewer than 100 video capture devices (such as cameras).

- Each video capture device should have no fewer than 2 million pixels, with a resolution of 1920 × 1080.

- The following functions can also be selected according to actual needs: integrated ICR dual-filter day/night switching, defog, electronic image stabilization, switching among multiple white balance modes, video auto-iris, H.264 encoding support, etc.

- Each video capture device monitors a different area of the site. Together, the devices should cover the site area corresponding to the three-dimensional scene, i.e., every on-site area of interest should be monitored.
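A 1920 × 1080 sensor has 1920 × 1080 = 2,073,600 pixels, so it satisfies the "no fewer than 2 million pixels" requirement. The sketch below checks a camera inventory against the two stated bounds. The `Camera` type and the function name are hypothetical.

```python
from dataclasses import dataclass
from typing import List

MIN_PIXELS = 2_000_000  # "no fewer than 2 million pixels"
MIN_CHANNELS = 100      # must support at least 100 capture devices


@dataclass
class Camera:
    name: str
    width: int
    height: int


def spec_problems(cameras: List[Camera], supported_channels: int) -> List[str]:
    """Violations of the stated acquisition requirements; an empty list means compliant."""
    problems = []
    if supported_channels < MIN_CHANNELS:
        problems.append(f"supports {supported_channels} channels, need >= {MIN_CHANNELS}")
    for cam in cameras:
        pixels = cam.width * cam.height
        if pixels < MIN_PIXELS:
            problems.append(f"{cam.name}: {pixels} px < {MIN_PIXELS}")
    return problems
```

For example, a 1280 × 720 camera (921,600 pixels) would be flagged.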

- The multi-channel image real-time return control system 130 returns the video streams generated by the multi-channel image acquisition system 190.

- The effective transmission distance of the multi-channel image real-time return control system 130 should be no less than 3 km,

- the video bitrate should be no less than 8 Mbps,

- and the latency should be no more than 80 ms, so that the display stays timely.
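The three return-link bounds can be written as one predicate. The aggregate-bandwidth helper below relies on an interpretation that the text does not state: that the 8 Mbps figure applies to each camera stream. Under that reading, 100 streams would need at least 100 × 8 = 800 Mbps at the aggregation point. The names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReturnLink:
    distance_km: float   # effective transmission distance
    bitrate_mbps: float  # video bitrate carried
    latency_ms: float    # end-to-end delay


def meets_return_requirements(link: ReturnLink) -> bool:
    """True if the link satisfies all three stated bounds (>= 3 km, >= 8 Mbps, <= 80 ms)."""
    return link.distance_km >= 3.0 and link.bitrate_mbps >= 8.0 and link.latency_ms <= 80.0


def aggregate_mbps(streams: int, per_stream_mbps: float = 8.0) -> float:
    """Uplink needed if every stream runs at the per-stream rate (per-stream reading is assumed)."""
    return streams * per_stream_mbps
```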

- An access switch on the side of the multi-channel image acquisition system 190 collects the video streams generated by that system and aggregates them to an aggregation switch or middle station. The aggregation switch or middle station preprocesses the video streams and sends them to the multi-channel image real-time return control system 130, which returns them to the data synchronization system 120 for synchronization.

- The aggregation switch can be connected to the access switches on both sides in a wired and/or wireless manner.

- Wired connections can use RS-232, RS-485, RJ45, a bus, etc.

- For wireless connections between nearby devices, short-range communication modules such as WiFi, ZigBee, and Bluetooth can be used.

- Long-distance wireless connections can use wireless bridges, 4G modules, 5G modules, etc.

- The data synchronization system 120 receives the video streams returned by the multi-channel image real-time return control system 130 and synchronizes them.

- The synchronized video streams are sent to the video real-time solution system 140 for solution.

- The data synchronization is specifically time synchronization, so that video streams returned in the same batch fall within the same time slice space.

- The data synchronization system 120 should support synchronizing the video streams returned by a maximum of no fewer than 100 video capture devices.

- The time slice space can be understood as an abstraction of a number of fixed-size intervals of real time.
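One way to realize the time-slice idea is sketched below. Time is cut into fixed intervals `[k*slice_ms, (k+1)*slice_ms)`, each frame is bucketed by its capture timestamp, and a slice is kept only when every stream has a frame in it. The 40 ms slice (one frame at 25 fps) and the keep-earliest rule are assumptions. The patent gives neither.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Frame = Tuple[str, int]  # (stream_id, capture timestamp in ms)


def slice_index(ts_ms: int, slice_ms: int) -> int:
    # Each fixed-size interval [k*slice_ms, (k+1)*slice_ms) is one time slice.
    return ts_ms // slice_ms


def synchronize(frames: Iterable[Frame], streams: List[str],
                slice_ms: int = 40) -> Dict[int, Dict[str, int]]:
    """Group frames by time slice and keep only the slices in which every stream has a frame."""
    buckets: Dict[int, Dict[str, int]] = defaultdict(dict)
    for stream_id, ts in frames:
        bucket = buckets[slice_index(ts, slice_ms)]
        # Keep the earliest frame of each stream within a slice.
        if stream_id not in bucket or ts < bucket[stream_id]:
            bucket[stream_id] = ts
    return {k: v for k, v in sorted(buckets.items()) if all(s in v for s in streams)}
```

Each surviving slice is one synchronized "batch" that can be decoded and texture-mapped together. This scheme assumes the capture clocks are already aligned, for example via NTP or PTP.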

- The video real-time solution system 140 performs real-time solution on the video streams to obtain video frames.

- The video real-time solution system 140 includes a video frame extraction module 141 and a hardware decoder 142, where:

- the video frame extraction module 141 uses the FFmpeg library to extract frame data from the video stream.

- FFmpeg is a set of open-source computer programs that can record and convert digital audio and video and turn them into streams, which meets the frame-extraction requirement of this embodiment.

- The hardware decoder 142 decodes the frame data to obtain video frames.

- The hardware decoder 142 is an independent video decoding module built into an NVIDIA graphics card. It supports H.264 and H.265 decoding at resolutions up to 8K.
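The patent uses the FFmpeg library for extraction and a separate NVIDIA hardware decoder. The sketch below substitutes the `ffmpeg` command-line tool driving NVDEC through its `h264_cuvid` / `hevc_cuvid` decoders. These decoders exist only in FFmpeg builds with CUDA/cuvid support. In this sketch, one process performs both extraction and decoding, and it writes raw `bgr24` frames to stdout. The function names are hypothetical.

```python
from typing import List

# FFmpeg decoders backed by NVIDIA NVDEC (available only in builds with CUDA/cuvid enabled).
CUVID_DECODERS = {"h264": "h264_cuvid", "hevc": "hevc_cuvid"}


def build_decode_cmd(url: str, codec: str) -> List[str]:
    """Argument list for an ffmpeg process that GPU-decodes one stream to raw BGR frames on stdout."""
    if codec not in CUVID_DECODERS:
        raise ValueError(f"unsupported codec {codec!r}; expected one of {sorted(CUVID_DECODERS)}")
    cmd = ["ffmpeg"]
    if url.startswith("rtsp://"):
        cmd += ["-rtsp_transport", "tcp"]  # RTSP input option: carry RTP over TCP
    cmd += [
        "-c:v", CUVID_DECODERS[codec],     # placed before -i, so it selects the input decoder
        "-i", url,
        "-f", "rawvideo",                  # no container: bare frames
        "-pix_fmt", "bgr24",               # convert decoded frames to packed 24-bit BGR
        "pipe:1",                          # write to stdout
    ]
    return cmd


def frame_bytes(width: int, height: int) -> int:
    """Size of one bgr24 frame on the pipe: 3 bytes per pixel."""
    return width * height * 3
```

In use, you would start the process with `subprocess.Popen(build_decode_cmd(url, "h264"), stdout=subprocess.PIPE)` and read `frame_bytes(1920, 1080)` bytes (6,220,800) per frame from `proc.stdout`. H.265 streams use FFmpeg's codec name `hevc`.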

- The multi-source data acquisition and processing analysis system 150 receives and stores the multi-source data returned by the multi-source sensors, performs basic analysis and processing, and converts the multi-source data into a three-dimensional representation format.

- The multi-source data acquisition and processing analysis system 150 includes a multi-source data acquisition system 151 and a multi-source data analysis system 152, where:

- the multi-source data acquisition system 151 receives and stores multi-source data returned by multi-source sensors.

- The multi-source sensors include one or more of the following: resistive sensors, capacitive sensors, inductive sensors, voltage sensors, pyroelectric sensors, impedance sensors, magnetoelectric sensors, photoelectric sensors, resonant sensors, Hall sensors, ultrasonic sensors, isotope sensors, electrochemical sensors, microwave sensors, etc.

- The multi-source sensors are selected according to the targets, equipment, and actual conditions of the site, and are installed at the corresponding positions.

- A multi-source data access switch is installed on site. It accesses and aggregates the monitoring data from the multi-source sensors and transmits it over a wired or wireless link to the multi-source data access switch on the side of the multi-source data acquisition system 151.

- That access switch forwards the received multi-source data to the multi-source data acquisition system 151, which in turn sends it to the multi-source data analysis system 152.

- The multi-source data analysis system 152 performs basic analysis and processing on the multi-source data and converts it into a three-dimensional representation format.

- The multi-source data analysis system 152 receives multi-source data as required and performs basic analysis and processing on it, such as A/D conversion, threshold analysis, trend analysis, early-warning analysis, numerical range, and working status. The three-dimensional representation format should be understood as the format corresponding to the target expression form in the virtual three-dimensional rendering system 160. The target expression form may be one or a combination of the following: the real-time value of the monitored data, its real-time status, a data table, a color, or similar forms.


- The virtual three-dimensional rendering system 160 uses the three-dimensional scene as the base map. It maps and fuses the video frames into the scene, matches and fuses the multi-source data into the scene, and maps the virtual-real interaction middleware 170 into the scene, then renders the fused three-dimensional scene and handles interaction with it. In response to an interactive operation on the virtual-real interaction middleware 170, it generates an interaction instruction and sends it to the virtual-real interaction middleware 170.

- The mapping relationship between pixels in the video frame and 3D points in the 3D scene is determined, and the video frame is texture-mapped into the 3D scene according to this mapping relationship.

- Overlapping regions of the texture mapping are given a smooth transition, so that the video frame blends into the three-dimensional scene.
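
The source does not say how the pixel-to-3D-point mapping is obtained. A common choice, assumed here, is a calibrated pinhole camera with intrinsics `K` and pose `(R, t)`, for which a scene point `X` projects to pixel `(u, v)` via `s * [u, v, 1]^T = K (R X + t)`. For the smooth transition in overlapping regions, the sketch uses feathering, a swapped-in technique that may differ from the authors' method: each camera's color sample is weighted by its distance from that camera's image border, and overlapping samples are averaged with those weights. The function names are hypothetical.

```python
Matrix = list[list[float]]


def project(point, K: Matrix, R: Matrix, t):
    """Project a 3D scene point to (u, v) pixel coordinates with a pinhole model.

    Returns None when the point lies behind the camera.
    """
    # Camera coordinates: Xc = R X + t
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        return None
    # Homogeneous pixel coordinates: K Xc, then divide by the depth term
    p = [sum(K[i][j] * xc[j] for j in range(3)) for i in range(3)]
    return p[0] / p[2], p[1] / p[2]


def edge_weight(u, v, width, height):
    """Feathering weight: 1.0 at the image center, falling linearly to 0.0 at the border."""
    if not (0 <= u <= width - 1 and 0 <= v <= height - 1):
        return 0.0
    dx = min(u, width - 1 - u) / ((width - 1) / 2)
    dy = min(v, height - 1 - v) / ((height - 1) / 2)
    return min(dx, dy)


def blend(samples):
    """Weighted average of (rgb, weight) samples from cameras that overlap at one 3D point."""
    total = sum(w for _, w in samples)
    if total == 0:
        return None
    return tuple(sum(rgb[k] * w for rgb, w in samples) / total for k in range(3))
```

In use, each scene vertex or texel would be projected into every camera, sampled wherever `edge_weight` is positive, and blended; the calibration matrices would come from a camera calibration step that the source does not describe.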

- The position correspondence between each multi-source sensor and the virtual-real interaction middleware 170, that is, the position in the 3D scene to which the multi-source data corresponds, is determined from the position information or device identification number carried in the multi-source data.

- The multi-source data is then mapped into the 3D scene in its target expression form, so that the position at which it is rendered corresponds to the position of the virtual-real interaction middleware 170. This completes the position matching and fusion of the multi-source data in the 3D scene.
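
A minimal sketch of this matching step, assuming each piece of multi-source data arrives as a dict carrying either a `device_id` or a `position`, and that each middleware element in the scene is registered with a device ID and a 3D position. Lookup by device ID is tried first; the nearest-position fallback is an assumption, since the source only says that either identifier can be used. All names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SceneAnchor:
    """Hypothetical middleware element placed in the 3D scene for one field device."""
    device_id: str
    position: tuple[float, float, float]
    overlays: dict = field(default_factory=dict)  # expression name -> value to render


class SceneIndex:
    def __init__(self, anchors):
        self._anchors = list(anchors)
        self._by_id = {a.device_id: a for a in self._anchors}

    def resolve(self, reading):
        """Match a reading to an anchor: by device ID if present, else by nearest position."""
        anchor = self._by_id.get(reading.get("device_id"))
        if anchor is not None:
            return anchor
        pos = reading.get("position")
        if pos is None or not self._anchors:
            return None
        # squared Euclidean distance is enough for picking the nearest anchor
        return min(self._anchors,
                   key=lambda a: sum((p - q) ** 2 for p, q in zip(a.position, pos)))

    def fuse(self, reading, expression="value"):
        """Attach the reading to its anchor so it is rendered at the anchor's position."""
        anchor = self.resolve(reading)
        if anchor is not None:
            anchor.overlays[expression] = reading["value"]
        return anchor
```

Keeping the overlay on the anchor object means the renderer only has to draw each anchor's overlays at the anchor's own position, which is what makes the rendered data line up with the middleware element.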

- The virtual three-dimensional rendering system 160 also changes the rendering state of the virtual-real interaction middleware 170 in the three-dimensional scene in response to changes in the multi-source data.

- The color or display state of the virtual-real interaction middleware 170 in the three-dimensional scene can change according to the value range or working status of the multi-source data of the corresponding device; for example, different value ranges can be distinguished by different colors, and different working states can be shown as switch (on/off) states.
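
One way to express this rule, assuming fixed value bands with one RGB color each and a boolean `running` field for the working status; the band limits, colors, and field names are hypothetical.

```python
from bisect import bisect_right

# Hypothetical value bands: upper limits, and one RGB color per band (one more color than limits).
BAND_LIMITS = [30.0, 60.0, 90.0]
BAND_COLORS = [(0, 160, 255), (0, 200, 0), (255, 200, 0), (255, 0, 0)]


def color_for(value: float) -> tuple[int, int, int]:
    """Band i covers [BAND_LIMITS[i-1], BAND_LIMITS[i]); a value on a limit falls in the upper band."""
    return BAND_COLORS[bisect_right(BAND_LIMITS, value)]


def render_state(reading: dict) -> dict:
    """Derive hypothetical render properties for a device's scene element from its reading."""
    state = {"color": color_for(reading["value"])}
    if "running" in reading:
        # working status shown as a switch (on/off) state
        state["switch"] = "on" if reading["running"] else "off"
    return state
```

Using `bisect_right` keeps the lookup logarithmic in the number of bands and makes the boundary rule explicit: a reading exactly on a limit takes the color of the band above it.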

- The virtual-real interaction middleware 170 carries interaction instructions between the virtual three-dimensional rendering system 160 and the entity control system 180.

- The entity control system 180 receives an interaction instruction from the virtual-real interaction middleware 170 and controls the corresponding field device in response.

- The virtual-real interaction middleware 170 includes an instruction receiving module 171 and an instruction transmission module 172.

- The instruction receiving module 171 receives interaction instructions issued by the virtual 3D rendering system 160; the instruction transmission module 172 transmits each interaction instruction to the entity control system 180 that the instruction targets.

- The instruction receiving module 171 of the virtual-real interaction middleware 170 may be displayed in the three-dimensional scene at the position corresponding to the multi-source data.

- The instruction receiving module 171 may be represented as a physical button, or as the three-dimensional model of the corresponding device.

- The entity control system 180 includes a controller that controls the equipment installed on site; the controller controls the equipment in response to an interaction instruction.

- The instruction transmission module 172 and the controller may be connected by wire and/or wirelessly. Wired connections can use RS232, RS485, RJ45, a bus, etc.; wireless connections can use WiFi, ZigBee, Bluetooth, a wireless bridge, a 4G module, a 5G module, etc. When many devices are controlled, data can be collected and distributed through a switch.

- When the user selects the instruction receiving module 171 in the 3D scene and performs an interactive operation, the virtual 3D rendering system 160 generates a corresponding interaction instruction from the multi-source data of the corresponding device and a preset interactive response mode, and sends it to the instruction receiving module 171. The interaction instruction includes a control instruction together with the location information or device identification number of the device. The instruction transmission module 172 sends the interaction instruction to the corresponding controller according to that location information or device identification number, and the controller controls the field device in response to the control instruction.
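
The routing described above can be sketched as follows, assuming instructions are addressed by device identification number and that each field controller is reachable through a callable; in a real deployment that callable would wrap an RS232, RS485, RJ45, WiFi, ZigBee, or similar link. The class, method, and command names, and the toggle-style response mode, are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class InteractionInstruction:
    device_id: str   # device identification number (location information would also work)
    command: str     # control instruction, e.g. "start" or "stop"


class VirtualRealMiddleware:
    """Sketch of instruction receiving module 171 and instruction transmission module 172."""

    def __init__(self):
        self._controllers: dict[str, Callable[[str], str]] = {}
        self._inbox: deque = deque()

    def register_controller(self, device_id: str, send: Callable[[str], str]) -> None:
        """Bind a device ID to a callable that delivers commands to that device's controller."""
        self._controllers[device_id] = send

    def receive(self, instruction: InteractionInstruction) -> None:
        """Receiving module: queue an instruction issued by the 3D rendering system."""
        self._inbox.append(instruction)

    def transmit(self) -> list[str]:
        """Transmission module: route each queued instruction to its controller by device ID."""
        results = []
        while self._inbox:
            ins = self._inbox.popleft()
            send = self._controllers.get(ins.device_id)
            results.append(send(ins.command) if send else f"no controller for {ins.device_id}")
        return results


def on_select(device_id: str, reading: dict, middleware: VirtualRealMiddleware) -> None:
    """Hypothetical preset response mode: toggle the device based on its working status."""
    command = "stop" if reading.get("running") else "start"
    middleware.receive(InteractionInstruction(device_id, command))
```

Separating `receive` from `transmit` mirrors the split between modules 171 and 172: the rendering side only enqueues, and the routing table of controllers lives entirely in the middleware.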

- The multi-channel images collected by the multi-channel image acquisition system 190 are returned in real time through the multi-channel image real-time return control system 130 to the data synchronization system 120 for time synchronization.

- The video real-time solving system 140 solves the time-synchronized video streams in real time to obtain video frames.
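
The source does not specify how the data synchronization system 120 aligns the channels. A common approach, assumed here, is to timestamp every frame against a shared clock and, for each output instant, take from each channel the frame whose timestamp is nearest, rejecting the set if any channel is off by more than a tolerance. The 20 ms tolerance, the data layout, and the function names are hypothetical.

```python
from bisect import bisect_left


def nearest_index(timestamps: list[float], t: float) -> int:
    """Index of the entry in a sorted, non-empty timestamp list closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # choose whichever neighbour is closer to t
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def synchronise(channels: dict, reference_time: float, tolerance: float = 0.02):
    """For each channel, pick the frame whose timestamp is closest to reference_time.

    channels maps a channel name to a list of (timestamp_seconds, frame) sorted by time.
    Returns {name: frame}, or None if any channel has no frame within the tolerance.
    """
    picked = {}
    for name, frames in channels.items():
        if not frames:
            return None
        ts, frame = frames[nearest_index([t for t, _ in frames], reference_time)]
        if abs(ts - reference_time) > tolerance:
            return None
        picked[name] = frame
    return picked
```

Returning `None` for an incomplete set lets the downstream solving step skip an instant rather than fuse frames captured at noticeably different times.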

- The multi-source data acquisition system 151 receives the multi-source data, which the multi-source data analysis system 152 processes and converts into a three-dimensional representation format; the video frames, multi-source data, and three-dimensional scene are then mapped, fused, and rendered by the virtual three-dimensional rendering system 160.

- The interaction instructions generated by user interaction are sent to the instruction receiving module 171 of the virtual-real interaction middleware 170 and forwarded by the instruction transmission module 172 to the entity control system 180.

- In the entity control system 180, the central controller makes the device perform the corresponding action according to the location information of the device targeted by the interaction instruction and the control instruction, thereby controlling the field device.

- FIG. 4 is a schematic flowchart of a digital twin method provided by an embodiment of the present application.

- The digital twin method of this embodiment can be executed by a digital twin system, which can be implemented in hardware and/or software and integrated in a computer.

- The digital twin method includes:

- The scene overlay and analysis system saves the on-site three-dimensional scene and uses it as the base map.

- The 3D scene can be obtained from an external server or built locally by 3D modeling. Once obtained, it is saved locally and used as the base map: other data of interest is fused into the three-dimensional scene, and the scene serves as the starting point for basic analysis.

- The scene overlay and analysis system divides the three-dimensional data of the scene into blocks. When the on-site three-dimensional scene changes, the system receives a three-dimensional update package for the corresponding block; the package contains the updated 3D data for the block it points to.

- The scene overlay and analysis system replaces the 3D data of that block with the 3D data in the update package, keeping the 3D scene up to date.
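
A sketch of the block-wise update, assuming each block has a string ID and that update packages carry a version number so that a late or duplicate package cannot overwrite newer data. The version check is an addition not mentioned in the source, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UpdatePackage:
    block_id: str   # the block this package points to
    version: int    # hypothetical monotonically increasing version number
    data: bytes     # replacement 3D data for the block


class BlockedScene:
    """3D scene stored as independently replaceable blocks."""

    def __init__(self, blocks: dict[str, bytes]):
        # block_id -> (version, 3D data); initial data is version 0
        self._blocks = {bid: (0, data) for bid, data in blocks.items()}

    def apply(self, pkg: UpdatePackage) -> bool:
        """Replace one block's data. Unknown blocks and stale versions are rejected."""
        current = self._blocks.get(pkg.block_id)
        if current is None or pkg.version <= current[0]:
            return False
        self._blocks[pkg.block_id] = (pkg.version, pkg.data)
        return True

    def data(self, block_id: str) -> bytes:
        """Current 3D data of one block."""
        return self._blocks[block_id][1]
```

Only the targeted block is touched, so the rest of the base map stays loaded while one area of the site is updated.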

- The video real-time solving system solves the received video stream in real time to obtain video frames.

- The video real-time solving system includes a video frame extraction module and a hardware decoder, where:

- The video frame extraction module uses the FFmpeg libraries to extract frame data from the video stream.

- FFmpeg is a set of open-source computer programs for recording and converting digital audio and video and for turning them into streams; it meets the frame-extraction requirements of this embodiment.

- The hardware decoder decodes the frame data to obtain video frames.

- The hardware decoder is the independent video decoding module built into NVIDIA graphics cards.
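
The source uses the FFmpeg libraries together with the NVIDIA hardware decoder but gives no further details. As a rough stand-in, the sketch below drives the `ffmpeg` command-line tool with `-hwaccel cuda` (NVDEC decoding, which needs an FFmpeg build with CUDA support and an NVIDIA GPU) and reads decoded frames as raw `rgb24` bytes from its standard output. The stream URL and output size are placeholders, and calling the libavformat/libavcodec APIs directly, as the source implies, would avoid the extra process.

```python
import subprocess
from typing import BinaryIO, Iterator


def ffmpeg_command(url: str, width: int, height: int) -> list[str]:
    """Build an ffmpeg command that decodes on the GPU and writes raw RGB frames to stdout."""
    return [
        "ffmpeg",
        "-hwaccel", "cuda",                # request NVDEC hardware decoding
        "-i", url,
        "-vf", f"scale={width}:{height}",  # fix the output size so frames have a known length
        "-f", "rawvideo",
        "-pix_fmt", "rgb24",
        "pipe:1",                          # write to standard output
    ]


def read_frames(stream: BinaryIO, width: int, height: int) -> Iterator[bytes]:
    """Split a raw rgb24 byte stream into frames of width * height * 3 bytes."""
    frame_size = width * height * 3
    while True:
        chunk = stream.read(frame_size)
        if len(chunk) < frame_size:
            return  # end of stream; a trailing partial frame is discarded
        yield chunk


def open_video(url: str, width: int, height: int):
    """Start ffmpeg and return the process together with an iterator over decoded frames."""
    proc = subprocess.Popen(ffmpeg_command(url, width, height), stdout=subprocess.PIPE)
    return proc, read_frames(proc.stdout, width, height)
```

Scaling to a fixed size inside FFmpeg keeps every frame the same byte length, which is what allows `read_frames` to split the pipe without parsing any container format.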

- The multi-source data acquisition and processing analysis system receives and stores multi-source data, performs basic analysis and processing on it, and converts it into a three-dimensional representation format.

- The multi-source data acquisition and processing analysis system includes a multi-source data acquisition system and a multi-source data analysis system, where:

- The multi-source data acquisition system receives and stores the multi-source data returned by the multi-source sensors.

- The multi-source sensors are selected according to the monitoring targets, the equipment, and the actual conditions of the site, and are installed at the corresponding positions.

- A multi-source data access switch installed on site connects to and aggregates the monitoring data from the multi-source sensors and transmits it, over a wired or wireless link, to the multi-source data access switch installed on the side of the multi-source data acquisition system.

- That multi-source data access switch forwards the received multi-source data to the multi-source data acquisition system, which in turn sends it to the multi-source data analysis system.

- The multi-source data analysis system performs basic analysis and processing on the multi-source data and converts it into a three-dimensional representation format.

- The multi-source data analysis system receives the multi-source data on demand and performs basic analysis and processing on it, such as A/D conversion, threshold analysis, trend analysis, early-warning analysis, and determination of numerical range and working status. The three-dimensional representation format is the format that corresponds to the target expression in the virtual three-dimensional rendering system; the target expression can be one or a combination of the real-time value and real-time status of the monitored data, a data table, a color, and similar forms.

- The virtual 3D rendering system uses the 3D scene as the base map: it maps and fuses the video frames into the 3D scene, matches and fuses the positions of the multi-source data in the 3D scene, maps the virtual-real interaction middleware into the 3D scene, and renders the fused scene and handles interaction with it.

- S205: The virtual three-dimensional rendering system generates an interaction instruction in response to an interactive operation on the virtual-real interaction middleware and sends it to the virtual-real interaction middleware.

- The mapping relationship between pixels in the video frame and 3D points in the 3D scene is determined, and the video frame is texture-mapped into the 3D scene according to this mapping relationship.

- Overlapping regions of the texture mapping are given a smooth transition, so that the video frame blends into the three-dimensional scene.

- The position in the 3D scene to which the multi-source data corresponds is determined from the position correspondence between the multi-source sensors and the virtual-real interaction middleware, that is, from the location information or device identification number carried in the multi-source data.

- The multi-source data is mapped into the 3D scene in its target expression form, so that the position at which it is rendered corresponds to the position of the virtual-real interaction middleware, completing the position matching and fusion of the multi-source data in the 3D scene.

- The virtual 3D rendering system also changes the rendering state of the virtual-real interaction middleware in the 3D scene in response to changes in the multi-source data.

- The color or display state of the virtual-real interaction middleware in the three-dimensional scene can change according to the value range or working status of the multi-source data of the corresponding device; for example, different value ranges can be distinguished by different colors, and different working states can be shown as switch (on/off) states.

- S206: The virtual-real interaction middleware sends out the interaction instruction issued by the virtual 3D rendering system.

- The virtual-real interaction middleware carries interaction instructions between the virtual 3D rendering system and the entity control system.

- The entity control system receives interaction instructions from the virtual-real interaction middleware and controls the field devices in response.

- The virtual-real interaction middleware includes an instruction receiving module and an instruction transmission module.

- The instruction receiving module receives the interaction instructions issued by the virtual 3D rendering system; the instruction transmission module transmits each interaction instruction to the entity control system that the instruction targets.

- The video real-time solving system solves the received video streams in real time to obtain video frames, and the multi-source data acquisition and processing analysis system processes the received multi-source data and converts it into a three-dimensional representation format; the virtual 3D rendering system then maps, fuses, and visualizes the video frames, multi-source data, and 3D scene.

- Interactive operations can be performed on the rendered 3D scene.

- The interaction instructions generated by these operations are sent out through the virtual-real interaction middleware.

- The field device executes the corresponding actions in response to the interaction instructions, thereby realizing control of the field device.

- FIG. 5 is a schematic structural diagram of a computer device provided by an embodiment of this application.

- The computer device provided by this embodiment includes a display screen 24, an input device 25, a memory 22, a communication module 23, and one or more processors 21; the communication module 23 communicates with the outside world;

- the display screen 24 displays the virtual-real interaction interface;

- the input device 25 receives interactive operations;

- the memory 22 stores one or more programs; when the one or more programs are executed by the one or more processors 21, the one or more processors 21 implement the digital twin method and system functions provided in the embodiments of the present application.

- The memory 22 can store software programs, computer-executable programs, and modules, such as those implementing the digital twin method and system functions described in any embodiment of the present application.

- The memory 22 may mainly include a program storage area and a data storage area.

- The program storage area may store an operating system and the application programs required by at least one function; the data storage area may store data created through use of the device, and so on.

- The memory 22 may include high-speed random access memory and may also include non-volatile memory, such as at least one magnetic disk storage device, a flash memory device, or another non-volatile solid-state storage device.

- The memory 22 may further include memory located remotely from the processor 21 and connected to the device through a network.

- Examples of such networks include, but are not limited to, the Internet, corporate intranets, local area networks, mobile communication networks, and combinations thereof.

- The computer device further includes the communication module 23, which establishes wired and/or wireless connections with other devices and performs data transmission.

- The processor 21 executes the various functional applications and data processing of the device by running the software programs, instructions, and modules stored in the memory 22, thereby realizing the digital twin method and system functions described above.

- The digital twin system and computer device provided above can be used to execute the digital twin method provided in the above embodiments and have the corresponding functions and beneficial effects.

- An embodiment of the present application also provides a storage medium containing computer-executable instructions which, when executed by a computer processor, perform the digital twin method provided in the embodiments of the present application, thereby implementing the functions of the digital twin system provided in the embodiments of the present application.

- Storage medium: any of various types of memory devices or storage devices.

- The term “storage medium” is intended to include: installation media, such as CD-ROMs, floppy disks, or tape devices; computer system memory or random access memory, such as DRAM, DDR RAM, SRAM, EDO RAM, and Rambus RAM; non-volatile memory, such as flash memory, magnetic media (for example, a hard disk), or optical storage; and registers or other similar types of memory elements.

- The storage medium may further include other types of memory or combinations thereof.

- The storage medium may be located in a first computer system in which the program is executed, or in a different second computer system connected to the first computer system through a network (such as the Internet).

- The second computer system may provide the program instructions to the first computer for execution.

- A storage medium may include two or more storage media residing in different locations (for example, in different computer systems connected through a network).

- The storage medium may store program instructions executable by one or more processors 21 (for example, embodied as a computer program).

- In the storage medium containing computer-executable instructions provided by the embodiments of the present application, the computer-executable instructions are not limited to the digital twin method operations described above; they can also perform related operations of the digital twin method provided in any embodiment of the present application, thereby realizing the functions of the digital twin system provided by any embodiment of the present application.

- The digital twin system and computer device provided in the above embodiments can execute the digital twin method provided in any embodiment of this application; see also the digital twin system and method provided in any embodiment of this application.

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Signal Processing (AREA)

- Computer Networks & Wireless Communication (AREA)

- Computer Graphics (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Testing, Inspecting, Measuring Of Stereoscopic Televisions And Televisions (AREA)

- Processing Or Creating Images (AREA)

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN201910775128.8 | 2019-08-21 | | |

| CN201910775128.8A CN110505464A (zh) | 2019-08-21 | 2019-08-21 | Digital twin system, method and computer device |

Publications (1)

| Publication Number | Publication Date |

|---|---|

| WO2021031454A1 (fr) | 2021-02-25 |

Family

ID=68588763

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| PCT/CN2019/123194 WO2021031454A1 (fr) | 2019-08-21 | 2019-12-05 | Digital twin system and method, and computer device |

Country Status (2)

| Country | Link |

|---|---|

| CN (2) | CN110505464A (fr) |

| WO (1) | WO2021031454A1 (fr) |

Cited By (23)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN112925496A (zh) * | 2021-03-30 | 2021-06-08 | 四川虹微技术有限公司 | 一种基于数字孪生的三维可视化设计方法及系统 |

| CN112991552A (zh) * | 2021-03-10 | 2021-06-18 | 中国商用飞机有限责任公司北京民用飞机技术研究中心 | 人体虚实匹配方法、装置、设备及存储介质 |

| CN113406968A (zh) * | 2021-06-17 | 2021-09-17 | 广东工业大学 | 基于数字孪生的无人机自主起降巡航方法 |

| CN113538863A (zh) * | 2021-04-13 | 2021-10-22 | 交通运输部科学研究院 | 一种隧道数字孪生场景构建方法及计算机设备 |

| CN113554063A (zh) * | 2021-06-25 | 2021-10-26 | 西安电子科技大学 | 一种工业数字孪生虚实数据融合方法、系统、设备、终端 |

| CN113919106A (zh) * | 2021-09-29 | 2022-01-11 | 大连理工大学 | 基于增强现实和数字孪生的地下管道结构安全评价方法 |

| CN114212609A (zh) * | 2021-12-15 | 2022-03-22 | 北自所(北京)科技发展股份有限公司 | 一种数字孪生纺织成套装备卷装作业方法 |

| CN114217555A (zh) * | 2021-12-09 | 2022-03-22 | 浙江大学 | 基于数字孪生场景的低延时远程遥控方法和系统 |

| CN114359475A (zh) * | 2021-12-03 | 2022-04-15 | 广东电网有限责任公司电力科学研究院 | 一种gis设备数字孪生三维模型展示方法、装置及设备 |

| CN114584571A (zh) * | 2021-12-24 | 2022-06-03 | 北京中电飞华通信有限公司 | 基于空间计算技术的电网场站数字孪生同步通信方法 |

| CN114710495A (zh) * | 2022-04-29 | 2022-07-05 | 深圳市瑞云科技有限公司 | 基于云渲染的houdini分布式联机解算方法 |

| CN114740969A (zh) * | 2022-02-09 | 2022-07-12 | 北京德信电通科技有限公司 | 一种融合数字孪生与虚拟现实的互动系统及其方法 |

| CN114827144A (zh) * | 2022-04-12 | 2022-07-29 | 中煤科工开采研究院有限公司 | 一种煤矿综采工作面三维虚拟仿真决策分布式系统 |

| CN115412463A (zh) * | 2021-05-28 | 2022-11-29 | 中国移动通信有限公司研究院 | 时延测量方法、装置及数字孪生网络 |

| CN115409944A (zh) * | 2022-09-01 | 2022-11-29 | 浙江巨点光线智慧科技有限公司 | 基于低代码数字孪生的三维场景渲染及数据修正系统 |

| CN116307740A (zh) * | 2023-05-16 | 2023-06-23 | 苏州和歌信息科技有限公司 | 基于数字孪生城市的起火点分析方法及系统、设备及介质 |

| WO2023133971A1 (fr) * | 2022-01-11 | 2023-07-20 | 长沙理工大学 | Système double numérique de micro-ordinateur à puce unique virtuel web3d basé sur zigbee |

| CN117095135A (zh) * | 2023-10-19 | 2023-11-21 | 云南三耳科技有限公司 | 可在线编辑的工业三维场景建模布置方法、装置 |

| CN117152324A (zh) * | 2023-09-04 | 2023-12-01 | 艾迪普科技股份有限公司 | 基于三维播放器的数据驱动方法和装置 |

| WO2023231793A1 (fr) * | 2022-05-31 | 2023-12-07 | 京东方科技集团股份有限公司 | Procédé de virtualisation de scène physique, dispositif électronique, support de stockage lisible par ordinateur et produit programme d'ordinateur |

| CN117421940A (zh) * | 2023-12-19 | 2024-01-19 | 山东交通学院 | 数字孪生轻量化模型与物理实体之间全局映射方法及装置 |

| CN117593498A (zh) * | 2024-01-19 | 2024-02-23 | 北京云庐科技有限公司 | 一种数字孪生场景配置方法和系统 |

| CN118429589A (zh) * | 2024-07-04 | 2024-08-02 | 湖南禀创信息科技有限公司 | 基于像素流的数字孪生系统、方法、设备及存储介质 |

Families Citing this family (19)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN110505464A (zh) * | 2019-08-21 | 2019-11-26 | 佳都新太科技股份有限公司 | 一种数字孪生系统、方法及计算机设备 |

| CN111401154B (zh) * | 2020-02-29 | 2023-07-18 | 同济大学 | 一种基于ar的物流精准配送透明化辅助作业装置 |

| CN111708919B (zh) * | 2020-05-28 | 2021-07-30 | 北京赛博云睿智能科技有限公司 | 一种大数据处理方法及系统 |

| CN111738571A (zh) * | 2020-06-06 | 2020-10-02 | 北京王川景观设计有限公司 | 一种基于数字孪生的地热能综合体开发及应用的信息系统 |

| CN111857520B (zh) * | 2020-06-16 | 2024-07-26 | 广东希睿数字科技有限公司 | 基于数字孪生的3d可视化交互显示方法与系统 |

| CN111754754A (zh) * | 2020-06-19 | 2020-10-09 | 上海奇梦网络科技有限公司 | 一种基于数字孪生技术的设备实时监控方法 |

| WO2022032688A1 (fr) * | 2020-08-14 | 2022-02-17 | Siemens Aktiengesellschaft | Procédé d'aide à distance et dispositif |

| US20220067229A1 (en) * | 2020-09-03 | 2022-03-03 | International Business Machines Corporation | Digital twin multi-dimensional model record using photogrammetry |

| CN112037543A (zh) * | 2020-09-14 | 2020-12-04 | 中德(珠海)人工智能研究院有限公司 | 基于三维建模的城市交通灯控制方法、装置、设备和介质 |

| CN112346572A (zh) * | 2020-11-11 | 2021-02-09 | 南京梦宇三维技术有限公司 | 一种虚实融合实现方法、系统和电子设备 |

| CN112509148A (zh) * | 2020-12-04 | 2021-03-16 | 全球能源互联网研究院有限公司 | 一种基于多特征识别的交互方法、装置及计算机设备 |

| CN112950758B (zh) * | 2021-01-26 | 2023-07-21 | 长威信息科技发展股份有限公司 | 一种时空孪生可视化构建方法及系统 |

| CN112991742B (zh) * | 2021-04-21 | 2021-08-20 | 四川见山科技有限责任公司 | 一种实时交通数据的可视化仿真方法及系统 |

| CN113593039A (zh) * | 2021-08-05 | 2021-11-02 | 广东鸿威国际会展集团有限公司 | 基于数字孪生技术的企业数字化展示方法及系统 |

| CN113963100B (zh) * | 2021-10-25 | 2022-04-29 | 广东工业大学 | 一种数字孪生仿真场景的三维模型渲染方法及系统 |

| CN113987850B (zh) * | 2021-12-28 | 2022-03-22 | 湖南视觉伟业智能科技有限公司 | 基于多源多模态数据的数字孪生模型更新维护方法及系统 |

| CN114332741B (zh) * | 2022-03-08 | 2022-05-10 | 盈嘉互联(北京)科技有限公司 | 一种面向建筑数字孪生的视频检测方法及系统 |

| CN114966695B (zh) * | 2022-05-11 | 2023-11-14 | 南京慧尔视软件科技有限公司 | 雷达的数字孪生影像处理方法、装置、设备及介质 |

| CN115550687A (zh) * | 2022-09-23 | 2022-12-30 | 中国电信股份有限公司 | 三维模型场景交互方法、系统、设备、装置及存储介质 |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN102789348A (zh) * | 2011-05-18 | 2012-11-21 | 北京东方艾迪普科技发展有限公司 | Interactive three-dimensional graphics and video visualization system |

| US20170287199A1 (en) * | 2016-03-31 | 2017-10-05 | Umbra Software Oy | Three-dimensional model creation and rendering with improved virtual reality experience |

| CN108040081A (zh) * | 2017-11-02 | 2018-05-15 | 同济大学 | Digital twin monitoring and operation-and-maintenance system for a metro station |

| CN109359507A (zh) * | 2018-08-24 | 2019-02-19 | 南京理工大学 | Method for rapidly constructing a digital twin model of workshop personnel |

| CN110505464A (zh) * | 2019-08-21 | 2019-11-26 | 佳都新太科技股份有限公司 | Digital twin system, method and computer device |

Family Cites Families (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN101908232B (zh) * | 2010-07-30 | 2012-09-12 | 重庆埃默科技有限责任公司 | Interactive scene simulation system and scene virtual simulation method |

| US9877016B2 (en) * | 2015-05-27 | 2018-01-23 | Google Llc | Omnistereo capture and render of panoramic virtual reality content |

| CN106131536A (zh) * | 2016-08-15 | 2016-11-16 | 万象三维视觉科技(北京)有限公司 | Naked-eye 3D augmented reality interactive display system and display method thereof |

| CN106131530B (zh) * | 2016-08-26 | 2017-10-31 | 万象三维视觉科技(北京)有限公司 | Naked-eye 3D virtual reality display system and display method thereof |

| CN109819233B (zh) * | 2019-01-21 | 2020-12-08 | 合肥哈工热气球数字科技有限公司 | Digital twin system based on virtual imaging technology |

2019

- 2019-08-21 CN CN201910775128.8A patent/CN110505464A/zh active Pending

- 2019-11-21 CN CN201911145077.7A patent/CN110753218B/zh active Active

- 2019-12-05 WO PCT/CN2019/123194 patent/WO2021031454A1/fr active Application Filing

Cited By (37)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN112991552A (zh) * | 2021-03-10 | 2021-06-18 | 中国商用飞机有限责任公司北京民用飞机技术研究中心 | 人体虚实匹配方法、装置、设备及存储介质 |

| CN112991552B (zh) * | 2021-03-10 | 2024-03-22 | 中国商用飞机有限责任公司北京民用飞机技术研究中心 | 人体虚实匹配方法、装置、设备及存储介质 |

| CN112925496A (zh) * | 2021-03-30 | 2021-06-08 | 四川虹微技术有限公司 | 一种基于数字孪生的三维可视化设计方法及系统 |

| CN113538863A (zh) * | 2021-04-13 | 2021-10-22 | 交通运输部科学研究院 | 一种隧道数字孪生场景构建方法及计算机设备 |

| CN115412463A (zh) * | 2021-05-28 | 2022-11-29 | 中国移动通信有限公司研究院 | 时延测量方法、装置及数字孪生网络 |

| CN115412463B (zh) * | 2021-05-28 | 2024-06-04 | 中国移动通信有限公司研究院 | 时延测量方法、装置及数字孪生网络 |

| CN113406968A (zh) * | 2021-06-17 | 2021-09-17 | 广东工业大学 | 基于数字孪生的无人机自主起降巡航方法 |

| CN113406968B (zh) * | 2021-06-17 | 2023-08-08 | 广东工业大学 | 基于数字孪生的无人机自主起降巡航方法 |

| CN113554063A (zh) * | 2021-06-25 | 2021-10-26 | 西安电子科技大学 | 一种工业数字孪生虚实数据融合方法、系统、设备、终端 |

| CN113554063B (zh) * | 2021-06-25 | 2024-04-23 | 西安电子科技大学 | 一种工业数字孪生虚实数据融合方法、系统、设备、终端 |

| CN113919106A (zh) * | 2021-09-29 | 2022-01-11 | 大连理工大学 | 基于增强现实和数字孪生的地下管道结构安全评价方法 |

| CN113919106B (zh) * | 2021-09-29 | 2024-05-14 | 大连理工大学 | 基于增强现实和数字孪生的地下管道结构安全评价方法 |

| CN114359475A (zh) * | 2021-12-03 | 2022-04-15 | 广东电网有限责任公司电力科学研究院 | 一种gis设备数字孪生三维模型展示方法、装置及设备 |

| CN114359475B (zh) * | 2021-12-03 | 2024-04-16 | 广东电网有限责任公司电力科学研究院 | 一种gis设备数字孪生三维模型展示方法、装置及设备 |

| CN114217555A (zh) * | 2021-12-09 | 2022-03-22 | 浙江大学 | 基于数字孪生场景的低延时远程遥控方法和系统 |

| CN114212609A (zh) * | 2021-12-15 | 2022-03-22 | 北自所(北京)科技发展股份有限公司 | 一种数字孪生纺织成套装备卷装作业方法 |

| CN114212609B (zh) * | 2021-12-15 | 2023-11-14 | 北自所(北京)科技发展股份有限公司 | 一种数字孪生纺织成套装备卷装作业方法 |

| CN114584571B (zh) * | 2021-12-24 | 2024-02-27 | 北京中电飞华通信有限公司 | 基于空间计算技术的电网场站数字孪生同步通信方法 |

| CN114584571A (zh) * | 2021-12-24 | 2022-06-03 | 北京中电飞华通信有限公司 | 基于空间计算技术的电网场站数字孪生同步通信方法 |

| WO2023133971A1 (fr) * | 2022-01-11 | 2023-07-20 | 长沙理工大学 | Système double numérique de micro-ordinateur à puce unique virtuel web3d basé sur zigbee |

| CN114740969A (zh) * | 2022-02-09 | 2022-07-12 | 北京德信电通科技有限公司 | 一种融合数字孪生与虚拟现实的互动系统及其方法 |

| CN114827144A (zh) * | 2022-04-12 | 2022-07-29 | 中煤科工开采研究院有限公司 | 一种煤矿综采工作面三维虚拟仿真决策分布式系统 |

| CN114827144B (zh) * | 2022-04-12 | 2024-03-01 | 中煤科工开采研究院有限公司 | 一种煤矿综采工作面三维虚拟仿真决策分布式系统 |

| CN114710495B (zh) * | 2022-04-29 | 2023-08-01 | 深圳市瑞云科技有限公司 | 基于云渲染的houdini分布式联机解算方法 |

| CN114710495A (zh) * | 2022-04-29 | 2022-07-05 | 深圳市瑞云科技有限公司 | 基于云渲染的houdini分布式联机解算方法 |

| WO2023231793A1 (fr) * | 2022-05-31 | 2023-12-07 | 京东方科技集团股份有限公司 | Procédé de virtualisation de scène physique, dispositif électronique, support de stockage lisible par ordinateur et produit programme d'ordinateur |

| CN115409944A (zh) * | 2022-09-01 | 2022-11-29 | 浙江巨点光线智慧科技有限公司 | 基于低代码数字孪生的三维场景渲染及数据修正系统 |

| CN115409944B (zh) * | 2022-09-01 | 2023-06-02 | 浙江巨点光线智慧科技有限公司 | 基于低代码数字孪生的三维场景渲染及数据修正系统 |

| CN116307740A (zh) * | 2023-05-16 | 2023-06-23 | 苏州和歌信息科技有限公司 | 基于数字孪生城市的起火点分析方法及系统、设备及介质 |

| CN117152324A (zh) * | 2023-09-04 | 2023-12-01 | 艾迪普科技股份有限公司 | 基于三维播放器的数据驱动方法和装置 |

| CN117095135B (zh) * | 2023-10-19 | 2024-01-02 | 云南三耳科技有限公司 | 可在线编辑的工业三维场景建模布置方法、装置 |

| CN117095135A (zh) * | 2023-10-19 | 2023-11-21 | 云南三耳科技有限公司 | 可在线编辑的工业三维场景建模布置方法、装置 |

| CN117421940B (zh) * | 2023-12-19 | 2024-03-19 | 山东交通学院 | 数字孪生轻量化模型与物理实体之间全局映射方法及装置 |

| CN117421940A (zh) * | 2023-12-19 | 2024-01-19 | 山东交通学院 | 数字孪生轻量化模型与物理实体之间全局映射方法及装置 |

| CN117593498A (zh) * | 2024-01-19 | 2024-02-23 | 北京云庐科技有限公司 | 一种数字孪生场景配置方法和系统 |

| CN117593498B (zh) * | 2024-01-19 | 2024-04-26 | 北京云庐科技有限公司 | 一种数字孪生场景配置方法和系统 |

| CN118429589A (zh) * | 2024-07-04 | 2024-08-02 | 湖南禀创信息科技有限公司 | 基于像素流的数字孪生系统、方法、设备及存储介质 |

Also Published As

| Publication number | Publication date |

|---|---|

| CN110753218A (zh) | 2020-02-04 |

| CN110505464A (zh) | 2019-11-26 |

| CN110753218B (zh) | 2021-12-10 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| WO2021031454A1 (fr) | Système et procédé de jumelage numérique et dispositif informatique | |

| WO2021031455A1 (fr) | Système, procédé et dispositif pour réaliser une réalité augmentée tridimensionnelle de fusion vidéo multicanal | |

| KR102201763B1 (ko) | 360 비디오 시스템에서 오버레이 처리 방법 및 그 장치 | |

| TWI691197B (zh) | 用於全視差光場壓縮之預處理器 | |

| KR102258446B1 (ko) | 360 비디오 시스템에서 오버레이 처리 방법 및 그 장치 | |

| US9983592B2 (en) | Moving robot, user terminal apparatus and control method thereof | |

| CN109859326B (zh) | 一种变电站跨平台二三维图形联动展示系统和方法 | |

| US20190379884A1 (en) | Method and apparatus for processing overlay media in 360 degree video system | |

| CN115187742B (zh) | 自动驾驶仿真测试场景生成方法、系统及相关装置 | |

| US9736369B2 (en) | Virtual video patrol system and components therefor | |

| CN106155287A (zh) | 虚拟导览控制系统与方法 | |

| KR101876114B1 (ko) | 3d 모델링 구현을 위한 단말기, 서버, 이들을 포함하는 시스템 및 이를 이용하는 3d 모델링 방법 | |

| AU2023296339B2 (en) | Monitoring system and method based on digital converter station | |

| CN103440319A (zh) | 历史信息动态显示方法和系统 | |

| CN113074714A (zh) | 一种基于多数据融合的多态势感知传感器及其处理方法 | |

| CN109495685A (zh) | 一种摄像机日夜切换控制方法、装置和存储介质 | |

| CN115131484A (zh) | 图像渲染方法、计算机可读存储介质以及图像渲染设备 | |

| CN113110731B (zh) | 媒体内容生成的方法和装置 | |

| WO2022191070A1 (fr) | Procédé, dispositif et programme de diffusion en continu d'objet 3d | |

| CN112182286B (zh) | 一种基于三维实景地图的智能视频管控方法 | |

| CN112291550A (zh) | 自由视点图像生成方法、装置、系统及可读存储介质 | |

| CN202694599U (zh) | 数字化三维立体沙盘显示装置 | |

| US11756260B1 (en) | Visualization of configurable three-dimensional environments in a virtual reality system | |

| CN112218132B (zh) | 一种全景视频图像显示方法及显示设备 | |

| CN104581018A (zh) | 一种实现二维地图与卫星影像交互的视频监控方法 |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | 121 | Ep: the epo has been informed by wipo that ep was designated in this application | Ref document number: 19942273; Country of ref document: EP; Kind code of ref document: A1 |

| | NENP | Non-entry into the national phase | Ref country code: DE |

| | 122 | Ep: pct application non-entry in european phase | Ref document number: 19942273; Country of ref document: EP; Kind code of ref document: A1 |

| | 32PN | Ep: public notification in the ep bulletin as address of the adressee cannot be established | Free format text: NOTING OF LOSS OF RIGHTS PURSUANT TO RULE 112(1) EPC (EPO FORM 1205A DATED 27/09/2022) |

| | 122 | Ep: pct application non-entry in european phase | Ref document number: 19942273; Country of ref document: EP; Kind code of ref document: A1 |