CN111382232A - Question and answer information processing method and device and computer equipment - Google Patents

Question and answer information processing method and device and computer equipment

- Publication number

- CN111382232A (application number CN202010159784.8A)

- Authority

- CN

- China

- Prior art keywords

- information

- reply

- vectors

- word

- pending

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/30—Information retrieval; Database structures therefor; File system structures therefor of unstructured textual data

- G06F16/33—Querying

- G06F16/332—Query formulation

- G06F16/3329—Natural language query formulation or dialogue systems

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/30—Information retrieval; Database structures therefor; File system structures therefor of unstructured textual data

- G06F16/33—Querying

- G06F16/3331—Query processing

- G06F16/334—Query execution

- G06F16/3344—Query execution using natural language analysis

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Artificial Intelligence (AREA)

- Computational Linguistics (AREA)

- Data Mining & Analysis (AREA)

- Databases & Information Systems (AREA)

- General Engineering & Computer Science (AREA)

- General Physics & Mathematics (AREA)

- Mathematical Physics (AREA)

- Human Computer Interaction (AREA)

- Information Retrieval, Db Structures And Fs Structures Therefor (AREA)

Abstract

The application provides a question and answer information processing method, a question and answer information processing apparatus, and a computer device. When query information provided by a user triggers a plurality of reply scenes at the same time, that is, when pending reply information under a plurality of different reply scenes associated with the query information is obtained, the application obtains the logical relationship among the plurality of pieces of pending reply information, obtains the first word vector of each piece of pending reply information and a second word vector representing the logical relationship, and inputs these vectors into a pre-trained reply fusion model. The model outputs a single compound target reply that carries the semantic logic, which makes the reply more natural and coherent and improves the user experience. Compared with the prior art, in which target reply information is provided by manual labeling, this processing greatly reduces the manpower and material resources consumed by manual answer labeling.

Description

Technical Field

The present application relates to the field of communications technologies, and in particular, to a method and an apparatus for processing question and answer information, and a computer device.

Background

With the development of artificial intelligence technology, more and more enterprises use intelligent customer service systems in which self-service replaces manual work, so that customer queries can be answered in real time, which greatly improves the efficiency of communication with customers and reduces labor cost.

In practical applications, after obtaining query information, the intelligent customer service system may find a plurality of target reply messages while searching the preset reply information for a match. It then feeds these target reply messages back to the user one after another, and the user has to work out the meaning they jointly express. This process is cumbersome and reduces the accuracy of the reply information fed back by the intelligent customer service system.

Disclosure of Invention

In view of this, the present application provides a method for processing question and answer information, where the method includes:

acquiring query information;

obtaining pending reply information under a plurality of different reply scenes associated with the query information, and a logical relationship among the plurality of pieces of pending reply information;

acquiring a first word vector of each of the plurality of pieces of pending reply information and a second word vector of the associated word corresponding to the logical relationship;

inputting the obtained plurality of first word vectors and the corresponding second word vectors into a reply fusion model to obtain target reply information for the query information;

and outputting the target reply information.
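The five steps above can be read as a simple pipeline. The following Python sketch only illustrates the order of the steps under stated assumptions: the function name `process_query` and the four callables passed to it (`retrieve`, `relation_of`, `embed`, `fuse`) are hypothetical stand-ins and do not appear in the application, and the pairwise enumeration of reply scenes is likewise an assumption.

```python
from typing import Callable, Dict, List

Vector = List[float]


def process_query(
    query: str,
    retrieve: Callable[[str], Dict[str, str]],
    relation_of: Callable[[str, str], str],
    embed: Callable[[str], List[Vector]],
    fuse: Callable[[List[List[Vector]], List[List[Vector]]], str],
) -> str:
    """Chain the claimed steps; every callable is a hypothetical stand-in."""
    # Pending reply information, keyed by the reply scene it belongs to.
    pending = retrieve(query)
    scenes = sorted(pending)
    # Associated word for the logical relationship of each pair of scenes
    # (enumerating all pairs is an assumption of this sketch).
    associated_words = [
        relation_of(a, b) for i, a in enumerate(scenes) for b in scenes[i + 1:]
    ]
    # First word vectors: one sequence of vectors per pending reply.
    first_vectors = [embed(pending[scene]) for scene in scenes]
    # Second word vectors: one sequence of vectors per associated word.
    second_vectors = [embed(word) for word in associated_words]
    # The reply fusion model turns both inputs into the target reply,
    # which the caller then outputs.
    return fuse(first_vectors, second_vectors)
```

Passing the components in as callables keeps the sketch independent of any particular retrieval method, embedding, or model, none of which the claims fix.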

Optionally, the obtaining a logical relationship between a plurality of pieces of the pending reply information includes:

querying a reply relation graph to obtain the logical relationship among the plurality of pieces of pending reply information, wherein the reply relation graph contains the logical relationships among the reply information in different reply scenes.

Optionally, the obtaining process of the reply relationship graph includes:

acquiring candidate reply information corresponding to different reply scenes and a logic relation between the different candidate reply information;

and taking the candidate reply information corresponding to the different reply scenes as nodes, and constructing the reply relation graph according to the logical relation.
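As a rough illustration of such a graph, the sketch below stores candidate replies as nodes and logical relationships as labelled edges, and supports the lookup used at query time. The class name `ReplyRelationGraph`, its method names, the use of directed edges, and the rejection of edges inside a single reply scene are all assumptions made for this example, not details given in the application.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class ReplyRelationGraph:
    """Hypothetical container: nodes are candidate replies, edges are relations."""

    # reply id -> reply scene the candidate reply belongs to
    nodes: Dict[str, str] = field(default_factory=dict)
    # (source reply id, target reply id) -> logical relationship label
    edges: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def add_reply(self, reply_id: str, scene: str) -> None:
        self.nodes[reply_id] = scene

    def add_relation(self, source: str, target: str, relation: str) -> None:
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both replies must be added as nodes first")
        # Assumption: only replies from different scenes are linked.
        if self.nodes[source] == self.nodes[target]:
            raise ValueError("replies belong to the same reply scene")
        self.edges[(source, target)] = relation

    def relation(self, source: str, target: str) -> Optional[str]:
        """Return the stored relationship, or None if no edge exists."""
        return self.edges.get((source, target))
```

Directed edges are used here because the order of two replies can matter for some relationships; an undirected variant would store each pair once under a sorted key.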

Optionally, the inputting the obtained plurality of first word vectors and the corresponding second word vectors into a reply fusion model to obtain target reply information for the query information includes:

inputting the obtained plurality of first word vectors into an encoding network of the reply fusion model for encoding to obtain corresponding first encoding vectors;

processing the obtained plurality of first encoding vectors and the corresponding second word vectors through an attention mechanism to obtain context vectors of the plurality of pieces of pending reply information;

and inputting the obtained context vectors into a decoding network of the reply fusion model for decoding to obtain the target reply information for the query information.

Optionally, the encoding network and the decoding network are both recurrent neural networks.
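The application does not say which kind of recurrent cell is used. As a minimal sketch, assuming a plain (Elman-style) recurrent cell with a tanh activation, an encoder that maps a sequence of word vectors to a sequence of encoding vectors could look like the following. The function names and the weight arguments `w_in`, `w_rec`, and `bias` are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(matrix: Matrix, vector: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def rnn_encode(
    word_vectors: List[Vector], w_in: Matrix, w_rec: Matrix, bias: Vector
) -> List[Vector]:
    """Return one hidden state (encoding vector) per input word vector."""
    hidden = [0.0] * len(bias)  # initial hidden state is all zeros
    states: List[Vector] = []
    for x in word_vectors:
        # h_t = tanh(W_in x_t + W_rec h_{t-1} + b)
        pre = [
            a + b + c
            for a, b, c in zip(matvec(w_in, x), matvec(w_rec, hidden), bias)
        ]
        hidden = [math.tanh(p) for p in pre]
        states.append(hidden)
    return states
```

A gated cell such as an LSTM or GRU would fit the same interface; the plain cell is chosen here only to keep the example short.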

Optionally, the processing the obtained plurality of first encoding vectors and the corresponding second word vectors through an attention mechanism to obtain context vectors of the plurality of pieces of pending reply information includes:

performing similarity calculation on the elements contained in different first encoding vectors to obtain word weights of the corresponding pending reply information, wherein a word weight indicates the importance of the corresponding word relative to the other pending reply information with which a logical relationship exists;

and performing a weighted operation on the obtained plurality of first encoding vectors by using the obtained word weights to obtain the context vectors of the plurality of pieces of pending reply information.
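The two sub-steps above (similarity, then weighting) can be sketched as follows. The application does not specify the similarity measure or the normalization, so this example assumes dot-product similarity averaged over the words of the other reply and a softmax over the resulting scores; the function names are hypothetical, and the second word vectors are left out of this sketch.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def word_weights(own: List[Vector], other: List[Vector]) -> List[float]:
    """Weight each word of one reply by its similarity to the other reply."""
    # Assumed score: mean dot product with every encoding of the other reply.
    scores = [sum(dot(h, o) for o in other) / len(other) for h in own]
    # Softmax, shifted by the maximum score for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_vector(own: List[Vector], other: List[Vector]) -> Vector:
    """Weighted sum of a reply's encoding vectors using the word weights."""
    weights = word_weights(own, other)
    dim = len(own[0])
    return [sum(w * h[i] for w, h in zip(weights, own)) for i in range(dim)]
```

With this choice, a word whose encoding aligns with the other reply receives a larger weight and therefore contributes more to the context vector.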

Optionally, the obtaining pending reply information in a plurality of different reply scenarios associated with the query information includes:

semantic analysis is carried out on the inquiry information, and pending reply information related to the inquiry information is screened from a candidate reply information set according to a semantic analysis result;

and if the pending reply information comprises pending reply information in a plurality of different reply scenes, executing the step of obtaining the logical relationship among the plurality of pending reply information.

The present application also provides a question-answering information processing apparatus, the apparatus including:

the information acquisition module is used for acquiring inquiry information of the product;

the obtaining module is used for obtaining pending reply information under a plurality of different reply scenes associated with the query information, and a logical relationship among the plurality of pieces of pending reply information;

a word vector acquisition module, configured to acquire a first word vector of each of the plurality of pieces of pending reply information and a second word vector of the associated word corresponding to the logical relationship;

the target reply information obtaining module is used for inputting the obtained plurality of first word vectors and the corresponding second word vectors into a reply fusion model to obtain target reply information for the query information;

and the information output module is used for outputting the target reply information.

Optionally, the target reply information obtaining module includes:

the encoding processing unit is used for inputting the obtained plurality of first word vectors into an encoding network of the reply fusion model for encoding to obtain corresponding first encoding vectors;

the context vector obtaining unit is used for processing the obtained plurality of first encoding vectors and the corresponding second word vectors through an attention mechanism to obtain context vectors of the plurality of pieces of pending reply information;

and the decoding processing unit is used for inputting the obtained context vectors into the decoding network of the reply fusion model for decoding, so as to obtain the target reply information for the query information.

The present application further provides a computer device, comprising:

a communication interface;

a memory for storing a program for implementing the question-answering information processing method as described above;

and the processor is used for loading and executing the program stored in the memory and realizing the steps of the question answering information processing method.

Therefore, compared with the prior art, the present application provides a method, an apparatus, and a computer device for processing question and answer information. When query information provided by a user triggers a plurality of reply scenes at the same time, that is, when the computer device finds pending reply information in a plurality of different reply scenes associated with the query information, the present embodiment obtains the logical relationship among the plurality of pieces of pending reply information. It then takes the first word vector of each piece of pending reply information and the second word vector representing the logical relationship as inputs to a pre-trained reply fusion model, so as to obtain a single compound target reply that carries the semantic logic. This makes the reply more natural and coherent and improves the user experience. In addition, compared with the prior art, in which target reply information is provided by manual labeling, this processing greatly reduces the manpower and material resources consumed by manual answer labeling.

Drawings

In order to more clearly illustrate the embodiments of the present application or the technical solutions in the prior art, the drawings needed to be used in the description of the embodiments or the prior art will be briefly introduced below, it is obvious that the drawings in the following description are only embodiments of the present application, and for those skilled in the art, other drawings can be obtained according to the provided drawings without creative efforts.

Fig. 1 is a diagram showing a system architecture for implementing the method for processing question and answer information proposed in the present application;

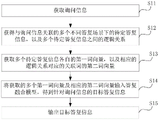

Fig. 2 is a diagram illustrating a hardware architecture of an alternative example of a computer device as set forth herein;

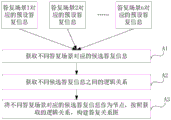

Fig. 3 is a flow chart illustrating an alternative example of a method for processing question and answer information according to the present application;

Fig. 4 is a schematic flow chart showing still another alternative example of the question-answering information processing method proposed in the present application;

Fig. 5 is a schematic flow chart illustrating an alternative example of constructing a reply relationship diagram in the question-answering information processing method proposed in the present application;

Fig. 6 is a schematic view showing a scene flow of still another alternative example of the question-answering information processing method proposed in the present application;

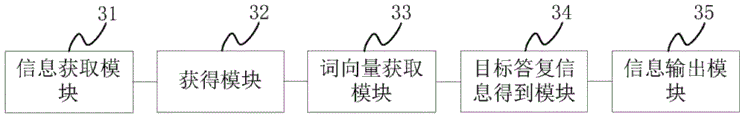

Fig. 7 is a schematic structural diagram showing an alternative example of the question-answering information processing apparatus proposed in the present application;

Fig. 8 is a schematic structural diagram showing still another alternative example of the question-answering information processing apparatus proposed by the present application;

Fig. 9 is a schematic structural diagram showing still another alternative example of the question-answering information processing apparatus proposed in the present application.

Detailed Description

The technical solutions in the embodiments of the present application will be clearly and completely described below with reference to the drawings in the embodiments of the present application, and it is obvious that the described embodiments are only a part of the embodiments of the present application, and not all of the embodiments. All other embodiments, which can be derived by a person skilled in the art from the embodiments given herein without making any creative effort, shall fall within the protection scope of the present application.

It should be noted that, for convenience of description, only the portions related to the related invention are shown in the drawings. The embodiments and features of the embodiments in the present application may be combined with each other without conflict.

It should be understood that "system", "apparatus", "unit" and/or "module" as used herein are terms for distinguishing different components, elements, parts or assemblies at different levels. However, these terms may be replaced by other expressions that accomplish the same purpose.

As used in this application and the appended claims, the terms "a," "an," and/or "the" do not refer specifically to the singular and may also include the plural, unless the context clearly dictates otherwise. In general, the terms "comprises" and "comprising" merely indicate that the explicitly identified steps and elements are included; these steps and elements do not form an exclusive list, and a method or apparatus may include other steps or elements. An element defined by the phrase "comprising a … …" does not exclude the presence of other identical elements in the process, method, article, or apparatus that comprises the element.

In the description of the embodiments herein, "/" means "or" unless otherwise specified; for example, A/B may mean A or B. "And/or" herein merely describes an association between associated objects and means that three relationships are possible; for example, A and/or B may mean: A exists alone, A and B exist simultaneously, or B exists alone. In addition, in the description of the embodiments of the present application, "a plurality" means two or more. The terms "first", "second" and the like are used for descriptive purposes only and are not to be construed as indicating or implying relative importance or implicitly indicating the number of technical features indicated. Thus, a feature defined as "first" or "second" may explicitly or implicitly include one or more of that feature.

Additionally, flow charts are used herein to illustrate operations performed by systems according to embodiments of the present application. It should be understood that the preceding or following operations are not necessarily performed in the exact order shown. Rather, the various steps may be processed in reverse order or simultaneously. Other operations may also be added to these processes, or one or more steps may be removed from them.

In the prior art scheme described in the background section, for query information provided by a user, a system may find multiple pieces of pending reply information associated with the query information among a large number of preset reply messages (which may be manually labeled). Because the current system feeds these pieces of pending reply information directly back to the user's client for output, the user has to analyze and understand the relationship among them on their own to arrive at the target reply information, which is cumbersome and affects the accuracy of the reply information fed back by the system.

To address these problems, the system is expected to directly provide more intuitive target reply information that the user does not need to analyze. That is, in the situation described above, the system is expected to process the multiple pieces of pending reply information it has found into one natural and coherent target reply and feed it back to the user. The user then no longer needs to rely on their own knowledge to analyze and understand the replies, which reduces the user's workload and greatly improves the accuracy of the reply information fed back by the system.

Under the above-described inventive concept, the present application provides a new method for processing question and answer information, and the specific implementation process may refer to but is not limited to the description of the corresponding parts of the following embodiments, which are not described in detail herein.

Referring to fig. 1, a system architecture diagram for implementing the method for processing question and answer information provided by the present application is shown, where the system may include an intelligent customer service system, and the present application does not limit a specific application scenario of the system, and as shown in fig. 1, the system may include a terminal 11 and a computer device 12, where:

the terminal 11 may be a smart phone, a tablet computer, a wearable device, an ultra-mobile personal computer (UMPC), a netbook, a Personal Digital Assistant (PDA), an e-book reader, a desktop computer, or another electronic device. The user may start a client in the terminal 11, access the intelligent customer service system, and input query information to obtain the target reply information that the intelligent customer service system feeds back for that query information. How the intelligent customer service system obtains the target reply information is described in the corresponding embodiments below.

The client may be a dedicated application installed in the terminal 11, such as some shopping applications, some social applications, some consulting applications, and the like, and may also be a browser in the terminal 11, in which case, the user may access the intelligent customer service system by inputting a corresponding website in the browser, and the application does not limit the type of the terminal 11 and the implementation manner of accessing the computer device 12, and may be determined according to a specific application scenario.

The computer device 12 may be a service device supporting a service of the intelligent customer service system, specifically, may be formed by one or more servers, and may also be a terminal with strong data processing and computing capabilities, and the application does not limit the product type of the computer device 12.

In general, if the client sending the query information is a dedicated application installed in the terminal 11, the computer device may be a service device matched with the client, such as a server providing services for the client. If the client sending the query information is a browser, that is, the client accesses the intelligent customer service system through a web page, the system may further include a server 13 matched with the client; the client may send the query information to the server 13, which forwards it to the corresponding computer device 12. In this case the computer device 12 may be a server or an electronic device with a certain data processing capability.

It should be understood that the system components for implementing the method for processing question and answer information are not limited to the above terminal 11 and computer device 12. The system may further include a data storage device that is communicatively connected to the computer device and stores content such as the preset reply information. Of course, the data storage device may also be disposed in the computer device 12. The present application does not limit the system components for implementing the method for processing question and answer information.

Referring to fig. 2, a schematic diagram of a hardware structure of an alternative example of a computer device proposed in the present application is shown, where the computer device may be a server as described in the above embodiments, or an electronic device with certain data processing and computing capabilities, and the present application does not limit the product type of the computer device, and as shown in fig. 2, the computer device may include: a communication interface 21, a memory 22, and a processor 23, wherein:

the number of each of the communication interface 21, the memory 22, and the processor 23 may be at least one, and the communication interface 21, the memory 22, and the processor 23 may communicate with each other through a communication bus.

The communication interface 21 may be an interface of a wireless communication module and/or a wired communication module, such as an interface of a WIFI module, a GPRS module, a GSM module, and other communication modules, and may further include an interface such as a USB interface, a serial/parallel interface, and other interfaces, which are used to implement data interaction between internal components of the computer device, and specifically, the communication interface of the computer device may be configured according to specific network communication requirements, and the type and number of the communication interface 21 are not limited in this application.

In practical applications, the communication interface may be used to acquire the query information, the preset reply information, the candidate reply information, and the like, and may also be used to implement data transmission between the components of the computer device. This may be determined according to the specific communication requirements of the question and answer information processing method and is not described in detail in this embodiment.

The memory 22 may store a program that implements the question-answering information processing method proposed in the embodiment of the present application.

In practical application of this embodiment, the memory 22 may also be used to store various intermediate data, acquired data, output data, and the like generated in the process of processing the question and answer information, such as pending answer information in different answer scenes, logical relationships between different pending answer information, candidate answer information, and the like.

Optionally, the memory may store program code for implementing the functional modules included in the virtual device, and may specifically be a high-speed RAM memory or a non-volatile memory, such as at least one disk memory.

The processor 23 may be a Central Processing Unit (CPU), an Application Specific Integrated Circuit (ASIC), a Digital Signal Processor (DSP), a field-programmable gate array (FPGA), or another programmable logic device.

In the present application, the processor 23 may be configured to load and execute the program stored in the memory 22 to implement the steps of the question and answer information processing method proposed in the present application, and as for the steps of the question and answer information processing method, reference may be made to the following description of corresponding parts of the embodiments of the method, which are not described in detail herein.

It should be understood that the structure of the computer device shown in fig. 2 does not constitute a limitation on the computer device in the embodiments of the present application. In practical applications, the computer device may include more or fewer components than those shown in fig. 2, or may combine some components, which are not listed here.

Referring to fig. 3, a flow chart of an alternative example of the method for processing question and answer information provided by the present application is shown. The method may be applied to a computer device; for the composition and product type of the computer device, reference may be made to, but is not limited to, the description of the computer device embodiment above, and both may be determined according to actual requirements. As shown in fig. 3, the method for processing question and answer information provided by this embodiment may include, but is not limited to, the following steps:

step S11, acquiring inquiry information;

the query information may be any query content input by the user, and the specific query content included in the query information is not limited in the present application and may be determined according to a specific application scenario.

In a possible implementation, a user can use a terminal to log in to the application platform that hosts the intelligent customer service system to be consulted, and directly enter the query information in a query input box of the application platform; for example, the user can input "select which button to take a picture?". The terminal may detect the query information entered in the query input box and send it to the computer device. Of course, the computer device may also detect, in real time or periodically, whether query information has been entered in the query input box in order to obtain it. The present application therefore does not limit the specific manner in which the computer device acquires the query information, which is not restricted to the two implementations listed above.

In addition, for the input of the query information, the user may input the query information to the query input box by using an input device such as a keyboard or a touch screen, or may input the query information to the query input box by using a voice input method.

Of course, in some embodiments, the query information need not be typed directly into the query input box by the user; it may instead result from the user's operation on an application platform, with the application platform automatically generating the query information based on that operation. For example, the application platform may push question information that may interest the user, based on the user's browsing history, the user's interests, hot questions about the currently browsed object, and the like, and output it on the current interface, so that the user may select one piece of question information as the query information and send it to the computer device. The manner of generating the query information is not limited to that described in this embodiment.

Step S12, obtaining pending reply information under a plurality of different reply scenes associated with the query information and logic relations among the pending reply information;

in order to improve the efficiency of question answering and reduce the labor cost, a large number of questions (namely query information) which may be proposed by a user are generally counted, and at least one piece of answer information is configured for each question, so that after a certain query information is proposed by the user, the system can rapidly and relatively accurately give the answer information.

However, in practical applications, a system may find multiple preconfigured reply messages for one piece of query information. To make the reply finally fed back to the user natural and coherent, the application proposes to analyze the multiple reply messages to obtain one target reply message. In this regard, the research behind the application found that a large number of preconfigured reply messages often belong to different reply scenes, and that certain logical relationships, such as adversative (turning) relationships, progressive relationships, and parallel relationships, may exist among the reply messages in different reply scenes. Therefore, to obtain natural and coherent target reply information, the application can analyze the reply information according to the logical relationships between the reply information in different reply scenes.

Specifically, in order to improve the efficiency of obtaining the target reply information, the present application may classify a large number of preconfigured reply information in reply scenarios, that is, determine reply scenarios to which the large number of preconfigured reply information belong, so that each reply scenario may have at least one preset reply information, and determine a logical relationship between the reply information of different reply scenarios by analyzing the reply information content of the different reply scenarios, where a specific analysis process is not described in detail.

For convenience of subsequent description, the set of different reply scenes $s$ may be denoted as $S$, and the reply set formed by the preset reply information $r$ in each reply scene may be denoted as $R$. That is, $S = \{s_1, s_2, \dots, s_n\}$, where each element of the set $S$ may represent a reply scene of preset reply information in the intelligent customer service system, and $R = \{r_1, r_2, \dots, r_m\}$, where each element of the set $R$ may represent a piece of preset reply information in a reply scene. The application does not limit the specific values of $n$ and $m$.

In combination with the above analysis, it should be understood that, for the preset reply information configured in advance, it is usually determined for the query information that may be provided by the user, that is, each preset reply information has a certain association relationship with each preset query information, so that, after the computer device obtains the query information input by the current user, the computer device may query the preset reply information associated with the query information by using the association relationships, and in order to distinguish other preset reply information, the application marks the preset reply information associated with the query information as pending reply information.

It should be understood that if the computer device finds only one piece of pending reply information associated with the query information, that is, pending reply information in a single reply scenario, the application may directly output that pending reply information as the target reply information. If the computer device finds multiple pieces of pending reply information belonging to different reply scenarios, that is, pending reply information in multiple reply scenarios associated with the query information, further analysis is needed to obtain one target reply message. In this case, the present application may obtain the logical relationship between the multiple pieces of pending reply information.
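The branching described above can be sketched as follows. This is a minimal illustration, not the application's implementation: the function name `select_target_reply`, the `associations` lookup table, and the `fuse` callback (standing in for the later fusion processing) are all hypothetical.

```python
from typing import Callable, Dict, List


def select_target_reply(
    query: str,
    associations: Dict[str, List[str]],
    fuse: Callable[[List[str]], str],
) -> str:
    """Return the target reply for a query, fusing only when several replies match."""
    # Hypothetical lookup: query information -> associated pending reply information.
    pending = associations.get(query, [])
    if not pending:
        raise LookupError(f"no preset reply associated with query: {query!r}")
    if len(pending) == 1:
        # A single reply scenario was triggered: output the pending reply directly.
        return pending[0]
    # Several reply scenarios were triggered: further fusion processing is needed.
    return fuse(pending)
```

Here `fuse` is only a placeholder for whatever fusion step is used; any callable that accepts the list of pending replies and returns one sentence fits.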

The logical relationship between the preset reply messages of different reply scenarios may be determined by analyzing the content of the preset reply messages as described in the corresponding parts above, or may be determined by manual analysis or in other ways.

It should be noted that the logical relationships between different preset reply messages in reply scenario A and reply scenario B may be the same or different, and may be determined according to the specific content of the preset reply messages. The application may denote the set of logical relationships between preset reply messages of different reply scenarios as $G = \{g_1 : d_{i,j};\ g_2 \dots;\ g_m \dots\}$ $(i, j < n)$, where $d_{i,j}$ may represent a sentence formed by fusing preset reply information in the i-th reply scenario with preset reply information in the j-th reply scenario, m may represent the total number of logical relationships between the preset reply information of all the different reply scenarios, and each logical relationship corresponds to an associated word $g_k$ $(k < m)$ contained in the fused sentence.

It can be seen that, for the logical relationship (i.e. semantic logical relationship) of different response scenarios, the related word gk capable of representing the logical relationship can be used to represent, and the related word is integrated into the composite sentence (i.e. target response information) composed of multiple pieces of pending response information, so that the composite sentence can not only indicate the content of the multiple pieces of pending response information, but also indicate the logical relationship between the content of the multiple pieces of pending response information, and further make the obtained composite sentence more natural and coherent.

The application can determine and store, according to actual conditions, the associated words gk applicable to each logical relationship, so that they can be called in actual use. For example, the associated words corresponding to the adversative (turning) relationship may be "although …", "… but …", and the like; the associated words corresponding to the parallel relationship may be "both … and …", "on the one hand … on the other hand …", and the like; the associated words corresponding to the progressive relationship may be "not only … but also …" and the like; the associated words corresponding to the selection relationship may be "… or …", "… rather than …", and the like. For associated words corresponding to other logical relationships, such as causal, hypothetical, sequential, and conditional relationships, the associated words may be determined according to semantic grammar, and detailed description thereof is omitted.
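Stored associated words of this kind can be pictured as a small lookup table keyed by logical relationship. The sketch below is illustrative only: the key names, the English templates, and the helper `fill_template` are assumptions, and simple template filling is shown in place of the model-based fusion the application describes.

```python
# Hypothetical table: logical relationship -> sentence pattern built from its associated words.
ASSOCIATED_WORD_TEMPLATES = {
    "adversative": "{first}, but {second}",
    "parallel": "both {first} and {second}",
    "progressive": "not only {first} but also {second}",
    "selection": "{first} or {second}",
}


def fill_template(relation: str, first: str, second: str) -> str:
    """Join two reply fragments with the associated words stored for a relationship."""
    template = ASSOCIATED_WORD_TEMPLATES[relation]
    return template.format(first=first, second=second)
```

For example, `fill_template("progressive", "the order ships today", "it includes free returns")` yields a sentence joined by "not only … but also …".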

Step S13, acquiring respective first word vectors of a plurality of pieces of undetermined reply information and second word vectors of relevant words corresponding to corresponding logical relations;

Following the above analysis, this embodiment addresses how to generate, when the user asks a question in which multiple reply scenarios coexist, a composite sentence containing the logical relationship (which may be represented by the corresponding associated word) according to the logical relationship among the multiple reply scenarios, so as to better express the logical relationship among the reply information of each reply scenario.

Based on this, in this embodiment, a pre-constructed model may be used to fuse the obtained multiple pieces of pending reply information with the corresponding associated words. Accordingly, after obtaining the multiple pieces of pending reply information and the associated words representing the corresponding logical relationship, this embodiment may use a vector conversion method to convert the words contained in each piece of pending reply information into word vectors (the first word vectors) and to convert the words contained in the associated words into word vectors (the second word vectors).

Word embedding is a collective term in natural language processing (NLP) for a set of language modeling and feature learning techniques in which words or phrases from a vocabulary are mapped to vectors of real numbers. Conceptually, it involves a mathematical embedding from a space with one dimension per word to a continuous vector space with a much lower dimension. The application can process the words contained in the pending reply information with algorithms such as neural networks to obtain the corresponding first word vectors and, similarly, process the obtained associated words to obtain the corresponding second word vectors; the specific implementation process is not detailed.
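As a minimal sketch of the vector conversion, the example below maps each word to a fixed-length vector through a lookup table. The random vectors merely stand in for embeddings that would in practice be learned by a neural network; the function names, whitespace tokenization, and zero vector for unknown words are assumptions made for illustration.

```python
import random
from typing import Dict, List


def build_embeddings(vocabulary: List[str], dim: int, seed: int = 0) -> Dict[str, List[float]]:
    """Assign each vocabulary word a random vector (a stand-in for learned embeddings)."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for word in vocabulary}


def to_word_vectors(text: str, embeddings: Dict[str, List[float]], dim: int) -> List[List[float]]:
    """Convert whitespace-separated words into word vectors; unknown words map to zeros."""
    unknown = [0.0] * dim
    return [embeddings.get(token, unknown) for token in text.lower().split()]
```

Applying `to_word_vectors` to a piece of pending reply information would give its first word vectors, and applying it to an associated word such as "but" would give the second word vector.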

Step S14, inputting the obtained multiple first word vectors and corresponding second word vectors into a reply fusion model to obtain target reply information aiming at the inquiry information;

in this embodiment, the reply fusion model is the model for realizing the fusion of the multiple pieces of pending reply information and the associated words to obtain the target reply information, and may be obtained by training the sample reply information and the associated words corresponding to the sample reply information based on a machine learning algorithm.

In some embodiments, a reply scenario set S composed of a plurality of reply scenarios, and a corresponding set R composed of the preset reply messages in each reply scenario, may be predefined in the above manner. The relationships between different reply scenarios may be categorized using the preset reply messages corresponding to those scenarios, so as to define the logical relationships between the different reply scenarios. According to the logical relationship between different reply scenarios, the corresponding preset reply information is fused to obtain composite sentences, thereby defining a fused reply data set D for the different reply scenarios, $D = \{d_{i,j}\}$ $(i, j < n)$, where $d_{i,j}$ may represent a composite sentence formed by fusing preset reply information in the i-th reply scenario with preset reply information in the j-th reply scenario. These composite sentences can be used as standard reply information to correct the model during training, which improves the model output accuracy.

The sentence templates with corresponding logical relationships can be obtained through analysis for the compound sentences in the set D, so as to assist in implementing the fusion processing of the plurality of preset reply information corresponding to the logical relationships.

In addition, the logical relationships existing between the different reply scenarios may specifically form a set G of logical relationships given by the associated words gk in the sentences included in the set D. For the specific definition process of these sets and their representation, reference may be made to, but is not limited to, the manner described above.

Based on this preprocessing, simple preset reply information may be selected from the preset reply data set R corresponding to the different reply scenarios as sample reply information, and its word vectors obtained through vector conversion. The associated words representing the logical relationship corresponding to the reply scenarios are looked up in the set G and, as sample associated words, converted into corresponding word vectors. A machine learning algorithm such as a neural network may then be trained on the word vectors of the sample reply information and of the corresponding sample associated words to produce a composite sentence that contains the sample associated words and indicates the content of the sample reply information. This composite sentence is semantically compared with the composite sentence for the corresponding preset reply scenarios in the set D. If the comparison result does not satisfy the constraint condition, model training continues in the above manner until the comparison result satisfies the constraint condition. The content of the constraint condition is not limited; for example, it may be a threshold on the semantic similarity.

To improve the accuracy of the model output, an attention mechanism may be combined into the model training process. The attention mechanism is a resource allocation scheme and a main means of solving the information overload problem when computing capacity is limited: computing resources are allocated to the more important tasks. It can be regarded as bionic, in that the machine simulates the attention behavior of a human reading or listening, and the attention mechanism lets the computer selectively forget and associate context.

Based on this, attention weights can be added to a hidden layer of the neural network so that content that does not fit the attention model is weakened or forgotten. For example, for the words contained in the preset reply information of different reply scenarios, the attention mechanism is used to determine the words that are more important and more accurate for the expressed semantics and to increase their weights, so that these words are more likely to be selected as words of the target reply information (i.e., the composite sentence) in subsequent training, which further improves the model output accuracy. The specific process of training the reply fusion model with a neural network and an attention mechanism is not detailed in this application.

Combining the above analysis of the reply fusion model, when the model processes the first word vectors of the multiple pieces of pending reply information and the second word vector of the associated word representing the logical relationship among them, it considers not only the respective semantics of the pieces of pending reply information but also the semantic logical relationship between them, and fuses that logical relationship into the target reply information obtained by model processing.

It should be noted that the target response information obtained in this embodiment may be added to the set D to implement optimization of the response fusion model used in this application, and further improve accuracy of model output.

In step S15, the target reply information is output.

In this embodiment, after obtaining the target reply information of the query information provided by the user, the computer device may send the target reply information to a client interface of the user providing the query information for the user to view, and may also send the target reply information to a preset bound terminal for output, and the like, which is not described in detail in the present application for the specific implementation process of step S15.

In summary, when query information provided by a user triggers multiple reply scenarios at the same time, that is, when the computer device finds pending reply information in multiple different reply scenarios associated with the query information, this embodiment may obtain the logical relationship between the pieces of pending reply information, and then input the first word vector of each piece of pending reply information and the second word vector representing the logical relationship into a pre-trained reply fusion model for processing. This yields composite target reply information containing semantic logic, making the reply more natural and coherent and further improving the user experience. Compared with the prior art, in which target reply information is provided by manual labeling, this processing mode greatly reduces the manpower and material resources required for manual answer labeling.

Referring to fig. 4, a schematic flow chart of another optional example of the question and answer information processing method provided in the present application is shown, where this embodiment may be an optional detailed implementation manner of the question and answer information processing method provided in the foregoing embodiment, and as shown in fig. 4, this method may include:

step S21, acquiring inquiry information;

step S22, obtaining pending reply information under a plurality of different reply scenes associated with the query information;

for specific implementation processes of step S21 and step S22, reference may be made to the description of corresponding parts in the foregoing embodiments, and details are not repeated in this embodiment. In addition, the present embodiment mainly describes how the computer device obtains the target response information and feeds back the target response information to the user when the query information provided by the user triggers multiple response scenarios, but it should be understood that, in some embodiments, the query information provided by the user may further trigger one response scenario, and in this case, the pending response information associated with the query information may be directly fed back to the user.

Therefore, after the computer device acquires the query information, it can perform semantic analysis on the query information and screen pending reply information associated with the query information from the candidate reply information set according to the semantic analysis result. If multiple pieces of pending reply information are found and they correspond to multiple different reply scenarios, the corresponding target reply information can be obtained by the processing mode provided in this embodiment; if only one piece of pending reply information is found, it can be output directly as the target reply information.

Step S23, inquiring the reply relation graph to obtain the logic relation among a plurality of pending reply information;

In combination with the above analysis, in practical applications a user question may involve multiple coexisting reply scenarios, that is, the query information provided by the user may trigger multiple reply scenarios. If each reply scenario is answered independently and the pending reply information of the different reply scenarios is simply put together as the reply, the user has to work out the relationship between the pieces of pending reply information and their meaning unaided. To address this problem, the present embodiment considers the logical relationship between different reply scenarios and uses it to reconstruct the pending reply information of the different reply scenarios into a sentence, obtaining more coherent and logical reply information.

Therefore, the present application may define the logical relationship between different response scenarios according to rules such as semantic grammar, etc., to construct the response relationship graph, so that the response relationship graph may include the logical relationship between the response information in different response scenarios, and the embodiment does not limit the specific construction process of the response relationship graph.

In one possible implementation, referring to a flowchart of an alternative example of constructing an answer relationship graph shown in fig. 5, the constructing method may include:

step A1, acquiring candidate reply information corresponding to different reply scenes;

In combination with the above analysis, the present application may classify the preset reply messages by reply scenario and use the preset reply messages belonging to each reply scenario as the candidate reply messages of that scenario, or may select some of the preset reply messages corresponding to each reply scenario as the candidate reply messages, for example, those with a higher utilization rate or better user feedback.

Step A2, obtaining the logical relationship between different candidate reply messages;

regarding the manner of obtaining the logical relationship between different candidate reply information, reference may be made to the above description of the process of obtaining the logical relationship between the reply information in different reply scenarios, and this embodiment is not described again.

And step A3, taking candidate reply information corresponding to different reply scenes as nodes, and constructing a reply relation graph according to the acquired logical relation.

The reply relationship graph of this embodiment may be a directed graph. A graph is a way of representing relationships between objects and consists of points (called vertices or nodes) and straight lines or curves (called edges) connecting them. If each edge of the graph is assigned a direction, the resulting graph is called a directed graph, and its edges are called directed edges.

Based on this, in this embodiment, candidate reply information corresponding to different reply scenarios may be used as nodes of a directed graph, and a directed edge between the nodes of the directed graph is determined according to a logical relationship between different candidate reply information, so as to obtain a reply relationship graph, so that when a logical relationship between pending reply information is subsequently queried, a plurality of pending reply information may be directly determined from the reply relationship graph, and a logical relationship corresponding to the directed edge between the plurality of pending reply information is determined as a logical relationship between the plurality of pending reply information, where a logical precedence order between two connected nodes is indicated according to a direction of the directed edge.
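The construction and query of such a directed graph can be sketched as below. The class name `ReplyRelationGraph`, its methods, and the use of short string identifiers for nodes are hypothetical choices for illustration; the application does not limit how the graph is stored.

```python
from typing import Dict, Optional, Tuple


class ReplyRelationGraph:
    """Directed graph whose nodes are candidate replies and whose edges carry logical relationships."""

    def __init__(self) -> None:
        # (source node, target node) -> logical relationship on that directed edge
        self._edges: Dict[Tuple[str, str], str] = {}

    def add_relation(self, source: str, target: str, relation: str) -> None:
        """Add a directed edge; its direction gives the logical order of the two nodes."""
        self._edges[(source, target)] = relation

    def relation_between(self, first: str, second: str) -> Optional[Tuple[str, str, str]]:
        """Return (earlier node, later node, relationship), or None if the nodes are not connected."""
        if (first, second) in self._edges:
            return first, second, self._edges[(first, second)]
        if (second, first) in self._edges:
            return second, first, self._edges[(second, first)]
        return None
```

A query with two pending replies returns both the relationship and the order implied by the edge direction, regardless of the order in which the two replies are passed in.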

In some embodiments, for the above-mentioned reply relationship graph, a corresponding reply relationship graph may also be constructed for different predetermined reply scenarios in the above-mentioned manner, in this case, the reply relationship graph may have different reply scenarios as nodes, and the directed edges between the corresponding nodes are determined by the logical relationship between the different reply scenarios. In this construction mode, the logical relationship between the corresponding pieces of pending reply information can be obtained by inquiring the logical relationship between the reply scenes to which the pieces of pending reply information belong.

It should be noted that, if a new response scenario is added, the response relationship graph may be updated accordingly, so as to improve the accuracy of subsequent processing of the question and answer information. The storage mode and the updating mode of the reply relational graph are not limited in the application.

Step S24, acquiring the relevant words corresponding to the obtained logical relationship;

for the associated words representing different logical relationships, reference may be made to the description of the corresponding parts in the above embodiments, which is not described in detail in this embodiment.

Step S25, acquiring respective first word vectors of a plurality of pieces of to-be-determined reply information and second word vectors corresponding to corresponding associated words;

step S26, inputting the obtained multiple first word vectors into a coding network of a reply fusion model for coding, and obtaining corresponding first coding vectors;

For the training process of the reply fusion model, reference may be made to the description of the above embodiments, and details are not repeated. In some embodiments, the coding network of the reply fusion model may be a recurrent neural network, such as a bi-directional long short-term memory network (Bi-LSTM). This network structure provides the output layer with complete past and future context information for each point in the input sequence. The specific coding process of the first word vectors is not described in detail in this application.

Still taking the Bi-LSTM coding network as an example, the pending reply information ri in reply scenario i and the pending reply information rj in reply scenario j are converted into corresponding first word vectors, which are input into the Bi-LSTM for coding to obtain hidden-layer vector representations of the sentences, that is, the first coding vectors corresponding to the different pending reply information, denoted $h_i$ and $h_j$. Taking the coding of the first word vector of ri into the first coding vector $h_i$ as an example, the coding process may be performed according to, but is not limited to, the following equation:

In the above formula, $f_{LSTM}()$ may represent an LSTM-based coding function, that is, the coding network described above; the coding calculation of such a network is not described in detail herein. The symbol $\oplus$ represents the exclusive-or logical operator. Since this embodiment uses Bi-LSTM, a bidirectional long short-term memory network, two LSTM networks perform the forward and backward operations respectively: in the above formula, $\overrightarrow{f_{LSTM}}$ may represent the forward operation and $\overleftarrow{f_{LSTM}}$ may represent the backward operation. The application does not detail the specific LSTM-based forward and backward operations.
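To make the forward and backward passes concrete, the following sketch runs two small LSTMs over a sequence of word vectors, one in each direction, and joins the two hidden states at every position. It is only an illustration under stated assumptions: weights are random rather than trained, bias terms are omitted, all function names are hypothetical, and the two directions are joined by concatenation, which is a common Bi-LSTM choice and not necessarily the combining operator used in the application.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_lstm_params(input_dim, hidden_dim, rng):
    """Random weights for the input, forget, output and candidate gates (no biases)."""
    size = input_dim + hidden_dim
    return {gate: [[rng.uniform(-0.1, 0.1) for _ in range(size)]
                   for _ in range(hidden_dim)]
            for gate in ("i", "f", "o", "g")}


def lstm_step(params, x, h, c):
    """One LSTM time step: returns the new hidden state and cell state."""
    z = x + h  # list concatenation of the input vector and previous hidden state

    def gate(name, activation):
        return [activation(sum(w * v for w, v in zip(row, z))) for row in params[name]]

    i, f, o = gate("i", sigmoid), gate("f", sigmoid), gate("o", sigmoid)
    g = gate("g", math.tanh)
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def run_lstm(params, vectors, hidden_dim):
    """Run an LSTM over a sequence and collect the hidden state at each position."""
    h, c = [0.0] * hidden_dim, [0.0] * hidden_dim
    states = []
    for x in vectors:
        h, c = lstm_step(params, x, h, c)
        states.append(h)
    return states


def bilstm_encode(word_vectors, fwd_params, bwd_params, hidden_dim):
    """Encode a sentence with a forward and a backward LSTM."""
    forward = run_lstm(fwd_params, word_vectors, hidden_dim)
    backward = run_lstm(bwd_params, word_vectors[::-1], hidden_dim)[::-1]
    # Assumption: join the two directions by concatenation at each position.
    return [f + b for f, b in zip(forward, backward)]
```

Each position of the result has twice the hidden size, since it carries both the left-to-right and the right-to-left context of that word.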

Step S27, processing the obtained multiple first coding vectors and corresponding second word vectors through an attention mechanism to obtain multiple context vectors of the to-be-determined reply information;

for the analysis of the attention mechanism, reference may be made to the description of the corresponding parts above, and the operation principle of the present embodiment is not described in detail.

In addition, in line with the inventive concept described above, when processing the first coding vectors corresponding to the multiple pieces of pending reply information, the present application also uses the second word vector corresponding to the logical relationship between them, so that the resulting context vector can represent both the semantics of the multiple pieces of pending reply information and the semantic logic between them.

In addition, in order to improve the accuracy of the obtained context vector, the present application combines an attention mechanism to process a plurality of first encoding vectors and corresponding second word vectors, and the specific processing manner is not limited. In some embodiments, the weight of each constituent element of each first encoding vector may be calculated using the following formula to determine the elements that make up the context vector.

In the above formula, v and W may both represent parameters preset in the model, which may be determined according to actual requirements; the application does not limit their values. $\psi(t)$ may represent the second word vectors of all words in the associated word g1, and $h_{it}$ may represent the hidden state of the last layer of $h_i$, such as the output of the last hidden layer of the neural network, which is not described in detail.

Based on the above analysis, in some possible implementations, the step S27 may include:

step B1, similarity calculation is carried out on elements contained in different first encoding vectors to obtain word weights of corresponding undetermined reply information;

the word weight can indicate the importance degree of the corresponding word relative to another pending reply message with a logical relationship, and in general, the greater the word weight, the more important the corresponding word relative to the another pending reply message is, so that the semantics of the pending reply message can be embodied more accurately.

Note that the present application does not limit the method of calculating the similarity in step B1.

And step B2, performing weighted operation on the obtained multiple first coding vectors by using the obtained word weights to obtain multiple context vectors of the to-be-determined reply information.

The weighted operation may be performed as described in equations (3) and (4) above.
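Steps B1 and B2 can be sketched as follows. The sketch is an assumption-laden illustration rather than the application's formula: similarity is taken to be a dot product, the query is formed by adding the mean coding vector of the other pending reply to the associated-word vector `psi` (which is assumed to have the same dimension), and the weights are normalized with softmax. All function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Normalize scores into weights that sum to 1 (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_vector(vectors):
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def context_vector(h_i, h_j, psi):
    """Step B1: word weights for reply i; step B2: weighted sum of its coding vectors."""
    # Assumed query: summary of the other reply plus the associated-word vector.
    query = [a + b for a, b in zip(mean_vector(h_j), psi)]
    # B1: similarity of each word's coding vector to the query, normalized to word weights.
    weights = softmax([dot(h_t, query) for h_t in h_i])
    # B2: weighted operation over the coding vectors of reply i.
    dim = len(h_i[0])
    context = [sum(w * h_t[k] for w, h_t in zip(weights, h_i)) for k in range(dim)]
    return weights, context
```

Words of reply i whose coding vectors align more closely with the other reply and the associated word receive larger weights and therefore contribute more to the context vector.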

step S28, inputting the obtained context vector into a decoding network of the reply fusion model for decoding processing to obtain target reply information aiming at the inquiry information;

in this embodiment, the decoding network of the reply fusion model and the coding network may have the same network structure, and may both be a recurrent neural network, and specifically may be the Bi-LSTM network, and after the network hidden layer state is obtained in the above manner, the generation probability of each word in the obtained context vector may be continuously calculated, so that a word with a higher generation probability is selected to constitute the target reply information, and a specific operation method is not limited.

Following the above example of the network structure of the reply fusion model, the present embodiment may obtain the target reply information by using the calculation result of the following formula, but is not limited to the calculation formula given in the present application.

$$h'_t = f_{LSTM}\left(h'_{t-1},\, d_{i,j}^{\,t-1}\right) \qquad (5)$$

In the above formula, softmax() may represent a normalization function, whose operation principle is not limited in the present application; $W_p$ and $b_p$ may represent parameters preset in the reply fusion model, whose specific values the application does not limit; $h'_t$ may represent the hidden state of the hidden layer produced at time t in the decoding network, where $f_{LSTM}()$ may represent an LSTM-based decoding function; and $d_{i,j}^{\,t}$ may represent the composite sentence obtained at time t when the reply fusion model fuses the pending reply information corresponding to reply scenario i and reply scenario j.
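The word selection step of the decoder can be sketched as follows, assuming the generation probability takes the common form softmax(W_p h'_t + b_p); the text names these symbols but this exact form, the function names, and the greedy choice of the most probable word are assumptions for illustration.

```python
import math


def softmax(scores):
    """Normalize scores into probabilities (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def word_probabilities(h_t, W_p, b_p):
    """Assumed form: p = softmax(W_p * h_t + b_p), one probability per vocabulary word."""
    logits = [sum(w * h for w, h in zip(row, h_t)) + b for row, b in zip(W_p, b_p)]
    return softmax(logits)


def pick_word(h_t, W_p, b_p, vocabulary):
    """Greedy choice: return the word with the highest generation probability."""
    probs = word_probabilities(h_t, W_p, b_p)
    best = max(range(len(vocabulary)), key=probs.__getitem__)
    return vocabulary[best], probs[best]
```

Repeating this choice at each time step, with the chosen word fed back into the decoding function, builds up the composite sentence one word at a time.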

It should be noted that the network structure of the decoding network for reply fusion model and the decoding process of the context vector thereof are not limited to the above-described implementation.

In step S29, the target reply information is output.

In summary, with reference to the scenario diagram shown in fig. 6, when multiple pieces of pending reply information associated with the obtained query information belong to different reply scenarios, this embodiment may obtain the associated words representing the logical relationship between them, and then obtain the first word vector of each piece of pending reply information and the second word vector of the corresponding associated word. The first and second word vectors are input to the pre-trained reply fusion model for processing. For example, the first word vectors corresponding to the pending reply information of the different reply scenarios are encoded to obtain the corresponding first coding vectors output by the hidden layer; these are then processed together with the second word vector representing the logical relationship to obtain a context vector that expresses both the semantics of each piece of pending reply information and the logical relationship between them; the context vector is then decoded to obtain target reply information that likewise expresses both. Compared with directly listing the multiple pieces of pending reply information, this improves the accuracy and reliability of the reply, and it saves manpower and material resources because the user does not need to analyze the semantics of the output pending reply information.

Referring to fig. 7, a schematic structural diagram of an alternative example of the question and answer information processing apparatus proposed in the present application is shown. The apparatus may be applied to a computer device and, as shown in fig. 7, may include:

an information acquisition module 31, configured to acquire inquiry information;

an obtaining module 32, configured to obtain pending reply information in a plurality of different reply scenarios associated with the query information, and the logical relationships between the plurality of pieces of pending reply information;

in some embodiments, as shown in fig. 8, the obtaining module 32 may include:

a semantic analysis unit 321 configured to perform semantic analysis on the query information;

a screening unit 322, configured to screen pending reply information associated with the query information from the candidate reply information set according to a semantic analysis result;

the query unit 323 is configured to query the response relationship graph to obtain a logical relationship between multiple pieces of pending response information when the pending response information includes multiple pieces of pending response information in different response scenarios.

In some embodiments, in order to implement the construction of the response relationship graph, the question-answer information processing apparatus provided in the present application may further include:

the candidate reply information acquisition module is used for acquiring candidate reply information corresponding to different reply scenes;

the logic relation acquisition module is used for acquiring the logic relation among different candidate reply messages;

and the construction module is used for constructing the reply relation graph by taking the candidate reply information corresponding to the different reply scenes as nodes according to the logical relation.

It should be noted that, for the specific construction process of the reply relationship diagram, reference may be made to the description of the corresponding part of the foregoing method embodiment, and details are not described again.

A word vector obtaining module 33, configured to obtain respective first word vectors of the multiple pieces of pending reply information, and second word vectors of the associated words corresponding to the respective logical relationships;

a target reply information obtaining module 34, configured to input the obtained multiple first word vectors and the corresponding second word vectors into a reply fusion model, so as to obtain target reply information for the query information;

in some embodiments, as shown in fig. 9, the target reply information obtaining module 34 may include:

an encoding processing unit 341, configured to input the obtained first word vectors into an encoding network of the reply fusion model for encoding, so as to obtain the corresponding first encoding vectors;

a context vector obtaining unit 342, configured to process, through an attention mechanism, the obtained first encoding vectors and the corresponding second word vectors to obtain context vectors of the multiple pieces of pending reply information.

In one possible implementation, the context vector obtaining unit 342 may include:

a similarity calculation unit, configured to perform similarity calculation on the elements contained in different first encoding vectors to obtain word weights of the corresponding pending reply information, wherein a word weight indicates the importance of the corresponding word relative to another piece of pending reply information with which it has a logical relationship;

and a weighting operation unit, configured to perform a weighted operation on the obtained first encoding vectors using the obtained word weights, so as to obtain the context vectors of the multiple pieces of pending reply information.

a decoding processing unit 343, configured to input the obtained context vectors into the decoding network of the reply fusion model for decoding, so as to obtain target reply information for the query information.
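The similarity calculation and weighting operation of unit 342 can be illustrated with a small pure-Python sketch. It rests on assumptions not stated in this section: dot-product similarity, scoring each word by its best match in the other reply, and softmax normalization are illustrative choices, and the sketch leaves out the second word vectors because this section does not specify how they are combined.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def word_weights(enc_a, enc_b):
    """Weight each word of reply A by its best similarity to any word of reply B.

    enc_a and enc_b are lists of per-word encoding vectors (assumed layout).
    """
    scores = [max(dot(h_a, h_b) for h_b in enc_b) for h_a in enc_a]
    return softmax(scores)


def context_vector(enc, weights):
    """Weighted sum of the per-word encoding vectors."""
    dim = len(enc[0])
    return [sum(w * h[i] for w, h in zip(weights, enc)) for i in range(dim)]


# Toy encodings: reply A has two words, reply B has one word.
enc_a = [[1.0, 0.0], [0.0, 1.0]]
enc_b = [[2.0, 0.0]]

weights = word_weights(enc_a, enc_b)
context = context_vector(enc_a, weights)
```

In this toy case the first word of reply A aligns with reply B and therefore receives the larger weight, so the context vector is dominated by that word's encoding.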

In this embodiment, the encoding network and the decoding network are both recurrent neural networks, and may specifically be BiLSTM networks. How such a network structure implements the encoding and decoding processes can be determined by referring to the above embodiments and the operating principle of bidirectional recurrent neural networks, and is not described in detail in this embodiment.

And an information output module 35, configured to output the target reply information.

It should be noted that the modules, units, and the like in the foregoing apparatus embodiments may be stored in the memory as program modules, and the processor executes the program modules stored in the memory to implement the corresponding functions. For the functions implemented by the program modules and their combinations, and the technical effects achieved, reference may be made to the description of the corresponding parts of the foregoing method embodiments, which is not repeated in this embodiment.

The present application also provides a storage medium on which a computer program can be stored, where the computer program can be called and loaded by a processor to implement the steps of the question-answering information processing method described in the above embodiments.

Embodiments of the present application also provide a computer device which, as shown in Fig. 2 above, may include, but is not limited to, the information interface 21, the memory 22, the processor 23, and the like. For the composition and functions of the computer device, refer to the description of the corresponding parts of the above embodiments; they are not described again in this embodiment.

Finally, it should be noted that the embodiments in this specification are described in a progressive or parallel manner: each embodiment focuses on its differences from the other embodiments, and for the same or similar parts the embodiments may be referred to one another. Because the apparatus and the computer device disclosed in the embodiments correspond to the method disclosed in the embodiments, their description is relatively brief, and the relevant details can be found in the description of the method.

The previous description of the disclosed embodiments is provided to enable any person skilled in the art to make or use the present application. Various modifications to these embodiments will be readily apparent to those skilled in the art, and the generic principles defined herein may be applied to other embodiments without departing from the spirit or scope of the application. Thus, the present application is not intended to be limited to the embodiments shown herein but is to be accorded the widest scope consistent with the principles and novel features disclosed herein.

Claims (10)

1. A question-answer information processing method, the method comprising:

acquiring query information;

obtaining pending reply information under a plurality of different reply scenes associated with the query information, and logical relationships among the plurality of pieces of pending reply information;

acquiring respective first word vectors of the plurality of pieces of pending reply information and second word vectors of the associated words corresponding to the logical relationships;

inputting the obtained plurality of first word vectors and the corresponding second word vectors into a reply fusion model to obtain target reply information for the query information;

and outputting the target reply information.

2. The method of claim 1, wherein said obtaining logical relationships among the plurality of pieces of pending reply information comprises:

querying a reply relation graph to obtain the logical relationships among the plurality of pieces of pending reply information, wherein the reply relation graph contains the logical relationships among reply information in different reply scenes.

3. The method of claim 2, wherein the obtaining of the reply relationship graph comprises:

acquiring candidate reply information corresponding to different reply scenes and the logical relationships among the different pieces of candidate reply information;

and taking the candidate reply information corresponding to the different reply scenes as nodes, and constructing the reply relation graph according to the logical relationships.

4. The method according to claim 1, wherein the inputting the obtained plurality of first word vectors and the corresponding second word vectors into a reply fusion model to obtain target reply information for the query information comprises:

inputting the obtained plurality of first word vectors into an encoding network of the reply fusion model for encoding to obtain corresponding first encoding vectors;

processing the obtained plurality of first encoding vectors and the corresponding second word vectors through an attention mechanism to obtain context vectors of the plurality of pieces of pending reply information;

and inputting the obtained context vectors into a decoding network of the reply fusion model for decoding to obtain target reply information for the query information.

5. The method of claim 4, wherein the encoding network and the decoding network are both recurrent neural networks.

6. The method according to claim 4, wherein said processing, through an attention mechanism, the obtained plurality of first encoding vectors and the corresponding second word vectors to obtain context vectors of the plurality of pieces of pending reply information comprises:

performing similarity calculation on the elements contained in different first encoding vectors to obtain word weights of the corresponding pending reply information, wherein a word weight indicates the importance of the corresponding word relative to other pending reply information with which it has a logical relationship;

and performing a weighted operation on the obtained plurality of first encoding vectors using the obtained word weights to obtain the context vectors of the plurality of pieces of pending reply information.

7. The method of claim 1, wherein said obtaining pending reply information under a plurality of different reply scenes associated with the query information comprises:

performing semantic analysis on the query information, and screening pending reply information associated with the query information from a candidate reply information set according to a semantic analysis result;

and if the pending reply information comprises pending reply information in a plurality of different reply scenes, executing the step of obtaining the logical relationships among the plurality of pieces of pending reply information.

8. A question-answering information processing apparatus, the apparatus comprising:

an information acquisition module, configured to acquire query information;

an obtaining module, configured to obtain pending reply information under a plurality of different reply scenes associated with the query information, and logical relationships among the plurality of pieces of pending reply information;

a word vector acquisition module, configured to acquire respective first word vectors of the plurality of pieces of pending reply information and second word vectors of the associated words corresponding to the logical relationships;

a target reply information obtaining module, configured to input the obtained plurality of first word vectors and the corresponding second word vectors into a reply fusion model to obtain target reply information for the query information;

and an information output module, configured to output the target reply information.

9. The apparatus according to claim 8, wherein said target reply information obtaining module comprises:

an encoding processing unit, configured to input the obtained first word vectors into an encoding network of the reply fusion model for encoding to obtain corresponding first encoding vectors;

a context vector obtaining unit, configured to process the obtained plurality of first encoding vectors and the corresponding second word vectors through an attention mechanism to obtain context vectors of the plurality of pieces of pending reply information;

and a decoding processing unit, configured to input the obtained context vectors into a decoding network of the reply fusion model for decoding to obtain target reply information for the query information.

10. A computer device, the computer device comprising:

a communication interface;

a memory for storing a program for implementing the question-answer information processing method according to any one of claims 1 to 7;

a processor for loading and executing the program stored in the memory to realize the steps of the question-answering information processing method according to any one of claims 1 to 7.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010159784.8A CN111382232A (en) | 2020-03-09 | 2020-03-09 | Question and answer information processing method and device and computer equipment |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010159784.8A CN111382232A (en) | 2020-03-09 | 2020-03-09 | Question and answer information processing method and device and computer equipment |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN111382232A (en) | 2020-07-07 |

Family

ID=71221521

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202010159784.8A Pending CN111382232A (en) | Question and answer information processing method and device and computer equipment | 2020-03-09 | 2020-03-09 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN111382232A (en) |

Cited By (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN111949791A (en) * | 2020-07-28 | 2020-11-17 | 中国工商银行股份有限公司 | Text classification method, device and equipment |
| CN113035200A (en) * | 2021-03-03 | 2021-06-25 | 科大讯飞股份有限公司 | Voice recognition error correction method, device and equipment based on human-computer interaction scene |

Citations (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN103853842A (en) * | 2014-03-20 | 2014-06-11 | 百度在线网络技术(北京)有限公司 | Automatic question and answer method and system |
| CN107870964A (en) * | 2017-07-28 | 2018-04-03 | 北京中科汇联科技股份有限公司 | A kind of sentence sort method and system applied to answer emerging system |
| CN109829040A (en) * | 2018-12-21 | 2019-05-31 | 深圳市元征科技股份有限公司 | A kind of Intelligent dialogue method and device |
| US20190228098A1 (en) * | 2018-01-19 | 2019-07-25 | International Business Machines Corporation | Facilitating answering questions involving reasoning over quantitative information |
| CN110297897A (en) * | 2019-06-21 | 2019-10-01 | 科大讯飞(苏州)科技有限公司 | Question and answer processing method and Related product |
| CN110704591A (en) * | 2019-09-27 | 2020-01-17 | 联想(北京)有限公司 | Information processing method and computer equipment |

- 2020-03-09: CN application CN202010159784.8A, publication CN111382232A, status: active, Pending

Patent Citations (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN103853842A (en) * | 2014-03-20 | 2014-06-11 | 百度在线网络技术(北京)有限公司 | Automatic question and answer method and system |
| CN107870964A (en) * | 2017-07-28 | 2018-04-03 | 北京中科汇联科技股份有限公司 | A kind of sentence sort method and system applied to answer emerging system |
| US20190228098A1 (en) * | 2018-01-19 | 2019-07-25 | International Business Machines Corporation | Facilitating answering questions involving reasoning over quantitative information |
| CN109829040A (en) * | 2018-12-21 | 2019-05-31 | 深圳市元征科技股份有限公司 | A kind of Intelligent dialogue method and device |
| CN110297897A (en) * | 2019-06-21 | 2019-10-01 | 科大讯飞(苏州)科技有限公司 | Question and answer processing method and Related product |
| CN110704591A (en) * | 2019-09-27 | 2020-01-17 | 联想(北京)有限公司 | Information processing method and computer equipment |

Non-Patent Citations (2)

| Title |
|---|
| 栾克鑫;杜新凯;孙承杰;刘秉权;王晓龙;: "基于注意力机制的句子排序方法", 中文信息学报 * |
| 田文洪;高印权;黄厚文;黎在万;张朝阳;: "基于多任务双向长短时记忆网络的隐式句间关系分析", 中文信息学报 * |

Cited By (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|