TSGBench is the inaugural benchmark designed for the Time Series Generation (TSG) task. We are excited to share that TSGBench has received the Best Research Paper Award Nomination at VLDB 2024 🏆

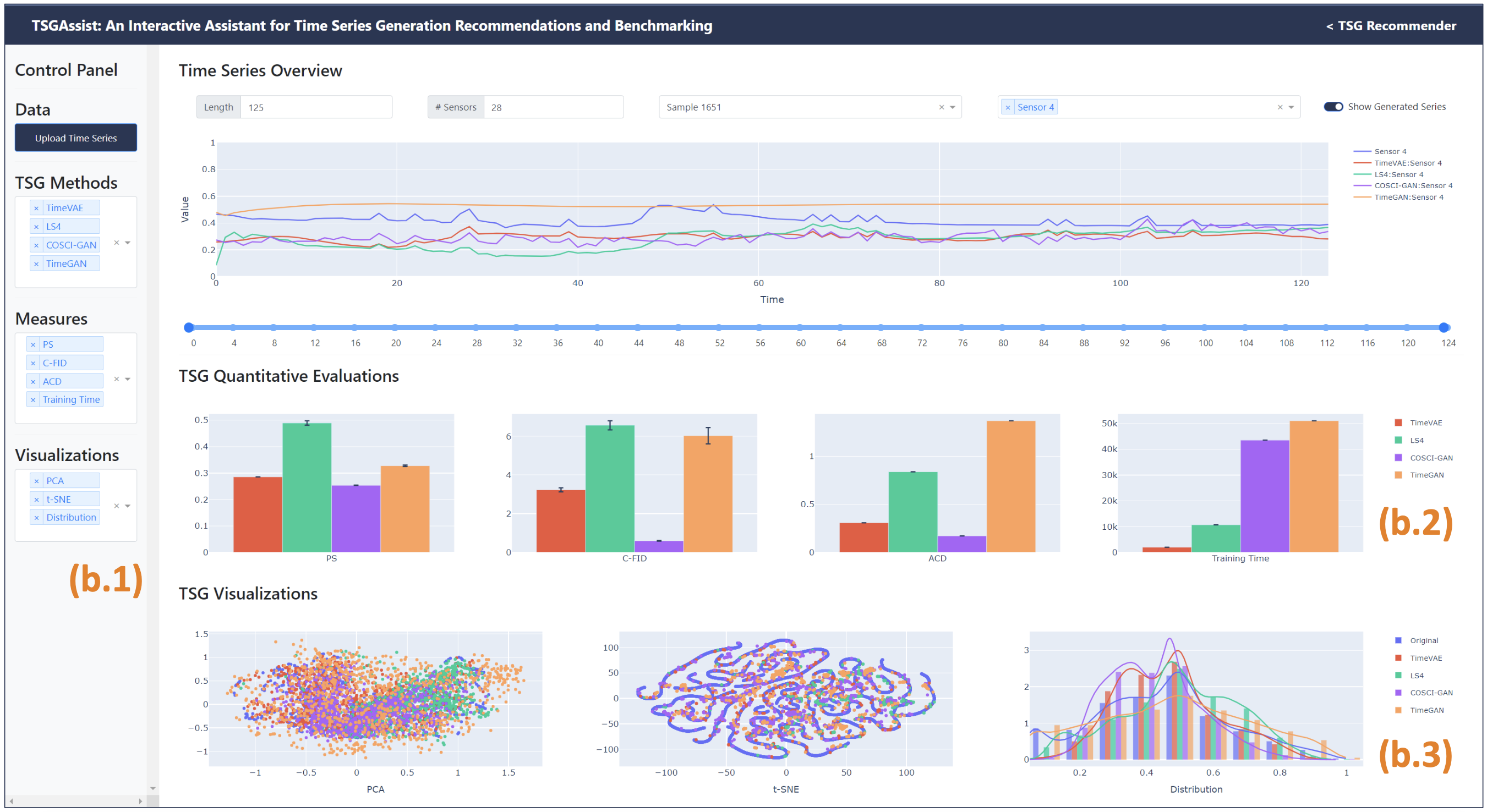

TSGAssist is an interactive assistant that integrates the strengths of TSGBench and utilizes Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) for TSG recommendations and benchmarking 🤖📊

We are actively exploring industrial collaborations in time series analytics. Please feel free to reach out (yihao_ang AT comp.nus.edu.sg) if interested 🤝✨

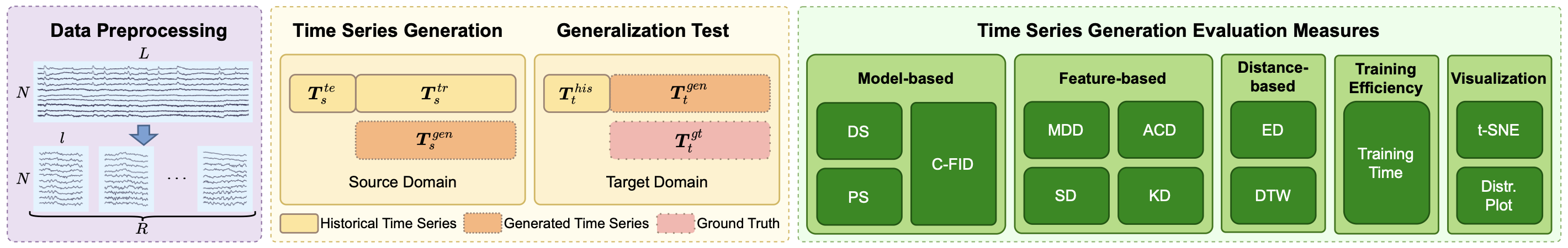

Time Series Generation (TSG) is crucial in a range of applications, including data augmentation, anomaly detection, and privacy preservation. Given an input time series, TSG aims to produce time series akin to the original, preserving temporal dependencies and dimensional correlations while ensuring the generated time series remains useful for various downstream tasks.

TSGBench surveys a diverse range of TSG methods, categorized by their backbone models and specialties. The table below provides an overview of these methods along with their references.

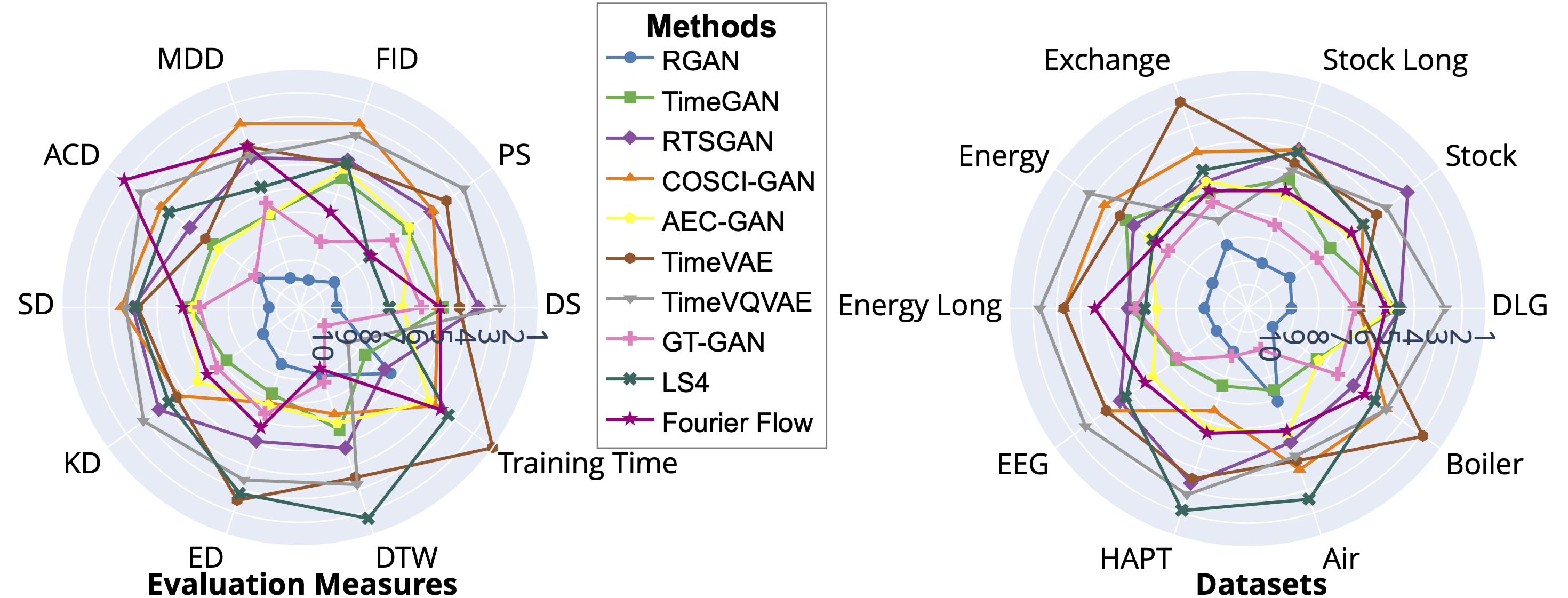

TSGBench selects ten real-world datasets from various domains, ensuring a wide coverage of scenarios for TSG evaluation. Here,

| Dataset | Domain | Link | |||

|---|---|---|---|---|---|

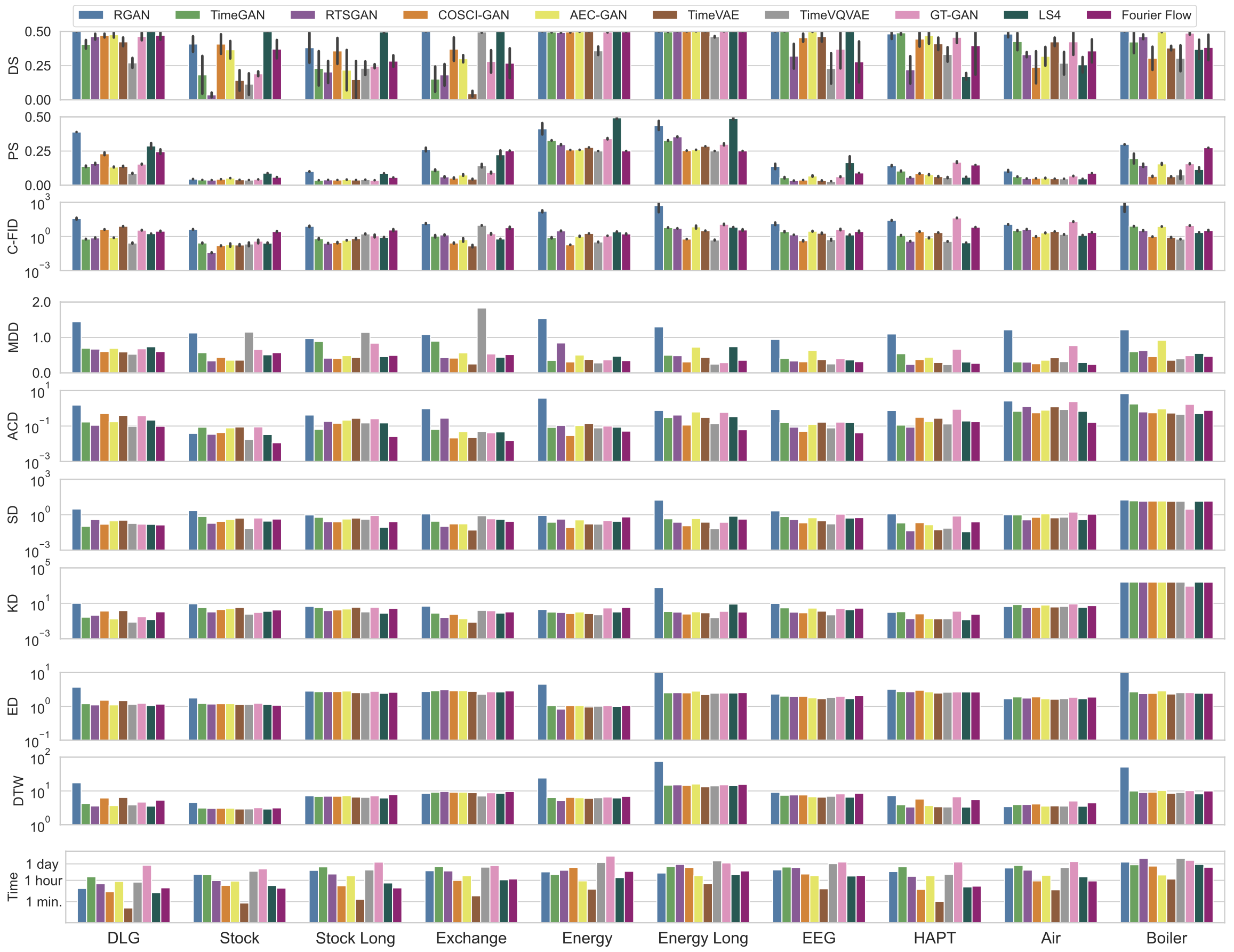

| DLG | 246 | 14 | 20 | Traffic | https://archive.ics.uci.edu/dataset/157/dodgers+loop+sensor |

| Stock | 3294 | 24 | 6 | Financial | https://finance.yahoo.com/quote/GOOG/history?p=GOOG |

| Stock Long | 3204 | 125 | 6 | Financial | https://finance.yahoo.com/quote/GOOG/history?p=GOOG |

| Exchange | 6715 | 125 | 8 | Financial | https://github.com/laiguokun/multivariate-time-series-data |

| Energy | 17739 | 24 | 28 | Appliances | https://archive.ics.uci.edu/dataset/374/appliances+energy+prediction |

| Energy Long | 17649 | 125 | 28 | Appliances | https://archive.ics.uci.edu/dataset/374/appliances+energy+prediction |

| EEG | 13366 | 128 | 14 | Medical | https://archive.ics.uci.edu/dataset/264/eeg+eye+state |

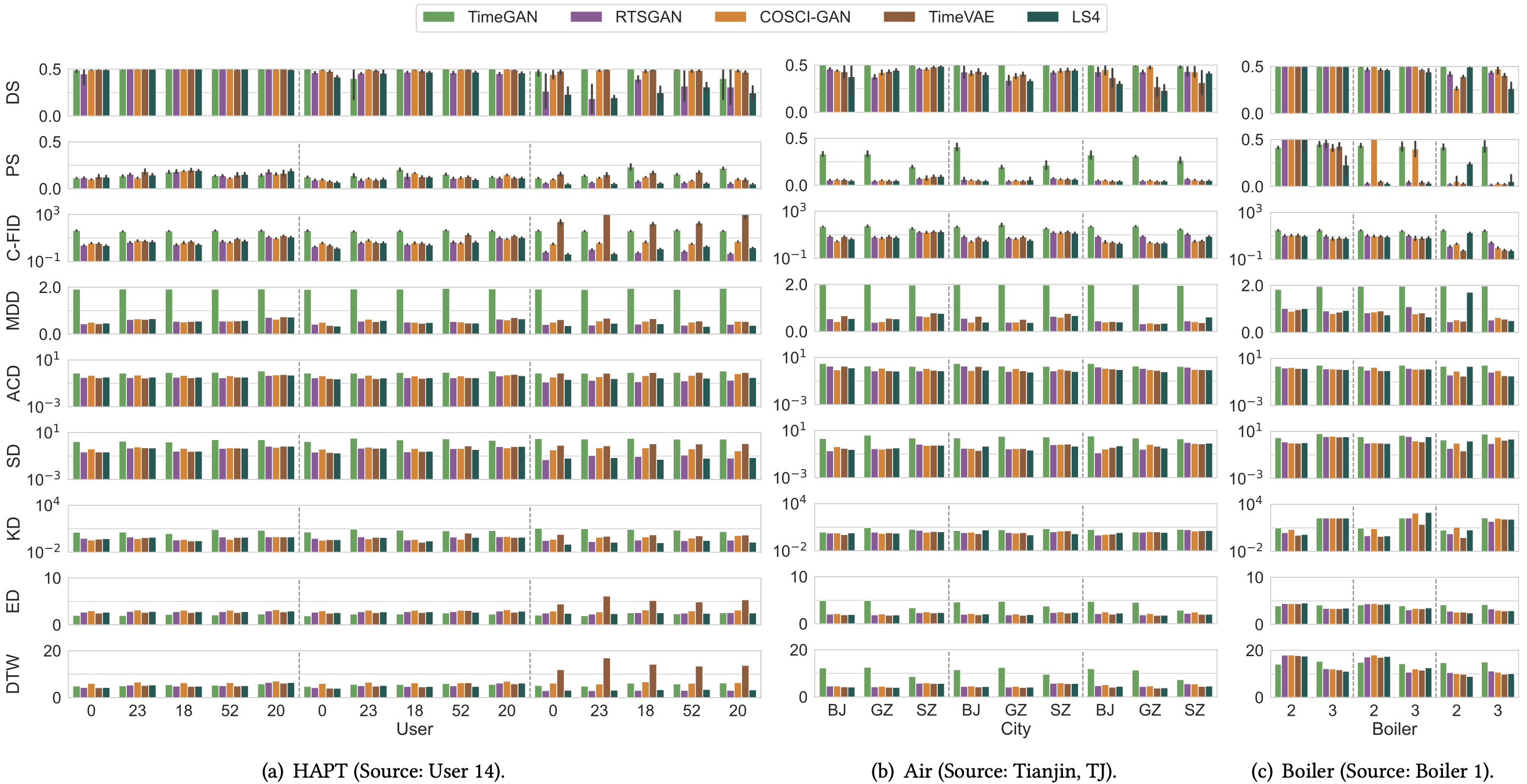

| HAPT | 1514 | 128 | 6 | Medical | https://archive.ics.uci.edu/dataset/341/smartphone+based+recognition+of+human+activities+and+postura+transitions |

| Air | 7731 | 168 | 6 | Sensor | https://www.microsoft.com/en-us/research/project/urban-air/ |

| Boiler | 80935 | 192 | 11 | Industrial | https://github.com/DMIRLAB-Group/SASA/tree/main/datasets/Boiler |
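Each dataset above is a collection of fixed-length multivariate samples, i.e., an array of shape (samples, length, dimensions). The following is a minimal sketch of how one raw multivariate series could be cut into such samples, assuming stride-1 sliding windows and per-dimension min-max scaling (a common TSG preprocessing choice; TSGBench's own preprocessing may differ). The helper name `make_windows` is hypothetical.

```python
import numpy as np


def make_windows(series: np.ndarray, length: int, stride: int = 1) -> np.ndarray:
    """Cut a (T, N) series into windows of shape (R, length, N).

    Hypothetical helper: sliding windows and per-dimension min-max
    scaling are assumptions, not necessarily TSGBench's exact steps.
    """
    if series.ndim != 2:
        raise ValueError("expected a 2-D array of shape (T, N)")
    T = series.shape[0]
    if length > T:
        raise ValueError("window length exceeds series length")

    # Min-max scale each dimension to [0, 1]; guard against constant columns.
    lo = series.min(axis=0)
    span = series.max(axis=0) - lo
    span = np.where(span == 0, 1.0, span)
    scaled = (series - lo) / span

    # Collect windows starting at 0, stride, 2 * stride, ...
    starts = range(0, T - length + 1, stride)
    return np.stack([scaled[s:s + length] for s in starts])


# Example: 100 time steps with 6 dimensions, windows of length 24.
raw = np.random.default_rng(0).normal(size=(100, 6))
windows = make_windows(raw, length=24)
print(windows.shape)  # (77, 24, 6)
```

With stride 1, a series of T steps yields T - length + 1 overlapping windows; a larger stride trades sample count for less overlap between samples.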

TSGBench considers the following evaluation measures, ranking analysis, and a novel generalization test by Domain Adaptation (DA).

- Model-based Measures

- Discriminitive Score (DS)

- Predictive Score (PS)

- Contextual-FID (C-FID)

- Feature-based Measures

- Marginal Distribution Difference (MDD)

- AutoCorrelation Difference (ACD)

- Skewness Difference (SD)

- Kurtosis Difference (KD)

- Distance-based Measures

- Euclidean Distance (ED)

- Dynamic Time Warping (DTW)

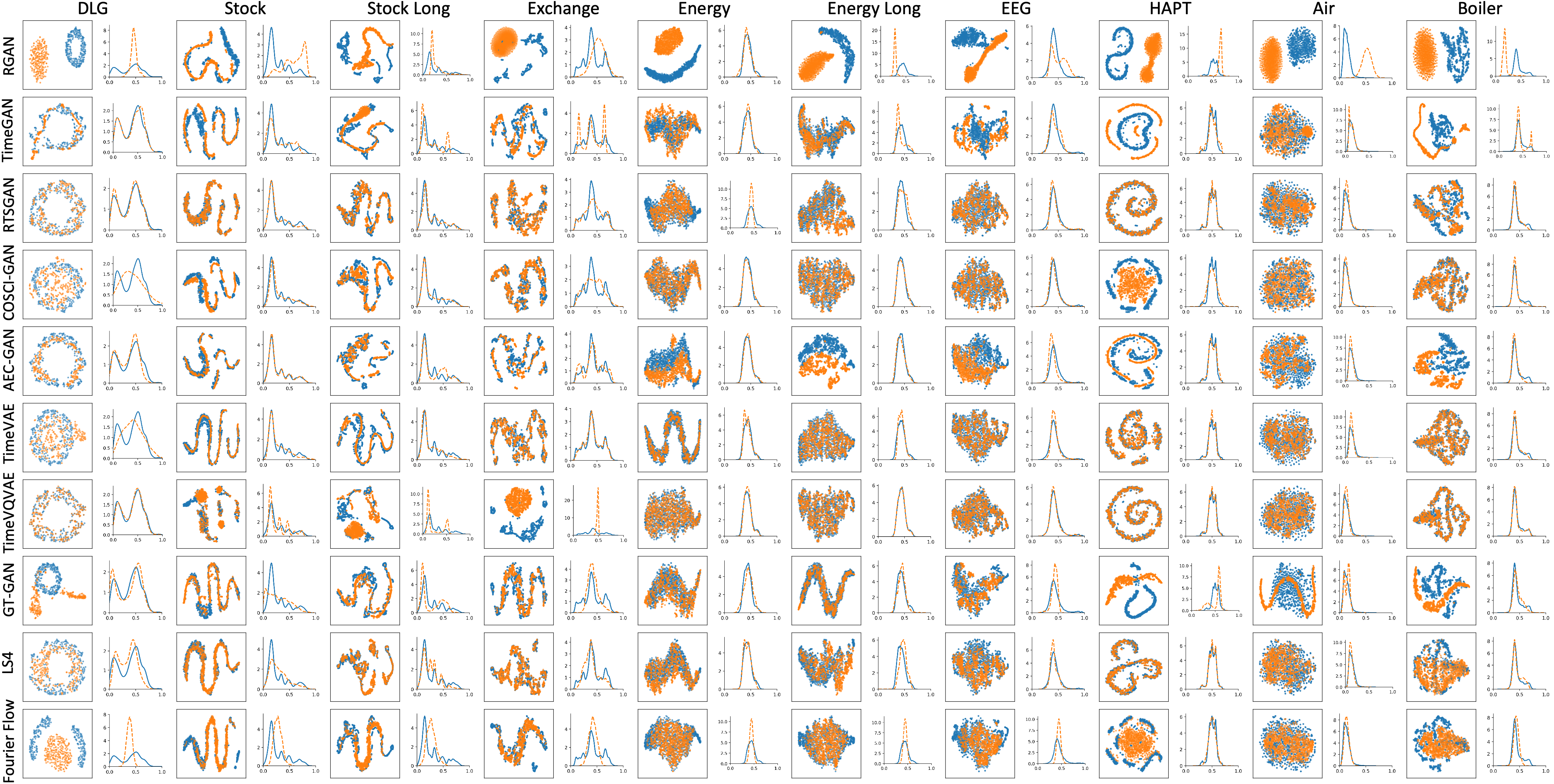

- Visualization

- t-SNE

- Distribution Plot

- Training Efficiency

- Training Time
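Several of the feature- and distance-based measures listed above compare simple statistics of original and generated samples. The sketch below gives simplified NumPy versions of SD, KD, ACD, ED, and DTW for arrays of shape (samples, length, dimensions). It assumes statistics are pooled per dimension and averaged, that ACD uses lags 1 to `max_lag`, and that ED and DTW average over index-aligned pairs of original and generated samples; TSGBench's exact definitions, pairing, and normalization may differ. All function names are hypothetical.

```python
import numpy as np


def skew_kurt(x):
    """Per-dimension skewness and excess kurtosis of (R, L, N) data, pooled over samples and time."""
    flat = x.reshape(-1, x.shape[-1])              # (R * L, N)
    std = flat.std(axis=0)
    std = np.where(std == 0, 1.0, std)             # avoid division by zero
    z = (flat - flat.mean(axis=0)) / std
    return (z ** 3).mean(axis=0), (z ** 4).mean(axis=0) - 3.0


def skewness_difference(ori, gen):
    """SD (simplified): mean absolute gap in per-dimension skewness."""
    return float(np.mean(np.abs(skew_kurt(ori)[0] - skew_kurt(gen)[0])))


def kurtosis_difference(ori, gen):
    """KD (simplified): mean absolute gap in per-dimension excess kurtosis."""
    return float(np.mean(np.abs(skew_kurt(ori)[1] - skew_kurt(gen)[1])))


def autocorr(x, max_lag):
    """Sample autocorrelation at lags 1..max_lag (max_lag < L), averaged over samples -> (max_lag, N)."""
    centered = x - x.mean(axis=1, keepdims=True)   # center each sample's series
    var = (centered ** 2).sum(axis=1)              # (R, N)
    var = np.where(var == 0, 1.0, var)
    out = []
    for lag in range(1, max_lag + 1):
        cov = (centered[:, lag:] * centered[:, :-lag]).sum(axis=1)  # (R, N)
        out.append((cov / var).mean(axis=0))
    return np.stack(out)


def autocorrelation_difference(ori, gen, max_lag=5):
    """ACD (simplified): mean absolute gap between autocorrelation profiles."""
    return float(np.mean(np.abs(autocorr(ori, max_lag) - autocorr(gen, max_lag))))


def euclidean_distance(ori, gen):
    """ED: mean Euclidean distance between index-aligned sample pairs."""
    diff = (ori - gen).reshape(len(ori), -1)
    return float(np.mean(np.linalg.norm(diff, axis=1)))


def dtw(a, b):
    """Classic O(L_a * L_b) DTW between two (L, N) series with Euclidean local cost."""
    la, lb = len(a), len(b)
    cost = np.full((la + 1, lb + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, la + 1):
        for j in range(1, lb + 1):
            d = np.linalg.norm(a[i - 1] - b[j - 1])
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[la, lb])


def dtw_distance(ori, gen):
    """DTW: mean DTW distance between index-aligned sample pairs."""
    return float(np.mean([dtw(a, b) for a, b in zip(ori, gen)]))


rng = np.random.default_rng(0)
ori = rng.normal(size=(32, 24, 6))
gen = ori + rng.normal(scale=0.1, size=ori.shape)
print(euclidean_distance(ori, ori))  # 0.0
print(euclidean_distance(ori, gen), dtw_distance(ori[:4], gen[:4]))
```

Lower values indicate closer agreement for all five functions. The pure-Python DTW loop is quadratic in sequence length, so it is only practical for short samples; MDD and the model-based measures are not covered by this sketch.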

TSGAssist is an interactive assistant harnessing LLMs and RAG for time series generation recommendations and benchmarking.

- It offers multi-round personalized recommendations through a conversational interface that bridges the cognitive gap.

- It enables the direct application and instant evaluation of users' data, providing practical insights into the effectiveness of various methods.

We recommend using conda to create a virtual environment for TSGBench.

```bash
conda create -n tsgbench python=3.7
conda activate tsgbench
conda install --file requirements.txt
```

The configuration file ./config/config.yaml contains various settings to run TSGBench. It is structured into the following sections:

- Preprocessing: Configures data preprocessing. Specify the input data path using `preprocessing.original_data_path` and the output path for processed data using `preprocessing.output_ori_path`.
- Generation: Contains the settings related to data generation.
- Evaluation: Includes the parameters required for evaluating the model's performance.
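The dotted names in this README (e.g., `preprocessing.original_data_path`) refer to keys nested under each section of `config.yaml`. Below is a minimal sketch of that nesting as a Python dictionary, together with a hypothetical `get_setting` helper that resolves a dotted name. Only the key names come from this README; all values, including the entries of `method_list`, are placeholders, and the real file may contain additional keys. A YAML parser such as PyYAML's `yaml.safe_load` would load the file into the same kind of nested dictionary.

```python
from functools import reduce

# Placeholder values; only the key names are taken from the TSGBench README.
config = {
    "preprocessing": {
        "original_data_path": "./data/my_series.csv",   # hypothetical input file
        "output_ori_path": "./data/processed/",
    },
    "generation": {
        "pretrain_path": None,                          # set only when using pretrained parameters
        "output_gen_path": "./data/generated/",
    },
    "evaluation": {
        "method_list": ["DS", "PS", "C-FID", "ED", "DTW"],  # hypothetical measure identifiers
        "result_path": "./results/",
    },
}


def get_setting(cfg: dict, dotted_key: str):
    """Resolve a dotted name like 'generation.output_gen_path' in a nested dict (raises KeyError if absent)."""
    return reduce(lambda node, key: node[key], dotted_key.split("."), cfg)


print(get_setting(config, "preprocessing.original_data_path"))  # ./data/my_series.csv
```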

1. Set Input Data: Update `preprocessing.original_data_path` in `config.yaml` to specify the location of your input data.
2. Run TSGBench: Execute the main script by running `python ./main.py`. By default, this will run the preprocessing, generation, and evaluation stages in sequence. You can skip or adjust these steps by modifying the relevant sections in the configuration file. In particular:
   1. Preprocessing: During preprocessing, data is processed and saved to the path specified by `preprocessing.output_ori_path` in the configuration file.
   2. Generation: Place your designated model structure under the `./model` directory. In `./src/generation`, point the model entry to your model. If necessary, provide pretrained parameters by specifying them under `generation.pretrain_path`. Generated data will be saved at `generation.output_gen_path`.
   3. Evaluation: Select specific evaluation measures by updating `evaluation.method_list` in the configuration file. The evaluation results will be saved to the path specified in `evaluation.result_path`.

Please consider citing our work if you use TSGBench (and/or TSGAssist) in your research:

```bibtex
# TSGBench
@article{ang2023tsgbench,
  title   = {TSGBench: Time Series Generation Benchmark},
  author  = {Ang, Yihao and Huang, Qiang and Bao, Yifan and Tung, Anthony KH and Huang, Zhiyong},
  journal = {Proc. {VLDB} Endow.},
  volume  = {17},
  number  = {3},
  pages   = {305--318},
  year    = {2023}
}

# TSGAssist
@article{ang2024tsgassist,
  title   = {TSGAssist: An Interactive Assistant Harnessing LLMs and RAG for Time Series Generation Recommendations and Benchmarking},
  author  = {Ang, Yihao and Bao, Yifan and Huang, Qiang and Tung, Anthony KH and Huang, Zhiyong},
  journal = {Proc. {VLDB} Endow.},
  volume  = {17},
  number  = {12},
  pages   = {4309--4312},
  year    = {2024}
}
```