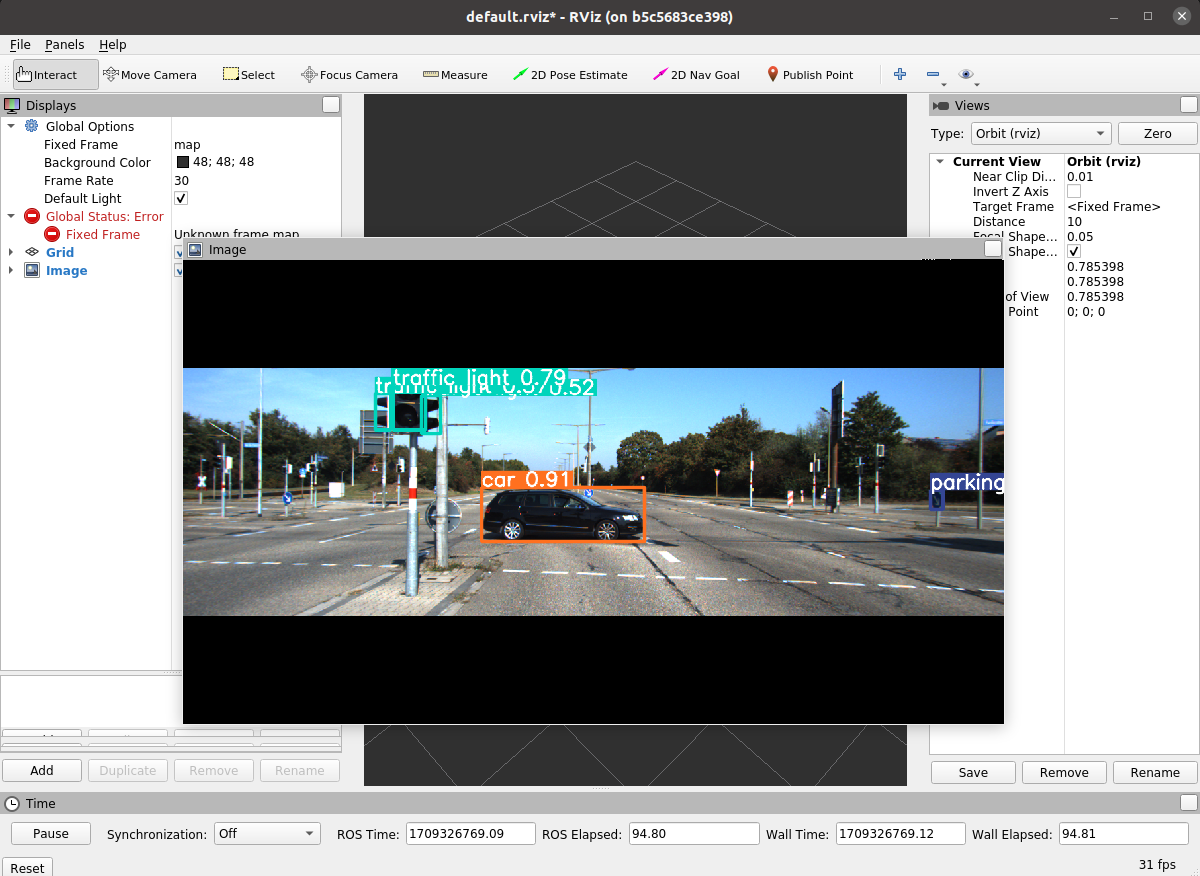

Add ROS package for YOLOv9

$ cd (your_ros_ws)/src

$ git clone https://github.com/SanghyunPark01/yolov9_ros.git

$ cd ..

$ catkin_make

- modify `ros/config/config.yaml`
- modify variable `yolo_path` in `ros/scripts/detect_ros.py` (top of code)
- modify config file path in `ros/launch/detect.launch`

$ chmod +x (your_ros_ws)/src/yolov9_ros/ros/scripts/detect_ros.py

$ roslaunch yolov9_ros detect.launch

Publish:

- `sensor_msgs/PointCloud`
  - `header->stamp`: same as image time
  - `channels->name`
    - size
    - object_1's name
    - object_2's name
    - ...
  - `channels->values`
    - number of objects
    - object_1's [conf,x1,y1,x2,y2]
    - object_2's [conf,x1,y1,x2,y2]
    - ...
- `sensor_msgs/Image` (Optional) -> modify `config.yaml`
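The message layout above can be decoded on the subscriber side roughly as follows. This is a minimal sketch, not code from the package: it assumes the first channel is named `size` and carries the object count, and that each following channel holds one object's name and its `[conf,x1,y1,x2,y2]` values. The function name `decode_detections` is hypothetical, and the example message is a plain Python stand-in so the snippet runs without ROS installed.

```python
from types import SimpleNamespace


def decode_detections(msg):
    """Decode a detection PointCloud-like message into a list of dicts.

    Assumed layout (one channel per entry, an interpretation of the README):
      channels[0]: name "size", values [number_of_objects]
      channels[i]: name = object i's class name, values [conf, x1, y1, x2, y2]
    """
    if not msg.channels or not msg.channels[0].values:
        return []
    count = int(msg.channels[0].values[0])
    detections = []
    # Skip the leading "size" channel and read at most `count` objects.
    for channel in msg.channels[1:1 + count]:
        conf, x1, y1, x2, y2 = channel.values[:5]
        detections.append({
            "name": channel.name,
            "conf": float(conf),
            "box": (float(x1), float(y1), float(x2), float(y2)),
        })
    return detections


# Stand-in message that mimics the fields of sensor_msgs/PointCloud.
example_msg = SimpleNamespace(
    header=SimpleNamespace(stamp=0.0),
    channels=[
        SimpleNamespace(name="size", values=[2.0]),
        SimpleNamespace(name="person", values=[0.91, 10.0, 20.0, 110.0, 220.0]),
        SimpleNamespace(name="dog", values=[0.78, 50.0, 60.0, 150.0, 160.0]),
    ],
)

detections = decode_detections(example_msg)
```

In a real node the same function could be passed the message received by a subscriber callback, since it only reads the `channels`, `name`, and `values` attributes.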

Implementation of paper - YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information

MS COCO

| Model | Test Size | AP<sup>val</sup> | AP<sub>50</sub><sup>val</sup> | AP<sub>75</sub><sup>val</sup> | Param. | FLOPs |
|---|---|---|---|---|---|---|
| YOLOv9-S | 640 | 46.8% | 63.4% | 50.7% | 7.1M | 26.4G |
| YOLOv9-M | 640 | 51.4% | 68.1% | 56.1% | 20.0M | 76.3G |
| YOLOv9-C | 640 | 53.0% | 70.2% | 57.8% | 25.3M | 102.1G |
| YOLOv9-E | 640 | 55.6% | 72.8% | 60.6% | 57.3M | 189.0G |


Custom training: WongKinYiu#30 (comment)

ONNX export: WongKinYiu#2 (comment) WongKinYiu#40 (comment) WongKinYiu#130 (comment)

TensorRT inference: WongKinYiu#34 (comment) WongKinYiu#79 (comment)

C# ONNX inference: WongKinYiu#95 (comment)

C# OpenVINO inference: WongKinYiu#95 (comment)

OpenCV: WongKinYiu#113 (comment)

Hugging Face demo: WongKinYiu#45 (comment)

CoLab demo: WongKinYiu#18

ONNXSlim export: WongKinYiu#37

YOLOv9 ByteTrack: WongKinYiu#78 (comment)

YOLOv9 DeepSORT: WongKinYiu#98 (comment)

YOLOv9 counting: WongKinYiu#84 (comment)

YOLOv9 face detection: WongKinYiu#121 (comment)

AnyLabeling tool: WongKinYiu#48 (comment)

AX650N deploy: WongKinYiu#96 (comment)

Conda environment: WongKinYiu#93

AutoDL docker environment: WongKinYiu#112 (comment)

Docker environment (recommended)


# create the docker container; you can increase the shared memory size if you have more memory available.

nvidia-docker run --name yolov9 -it -v your_coco_path/:/coco/ -v your_code_path/:/yolov9 --shm-size=64g nvcr.io/nvidia/pytorch:21.11-py3

# apt install required packages

apt update

apt install -y zip htop screen libgl1-mesa-glx

# pip install required packages

pip install seaborn thop

# go to code folder

cd /yolov9

Evaluation

`yolov9-c-converted.pt` `yolov9-e-converted.pt` `yolov9-c.pt` `yolov9-e.pt` `gelan-c.pt` `gelan-e.pt`

# evaluate converted yolov9 models

python val.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.7 --device 0 --weights './yolov9-c-converted.pt' --save-json --name yolov9_c_c_640_val

# evaluate yolov9 models

# python val_dual.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.7 --device 0 --weights './yolov9-c.pt' --save-json --name yolov9_c_640_val

# evaluate gelan models

# python val.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.7 --device 0 --weights './gelan-c.pt' --save-json --name gelan_c_640_val

You will get the results:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.530

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.702

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.578

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.362

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.585

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.693

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.392

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.652

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.702

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.541

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.760

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.844
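To compare runs, the summary lines above can be turned into a lookup table. The following is a small sketch that parses lines in the printed format shown here; the function name `parse_coco_summary` and the choice of dictionary key are illustrative assumptions, not part of this repository.

```python
import re

# Matches one summary line such as:
# Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.530
SUMMARY_LINE = re.compile(
    r"Average (Precision|Recall)\s+\((AP|AR)\)\s+@\[\s*IoU=([\d.:]+)\s*\|"
    r"\s*area=\s*(\w+)\s*\|\s*maxDets=\s*(\d+)\s*\]\s*=\s*(-?[\d.]+)"
)


def parse_coco_summary(text):
    """Return {(metric, iou, area, max_dets): value} for each summary line."""
    results = {}
    for match in SUMMARY_LINE.finditer(text):
        _, metric, iou, area, max_dets, value = match.groups()
        results[(metric, iou, area, int(max_dets))] = float(value)
    return results


sample = """
Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.530
Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.702
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.392
"""
summary = parse_coco_summary(sample)
```

For example, `summary[("AP", "0.50:0.95", "all", 100)]` holds the headline AP value that corresponds to the AP<sup>val</sup> column for YOLOv9-C in the table.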

Data preparation

bash scripts/get_coco.sh

- Download MS COCO dataset images (train, val, test) and labels. If you have previously used a different version of YOLO, we strongly recommend that you delete the `train2017.cache` and `val2017.cache` files and redownload the labels.
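Clearing the stale cache files can be scripted. The sketch below searches a dataset folder recursively because the exact location of the cache files is not stated here; the helper name `remove_label_caches` is hypothetical, and the dataset root you pass in is whatever path holds your COCO copy.

```python
from pathlib import Path


def remove_label_caches(dataset_root, names=("train2017.cache", "val2017.cache")):
    """Delete stale label cache files found anywhere under dataset_root.

    Returns the sorted list of paths that were removed.
    """
    removed = []
    for name in names:
        # Materialize the matches first so files are not deleted mid-traversal.
        for cache_file in list(Path(dataset_root).rglob(name)):
            if cache_file.is_file():
                cache_file.unlink()
                removed.append(cache_file)
    return sorted(removed)


# Example call (assumes the dataset is mounted at /coco, as in the Docker command):
# remove_label_caches("/coco")
```

The function only removes files whose names match exactly, so images and label text files are left untouched.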

Single GPU training

# train yolov9 models

python train_dual.py --workers 8 --device 0 --batch 16 --data data/coco.yaml --img 640 --cfg models/detect/yolov9-c.yaml --weights '' --name yolov9-c --hyp hyp.scratch-high.yaml --min-items 0 --epochs 500 --close-mosaic 15

# train gelan models

# python train.py --workers 8 --device 0 --batch 32 --data data/coco.yaml --img 640 --cfg models/detect/gelan-c.yaml --weights '' --name gelan-c --hyp hyp.scratch-high.yaml --min-items 0 --epochs 500 --close-mosaic 15

Multiple GPU training

# train yolov9 models

python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 train_dual.py --workers 8 --device 0,1,2,3,4,5,6,7 --sync-bn --batch 128 --data data/coco.yaml --img 640 --cfg models/detect/yolov9-c.yaml --weights '' --name yolov9-c --hyp hyp.scratch-high.yaml --min-items 0 --epochs 500 --close-mosaic 15

# train gelan models

# python -m torch.distributed.launch --nproc_per_node 4 --master_port 9527 train.py --workers 8 --device 0,1,2,3 --sync-bn --batch 128 --data data/coco.yaml --img 640 --cfg models/detect/gelan-c.yaml --weights '' --name gelan-c --hyp hyp.scratch-high.yaml --min-items 0 --epochs 500 --close-mosaic 15

Citation

@article{wang2024yolov9,

title={{YOLOv9}: Learning What You Want to Learn Using Programmable Gradient Information},

author={Wang, Chien-Yao and Liao, Hong-Yuan Mark},

journal={arXiv preprint arXiv:2402.13616},

year={2024}

}

@article{chang2023yolor,

title={{YOLOR}-Based Multi-Task Learning},

author={Chang, Hung-Shuo and Wang, Chien-Yao and Wang, Richard Robert and Chou, Gene and Liao, Hong-Yuan Mark},

journal={arXiv preprint arXiv:2309.16921},

year={2023}

}

Parts of the code for YOLOR-Based Multi-Task Learning are released in this repository.


- https://github.com/AlexeyAB/darknet

- https://github.com/WongKinYiu/yolor

- https://github.com/WongKinYiu/yolov7

- https://github.com/VDIGPKU/DynamicDet

- https://github.com/DingXiaoH/RepVGG

- https://github.com/ultralytics/yolov5

- https://github.com/meituan/YOLOv6