This is the official implementation of our paper:

[CVPR2024] DiffInDScene: Diffusion-based High-Quality 3D Indoor Scene Generation

Xiaoliang Ju*, Zhaoyang Huang*, Yijin Li, Guofeng Zhang, Yu Qiao, Hongsheng Li

[paper][sup][arXiv][project page]

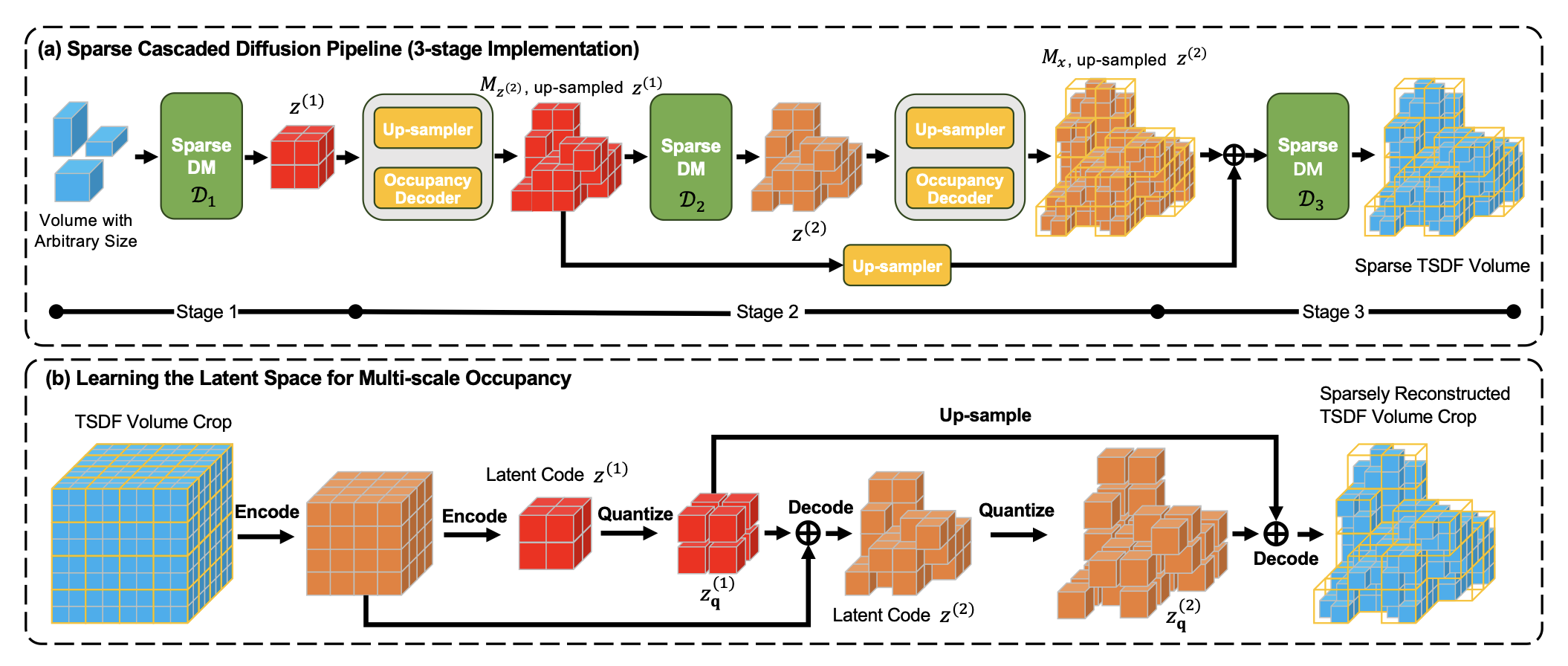

DiffInDScene generates large indoor scenes in a coarse-to-fine fashion. It consists of a multi-scale PatchVQGAN for occupancy encoding and a cascaded sparse diffusion model.

This repo provides, or will provide:

- code for data processing

- code for inference

- code for training

- extension to other datasets

We mainly use 3D-FRONT as our dataset.

The data processing code builds on the BlenderProc-3DFront and SDFGen repositories.

The pipeline consists of the following steps:

- Extract resources from original dataset and join them to a scene.

- Use Blender to remesh the scene into a watertight mesh.

- Generate SDF of the scene.

- Compress *.sdf to *.npz.
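The last step of the list above can be sketched in a few lines of Python. This is a minimal illustration, not the repo's `npz_tsdf.py`: it assumes the plain-text layout that SDFGen documents (grid dimensions, origin, spacing, then distance values with `i` varying fastest), and the function names, the optional truncation distance `trunc`, and the array keys `tsdf`, `origin`, and `voxel_size` are all hypothetical.

```python
import numpy as np


def load_sdf(path):
    """Parse an SDFGen-style text file (assumed layout, see lead-in)."""
    with open(path) as f:
        tokens = f.read().split()
    ni, nj, nk = (int(t) for t in tokens[:3])               # grid dimensions
    origin = np.array([float(t) for t in tokens[3:6]], dtype=np.float32)
    dx = float(tokens[6])                                    # voxel spacing
    values = np.array([float(t) for t in tokens[7:]], dtype=np.float32)
    if values.size != ni * nj * nk:
        raise ValueError("value count does not match grid dimensions")
    # Values are assumed to be stored with i fastest, then j, then k,
    # which corresponds to Fortran order for an (ni, nj, nk) array.
    grid = values.reshape((ni, nj, nk), order="F")
    return grid, origin, dx


def sdf_to_npz(sdf_path, npz_path, trunc=None):
    """Optionally truncate the distances, then save a compressed .npz."""
    grid, origin, dx = load_sdf(sdf_path)
    if trunc is not None:
        # Hypothetical truncation, in the same units as the stored distances.
        grid = np.clip(grid, -trunc, trunc)
    np.savez_compressed(npz_path, tsdf=grid, origin=origin, voxel_size=dx)
```

A call such as `sdf_to_npz("scene.sdf", "scene.npz", trunc=0.1)` would then produce a file readable with `np.load`. The file names and the truncation value here are placeholders.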

Example scripts:

# generate watertight meshes

blenderproc run examples/datasets/front_3d_with_improved_mat/process_3dfront.py ${PATH-TO-3D-FUTURE-model} ${PATH-TO-3D-FRONT-texture} ${MESH_OUT_FOLDER}

# generate SDF for every mesh

sh examples/datasets/front_3d_with_improved_mat/sdf_gen.sh ${MESH_OUT_FOLDER} ${PATH-TO-SDFGen}

# compress *.sdf to *.npz

python examples/datasets/front_3d_with_improved_mat/npz_tsdf.py ${MESH_OUT_FOLDER} ${NPZ_OUT_DIR}

Our sparse diffusion is implemented on top of TorchSparse. Because TorchSparse is still under rapid development, we provide the commit hash of the version we used: 1a10fda15098f3bf4fa2d01f8bee53e85762abcf.
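One way to pin TorchSparse to that commit is through pip's `git+<url>@<commit>` requirement syntax. The sketch below assumes the upstream repository is `https://github.com/mit-han-lab/torchsparse.git`; the helper names are hypothetical, and the install is only executed when `dry_run=False`.

```python
import subprocess
import sys

# Assumed upstream location of TorchSparse; adjust if you use a fork.
TORCHSPARSE_REPO = "https://github.com/mit-han-lab/torchsparse.git"
# Commit hash quoted in this README.
TORCHSPARSE_COMMIT = "1a10fda15098f3bf4fa2d01f8bee53e85762abcf"


def pinned_requirement(repo=TORCHSPARSE_REPO, commit=TORCHSPARSE_COMMIT):
    """Build a pip VCS requirement that points at one exact commit."""
    return f"git+{repo}@{commit}"


def install_pinned(dry_run=True):
    """Return the pip command; run it only when dry_run is False."""
    cmd = [sys.executable, "-m", "pip", "install", pinned_requirement()]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    print(" ".join(install_pinned(dry_run=True)))
```

Building TorchSparse from source may need extra system packages, so its own installation notes are worth checking before running the command.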

Our framework builds mainly on the VQGAN, VQ-VAE-2, and Diffusers codebases. We merged only the necessary parts into our repo to avoid external code dependencies.

We use DreamSpace to texture the generated meshes. You can also substitute it with other similar texturing tools.

We provide our conda environment configuration in the file dev_env.yml.

Every part of our model corresponds to an individual configuration folder located in utils/config/samples/, each with an instruction file named readme.md.
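To see which configuration folders are available before launching a run, a small helper like the following could be used. It is only a sketch: the function name is hypothetical, and it assumes nothing beyond the layout described above (one sub-folder per model part, each with a readme.md).

```python
from pathlib import Path


def list_sample_configs(root="utils/config/samples"):
    """Map each config folder name to whether it contains a readme.md."""
    root = Path(root)
    found = {}
    for folder in sorted(p for p in root.iterdir() if p.is_dir()):
        found[folder.name] = (folder / "readme.md").is_file()
    return found


if __name__ == "__main__":
    # Run from the repository root so the relative path resolves.
    for name, has_readme in list_sample_configs().items():
        note = "readme.md found" if has_readme else "no readme.md"
        print(f"{name}: {note}")
```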

Training script:

python main/train.py utils/config/samples/tsdf_gumbel_ms_vqgan

Testing script:

python main/test.py utils/config/samples/tsdf_gumbel_ms_vqgan

The latents will be saved to your designated output path.

python main/train.py utils/config/samples/sketch_VAE

The cascaded diffusion consists of three levels, as described in our paper. Each level can be trained individually by setting the "level" variable in config/samples/cascaded_ldm/model/pyramid_occ_denoiser.yaml.

The training script is

python main/train.py utils/config/samples/cascaded_ldm

and the inference script is

python main/test.py utils/config/samples/cascaded_ldm
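Training the levels one after another means editing the "level" variable between runs. The sketch below shows one possible way to automate that. It assumes the YAML file contains a single line of the form `level: <value>`, and the level values passed in (for example `[0, 1, 2]`) are hypothetical: check the config's readme.md for the values the code expects. The function names are illustrative, and the path to the YAML file is left as a parameter.

```python
import re
import subprocess

# Matches a line such as "  level: 0  # comment" (assumed format).
_LEVEL_LINE = re.compile(r"^([ \t]*level[ \t]*:[ \t]*)(\S+)(.*)$", re.MULTILINE)


def set_level(yaml_text, level):
    """Return yaml_text with the first 'level:' entry set to `level`."""
    new_text, count = _LEVEL_LINE.subn(
        lambda m: f"{m.group(1)}{level}{m.group(3)}", yaml_text, count=1
    )
    if count == 0:
        raise KeyError("no 'level:' entry found in the config text")
    return new_text


def train_levels(yaml_path, levels, run=False,
                 config_dir="utils/config/samples/cascaded_ldm"):
    """Plan (and optionally run) one training job per level."""
    planned = []
    for level in levels:
        cmd = ["python", "main/train.py", config_dir]
        planned.append((level, cmd))
        if run:
            # Rewrite the config in place, then launch training for this level.
            with open(yaml_path) as f:
                text = f.read()
            with open(yaml_path, "w") as f:
                f.write(set_level(text, level))
            subprocess.run(cmd, check=True)
    return planned
```

With `run=False` the function only returns the planned commands, which makes it easy to inspect what would be launched before touching the config file.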

@inproceedings{ju2024diffindscene,

title={DiffInDScene: Diffusion-based High-Quality 3D Indoor Scene Generation},

author={Ju, Xiaoliang and Huang, Zhaoyang and Li, Yijin and Zhang, Guofeng and Qiao, Yu and Li, Hongsheng},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={4526--4535},

year={2024}

}