Overview - Document Visual Question Answering

The "Document Visual Question Answering" (DocVQA) challenge, focuses on a specific type of Visual Question Answering task, where visually understanding the information on a document image is necessary in order to provide an answer. This goes over and above passing a document image through OCR, and involves understanding all types of information conveyed by a document. Textual content (handwritten or typewritten), non-textual elements (marks, tick boxes, separators, diagrams), layout (page structure, forms, tables), and style (font, colours, highlighting), to mention just a few, are pieces of information that can be potentially necessary for responding to the question at hand.

The DocVQA challenge is a continuous effort linked to various events. The challenge was originally organised in the context of the CVPR 2020 Workshop on Text and Documents in the Deep Learning Era. From this first event a paper with the results was presented in the Document Analysis Systems International Workshop that can be found here. The second edition will take place in the context of the Int. Conference on Document Analysis and Recognition (ICDAR) 2021.

|

|

|

|

|

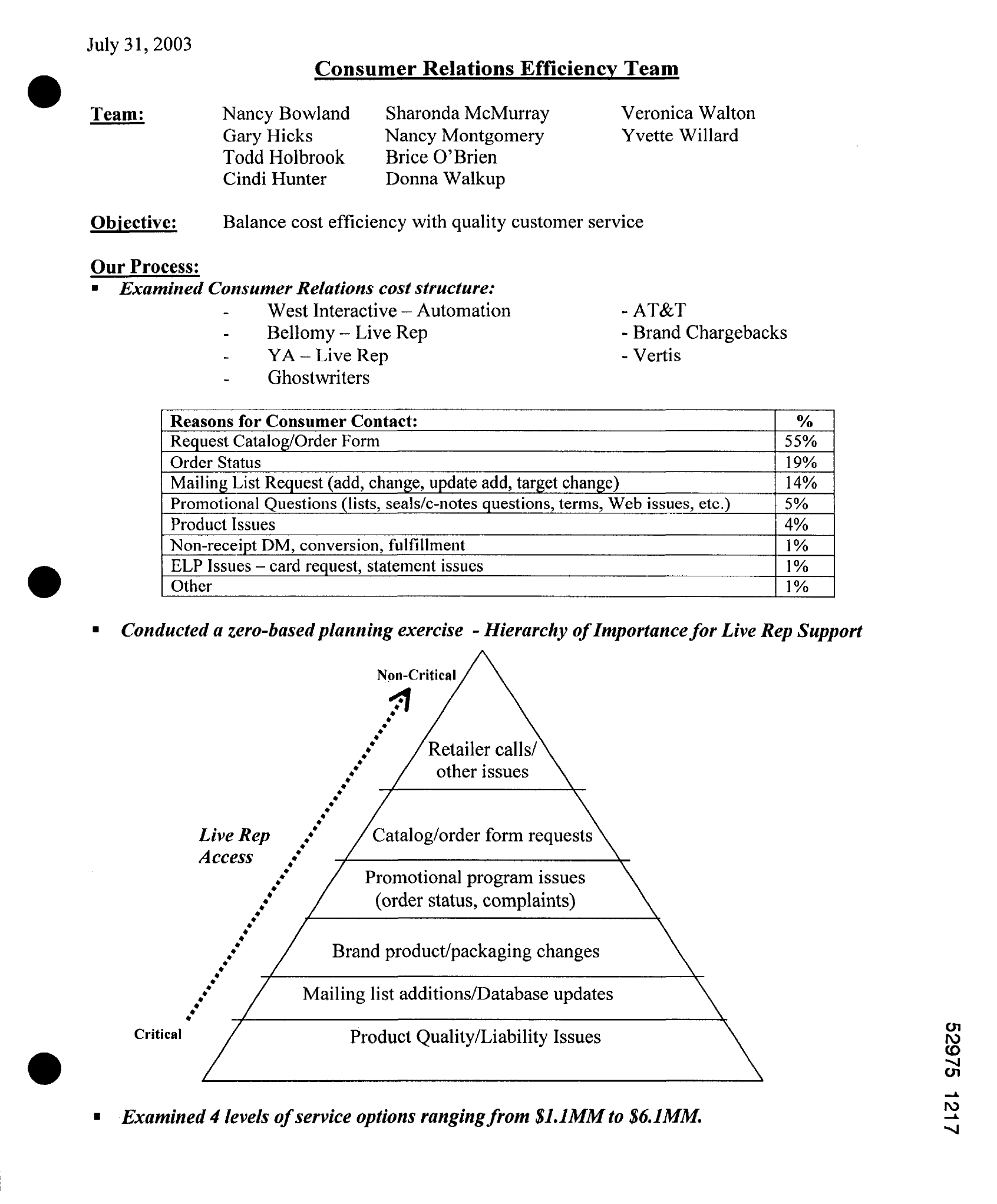

What is the issue at the top of the pyramid? Retailer calls/ other issues Which is the least critical issue for live rep support? Retailer calls/other issues Which is the most critical issue for live rep support? Product quality/liability issues |

|

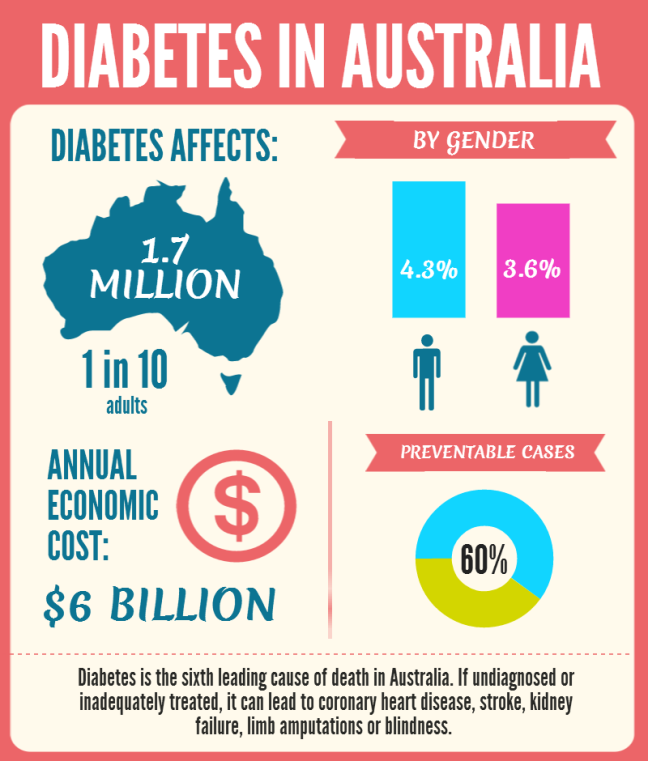

How many females are affected by diabetes 3.6% What percentage of cases can be prevented 60% What could lead to blindness or stroke diabetes |

Figure 1. Example documents from DocVQA Task 1 (left) and Task 3 (right) with its Questions and Answers.

Contemporary Document Analysis and Recognition (DAR) research tends to focus on generic information extraction tasks (character recognition, table extraction, word spotting), largely disconnected from the final purpose the extracted information is used for. The DocVQA challenge, seeks to inspire a “purpose-driven” point of view in Document Analysis and Recognition research, where the document content is extracted and used to respond to high-level tasks defined by the human consumers of this information. In this sense DocVQA provides a high-level task that should dynamically drive information extraction algorithms to conditionally interpret document images.

On the other hand, Visual Question Answering (VQA) as it is currently applied in real scene images is vulnerable to learning coincidental correlations in the data without forming a deeper understanding of the scene. In the case of DocVQA, more profound relations between the question aims (as expressed in natural language), and the document image content (that needs to be extracted and understood) are necessary to establish.

A large-scale dataset of document images reflecting real-world document variety, along with question and answer pairs will be released according to the schedule on the right.

The challenge comprises three different tasks that we briefly describe here. For more detailed information, refer to Tasks section.

- Task 1 - Single Page Document VQA: Is a typical VQA style task, where natural language questions are defined over single page documents, and an answer needs to be generated by interpreting the document image. No list of pre-defined responses will be given, hence the problem cannot be easily treated as an n-way classification task.

- Publications: DocVQA: A Dataset for VQA on Document Images

- Task 2 - Document Collection VQA: Is a retrieval-style task where given a question, the aim is to identify and retrieve all the documents in a large document collection that are relevant to answering this question as well as the answer.

- Publications: Document Collection Visual Question Answering

- Task 3 - Infographics VQA: This is a new task introduced as part of the 2021 challenge (ICDAR 2021 edition). The task is similar to the Task 1 where questions are posed on Infographic images instead of business and industry documents. More details of the task can be found under "Tasks" tab.

- Publication: InfographicsVQA

- Task 4 - Multipage Document VQA: Aiming to mimic real applications where systems must process documents with multiple pages. In this task the questions are posed over multipage industry scanned documents. In addition, following an explainability trend, we also evaluate the answer page prediction accuracy as a complementary metric, which helps to understand if methods are answering the questions from the input document images, or from prior learned bias.

If you are interested in Privacy Preserving and Federated Learning in Document Visual Question Answering, check out our new dataset and task in this platform!

Publications

-

Tito, R., Karatzas, D., Valveny, E. Hierarchical multimodal transformers for Multipage DocVQA on Pattern Recognition 2023 [arxiv:2212.05935]

-

Mathew, M., Bagal, V., Tito, R., Karatzas, D., Valveny, E., Jawahar, C. V. InfographicsVQA on WACV 2022 [arxiv:2104.12756]

-

Tito, R., Karatzas, D., Valveny, E. Document Collection Visual Question Answering on ICDAR 2021 [arxiv:2104.14336]

-

Tito, R., Mathew, M., Jawahar, C. V., Valveny, E., Karatzas, D. ICDAR 2021 Competition on Document Visual Question Answering on ICDAR 2021 [arxiv:2111.05547]

-

Mathew, M., Karatzas, D., Manmatha, R., Jawahar, C. V. DocVQA: A Dataset for VQA on Document Images on WACV 2021 [arxiv:2007.00398]

-

Mathew, M., Tito, R., Karatzas, D., Manmatha, R., & Jawahar, C. V. Document Visual Question Answering Challenge 2020 on DAS 2020 [arxiv:2008.08899]

Challenge News

- 09/01/2023

QA Types made public - 02/19/2023

Multipage DocVQA Dataset is public! - 03/31/2021

ICDAR competition deadline extended - 02/11/2021

Infographics Test Set Available - 08/01/2020

The challenge continues in ICDAR 2021

Important Dates

19 February 2023: MP-DocVQA Dataset released.

ICDAR 2021 edition

6 September 2021: Presentation at the Document VQA workshop at ICDAR 2021

30 April 2021: Results available online

10 April 2021: Deadline for Competition submissions

11 February 2021: Test set available

23 December 2020: Release of full training data for Infographics VQA.

10 November 2020: Release of training data subset for new task "Infographics VQA"

CVPR 2020 edition

16-18 June 2020: CVPR workshop

15 May 2020 (23:59 PST): Submission of results

20 April 2020: Test set available

19 March 2020 : Text Transcriptions for Train_v0.1 Documents available

16 March 2020: Training set v0.1 available