WO2024089852A1 - Control device, robot system, and control method - Google Patents

- Publication number

- WO2024089852A1 (application PCT/JP2022/040235)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- end effector

- height

- workpiece

- control device

- robot

Classifications

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J19/00—Accessories fitted to manipulators, e.g. for monitoring, for viewing; Safety devices combined with or specially adapted for use in connection with manipulators

- B25J19/02—Sensing devices

- B25J19/04—Viewing devices

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B19/00—Programme-control systems

- G05B19/02—Programme-control systems electric

- G05B19/18—Numerical control [NC], i.e. automatically operating machines, in particular machine tools, e.g. in a manufacturing environment, so as to execute positioning, movement or co-ordinated operations by means of programme data in numerical form

- G05B19/404—Numerical control [NC], i.e. automatically operating machines, in particular machine tools, e.g. in a manufacturing environment, so as to execute positioning, movement or co-ordinated operations by means of programme data in numerical form characterised by control arrangements for compensation, e.g. for backlash, overshoot, tool offset, tool wear, temperature, machine construction errors, load, inertia

Definitions

- This specification discloses a control device, a robot system, and a control method.

- Conventionally, a robot system has been proposed that measures the position of a measurement piece, formed with high precision to the shape of a workpiece, from an image of the measurement piece held by a robot hand and positioned over a camera, and calculates the coordinate alignment between the robot body and the camera from the control position on the robot body side and the measurement piece position (see, for example, Patent Document 1).

- This robot system is said to be able to easily calibrate the coordinate alignment.

- In the robot system described above, for example, a workpiece may be picked up by an end effector attached to the robot and held at a specified height while it is imaged for detection.

- In such a robot system, there is a demand for further improvement in the accuracy of the workpiece height directly, or of the end effector height indirectly.

- This disclosure has been made in consideration of these issues, and its primary objective is to provide a control device, robot system, and control method that can further improve height accuracy.

- The control device of the present disclosure is a control device used for a robot equipped with an end effector that picks up a workpiece, and includes a control unit that executes a height detection process of causing the end effector to pick up a jig having reference portions provided at predetermined intervals, capturing an image of the jig, and detecting the height of the end effector based on the reference portions included in the captured image.

- This control device detects the height of the end effector using the spacing of the reference portions in a captured image of a jig on which the reference portions are provided at predetermined intervals. Compared to, for example, a device that attaches a height jig to the end effector and detects the height with a touchdown sensor, it is less affected by mounting accuracy and detection sensitivity, and can further improve height accuracy.

- FIG. 1 is a schematic explanatory diagram showing an example of a robot system 10.

- FIG. 2 is an explanatory diagram showing an example of an imaging unit 14.

- FIG. 3 is an explanatory diagram showing an example of a mounting table 17 and a calibration jig 18.

- FIG. 4 is a perspective view showing an example of an end effector 22.

- FIG. 5 is an explanatory diagram showing an example of correspondence information 43 stored in a storage unit 42.

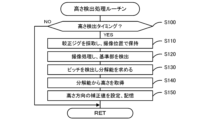

- FIG. 6 is a flowchart showing an example of a height detection processing routine.

- FIG. 7 is a flowchart showing an example of a mounting process routine.

- FIG. 8 is an explanatory diagram showing an example of another robot system 10B.

- FIG. 9 is an explanatory diagram of a robot system 100 having a touchdown sensor 119.

- FIG. 10 shows the measurement results of mounting accuracy when using the touchdown sensor 119.

- FIG. 11 shows the measurement results of mounting accuracy when using the calibration jig 18 of the present disclosure.

- FIG. 1 is a schematic explanatory diagram showing an example of a robot system 10 according to the present disclosure.

- FIG. 2 is a schematic explanatory diagram showing an example of an imaging unit 14.

- FIG. 3 is an explanatory diagram showing an example of a mounting table 17 and a calibration jig 18.

- FIG. 4 is a perspective view showing an example of an end effector 22.

- FIG. 5 is an explanatory diagram showing an example of correspondence information 43 stored in a memory unit 42.

- The left-right direction (X-axis), front-back direction (Y-axis), and up-down direction (Z-axis) are as shown in FIGS. 1 and 2.

- The robot system 10 is, for example, a device that mounts a workpiece 31 on a processing object 30, and includes a working device 11 and a supply device 25.

- The working device 11 includes a transport device 12, an imaging unit 14, a mounting table 17, a working unit 20, and a control device 40.

- The processing object 30 may include an insertion portion into which a protrusion 33 of the workpiece 31 is inserted; examples of the processing object 30 include a substrate and a three-dimensional base material (solid object).

- The workpiece 31 is an electronic component and has a main body 32 and protrusions 33.

- The protrusions 33 are terminal pins, and multiple protrusions 33 are formed on the main body 32.

- The workpiece 31 is fixed by inserting the protrusions 33 into the insertion portion of the processing object 30.

- Each protrusion 33 has, on its tip side, a tapered portion 34 with a tapered surface that narrows toward the tip, and a tip portion 35, which is the tip surface.

- The protrusions 33 will also be referred to as "terminals" or "pins" below.

- The transport device 12 is a unit that carries in the processing object 30, transports it, fixes it at the mounting position, and carries it out.

- The transport device 12 transports the processing object 30 using a pair of conveyor belts that are spaced apart.

- The imaging unit 14 is a device that captures an image of the underside of the workpiece 31 that has been picked up and is held by the working unit 20.

- The imaging unit 14 is disposed between the transport device 12 and the supply device 25.

- The imaging unit 14 includes an imaging section 15 and an illumination section 16.

- The imaging range of the imaging section 15 is above the imaging section 15, as shown in FIG. 2.

- The imaging section 15 has an imaging element, such as a CMOS image sensor or a CCD image sensor, that generates an electric charge by receiving light and outputs the generated charge.

- The imaging section 15 has a depth of field of at least the distance between the main body 32 and the protrusions 33.

- The illumination section 16 irradiates light onto the workpiece 31 held by the working unit 20.

- The imaging unit 14 captures an image of the workpiece 31 while the articulated arm robot 21 holding the workpiece 31 is stopped above the imaging section 15 or is moving over it, and outputs the captured image to the control device 40.

- The control device 40 can use this captured image to inspect whether the shape and location of the workpiece 31 are normal, and to detect the amount of misalignment, such as the positional or rotational deviation, of the workpiece 31 when it was picked up.

- The mounting table 17 is a table on which a member is placed, and is provided adjacent to the imaging unit 14.

- A calibration jig 18 is placed on the mounting table 17.

- The calibration jig 18 is a jig used to calibrate the height of the end effector 22 and/or the workpiece 31 before the articulated arm robot 21 picks up the workpiece 31 and the imaging unit 14 images it.

- The calibration jig 18 has reference portions 19 provided at predetermined intervals Lx, Ly on its underside.

- The reference portions 19 are marks from which the respective intervals can be detected.

- The reference portions 19 are circular marks, but are not limited to circles as long as their positions can be determined and the length between them can be measured.

- The reference portions 19 may also form a checkerboard pattern.

- In the robot system 10, the height of the calibration jig 18 at the time of imaging is determined from the intervals of the reference portions 19 in the captured image.

- The working unit 20 is a robot that places the workpiece 31 on the processing object 30.

- The working unit 20 includes an articulated arm robot 21, an end effector 22, and a camera 23.

- The articulated arm robot 21 is a work robot in which a first arm and a second arm, each rotating around a rotation axis, are connected.

- The end effector 22 is a member that performs the work of picking up the workpiece 31 and placing it on the processing object 30, and is rotatably connected to the tip of the articulated arm robot 21.

- For example, as shown in FIG. 4, the end effector 22 may include a mechanical chuck 22a that physically grasps and picks up the workpiece 31, or a suction nozzle 22b that picks up the workpiece 31 by suction under negative pressure.

- The end effector 22 may include only one of the mechanical chuck 22a and the suction nozzle 22b.

- The camera 23 captures images in the downward direction and is used, for example, to image the workpiece 31 placed on the supply device 25 and recognize its posture. The camera 23 is fixed to the tip of the articulated arm robot 21.

- The supply device 25 is a device that supplies the workpieces 31.

- The supply device 25 includes a supply placement section 26, a vibration section 27, and a part supply section 28.

- The supply placement section 26 is configured as a belt conveyor with a placement surface on which the workpieces 31 are placed in indefinite postures and moved in the front-back direction.

- The vibration section 27 applies vibration to the supply placement section 26 to change the posture of the workpieces 31 on the supply placement section 26.

- The part supply section 28 (not shown) is disposed at the rear upper part of the supply placement section 26 and is a device that stores the workpieces 31 and supplies them to the supply placement section 26.

- The part supply section 28 releases the workpieces 31 onto the supply placement section 26 either when the number of parts on the placement surface falls below a predetermined number or periodically.

- The working unit 20 uses the articulated arm robot 21, the end effector 22, and the camera 23 to recognize and pick up workpieces 31 on the supply placement section 26 that are in a pickable posture.

- The control device 40 is a device used in a robot equipped with an end effector 22 that picks up the workpiece 31.

- The control device 40 is configured as a microprocessor centered on a CPU 41, and controls the entire device including the transport device 12, the imaging unit 14, the working unit 20, and the supply device 25.

- The CPU 41 is a control unit, and has the functions of an image control unit in an image processing device that executes image processing for the imaging unit 14, and of a mounting control unit in a mounting device that controls the articulated arm robot 21.

- The control device 40 outputs control signals to the transport device 12, the imaging unit 14, the working unit 20, and the supply device 25, and receives signals from the transport device 12, the imaging unit 14, the working unit 20, and the supply device 25.

- The control device 40 is equipped with a storage unit 42, a display device 47, and an input device 48.

- The storage unit 42 is a large-capacity storage medium, such as an HDD or a flash memory, that stores various application programs and various data files. As shown in FIG. 5, the storage unit 42 stores correspondence information 43.

- The correspondence information 43 associates the resolution calculated from the pitch of the reference portions 19 with the height of the reference portions 19.

- The correspondence information 43 was determined experimentally as the relationship between the resolution (µm/pixel) and the height H of the workpiece 31 picked up by the end effector 22, using a unit resolution (µm/pixel/mm) that indicates the amount of change in resolution relative to a change in height of the end effector 22. It is defined such that the height H tends to increase as the resolution increases.
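The relationship held in the correspondence information 43 can be illustrated with a minimal sketch. It assumes, purely for illustration, that the experimentally determined relationship is linear around a reference point, so that the unit resolution acts as a constant slope. The class name, field names, and all numeric values are hypothetical and do not come from this disclosure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Correspondence:
    """Hypothetical linear stand-in for the correspondence information 43."""

    ref_resolution_um_per_px: float  # resolution measured at the reference height
    ref_height_mm: float             # reference height
    unit_resolution: float           # change in resolution per mm of height (µm/pixel/mm)

    def height_from_resolution(self, resolution_um_per_px: float) -> float:
        # Height rises with resolution, matching the tendency described above.
        delta = resolution_um_per_px - self.ref_resolution_um_per_px
        return self.ref_height_mm + delta / self.unit_resolution


# Illustrative values only.
info = Correspondence(ref_resolution_um_per_px=20.0, ref_height_mm=50.0, unit_resolution=0.25)
print(info.height_from_resolution(20.5))  # 52.0
```

A real table determined by experiment need not be linear; in that case the slope would be replaced by a lookup or a fitted curve.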

- The display device 47 is a display that shows information about the robot system 10 and various input screens.

- The input device 48 includes a keyboard and a mouse for input by the operator.

- The control device 40 executes height detection processing that causes the end effector 22 to pick up the calibration jig 18, captures an image of the calibration jig 18, and detects the height of the end effector 22 based on the reference portions 19 included in the captured image.

- The CPU 41 may also detect the height of the end effector 22 using a unit resolution, which is the amount of change in resolution relative to a change in height of the reference portions 19 included in the captured image.

- The CPU 41 also uses the detected height of the end effector 22 to cause the imaging section 15 to image the workpiece 31 picked up by the end effector 22, and detects the workpiece 31.

- The CPU 41 executes the height detection processing at a specified calibration timing.

- FIG. 6 is a flowchart showing an example of a height detection processing routine executed by the CPU 41. This routine is stored in the storage unit 42, and is executed at predetermined intervals after the robot system 10 is started. When this routine is started, the CPU 41 first determines whether it is height detection timing (S100).

- The CPU 41 determines that it is height detection timing when a calibration process is executed immediately after the robot system 10 is started, or when recalibration is performed after a predetermined time has elapsed since the start of operation. If it is not height detection timing, the CPU 41 ends this routine without further processing.

- On the other hand, when it is height detection timing, the CPU 41 executes the height detection processing of the end effector 22 described below. Specifically, the CPU 41 controls the articulated arm robot 21 to pick up the calibration jig 18 with the end effector 22 and hold the calibration jig 18 at the imaging position of the imaging unit 14 (S110). The end effector 22 attached to the working unit 20 at this time is the one that actually picks up the workpiece 31. Next, the CPU 41 images the calibration jig 18, detects the reference portions 19 (S120), counts the number of pixels between the reference portions 19 in the captured image, and divides the actual distance between the reference portions 19 by the number of pixels to obtain the resolution (S130). Next, the CPU 41 obtains the height H from the obtained resolution using the correspondence information 43 (S140).
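As a minimal sketch of the resolution calculation in S130, assume the reference portions 19 have already been detected as pixel-coordinate centers (the detection in S120 is not shown). The actual interval is divided by the pixel distance. The function names, the coordinates, the 10 mm interval, and the averaging over several mark pairs are illustrative assumptions rather than details given in this disclosure.

```python
import math


def resolution_um_per_px(center_a, center_b, actual_interval_mm):
    """Resolution (µm/pixel) from two detected reference-mark centers.

    center_a, center_b: (x, y) pixel coordinates of adjacent marks (assumed input).
    actual_interval_mm: known physical interval between the marks (e.g. Lx or Ly).
    """
    pixels = math.dist(center_a, center_b)  # pixel distance between the two marks
    if pixels == 0:
        raise ValueError("reference marks must be distinct")
    return actual_interval_mm * 1000.0 / pixels  # convert mm to µm, then per pixel


def mean_resolution(pairs, actual_interval_mm):
    """Average the resolution over several adjacent mark pairs (an added assumption)."""
    values = [resolution_um_per_px(a, b, actual_interval_mm) for a, b in pairs]
    return sum(values) / len(values)


# Hypothetical detection result: marks 10 mm apart appear 500 pixels apart.
pairs = [((100.0, 200.0), (600.0, 200.0)), ((100.0, 700.0), (600.0, 700.0))]
print(mean_resolution(pairs, actual_interval_mm=10.0))  # 20.0
```

The resulting value would then be passed to the correspondence information 43 in S140 to obtain the height H.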

- In this way, the control device 40 can determine the height of the end effector 22 or the height of the calibration jig 18 using the captured image of the calibration jig 18.

- Next, the CPU 41 uses the acquired height H to determine its difference from a reference height, sets a correction value in the height direction that eliminates this difference, stores the set correction value in the storage unit 42 (S150), and ends this routine.
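The correction step in S150 can be sketched as follows. The sign convention (correction = reference height minus detected height, added to a nominal Z target), the dictionary standing in for the storage unit 42, and the numeric values are all assumptions made for illustration.

```python
def height_correction_mm(detected_height_mm, reference_height_mm):
    """Correction that cancels the deviation of the detected height from the reference."""
    return reference_height_mm - detected_height_mm


def corrected_target_mm(nominal_target_mm, correction_mm):
    """Apply the stored correction to a nominal Z target."""
    return nominal_target_mm + correction_mm


storage = {}  # hypothetical stand-in for the storage unit 42
storage["z_correction_mm"] = height_correction_mm(detected_height_mm=52.0, reference_height_mm=50.0)
print(storage["z_correction_mm"])                             # -2.0
print(corrected_target_mm(50.0, storage["z_correction_mm"]))  # 48.0
```

With this convention, an end effector found 2 mm higher than the reference is commanded 2 mm lower in later pick-up, imaging, and placement moves.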

- The control device 40 can thus acquire the height of the end effector 22 in a non-contact manner by imaging the calibration jig 18 on which the reference portions 19 are provided, and set a correction value to correct that height.

- FIG. 7 is a flowchart showing an example of a mounting process routine executed by the CPU 41.

- The mounting process routine is stored in the storage unit 42, and is executed in response to a start command from the operator.

- When this routine is started, the CPU 41 first reads and acquires mounting condition information (S200).

- The mounting condition information includes information such as the shape and size of the processing object 30 and the workpiece 31, as well as the placement positions and the number of workpieces 31 to be placed.

- Next, the CPU 41 causes the transport device 12 to transport the processing object 30 to the mounting position and fix it there (S210), and controls the part supply section 28 to supply the workpiece 31 to the supply placement section 26 (S220).

- Next, the CPU 41 controls the articulated arm robot 21 to move the camera 23 above the supply placement section 26, causes the camera 23 to capture an image of the workpieces 31 on the supply placement section 26, and performs a process to recognize a workpiece 31 that can be picked up (S230).

- The CPU 41 recognizes a workpiece 31 on the supply placement section 26 with the protrusions 33 on the lower side and the main body 32 on the upper side as a pickable part. When there is no workpiece 31 that can be picked up, the CPU 41 drives the vibration section 27 to change the posture of the workpieces 31. When there is no workpiece 31 on the supply placement section 26, the CPU 41 drives the part supply section 28 to supply workpieces 31 onto the supply placement section 26.

- Next, the CPU 41 drives the articulated arm robot 21 and the end effector 22, causes the end effector 22 to pick up the workpiece 31 using the height correction value, and moves it above the imaging unit 14 (S240).

- Because the working unit 20 picks up the workpiece 31 with the height corrected, the workpiece 31 can be picked up more reliably.

- The CPU 41 also adjusts the imaging position and the control of the articulated arm robot 21 in advance so that the center position of the end effector 22 moves to the center of the image.

- Next, the CPU 41 moves the end effector 22 to the imaging position using the height correction value, stops it at that position, and images the workpiece 31 (S250).

- The control device 40 can thus image the workpiece 31 at the corrected position and obtain a more appropriate image.

- Next, the CPU 41 uses the captured image to check the tips 35 of the protrusions 33 (S260) and determines whether the workpiece 31 is usable (S270).

- The CPU 41 determines that the workpiece 31 is usable when the tips 35 of all protrusions 33 are detected within a predetermined tolerance range.

- This tolerance range may be set, based on the relationship between the positional deviation of the tips 35 and placement on the processing object 30, as a range within which the workpiece 31 can be properly placed on the processing object 30.
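The usability check in S260 to S270 can be sketched as below. It assumes the tips 35 have already been located in the captured image and converted to millimetres, and that the tolerance range is a radial distance from each nominal tip position. The function name, the nominal pin layout, and the tolerance value are hypothetical.

```python
import math


def workpiece_usable(detected_tips_mm, nominal_tips_mm, tolerance_mm):
    """Return True only if every tip lies within the tolerance of its nominal position."""
    if len(detected_tips_mm) != len(nominal_tips_mm):
        return False  # at least one tip was not detected
    return all(
        math.dist(detected, nominal) <= tolerance_mm
        for detected, nominal in zip(detected_tips_mm, nominal_tips_mm)
    )


# Hypothetical two-pin component with a 2.54 mm pitch and a 0.1 mm tolerance.
nominal = [(0.0, 0.0), (2.54, 0.0)]
print(workpiece_usable([(0.05, 0.0), (2.50, 0.02)], nominal, 0.1))  # True
print(workpiece_usable([(0.30, 0.0), (2.54, 0.00)], nominal, 0.1))  # False
```

Other tolerance shapes, such as separate X and Y limits, would fit the description equally well.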

- If the workpiece 31 is usable, the CPU 41 executes a process to place the workpiece 31 at a predetermined position on the processing object 30 (S280). At this time, the CPU 41 may correct the position based on the positions of the tips 35 before placing the workpiece on the processing object 30. Because the working unit 20 places the workpiece 31 with the height corrected, the workpiece 31 can be placed more reliably.

- If the workpiece 31 is not usable, the CPU 41 stops using the workpiece 31, discards it, and notifies the operator with a message (S290). The operator is notified, for example, by a message or an icon on the display device 47 indicating that the protrusions 33 of the workpiece 31 cannot be inserted into the insertion portion of the processing object 30.

- Then, the CPU 41 determines whether there is a next workpiece 31 to be placed (S300), and if there is, executes the process from S220 onwards. That is, the CPU 41 repeatedly supplies workpieces 31 to the supply placement section 26 as necessary, recognizes a workpiece 31 that can be picked up, picks up the workpiece 31, images it with the imaging unit 14, determines whether it is usable, and places it.

- When there is no next workpiece 31 to be placed, the CPU 41 determines whether there is a processing object 30 on which workpieces 31 should be placed next (S310), and if there is a next processing object 30, executes the process from S210 onwards.

- That is, the CPU 41 ejects the processing object 30 on which the workpieces 31 have been placed, carries in the next processing object 30, and executes the process from S220 onwards. On the other hand, when there is no next processing object 30 in S310, the CPU 41 ends this routine.
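The control flow of S210 to S310 can be summarized as a nested loop. The sketch below only records which step would run for which item; the function name, the data layout (a list of processing objects, each with its workpieces), and the `is_usable` callback are hypothetical, and no robot, camera, or feeder is actually driven.

```python
def mounting_routine(processing_objects, is_usable):
    """Trace the step order of the mounting process routine.

    processing_objects: list of (object_id, [workpiece_id, ...]) tuples (assumed layout).
    is_usable: callable standing in for the tip check of S260 to S270.
    """
    log = []
    for object_id, workpieces in processing_objects:  # repeats while S310 finds a next object
        log.append(("S210", object_id))               # carry in and fix the processing object
        for workpiece in workpieces:                  # repeats while S300 finds a next workpiece
            log.append(("S220-S250", workpiece))      # supply, recognize, pick up, image
            if is_usable(workpiece):
                log.append(("S280", workpiece))       # place on the processing object
            else:
                log.append(("S290", workpiece))       # discard and notify the operator
    return log


trace = mounting_routine([("board-A", ["w1", "w2"])], is_usable=lambda w: w != "w2")
for step in trace:
    print(step)
```

In this trace, workpiece `w1` reaches S280 and workpiece `w2` reaches S290, after which the loop ends because no further workpiece or processing object remains.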

- The control device 40 of this embodiment is an example of a control device of this disclosure.

- The CPU 41 is an example of a control unit.

- The end effector 22 is an example of an end effector.

- The calibration jig 18 is an example of a jig.

- The articulated arm robot 21 is an example of an arm robot.

- The robot system 10 is an example of a robot system. Note that this embodiment also clarifies an example of a control method of this disclosure by explaining the operation of the robot system 10.

- The control device 40 of this embodiment described above is used in a robot equipped with an end effector 22 that picks up a workpiece 31.

- This control device 40 has a CPU 41 as a control unit that executes a height detection process that causes the end effector 22 to pick up a calibration jig 18 having reference portions 19 provided at predetermined intervals, images the calibration jig 18, and detects the height of the end effector 22 based on the reference portions 19 included in the captured image.

- This control device 40 detects the height H of the end effector 22 using the intervals between the reference portions 19 in a captured image of the calibration jig 18 having reference portions 19 provided at predetermined intervals. Therefore, compared to, for example, a device that attaches a height jig to the end effector 22 and detects the height using a touchdown sensor, the influence of mounting accuracy and detection sensitivity is smaller, and height accuracy can be further improved.

- The CPU 41 as the control unit detects the height H of the end effector 22 using the unit resolution, which is the amount of change in resolution relative to a change in height of the reference portions 19 included in the captured image, so height accuracy can be further improved using the unit resolution. Furthermore, the CPU 41 uses the detected height H of the end effector 22 to have the imaging section 15 image the workpiece 31 picked up by the end effector 22, and detects the workpiece 31. Therefore, the accuracy of the imaging process for the workpiece 31 can be further improved using the result of the height detection process, in which the calibration jig 18 is imaged and the image is processed. Furthermore, the CPU 41 executes the height detection process at a predetermined calibration timing, and can therefore execute it as a calibration process of the device.

- The accuracy of the articulated arm robot 21 in the height direction can be further improved by using the captured image of the calibration jig 18, on which the reference portions 19 are provided at predetermined intervals.

- The robot system 10 also includes an articulated arm robot 21 equipped with an end effector 22 that picks up the workpiece 31, and a control device 40. Like the control device 40, this robot system 10 can further improve height accuracy.

- In the above embodiment, the control device 40 of the working device 11 has the functions of the control device of the present disclosure, but this is not particularly limited, and a control device having these functions may be provided in the working device 11 or in the articulated arm robot 21.

- In the above embodiment, the CPU 41 has the functions of the control unit, but this is not particularly limited, and a control unit may be provided separately from the CPU 41.

- In these cases as well, the height H of the end effector 22 is detected using a captured image of the calibration jig 18, so height accuracy can be further improved.

- In the above embodiment, the CPU 41 detects the height H of the end effector 22 using the unit resolution, but this is not particularly limited as long as the height is detected using a captured image of the calibration jig 18.

- For example, the correspondence information 43 may be the correspondence between the pitch of the reference portions 19 and the height H, and the CPU 41 may determine the height H directly from the pitch of the reference portions 19.
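A direct pitch-to-height correspondence of this kind could, for instance, be held as a small calibration table and interpolated. The sketch below assumes the pitch is measured in pixels, that the table is sorted by ascending pitch, and that linear interpolation between entries is acceptable. The function name and the table values are hypothetical.

```python
from bisect import bisect_left


def height_from_pitch(pitch_px, table):
    """Look up the height H for a measured pitch by linear interpolation.

    table: list of (pitch_px, height_mm) entries sorted by ascending pitch (assumed format).
    """
    pitches = [p for p, _ in table]
    if not pitches[0] <= pitch_px <= pitches[-1]:
        raise ValueError("pitch outside the calibrated range")
    i = bisect_left(pitches, pitch_px)
    if pitches[i] == pitch_px:
        return table[i][1]  # exact table entry
    (p0, h0), (p1, h1) = table[i - 1], table[i]
    return h0 + (h1 - h0) * (pitch_px - p0) / (p1 - p0)


# Illustrative table: a larger pixel pitch means the jig is closer to the camera (lower H).
table = [(480.0, 54.0), (500.0, 50.0), (520.0, 46.0)]
print(height_from_pitch(510.0, table))  # 48.0
```

Rejecting pitches outside the table avoids extrapolating beyond the range that was calibrated.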

- In the above embodiment, a correction value in the height direction of the end effector 22 is calculated from the captured image of the calibration jig 18, and this correction value is used to pick up, image, and place the workpiece 31, but the use of the correction value may be omitted in one or more of these processes.

- In the above embodiment, the workpiece 31 is provided with protrusions 33 that are inserted into the insertion portion of the processing object 30, but this is not particularly limited; the workpiece 31 need not have a protrusion 33, and the processing object 30 need not have an insertion portion.

- Note that mounting accuracy matters more for a workpiece 31 having a protrusion 33, so the processing disclosed herein is more meaningful in that case.

- In the above embodiment, the end effector 22 is described as having a mechanical chuck 22a and a suction nozzle 22b, but either the mechanical chuck 22a or the suction nozzle 22b may be omitted.

- FIG. 8 is an explanatory diagram showing an example of another robot system 10B.

- This robot system 10B includes a mounting device 11B and a management device 60.

- The mounting device 11B includes a transport device 12B that transports a board 30B, a supply device 25B that includes a feeder 29, an imaging unit 14, a mounting unit 20B, and a control device 40.

- The mounting unit 20B includes a mounting table 17 on which the calibration jig 18 is placed, a mounting head 21B, an end effector 22B attached to the mounting head 21B, and a head moving unit 24 that moves the mounting head 21B in the XY directions.

- The control device 40 is the same as in the above embodiment. Even with such a mounting device 11B, the height accuracy of the XY robot can be improved when placing a workpiece 31 having protrusions protruding from the main body onto the processing object 30, and thus the accuracy of mounting the workpiece 31 onto the processing object 30 can be improved.

- In the above embodiment, the present disclosure has been described as the control device 40, but it is not particularly limited to this and may be a control method for a robot, or a program by which a computer executes this control method.

- The control method of the present disclosure may be configured as follows.

- The control method of the present disclosure is a control method executed by a computer that controls a robot equipped with an end effector that picks up a workpiece, and includes: (a) a step of causing the end effector to pick up a jig having reference portions provided at predetermined intervals and capturing an image of the jig; and (b) a step of executing a height detection process to detect the height of the end effector based on the reference portions included in the obtained captured image.

- In this control method, the height of the end effector is detected using the spacing of the reference portions in a captured image of a jig having reference portions provided at predetermined intervals, so height accuracy can be further improved.

- In this control method, various aspects of the control device described above may be adopted, and steps may be added to achieve each function of the control device described above.

- The "height of the end effector" may be the height of a predetermined position (e.g., the bottom end) of the end effector, or may be synonymous with the height of the workpiece picked up by the end effector.

- Experimental example 1 corresponds to a comparative example, and Experimental example 2 corresponds to an embodiment of the present disclosure.

- FIG. 9 is an explanatory diagram of a robot system 100 having a conventional touchdown sensor 119.

- The robot system 100 comprises an imaging unit 14 having an imaging section 15, an articulated arm robot 21, a contact jig 118, and a touchdown sensor 119.

- In the robot system 100, the contact jig 118 is brought into contact with the touchdown sensor 119, and the height of the end effector 22 is detected at the position of contact.

- A robot system 10 was created that detects the height of the end effector 22 using a captured image of the calibration jig 18 (see FIGS. 1 to 5).

- The height H of the end effector 22 was detected using a captured image of the calibration jig 18, and the mounting accuracy of the workpiece 31 was measured.

- The mounting accuracy was determined with the angle of the workpiece 31 set to 0°, 90°, 180°, and 270°.

- FIG. 10 shows the measurement results of the mounting accuracy when the touchdown sensor 119 was used.

- FIG. 11 shows the measurement results of the mounting accuracy when the calibration jig 18 of the present disclosure was used.

- The robot system 10 of the present disclosure, which detects the height of the end effector 22 using the captured image of the calibration jig 18, can perform height detection with higher accuracy, and thus can improve the mounting accuracy of the workpiece 31.

- The image processing device, mounting device, mounting system, and image processing method disclosed herein can be used, for example, in the field of electronic component mounting.

Landscapes

- Engineering & Computer Science (AREA)

- Human Computer Interaction (AREA)

- Manufacturing & Machinery (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Automation & Control Theory (AREA)

- Robotics (AREA)

- Mechanical Engineering (AREA)

- Manipulator (AREA)

Abstract

A control device according to the present disclosure is used for a robot equipped with an end effector for picking up workpieces, and comprises a control unit that executes a height detection process in which the end effector is made to pick up a jig on which reference parts are provided at prescribed intervals, the jig is photographed, and the height of the end effector is detected on the basis of a reference part included in the obtained photographic image.

Description

This specification discloses a control device, a robot system, and a control method.

Conventionally, a robot system has been proposed that uses a measurement piece formed with high precision in the shape of a workpiece, measures the position of the measurement piece from an image of the piece held by a robot hand and positioned above a camera, and calculates the coordinate alignment between the robot body and the camera from the control position on the robot body side and the measured position of the piece (see, for example, Patent Document 1). This robot system is said to allow the coordinate alignment to be calibrated easily.

In the robot system described above, a workpiece may, for example, be picked up by an end effector provided on the robot and held at a predetermined height for imaging so that it can be detected. In such a robot system, there has been a demand to further improve the accuracy of the height, directly that of the workpiece and indirectly that of the end effector.

This disclosure has been made in consideration of these issues, and its primary objective is to provide a control device, robot system, and control method that can further improve height accuracy.

In this disclosure, the following measures have been taken to achieve the above-mentioned main objective.

The control device of the present disclosure is a control device for a robot equipped with an end effector that picks up a workpiece, the control device including:

a control unit that executes a height detection process of causing the end effector to pick up a jig having reference portions provided at predetermined intervals, capturing an image of the jig, and detecting a height of the end effector based on the reference portions included in the captured image.

This control device detects the height of the end effector using, for example, the spacing of the reference portions in a captured image of a jig on which the reference portions are provided at predetermined intervals. It is therefore less affected by mounting accuracy and detection sensitivity than, for example, a configuration that attaches a height jig to the end effector and detects the height with a touchdown sensor, and height accuracy can be further improved.

This embodiment will be described below with reference to the drawings. FIG. 1 is a schematic explanatory diagram showing an example of a robot system 10 according to the present disclosure. FIG. 2 is a schematic explanatory diagram showing an example of an imaging unit 14. FIG. 3 is an explanatory diagram showing an example of a mounting table 17 and a calibration jig 18. FIG. 4 is a perspective view showing an example of an end effector 22. FIG. 5 is an explanatory diagram showing an example of correspondence information 43 stored in a memory unit 42. In this embodiment, the left-right direction (X-axis), front-back direction (Y-axis), and up-down direction (Z-axis) are as shown in FIGS. 1 and 2.

The robot system 10 is, for example, a device that mounts a workpiece 31 on a processing object 30, and includes a working device 11 and a supply device 25. The working device 11 includes a transport device 12, an imaging unit 14, a mounting table 17, a working unit 20, and a control device 40. The processing object 30 may have an insertion portion into which a protrusion 33 of the workpiece 31 is inserted; examples include a substrate and a three-dimensional base material (solid object).

The workpiece 31 is an electronic component and has a main body 32 and a protrusion 33. The protrusions 33 are terminal pins and multiple protrusions 33 are formed on the main body 32. For example, the workpiece 31 is fixed by inserting the protrusions 33 into an insertion portion of the processing object 30. The protrusions 33 have a tapered portion 34 having a tapered surface that narrows toward the tip, and a tip portion 35, which is the tip surface, formed on the tip side. For ease of explanation, the protrusions 33 will also be referred to as "terminals" or "pins" below.

The transport device 12 is a unit that carries in, transports, fixes at the mounting position, and removes the processing object 30. The transport device 12 transports the processing object 30 using a pair of conveyor belts that are spaced apart.

The imaging unit 14 is a device that captures an image of the underside of the workpiece 31 while it is picked up and held by the working unit 20. The imaging unit 14 is disposed between the transport device 12 and the supply device 25, and includes an imaging section 15 and an illumination section 16. As shown in FIG. 2, the imaging range of the imaging section 15 is above the imaging section 15. The imaging section 15 has an imaging element, such as a CMOS image sensor or a CCD image sensor, that generates an electric charge on receiving light and outputs the generated charge. The imaging section 15 has a depth of field that covers at least the distance between the main body 32 and the protrusion 33. The illumination section 16 irradiates the workpiece 31 held by the working unit 20 with light. The imaging unit 14 captures an image of the workpiece 31 when the articulated arm robot 21 holding the workpiece 31 stops above the imaging section 15, or while it is moving, and outputs the captured image to the control device 40. Using this captured image, the control device 40 can inspect whether the shape and parts of the workpiece 31 are normal and detect the amount of deviation, such as in position or rotation, that occurred when the workpiece 31 was picked up.

The mounting table 17 is a table on which members are placed, and is provided adjacent to the imaging unit 14. A calibration jig 18 is placed on the mounting table 17. The calibration jig 18 is a jig used to calibrate the height of the end effector 22 and/or the workpiece 31 before the articulated arm robot 21 picks up the workpiece 31 and the imaging unit 14 images it. As shown in FIG. 3, reference portions 19 are provided on the underside of the calibration jig 18 at predetermined intervals Lx and Ly. The reference portions 19 are marks whose spacing can be detected. Here the reference portions 19 are circular marks, but they are not limited to circles as long as their positions can be determined and the lengths between them measured; they may be ellipses, polygons such as triangles, rectangles, pentagons, or hexagons, or symbols. The reference portions 19 may also form a checkerboard pattern. The robot system 10 determines the height of the calibration jig 18 at the time of imaging from the spacing of the reference portions 19.

The working unit 20 is a robot that places the workpiece 31 on the processing object 30. The working unit 20 includes an articulated arm robot 21, an end effector 22, and a camera 23. The articulated arm robot 21 is a work robot in which arms such as a first arm and a second arm, each rotating about a rotation axis, are connected. The end effector 22 is a member that picks up the workpiece 31 and places it on the processing object 30, and is rotatably connected to the tip of the articulated arm robot 21. As shown in FIG. 4, for example, the end effector 22 may include a mechanical chuck 22a that physically grips the workpiece 31 to pick it up and a suction nozzle 22b that picks up the workpiece 31 by holding it with negative pressure. The end effector 22 may instead include only one of the mechanical chuck 22a and the suction nozzle 22b. The camera 23 captures images of the area below it and is used, for example, to image a workpiece 31 placed on the supply device 25 and recognize its posture. The camera 23 is fixed to the tip of the articulated arm robot 21.

The supply device 25 is a device that supplies the workpieces 31. The supply device 25 includes a supply placement section 26, a vibration section 27, and a part supply section 28. The supply placement section 26 has a placement surface and is configured as a belt conveyor on which workpieces 31 in arbitrary postures are placed and moved in the front-back direction. The vibration section 27 vibrates the supply placement section 26 to change the posture of the workpieces 31 on it. The part supply section 28 is disposed above the rear of the supply placement section 26 (not shown), and stores workpieces 31 and supplies them to the supply placement section 26. The part supply section 28 releases workpieces 31 onto the supply placement section 26 when the number of parts on the placement surface falls below a predetermined number, or at regular intervals. Using the articulated arm robot 21, the end effector 22, and the camera 23, the working unit 20 recognizes a workpiece 31 on the supply placement section 26 whose posture allows it to be picked up and placed, and picks it up.

The control device 40 is a device used for a robot equipped with the end effector 22 that picks up the workpiece 31. The control device 40 has a microprocessor centered on a CPU 41 and controls the entire device, including the transport device 12, the imaging unit 14, the working unit 20, and the supply device 25. The CPU 41 is a control unit that combines the functions of an image control unit in an image processing device, which processes images from the imaging unit 14, and a mounting control unit in a mounting device, which controls the articulated arm robot 21. The control device 40 outputs control signals to the transport device 12, the imaging unit 14, the working unit 20, and the supply device 25, and receives signals from them. In addition to the CPU 41, the control device 40 includes a memory unit 42, a display device 47, and an input device 48. The memory unit 42 is a large-capacity storage medium, such as an HDD or flash memory, that stores various application programs and data files. As shown in FIG. 5, the memory unit 42 stores correspondence information 43. The correspondence information 43 associates the resolution obtained from the pitch of the reference portions 19 with the height of the reference portions 19. It is defined using a unit resolution (μm/pixel/mm), which indicates the amount of change in resolution per change in height of the end effector 22: the relationship between the resolution (μm/pixel) and the height H of the workpiece 31 picked up by the end effector 22 is determined experimentally, and the information is set so that the height H tends to increase as the resolution increases. Once a resolution is obtained, the control device 40 can uniquely determine the corresponding height from the correspondence information 43. With the resolution y (μm/pixel), the unit resolution a (μm/pixel/mm), the height x (mm), and the intercept b (μm/pixel), the relation y = ax + b holds. The display device 47 is a display that shows information about the robot system 10 and various input screens. The input device 48 includes a keyboard, a mouse, and the like with which the operator enters input.
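
The relation y = ax + b can be inverted to recover the height from a measured resolution. The following Python sketch illustrates this under stated assumptions: the function name and the numeric coefficients are hypothetical and are not taken from the disclosure, where the actual values are obtained experimentally.

```python
def height_from_resolution(resolution_um_per_px: float,
                           unit_resolution: float,
                           intercept: float) -> float:
    """Invert y = a*x + b to get the height x (mm) from the resolution y (um/pixel)."""
    if unit_resolution == 0:
        raise ValueError("unit resolution must be non-zero")
    return (resolution_um_per_px - intercept) / unit_resolution


# Hypothetical coefficients, chosen only for illustration.
A_UNIT_RESOLUTION = 0.5   # um/pixel per mm of height (assumed)
B_INTERCEPT = 20.0        # um/pixel at height 0 mm (assumed)

height_mm = height_from_resolution(25.0, A_UNIT_RESOLUTION, B_INTERCEPT)
print(height_mm)
```

Because the relation is linear with a non-zero slope, each resolution maps to exactly one height, which matches the statement that the height is determined uniquely.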

The control device 40 executes height detection processing to cause the end effector 22 to pick up the calibration jig 18, capture an image of the calibration jig 18, and detect the height of the end effector 22 based on the reference portion 19 included in the captured image. The CPU 41 may also detect the height of the end effector 22 using a unit resolution that is the amount of change in resolution relative to a change in height of the reference portion 19 included in the captured image. Furthermore, the CPU 41 also executes processing to cause the imaging unit 15 to capture the workpiece 31 picked up by the end effector 22 using the detected height of the end effector 22, and detect the workpiece 31. The CPU 41 executes height detection processing at a specified calibration timing.

Next, the operation of the robot system 10 of this embodiment configured as above will be described, beginning with the process in which the control device 40 calibrates the height of the end effector 22. The description here mainly covers an example in which the height of the end effector 22 is detected with the mechanical chuck 22a; the process with the suction nozzle 22b is omitted, but the height of the end effector 22 can be detected in the same way using the suction nozzle 22b. FIG. 6 is a flowchart showing an example of the height detection process routine executed by the CPU 41. This routine is stored in the memory unit 42 and is executed at predetermined intervals after the robot system 10 is started. When the routine starts, the CPU 41 first determines whether it is a height detection timing (S100). The CPU 41 determines that it is a height detection timing when, for example, the calibration process is executed immediately after the robot system 10 starts, or when recalibration is due after work has continued for a predetermined time. If it is not a height detection timing, the CPU 41 ends the routine without further processing.
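
The timing decision in S100 can be sketched as a simple predicate. This is a minimal illustration only: the function name, the parameters, and the use of elapsed seconds are assumptions, since the disclosure does not specify how the timing is represented.

```python
def is_height_detection_timing(just_started: bool,
                               seconds_since_last_calibration: float,
                               recalibration_interval_s: float) -> bool:
    """Return True right after startup or once the (assumed) interval has elapsed."""
    return just_started or seconds_since_last_calibration >= recalibration_interval_s


# Hypothetical values: recalibrate every 3600 s of operation.
print(is_height_detection_timing(True, 0.0, 3600.0))
print(is_height_detection_timing(False, 120.0, 3600.0))
print(is_height_detection_timing(False, 3600.0, 3600.0))
```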

On the other hand, when it is height detection timing, the CPU 41 executes height detection processing of the end effector 22, which will be described below. Specifically, the CPU 41 controls the articulated arm robot 21 to pick up the calibration jig 18 with the end effector 22 and hold the calibration jig 18 at the imaging position of the imaging unit 14 (S110). The end effector 22 attached to the working unit 20 is the one that actually picks up the workpiece 31. Next, the CPU 41 performs imaging processing of the calibration jig 18, detects the reference portion 19 (S120), counts the number of pixels between the reference portions 19 in the captured image, and divides the actual distance between the reference portions 19 by the number of pixels to obtain the resolution (S130). Next, the CPU 41 obtains the height H from the obtained resolution using the correspondence information 43 (S140).
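
The resolution calculation in S130 divides the known physical distance between two reference portions by their separation in pixels. The sketch below illustrates this step; it assumes the mark centers have already been detected as pixel coordinates and measures their separation as a Euclidean distance, and the function name and sample values are hypothetical.

```python
import math


def resolution_um_per_pixel(mark_a_px, mark_b_px, actual_distance_um):
    """Divide the real distance between two marks by their pixel separation."""
    pixel_distance = math.dist(mark_a_px, mark_b_px)
    if pixel_distance == 0:
        raise ValueError("marks must be at distinct pixel positions")
    return actual_distance_um / pixel_distance


# Hypothetical example: two marks 5000 um apart appear 200 pixels apart.
resolution = resolution_um_per_pixel((100.0, 200.0), (300.0, 200.0), 5000.0)
print(resolution)
```

In practice the value could be averaged over several mark pairs along both Lx and Ly to reduce the effect of detection noise; that refinement is not described in the source.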

As shown in FIG. 5, when the calibration jig 18 is at a lower height H1, the pitches Lx and Ly of the reference portions 19 span more pixels, so the resolution (μm/pixel) takes a smaller value. Conversely, when the calibration jig 18 is at a higher height H2, the pitches Lx and Ly span fewer pixels, so the resolution takes a larger value. In this way, the control device 40 can determine the height of the end effector 22, or of the calibration jig 18, from the captured image of the calibration jig 18.

After S140, the CPU 41 uses the acquired height H to determine the difference with respect to the reference height, sets a correction value in the height direction to eliminate this difference, stores the set correction value in the memory unit 42 (S150), and ends this routine. In this way, the control device 40 can acquire the height of the end effector 22 in a non-contact state by imaging the calibration jig 18 on which the reference portion 19 is provided, and set a correction value to correct that height.
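
The correction step in S150 amounts to a subtraction followed by storing the result. The following sketch shows one possible form; the sign convention (correction added to the commanded height) and all names and values are assumptions for illustration.

```python
def height_correction_mm(measured_height_mm: float,
                         reference_height_mm: float) -> float:
    """Return the offset that cancels the deviation from the reference height."""
    return reference_height_mm - measured_height_mm


def corrected_target_mm(nominal_target_mm: float, correction_mm: float) -> float:
    """Apply the stored correction to a nominal height command (assumed convention)."""
    return nominal_target_mm + correction_mm


# Hypothetical example: the end effector sits 0.2 mm above the reference height.
correction = height_correction_mm(measured_height_mm=10.2, reference_height_mm=10.0)
print(round(correction, 3))
print(round(corrected_target_mm(50.0, correction), 3))
```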

Next, a process for placing the workpiece 31 on the processing object 30 using the set height correction value will be described. As a specific example, a mounting process for positioning the workpiece 31 by inserting the protruding portion 33 of the workpiece 31 into an insertion portion formed on the processing object 30 will be described. Figure 7 is a flow chart showing an example of a mounting process routine executed by the CPU 41. The mounting process routine is stored in the memory unit 42, and is executed in response to a start command from the operator.

When this routine is started, the CPU 41 first reads and acquires mounting condition information (S200). The mounting condition information includes information such as the shape and size of the processing object 30 and the workpiece 31, as well as the placement position and placement number of the workpiece 31. Next, the CPU 41 causes the transport device 12 to transport the processing object 30 to the mounting position and fix it (S210), and controls the part supply unit 28 to supply the workpiece 31 to the supply placement unit 26 (S220). Next, the CPU 41 controls the multi-joint arm robot 21 to move the camera 23 above the supply placement unit 26, and causes the camera 23 to capture an image of the workpiece 31 on the supply placement unit 26, and performs a process to recognize the workpiece 31 that can be picked up (S230). The CPU 41 recognizes the workpiece 31 on the supply placement unit 26 with the protrusion 33 on the lower side and the main body 32 on the upper side as a pickable part. When there is no workpiece 31 that can be picked up, the CPU 41 drives the vibration unit 27 to change the position of the workpiece 31. When there is no workpiece 31 on the supply placement unit 26, the CPU 41 drives the part supply unit 28 to supply the workpiece 31 onto the supply placement unit 26.
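
The branching around S230 (pick a workpiece, vibrate the placement section, or supply more parts) can be summarized as a small decision function. This is a sketch under assumptions: the posture labels, the enum, and the function name are hypothetical, and the posture recognition itself is taken as given.

```python
from enum import Enum


class SupplyAction(Enum):
    PICK = "pick"        # a pickable workpiece exists
    VIBRATE = "vibrate"  # workpieces exist but none is pickable
    SUPPLY = "supply"    # no workpieces on the placement section


def choose_supply_action(postures):
    """postures: one label per workpiece seen by the camera (assumed labels)."""
    if not postures:
        return SupplyAction.SUPPLY
    if any(p == "pins_down" for p in postures):
        return SupplyAction.PICK
    return SupplyAction.VIBRATE


print(choose_supply_action([]))
print(choose_supply_action(["pins_up", "on_side"]))
print(choose_supply_action(["pins_up", "pins_down"]))
```

Here "pins_down" stands for the posture described in the text, with the protrusion 33 on the lower side and the main body 32 on the upper side.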

Next, the CPU 41 drives the articulated arm robot 21 and the end effector 22, causes the end effector 22 to pick up the workpiece 31 using the height correction value, and moves the workpiece to a position above the imaging unit 14 (S240). Because the working unit 20 applies the height correction when picking up the workpiece 31, it can pick up the workpiece 31 more reliably. The CPU 41 also adjusts the imaging position and the control of the articulated arm robot 21 in advance so that the center of the end effector 22 moves to the image center. Next, the CPU 41 moves the end effector 22 to the imaging position corrected with the height correction value, stops it there, and images the workpiece 31 (S250). The control device 40 can thus image the workpiece 31 at the corrected position and obtain a more suitable captured image. Next, the CPU 41 uses the captured image to check the tips 35 of the protrusions 33 (S260) and determines whether the workpiece 31 is usable (S270). The CPU 41 determines that the workpiece 31 is usable when the tips 35 of all the protrusions 33 are detected within a predetermined tolerance range. This tolerance range may be set, based on the relationship between the positional deviation of the tips 35 and placement on the processing object 30, to the range within which the workpiece 31 is placed on the processing object 30 normally.
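
The usability check in S270 requires every pin tip to fall within the tolerance range. The sketch below models the tolerance as a circular radius around each expected tip position and assumes detected and expected tips are listed in the same order; both choices, and all names, are assumptions not stated in the source.

```python
import math


def workpiece_is_usable(detected_tips, expected_tips, tolerance):
    """True only if every expected tip has a detected tip within the tolerance."""
    if len(detected_tips) != len(expected_tips):
        return False  # a missing or extra tip fails the check
    return all(math.dist(d, e) <= tolerance
               for d, e in zip(detected_tips, expected_tips))


# Hypothetical coordinates in pixels.
expected = [(10.0, 10.0), (30.0, 10.0)]
print(workpiece_is_usable([(10.5, 10.0), (30.0, 9.5)], expected, 1.0))
print(workpiece_is_usable([(10.5, 10.0), (33.0, 10.0)], expected, 1.0))
```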

When the workpiece 31 is usable in S270, the CPU 41 executes a process to place the workpiece 31 at a predetermined position on the processing object 30 (S280). At this time, the CPU 41 may perform position correction based on the position of the tip 35 and place it on the processing object 30. The working unit 20 performs height correction and places the workpiece 31, so that the workpiece 31 can be placed more reliably. On the other hand, when the workpiece 31 is not usable in S270, the CPU 41 stops using the workpiece 31 and discards it, and notifies the operator of this with a message (S290). The operator is notified, for example, by displaying a message or an icon on the display device 47 to the effect that the protruding portion 33 of the workpiece 31 cannot be inserted into the insertion portion of the processing object 30.

After S290 or S280, the CPU 41 determines whether there is a next workpiece 31 to be placed (S300), and if there is, executes the process from S220 onward. That is, the CPU 41 repeatedly supplies workpieces 31 to the supply placement section 26 as necessary, recognizes a workpiece 31 that can be picked up, picks it up, images it with the imaging unit 14, determines whether it is usable, and places it. If there is no next workpiece 31 to be placed on this processing object 30 in S300, the CPU 41 determines whether there is a next processing object 30 on which workpieces 31 should be placed (S310), and if there is, executes the process from S210 onward. That is, the CPU 41 ejects the processing object 30 on which placement of the workpieces 31 is complete, carries in the next processing object 30, and executes the process from S220 onward. If there is no next processing object 30 in S310, the CPU 41 ends this routine.
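
The control flow of S210 through S310 is a pair of nested loops: an outer loop over processing objects and an inner loop over the workpieces to place on each. The following sketch shows only that loop structure. The names are hypothetical, the callback stands in for steps S230 to S290, and the sketch simply moves on after a discarded workpiece, which is an assumption because the source does not say whether the same position is retried.

```python
from typing import Callable, Dict, List, Tuple


def run_mounting(
    placements: Dict[str, List[str]],
    try_mount: Callable[[str, str], bool],
) -> List[Tuple[str, str, str]]:
    """Outer loop: processing objects (S210/S310). Inner loop: positions (S220-S300)."""
    log = []
    for obj_id, positions in placements.items():
        for position in positions:
            usable = try_mount(obj_id, position)  # stands in for S230-S270
            log.append((obj_id, position, "placed" if usable else "discarded"))
    return log


# Hypothetical stand-in: the workpiece intended for position "p2" fails inspection.
result = run_mounting(
    {"board-1": ["p1", "p2"], "board-2": ["p1"]},
    lambda obj_id, position: position != "p2",
)
print(result)
```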

Here, the correspondence between the components of this embodiment and the components of this disclosure will be clarified. The control device 40 of this embodiment is an example of a control device of this disclosure, the CPU 41 is an example of a control unit, the end effector 22 is an example of an end effector, the calibration jig 18 is an example of a jig, the articulated arm robot 21 is an example of an arm robot, and the robot system 10 is an example of a robot system. Note that this embodiment also clarifies an example of a control method of this disclosure by explaining the operation of the robot system 10.

The control device 40 of this embodiment described above is used in a robot equipped with an end effector 22 that picks up a workpiece 31. This control device 40 has a CPU 41 as a control unit that executes a height detection process that causes the end effector 22 to pick up a calibration jig 18 having reference portions 19 provided at predetermined intervals, images the calibration jig 18, and detects the height of the end effector 22 based on the reference portions 19 included in the captured image. This control device 40 detects the height H of the end effector 22 using the intervals between the reference portions 19 in an image obtained by capturing an image of the calibration jig 18 having reference portions 19 provided at predetermined intervals. Therefore, compared to, for example, a device that attaches a height jig to the end effector 22 and detects the height using a touchdown sensor, the influence of mounting accuracy and detection sensitivity is smaller, and height accuracy can be further improved.

Because the CPU 41 serving as the control unit detects the height H of the end effector 22 using the unit resolution, which is the amount of change in resolution relative to a change in height of the reference portions 19 included in the captured image, the unit resolution can be used to further improve height accuracy. Furthermore, because the CPU 41 uses the detected height H of the end effector 22 when causing the imaging unit to image the workpiece 31 picked up by the end effector 22 and detecting the workpiece 31, the result of the height detection process, in which the calibration jig 18 is imaged and the image is processed, can be used to further improve the accuracy of the imaging process for the workpiece 31. In addition, because the CPU 41 executes the height detection process at a predetermined calibration timing, the height detection process can be executed as a calibration process of the device. Finally, because the working unit 20 includes the articulated arm robot 21, using a captured image of the calibration jig 18 on which the reference portions 19 are provided at predetermined intervals can further improve the accuracy of the articulated arm robot 21 in the height direction.

The robot system 10 includes the multi-joint arm robot 21, which is equipped with the end effector 22 that picks up the workpiece 31, and the control device 40. Like the control device 40, the robot system 10 can further improve the height accuracy.

It goes without saying that the present disclosure is in no way limited to the embodiment described above and can be implemented in various forms as long as they fall within the technical scope of the present disclosure.

For example, in the embodiment described above, the control device 40 of the working device 11 has the functions of the control device of the present disclosure, but the configuration is not particularly limited to this, and the control device may be provided in the working device 11 or in the multi-joint arm robot 21. Similarly, in the embodiment described above, the CPU 41 has the functions of the control unit, but the configuration is not particularly limited to this, and a control unit may be provided separately from the CPU 41. In this working device 11 as well, the height H of the end effector 22 is detected using a captured image of the calibration jig 18, so the height accuracy can be further improved.

In the embodiment described above, the CPU 41 detects the height H of the end effector 22 using the unit resolution, but the method is not particularly limited to this as long as the height is detected using a captured image of the calibration jig 18. For example, the correspondence information 43 may be a correspondence between the pitch of the reference portions 19 and the height H, and the CPU 41 may obtain the height H directly from the pitch of the reference portions 19.
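A minimal sketch of this variation, assuming the correspondence information 43 is held as a small table of (pitch in pixels, height in millimetres) pairs and that values between table entries are obtained by linear interpolation. The table contents, the interpolation, and the function name are assumptions for illustration; the present disclosure does not specify how the correspondence is stored.

```python
from bisect import bisect_left

# Hypothetical correspondence information 43: (pitch in pixels, height H in mm),
# sorted by ascending pitch. The values are invented for illustration.
CORRESPONDENCE_43 = [(48.0, 12.0), (50.0, 10.0), (52.0, 8.0)]


def height_from_pitch(pitch_px, table=CORRESPONDENCE_43):
    """Look up the height H for a measured pitch, interpolating linearly."""
    if not table:
        raise ValueError("correspondence table is empty")
    pitches = [p for p, _ in table]
    if not pitches[0] <= pitch_px <= pitches[-1]:
        raise ValueError("pitch is outside the calibrated range")
    i = bisect_left(pitches, pitch_px)
    if pitches[i] == pitch_px:
        return table[i][1]          # exact table entry
    (p0, h0), (p1, h1) = table[i - 1], table[i]
    return h0 + (h1 - h0) * (pitch_px - p0) / (p1 - p0)


print(height_from_pitch(51.0))
```

Rejecting pitches outside the calibrated range, instead of extrapolating, is a design choice of this sketch intended to keep a misdetected reference portion from producing an implausible height.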

In the embodiment described above, a correction value in the height direction of the end effector 22 is obtained from the captured image of the calibration jig 18, and this correction value is used when picking up, imaging, and placing the workpiece 31. However, use of the correction value may be omitted in one or more of these processes.
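The selective use of the correction value can be pictured with the following sketch. The flag structure, the operation names, and the convention that the correction is added to a nominal height are all assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CorrectionUse:
    """Hypothetical switches selecting which processes apply the correction."""
    pick: bool = True
    image: bool = True
    place: bool = True


def corrected_z(nominal_z_mm, correction_mm, operation, use=CorrectionUse()):
    """Return the target height for one operation.

    The correction is added to the nominal height (an assumed sign convention)
    unless its use is switched off for that operation.
    """
    if operation not in ("pick", "image", "place"):
        raise ValueError(f"unknown operation: {operation}")
    if getattr(use, operation):
        return nominal_z_mm + correction_mm
    return nominal_z_mm


# Example: apply the correction when picking and placing, but not when imaging.
use = CorrectionUse(image=False)
for op, z in (("pick", 5.0), ("image", 30.0), ("place", 25.0)):
    print(op, corrected_z(z, -0.5, op, use))
```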

In the embodiment described above, the workpiece 31 is provided with a protrusion 33, and the protrusion 33 is inserted into an insertion portion of the processing object 30. However, the configuration is not particularly limited to this; the workpiece 31 may have no protrusion 33, and the processing object 30 may have no insertion portion. Note that the influence on mounting accuracy is greater when the workpiece 31 has the protrusion 33, so performing the processing of the present disclosure is more significant in that case.

In the embodiment described above, the end effector 22 is described as having a mechanical chuck 22a and a suction nozzle 22b, but either the mechanical chuck 22a or the suction nozzle 22b may be omitted.

In the embodiment described above, the working unit 20 is described as having the multi-joint arm robot 21. However, the configuration is not particularly limited to this as long as the height of the end effector 22 or the workpiece 31 is corrected using a captured image of the calibration jig 18, and the working unit may, for example, have the configuration of an XY robot. FIG. 8 is an explanatory diagram showing an example of another robot system 10B. The robot system 10B includes a mounting device 11B and a management device 60. The mounting device 11B includes a transport device 12B that transports a board 30B, a supply device 25B that includes a feeder 29, an imaging unit 14, a mounting unit 20B, and a control device 40. The mounting unit 20B includes a mounting table 17 on which the calibration jig 18 is placed, a mounting head 21B, an end effector 22B attached to the mounting head 21B, and a head moving unit 24 that moves the mounting head 21B in the XY directions. The control device 40 is the same as in the embodiment described above. Even when such a mounting device 11B is used, the height accuracy of the XY robot can be further improved when a workpiece 31 having a protrusion projecting from its main body is placed on the processing object 30, and the accuracy of mounting the workpiece 31 on the processing object 30 can in turn be further increased.

In the embodiment described above, the control device of the present disclosure is described as the control device 40, but the present disclosure is not particularly limited to this and may take the form of a control method for a robot or a program that causes a computer to execute the control method.

Here, the control method of the present disclosure may be configured as follows. For example, the control method of the present disclosure is a control method executed by a computer that controls a robot equipped with an end effector that picks up a workpiece, the control method including:

(a) causing the end effector to pick up a jig having reference portions provided at predetermined intervals, and capturing an image of the jig; and

(b) executing a height detection process that detects the height of the end effector based on the reference portions included in the obtained captured image.

In this control method, as with the control device described above, the height of the end effector is detected using, for example, the intervals between the reference portions in a captured image of the jig on which the reference portions are provided at predetermined intervals, so the height accuracy can be further improved. Various aspects of the control device described above may be adopted in this control method, and steps that realize the functions of the control device described above may be added. The "height of the end effector" may be the height of a predetermined position (for example, the lower end) of the end effector, or may be synonymous with the height of the workpiece held by the end effector.
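Steps (a) and (b) can be outlined in code as below. This is a sketch under stated assumptions: the `Robot` and `Camera` interfaces, their method names, the move to an imaging position, and the stand-in classes are hypothetical and exist only to make the example runnable. The conversion from pitch to height is passed in as a function because the present disclosure allows more than one way of obtaining it.

```python
from typing import Callable, Protocol, Sequence


class Robot(Protocol):
    """Hypothetical robot interface (not from the present disclosure)."""
    def pick_up_jig(self) -> None: ...
    def move_to_imaging_position(self) -> None: ...


class Camera(Protocol):
    """Hypothetical camera interface returning reference-portion centres (px)."""
    def capture_reference_centers(self) -> Sequence[float]: ...


def run_height_detection(robot: Robot, camera: Camera,
                         pitch_to_height: Callable[[float], float]) -> float:
    # Step (a): have the end effector pick up the jig, then image the jig.
    robot.pick_up_jig()
    robot.move_to_imaging_position()
    centers = sorted(camera.capture_reference_centers())

    # Step (b): detect the height from the reference portions in the image.
    if len(centers) < 2:
        raise ValueError("fewer than two reference portions detected")
    mean_pitch_px = (centers[-1] - centers[0]) / (len(centers) - 1)
    return pitch_to_height(mean_pitch_px)


class FakeRobot:
    """Stand-in that only records the commands it receives."""
    def __init__(self) -> None:
        self.log: list[str] = []

    def pick_up_jig(self) -> None:
        self.log.append("pick_up_jig")

    def move_to_imaging_position(self) -> None:
        self.log.append("move_to_imaging_position")


class FakeCamera:
    """Stand-in returning fixed, invented reference-portion centres."""
    def capture_reference_centers(self) -> Sequence[float]:
        return [100.0, 150.0, 200.0]


robot = FakeRobot()
# Invented conversion: 10.0 mm at a 50-pixel pitch, 1 mm per pixel of change.
detected = run_height_detection(robot, FakeCamera(),
                                lambda pitch: 10.0 - (pitch - 50.0))
print(robot.log, detected)
```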

This specification also discloses the technical idea in which "the control device according to claim 1 or 2" in claim 4 as originally filed is changed to "the control device according to any one of claims 1 to 3", the technical idea in which "the control device according to claim 1 or 2" in claim 5 as originally filed is changed to "the control device according to any one of claims 1 to 4", and the technical idea in which "the control device according to claim 1 or 2" in claim 6 as originally filed is changed to "the control device according to any one of claims 1 to 5".

Examples in which the robot system 10 of the present disclosure was actually built and the accuracy of mounting the workpiece 31 on the processing object 30 was examined are described below as experimental examples. Experimental example 1 corresponds to a comparative example, and experimental example 2 corresponds to an example of the present disclosure.

In experimental example 1, the height of the end effector was detected using a touchdown sensor, and the mounting accuracy of the workpiece was measured. FIG. 9 is an explanatory diagram of a robot system 100 having a conventional touchdown sensor 119. In the robot system 100, components that are the same as those of the robot system 10 are given the same reference numerals, and their description is omitted. As shown in FIG. 9, the robot system 100 includes an imaging unit 14 having an imaging section 15, a multi-joint arm robot 21, a contact jig 118, and the touchdown sensor 119. In the robot system 100, the contact jig 118 is brought into contact with the touchdown sensor 119, and the height of the end effector 22 is detected at the position of contact.

In experimental example 2, a robot system 10 that detects the height of the end effector 22 using a captured image of the calibration jig 18 was built (see FIGS. 1 to 5). In the robot system 10, the height H of the end effector 22 was detected using the captured image of the calibration jig 18, and the mounting accuracy of the workpiece 31 was measured. In the mounting experiment, the angle of the workpiece 31 was set to 0°, 90°, 180°, and 270°, and the mounting accuracy was determined for each angle.

FIG. 10 shows the measured mounting accuracy when the touchdown sensor 119 was used. FIG. 11 shows the measured mounting accuracy when the calibration jig 18 of the present disclosure was used. As shown in FIG. 10, when the touchdown sensor 119 was used, the mounting accuracy was still insufficient because of influences such as the sensitivity and accuracy of the touchdown sensor, the resolution of the lowering operation, and assembly errors arising when the end effector 22 is exchanged. In contrast, as shown in FIG. 11, it became clear that the robot system 10 of the present disclosure, which detects the height of the end effector 22 using a captured image of the calibration jig 18, can detect the height with higher accuracy and can in turn increase the mounting accuracy of the workpiece 31.

The image processing device, mounting device, mounting system, and image processing method of the present disclosure can be used, for example, in the field of electronic component mounting.

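10, 10B, 100 robot system; 11 working device; 11B mounting device; 12, 12B transport device; 13 transport unit; 14 imaging unit; 15 imaging section; 16 illumination unit; 17 mounting table; 18 calibration jig; 19 reference portion; 20 working unit; 20B mounting unit; 21 multi-joint arm robot; 21B mounting head; 22, 22B end effector; 22a mechanical chuck; 22b suction nozzle; 23 camera; 24 head moving unit; 25, 25B supply device; 26 supply placement unit; 27 vibration unit; 28 component supply unit; 29 feeder; 30 processing object; 30B board; 31 workpiece; 32 main body; 33 protrusion; 34 tapered portion; 35 tip portion; 40 control device; 41 CPU; 42 storage unit; 43 correspondence information; 47 display device; 48 input device; 60 management device; H height.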