WO2006088641A1 - Profiling digital-image input devices - Google Patents


- Publication number

- WO2006088641A1 (PCT/US2006/003457)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- coordinate values

- color

- image

- color chart

- independent


Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N1/00—Scanning, transmission or reproduction of documents or the like, e.g. facsimile transmission; Details thereof

- H04N1/46—Colour picture communication systems

- H04N1/56—Processing of colour picture signals

- H04N1/60—Colour correction or control

- H04N1/603—Colour correction or control controlled by characteristics of the picture signal generator or the picture reproducer

- H04N1/6033—Colour correction or control controlled by characteristics of the picture signal generator or the picture reproducer using test pattern analysis

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N1/00—Scanning, transmission or reproduction of documents or the like, e.g. facsimile transmission; Details thereof

- H04N1/46—Colour picture communication systems

- H04N1/56—Processing of colour picture signals

- H04N1/60—Colour correction or control

- H04N1/6083—Colour correction or control controlled by factors external to the apparatus

- H04N1/6086—Colour correction or control controlled by factors external to the apparatus by scene illuminant, i.e. conditions at the time of picture capture, e.g. flash, optical filter used, evening, cloud, daylight, artificial lighting, white point measurement, colour temperature

Definitions

- βc is a parameter for adjusting contrast, i.e., a means for reducing the output of the function fc() for values 0.0 < x < 0.5 and increasing the output of the function fc() for 0.5 < x < 1.0.

- Many mathematical functions can perform such an adjustment, e.g., 3rd-order polynomials, splines, etc.

- the concept of performing such an adjustment is well known in the art (cf. the "auto-contrast" feature in applications such as Adobe® Photoshop®), although it is usually applied as an aesthetic improvement to an image rather than to characterize a device with regard to color data.

- double evalGammaModel(double *RGBMax, double *gamma, double *blackBias, double filmContrastCorr, int rgbIndex, const double& cVal)

- Parameters αSA 407 may be optimized using the error-minimization routines described above with respect to steps 402 and/or 404.

- the selective adjustments performed by parameters αSA 407 at step 406 are made to one or more colors such that there is substantially no risk of introducing artifacts or unwanted corruption into images converted or rendered with profiles that have been modified with this approach.

- any method may be used at step 406, an inventive process of performing selective adjustments to a device-independent color space as part of step 406 is described below with respect to FIG. 5. The embodiment illustrated with FIG.

- the output of step 408 may include adjusted αC parameters αC' 409, adjusted αTC parameters αTC' 410, and adjusted αSA parameters αSA' 411. αC' 409, αTC' 410, and αSA' 411 are output from step 408 and included in ParameterList 315.

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Signal Processing (AREA)

- Image Processing (AREA)

- Facsimile Image Signal Circuits (AREA)

- Color Image Communication Systems (AREA)

- Color, Gradation (AREA)

Abstract

In a system and a method for profiling a digital-image input device, profiling of the digital-image input device is performed based at least on an image of a color chart and an estimated illumination of the color chart generated by comparing illumination of device-dependent coordinate values for the image of the color chart with illumination of device-independent coordinate values of the color chart. Because the estimated illumination of the color chart is performed on data pertaining to the color chart, the present invention may generate a profile without reference to data pertaining to scenery in the image outside of the color chart. Consequently, the present invention may generate a profile irrespective of the relative exposure of the color chart with respect to other scenery in the image.

Description

PROFILING DIGITAL-IMAGE INPUT DEVICES

FIELD OF THE INVENTION

This invention relates to profiling a digital-image input device using data representing an image of a color chart acquired by the digital-image input device. In particular, the invention is capable of generating a profile for the digital-image input device regardless of the relative exposure of the color chart with respect to other scenery in the acquired image.

BACKGROUND OF THE INVENTION

A digital-image input device, such as a digital camera or a scanner, converts light reflected from an object into digital data representing an image of the object. Typically, the digital data is divided into units, each unit describing the color of a portion, or pixel, of the image. Accordingly, the image may be described as a two-dimensional array of pixels (x, y). Further, each unit of digital data typically describes the color of a pixel by describing the amount, or intensity, of each primary color (red, green, and blue) present in the pixel. For example, the digital data may indicate that the pixel at x=0 and y=0 has a red intensity of 200, a green intensity of 134, and a blue intensity of 100, where the intensity of each primary color is represented by eight bits. (Eight bits allow 256 combinations, so in this example each primary color may have a value of 0-255, where 255 indicates the highest level of intensity and zero indicates no intensity, or black.)
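The pixel layout described above can be sketched in C++ as a flat, row-major array of 8-bit RGB triples. This is a minimal illustration assuming 8-bit channels and row-major storage; the type names RGB8 and Image are hypothetical and not part of the described system.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One pixel of 8-bit device-dependent RGB data (0 = no intensity, 255 = highest).
struct RGB8 {
    std::uint8_t r, g, b;
};

// A two-dimensional array of pixels stored row-major:
// pixel (x, y) lives at index y * width + x of the flat vector.
struct Image {
    int width;
    int height;
    std::vector<RGB8> pixels;

    Image(int w, int h)
        : width(w), height(h),
          pixels(static_cast<std::size_t>(w) * static_cast<std::size_t>(h)) {}  // zero-filled

    RGB8& at(int x, int y) {
        assert(x >= 0 && x < width && y >= 0 && y < height);  // stay inside the image
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};
```

With this layout, the example pixel above is written as img.at(0, 0) = RGB8{200, 134, 100}.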

The digital data produced by a digital-image input device is referred to herein as "device dependent data," because different digital-image input devices typically produce different digital data representing the same image acquired under the same conditions. For example, a first digital camera may indicate that a first pixel of an image has a red component of 200, whereas a second digital camera may indicate that the same pixel of an equivalent image taken under the same conditions has a red component of 202. For another example, the first digital camera may record the red in an apple as 200, and the second digital camera may record the red in the same part of the apple (as imaged under the same conditions) as 202. Because the device-dependent data generated by a digital-image input device typically specifies the red, green, and blue color components associated with each pixel, it is often referred to as "RGB" data.

The differences between device-dependent data from two different devices arise from minute differences in the imaging components in each device. These differences create problems when the images are output by a digital-image output device, such as a color ink-jet printer, a CRT monitor, or an LCD monitor. For example, the image of the apple taken by the first digital camera discussed above will appear different from the image of the apple taken by the second digital camera when output to the same color ink-jet printer. To further complicate matters, digital-image output devices also have the same types of discrepancies between each other that digital-image input devices have. For example, a user may want to view an image of a red square on one CRT monitor while a customer simultaneously views the same image on another CRT monitor. Assume that the digital-image input device used to image the red square recorded all pixels of the red square as red=200, green=0, and blue=0. Commonly, when the two monitors display the same image, each monitor displays a slightly different red color even though they have received the same digital data from the input device.

The same differences commonly exist when printing the same image to two different printers. However, it should be noted that the digital image data processed by printers typically describes each pixel in an image according to the amount, or intensity, of each secondary color cyan, magenta, and yellow, as well as black present in the pixel. Accordingly, the device-dependent digital image data processed by printers is referred to as "CMYK" data (as opposed to RGB device-dependent data associated with digital-image input devices.) (Monitors, on the other hand, display data in RGB format).

Color profiles provide a solution to the color discrepancies between devices discussed above. Each digital-image input device typically has its own color profile that maps its device-dependent data into device-independent data. Correspondingly, each digital-image output device typically has its own color profile that converts device-independent data into device-dependent data usable by the output device to print colors representative of the device-independent data.

Device-independent data describes the color of pixels in an image in a universal manner, i.e., in a device-independent color space. A device-independent color space assigns a unique value to every color, where the unique value for each color is determined using calibrated instruments and lighting conditions. Examples of device-independent color spaces known in the art are CIEXYZ, CIELAB, CIE Yxy, and CIE LCH. Device-independent data is sometimes referred to herein as "device-independent coordinates." Device-independent data in the CIEXYZ color space is referred to herein as "XYZ data," or just "XYZ." Device-independent data in the CIELAB color space is referred to herein as "LAB data," "CIELAB," or just "LAB."

Theoretically, color profiles allow a user to acquire an image of an object using any digital-image input device and to output an accurate representation of the object from a digital-image output device. With reference to FIG. 1, for example, an image of an object 101 is acquired using a digital-image input device 102. The image is represented in FIG. 1 as RGB 103. Then, the image RGB 103 is converted into device-independent data (XYZ 105, for example) using the digital-image input device's color profile 104. The device-independent data XYZ 105 is then converted using the output device's color profile 106 into device-dependent data (CMYK 107, for example) specific to the output device 108. The output device 108 uses its device-dependent data CMYK 107 to generate an accurate representation 109 of the object 101.

Conventionally, a color profile is generated by acquiring a test image of a color chart located in front of scenery under a standard lighting condition. For example, a digital camera may be used to acquire a test image of a color chart with a basket of fruit in the background. A color chart generally is a physical chart that includes different color patches, each patch associated with a device-independent color value, such as a color value identifying a CIELAB color, when viewed under a standard lighting condition, such as D50, known in the art. By comparing the device-dependent data acquired from the test image with the device-independent data known to be associated with the color chart, a color profile for the digital-image input device may be generated.

However, many conventional schemes have difficulty or fail altogether when the color chart is illuminated differently than the scenery in the test image, or when the color chart, the scene, or both are over-exposed. Further, several of the better conventional schemes for generating a color profile need to operate on uncorrected raw 16-bit RGB device-dependent data to be successful, because more standard 8-bit image formats, such as TIFF or JPG, tend to exhibit clipping. Accordingly, a need exists in the art for quality color profiling capable of operating on (a) color charts that are over-exposed or under-exposed relative to the scenery and (b) standard 8-bit formatted device-dependent data.

SUMMARY OF THE INVENTION

The above-described problems are addressed and a technical solution is achieved in the art by a system and a method for profiling a digital-image input device according to the present invention. In an embodiment of the present invention, profiling of the digital-image input device is performed based at least on an image of a color chart and an estimated illumination of the color chart generated by comparing illumination of device-dependent coordinate values for the image of the color chart with illumination of device-independent coordinate values of the color chart. Because the estimated illumination of the color chart is performed on data pertaining to the color chart, the present invention may generate a profile without reference to data pertaining to scenery in the image outside of the color chart. Consequently, the present invention may generate a profile irrespective of the relative exposure of the color chart with respect to other scenery in the image. Further, the image of the color chart may be in a standard 8-bit image format, as the system and the method disclosed herein operate well on data that is clipped.

According to an embodiment of the present invention, the device-independent coordinate values of the color chart are linearly scaled based upon a scaling factor associated with the comparison of the illumination of the device-dependent coordinate values and the illumination of the device-independent coordinate values. Optimized parameters describing tone curves and chromaticities of the profile may be calculated to enhance the generated profile based at least on the linearly scaled device-independent coordinate values.

Further, selective adjustments to the device-independent coordinate values may be performed to enhance the generated profile.

BRIEF DESCRIPTION OF THE DRAWINGS

The present invention will be more readily understood from the detailed description of preferred embodiments presented below considered in conjunction with the attached drawings, of which:

FIG. 1 illustrates a conventional arrangement for acquiring and outputting an image;

FIG. 2 illustrates a system for generating a color profile for a digital-image input device, according to an embodiment of the present invention;

FIG. 3 illustrates a method for generating a color profile for a digital-image input device, according to an embodiment of the present invention;

FIG. 4 illustrates a method for calculating a parameter list used to calculate final device-independent coordinates of the profile, which may be used as step 314 in FIG. 3, according to an embodiment of the present invention; and

FIG. 5 illustrates a method for performing selective adjustments to the device-independent color space, which may be used independently or as step 406 in FIG. 4, according to an embodiment of the present invention.

It is to be understood that the attached drawings are for purposes of illustrating the concepts of the invention and may not be to scale.

DETAILED DESCRIPTION OF THE INVENTION

The present invention alleviates problems associated with under-exposure or over-exposure of a color chart relative to scenery in a test image used to generate a color profile for a digital-image input device. In particular, the present invention generates the color profile based upon device-dependent and device-independent data both associated with the color chart. Stated differently, the present invention may generate the color profile without regard to the scenery in the test image (i.e., the device-dependent data acquired from the scenery). Accordingly, the present invention is not necessarily dependent upon a relative exposure of the color chart with respect to the scenery. Of course, the present invention may incorporate the use of image data associated with the scenery; however, such use is not necessary. Therefore, although the present invention is often described in the context of being dependent upon image data associated with the color chart, one skilled in the art will appreciate that the present invention does not exclude the additional use of image data associated with the scenery in the test image. Further, the present invention has been determined to operate well on image data that is clipped, and, accordingly, standard 8-bit format image data may be used.

FIG. 2 illustrates a system 200 for generating a color profile for a digital-image input device, according to an embodiment of the present invention. The system 200 may include a digital-image input device 202 communicatively connected to a computer system 204, which is communicatively connected to a data storage system 206. The computer system 204 may include one or more computers communicatively connected and may or may not require the assistance of an operator 208. The digital-image input device 202 acquires a test image of a color chart and optionally a scene outside of the color chart. Examples of the digital-image input device 202 include a digital camera or a scanner. The digital-image input device 202 transmits the test image to the computer system 204, which generates a color profile for the digital-image input device 202 based upon the test image and an estimated illumination of the color chart, as discussed in more detail with reference to FIGS. 3 and 4. The color profile may be stored in the data storage system 206, output to other optional devices 210, or otherwise output from the computer system 204. It should be noted that the information needed by the computer system 204, such as the test image and information pertaining to the color chart, may be provided to the computer system 204 by any means, and not necessarily by the digital-image input device 202. The data storage system 206 may include one or more computer-accessible memories. The data storage system 206 may be a distributed data-storage system including multiple computer-accessible memories communicatively connected via a plurality of computers, devices, or both. On the other hand, the data storage system 206 need not be a distributed data-storage system and, consequently, may include one or more computer-accessible memories located within a single computer or device.

The term "computer" is intended to include any data processing device, such as a desktop computer, a laptop computer, a mainframe computer, a personal digital assistant, a BlackBerry, and/or any other device for processing data, and/or managing data, and/or handling data, whether implemented with electrical and/or magnetic and/or optical and/or biological components, and/or otherwise.

The phrase "computer-accessible memory" is intended to include any computer-accessible data storage device, whether volatile or nonvolatile, electronic, and/or magnetic, and/or optical, and/or otherwise, including but not limited to, floppy disks, hard disks, Compact Discs, DVDs, flash memories, ROMs, and RAMs.

The phrase "communicatively connected" is intended to include any type of connection, whether wired, wireless, or both, between devices, and/or computers, and/or programs in which data may be communicated. Further, the phrase "communicatively connected" is intended to include a connection between devices and/or programs within a single computer, a connection between devices and/or programs located in different computers, and a connection between devices not located in computers at all. In this regard, although the data storage system 206 is shown separately from the computer system 204, one skilled in the art will appreciate that the data storage system 206 may be stored completely or partially within the computer system 204.

FIG. 3 illustrates a method 300 for generating a color profile for the digital-image input device 202, according to an embodiment of the present invention. The method 300 is executed by the computer system 204 and may be implemented in hardware, software, and/or firmware, according to an embodiment of the present invention. At step 302, the computer system 204 receives device-dependent data from the digital-image input device 202 or from some other source. The device-dependent data received at step 302 is RGB data, according to an embodiment of the present invention. The device-dependent data represents a test image of at least a color chart. It should be noted that although this application is described in the context of using a color chart, any object that has known device-independent values associated with its colors may be used. It should also be noted that the device-dependent data received at step 302 need not include data pertaining to a scene outside of the color chart in the test image.

The operator 208, who also may operate the digital-image input device 202, may specify the location and orientation of the color chart in the test image. Alternatively, the orientation of the color chart may be determined automatically by the computer system 204. The device-dependent RGB values received at step 302 may be averaged for each color patch in the color chart, such that a single device-dependent RGB value for each color patch is output from step 302. Collectively, these per-patch device-dependent RGB values are referred to as an array of device-dependent RGB values, represented as RGB 303.
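A minimal sketch of the per-patch averaging at step 302 follows, assuming the chart location has already been resolved into one axis-aligned pixel rectangle per patch. PatchRect, averagePatch, and averageAllPatches are hypothetical names; a real chart may also need perspective correction and a margin inside each patch before averaging.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct RGB8 { std::uint8_t r, g, b; };  // one 8-bit pixel
struct RGBd { double r, g, b; };        // averaged value, still on the 0..255 scale

// Hypothetical patch location: a rectangle in pixel coordinates, e.g. derived
// from the chart location and orientation specified by the operator.
struct PatchRect { int x0, y0, width, height; };

// Average all pixels inside one patch rectangle of a row-major image.
RGBd averagePatch(const std::vector<RGB8>& pixels, int imageWidth, const PatchRect& p) {
    assert(p.width > 0 && p.height > 0);  // an empty patch has no average
    double sr = 0.0, sg = 0.0, sb = 0.0;
    for (int y = p.y0; y < p.y0 + p.height; ++y) {
        for (int x = p.x0; x < p.x0 + p.width; ++x) {
            const RGB8& px = pixels[static_cast<std::size_t>(y) * imageWidth + x];
            sr += px.r;
            sg += px.g;
            sb += px.b;
        }
    }
    const double n = static_cast<double>(p.width) * p.height;
    return {sr / n, sg / n, sb / n};
}

// One averaged RGB value per patch: the array called RGB 303 in the text.
std::vector<RGBd> averageAllPatches(const std::vector<RGB8>& pixels, int imageWidth,
                                    const std::vector<PatchRect>& patches) {
    std::vector<RGBd> out;
    out.reserve(patches.size());
    for (const PatchRect& p : patches) out.push_back(averagePatch(pixels, imageWidth, p));
    return out;
}
```

Averaging many pixels per patch also suppresses sensor noise, which is why a single value per patch is a reasonable input for the later fitting steps.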

At step 304, the array of RGB values, RGB 303, is associated with device-independent data XYZ 301 previously measured for the color chart used for the test image. The association of the device-dependent data RGB 303 with the corresponding device-independent data XYZ 301 may be stored as a two-dimensional array, represented as "RGB-XYZ" 305 in FIG. 3. The array may include two columns, one for the device-dependent data and one for the device-independent data, where each row contains associated data for a single color patch in the color chart. For example, the following software code could be used to define such an array:

RGB rgbChartValues[i]

Lab labMeasuredValue[i], where 0 <= i < numPatches

rgbChartValues represents RGB 303 in FIG. 3. labMeasuredValue represents the previously measured device-independent data XYZ 301 associated with the color chart, converted and stored as Lab data. numPatches represents the number of patches in the color chart. One skilled in the art will appreciate that any manner of representing the association of the device-dependent data RGB 303 and the device-independent data XYZ 301 may be used.
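One way to hold the RGB-XYZ 305 association in C++ is a vector of rows, each pairing a patch's averaged device RGB with its measured value. The RGB and Lab classes below are hypothetical stand-ins that only mirror the r(), g(), b(), a(), and b() accessors used in this description; L() is an assumed accessor for lightness, and the associate function is illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical device-dependent value with r()/g()/b() accessors.
class RGB {
public:
    RGB(double r = 0, double g = 0, double b = 0) : r_(r), g_(g), b_(b) {}
    double r() const { return r_; }
    double g() const { return g_; }
    double b() const { return b_; }
private:
    double r_, g_, b_;
};

// Hypothetical measured CIELAB value with L()/a()/b() accessors.
class Lab {
public:
    Lab(double L = 0, double a = 0, double b = 0) : L_(L), a_(a), b_(b) {}
    double L() const { return L_; }
    double a() const { return a_; }
    double b() const { return b_; }
private:
    double L_, a_, b_;
};

// One row of RGB-XYZ 305: device value and measured value for the same patch.
struct PatchPair {
    RGB rgb;
    Lab lab;
};

// Pair the i-th device value with the i-th measured value (same patch order assumed).
std::vector<PatchPair> associate(const std::vector<RGB>& rgbChartValues,
                                 const std::vector<Lab>& labMeasuredValue) {
    assert(rgbChartValues.size() == labMeasuredValue.size());  // one row per patch
    std::vector<PatchPair> rows;
    rows.reserve(rgbChartValues.size());
    for (std::size_t i = 0; i < rgbChartValues.size(); ++i)
        rows.push_back({rgbChartValues[i], labMeasuredValue[i]});
    return rows;
}
```

Keeping both values in one row, rather than in two parallel arrays, makes it harder for later filtering or sorting steps to break the patch-to-patch correspondence.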

Although the device-independent data XYZ 301 is illustrated as being in the CIEXYZ device-independent color space, any device-independent color space may be used. Further, although FIGS. 3, 4, and 5 illustrate device-independent data as XYZ data, particular steps in FIGS. 3, 4, and 5 may operate on the device-independent data in other color spaces. Accordingly, conversions of XYZ data into other color spaces are implied by these steps and are not illustrated in the figures, because such conversions are well known to one of ordinary skill in the art.
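The XYZ-to-LAB conversions treated above as well known are the standard CIE formulas. The sketch below assumes a D50 reference white taken from the ICC profile connection space (X = 96.42, Y = 100, Z = 82.49); the function names are hypothetical, and yFromL, which inverts the L* formula, is one way an L* value such as maxL could later be turned back into a Y value.

```cpp
#include <cassert>
#include <cmath>

struct XYZ { double X, Y, Z; };        // Y = 100 for the reference white
struct LabValue { double L, a, b; };

// Assumed reference white: ICC D50 PCS white scaled so that Y = 100.
constexpr double kXn = 96.42, kYn = 100.0, kZn = 82.49;
constexpr double kDelta = 6.0 / 29.0;

// CIE companding function f(t): cube root above the threshold, linear below it.
double labF(double t) {
    return t > kDelta * kDelta * kDelta ? std::cbrt(t)
                                        : t / (3.0 * kDelta * kDelta) + 4.0 / 29.0;
}

// Inverse of labF.
double labFInv(double t) {
    return t > kDelta ? t * t * t : 3.0 * kDelta * kDelta * (t - 4.0 / 29.0);
}

LabValue xyzToLab(const XYZ& c) {
    assert(c.X >= 0.0 && c.Y >= 0.0 && c.Z >= 0.0);  // physical tristimulus values
    const double fx = labF(c.X / kXn), fy = labF(c.Y / kYn), fz = labF(c.Z / kZn);
    return {116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)};
}

// Recover Y from L* alone (L* depends only on Y).
double yFromL(double L) {
    return kYn * labFInv((L + 16.0) / 116.0);
}
```

Because L* depends only on Y, a gray patch's lightness is enough to recover its Y without knowing X and Z.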

At step 306, the gray patches in the device-independent data XYZ 301 are identified. The gray patches may be identified using the device-independent data XYZ stored in the array RGB-XYZ 305. The identified gray patches output at step 306 are represented as GrayPatches 307. RGB-XYZ 305 may pass through step 306 to step 308 unmodified. Although not required, it may be advantageous to identify gray patches in the LAB color space as opposed to the XYZ color space. In particular, in the LAB color space, gray colors are associated with the coordinates "a" and "b" approximately equal to zero, thereby providing for a simple calculation. Accordingly, the device-independent data XYZ in the array RGB-XYZ 305 may be converted to LAB prior to performing step 306. The following software code illustrates a way of identifying the GrayPatches 307, as well as the benefits of operating in the LAB color space.

grayIndex = 0;
for (i = 0; i < numPatches; i++)
{
    // a patch is gray when both |a*| and |b*| fall within GrayRange
    if (fabs(labMeasuredValue[i].a()) < GrayRange && fabs(labMeasuredValue[i].b()) < GrayRange) {
        LabGrayValue.lab[grayIndex] = labMeasuredValue[i];
        rgbGrayValues[grayIndex] = rgbChartValues[i];
        grayIndex++;
    }
}
numGrayPatches = grayIndex;

labMeasuredValue[i].a() is the "a" value (in LAB) of the current patch i. Similarly, labMeasuredValue[i].b() is the "b" value (in LAB) of the current patch i. GrayRange is the threshold used to determine whether a color is gray. According to an embodiment of the present invention, GrayRange is 5 delta E. LabGrayValue.lab[], when output, contains the array of gray patches 307 in the CIELAB device-independent color space. rgbGrayValues[] represents the device-dependent gray values extracted from the RGB image of the color chart. numGrayPatches is the total number of identified gray patches.

According to an embodiment of the present invention, GrayPatches 307 includes both the device-independent measured data array (referred to as LabGrayValue[] in the software code, above) and the corresponding device-dependent gray values extracted from the RGB image of the chart (referred to as rgbGrayValues[] in the software code, above). It may be convenient to sort the arrays LabGrayValue[i] and rgbGrayValues[i] so that index i = 0, ..., numGrayPatches - 1 runs from lightest to darkest, unless it is known in advance that the chart data is already sorted in this manner.
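A runnable sketch of step 306 follows, assuming plain structs instead of the Lab and RGB classes of the listing above. It tests the absolute values of a* and b* against GrayRange (5 in the embodiment described) and returns the gray patches sorted from lightest to darkest; GrayPatch and findGrayPatches are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct RGBd { double r, g, b; };
struct LabValue { double L, a, b; };

// A gray patch: its measured Lab value plus the device RGB extracted from the chart image.
struct GrayPatch {
    LabValue lab;
    RGBd rgb;
};

// Keep patches with |a*| < grayRange and |b*| < grayRange, then sort lightest -> darkest.
std::vector<GrayPatch> findGrayPatches(const std::vector<LabValue>& labMeasured,
                                       const std::vector<RGBd>& rgbChart,
                                       double grayRange = 5.0) {
    assert(labMeasured.size() == rgbChart.size());  // one RGB value per measured patch
    std::vector<GrayPatch> grays;
    for (std::size_t i = 0; i < labMeasured.size(); ++i) {
        if (std::fabs(labMeasured[i].a) < grayRange && std::fabs(labMeasured[i].b) < grayRange)
            grays.push_back({labMeasured[i], rgbChart[i]});
    }
    // stable_sort keeps chart order among patches of equal lightness
    std::stable_sort(grays.begin(), grays.end(),
                     [](const GrayPatch& x, const GrayPatch& y) { return x.lab.L > y.lab.L; });
    return grays;
}
```

Storing the Lab value and the RGB value in the same element means the sort cannot separate a gray patch from its device values, which parallel arrays would require sorting in lockstep to guarantee.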

At step 308, the brightest within-range gray patch in GrayPatches 307 is identified and output as BrightestPatch 309. According to an embodiment of the present invention, the brightest within-range gray patch is the gray patch (in device-independent coordinates) that has associated device-dependent data of R<255, G<255, and B<255. Stated differently, the brightest gray patch having associated non-overexposed device-dependent data is identified. The device-independent data and the device-dependent data necessary for performing step 308 may be obtained from the array RGB-XYZ 305. GrayPatches 307 and RGB-XYZ 305 may be passed through step 308 unchanged to step 310. The following software code illustrates a way to accomplish step 308, which assumes that the input GrayPatches 307, as well as the array RGB-XYZ 305, have been sorted in order of intensity (coordinate "L" in LAB), from brightest to darkest:

int maxGrayPatchIndex = 0;
float maxL = 0.0;
for (i = 0; i < numGrayPatches; i++) {
    // L() is assumed to return the L* (lightness) component of a Lab value
    if (LabGrayValue.lab[i].L() > maxL && rgbGrayValues[i].r() < 255 && rgbGrayValues[i].g() < 255 && rgbGrayValues[i].b() < 255)
    {
        maxL = LabGrayValue.lab[i].L();
        maxGrayPatchIndex = i;
    }
}

maxGrayPatchIndex, upon completion of the above code, is the location in the array labGrayValue.lab[] (GrayPatches 307) that stores the brightest in-range gray patch. maxL, upon completion of the above code, is the L* value of the brightest in-range gray patch from labGrayValue.lab[] (GrayPatches 307). maxL is subsequently converted to Ymeasured, discussed below. rgbChartValues[] represents the device-dependent data from the array RGB-XYZ 305, such that rgbChartValues[i].r() is the red component, rgbChartValues[i].g() is the green component, and rgbChartValues[i].b() is the blue component.
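Step 308 can be sketched as a single pass that skips any gray patch with a clipped channel. This version uses the same hypothetical GrayPatch layout as a lightest-to-darkest gray list but does not depend on the sorting, and it returns no index at all when every gray patch is clipped, a case the listing above leaves at index 0. The function name brightestInRange and the clipLevel parameter are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

struct RGBd { double r, g, b; };
struct LabValue { double L, a, b; };
struct GrayPatch { LabValue lab; RGBd rgb; };

// Index of the brightest gray patch whose device RGB is not clipped
// (all channels below clipLevel), or std::nullopt if every gray patch is clipped.
std::optional<std::size_t> brightestInRange(const std::vector<GrayPatch>& grays,
                                            double clipLevel = 255.0) {
    assert(clipLevel > 0.0);
    std::optional<std::size_t> best;
    double maxL = 0.0;
    for (std::size_t i = 0; i < grays.size(); ++i) {
        const RGBd& c = grays[i].rgb;
        const bool inRange = c.r < clipLevel && c.g < clipLevel && c.b < clipLevel;
        if (inRange && (!best || grays[i].lab.L > maxL)) {
            maxL = grays[i].lab.L;
            best = i;
        }
    }
    return best;
}
```

A caller can treat an empty result as a signal that the chart is too over-exposed to calibrate from, rather than silently using patch 0.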

At steps 310 and 312, in order to compensate for the effects of over-illumination or under-illumination of the color chart, the device-independent data of the color chart (stored in the array RGB-XYZ 305) is scaled by an illumination-correction factor αILLCORR. According to an embodiment of the present invention, αILLCORR is generated by comparing the illumination of the BrightestPatch 309 in device-independent coordinates with the illumination of the same patch as indicated by the associated device-dependent coordinates. In other words, the measured illumination of the BrightestPatch 309 is compared against the illumination of the same patch as recorded by the digital-image input device in the RGB data 303. According to an embodiment of the present invention, αILLCORR is generated as follows.

$$\alpha_{\mathrm{ILLCORR}} = \frac{Y_{\mathrm{estimated}}}{Y_{\mathrm{measured}}}$$

Yestimated is an estimated illumination of the RGB data 303 for the brightest in-range gray patch. Yestimated may be represented as follows.

$$Y_{\mathrm{estimated}} = f_Y(R_{i_0}, G_{i_0}, B_{i_0})$$

where the index "i0" refers to maxGrayPatchIndex. Ymeasured is the illumination (Y value) of the XYZ data 301 for the patch identified as BrightestPatch 309.

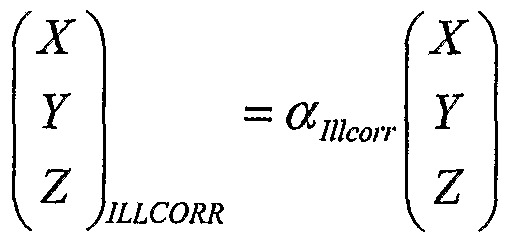

At step 312, according to an embodiment of the present invention, illumination-corrected device-independent data is generated by linearly scaling the device-independent data XYZ 301 by αILLCORR. Such illumination-corrected device-independent data is represented in FIG. 3 as XYZILLCORR. The one-to-one color-patch association between XYZILLCORR and the RGB data 303 is represented as RGB-XYZILLCORR 313. According to an embodiment of the present invention, XYZILLCORR is generated by multiplying all values of the XYZ data 301 by αILLCORR, as illustrated below.

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}_{\mathrm{ILLCORR}} = \alpha_{\mathrm{ILLCORR}} \begin{bmatrix} X \\ Y \\ Z \end{bmatrix}$$

The vector on the right represents the XYZ data 301 and the vector on the left represents XYZILLCORR. This correction ensures that the brightest in-range gray patch continues to have a value that corresponds to the original RGB value for that patch, assuming an initial camera gamma of 2.2. According to this embodiment of the present invention, it is assumed that values of gamma and RGB chromaticities have been initialized to the default digital-image input device profile settings, e.g., sRGB or Adobe RGB, known in the art.
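Steps 310 and 312 reduce to a ratio and a uniform scale. The sketch below assumes that fY is the default profile mentioned above, modeled here as sRGB primaries adapted to D50 (luminance weights 0.2225, 0.7169, 0.0606) with a pure 2.2 power curve, and that measured Y is on a 0 to 100 scale; estimateY, illuminationCorrection, and applyIlluminationCorrection are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct RGBd { double r, g, b; };  // device values on the 0..255 scale
struct XYZ { double X, Y, Z; };   // Y = 100 for the reference white

// Assumed default input profile standing in for f_Y: sRGB primaries
// (Bradford-adapted to D50) with a pure 2.2 power-law tone curve.
double estimateY(const RGBd& c, double gamma = 2.2) {
    auto lin = [gamma](double v) { return std::pow(v / 255.0, gamma); };
    return 100.0 * (0.2225 * lin(c.r) + 0.7169 * lin(c.g) + 0.0606 * lin(c.b));
}

// alpha_ILLCORR = Y_estimated / Y_measured for the brightest in-range gray patch.
double illuminationCorrection(const RGBd& brightestRgb, double yMeasured) {
    assert(yMeasured > 0.0);  // a zero or negative measured Y cannot be a divisor
    return estimateY(brightestRgb) / yMeasured;
}

// Step 312: scale every measured XYZ value linearly by alpha.
std::vector<XYZ> applyIlluminationCorrection(const std::vector<XYZ>& xyz, double alpha) {
    std::vector<XYZ> out;
    out.reserve(xyz.size());
    for (const XYZ& v : xyz) out.push_back({alpha * v.X, alpha * v.Y, alpha * v.Z});
    return out;
}
```

Because every measured value is multiplied by the same factor, the chromaticities of the chart are unchanged; only its overall level moves so that the brightest unclipped gray agrees with what the camera recorded for it.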

At step 314, an optimized list of parameters 315 used to generate final device-independent coordinates of the digital-image input device profile is calculated. FIG. 4, discussed below, illustrates an inventive method for generating such parameters, according to an embodiment of the present invention. However, any method known in the art for calculating these parameters may be used. The parameter list 315 is used at step 316, together with RGB 303 and XYZ 301, to generate a profile 317 for the digital-image input device 202.

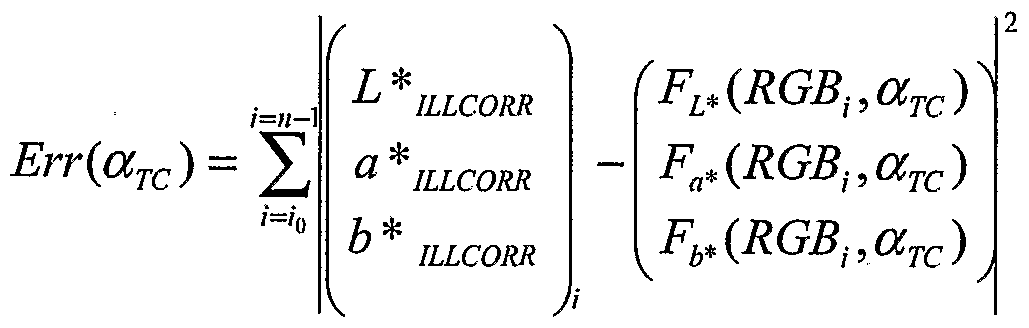

FIG. 4 illustrates an exploded view of the substeps that may be performed at step 314, according to an embodiment of the present invention. The input to step 402 is the RGB-XYZILLCORR array 313 of associated device-dependent data RGB and illumination-corrected device-independent data XYZILLCORR. At step 402, optimal parameters αTC 403 that describe the one-dimensional tone curves R, G, and B of the device-dependent data RGB (in RGB-XYZILLCORR) are generated. RGB-XYZILLCORR 313 is passed unmodified through step 402 to step 404.
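The tone-curve fit of step 402 can be illustrated on a single channel, using the tone-curve form fR(R, RMax, Rbias, γR, βc) given for the red channel in this section. This sketch makes several simplifying assumptions: the contrast function fc is modeled as a hypothetical cubic that matches the behavior described for βc (identity at βc = 0, darker below 0.5 and brighter above it for βc > 0), only γ is fitted while RMax, Rbias, and βc stay fixed, the error is measured on normalized values rather than in CIELAB, and golden-section search is swapped in for the Newton or Powell methods named in the text. All function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical contrast function: fixes 0, 0.5 and 1, lowers values below 0.5
// and raises values above it when beta > 0; monotone for -4 < beta < 2.
double contrastFn(double x, double beta) {
    return x - beta * x * (x - 0.5) * (x - 1.0);
}

// Tone-curve form: maxOut * (1 - bias) * f_c(x)^gamma + bias, x normalized to [0, 1].
double toneCurve(double x, double maxOut, double bias, double gamma, double beta) {
    return maxOut * (1.0 - bias) * std::pow(std::clamp(contrastFn(x, beta), 0.0, 1.0), gamma) + bias;
}

// Golden-section search for the minimum of a unimodal function on [lo, hi].
double goldenSectionMin(const std::function<double(double)>& f, double lo, double hi,
                        double tol = 1e-8) {
    assert(lo < hi);
    const double invPhi = (std::sqrt(5.0) - 1.0) / 2.0;
    double a = lo, b = hi;
    double c = b - invPhi * (b - a), d = a + invPhi * (b - a);
    double valC = f(c), valD = f(d);
    while (b - a > tol) {
        if (valC < valD) { b = d; d = c; valD = valC; c = b - invPhi * (b - a); valC = f(c); }
        else             { a = c; c = d; valC = valD; d = a + invPhi * (b - a); valD = f(d); }
    }
    return 0.5 * (a + b);
}

// Least-squares fit of gamma alone for one channel (both inputs normalized to [0, 1]).
double fitGamma(const std::vector<double>& deviceValues, const std::vector<double>& measured,
                double maxOut = 1.0, double bias = 0.0, double beta = 0.0) {
    assert(deviceValues.size() == measured.size());
    auto err = [&](double gamma) {
        double sum = 0.0;
        for (std::size_t i = 0; i < deviceValues.size(); ++i) {
            const double r = toneCurve(deviceValues[i], maxOut, bias, gamma, beta) - measured[i];
            sum += r * r;
        }
        return sum;
    };
    return goldenSectionMin(err, 0.5, 4.0);
}
```

A full implementation would vary all of RMax, Rbias, γR, and βc together and compare predictions in CIELAB; a multi-dimensional minimizer such as Powell's method is the natural replacement for the one-dimensional search used here.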

The process of generating optimized α_TC 403 may be performed, according to an embodiment of the present invention, by selecting initial parameters for α_TC and applying them to the device-dependent data RGB to generate predicted device-independent coordinate values. Then, an error between the predicted device-independent coordinate values and the measured device-independent coordinate values (XYZ_ILLCORR) is calculated. The process is repeated with newly selected parameters for α_TC until the calculated error is minimized. The parameters for α_TC 403 that produce the minimum error are output at step 402 as the optimal parameters α_TC 403. The process of step 402, according to an embodiment of the present invention, may be represented as follows.

$$\mathrm{Err}(\alpha_{TC}) = \sum_{i=i_0}^{n-1} \left\| (L^*a^*b^*)_{ILLCORR,i} - F_{LAB}(RGB_i, \alpha_{TC}) \right\|^2$$

The index "i" refers to the "n" gray patches on the color chart, where the index "i_0" refers to maxGrayPatchIndex, which is the location in the array labGrayValue.lab[] (GrayPatches 307) that stores the brightest in-range gray patch. The (L*a*b*)_ILLCORR vector represents the LAB coordinate values corresponding to XYZ_ILLCORR. The F_LAB(RGB_i, α_TC) vector represents the function used to calculate the predicted device-independent coordinates corresponding to the device-dependent data RGB 303. Any function known in the art may be used for F_LAB(RGB_i, α_TC). Using well-known methods, such as Newton's method or Powell's method, the parameters α_TC may be varied automatically so as to minimize the least-squares error function Err(α_TC). The resulting values of α_TC may, in some cases, be optimal values for use in predicting device-independent coordinates from RGB 303. According to an embodiment of the present invention, the following expression may be used as F_LAB(R_i, α_TC) for the red tone channel.

$$f_R(R, R_{Max}, R_{bias}, \gamma_R, \beta_c) = R_{Max}\,(1.0 - R_{bias})\,[f_c(R, \beta_c)]^{\gamma_R} + R_{bias}$$

f_R is a function that defines the one-dimensional response of the red tone channel, such that R_Max, R_bias, γ_R, and β_c represent α_TC in the case where the digital-image input device is a digital camera. The above expression may be used similarly for the green and blue tone channels. Here β_c (default 0) is the parameter of the contrast function f_c(x, β_c), defined by:

$$f_c(x, \beta_c) = x + \beta_c\left(0.5 + 0.5\,(2(x - 0.5))^3 - x\right)$$

Note that for β_c = 0, the contrast function f_c(x) = x. R_Max is a linear scaling factor and indicates the maximum red color recorded by the digital-image input device. R_bias, a black bias offset, indicates the darkest red color recorded by the digital-image input device 202. γ_R is the gamma, known in the art, of the digital-image input device associated with the red color. Alternatively, γ_R may equal γ_G and γ_B, all of which may equal the overall gamma of the device 202. β_c is a parameter for adjusting contrast, i.e., a means for reducing the output of the function f_c() for values 0.0 < x < 0.5 and increasing the output of f_c() for 0.5 < x < 1.0. Many mathematical functions can perform such an adjustment, e.g., third-order polynomials, splines, etc. The concept of performing such an adjustment is well known in the art (cf. the "auto-contrast" feature in applications such as Adobe® Photoshop®), although it is usually applied as an aesthetic improvement to an image rather than as a way to characterize a device with regard to color data.
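The tone-channel model above can be sketched as two small C++ functions restating f_c and f_R with the channel value normalized to [0, 1]. The function names, and the early return for non-positive contrast-adjusted values, are assumptions added for this sketch; the patent's own evalGammaModel() listing instead forces the result to 0 for negative inputs.

```cpp
#include <cmath>

// f_c(x, beta_c) = x + beta_c * (0.5 + 0.5 * (2(x - 0.5))^3 - x).
// The correction term is zero at x = 0, 0.5 and 1, so those points never move.
double contrastFn(double x, double betaC)
{
    double corr = 0.5 + 0.5 * std::pow(2.0 * (x - 0.5), 3.0) - x;
    return x + betaC * corr;
}

// f = maxVal * (1 - bias) * f_c(x, beta_c)^gamma + bias, with x in [0, 1].
// The same form serves the R, G and B channels, each with its own parameters.
double toneChannel(double x, double maxVal, double bias, double gamma, double betaC)
{
    double v = contrastFn(x, betaC);
    if (v <= 0.0)
        return bias;  // sketch-only guard: avoids pow() of a negative base
    return maxVal * (1.0 - bias) * std::pow(v, gamma) + bias;
}
```

Because the cubic correction vanishes at x = 0, 0.5, and 1, changing β_c reshapes the curve between those points without moving them; a positive β_c lifts values below 0.5 (for example, contrastFn(0.25, 0.5) is 0.34375), which lowers contrast.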

The following software code illustrates how step 402 may be performed, according to an embodiment of the present invention. It is assumed in this software code that all GrayPatches represented in RGB-XYZ_ILLCORR 313 are sorted in order from brightest to darkest.

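```cpp
// Vary parameterList (alpha_TC) to minimize the gray-patch error below.
MinimizeError(sumSquareGrayDeltaE, parameterList, NumParameters);

// where sumSquareGrayDeltaE() is defined as:
double sumSquareGrayDeltaE()
{
    double sumSq = 0.0;  // accumulator must start at zero
    for (int i = brightestValidGray; i < nGray; i++)
    {
        auto xyzPred = evaluateModel(grayRGB[i]);   // model prediction from camera RGB
        auto labPred = mColMetric->XYZToLab(xyzPred);
        auto labMeas = grayLab[i];                   // measured chart value
        auto xyzMeas = mColMetric->LabToXYZ(labMeas);
        xyzMeas *= Y_IlluminationCorr;               // apply the illumination correction
        labMeas = mColMetric->XYZToLab(xyzMeas);
        double errSq = distanceSquaredLab(labPred, labMeas);
        sumSq += errSq;
    }
    return sumSq;
}
```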

parameterList represents α_TC 403 output by step 402, and NumParameters represents the number of parameters in α_TC 403. brightestValidGray represents the location of the brightest in-range gray patch in XYZ_ILLCORR. nGray represents the total number of gray patches. xyzPred is a predicted device-independent value corresponding to a device-dependent value in RGB 303. evaluateModel() is a function that generates the predicted device-independent value xyzPred, and is akin to F_LAB(RGB_i, α_TC), discussed above. labPred represents the LAB version of xyzPred, obtained by converting xyzPred to LAB space with the function mColMetric->XYZToLab(). labMeas represents the measured LAB device-independent values of the current gray patch (grayLab[]), and xyzMeas represents the XYZ version of labMeas. xyzMeas is then multiplied by Y_IlluminationCorr, which represents α_ILLCORR, and converted back to LAB. errSq is an individual squared error and sumSq is the total squared error.
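The minimizer MinimizeError() is not specified further here. As a stand-in for Newton's or Powell's method, the sketch below uses a compass (pattern) search, a different and slower derivative-free technique chosen only because it is short. The name compassSearch and its default step, tolerance, and iteration limit are hypothetical.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Compass (pattern) search, a simple derivative-free stand-in for Powell's
// method: try +/- step along each parameter, keep any move that lowers err(),
// and halve the step when no move helps, until the step drops below tol.
std::vector<double> compassSearch(
    const std::function<double(const std::vector<double>&)>& err,
    std::vector<double> params, double step = 0.1, double tol = 1e-8,
    int maxIter = 100000)
{
    double best = err(params);
    for (int iter = 0; iter < maxIter && step > tol; ++iter) {
        bool improved = false;
        for (std::size_t k = 0; k < params.size(); ++k) {
            for (double dir : {+1.0, -1.0}) {
                std::vector<double> trial = params;
                trial[k] += dir * step;
                double e = err(trial);
                if (e < best) {  // accept the first improving move on this axis
                    best = e;
                    params = trial;
                    improved = true;
                    break;
                }
            }
        }
        if (!improved)
            step *= 0.5;  // refine the search around the current point
    }
    return params;
}
```

In step 402, err would be a function such as sumSquareGrayDeltaE() evaluated for a candidate α_TC vector, and the returned vector would play the role of parameterList.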

The values of α_TC 403 should be adjusted to minimize the sum of the calculated squared errors (sumSq, for example). The mathematical model (evaluateModel(), for example) used to predict the device-independent coordinates associated with RGB (in RGB-XYZ_ILLCORR) may be any model known in the art. However, it is important that the selected model be smooth (i.e., have low second-order derivatives and produce no unwanted visual banding or artifacts when the profile is used to characterize images) and use as few parameters as possible, while still achieving accurate predictions. The following software code illustrates a mathematical model (evalGammaModel(), used as F_LAB(RGB_i, α_TC), discussed above) that predicts device-independent coordinates for the device-dependent data RGB, according to an embodiment of the present invention.

```cpp
#include <cmath>

// Tone curve for one channel: contrast adjustment, then gamma with black bias.
double evalGammaModel(double *RGBMax, double *gamma, double *blackBias,
                      double filmContrastCorr, int rgbIndex, const double& cVal)
{
    double val, corrVal, result = 0.0;

    // Cubic contrast correction; zero at cVal = 0, 0.5 and 1.0.
    corrVal = 0.5 + 0.5 * pow(2.0 * (cVal - 0.5), 3.0) - cVal;
    val = cVal + filmContrastCorr * corrVal;

    if (rgbIndex != iFixedMaxRGB)
        result = RGBMax[rgbIndex] * (1.0 - blackBias[rgbIndex])
                 * pow(val, gamma[rgbIndex]) + blackBias[rgbIndex];
    else  // channel whose maximum is not scaled by RGBMax
        result = (1.0 - blackBias[rgbIndex]) * pow(val, gamma[rgbIndex])
                 + blackBias[rgbIndex];

    if (cVal < 0.0)
        result = 0.0;
    return result;
}
```

In this example, α_TC 403 includes RGBMax, gamma, blackBias, and filmContrastCorr. RGBMax corresponds to the linear scaling factor R_Max, described above, as well as its G_Max and B_Max counterparts. gamma corresponds to γ_R, described above, as well as its γ_G and γ_B counterparts. blackBias corresponds to R_bias, described above, as well as its G_bias and B_bias counterparts. filmContrastCorr corresponds to β_c. Note that rgbIndex indicates which channel is being calculated (rgbIndex = 0, 1, 2 => R, G, B) and cVal is the input color value (R, G, or B) that evalGammaModel() converts to the corresponding output color value. The value "result" is calculated to obtain R, G, B (out) for input index rgbIndex = 0, 1, 2. The resulting RGB vector is multiplied by the RGB->XYZ matrix described below to obtain the calculated values of XYZ. The above calculation of the sum squared error is repeated, methodically adjusting the parameters α_TC 403 in the RGB->XYZ calculation, until the sum squared error is minimized.

Note that the above tone curve calculation begins with a contrast adjustment applied to the RGB input values. This contrast adjustment is a third-order polynomial constrained so that no correction is applied to the values RGB = 0, 0.50, or 1.0 (assuming RGB normalized to 1.0). The correction is multiplied by filmContrastCorr (default 0.0), which can be positive or negative (decreasing or increasing contrast, respectively), and added to the RGB value. The modified values are used to calculate a standard gamma function with a black bias offset (defined by blackBias[]), a linear scaling factor for each of the R, G, and B channels (RGBMax[]), and a value of gamma for each RGB channel (gamma[]). In this example, the total number of adjustable parameters α_TC 403 is ten: RGBMax, gamma, and blackBias for each of R, G, and B, plus a single value of filmContrastCorr shared by all three channels. Note that if the color chart has 6 gray patches, there are 18 data points to fit (6 patches times 3 values for L*, a*, b* = 18). If the first two patches are overexposed, the number of data points is reduced to 4 × 3 = 12. One can optionally assume that gamma is identical for all three RGB channels, which reduces the number of parameters α_TC 403 to 8. This approach is helpful for lower-quality images with noisy RGB data extracted from the color chart.

Having determined optimal parameters α_TC 403 at step 402, step 404 generates optimal parameters α_C 405 that describe the RGB chromaticities of the device profile. α_TC 403 and RGB-XYZ_ILLCORR 313 are passed unmodified through step 404 to step 406. In step 404, the standard Matrix/TRC formalism, described below, may be used to calculate the values of XYZ for R, G, and B for all the color chart values using the tone response calculated in step 402. The error function is similar to that described above with respect to step 402. However, all data points may be used, and any squared error added to the sum may be reduced by a predefined weighting factor if the patch's value of R, G, or B equals 0 or 255, implying that the image data was clipped and that the actual R, G, or B value is not known accurately.
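A sketch of the step-404 error with clipped patches down-weighted. The clip test (any 8-bit channel equal to 0 or 255) follows the text; treating "reduced by a predefined weighting factor" as multiplication by a caller-supplied factor below 1, and the type and function names, are assumptions.

```cpp
#include <array>
#include <cstddef>
#include <vector>

using Lab = std::array<double, 3>;   // L*, a*, b* of one chart patch
using RGB8 = std::array<int, 3>;     // 8-bit camera RGB of one chart patch

// A patch is treated as clipped if any channel sits at 0 or 255.
bool isClipped(const RGB8& rgb)
{
    for (int c : rgb)
        if (c == 0 || c == 255)
            return true;
    return false;
}

// Sum of squared Lab distances over all chart patches; squared errors of
// clipped patches are multiplied by clipWeight (assumed to be below 1).
double weightedChartError(const std::vector<RGB8>& rgb, const std::vector<Lab>& labPred,
                          const std::vector<Lab>& labMeas, double clipWeight)
{
    double sum = 0.0;
    for (std::size_t i = 0; i < rgb.size(); ++i) {
        double d2 = 0.0;
        for (int k = 0; k < 3; ++k) {
            double d = labPred[i][k] - labMeas[i][k];
            d2 += d * d;
        }
        sum += isClipped(rgb[i]) ? clipWeight * d2 : d2;
    }
    return sum;
}
```

The same minimizer used for the tone curves can then vary the (x, y) chromaticities α_C to reduce this weighted sum.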

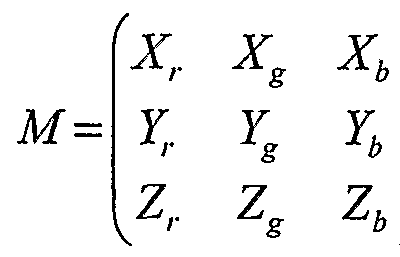

In step 404, the adjusted parameter list α_C 405 consists of the (x, y) chromaticity values, known in the art, for R, G, and B. According to an embodiment of the present invention, the matrix portion of the XYZ function of RGB is described mathematically as follows. Note that it is assumed that the device-dependent data RGB (in RGB-XYZ_ILLCORR 313) has already been processed by the RGB tone curve functions defined above with respect to step 402. In other words, the new values of RGB are in linear RGB space, so they can easily be converted to XYZ using a simple matrix:

$$\begin{pmatrix} X \\ Y \\ Z \end{pmatrix} = M \begin{pmatrix} R \\ G \\ B \end{pmatrix}$$

The M matrix is uniquely determined by the (x,y) chromaticities of the RGB channels and the white point for the system, i.e., the (x,y) chromaticities resulting from the value of the XYZ at RGB=max.

$$K(x_r, y_r, z_r, x_g, y_g, z_g, x_b, y_b, z_b) = \begin{pmatrix} x_r/y_r & x_g/y_g & x_b/y_b \\ 1 & 1 & 1 \\ z_r/y_r & z_g/y_g & z_b/y_b \end{pmatrix}, \qquad z = 1 - x - y \text{ for each channel}$$
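The text states that M is uniquely determined by the RGB chromaticities and the white point but does not spell out the construction. The sketch below uses the standard approach, assumed here rather than taken from the patent: each column of K is scaled by a factor S_j chosen so that M maps RGB = (1, 1, 1) to the white point with Y = 1, solving K S = XYZ_white by Cramer's rule. The function names are hypothetical.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major: m[row][col]

double det3(const Mat3& m)
{
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

// prim[j] = (x, y) of primary j (0 = R, 1 = G, 2 = B); white = (x, y) of the white point.
Mat3 rgbToXyzMatrix(const std::array<std::array<double, 2>, 3>& prim,
                    const std::array<double, 2>& white)
{
    // K: column j is (x/y, 1, z/y) for primary j, with z = 1 - x - y.
    Mat3 K{};
    for (int j = 0; j < 3; ++j) {
        double x = prim[j][0], y = prim[j][1], z = 1.0 - x - y;
        K[0][j] = x / y;
        K[1][j] = 1.0;
        K[2][j] = z / y;
    }
    // XYZ of the white point, normalized to Y = 1.
    Vec3 W{white[0] / white[1], 1.0, (1.0 - white[0] - white[1]) / white[1]};

    // Solve K * S = W for the per-channel scale factors S (Cramer's rule).
    double d = det3(K);
    Vec3 S{};
    for (int j = 0; j < 3; ++j) {
        Mat3 Kj = K;
        for (int r = 0; r < 3; ++r)
            Kj[r][j] = W[r];
        S[j] = det3(Kj) / d;
    }

    // M = K * diag(S): each column of K is scaled by its S[j].
    Mat3 M{};
    for (int r = 0; r < 3; ++r)
        for (int j = 0; j < 3; ++j)
            M[r][j] = K[r][j] * S[j];
    return M;
}
```

As a sanity check, sRGB primaries with a D65 white point reproduce the familiar luminance row of about 0.2126, 0.7152, 0.0722. For chart data measured under D50, as the text below suggests is typical, the D50 white point would be passed instead.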

In this case, the formalism for defining the error function is identical to the process of defining the tone curve described above with respect to step 402, with the differences that a) all color chart values can be used (as opposed to only the gray patches) and b) the parameters α_C are the values of x and y for the R, G, and B channels. The white point of the model (x_wp, y_wp) should correspond to the white point used for the measured chart data, typically D50, known in the art. At step 406, which is optional, optimal parameters α_SA 407 are generated that describe selective adjustments to the resulting device-independent color space values XYZ of the device profile. Parameters α_SA 407 may be optimized using the error minimization routines described above with respect to steps 402 and/or 404. According to an embodiment of the present invention, the selective adjustments performed by parameters α_SA 407 at step 406 are made to one or more colors such that there is substantially no risk of introducing artifacts or unwanted corruption into images converted or rendered with profiles modified in this way. Although any method may be used at step 406, an inventive process for performing selective adjustments to a device-independent color space as part of step 406 is described below with respect to FIG. 5. The embodiment illustrated in FIG. 5 may be used to generate parameters α_SA 407 that comprise a list of corrections ΔXYZ_i for i = 0, ..., 5 corresponding to R, G, B, C, M, Y. Such parameters may be optimized using the error minimization processes described above such that an adjusted device-independent color space XYZ_adj 505 is generated that minimizes the sum squared error.

At step 408, also an optional step, a final optimization of some or all of the parameters in α_TC 403, α_C 405, and α_SA 407 may be performed. These parameters may be optimized individually by repeating steps 402 and/or 404 and/or 406, and/or most of them may be optimized as a group using the error minimization techniques mentioned above, such as Powell's method. For images that are not overexposed, the total number of data points is 24 × 3 = 72. The total number of adjustable parameters is 10 for the tone curves (α_TC 403), 6 for the chromaticities (α_C 405), and 18 for the selective XYZ adjustments (α_SA 407). Since the chromaticity values and the selective XYZ adjustments are strongly correlated, it may be beneficial to perform a global optimization of the tone curves and the selective XYZ adjustments simultaneously, leaving the chromaticities fixed. The output of step 408 may include adjusted α_C parameters α_C' 409, adjusted α_TC parameters α_TC' 410, and adjusted α_SA parameters α_SA' 411. α_C' 409, α_TC' 410, and α_SA' 411 are output from step 408 and included in ParameterList 315. RGB-XYZ_ILLCORR 313 need not be output from step 408; instead, α_ILLCORR 311 may be output from step 408 and added to the ParameterList 315 used to generate the profile 317 at step 316. In this regard, it should be noted that step 312 could be performed as part of step 316. In this scenario, α_ILLCORR 311 and RGB-XYZ 305, output from step 310, would be passed directly as input to step 314 instead of RGB-XYZ_ILLCORR 313. Whenever illumination-corrected device-independent data is needed in step 314, α_ILLCORR 311 could be applied to XYZ 301 (stored in RGB-XYZ 305) to generate XYZ_ILLCORR.

The above method of FIGS. 3 and 4 has been shown to provide good results on images containing a color checker chart, known in the art, where the chart is either overexposed or less well illuminated than the rest of the scene. In all cases, the extreme colors and the overall white balance are improved. If desired, the measured chart values XYZ 301 can be modified to achieve the equivalent of a "look profile", i.e., images can be made more saturated or otherwise modified.

Turning now to FIG. 5, a method 500, according to an embodiment of the present invention, for performing selective adjustments to a device-independent color space is illustrated. The method 500 ensures an excellent visual match between an image displayed for viewing and the same image printed out in hard-copy form. The method 500 is useful for characterizing image data coming from input devices, rendering images to display devices, and converting images to output devices. The method 500 also is useful for improving the accuracy of a color profile for a digital-image input device, such as a digital camera or a scanner. Accordingly, the method 500 may be used as part of step 406 in FIG. 4. However, one skilled in the art will appreciate that the method 500 may be used completely independently of the method 300 illustrated with FIGS. 3 and 4.

The method 500 applies corrections in a linear manner in all or selected areas of device-independent data derived from device-dependent data with substantially no possibility of introducing artifacts. Selective adjustments may be made to the device-independent data to improve color in selected color regions, such as in the vicinity of red ("R"), green ("G"), blue ("B"), cyan ("C"), magenta ("M"), or yellow ("Y"), without degrading color in the unmodified color areas.

Inputs used by the method 500 include device-dependent data "RGB" 501, device-independent data "XYZ" 502, other parameters 503, and changes to selected regions of color "ΔXYZ_i" 504 in device-independent coordinates, where "i" represents a local region of color. When the method 500 is used as part of step 406 in FIG. 4, ΔXYZ_i 504 represents α_SA 407. The device-dependent data RGB 501 and the device-independent data XYZ 502 correspond, such that each piece of device-dependent data in RGB 501 corresponds to a piece of device-independent data in XYZ 502. For example, if the method 500 is used as step 406 in FIG. 4, the RGB data in RGB-XYZ_ILLCORR 313 may be input as RGB data 501, and the associated device-independent data XYZ_ILLCORR in RGB-XYZ_ILLCORR 313 may be input as XYZ data 502.

When attempting to improve a profile for RGB device-dependent data, such as RGB data 501, it is desirable to perform the correction in a device-independent color space that is similar to the output of the mathematical expression characterizing the device associated with the RGB data. A common mathematical expression characterizing RGB-data-generating devices, such as digital cameras and scanners, is a modified version of the Matrix/TRC formalism described above, for example, in regard to step 402 in FIG. 4. This Matrix/TRC formalism inherently converts RGB to XYZ. Hence, for most devices, XYZ tristimulus space is the preferred space in which to perform corrections. Therefore, the device-independent data 502 is shown as XYZ data, and the output of the method 500 is adjusted XYZ coordinates, illustrated as XYZ_adj 505. However, linear corrections similar to those described with reference to the method 500 may be performed, with some degree of success, in visually uniform color spaces such as CIELAB and the various CIECAM models, such as CIECAM 98, known in the art.

ΔXYZ_i 504 represents changes (e.g., to saturation, hue, and brightness) to selected regions "i" of color in the device-independent color space. According to an embodiment of the present invention, 0 ≤ i ≤ 5, such that the values zero through five are associated with the colors red, green, blue, cyan, magenta, and yellow, respectively (referred to as R_0, G_0, B_0, C_0, M_0, and Y_0, respectively). One skilled in the art will appreciate, however, that different color regions may be used. The value R_0 is defined as R = R_max, G = 0, B = 0, C = 0, M = 0, and Y = 0. The values of G_0, B_0, C_0, M_0, and Y_0 follow similarly. ΔXYZ_i 504 may be generated manually or automatically. An example of manually generating ΔXYZ_i 504 is having an operator view a displayed image (such as on a CRT) or a hard-copy printout of an image generated from the device-independent data XYZ 502 and then make changes to selected colors of the displayed or printed image. For instance, if red in a displayed image looks too bright, the operator may specify a negative brightness value for ΔXYZ_0 504 to reduce the brightness of the red in the image. Alternatively, ΔXYZ_i data 504 may be generated automatically through the use of an error minimization routine, such as that described with respect to steps 402 and/or 404 above. For example, if the method 500 is used as part of step 406 in FIG. 4, initial values for ΔXYZ_i data 504 may be automatically generated. Then, the method 500 may be performed iteratively with different values of ΔXYZ_i data 504 in an effort to minimize the sum squared error between the resulting device-independent color space XYZ_adj 505 and a predicted device-independent color space. The values of ΔXYZ_i data 504 that result in the least sum squared error may be output at step 406 as α_SA 407.

Step 506 of method 500 takes as input RGB 501 and other parameters 503 and generates linear RGB values (RGB)' 507. Alternatively, the method 500 may take the linear values (RGB)' directly as input, without the need for the other parameters 503 and step 506. Although any procedure for generating (RGB)' 507 may be used, (RGB)' 507 typically is calculated to be the corrected values of RGB 501 that achieve optimized predictions of XYZ 502 when multiplied by the appropriate RGB->XYZ matrix, as described in the optimization processes above. In the case of a CRT, (RGB)' may be calculated by a form of gamma curve associated with the CRT, e.g., R^γ. In this case, the other parameters 503 are the parameters describing the gamma curve. More complex functions than a simple gamma curve may be required for greater accuracy or for LCD systems. In the case where the method 500 is used as step 406 in FIG. 4, the other parameters 503 may include one or more of the parameters in α_TC 403. For example, if the device-independent coordinates of a digital camera profile are to be adjusted, the camera's tone channel expressions f_R, f_G, f_B (and the associated α_TC 403 parameters, as described with respect to step 402) may be used at step 506 to generate (RGB)' 507.

At step 508, correction factors β 509 are generated for each color i corresponding to ΔXYZ_i 504. According to an embodiment of the present invention, the correction factors β 509 are calculated using piecewise linear correction functions, such that the maximum of each piecewise linear correction function occurs at a boundary condition of the corresponding device-dependent color space (RGB 501), and each piecewise linear correction function is linearly reduced to zero or approximately zero as values in the corresponding device-dependent color space approach either a different boundary condition or the neutral axis. Stated differently, the correction factors β 509 are calculated, according to an embodiment of the present invention, such that (a) the piecewise linear correction function for a correction factor β and a color i is at a maximum when the current device-dependent color being evaluated is at a maximum distance from all other colors capable of being associated with i and from the neutral axis, and (b) the piecewise linear correction function for a correction factor β and a color i scales linearly to zero as the device-dependent colors being evaluated approach another color capable of being associated with i or the neutral axis. To illustrate what is meant by "associated," consider a color at the boundary condition R_bG_bB_b. Assume, for example, that a boundary condition is defined as each of R_b, G_b, and B_b being either 0 or 1.0. An "adjacent" or "associated" color is one in which exactly one of the components is switched from 0 to 1 or from 1 to 0 relative to the current color. Thus, the colors adjacent to R_bG_bB_b = (1,0,0) are (1,1,0) and (1,0,1), and the colors adjacent to R_bG_bB_b = (1,1,0) are (1,0,0) and (0,1,0).
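The notion of adjacent ("associated") boundary colors can be made concrete with a short C++ sketch. Excluding the neutral-axis corners (0,0,0) and (1,1,1) is an inference from the examples above, which list only chromatic neighbors; the function name adjacentColors is hypothetical.

```cpp
#include <array>
#include <vector>

using Corner = std::array<int, 3>;  // boundary RGB color, each component 0 or 1

// Colors "associated" with c: flip exactly one component, then skip the
// neutral-axis corners (0,0,0) and (1,1,1), which are not hue regions.
std::vector<Corner> adjacentColors(const Corner& c)
{
    std::vector<Corner> out;
    for (int k = 0; k < 3; ++k) {
        Corner n = c;
        n[k] = 1 - n[k];
        bool neutral = (n[0] == n[1] && n[1] == n[2]);
        if (!neutral)
            out.push_back(n);
    }
    return out;
}
```

For red (1,0,0) this yields yellow (1,1,0) and magenta (1,0,1); for yellow (1,1,0) it yields green (0,1,0) and red (1,0,0), matching the examples in the text.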

According to an embodiment of the present invention, β_i 509 for i = 0 to i = 5 (i.e., for R_0, G_0, B_0, C_0, M_0, and Y_0) for the values ΔXYZ_i 504 are calculated as follows. According to this embodiment, R, G, and B are assumed to be normalized to 1.0 so that β is between zero and one, with maximum correction occurring at β = 1.

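$$RGB_{min} = \min(R, G, B)$$

$$\beta_0(R,G,B) = \begin{cases} R - \max(G, B) & \text{if } R > G \text{ and } R > B \quad (i = 0,\ R) \\ 0 & \text{otherwise} \end{cases}$$

$$\beta_1(R,G,B) = \begin{cases} G - \max(R, B) & \text{if } G > R \text{ and } G > B \quad (i = 1,\ G) \\ 0 & \text{otherwise} \end{cases}$$

$$\beta_2(R,G,B) = \begin{cases} B - \max(R, G) & \text{if } B > G \text{ and } B > R \quad (i = 2,\ B) \\ 0 & \text{otherwise} \end{cases}$$

$$\beta_3(R,G,B) = \begin{cases} \min(G, B) - RGB_{min} & \text{if } R < G \text{ and } R < B \quad (i = 3,\ C) \\ 0 & \text{otherwise} \end{cases}$$

$$\beta_4(R,G,B) = \begin{cases} \min(R, B) - RGB_{min} & \text{if } G < R \text{ and } G < B \quad (i = 4,\ M) \\ 0 & \text{otherwise} \end{cases}$$

$$\beta_5(R,G,B) = \begin{cases} \min(R, G) - RGB_{min} & \text{if } B < G \text{ and } B < R \quad (i = 5,\ Y) \\ 0 & \text{otherwise} \end{cases}$$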

For example, when i = 0 (associated with red), β_0 is at a maximum when the current device-dependent color being evaluated (from (RGB)' 507) is at a maximum distance from all other colors capable of being associated with i (that is, green, blue, cyan, magenta, and yellow) and from the neutral axis. In other words, β_0 is at a maximum when the green and blue components of the current device-dependent color being evaluated (from (RGB)' 507) are zero. The piecewise linear correction function for β_0 linearly scales to zero as the device-dependent colors being evaluated (from (RGB)' 507) approach green, blue, cyan, magenta, yellow, or the neutral axis. In other words, the linear correction function associated with β_0 linearly scales to zero as the green and blue components of the current device-dependent color being evaluated (from (RGB)' 507) increase.

At step 510, each of the individual device-independent changes ΔXYZ_i 504 is scaled by its corresponding correction factor β_i 509. The total adjustment to be made to the device-independent data XYZ 502, ΔXYZ_total 511, is calculated by summing the individually corrected device-independent changes ΔXYZ_i 504. According to an embodiment of the present invention, ΔXYZ_total 511 is calculated as follows.

$$\Delta XYZ_{total}(R,G,B) = \sum_{i=0}^{5} \beta_i(R,G,B)\,\Delta XYZ_i$$

At step 512, the device-independent data XYZ 502 is adjusted by ΔXYZ_total 511, thereby generating XYZ_adj 505. According to an embodiment of the present invention, XYZ_adj 505 is calculated as follows.

$$XYZ_{adj} = XYZ + \Delta XYZ_{total}$$
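Below is a standalone C++ restatement of the correction factors β_i, the total ΔXYZ_total, and XYZ_adj = XYZ + ΔXYZ_total, independent of the Camera_Model class used in the patent's listing. The function names beta and adjustXYZ are hypothetical, and the RGB input is assumed to be the linear (RGB)' values normalized to [0, 1].

```cpp
#include <algorithm>
#include <array>

using Vec3 = std::array<double, 3>;

// beta_i(R, G, B) for i = 0..5 (R, G, B, C, M, Y), linear RGB in [0, 1].
double beta(int i, double r, double g, double b)
{
    double mn = std::min({r, g, b});
    switch (i) {
    case 0: return (r > g && r > b) ? r - std::max(g, b) : 0.0;
    case 1: return (g > r && g > b) ? g - std::max(r, b) : 0.0;
    case 2: return (b > g && b > r) ? b - std::max(r, g) : 0.0;
    case 3: return (r < g && r < b) ? std::min(g, b) - mn : 0.0;
    case 4: return (g < r && g < b) ? std::min(r, b) - mn : 0.0;
    case 5: return (b < g && b < r) ? std::min(r, g) - mn : 0.0;
    default: return 0.0;
    }
}

// XYZ_adj = XYZ + sum over i of beta_i(R, G, B) * dXYZ[i].
Vec3 adjustXYZ(const Vec3& xyz, const Vec3& rgbLinear, const std::array<Vec3, 6>& dXYZ)
{
    Vec3 out = xyz;
    for (int i = 0; i < 6; ++i) {
        double w = beta(i, rgbLinear[0], rgbLinear[1], rgbLinear[2]);
        for (int k = 0; k < 3; ++k)
            out[k] += w * dXYZ[i][k];
    }
    return out;
}
```

Because every β_i is zero when R = G = B, gray inputs pass through unchanged regardless of the ΔXYZ_i values, which is how the method protects gray balance.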

The following software code illustrates an implementation of the method 500, according to an embodiment of the present invention.

```cpp
#include <algorithm>

#define nRGBCMY 6

// Accumulate the weighted corrections for R, G, B, C, M, Y (i = 0..5).
int iColor;
deltaXYZ = XYZ(0.0, 0.0, 0.0);
for (iColor = 0; iColor < nRGBCMY; iColor++)
{
    corrFactor = calcCorrFactor(rgbLinear, iColor);   // beta_i at this linear RGB
    deltaX = corrFactor * mDeltaX[iColor];
    deltaY = corrFactor * mDeltaY[iColor];
    deltaZ = corrFactor * mDeltaZ[iColor];
    deltaXYZtemp.x(deltaX);
    deltaXYZtemp.y(deltaY);
    deltaXYZtemp.z(deltaZ);
    deltaXYZ += deltaXYZtemp;
}
xyzResult += deltaXYZ;   // XYZ_adj = XYZ + deltaXYZ_total

// Piecewise-linear correction factor beta_i for color corrColor.
double Camera_Model::calcCorrFactor(const vRGB<double>& rgbVal, int corrColor)
{
    double corrFactor = 0.0;
    double minRGB = std::min(rgbVal.r(), std::min(rgbVal.g(), rgbVal.b()));

    if (corrColor == iRED)
    {
        if (rgbVal.r() > rgbVal.g() && rgbVal.r() > rgbVal.b())
            corrFactor = rgbVal.r() - std::max(rgbVal.g(), rgbVal.b());
    }
    else if (corrColor == iGRE)
    {
        if (rgbVal.g() > rgbVal.r() && rgbVal.g() > rgbVal.b())
            corrFactor = rgbVal.g() - std::max(rgbVal.r(), rgbVal.b());
    }
    else if (corrColor == iBLU)
    {
        if (rgbVal.b() > rgbVal.g() && rgbVal.b() > rgbVal.r())
            corrFactor = rgbVal.b() - std::max(rgbVal.r(), rgbVal.g());
    }
    else if (corrColor == iCYAN)
    {
        if (rgbVal.g() > rgbVal.r() && rgbVal.b() > rgbVal.r())
            corrFactor = std::min(rgbVal.g(), rgbVal.b()) - minRGB;
    }
    else if (corrColor == iMAG)
    {
        if (rgbVal.r() > rgbVal.g() && rgbVal.b() > rgbVal.g())
            corrFactor = std::min(rgbVal.r(), rgbVal.b()) - minRGB;
    }
    else  // remaining color index: yellow
    {
        if (rgbVal.r() > rgbVal.b() && rgbVal.g() > rgbVal.b())
            corrFactor = std::min(rgbVal.r(), rgbVal.g()) - minRGB;
    }
    return corrFactor;
}
```

The method 500 may be used to improve the process of soft proofing on a display. To determine the desired corrections for such improved soft proofing, the magnitude and direction of the corrections can be estimated by correcting display profile A to a display profile A' in the desired direction of improvement and then converting colors from the corrected display profile A' to display profile A. For example, if an operator wants to shift the hue of yellow in a displayed image toward red, a value of Δ(XYZ)_5 (yellow) that equates to a hue shift of 3 delta E toward red for saturated yellow can be used. If the operator confirms that the desired result has occurred, the RGB profile of the display may then be corrected in an inverse manner to ensure that images rendered to the screen will have the desired red shift in the yellows. This may be accomplished to reasonable accuracy by adjusting display profile A with a value of −Δ(XYZ)_5 (yellow), which is the negative of the above correction. Similarly, the method 500 may be used to perform device-independent color space correction. For instance, an original RGB profile (profile A1) may be modified according to the method 500 to generate a new profile (profile A2). XYZ corrections may then be performed by following the standard color management path XYZ->RGB(profile A1)->RGB(profile A2)->(XYZ)'. Combining multiple conversions into a single conversion is well known in the art and is called concatenation of profiles. The resulting XYZ->(XYZ)' transform is known as an abstract profile.

Another application for the method 500, as discussed above with reference to FIGS. 3 and 4, is profiling digital-image input devices such as scanners and digital cameras. The method 500 may permit adequate correction to be applied to a generic RGB profile for a digital camera or scanner to preserve gray balance and good overall image integrity, while also improving the predictive conversion of RGB->XYZ or L*a*b* for a specific object in an image having intense color. The values of the corrections to hue angle, saturation, and luminance for RGBCMY may be calculated automatically by an error minimizing routine. Unlike other techniques, the method 500 uses piecewise linear functions that may be used to correct both small color discrepancies, on the order of 2-3 delta E, and large color discrepancies, on the order of 20-30 delta E.

PARTS LIST

101 object

102 input device

103 device-dependent data RGB

104 color profile

105 device-independent data XYZ

106 output device color profile

107 device-independent data CMYK

108 output device

109 representation of object 101

200 system

202 input device

204 computer system

206 data storage system

208 operator

210 optional devices/computers

300 method

301 XYZ data

302 step

303 RGB data

304 step

305 RGB-XYZ

306 step

307 GrayPatches

308 step

309 Brightest Patch

310 step

311 α_ILLCORR

312 step

313 RGB-XYZ_ILLCORR

314 step

315 parameters

316 step

317 profile

402 step

403 parameters α_TC

404 step

405 parameters α_C

406 step

407 parameters α_SA

408 step

409 adjusted α_C parameters α_C'

410 adjusted α_TC parameters α_TC'

411 adjusted α_SA parameters α_SA'

500 method

501 device-dependent data "RGB"

502 device-independent data "XYZ"

503 other parameters

504 changes to selected regions of color "ΔXYZ_i"

505 adjusted device-independent data XYZ_adj

506 step

507 adjusted device-dependent data (RGB)'

508 step

509 correction factors β

510 step

511 total changes to device-independent data ΔXYZ_total

512 step

Claims

1. A method comprising profiling a digital-image input device based at least on an image of a color chart and an estimated illumination of the color chart, wherein the profiling step generates a profile independent of a relative exposure of the color chart with respect to other portions of the image outside of the color chart.

2. The method of claim 1, further comprising determining device-dependent coordinate values for the image of the color chart.

3. The method of claim 2, wherein the estimated illumination of the color chart comprises an illumination estimated from the device-dependent coordinate values.

4. The method of claim 2, wherein profiling the digital-image input device comprises: linearly scaling device-independent coordinate values of the color chart based at least on a scaling factor; and calculating first parameters describing tone curves of the digital-image input device based at least on the linearly scaled device-independent coordinate values.

5. The method of claim 4, wherein the scaling is performed using a scaling factor comprising a ratio of the estimated illumination of the color chart and a measured illumination of the color chart.

6. The method of claim 5, wherein linearly scaling the device- independent coordinate values comprises linearly increasing the device- independent coordinate values when the estimated illumination is high compared to the measured illumination.

7. The method of claim 5, wherein linearly scaling the device- independent coordinate values comprises linearly decreasing the device- independent coordinate values when the estimated illumination is low compared to the measured illumination.

8. The method of Claim 4, wherein profiling the digital-image input device further comprises calculating second parameters describing chromaticities of the profile based at least on the linearly scaled device- independent coordinate values.

9. The method of claim 1, wherein the color chart comprises a plurality of color patches, and the estimated illumination of the color chart comprises an estimated illumination of a brightest gray patch of the color chart.

10. The method of claim 1, wherein the image comprises an 8-bit image.

11. A method for generating a profile for a digital-image input device, the method comprising: capturing an image of a color chart with the digital-image input device, wherein the color chart comprises a plurality of color patches; determining device-dependent coordinate values of each of the plurality of color patches of the image; estimating an illumination of a brightest gray patch of the plurality of color patches based at least on the device-dependent coordinate values; linearly scaling device-independent coordinate values for each of the plurality of color patches based at least on the estimated illumination; generating the profile for the digital-image input device based at least on the linearly scaled device-independent coordinate values or a derivative thereof; and storing the profile in a computer-accessible memory.

12. The method of claim 11, wherein the profile is generated independently of a relative exposure of the color chart with respect to other portions of the image outside of the color chart.

13. The method of claim 11, further comprising identifying gray patches within the color chart based at least on the device-independent coordinate values for each of the plurality of color patches.

14. The method of claim 13, wherein identifying the gray patches comprises: comparing the device-independent coordinate values for each of the plurality of color patches with a predefined gray value; and assigning each of the plurality of color patches as a gray patch when the device-independent coordinate values are less than the gray value.

15. The method of claim 14, wherein the gray value is approximately equal to 5 delta E.
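Claims 14 and 15 leave open exactly which quantity is compared with the gray value. A minimal sketch under one assumed reading: the patches are given in CIELAB, and a patch counts as gray when its chroma sqrt(a*^2 + b*^2), which equals the CIE76 ΔE*ab between the patch and a neutral of the same L*, is below 5. The function name and chart values are hypothetical:

```python
import math
from typing import Dict, List, Tuple

Lab = Tuple[float, float, float]

GRAY_THRESHOLD_DELTA_E = 5.0  # "approximately 5 delta E" (claim 15)


def find_gray_patches(patches: Dict[str, Lab],
                      threshold: float = GRAY_THRESHOLD_DELTA_E) -> List[str]:
    """Names of patches whose distance from the neutral axis is below threshold.

    The distance is the CIELAB chroma sqrt(a*^2 + b*^2), i.e. the CIE76 Delta E
    between the patch and a neutral gray of the same lightness L*.
    """
    return [name for name, (_l, a, b) in patches.items()
            if math.hypot(a, b) < threshold]


chart = {
    "white": (95.0, -0.5, 1.2),
    "neutral 5": (50.0, 0.3, -0.8),
    "red": (40.0, 55.0, 30.0),
    "warm gray": (60.0, 3.0, 4.5),  # chroma about 5.4, just outside the threshold
}
print(find_gray_patches(chart))  # ['white', 'neutral 5']
```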

16. The method of claim 11, wherein linearly scaling the device-independent coordinate values comprises: calculating a scaling factor based at least on a ratio of the estimated illumination of the brightest gray patch and a measured illumination of the brightest gray patch; and applying the scaling factor to the device-independent coordinate values.

17. The method of claim 16, wherein the measured illumination is based at least on device-independent coordinate values of the brightest gray patch.
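Claims 16 and 17 can be sketched as follows, assuming the brightest gray patch is the identified gray patch with the largest measured luminance Y, and that the estimated illumination (derived from the device-dependent values by a method the claims do not spell out) is supplied as an input. All names and measurement values are hypothetical:

```python
from typing import Dict, List, Tuple

XYZ = Tuple[float, float, float]


def brightest_gray(measured: Dict[str, XYZ], gray_names: List[str]) -> str:
    """Name of the gray patch with the largest measured luminance Y."""
    return max(gray_names, key=lambda name: measured[name][1])


def scaling_factor(estimated_y: float, measured: Dict[str, XYZ],
                   gray_names: List[str]) -> float:
    """Ratio of estimated to measured illumination of the brightest gray patch."""
    # Measured illumination taken from the patch's device-independent Y value.
    measured_y = measured[brightest_gray(measured, gray_names)][1]
    if measured_y <= 0:
        raise ValueError("measured luminance must be positive")
    return estimated_y / measured_y


measured = {  # hypothetical chart measurements (CIEXYZ, Y of white = 0.9)
    "white": (0.8642, 0.9000, 0.7424),
    "neutral 5": (0.1824, 0.1900, 0.1567),
    "red": (0.2100, 0.1200, 0.0500),
}
grays = ["white", "neutral 5"]
k = scaling_factor(estimated_y=0.72, measured=measured, gray_names=grays)
print(brightest_gray(measured, grays), round(k, 3))  # white 0.8
```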

18. The method of claim 11, further comprising the steps of: calculating first parameters describing tone curves of the digital-image input device based at least on the linearly scaled device-independent coordinate values; and selectively adjusting the linearly scaled device-independent coordinate values or a derivative thereof using a piecewise linear correction function, wherein the selectively adjusted device-independent coordinate values are a derivative of the linearly scaled device-independent coordinate values, and wherein the generating step also generates the profile based at least on the first parameters.

19. The method of claim 18, wherein calculating the first parameters comprises applying device-dependent coordinate parameters to the device-dependent coordinate values to predict device-independent coordinate values.

20. The method of claim 19, further comprising adjusting the device-dependent coordinate parameters to minimize an error between predicted device-independent coordinate values from the device-dependent coordinate values and the linearly scaled device-independent coordinate values for each of the gray patches of the color chart.

21. The method of claim 19, wherein the device-dependent coordinate parameters comprise a black bias offset, a linear scaling factor, and a gamma for each of the device-dependent coordinates.

22. The method of claim 19, wherein the device-dependent coordinate parameters comprise a black bias offset, a linear scaling factor for each of the device-dependent coordinates, and a single gamma for the device-dependent coordinates.
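Claims 19 to 21 describe predicting device-independent values from device-dependent ones with a black bias offset, a linear scaling factor and a gamma, and fitting those parameters to the gray patches. The claims do not give the functional form, so this sketch assumes Y = gain * max(d - offset, 0)^gamma per channel (d normalized to [0, 1]) and fits it with a coarse grid search over offset and gamma, solving for the gain in closed form. Because R, G and B are roughly equal on gray patches, each channel is fitted against the scaled luminance Y; that pairing is also an assumption. The claim-22 variant would share one gamma across the three channels.

```python
from typing import List, Tuple


def predict(d: float, offset: float, gain: float, gamma: float) -> float:
    """Assumed tone model: Y = gain * max(d - offset, 0) ** gamma, with d in [0, 1]."""
    return gain * max(d - offset, 0.0) ** gamma


def fit_channel(codes: List[float], targets: List[float]) -> Tuple[float, float, float]:
    """Return (offset, gain, gamma) minimizing the squared error over the gray patches.

    Offset and gamma are searched on a coarse grid; for each pair the best gain
    is the closed-form least-squares slope through the origin.
    """
    best = (float("inf"), 0.0, 1.0, 1.0)  # (error, offset, gain, gamma)
    for i in range(11):  # offset 0.00 .. 0.10 in steps of 0.01
        offset = i / 100
        for j in range(100, 301):  # gamma 1.00 .. 3.00 in steps of 0.01
            gamma = j / 100
            basis = [max(d - offset, 0.0) ** gamma for d in codes]
            denom = sum(f * f for f in basis)
            if denom == 0.0:
                continue  # every code value is at or below the offset
            gain = sum(f * t for f, t in zip(basis, targets)) / denom
            err = sum((gain * f - t) ** 2 for f, t in zip(basis, targets))
            if err < best[0]:
                best = (err, offset, gain, gamma)
    return best[1], best[2], best[3]


# Synthetic gray-patch data generated from known parameters (hypothetical values).
codes = [0.10, 0.25, 0.40, 0.55, 0.70, 0.85, 1.00]
targets = [predict(d, offset=0.02, gain=0.95, gamma=2.2) for d in codes]
offset, gain, gamma = fit_channel(codes, targets)
print(f"offset={offset:.2f} gain={gain:.3f} gamma={gamma:.2f}")  # offset=0.02 gain=0.950 gamma=2.20
```

A real fit would more likely use a continuous optimizer; the grid search is used here only to keep the sketch dependency-free.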

23. The method of claim 18, wherein the piecewise linear correction function is defined such that a maximum of the piecewise linear correction function occurs at a boundary condition of a corresponding device-dependent color space and the piecewise linear correction function is linearly reduced to zero or approximately zero as values in the corresponding device-dependent color space approach either a different boundary condition or a neutral axis.
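Claim 23 fixes only the shape of the correction: largest at one boundary of the device-dependent space, falling linearly to zero toward another boundary or the neutral axis. One possible reading, sketched under stated assumptions: distance from the neutral axis is measured as max(RGB) - min(RGB) on normalized RGB, and the correction vector `delta` applied at full weight is a hypothetical input. Neither the distance measure nor the function names come from the patent:

```python
from typing import Tuple

RGB = Tuple[float, float, float]
XYZ = Tuple[float, float, float]


def ramp_weight(x: float, peak_at: float, zero_at: float) -> float:
    """Piecewise linear weight: 1.0 at peak_at, falling linearly to 0.0 at zero_at.

    Values beyond zero_at stay at 0.0; values on the far side of peak_at stay at 1.0.
    """
    if peak_at == zero_at:
        raise ValueError("peak_at and zero_at must differ")
    t = (x - peak_at) / (zero_at - peak_at)
    return 1.0 - min(max(t, 0.0), 1.0)


def apply_correction(rgb: RGB, xyz: XYZ, delta: XYZ) -> XYZ:
    """Add delta to xyz, weighted by how far rgb lies from the neutral axis.

    max(rgb) - min(rgb) is 0 on the neutral axis (R = G = B) and 1 on the
    most saturated boundary of the [0, 1] RGB cube.
    """
    spread = max(rgb) - min(rgb)
    w = ramp_weight(spread, peak_at=1.0, zero_at=0.0)
    return (xyz[0] + w * delta[0], xyz[1] + w * delta[1], xyz[2] + w * delta[2])


delta = (0.01, -0.005, 0.02)  # hypothetical full-strength correction
print(apply_correction((0.5, 0.5, 0.5), (0.2, 0.21, 0.23), delta))  # neutral: weight 0, unchanged
print(apply_correction((1.0, 0.0, 0.0), (0.41, 0.21, 0.02), delta))  # boundary: full correction
```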

24. The method of claim 19, further comprising the step of calculating second parameters describing chromaticities of the profile based at least on the linearly scaled device-independent coordinate values, wherein the generating step also generates the profile based at least on the second parameters.

25. A method for generating a profile for a digital-image input device, the method comprising: capturing an image of a color chart with the digital-image input device, wherein the color chart comprises a plurality of color patches; determining device-dependent coordinate values of each of the plurality of color patches of the image; estimating an illumination of a brightest gray patch of the plurality of color patches based at least on the device-dependent coordinate values; linearly scaling device-independent coordinate values for each of the plurality of color patches based at least on the estimated illumination; calculating first parameters describing tone curves of the digital-image input device based at least on the linearly scaled device-independent coordinate values; calculating second parameters describing chromaticities of the profile based at least on the linearly scaled device-independent coordinate values; selectively adjusting the linearly scaled device-independent coordinate values or a derivative thereof using a piecewise linear correction function; generating the profile for the digital-image input device based at least on the selectively adjusted, linearly scaled device-independent coordinate values, the first parameters, and the second parameters, wherein the profile is generated independently of a relative exposure of the color chart with respect to other portions of the image outside of the color chart; and storing the profile in a computer-accessible memory.

26. A computer-accessible memory comprising instructions that cause a programmable processor to: receive a captured image of a color chart with a digital-image input device, wherein the color chart comprises a plurality of color patches; determine device-dependent coordinate values for each of the plurality of color patches of the captured image; estimate an illumination of a brightest gray patch of the plurality of color patches based at least on the device-dependent coordinate values; linearly scale device-independent coordinate values for each of the plurality of color patches based at least on the estimated illumination; and generate a profile for the digital-image input device based at least on the linearly scaled device-independent coordinate values.

Priority Applications (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2007556160A JP4881325B2 (en) | 2005-02-15 | 2006-02-01 | Profiling digital image input devices |
| EP06720018A EP1849295A1 (en) | 2005-02-15 | 2006-02-01 | Profiling digital-image input devices |

Applications Claiming Priority (4)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US65299805P | 2005-02-15 | 2005-02-15 | |
| US60/652,998 | 2005-02-15 | | |
| US11/303,071 US7733353B2 (en) | 2005-02-15 | 2005-12-14 | System and method for profiling digital-image input devices |
| US11/303,071 | 2005-12-14 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2006088641A1 (en) | 2006-08-24 |

Family ID: 36123872

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| PCT/US2006/003457 WO2006088641A1 (en) | 2005-02-15 | 2006-02-01 | Profiling digital-image input devices |

Country Status (3)

| Country | Link |
|---|---|
| EP (1) | EP1849295A1 (en) |
| JP (1) | JP4881325B2 (en) |
| WO (1) | WO2006088641A1 (en) |


Citations (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US5818960A (en) * | 1991-06-18 | 1998-10-06 | Eastman Kodak Company | Characterization calibration |
| US6108442A (en) * | 1997-06-27 | 2000-08-22 | Minnesota Mining And Manufacturing Company | Characterization of color imaging systems |
| US20020051159A1 (en) * | 2000-10-31 | 2002-05-02 | Fuji Photo Film Co., Ltd. | Color reproduction characteristic correction method |
| US6459436B1 (en) * | 1998-11-11 | 2002-10-01 | Canon Kabushiki Kaisha | Image processing method and apparatus |
| US20030053085A1 (en) * | 1996-11-29 | 2003-03-20 | Fuji Photo Film Co., Ltd. | Method of processing image signal |
| US6654150B1 (en) * | 1999-06-29 | 2003-11-25 | Kodak Polychrome Graphics | Colorimetric characterization of scanned media using spectral scanner and basis spectra models |

Family Cites Families (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JPH0646448A (en) * | 1992-07-21 | 1994-02-18 | Sony Corp | Image processing method/device |
| JP3912813B2 (en) * | 1996-02-23 | 2007-05-09 | Toshiba Corp | Color image processing system |
| JPH11355586A (en) * | 1998-06-04 | 1999-12-24 | Canon Inc | Device and method for processing image |
| JP4497764B2 (en) * | 2001-08-24 | 2010-07-07 | Canon Inc | Color processing apparatus and method, and storage medium |


2006

- 2006-02-01 JP JP2007556160A patent/JP4881325B2/en not_active Expired - Fee Related

- 2006-02-01 WO PCT/US2006/003457 patent/WO2006088641A1/en active Application Filing

- 2006-02-01 EP EP06720018A patent/EP1849295A1/en not_active Withdrawn


Cited By (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP2010525486A (en) * | 2007-04-27 | 2010-07-22 | Hewlett-Packard Development Company, L.P. | Image segmentation and image enhancement |
| US8417033B2 (en) | 2007-04-27 | 2013-04-09 | Hewlett-Packard Development Company, L.P. | Gradient based background segmentation and enhancement of images |

Also Published As

| Publication number | Publication date |
|---|---|
| JP2008533773A (en) | 2008-08-21 |
| EP1849295A1 (en) | 2007-10-31 |
| JP4881325B2 (en) | 2012-02-22 |

Similar Documents

| Publication | Title |
|---|---|
| US7710432B2 (en) | Color correction techniques for correcting color profiles or a device-independent color space |
| US6462835B1 (en) | Imaging system and method |
| EP1014687A2 (en) | Correcting exposure and tone scale of digital images captured by an image capture device |
| US6650771B1 (en) | Color management system incorporating parameter control channels |
| US20010035989A1 (en) | Method, apparatus and recording medium for color correction |
| JP2005210370A (en) | Image processor, photographic device, image processing method, image processing program |
| US7733353B2 (en) | System and method for profiling digital-image input devices |
| EP2551818A1 (en) | Image processing method and device, and image processing program |
| JP2006520557A (en) | Color correction using device-dependent display profiles |
| US7333136B2 (en) | Image processing apparatus for carrying out tone conversion processing and color correction processing using a three-dimensional look-up table |
| WO2007127057A2 (en) | Maintenance of accurate color performance of displays |
| JP2008011293A (en) | Image processing apparatus and method, program, and recording medium |
| KR20100074016A (en) | Method of calibration of a target color reproduction device |
| US8411944B2 (en) | Color processing apparatus and method thereof |
| JPH1169181A (en) | Image processing unit |
| US20210006758A1 (en) | Iimage processing device, image display system, image processing method, and program |
| JP4633806B2 (en) | Color correction techniques for color profiles |
| JP2006048420A (en) | Method for preparing color conversion table, and image processor |
| EP1849295A1 (en) | Profiling digital-image input devices |
| JP4300780B2 (en) | Color conversion coefficient creation method, color conversion coefficient creation apparatus, program, and storage medium |