EP3104357A1 - A device for detecting vehicles on a traffic area - Google Patents

A device for detecting vehicles on a traffic area

- Publication number

- EP3104357A1 (application EP15171194.2A)

- Authority

- EP

- European Patent Office

- Prior art keywords

- cameras

- virtual

- traffic area

- evaluation unit

- images

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Withdrawn

Classifications

-

- G—PHYSICS

- G08—SIGNALLING

- G08G—TRAFFIC CONTROL SYSTEMS

- G08G1/00—Traffic control systems for road vehicles

- G08G1/01—Detecting movement of traffic to be counted or controlled

- G08G1/015—Detecting movement of traffic to be counted or controlled with provision for distinguishing between two or more types of vehicles, e.g. between motor-cars and cycles

-

- G—PHYSICS

- G08—SIGNALLING

- G08G—TRAFFIC CONTROL SYSTEMS

- G08G1/00—Traffic control systems for road vehicles

- G08G1/01—Detecting movement of traffic to be counted or controlled

- G08G1/04—Detecting movement of traffic to be counted or controlled using optical or ultrasonic detectors

-

- G—PHYSICS

- G08—SIGNALLING

- G08G—TRAFFIC CONTROL SYSTEMS

- G08G1/00—Traffic control systems for road vehicles

- G08G1/01—Detecting movement of traffic to be counted or controlled

- G08G1/017—Detecting movement of traffic to be counted or controlled identifying vehicles

- G08G1/0175—Detecting movement of traffic to be counted or controlled identifying vehicles by photographing vehicles, e.g. when violating traffic rules

Definitions

- the present invention relates to a device for detecting vehicles on a traffic area in accordance with the preamble of claim 1.

- a known method of recognizing, tracking and classifying objects is to use laser scanners.

- the laser scanners are mounted above or to the side of the road surface and detect the objects when travelling past.

- a disadvantage of this solution is that the laser scanners only scan the objects in one plane and therefore complete detection of the objects is only possible as long as the vehicles are moving. If the objects do not move evenly through the scanning area, the measurement is impaired. For example, measurement of the object length is either not possible or only possible in an imprecise manner. In particular, this method is not well suited to traffic congestion or stop-and-go situations.

- a further known solution is to detect the objects by means of using stereo cameras.

- the objects are detected from different viewing directions with at least two cameras. From the geometric position of corresponding points in the camera images the position of the points can be calculated in three-dimensional space.

- a disadvantage is that stereo cameras are very expensive instruments and that if only one of the two cameras is soiled or malfunctioning, no calculations from the images of the remaining unsoiled and functioning camera are possible, resulting in a complete failure of the stereo camera system.

- the aim set by the invention is to provide a device for detecting vehicles on a traffic area, which overcomes the disadvantages of the known prior art, which is inexpensive regarding its installation and operation and nevertheless allows a precise and reliable detection of both moving and stationary vehicles on a large traffic area.

- the device for detecting vehicles on a traffic area comprises a plurality of monocular digital cameras being arranged in a distributed manner above the traffic area transversely to the traffic area, wherein the viewing direction, i.e. the optical axis, of each camera is oriented downwards.

- the viewing directions of the cameras lie in one plane, the cameras capture images at synchronized points in time, and an evaluation unit is provided which receives the images captured by the cameras via a wired or wireless data transmission path, wherein the relative positions of the cameras with regard to each other and to the traffic area as well as their viewing directions are known to the evaluation unit.

- the evaluation unit is configured to combine at least two cameras into a virtual camera, wherein the fields of view of these at least two cameras overlap each other in an image-capturing space, by calculating virtual images having virtual fields of view from the images captured by said cameras at the synchronized point in time and from the known positions and viewing directions of the combined cameras.

- the image-capturing space is defined as an area underneath the cameras as far as down to the traffic area.

- This device for detecting vehicles on a traffic area provides the advantage that the calculated virtual cameras can be positioned arbitrarily with regard to their positions and viewing directions. Thereby, so-called "Multilane Free flow" monitoring can be realized, wherein the vehicles are not confined to move along predefined traffic lanes. Rather, the lanes can be altered by traffic guiding personnel, or the vehicles can change between predefined traffic lanes within the traffic area during monitoring operation, or the vehicles can even use arbitrary portions of the traffic area.

- the use of monocular cameras is attractive in terms of price, since many camera manufacturers already offer such cameras for general purposes. Soiling or malfunctioning of a camera will not lead to a breakdown of the system, which allows an almost failsafe system to be provided.

- the evaluation unit is designed for generating a plurality of instances of virtual cameras, i.e. a plurality of virtual cameras, by combining different cameras for each instance of a virtual camera.

- the virtual cameras can be positioned arbitrarily with regard to their positions and viewing directions and said positioning can be changed retroactively.

- Circumferential views of a vehicle can be calculated such that a virtual camera is apparently made to "travel" sideways around the vehicle.

- When traffic lanes on the traffic area are altered (added, reduced or relocated), this can be compensated for by having the software executed in the evaluation unit shift the positions of the virtual cameras monitoring the traffic lanes, without the service personnel having to readjust the actual cameras on location.

- With this embodiment of the invention it is also possible to implement automatic number plate identification. Due to the combination of a plurality of real images into one virtual image, an increased resolution and dynamic range of the virtual image is achieved, compared to the resolution and dynamic range of the images captured by the real cameras. Thereby the automatic number plate identification performs much better than in hitherto used systems, since the border lines and transition patterns between the characters of the number plate and its background are sharper than in the real images.

- the evaluation unit changes the instances of virtual cameras over the course of time by altering the combinations of cameras, with the change in the instances of virtual cameras optionally being carried out as a function of properties of detected vehicles, such as their dimensions, vehicle speed or direction of travel.

- a virtual camera is centered with regard to the longitudinal axis of the vehicle and further virtual cameras are positioned obliquely to the left and/or to the right thereof for counting wheels via a lateral view. This allows for calculating the length of the vehicle and counting its number of axles.

- the fields of view of at least three adjacent cameras overlap each other in an image-capturing space, which is an area underneath the cameras as far as down to the traffic area.

- This embodiment enables multiple overlaying of portions of real images captured by the cameras, thereby tremendously increasing the image quality, particularly the resolution and dynamic range, of the calculated virtual images. This is a particular advantage, when one or some camera(s) has/have been soiled.

- the cameras are mounted to an instrument axis of a portal or a gantry, which instrument axis is oriented transversely across the traffic area.

- the instrument axis can be configured as a physical axis, e.g. a beam or rail of the portal or gantry, or as a geometrical axis, i.e. a line along the portal or gantry.

- one portal or gantry, respectively is sufficient.

- Said portal or gantry does not have to be arranged precisely transversely to the traffic area, since deviations can be compensated by the virtual image calculation software of the evaluation unit. Algorithms therefor are known to skilled persons.

- For the purpose of the invention it is preferred to use monocular digital cameras of the same type. This ensures low purchase prices because of high quantities and simplifies spare-part warehousing. Further, it is sufficient to use monocular digital cameras with an image resolution of at least 640x480 pixels. Hence, almost all commercially available, cheap cameras may be used. Of course, due to the ever-growing image resolutions of cheap commercially available cameras, it is to be expected that cameras with higher resolutions might be employed in the future without raising costs. On the other hand, a low resolution of the image sensors of the cameras provides the advantage of reduced computing and storage effort. As already explained above, superimposing the real images in the course of calculating virtual images enhances the image quality and resolution of the virtual image, which is another argument for using low-resolution cameras.

- the plane on which the viewing directions of the cameras are located is inclined forward or backward onto the traffic area as viewed in the direction of travel of vehicles.

- This embodiment allows the number plates to be captured at a less oblique angle than in vertically captured images of the number plates. Thereby, the characters of the number plate can be recognized more easily and with less computational effort.

- the plane, which is inclined forward or backward onto the traffic area constitutes a first plane, and further monocular digital cameras are arranged in a distributed manner above the traffic area transversely to the traffic area with their viewing directions being oriented downwards, wherein the viewing directions of said further cameras lie in a second plane that is oriented vertically onto the traffic area.

- This embodiment allows for an easy recognition of number plates by using virtual images calculated from real images captured by cameras assigned to the first plane, whereas an exact calculation of the length of vehicles can be carried out by using virtual images calculated from real images captured by cameras assigned to the second plane.

- This embodiment further allows classifying vehicles in respect of various properties on the basis of different viewing angles.

- Handing over a vehicle detected at the first plane to the second plane is used to track the vehicle along the path being travelled.

- hardware data compression units are connected into the data transmission path between the cameras and the evaluation unit, which data compression units compress the image data in a loss-free manner, e.g. JPEG images with Huffman coding, or TIFF (tagged image file format) images, or in a lossy manner, e.g. JPEG images, or Wavelet compression.

- the evaluation unit combines images of cameras which have been captured successively. This embodiment allows for detecting an entire vehicle and its length, as this vehicle moves underneath the cameras in the course of time.

- the detection of vehicles can be enhanced by taking into account their weights.

- this is accomplished by building at least one weighing sensor, preferably a piezoelectric weighing sensor, into the traffic area in an area underneath the cameras.

- the device according to the present invention may further be equipped with wireless transceivers being adapted for tracking vehicles.

- These wireless transceivers may be mounted on the portal or the gantry, and may further be adapted to communicate with tracking devices mounted in the vehicles, such as the so-called "GO boxes".
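The overlap condition stated above (the fields of view of at least three adjacent cameras overlapping in the image-capturing space, from underneath the cameras down to the traffic area) can be checked with simple geometry. The following is a minimal sketch, not the patent's method. It assumes vertically looking cameras, a symmetric lateral opening angle and a flat road; the names `Camera`, `footprint` and `overlap_count` and all numeric values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    x: float               # lateral position along the gantry, in metres (assumed)
    height: float          # mounting height above the traffic area, in metres (assumed)
    half_angle_deg: float  # half of the lateral opening angle of the lens (assumed)


def footprint(cam: Camera, z: float = 0.0) -> tuple[float, float]:
    """Lateral interval seen by a vertically looking camera at height z above the road."""
    half_width = (cam.height - z) * math.tan(math.radians(cam.half_angle_deg))
    return cam.x - half_width, cam.x + half_width


def overlap_count(cams: list[Camera], x: float, z: float = 0.0) -> int:
    """Number of cameras whose field of view contains the point (x, z)."""
    return sum(lo <= x <= hi for lo, hi in (footprint(c, z) for c in cams))


# Hypothetical gantry: 12 cameras, 1 m apart, 6 m above the road, 40 degree half angle.
cams = [Camera(x=float(i), height=6.0, half_angle_deg=40.0) for i in range(12)]

# Sample the traffic area (assumed to span x = 1.0 m to 10.0 m) every 10 cm,
# once on the road surface and once at an assumed vehicle roof height of 4 m.
samples = [k / 10.0 for k in range(10, 101)]
road_overlap = min(overlap_count(cams, x) for x in samples)
roof_overlap = min(overlap_count(cams, x, z=4.0) for x in samples)

print("minimum overlap on the road surface:", road_overlap)
print("minimum overlap at 4 m height:", roof_overlap)
```

The overlap shrinks with height above the road, so under these assumptions the camera spacing would have to be chosen for the tallest expected vehicle rather than for the road surface.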

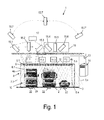

- Fig. 1 shows a device 1 for detecting vehicles 2 on a traffic area 3.

- the device 1 comprises a plurality of monocular digital cameras 5.1-5.n arranged at equal distances from each other above the traffic area 3 transversely to the traffic area 3.

- the monocular digital cameras 5.1-5.n are of the same type and may be selected from general purpose, inexpensive cameras available on the market. There are no specific requirements to the quality and resolution of the cameras. For instance, an image resolution of 640x480 pixels will be sufficient for the purpose of the present invention.

- Although the cameras 5.1-5.n are arranged at equal distances from each other, this is not mandatory, because unequal distances can be compensated for computationally by means of simple geometric transformations.

- the viewing directions 6.1-6.n of all cameras 5.1-5.n are oriented downwards, but not necessarily vertically downwards, as will be explained later.

- the viewing direction 6.1-6.n of each camera 5.1-5.n coincides with the optical axis of the optical system of each camera, i.e. the image sensor, such as a CCD sensor, and a lens system.

- the viewing direction 6.1-6.n defines the center of the field of view 7.1-7.n of each camera 5.1-5.n. It is preferred that the viewing directions 6.1-6.n of all cameras 5.1-5.n are arranged in parallel to each other. This, however, is not an indispensable prerequisite for the present invention.

- If the viewing directions 6.1-6.n of the cameras 5.1-5.n are not arranged in parallel to each other, this can be compensated for computationally by applying simple geometric transformations.

- the cameras 5.1-5.n are mounted on an instrument axis 9 of a cross-beam 4b of a gantry 4, which gantry 4 further comprises two posts 4a connected by the cross-beam 4b, which cross-beam 4b traverses the traffic area 3.

- the instrument axis 9 is oriented transversely across the traffic area 3.

- the fields of view 7.1-7.n of a plurality of adjacent cameras 5.1-5.n overlap each other in an image-capturing space 10, which is an area underneath the cameras as far as down to the traffic area 3.

- the cameras 5.1-5.n are mounted so closely to each other, that even at the margins of the traffic area 3 at least three fields of view overlap each other.

- the image data of the images captured by the cameras 5.1-5.n are sent to data compression units 11.1-11.j via a first data transmission path 12 (wired or wireless).

- the data compression units 11.1-11.j carry out data compression algorithms on the image data, in order to considerably reduce the amount of data.

- the data compression units 11.1-11.j are configured as embedded electronic hardware modules with built-in data compression algorithms.

- the data compression algorithms are configured either as loss-free algorithms, e.g., JPEG with Huffman coding, or TIFF coding, or as lossy algorithms, such as JPEG or Wavelet algorithms.

- the number of cameras 5.1-5.n does not necessarily correspond with the number of data compression units 11.1-11.j. As can be seen in the example of Fig.

- each data compression unit 11.1-11.j cooperates with five cameras 5.1-5.n.

- the compressed image data are sent from the data compression units 11.1-11.j via a second data transmission path 13 (wired or wireless) to an evaluation unit 14.

- the evaluation unit 14 is configured as a computer, typically a server computer, which may be located either in a safe place adjacent to the traffic area 3, or remote from it.

- the evaluation unit 14 is set up with the relative positions of the cameras 5.1-5.n with regard to each other and to the traffic area 3 as well as with the viewing directions 6.1-6.n.

- the evaluation unit 14 is configured to carry out software code that combines the images of at least two cameras 5.1-5.n, wherein the fields of view 7.1-7.n of these at least two cameras 5.1-5.n overlap each other at least partly in the image-capturing space 10. By carrying out said software portions the evaluation unit 14 combines the selected cameras 5.1-5.n into one or more virtual cameras 15.1-15.8. It is essential that the virtual images of the virtual camera 15.1-15.8 are calculated from images captured by the real cameras 5.1-5.n at a synchronized point in time.

- the virtual images are generated by the software code by computing a 2-dimensional feature set, e.g. in accordance with the FAST algorithms well known to those skilled in the art, which FAST algorithms are for instance disclosed in Rosten, Edward, and Tom Drummond. "Machine learning for high-speed corner detection." Computer Vision-ECCV 2006. Springer Berlin Heidelberg, 2006. 430-443.

- these algorithms are usually embedded in standard computer-vision software libraries, such as OpenCV or AMD Framewave.

- This 2-dimensional feature set is then looked-up in the images having been captured at the same synchronized point in time by the other cameras 5.1-5.n combined into the virtual camera 15.1-15.8.

- the position of an object having the 2-dimensional feature set is derived from the other images, thereby allowing putting together all these images.

- Putting together images as explained is known in the art as "Stitching", see https://en.wikipedia.org/wiki/Image_stitching.

- Delimiting the object, such as a vehicle 2 or its number plate 2a, from the background of the images is done by well-known foreground-background separation methods, such as Blob Detection. It is advisable to position "lateral" virtual cameras such that two separated objects in the virtual image do not interfere with each other. This allows deriving the maximum height of a vehicle from side views "captured" by the lateral virtual cameras.

- image portions (pixels) of interest are defined several times. This means that the multiple occurrence of the image portions of interest in multiple images enables combining them several times, thereby enhancing their information content, such as their dynamic range.

- When image portions and objects are retrieved in the images captured at synchronized points in time in the course of calculating virtual images, further pixels are allocated to the retrieved image portions and objects, which improves the "virtual" resolution of the retrieved image portions and objects in the virtual image.

- the borders of characters in the number plates 2a of the vehicles 2 are sharpened, resulting in easier and more precise character recognition.

- the sharpened borders of objects in the virtual images provide further advantages, such as a better recognition of contours of vehicles.

- Fig. 3 depicts three monocular digital cameras 5.7, 5.8, 5.9 having parallel viewing directions 6.7, 6.8, 6.9 and fields of view 7.7, 7.8, 7.9.

- the cameras 5.7, 5.8, 5.9 capture partly overlapping images 27, 28, 29 at synchronized points in time.

- the image data of the images 27, 28, 29 are sent to the evaluation unit 14, which analyzes the images 27, 28, 29 in regard of 2-dimensional feature sets, such as a 2-dimensional feature set corresponding to an object 25 being present in all images 27, 28, 29. Having found this 2-dimensional feature set the images 27, 28, 29 are "stitched" together, as has been explained above.

- the evaluation unit 14 selects a portion of the images 27, 28, 29 as an area of a virtual image 26 and generates the virtual image 26 by means of pixel operations, such as summing up the pixels of the portions of the images 27, 28, 29 that correspond to the area of the virtual image 26.

- the system includes a plurality of cameras which direct multiple simultaneous streams of analog or digital input into an image transformation engine, to process those streams to remove distortion and redundant information, creating a single output image in a cylindrical or spherical perspective. After removing distortion and redundant information the pixels of the image data streams are seamlessly merged, such that a single stream of digital or analog video is outputted from said engine.

- the output stream of digital or analog video is directed to an image clipper, which image clipper is controlled by a pan-tilt-rotation-zoom controller to select a portion of the merged panoramic or panospheric images for displaying said portion for viewing thereof.

- the evaluation unit 14 is configured to generate a plurality of virtual cameras. These virtual cameras may have different virtual viewing directions and different virtual fields of view. For instance, as depicted in Fig. 1 the evaluation unit 14 combines the images captured by the two cameras 5.1 and 5.2 into a virtual camera 15.1 having such a virtual viewing direction that it functions as a first side view camera for a first lane of the traffic area 3. Further, the evaluation unit 14 generates a second virtual camera 15.2 by combining the images captured by four cameras 5.1-5.4. This second virtual camera 15.2 has such a virtual viewing direction that it functions as a top view camera for the first lane of the traffic area 3. The evaluation unit 14 generates also a third virtual camera 15.3 by combining the images captured by three cameras 5.5-5.7.

- This third virtual camera 15.3 has such a virtual viewing direction that it functions as a second side camera for the first lane of the traffic area 3. Thereby, vehicles 2 which are moving along the first lane are surveilled from different virtual viewing directions by the three virtual cameras 15.1-15.3.

- the evaluation unit 14 is further configured to form a group 16 consisting of a plurality of virtual cameras, such as three virtual cameras 15.4, 15.5, 15.6, and to virtually move the group 16 of virtual cameras in respect of the traffic area 3.

- the three virtual cameras 15.4, 15.5, 15.6 are combined from different real cameras 5.1-5.n such that they surveil a second lane of the traffic area 3 from different virtual viewing directions.

- the evaluation unit virtually moves the entire group 16 of virtual cameras to a new position above the relocated second lane. This is done by simply changing the cameras 5.1-5.n that are combined into the virtual cameras.

- By combining images from a plurality of or even from all cameras 5.1-5.n the evaluation unit 14 generates a virtual camera that "captures" 3-dimensional panorama images.

- the evaluation unit 14 is further able to reposition a virtual camera 15.7 arbitrarily with regard to its position and viewing direction, which can also be done retroactively from stored images. Thereby, circumferential views of a vehicle can be generated. This is shown in Fig. 1 where the virtual camera 15.7 is first positioned at the left side of the traffic area, then travels to the center (numeral 15.7') and later reaches an end position (numeral 15.7") at the right side of the traffic area 3.

- the virtual images of the virtual cameras 15.1-15.7 can be used by classifying units 17 in order to detect properties of objects appearing in the virtual images.

- Such properties comprise number plate characters, the number of axles of a vehicle 2, dimensions of the vehicle 2, and the like.

- a virtual camera is centered with regard to the longitudinal axis of the vehicle and further virtual cameras are positioned obliquely to the left and/or to the right thereof. Obliquely directed virtual cameras also serve for counting wheels via a lateral view.

- the classifying units 17 are usually realized as software code portions executed by the evaluation unit 14.

- the evaluation unit 14 can virtually move a virtual camera to and fro along a lane of the traffic area by combining images of cameras, which images have been captured and stored successively.

- the plane 8, which is inclined forward or backward onto the traffic area 3, constitutes a first plane.

- Further monocular digital cameras 18.1-18.m are arranged in distances from each other along the cross-beam 4b of the gantry 4 above the traffic area 3.

- the viewing directions 19.1-19.m of the further cameras 18.1-18.m lie in a second plane 20 that is oriented vertically onto the traffic area 3.

- the evaluation unit 14 combines the cameras 18.1-18.m into one or more vertical virtual cameras 21 in the same way as has been explained above for the cameras 5.1-5.n and virtual cameras 15.1-15.8.

- the virtual images generated from the vertical virtual camera 21 are perfectly suited for measuring the length of a vehicle, particularly, when successively generated virtual images are used for detecting the length of the vehicle.

- wireless transceivers 23 adapted for tracking vehicles 2 are mounted on the gantry 4. These transceivers 23 can work e.g. according to the CEN- or UNI-DSRC, IEEE 802.11p WAVE, ETSI ITS-G5 or ISO-18000 RFID standard as the relevant communication technology for electronic toll collection, but other transmission technologies in proprietary realisation are possible. Depending on the standard used, the communication uses omnidirectional or directional antennas at the transceiver 23, which results in mounting locations other than the one shown in Fig. 2.

- Fig. 4 shows an application of the invention for axle counting of vehicles.

- In Fig. 4 there are depicted the virtual fields of view of four virtual cameras CA, CB, CC, CD. These virtual cameras are offset from each other across a traffic area 3, wherein virtual camera CA is the leftmost camera and virtual camera CD is the rightmost camera.

- When the images of the virtual cameras CA, CB, CC, CD are displayed successively, it seems to an observer that a camera moves across the traffic area 3, either from left to right (starting with the image of virtual camera CA), or from right to left (starting with the image of virtual camera CD).

- the virtual cameras CA, CB, CC, CD may have different viewing directions.

- the virtual field of view of virtual camera CA shows a part of the left side VAS of a first vehicle and a part of the top VBT of a second vehicle.

- the virtual field of view of virtual camera CB shows a part of the top VAT of the first vehicle, a part of the left side VAS of the first vehicle depicting two wheels WA arranged one behind the other, the traffic area 3, a part of the right side VBS of the second vehicle depicting two wheels WB arranged one behind the other, and a part of the top VBT of the second vehicle.

- the wheels WA of the first vehicle are represented by ellipses with small eccentricity

- the wheels WB of the second vehicle are represented by very elongated ellipses, i.e.

- the image of virtual camera CC comprises the same elements as that of virtual camera CB, with the difference that the eccentricity of the ellipses representing the wheels WA of the first vehicle has increased and the eccentricity of the ellipses representing the wheels WB of the second vehicle has decreased.

- the image of virtual camera CD differs from that of virtual camera CC in as much as the top of the second vehicle is not shown and that the eccentricity of the ellipses representing the wheels WA of the first vehicle has further increased to a very elongated shape and the eccentricity of the ellipses representing the wheels WB of the second vehicle has further decreased having almost the shape of a circle.

- axle counting for the first vehicle i.e. counting the wheels WA

- axle counting for the second vehicle i.e. counting the wheels WB

- axle counting for the second vehicle will be carried out by means of the image of virtual camera CD.
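The Fig. 4 discussion above suggests a simple selection rule: count the wheels of a vehicle in the virtual view in which their ellipses are closest to circles. The sketch below illustrates that rule under stated assumptions. Wheel ellipses are assumed to have already been fitted by some detector, the names `Ellipse`, `best_view` and `count_axles` are hypothetical, and the axis lengths are invented values that merely follow the trend described for CB, CC and CD.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Ellipse:
    major: float  # semi-major axis in pixels
    minor: float  # semi-minor axis in pixels

    @property
    def eccentricity(self) -> float:
        # 0 for a circle, approaching 1 for a very elongated ellipse
        return math.sqrt(1.0 - (self.minor / self.major) ** 2)


def best_view(views: dict[str, list[Ellipse]]) -> str:
    """Return the virtual camera whose wheel ellipses are, on average, closest to circles."""
    def mean_eccentricity(name: str) -> float:
        ellipses = views[name]
        return sum(e.eccentricity for e in ellipses) / len(ellipses)

    candidates = [name for name, ellipses in views.items() if ellipses]
    return min(candidates, key=mean_eccentricity)


def count_axles(views: dict[str, list[Ellipse]]) -> tuple[str, int]:
    """Pick the best view and count one axle per wheel visible on that side."""
    name = best_view(views)
    return name, len(views[name])


# Invented measurements: wheels WA look most circular in CB, wheels WB in CD.
wheels_wa = {
    "CB": [Ellipse(20, 19), Ellipse(20, 19)],
    "CC": [Ellipse(20, 12), Ellipse(20, 12)],
    "CD": [Ellipse(20, 4), Ellipse(20, 4)],
}
wheels_wb = {
    "CB": [Ellipse(20, 4), Ellipse(20, 4)],
    "CC": [Ellipse(20, 12), Ellipse(20, 12)],
    "CD": [Ellipse(20, 19), Ellipse(20, 19)],
}

print(count_axles(wheels_wa))
print(count_axles(wheels_wb))
```

With these invented values the rule selects CD for the second vehicle, which matches the statement above that its axle counting is carried out by means of the image of virtual camera CD.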

Landscapes

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Traffic Control Systems (AREA)

- Image Processing (AREA)

Abstract

A device (1) for detecting vehicles (2) on a traffic area (3) comprises a plurality of monocular digital cameras (5.1-5.n) transversely arranged above the traffic area. The viewing directions (6.1-6.n) of the cameras are oriented downwards and lie in one plane (8). An evaluation unit (14) receives images captured by the cameras (5.1-5.n) at synchronized points in time. The relative positions of the cameras (5.1-5.n) with regard to each other and to the traffic area (3) and their viewing directions (6.1-6.n) are known to the evaluation unit (14). The evaluation unit (14) combines at least two cameras (5.1-5.n) having overlapping fields of view (7.1-7.n) into a virtual camera (15.1-15.8), by calculating virtual images with virtual fields of view from the images captured by said cameras (5.1-5.n) at the synchronized point in time and from the known positions and viewing directions of the combined cameras.
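The combination step in the abstract can be illustrated with a deliberately simplified sketch. It assumes that each camera delivers a single image row, that the known camera positions reduce to integer column offsets in a common road coordinate frame, and that overlapping pixels are averaged (the detailed description mentions summing pixels, so averaging is a swapped-in choice here). The names `group_by_timestamp` and `virtual_row` are hypothetical, and a real system would need projective warping instead of integer shifts.

```python
from collections import defaultdict


def group_by_timestamp(frames):
    """Group (camera_id, timestamp, row) tuples so that only synchronized rows are combined."""
    groups = defaultdict(dict)
    for cam_id, timestamp, row in frames:
        groups[timestamp][cam_id] = row
    return groups


def virtual_row(rows, offsets, start, width):
    """Compute one row of a virtual image.

    rows:    camera_id -> list of pixel intensities captured at the same point in time
    offsets: camera_id -> column of the row's first pixel in the common road frame
             (stands in for the known camera positions and viewing directions)
    start, width: the virtual field of view, in road-frame columns
    """
    out = []
    for col in range(start, start + width):
        samples = [row[col - offsets[cam]]
                   for cam, row in rows.items()
                   if 0 <= col - offsets[cam] < len(row)]
        # Average every real pixel that maps to this virtual pixel; None if unseen.
        out.append(sum(samples) / len(samples) if samples else None)
    return out


offsets = {"5.1": 0, "5.2": 2}          # hypothetical calibration
frames = [
    ("5.1", 0, [10, 12, 14, 16]),
    ("5.2", 0, [18, 20, 22, 24]),
    ("5.1", 1, [11, 13, 15, 17]),       # a later capture, not mixed into timestamp 0
]
groups = group_by_timestamp(frames)

both = virtual_row(groups[0], offsets, start=1, width=4)
print(both)                              # [12.0, 16.0, 18.0, 22.0]

# If camera 5.1 is soiled and dropped, the remaining camera still contributes.
only_52 = virtual_row({"5.2": groups[0]["5.2"]}, offsets, start=1, width=4)
print(only_52)                           # [None, 18.0, 20.0, 22.0]
```

The second call shows why a failed camera degrades coverage rather than stopping the system: every virtual pixel that is still seen by another camera remains available.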

Description

- The present invention relates to a device for detecting vehicles on a traffic area in accordance with the preamble of claim 1.

- It is a recurring task in traffic engineering to recognize, classify and track the course of movement of vehicles or general objects located on a traffic area. This information is used in toll systems, for example, to check that toll payment is adhered to. However, the recognition and classification of vehicles or objects is also necessary for ascertaining accidents or for preparing traffic statistics.

- A known method of recognizing, tracking and classifying objects is to use laser scanners. The laser scanners are mounted above or to the side of the road surface and detect the objects when travelling past. A disadvantage of this solution is that the laser scanners only scan the objects in one plane and therefore complete detection of the objects is only possible as long as the vehicles are moving. If the objects do not move evenly through the scanning area, the measurement is impaired. For example, measurement of the object length is either not possible or only possible in an imprecise manner. In particular, this method is not well suited to traffic congestion or stop-and-go situations.

- A further known solution is to detect the objects by means of using stereo cameras. In this method the objects are detected from different viewing directions with at least two cameras. From the geometric position of corresponding points in the camera images the position of the points can be calculated in three-dimensional space. A disadvantage is that stereo cameras are very expensive instruments and that if only one of the two cameras is soiled or malfunctioning, no calculations from the images of the remaining unsoiled and functioning camera are possible, resulting in a complete failure of the stereo camera system.

- Finally, it is also known to detect objects with single cameras. However, from the single camera image alone it is only possible to determine the position of the object imprecisely in three-dimensional space. Similarly, the measurement of the objects and the determination of the speed are only possible in an imprecise manner.

- Thus, the aim set by the invention is to provide a device for detecting vehicles on a traffic area, which overcomes the disadvantages of the known prior art, which is inexpensive regarding its installation and operation and nevertheless allows a precise and reliable detection of both moving and stationary vehicles on a large traffic area.

- This aim is achieved according to the invention by providing a device with the features of claim 1. Advantageous embodiments of the invention are set out in the dependent claims, the specification and the drawings.

- The device for detecting vehicles on a traffic area according to the invention comprises a plurality of monocular digital cameras being arranged in a distributed manner above the traffic area transversely to the traffic area, wherein the viewing direction, i.e. the optical axis, of each camera is oriented downwards. According to the present invention the viewing directions of the cameras lie in one plane, the cameras capture images at synchronized points in time, and an evaluation unit is provided which receives the images captured by the cameras via a wired or wireless data transmission path, wherein the relative positions of the cameras with regard to each other and to the traffic area as well as their viewing directions are known to the evaluation unit. The evaluation unit is configured to combine at least two cameras into a virtual camera, wherein the fields of view of these at least two cameras overlap each other in an image-capturing space, by calculating virtual images having virtual fields of view from the images captured by said cameras at the synchronized point in time and from the known positions and viewing directions of the combined cameras. The image-capturing space is defined as an area underneath the cameras as far as down to the traffic area.

- This device for detecting vehicles on a traffic area according to the invention provides the advantage that the calculated virtual cameras can be positioned arbitrarily with regard to their positions and viewing directions. Thereby, a so-called "Multilane Free Flow" monitoring can be realized, wherein the vehicles are not confined to move along predefined traffic lanes. Rather, the lanes can be altered by traffic guiding personnel, or the vehicles can change between predefined traffic lanes within the traffic area during monitoring operation, or the vehicles can even use arbitrary portions of the traffic area. The use of monocular cameras is attractive in terms of price, since many camera manufacturers already offer such cameras for general purposes. Soiling or malfunctioning of a camera will not lead to a breakdown of the system, which makes it possible to provide an almost failsafe system. It is also possible by the invention to generate a virtual "overview" image or even a virtual "overview" video sequence of the entire traffic area, the latter by sequentially linking virtual images having different virtual fields of view. By means of virtual cameras, axle counts of vehicles can be performed (for charging tolls according to the number of vehicle axles).
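The tolerance to soiled or malfunctioning cameras mentioned above can be pictured as a simple substitution rule. The following is a minimal sketch, not the patented implementation: it assumes the real cameras are numbered 1 to `n_cameras` along the gantry and that a virtual camera is described only by the list of real cameras it combines. The function name `replace_faulty` and the nearest-neighbour rule are illustrative assumptions.

```python
def replace_faulty(members, faulty, n_cameras):
    """Swap faulty real cameras of a virtual camera for the nearest healthy ones.

    members:   indices of the real cameras combined into the virtual camera
    faulty:    set of indices of soiled or malfunctioning cameras
    n_cameras: total number of real cameras (numbered 1..n_cameras, assumed)
    """
    healthy = [c for c in members if c not in faulty]
    used = set(healthy)
    for bad in (c for c in members if c in faulty):
        # Spare cameras: working and not yet part of this virtual camera.
        candidates = [c for c in range(1, n_cameras + 1)
                      if c not in faulty and c not in used]
        if not candidates:
            continue  # no spare left; the virtual camera runs on fewer inputs
        # Nearest neighbour along the gantry; ties go to the lower index.
        best = min(candidates, key=lambda c: (abs(c - bad), c))
        healthy.append(best)
        used.add(best)
    return sorted(healthy)


# Virtual camera built from real cameras 5, 6, 7; camera 6 is soiled.
print(replace_faulty([5, 6, 7], {6}, n_cameras=10))  # [4, 5, 7]
```

Because the substitute has a different position, the virtual image calculation would still have to use the known position and viewing direction of the replacement camera.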

- By designing the evaluation unit for generating a plurality of instances of virtual cameras, i.e. a plurality of virtual cameras, by combining different cameras for each instance of a virtual camera, the virtual cameras can be positioned arbitrarily with regard to their positions and viewing directions and said positioning can be changed retroactively. Circumferential views of a vehicle (and from this the dimensions thereof) can be calculated such that a virtual camera is apparently made to "travel" sideways around the vehicle. When traffic lanes on the traffic area are altered (added, reduced or relocated), this alteration can be compensated for by shifting the positions of the virtual cameras monitoring the traffic lanes by means of the software being executed in the evaluation unit, without the service personnel having to readjust the actual cameras on location. With this embodiment of the invention it is also possible to implement automatic number plate identification. Due to the combination of a plurality of real images into one virtual image, an increased resolution and dynamic range of the virtual image is achieved, compared to the resolution and dynamic range of the images captured by the real cameras. Thereby the automatic number plate identification performs much better than in hitherto used systems, since the border lines and transition patterns between the characters of the number plate and its background are sharper than in the real images.
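Shifting virtual cameras in software when a lane is relocated can be sketched as follows. This is an illustration under assumptions, not the method of the specification: real cameras are taken to be equally spaced and numbered 1 to `n_cameras`, so that moving a virtual camera sideways amounts to adding an offset to the indices of the real cameras it combines. The names `shift_virtual_camera` and `lane_group` are hypothetical.

```python
def shift_virtual_camera(members, offset, n_cameras):
    """Move a virtual camera sideways by `offset` real-camera positions."""
    shifted = [c + offset for c in members]
    if min(shifted) < 1 or max(shifted) > n_cameras:
        raise ValueError("shifted virtual camera would leave the gantry")
    return shifted


# A group of three virtual cameras watching one lane (illustrative
# combinations: a side view, a top view and a second side view).
lane_group = {
    "side_left": [1, 2],
    "top": [1, 2, 3, 4],
    "side_right": [5, 6, 7],
}

# The lane is relocated by three camera spacings to the right.
moved = {name: shift_virtual_camera(cams, 3, n_cameras=20)
         for name, cams in lane_group.items()}
print(moved["top"])  # [4, 5, 6, 7]
```

No real camera is touched: only the selection of input images changes, which is why no readjustment on location is needed.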

- In a preferred embodiment of the invention the evaluation unit changes the instances of virtual cameras over the course of time by altering the combinations of cameras, with the change in the instances of virtual cameras optionally being carried out as a function of properties of detected vehicles, such as their dimensions, vehicle speed or direction of travel. For example, a virtual camera is centered with regard to the longitudinal axis of the vehicle and further virtual cameras are positioned obliquely to the left and/or to the right thereof for counting wheels via a lateral view. This allows for calculating the length of the vehicle and counting its number of axles.

- In another embodiment of the device according to the present invention the fields of view of at least three adjacent cameras overlap each other in an image-capturing space, which is an area underneath the cameras as far as down to the traffic area. This embodiment enables multiple overlaying of portions of real images captured by the cameras, thereby tremendously increasing the image quality, particularly the resolution and dynamic range, of the calculated virtual images. This is a particular advantage, when one or some camera(s) has/have been soiled.
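Why multiple overlaying helps can be illustrated with plain pixel averaging. The sketch below assumes that the overlapping image portions have already been registered, i.e. that the same pixel index refers to the same scene point in every patch; the registration itself is not shown. Averaging is used here as a normalized form of summing pixels, and the sample values are made up. For independent zero-mean noise, averaging N patches reduces the noise standard deviation by a factor of the square root of N.

```python
def fuse_patches(patches):
    """Average pixel-aligned patches (lists of rows of gray values)."""
    n = len(patches)
    rows, cols = len(patches[0]), len(patches[0][0])
    return [[sum(p[r][c] for p in patches) / n for c in range(cols)]
            for r in range(rows)]


# Three cameras see the same two scene points (true gray value 100),
# each with a different noise contribution.
patch_a = [[106, 97]]
patch_b = [[97, 100]]
patch_c = [[97, 103]]

print(fuse_patches([patch_a, patch_b, patch_c]))  # [[100.0, 100.0]]
```

If one of the three patches comes from a soiled camera, it can simply be left out of the list and the remaining two are still averaged.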

- In an easily installable embodiment of the device according to the present invention the cameras are mounted to an instrument axis of a portal or a gantry, which instrument axis is oriented transversely across the traffic area. The instrument axis can be configured as a physical axis, e.g. a beam or rail of the portal or gantry, or as a geometrical axis, i.e. a line along the portal or gantry. In contrast to laser scanners or camera assemblies according to the prior art, one portal or gantry, respectively, is sufficient. Said portal or gantry does not have to be arranged precisely transversely to the traffic area, since deviations can be compensated by the virtual image calculation software of the evaluation unit. Algorithms therefor are known to skilled persons.

- For the purpose of the invention it is preferred to use monocular digital cameras of the same type. This ensures low purchase prices owing to high quantities and simplifies spare part warehousing. Further, it is sufficient to use monocular digital cameras with an image resolution of at least 640x480 pixels. Hence, almost all commercially available, cheap cameras may be used. Of course, due to the ever growing image resolutions of cheap commercially available cameras it is to be expected that in the future cameras with higher resolutions might be employed without raising costs. On the other hand, a low resolution of the image sensors of the cameras provides the advantage of a reduced calculating and storage effort. As already explained above, superimposing the real images in the course of calculating virtual images enhances the image quality and resolution of the virtual image, which is another argument for using low-resolution cameras.
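The reduced calculating and storage effort of low-resolution sensors can be made concrete with a short calculation. The numbers of cameras and frames per second below are hypothetical, and 8-bit grayscale pixels (one byte per pixel) are assumed; only the 640x480 resolution comes from the text.

```python
def raw_bytes_per_second(width, height, cameras, fps, bytes_per_pixel=1):
    """Uncompressed data rate of all cameras together, in bytes per second."""
    return width * height * bytes_per_pixel * cameras * fps


# Hypothetical installation: 20 cameras at 25 synchronized frames per second.
vga = raw_bytes_per_second(640, 480, cameras=20, fps=25)
full_hd = raw_bytes_per_second(1920, 1080, cameras=20, fps=25)

print(vga)            # 153600000 bytes/s for 640x480
print(full_hd / vga)  # 6.75 times more data for 1920x1080
```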

- In order to reduce the effort in calculation it is suggested to arrange the cameras at equal distances from each other. Thereby no compensation algorithms are required for balancing out unequal mutual distances. The same advantages are achieved, when the viewing directions of all cameras are arranged in parallel to each other.
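With equally spaced cameras and parallel, vertical viewing directions, the number of fields of view covering a given point follows from simple geometry. The sketch below assumes a symmetric field of view with a given half angle and ignores lens distortion; the spacing, mounting height and angle are made-up values, and `cameras_covering` is a hypothetical helper.

```python
import math


def cameras_covering(x, spacing, n_cameras, height, half_fov_deg, z=0.0):
    """Count cameras whose field of view contains the point (x, z).

    x:       lateral position across the traffic area in metres
    z:       height of the point above the traffic area in metres
    Cameras sit at x = 0, spacing, 2*spacing, ... at the given mounting
    height and look vertically downwards (assumption).
    """
    half_width = (height - z) * math.tan(math.radians(half_fov_deg))
    return sum(1 for i in range(n_cameras)
               if abs(x - i * spacing) <= half_width)


# 20 cameras, 0.5 m apart, 6 m above the road, 30 degree half angle.
print(cameras_covering(0.0, 0.5, 20, 6.0, 30.0))         # 7 at the road margin
print(cameras_covering(5.0, 0.5, 20, 6.0, 30.0))         # 13 mid-road
print(cameras_covering(0.0, 0.5, 20, 6.0, 30.0, z=4.0))  # 3 at roof height of a tall vehicle
```

The last line shows why the overlap has to be checked throughout the image-capturing space and not only on the road surface: the footprint of each camera shrinks with the height of the observed point.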

- In another embodiment of the invention the plane on which the viewing directions of the cameras are located is inclined forward or backward onto the traffic area as viewed in the direction of travel of vehicles. This embodiment allows the number plates to be captured at a less oblique angle compared to vertically captured images of the number plates. Thereby, the characters of the number plate can be recognized more easily and with less computational effort. In a further development of this embodiment of the invention the plane, which is inclined forward or backward onto the traffic area, constitutes a first plane, and further monocular digital cameras are arranged in a distributed manner above the traffic area transversely to the traffic area with their viewing directions being oriented downwards, wherein the viewing directions of said further cameras lie in a second plane that is oriented vertically onto the traffic area. This embodiment allows for an easy recognition of number plates by using virtual images calculated from real images captured by cameras assigned to the first plane, whereas an exact calculation of the length of vehicles can be carried out by using virtual images calculated from real images captured by cameras assigned to the second plane. This embodiment further allows classifying vehicles in respect of various properties on the basis of different viewing angles.
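The benefit of an inclined plane for number plates can be estimated with a foreshortening formula. The sketch assumes a plate mounted vertically and facing along the direction of travel, a distant camera (so that the projection is approximately parallel), and a viewing direction tilted by `tilt_deg` from the vertical; under these assumptions the plate height appears scaled by the sine of the tilt angle. The plate height of 0.11 m is only an example value.

```python
import math


def apparent_plate_height(plate_height_m, tilt_deg):
    """Projected height of a vertical plate seen along a viewing direction
    tilted by tilt_deg from the vertical (0 = looking straight down)."""
    return plate_height_m * math.sin(math.radians(tilt_deg))


for tilt in (0, 15, 30, 45):
    print(tilt, round(apparent_plate_height(0.11, tilt), 3))
```

Looking straight down, the plate is seen edge-on and its characters collapse to a line; at 30 degrees about half of the plate height is visible in the image.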

- Handing over a vehicle detected at the first plane to the second plane is used to track the vehicle along the path being travelled.

- In a preferred embodiment of the present invention hardware data compression units are connected into the data transmission path between the cameras and the evaluation unit, which data compression units compress the image data in a loss-free manner, e.g. JPEG images with Huffman coding, or TIFF (tagged image file format) images, or in a lossy manner, e.g. JPEG images, or Wavelet compression. With said data compression units an extreme reduction of the amount of image data and thereby in the effort in calculation within the evaluation unit can be achieved.
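What "loss-free" means for the evaluation unit can be shown with a round trip through a lossless codec. The sketch uses DEFLATE from Python's standard `zlib` module purely as a stand-in; it is not one of the formats named in the text, and the synthetic frame (a gradient repeated on every row) compresses far better than a real camera image would.

```python
import zlib

width, height = 640, 480

# Synthetic 8-bit grayscale frame: a horizontal gradient on every row.
row = bytes((x * 255) // (width - 1) for x in range(width))
frame = row * height

compressed = zlib.compress(frame, 6)
restored = zlib.decompress(compressed)

print(len(frame), len(compressed))  # raw size versus compressed size
print(restored == frame)            # True: every pixel is recovered exactly
```

With a lossy method the last comparison would generally be false, which is the trade-off between data volume and exact pixel values that the choice of compression unit has to balance.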

- It is also preferred that the evaluation unit combines images of cameras which have been captured successively. This embodiment allows for detecting an entire vehicle and its length, as this vehicle moves underneath the cameras in the course of time.
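One simple way to picture length measurement from successively captured images is to count the frames during which the vehicle is seen under the camera plane. This is a strongly simplified sketch and not the procedure of the specification: it assumes a thin capture plane, a constant and already known vehicle speed, and a fixed frame interval, all of which are assumptions introduced here. The result is only accurate to about one frame interval times the speed.

```python
def estimate_length(presence, frame_interval_s, speed_m_s):
    """Estimate vehicle length from per-frame presence flags.

    presence: list of booleans, one per successive frame, True while some
              part of the vehicle is under the camera plane
    """
    if True not in presence:
        return 0.0
    first = presence.index(True)
    last = len(presence) - 1 - presence[::-1].index(True)
    return (last - first + 1) * frame_interval_s * speed_m_s


# Vehicle visible in 5 consecutive frames at 25 frames/s, driving 25 m/s.
flags = [False, True, True, True, True, True, False]
print(estimate_length(flags, 0.04, 25.0))  # about 5 m
```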

- Advantageously, the detection of vehicles can be enhanced by taking into account their weights. According to the invention, this is accomplished by building at least one weighing sensor, preferably a piezoelectric weighing sensor, into the traffic area in an area underneath the cameras.

- The device according to the present invention may further be equipped with wireless transceivers being adapted for tracking vehicles. These wireless transceivers may be mounted on the portal or the gantry, and may further be adapted to communicate with tracking devices mounted in the vehicles, such as the so-called "GO boxes".

- The invention is explained in more detail below on the basis of exemplary embodiments shown in the attached drawings:

- Figures 1 and 2 show a first embodiment of the device of the invention in a front view and a side view.

- Fig. 3 shows in a front view the principle of combining cameras into a virtual camera.

- Fig. 4 shows an application of the invention for axle counting of vehicles.

- Fig. 1 shows a device 1 for detecting vehicles 2 on a traffic area 3. The device 1 comprises a plurality of monocular digital cameras 5.1-5.n arranged at equal distances from each other above the traffic area 3 transversely to the traffic area 3. The monocular digital cameras 5.1-5.n are of the same type and may be selected from general purpose, inexpensive cameras available on the market. There are no specific requirements for the quality and resolution of the cameras. For instance, an image resolution of 640x480 pixels will be sufficient for the purpose of the present invention. Although it is preferred that the cameras 5.1-5.n are arranged at equal distances from each other, this is not mandatory, because unequal distances can computationally be balanced out by means of simple geometric transformations.

- The viewing directions 6.1-6.n of all cameras 5.1-5.n are oriented downwards, but not necessarily vertically downwards, as will be explained later. The viewing direction 6.1-6.n of each camera 5.1-5.n coincides with the optical axis of the optical system of each camera, i.e. the image sensor, such as a CCD sensor, and a lens system. The viewing direction 6.1-6.n defines the center of the field of view 7.1-7.n of each camera 5.1-5.n. It is preferred that the viewing directions 6.1-6.n of all cameras 5.1-5.n are arranged in parallel to each other. This, however, is not an indispensable prerequisite for the present invention. If the viewing directions 6.1-6.n of the cameras 5.1-5.n are not arranged in parallel to each other, this can computationally be compensated for by applying simple geometric transformations. On the other hand, it is regarded essential that the viewing directions 6.1-6.n of the cameras 5.1-5.n lie in one plane 8 and that the cameras 5.1-5.n capture images at synchronized points in time.

- In the embodiment of Fig. 1 the cameras 5.1-5.n are mounted on an instrument axis 9 of a cross-beam 4b of a gantry 4, which gantry 4 further comprises two posts 4a connected by the cross-beam 4b, which cross-beam 4b traverses the traffic area 3. The instrument axis 9 is oriented transversely across the traffic area 3.

- As can be seen in Fig. 1 the fields of view 7.1-7.n of a plurality of adjacent cameras 5.1-5.n overlap each other in an image-capturing space 10, which is an area underneath the cameras as far as down to the traffic area 3. The cameras 5.1-5.n are mounted so closely to each other that even at the margins of the traffic area 3 at least three fields of view overlap each other.

- The image data of the images captured by the cameras 5.1-5.n are sent to data compression units 11.1-11.j via a first data transmission path 12 (wired or wireless). The data compression units 11.1-11.j carry out data compression algorithms on the image data, in order to considerably reduce the amount of data. The data compression units 11.1-11.j are configured as embedded electronic hardware modules with built-in data compression algorithms. The data compression algorithms are configured either as loss-free algorithms, e.g., JPEG with Huffman coding, or TIFF coding, or as lossy algorithms, such as JPEG or Wavelet algorithms. The number of cameras 5.1-5.n does not necessarily correspond with the number of data compression units 11.1-11.j. As can be seen in the example of Fig. 1, each data compression unit 11.1-11.j cooperates with five cameras 5.1-5.n. The compressed image data are sent from the data compression units 11.1-11.j via a second data transmission path 13 (wired or wireless) to an evaluation unit 14. The evaluation unit 14 is configured as a computer, typically a server computer, which may be located either in a safe place adjacent to the traffic area 3, or remote from it. The evaluation unit 14 is set up with the relative positions of the cameras 5.1-5.n with regard to each other and to the traffic area 3 as well as with the viewing directions 6.1-6.n. The evaluation unit 14 is configured to carry out software code that combines the images of at least two cameras 5.1-5.n, wherein the fields of view 7.1-7.n of these at least two cameras 5.1-5.n overlap each other at least partly in the image-capturing space 10. By carrying out said software portions the evaluation unit 14 combines the selected cameras 5.1-5.n into one or more virtual cameras 15.1-15.8. It is essential that the virtual images of the virtual camera 15.1-15.8 are calculated from images captured by the real cameras 5.1-5.n at a synchronized point in time.

- The virtual images are generated by the software code by computing a 2-dimensional feature set, e.g. in accordance with the FAST algorithms well-known to those skilled in the art, which FAST algorithms are for instance disclosed in Rosten, Edward, and Tom Drummond, "Machine learning for high-speed corner detection", Computer Vision-ECCV 2006, Springer Berlin Heidelberg, 2006, 430-443. Nowadays, these algorithms are usually embedded in standard computer-vision software libraries, such as OpenCV or AMD Framewave. This 2-dimensional feature set is then looked up in the images having been captured at the same synchronized point in time by the other cameras 5.1-5.n combined into the virtual camera 15.1-15.8.
When the 2-dimensional feature set is found in the other images, the position of an object having the 2-dimensional feature set is derived from the other images, thereby allowing all these images to be put together. Putting together images as explained is known in the art as "Stitching", see https://en.wikipedia.org/wiki/Image_stitching. Delimiting the object, such as a vehicle 2 or its number plate 2a, from the background of the images is done by well-known foreground-background separation methods, such as Blob Detection. It is advisable to position "lateral" virtual cameras such that two separated objects in the virtual image do not interfere with each other. This allows deriving the maximum height of a vehicle from side views "captured" by the lateral virtual cameras. By retrieving feature sets in a plurality of adjacent images captured by the real cameras 5.1-5.n, image portions (pixels) of interest are defined several times. This means that the multiple occurrence of the image portions of interest in multiple images enables combining them several times, thereby enhancing their information content, such as their dynamic range. By retrieving image portions and objects in the images captured at synchronized points in time in the course of calculating virtual images, further pixels are allocated to the retrieved image portions and objects, which improves the "virtual" resolution of the retrieved image portions and objects in the virtual image. Thereby, for instance, the borders of characters in the number plates 2a of the vehicles 2 are sharpened, resulting in easier and more precise character recognition. Of course, the sharpened borders of objects in the virtual images provide further advantages, such as a better recognition of contours of vehicles.

- The principle of combining a plurality of cameras 5 into a virtual camera is explained in Fig. 3. Fig. 3 depicts three monocular digital cameras 5.7, 5.8, 5.9 having parallel viewing directions 6.7, 6.8, 6.9 and fields of view 7.7, 7.8, 7.9. The cameras 5.7, 5.8, 5.9 capture partly overlapping images. These images are sent to the evaluation unit 14, which analyzes the images in respect of an object 25 being present in all images. The evaluation unit 14 selects a portion of the images for a virtual image 26 and generates the virtual image 26 by means of pixel operations, such as summing up the pixels of the portions of the images that contribute to the virtual image 26.

- Combining the images captured by at least two cameras 5.1-5.n into an image "taken by" a virtual camera, wherein the virtual images have virtual fields of view, can e.g. be carried out according to the method described in US 5,657,073 A, which document is incorporated herein by reference. The system includes a plurality of cameras which direct multiple simultaneous streams of analog or digital input into an image transformation engine, to process those streams to remove distortion and redundant information, creating a single output image in a cylindrical or spherical perspective. After removing distortion and redundant information the pixels of the image data streams are seamlessly merged, such that a single stream of digital or analog video is outputted from said engine. The output stream of digital or analog video is directed to an image clipper, which image clipper is controlled by a pan-tilt-rotation-zoom controller to select a portion of the merged panoramic or panospheric images for displaying said portion for viewing thereof.

- As explained above the evaluation unit 14 is configured to generate a plurality of virtual cameras. These virtual cameras may have different virtual viewing directions and different virtual fields of view. For instance, as depicted in Fig. 1 the evaluation unit 14 combines the images captured by the two cameras 5.1 and 5.2 into a virtual camera 15.1 having such a virtual viewing direction that it functions as a first side view camera for a first lane of the traffic area 3. Further, the evaluation unit 14 generates a second virtual camera 15.2 by combining the images captured by four cameras 5.1-5.4. This second virtual camera 15.2 has such a virtual viewing direction that it functions as a top view camera for the first lane of the traffic area 3. The evaluation unit 14 also generates a third virtual camera 15.3 by combining the images captured by three cameras 5.5-5.7. This third virtual camera 15.3 has such a virtual viewing direction that it functions as a second side camera for the first lane of the traffic area 3. Thereby, vehicles 2 which are moving along the first lane are surveilled from different virtual viewing directions by the three virtual cameras 15.1-15.3.

- The evaluation unit 14 is further configured to form a group 16 consisting of a plurality of virtual cameras, such as three virtual cameras 15.4, 15.5, 15.6, and to virtually move the group 16 of virtual cameras in respect of the traffic area 3. For instance, the three virtual cameras 15.4, 15.5, 15.6 are combined from different real cameras 5.1 such that they surveil a second lane of the traffic area 3 from different virtual viewing directions. When this second lane has to be relocated for any reason (e.g. construction work) then the evaluation unit virtually moves the entire group 16 of virtual cameras to a new position above the relocated second lane. This is done by simply changing the cameras 15.1-15.n that are combined into the virtual cameras.

- By combining images from a plurality of or even from all cameras 15.1-15.n the evaluation unit 14 generates a virtual camera that "captures" 3-dimensional panorama images.

- The evaluation unit 14 is further able to reposition a virtual camera 15.7 arbitrarily with regard to its position and viewing direction, which can also be done retroactively from stored images. Thereby, circumferential views of a vehicle can be generated. This is shown in Fig. 1 where the virtual camera 15.7 is first positioned at the left side of the traffic area, then travels to the center (numeral 15.7') and later reaches an end position (numeral 15.7") at the right side of the traffic area 3.

- It should be noted that the number of instances of virtual cameras, as well as their movements separately or in groups, is only limited by the computational power of the evaluation unit 14. Generating or altering virtual cameras over the course of time by altering the combinations of cameras may be triggered by properties of detected objects, such as vehicle dimensions, vehicle speed and direction of travel. Soiled or malfunctioning cameras used for a virtual camera can be dynamically replaced by adjacent clean and functioning cameras by means of software.

- It should be noted that the virtual images of the virtual cameras 15.1-15.7 can be used by classifying units 17 in order to detect properties of objects appearing in the virtual images. Such properties comprise number plate characters, the number of axles of a vehicle 2, dimensions of the vehicle 2, and the like. For detecting dimensions of a vehicle a virtual camera is centered with regard to the longitudinal axis of the vehicle and further virtual cameras are positioned obliquely to the left and/or to the right thereof. Obliquely directed virtual cameras also serve for counting wheels via a lateral view. The classifying units 17 are usually realized as software code portions executed by the evaluation unit 14.

- It is further possible to have the evaluation unit 14 virtually move a virtual camera to and fro along a lane of the traffic area by combining images of cameras, which images have been captured and stored successively.

- In order to realize an automatic number plate recognition it is helpful to arrange the plane 8, on which the viewing directions 6.1-6.n of the cameras 5.1-5.n are located, forward or backward inclined onto the traffic area. This provides for less geometrical distortion of the characters of the number plate.

- In an optional embodiment of the device 1 the plane 8, which is inclined forward or backward onto the traffic area 3, constitutes a first plane. Further monocular digital cameras 18.1-18.m are arranged at distances from each other along the cross-beam 4b of the gantry 4 above the traffic area 3. The viewing directions 19.1-19.m of the further cameras 18.1-18.m lie in a second plane 20 that is oriented vertically onto the traffic area 3. The evaluation unit 14 combines the cameras 18.1-18.m into one or more vertical virtual cameras 21 in the same way as has been explained above for the cameras 5.1-5.n and virtual cameras 15.1-15.8. The virtual images generated from the vertical virtual camera 21 are perfectly suited for measuring the length of a vehicle, particularly when successively generated virtual images are used for detecting the length of the vehicle.

- Optionally, there are weighing sensors 22, preferably piezoelectric weighing sensors, built into the traffic area 3 underneath the cameras, in order to weigh vehicles 2 while they are detected by the device 1. Optionally, wireless transceivers 23 adapted for tracking vehicles 2 are mounted on the gantry 4. These transceivers 23 can work e.g. according to the CEN- or UNI-DSRC or IEEE 802.11p WAVE, ETSI ITS-G5 or ISO-18000 RFID standard as the relevant communication technology for electronic toll collection, but other transmission technologies in proprietary realisation are also possible. Depending on the used standard, the communication uses omni- or directional antennas at the transceiver 23, which results in other mounting location as shown in Fig. 2.

- Fig. 4 shows an application of the invention for axle counting of vehicles. In Fig. 4 there are depicted the virtual fields of view of four virtual cameras CA, CB, CC, CD. These virtual cameras are offset from each other across a traffic area 3, wherein virtual camera CA is the leftmost camera and virtual camera CD is the rightmost camera. When the fields of view of the virtual cameras CA, CB, CC, CD are displayed successively it seems to an observer that a camera moves across the traffic area 3, either from left to right (starting with the image of virtual camera CA), or from right to left (starting with the image of virtual camera CD). The virtual cameras CA, CB, CC, CD may have different viewing directions. The virtual field of view of virtual camera CA shows a part of the left side VAS of a first vehicle and a part of the top VBT of a second vehicle. The virtual field of view of virtual camera CB shows a part of the top VAT of the first vehicle, a part of the left side VAS of the first vehicle depicting two wheels WA arranged one behind the other, the traffic area 3, a part of the right side VBS of the second vehicle depicting two wheels WB arranged one behind the other, and a part of the top VBT of the second vehicle. Depending on the viewing directions of virtual camera CB the wheels WA of the first vehicle are represented by ellipses with small eccentricity, whereas the wheels WB of the second vehicle are represented by very elongated ellipses, i.e. ellipses with an eccentricity close to 1. The image of virtual camera CC comprises the same elements as that of virtual camera CB, with the difference that the eccentricity of the ellipses representing the wheels WA of the first vehicle has increased and the eccentricity of the ellipses representing the wheels WB of the second vehicle has decreased. The image of virtual camera CD differs from that of virtual camera CC inasmuch as the top of the second vehicle is not shown and that the eccentricity of the ellipses representing the wheels WA of the first vehicle has further increased to a very elongated shape and the eccentricity of the ellipses representing the wheels WB of the second vehicle has further decreased, having almost the shape of a circle. For axle counting of the two vehicles, i.e. counting the wheels positioned one behind the other, the images of all four virtual cameras CA, CB, CC, CD are analyzed in respect of the visibility of wheels and those images are selected for axle counting showing wheels as ellipses with the smallest eccentricity. In the present example axle counting for the first vehicle, i.e. counting the wheels WA, will be carried out by means of the image of virtual camera CB, whereas axle counting for the second vehicle, i.e. counting the wheels WB, will be carried out by means of the image of virtual camera CD.
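The selection rule for axle counting, i.e. choosing the virtual image in which the wheels appear as ellipses with the smallest eccentricity, can be sketched as below. The ellipse fitting itself is not shown; the semi-axis values are invented to mirror the qualitative description of Fig. 4, and `eccentricity` and `best_view` are hypothetical helper names. The eccentricity of an ellipse with semi-major axis a and semi-minor axis b is sqrt(1 - (b/a)^2), so a circle has eccentricity 0.

```python
import math


def eccentricity(semi_major, semi_minor):
    """Eccentricity of an ellipse; 0 for a circle, close to 1 when elongated."""
    return math.sqrt(1.0 - (semi_minor / semi_major) ** 2)


def best_view(wheel_ellipses):
    """Pick the virtual camera whose wheel ellipses are closest to circles.

    wheel_ellipses: {camera name: (semi_major, semi_minor)} for one vehicle,
    listing only the virtual cameras in which its wheels are visible.
    """
    return min(wheel_ellipses,
               key=lambda cam: eccentricity(*wheel_ellipses[cam]))


# Invented semi-axes in pixels for the wheels WA and WB of the two vehicles.
wheels_a = {"CB": (40, 36), "CC": (40, 20), "CD": (40, 5)}
wheels_b = {"CB": (40, 5), "CC": (40, 20), "CD": (40, 38)}

print(best_view(wheels_a))  # CB
print(best_view(wheels_b))  # CD
```

Virtual camera CA does not appear in the dictionaries because, in the description of Fig. 4, no wheels are visible in its field of view.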

Claims (15)

- A device (1) for detecting vehicles (2) on a traffic area (3), wherein a plurality of monocular digital cameras (5.1-5.n) are arranged in a distributed manner above the traffic area transversely to the traffic area and the viewing direction (6.1-6.n) of each camera is oriented downwards, characterized in that

the viewing directions (6.1-6.n) of the cameras (5.1-5.n) lie in one plane (8),

the cameras (5.1-5.n) capture images at synchronized points in time,

an evaluation unit (14) is provided which receives images captured by the cameras (5.1-5.n) via a data transmission path (12, 13),

the relative positions of the cameras (5.1-5.n) with regard to each other and to the traffic area (3) and their viewing directions (6.1-6.n) are known to the evaluation unit (14),

the evaluation unit (14) is configured to combine at least two cameras (5.1-5.n) into a virtual camera (15.1-15.8), wherein the fields of view (7.1-7.n) of these at least two cameras (5.1-5.n) overlap each other in an image-capturing space (10), by calculating virtual images having virtual fields of view from the images captured by said cameras (5.1-5.n) at the synchronized point in time and from the known positions and viewing directions of the combined cameras.

- A device according to claim 1, characterized in that the evaluation unit (14) is designed for generating a plurality of instances of virtual cameras (15.1-15.8) by combining different cameras (5.1-5.n).

- A device according to claim 2, characterized in that the evaluation unit (14) changes the instances of virtual cameras (15.1-15.8) over the course of time by altering the combinations of cameras (5.1-5.n), with the change in the instances of virtual cameras optionally being carried out as a function of properties of detected vehicles (2), such as their dimensions, vehicle speed and direction of travel.

- A device according to any of the preceding claims, characterized in that the fields of view (7.1-7.n) of at least three adjacent cameras (5.1-5.n) overlap each other in an image-capturing space (10).

- A device according to any of the preceding claims, characterized in that the cameras (5.1-5.n) are mounted to an instrument axis (9) of a portal or a gantry (4), which instrument axis (9) is oriented transversely across the traffic area (3).

- A device according to any of the preceding claims, characterized in that the monocular digital cameras (5.1-5.n) are of the same type and exhibit an image resolution of at least 640x480 pixels.

- A device according to any of the preceding claims, characterized in that the cameras (5.1-5.n) are arranged at equal distances from each other.

- A device according to any of the preceding claims, characterized in that the viewing directions (6.1-6.n) of all cameras (5.1-5.n) are arranged in parallel to each other.

- A device according to any of the preceding claims, characterized in that, viewed in the direction of travel of vehicles (2), the plane (8) on which the viewing directions of the cameras are located is inclined forward or backward onto the traffic area (3).

- A device according to any of the preceding claims, characterized in that data compression units (11.1-11.j) are connected into the data transmission path (12,13) between the cameras (5.1-5.n) and the evaluation unit (14), which data compression units compress the image data in a loss-free manner or lossy manner.

- A device according to any of the preceding claims, characterized in that the evaluation unit (14) combines images of cameras (5.1-5.n) which have been captured successively.

- A device according to claim 9, characterized in that the plane (8), which is inclined forward or backward onto the traffic area (3), constitutes a first plane and that further monocular digital cameras (18.1-18.m) are arranged in a distributed manner above the traffic area (3) transversely to the traffic area with their viewing directions (19.1-19.m) being oriented downwards, wherein the viewing directions (19.1-19.m) of said cameras (18.1-18.m) lie in a second plane (20) that is oriented vertically onto the traffic area (3).

- A device according to any of the preceding claims, characterized in that at least one weighing sensor (22), preferably a piezoelectric weighing sensor, is built into the traffic area (3) underneath the cameras.

- A device according to any of claims 5 to 13, wherein wireless transceivers (23) being adapted for tracking vehicles are mounted on the portal or the gantry.

- A device according to claim 12 wherein handing over a vehicle detected at the first plane (8) to the second plane (20) is used to track the vehicle along the path being travelled.

Priority Applications (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| EP15171194.2A EP3104357A1 (en) | 2015-06-09 | 2015-06-09 | A device for detecting vehicles on a traffic area |

| PCT/EP2016/061108 WO2016198242A1 (en) | 2015-06-09 | 2016-05-18 | A device for detecting vehicles on a traffic area |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| EP15171194.2A EP3104357A1 (en) | 2015-06-09 | 2015-06-09 | A device for detecting vehicles on a traffic area |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| EP3104357A1 (en) | 2016-12-14 |

Family

Family ID: 53434220

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| EP15171194.2A Withdrawn EP3104357A1 (en) | 2015-06-09 | 2015-06-09 | A device for detecting vehicles on a traffic area |

Country Status (2)

| Country | Link |
|---|---|
| EP (1) | EP3104357A1 (en) |
| WO (1) | WO2016198242A1 (en) |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN115004273A (en) * | 2019-04-15 | 2022-09-02 | 华为技术有限公司 | Digital reconstruction method, device and system for traffic road |

Families Citing this family (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| KR102696084B1 (en) * | 2018-01-31 | 2024-08-19 | 쓰리엠 이노베이티브 프로퍼티즈 컴파니 | Virtual camera array for inspection of manufactured webs |

Citations (8)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US5657073A (en) | 1995-06-01 | 1997-08-12 | Panoramic Viewing Systems, Inc. | Seamless multi-camera panoramic imaging with distortion correction and selectable field of view |
| JP2001103451A (en) * | 1999-09-29 | 2001-04-13 | Toshiba Corp | System and method for image monitoring |
| KR100852683B1 (en) * | 2007-08-13 | 2008-08-18 | (주)한국알파시스템 | An apparatus for recognizing number of vehicles and a methode for recognizing number of vehicles |
| KR20090030666A (en) * | 2007-09-20 | 2009-03-25 | 조성윤 | High-speed weight in motion |
| EP2306426A1 (en) * | 2009-10-01 | 2011-04-06 | Kapsch TrafficCom AG | Device for detecting vehicles on a traffic surface |
| WO2013128427A1 (en) * | 2012-03-02 | 2013-09-06 | Leddartech Inc. | System and method for multipurpose traffic detection and characterization |
| KR101432512B1 (en) * | 2014-02-17 | 2014-08-25 | (주) 하나텍시스템 | Virtual image displaying apparatus for load control |
| WO2015012219A1 (en) * | 2013-07-22 | 2015-01-29 | 株式会社東芝 | Vehicle monitoring device and vehicle monitoring method |

Family Cites Families (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US7728877B2 (en) * | 2004-12-17 | 2010-06-01 | Mitsubishi Electric Research Laboratories, Inc. | Method and system for synthesizing multiview videos |
| WO2008012821A2 (en) * | 2006-07-25 | 2008-01-31 | Humaneyes Technologies Ltd. | Computer graphics imaging |
| JP5239991B2 (en) * | 2009-03-25 | 2013-07-17 | 富士通株式会社 | Image processing apparatus and image processing system |

- 2015-06-09: EP application EP15171194.2A, published as EP3104357A1 (en), status: not active, Withdrawn
- 2016-05-18: WO application PCT/EP2016/061108, published as WO2016198242A1 (en), status: active, Application Filing

Non-Patent Citations (1)

| Title |
|---|
| ROSTEN, EDWARD; TOM DRUMMOND.: "Computer Vision-ECCV 2006", SPRINGER, article "Machine learning for high-speed corner detection", pages: 430 - 443 |

Also Published As

| Publication number | Publication date |
|---|---|
| WO2016198242A1 (en) | 2016-12-15 |

Similar Documents

| Publication | Title |
|---|---|
| TWI798305B (en) | Systems and methods for updating highly automated driving maps |
| US8548229B2 (en) | Method for detecting objects |
| KR101647370B1 (en) | road traffic information management system for g using camera and radar |
| EP3876141A1 (en) | Object detection method, related device and computer storage medium |
| CN107273788B (en) | Imaging system for performing lane detection in a vehicle and vehicle imaging system |
| EP1796043B1 (en) | Object detection |
| US9363483B2 (en) | Method for available parking distance estimation via vehicle side detection |
| US9547912B2 (en) | Method for measuring the height profile of a vehicle passing on a road |
| Premebida et al. | Fusing LIDAR, camera and semantic information: A context-based approach for pedestrian detection |
| JP6270102B2 (en) | Moving surface boundary line recognition apparatus, moving body device control system using the moving surface boundary line recognition method, and moving surface boundary line recognition program |
| US20160232410A1 (en) | Vehicle speed detection |
| JP4363295B2 (en) | Plane estimation method using stereo images |
| JP2011505610A (en) | Method and apparatus for mapping distance sensor data to image sensor data |
| US20160247398A1 (en) | Device for tolling or telematics systems |
| KR20180041524A (en) | Pedestrian detecting method in a vehicle and system thereof |
| EP3029602A1 (en) | Method and apparatus for detecting a free driving space |
| JP2016206801A (en) | Object detection device, mobile equipment control system and object detection program |
| TWI851992B (en) | Object tracking integration method and integrating apparatus |
| EP3104357A1 (en) | A device for detecting vehicles on a traffic area |
| JP2017207874A (en) | Image processing apparatus, imaging apparatus, moving body device control system, image processing method, and program |
| CN111465937B (en) | Face detection and recognition method employing light field camera system |
| JP3629935B2 (en) | Speed measurement method for moving body and speed measurement device using the method |
| CN110249366B (en) | Image feature quantity output device, image recognition device, and storage medium |
| KR101073053B1 (en) | Auto Transportation Information Extraction System and Thereby Method |
| CN112861599A (en) | Method and device for classifying objects on a road, computer program and storage medium |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PUAI | Public reference made under article 153(3) epc to a published international application that has entered the european phase | Free format text: ORIGINAL CODE: 0009012 |
| | STAA | Information on the status of an ep patent application or granted ep patent | Free format text: STATUS: THE APPLICATION HAS BEEN PUBLISHED |
| | AK | Designated contracting states | Kind code of ref document: A1. Designated state(s): AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR |
| | AX | Request for extension of the european patent | Extension state: BA ME |
| | STAA | Information on the status of an ep patent application or granted ep patent | Free format text: STATUS: THE APPLICATION IS DEEMED TO BE WITHDRAWN |
| | 18D | Application deemed to be withdrawn | Effective date: 20170615 |