CN114241226A - Three-dimensional point cloud semantic segmentation method based on multi-neighborhood characteristics of hybrid model

- Publication number

- CN114241226A

- Authority

- CN

- China

- Prior art keywords

- point cloud

- network layer

- network

- dimensional

- layer

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/23—Clustering techniques

- G06F18/232—Non-hierarchical techniques

- G06F18/2321—Non-hierarchical techniques using statistics or function optimisation, e.g. modelling of probability density functions

- G06F18/23213—Non-hierarchical techniques using statistics or function optimisation, e.g. modelling of probability density functions with fixed number of clusters, e.g. K-means clustering

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/24—Classification techniques

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

Abstract

The invention discloses a three-dimensional point cloud semantic segmentation method based on the multi-neighborhood features of a hybrid model. It addresses the poor robustness of three-dimensional point cloud semantic segmentation methods that rely on a single traditional or deep learning model. A clustering algorithm discovers the relationships between neighborhood points in the point cloud data, a deep learning network built to process point cloud data extracts rich point features, and together they achieve accurate and robust semantic segmentation of the three-dimensional point cloud.

Description

Technical Field

The invention belongs to the technical field of three-dimensional point cloud recognition, and particularly relates to a three-dimensional point cloud semantic segmentation method based on the multi-neighborhood features of a hybrid model.

Background

In recent years, the rapid development of Internet of Things applications has driven rising demand for scene understanding services, so the need for high-precision scene recognition and scene semantic segmentation has become increasingly urgent. A stable, accurate and lightweight scene understanding system is an important guarantee for Internet of Things applications such as autonomous driving, robot control, virtual reality (VR) and 3D reconstruction.

Among existing scene understanding techniques, three-dimensional point cloud semantic segmentation is an important research task. Its goal is to distinguish the boundaries of object instances in an environment and assign a semantic label to each point in three-dimensional space, which is crucial for fine-grained scene understanding. Two-dimensional image data can also describe the real world, but it is ambiguous about scene depth, so an intelligent robot relying on it cannot understand a scene the way a human does and cannot acquire prior knowledge such as the size of objects in space. Semantic segmentation of three-dimensional point cloud data is an effective way to solve these problems, and offers advantages such as wide applicability and rich spatial structure information.

Although learning-based models have made great progress in processing image data, point cloud data is irregular and unstructured, so processing it directly poses many challenges. The key to high-precision three-dimensional point cloud semantic segmentation is therefore to process point cloud data effectively and extract important spatial features. At present, common approaches include:

1) Traditional point cloud semantic segmentation methods based on features such as edges, regions or point cloud attributes. For example, edge-detection-based segmentation classifies the point cloud edges of different objects by computing gradient changes in the normal vectors of the point cloud surface, and then segments the point cloud.

2) Point cloud semantic segmentation methods based on deep learning. For example, the PointNet point cloud segmentation network extracts global and single-point features from point cloud data through several multilayer perceptrons (MLPs) connected in series.

3) Point cloud semantic segmentation methods based on a hybrid model. For example, a hybrid model based on K-nearest neighbors and PointNet first screens neighborhood points and then extracts point features.

However, the first approach is highly dependent on prior information about the point cloud segmentation and is sensitive to noise in the point cloud data. This is mainly because traditional methods require manual screening and prior understanding to extract point cloud features, while noise generated during data acquisition affects the stability of the point cloud data and blurs the edges between the point clouds of different objects. The second approach considers only the features of individual points and ignores the spatial geometric relationship between adjacent points, which limits the classification system. This is mainly because, when the point cloud data has an obvious three-dimensional spatial constraint relationship, single-point information cannot express the relationship between points, which seriously degrades the semantic segmentation result.

The invention therefore uses a machine learning method to fully learn the spatial correlation among neighborhood points of the point cloud data, and fuses a deep learning method to adaptively extract multi-neighborhood point cloud features, finally achieving semantic segmentation of the three-dimensional point cloud. For such a hybrid-model, multi-neighborhood approach, selecting a suitable machine learning clustering method to learn the spatial relationship of neighborhood points, and designing an effective deep-learning-based feature extraction and classification network, are the keys to improving segmentation accuracy.

Disclosure of Invention

In view of the above defects in the prior art, the invention provides a three-dimensional point cloud semantic segmentation method based on the multi-neighborhood features of a hybrid model, which solves the poor robustness of methods that rely on a single traditional or deep learning model.

To achieve this purpose, the invention adopts the following technical scheme: a three-dimensional point cloud semantic segmentation method based on the multi-neighborhood features of a hybrid model, comprising the following steps:

S1, collecting point cloud data in three-dimensional space with a lidar, and performing feature extraction on the point cloud data to obtain point cloud global features, single-point features and intermediate features of a network layer;

S2, clustering the point cloud data with a clustering algorithm, storing the point indexes of each cluster subset, and grouping the intermediate features of the network layer by these indexes to obtain intermediate feature cluster subsets;

S3, performing feature mapping on the intermediate feature cluster subsets to obtain the high-dimensional multi-neighborhood feature corresponding to each intermediate feature cluster subset;

S4, concatenating and fusing the point cloud global features, the single-point features and the high-dimensional multi-neighborhood features, and further computing through a third artificial neural network to obtain the semantic classification label of each point.

Further: in step S1, the point cloud data is represented in the seven-dimensional form (x, y, z, intensity, r, g, b), where x, y and z are the coordinates of a point along the x, y and z axes of three-dimensional space; intensity is the lidar reflection intensity; and r, g and b are the red, green and blue components of the point's color information.
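As a minimal illustration of this seven-dimensional representation, a point record and its conversion to an n × 7 input could be sketched as follows. The names `LidarPoint` and `to_feature_rows` and the sample values are hypothetical and do not come from the patent.

```python
from typing import List, NamedTuple


class LidarPoint(NamedTuple):
    """One point in the seven-dimensional form (x, y, z, intensity, r, g, b)."""
    x: float
    y: float
    z: float
    intensity: float  # lidar reflection intensity
    r: float          # red component
    g: float          # green component
    b: float          # blue component


def to_feature_rows(points: List[LidarPoint]) -> List[List[float]]:
    """Flatten the points into an n x 7 list of rows for the network input."""
    return [list(p) for p in points]


# Hypothetical sample values, for illustration only.
cloud = [
    LidarPoint(0.1, 0.2, 0.3, 0.8, 255.0, 0.0, 0.0),
    LidarPoint(1.0, 1.1, 0.9, 0.5, 0.0, 255.0, 0.0),
]
rows = to_feature_rows(cloud)
```

Each row keeps the field order of the tuple, so column 3 is always the intensity value.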

Further: in step S1, feature extraction on the point cloud data is specifically performed as follows:

features of the point cloud data are extracted through a first artificial neural network, which comprises a shared multilayer perceptron and a two-branch structure connected to each other;

the shared multilayer perceptron comprises a first network layer, a second network layer and a third network layer connected in sequence, with scales of 7, 10 and 64 respectively;

the two-branch structure comprises a first branch network and a second branch network, both connected to the shared multilayer perceptron.

The beneficial effects of the above further scheme are: the two branches respectively generate the single-point features and the point cloud global features of the point cloud data.

Further: the first branch network is provided with a first multilayer perceptron;

the first multilayer perceptron comprises a fourth network layer and a fifth network layer connected to each other, with scales of 64 and 128 respectively;

The beneficial effects of the above further scheme are: the first branch network extracts high-dimensional single-point features from the point cloud data features.

The second branch network comprises a second multilayer perceptron and a first max pooling layer connected to each other;

the second multilayer perceptron comprises a sixth network layer and a seventh network layer connected to each other, with scales of 64 and 512 respectively; the first max pooling layer has a scale of n × 1, where n is the total number of points in the point cloud data.

Further: the fourth network layer and the sixth network layer share the same output formula, and their output F is specifically expressed as:

$$F = \mathrm{Relu}\left(x_{1\times i} \cdot W_{j\times i}^{T} + b_{1\times j}\right)$$

where $x_{1\times i}$ is the input point cloud feature, $W_{j\times i}$ is the weight matrix to be trained, $b_{1\times j}$ is the bias matrix, $T$ is the matrix transposition operation, $(i, j)$ is the position index in two-dimensional space, $\cdot$ is the matrix multiplication operation, $+$ is the matrix addition operation, and $\mathrm{Relu}(\cdot)$ is the activation function;
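The layer computation can be sketched in a few lines, assuming the formula has the form F = Relu(x · Wᵀ + b) implied by the symbol definitions. Plain Python lists are used here only to keep the sketch free of dependencies; `dense_relu` and the sample weights are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise max(0, a)."""
    return [max(0.0, a) for a in v]


def dense_relu(x: Vector, W: Matrix, b: Vector) -> Vector:
    """Compute Relu(x . W^T + b) for one point.

    x has length i, W has j rows of length i, and b has length j.
    """
    if len(W) != len(b) or any(len(row) != len(x) for row in W):
        raise ValueError("shape mismatch between x, W and b")
    pre_activation = [
        sum(w * a for w, a in zip(row, x)) + bias
        for row, bias in zip(W, b)
    ]
    return relu(pre_activation)


# Hypothetical layer with i = 3 inputs and j = 2 outputs.
x = [1.0, -2.0, 0.5]
W = [[1.0, 0.0, 2.0],
     [0.5, 1.0, 0.0]]
b = [0.0, 0.5]
F = dense_relu(x, W, b)
```

The second output is negative before activation (-1.0), so the activation function clamps it to zero.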

the expression of the first max pooling layer is specifically:

$$\hat{x}_{j} = \max_{1 \le i \le r} x_{i,j}$$

where $\hat{x}_{j}$ is the calculated output of the first max pooling layer, $x_{i,j}$ is the input of the first max pooling layer, and $r$ is the size of the pooling kernel.

The beneficial effects of the above further scheme are: the first maximum pooling layer may extract point cloud global features in the high-dimensional point cloud data features.
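A pooling kernel of scale n × 1 amounts to taking the maximum of each feature column over all n points. The sketch below assumes that reading; `max_pool_over_points` and the sample values are hypothetical.

```python
from typing import List


def max_pool_over_points(features: List[List[float]]) -> List[float]:
    """Column-wise maximum over an n x d feature matrix (pooling kernel n x 1)."""
    if not features:
        raise ValueError("need at least one point")
    return [max(column) for column in zip(*features)]


# Hypothetical per-point features: n = 3 points, d = 3 channels.
per_point = [
    [0.1, 0.9, 0.3],
    [0.7, 0.2, 0.4],
    [0.5, 0.6, 0.8],
]
global_feature = max_pool_over_points(per_point)
# Reversing the point order does not change the pooled feature.
reordered = max_pool_over_points(per_point[::-1])
```

Because the maximum ignores the order of the points, the pooled vector is the same for any ordering of an unordered point cloud.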

Further: in step S2, the clustering algorithm is a K-means clustering algorithm or a mean shift clustering algorithm;

for the K-means clustering algorithm, the clustering parameter K is set to 10; for the mean shift clustering algorithm, the bandwidth size (Meanshift Radius) is set to 0.2 and the minimum number of points in a mean shift cluster (Meanshift MinPts) is set to 10;

step S2 specifically includes: clustering the point cloud data with the clustering algorithm, and grouping the intermediate features of the network layer by the point indexes in each clustering result to obtain the feature cluster subsets.
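Step S2 with the K-means option can be sketched as below: points are clustered on their coordinates, and the resulting per-point cluster ids are used as indexes to split the intermediate features. This is a plain Lloyd's-iteration sketch under stated assumptions, not the patent's implementation; the function names, the fixed iteration count and the toy data (K = 2 rather than the K = 10 given in the text) are hypothetical.

```python
import random
from typing import Dict, List, Sequence

Point = Sequence[float]


def squared_distance(a: Point, b: Point) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b))


def kmeans_labels(xyz: List[Point], k: int, iterations: int = 20, seed: int = 0) -> List[int]:
    """Lloyd's k-means on point coordinates; returns one cluster id per point."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(xyz, k)]
    labels = [0] * len(xyz)
    for _ in range(iterations):
        # Assign every point to its nearest center.
        labels = [min(range(k), key=lambda c: squared_distance(p, centers[c])) for p in xyz]
        # Move every center to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(xyz, labels) if lab == c]
            if members:  # keep the old center if a cluster empties out
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def group_features_by_cluster(labels: List[int],
                              features: List[List[float]]) -> Dict[int, List[List[float]]]:
    """Use the per-point cluster ids as indexes to split features into cluster subsets."""
    subsets: Dict[int, List[List[float]]] = {}
    for index, label in enumerate(labels):
        subsets.setdefault(label, []).append(features[index])
    return subsets


# Two well-separated toy groups; the intermediate features are placeholders.
xyz = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 5.0, 5.0), (5.1, 5.0, 5.0)]
intermediate = [[float(i)] * 4 for i in range(len(xyz))]
labels = kmeans_labels(xyz, k=2)
subsets = group_features_by_cluster(labels, intermediate)
```

Clustering runs on the coordinates only, while the grouped values are the network's intermediate features, which matches the index-based classification described above.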

Further: step S3 specifically includes:

extracting a high-dimensional neighborhood feature from each feature cluster subset through a second artificial neural network, to obtain the high-dimensional multi-neighborhood features corresponding to the feature cluster subsets;

the second artificial neural network is a multi-branch structure in which every branch has the same structure, comprising a third multilayer perceptron and a second max pooling layer connected to each other;

the third multilayer perceptron comprises an eighth network layer and a ninth network layer with scales of 64 and 512 respectively, and the second max pooling layer has a scale of $n_i \times 1$, where $n_i$ is the total number of points in the i-th feature cluster subset.
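The per-cluster branch can be sketched as a map-then-pool step: every point feature in a subset passes through the branch perceptron, and the results are max-pooled over the n_i points of that subset. For brevity the sketch reuses one callable for all branches, which is a simplification; the text states only that the branches have the same structure. `neighborhood_features` and `toy_mlp` are hypothetical.

```python
from typing import Callable, Dict, List

Vector = List[float]


def neighborhood_features(
    subsets: Dict[int, List[Vector]],
    branch_mlp: Callable[[Vector], Vector],
) -> Dict[int, Vector]:
    """For each cluster subset: map every point feature, then max-pool over its n_i points."""
    pooled: Dict[int, Vector] = {}
    for cluster_id, rows in subsets.items():
        mapped = [branch_mlp(row) for row in rows]               # n_i x d_out
        pooled[cluster_id] = [max(col) for col in zip(*mapped)]  # n_i x 1 pooling
    return pooled


def toy_mlp(v: Vector) -> Vector:
    """Hypothetical stand-in for the third multilayer perceptron (64 -> 512 in the text)."""
    return [max(0.0, 2.0 * a) for a in v] + [max(0.0, sum(v))]


# Placeholder cluster subsets with 2-dimensional features.
subsets = {0: [[1.0, -1.0], [0.5, 2.0]], 1: [[-3.0, 0.25]]}
features = neighborhood_features(subsets, toy_mlp)
```

Each cluster yields exactly one feature vector regardless of how many points it contains.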

Further: step S4 specifically includes:

concatenating the point cloud global feature with the high-dimensional multi-neighborhood feature to obtain point cloud spatial feature information of size 1024 (512 + 512), replicating this spatial feature information for every point and concatenating it with the single-point features (size 128) to obtain the point cloud features, and then performing high-dimensional mapping on the point cloud features through a third artificial neural network to obtain the semantic classification label of each point;

the scale of the point cloud features is n × 1152.
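The size bookkeeping of this fusion (512 + 512 = 1024, then 1024 + 128 = 1152 per point) can be checked with a short sketch. It assumes that each point is paired with the neighborhood feature of its own cluster, which the text does not state explicitly; `fuse_features` and the placeholder values are hypothetical.

```python
from typing import Dict, List

Vector = List[float]


def fuse_features(
    single_point: List[Vector],       # n x 128 in the text
    global_feature: Vector,           # 512 in the text
    neighborhood: Dict[int, Vector],  # 512 per cluster in the text
    labels: List[int],                # cluster id of each point
) -> List[Vector]:
    """Concatenate [global | own-cluster neighborhood | single-point] for every point."""
    fused = []
    for point_feature, cluster_id in zip(single_point, labels):
        # Spatial feature information (size 1024), replicated for each point.
        spatial = global_feature + neighborhood[cluster_id]
        fused.append(spatial + point_feature)
    return fused


# Sizes from the text, filled with placeholder values.
n = 3
labels = [0, 1, 0]
single_point = [[0.0] * 128 for _ in range(n)]
global_feature = [1.0] * 512
neighborhood = {0: [2.0] * 512, 1: [3.0] * 512}
fused = fuse_features(single_point, global_feature, neighborhood, labels)
```

The result has n rows of width 1152, matching the stated scale of the point cloud features.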

The beneficial effects of the above further scheme are: the third artificial neural network performs high-dimensional feature mapping and predicts the semantic labels.

Further: the third artificial neural network comprises a fourth multilayer perceptron and a normalized exponential function layer which are connected with each other;

the fourth multilayer perceptron comprises a tenth network layer, an eleventh network layer and a twelfth network layer which are connected in sequence; the scales of the tenth network layer, the eleventh network layer and the twelfth network layer are 1152, 256 and m respectively, and m is the number of classes of semantic segmentation;

the output p of the normalized exponential function layer is specifically expressed as:

$$p = \frac{e^{v_c}}{\sum_{n=1}^{m} e^{v_n}}$$

where $m$ is the number of semantic segmentation classes of the third artificial neural network, $v_n$ is the output value of the twelfth network layer for the n-th class, $n$ is the class ordinal, $v_c$ is the output value of the twelfth network layer for the c-th class, $c$ is the class currently being calculated, and the output $p$ lies in the range (0, 1).
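The normalized exponential function is the standard softmax, p_c = exp(v_c) / Σ_n exp(v_n). A minimal sketch follows; subtracting the maximum before exponentiation is a common numerical safeguard added here, not something the patent specifies, and the sample scores are hypothetical.

```python
import math
from typing import List


def softmax(v: List[float]) -> List[float]:
    """p_c = exp(v_c) / sum_n exp(v_n), shifted by max(v) for numerical stability."""
    shift = max(v)
    exps = [math.exp(a - shift) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical twelfth-layer outputs for one point with m = 3 classes.
scores = [2.0, 1.0, 0.1]
p = softmax(scores)
# The semantic label of the point is the class with the highest probability.
predicted_class = max(range(len(p)), key=p.__getitem__)
```

The probabilities sum to 1 and each lies strictly between 0 and 1 for finite scores, consistent with the stated range.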

The invention has the following beneficial effects:

(1) Taking three-dimensional point cloud data as input, the invention achieves semantic segmentation of the three-dimensional point cloud through a method based on the multi-neighborhood features of a hybrid model.

(2) The method uses a clustering algorithm to mine the feature relationships between neighborhood points in the three-dimensional point cloud data, and a deep learning network model to extract multi-neighborhood features of the point cloud data. By fusing the extracted single-point, global and multi-neighborhood features, the hybrid model makes joint use of multi-dimensional point cloud features and obtains a high-precision three-dimensional point cloud semantic segmentation result.

(3) The method directly processes unordered three-dimensional data without manual extraction of point cloud features in advance. A semantic segmentation network based on a hybrid model that fuses machine learning and deep learning algorithms extracts and uses point cloud features of different dimensions, so the model mines more hidden spatial features and obtains a more accurate segmentation result, yielding an autonomous, highly robust three-dimensional point cloud semantic segmentation prediction model.

Drawings

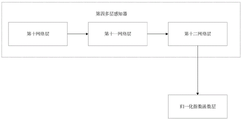

FIG. 1 is a flow chart of a three-dimensional point cloud semantic segmentation method based on a hybrid model multi-neighborhood feature;

FIG. 2 is a block diagram of a first artificial neural network architecture of the present invention;

FIG. 3 is a block diagram of a second artificial neural network according to the present invention;

FIG. 4 is a block diagram of a third artificial neural network according to the present invention.

Detailed Description

The following description of embodiments is provided to help those skilled in the art understand the invention. It should be understood, however, that the invention is not limited to the scope of these embodiments. To those skilled in the art, various changes are apparent as long as they remain within the spirit and scope of the invention as defined in the appended claims, and everything created using the inventive concept is protected.

Example 1:

As shown in FIG. 1, in an embodiment of the present invention, a three-dimensional point cloud semantic segmentation method based on the multi-neighborhood features of a hybrid model includes the following steps:

S1, collecting point cloud data in three-dimensional space with a lidar, and performing feature extraction on the point cloud data to obtain point cloud global features, single-point features and intermediate features of a network layer;

S2, clustering the point cloud data with a clustering algorithm, storing the point indexes of each cluster subset, and grouping the intermediate features of the network layer by these indexes to obtain intermediate feature cluster subsets;

S3, performing feature mapping on the intermediate feature cluster subsets to obtain the high-dimensional multi-neighborhood feature corresponding to each intermediate feature cluster subset;

S4, concatenating and fusing the point cloud global features, the single-point features and the high-dimensional multi-neighborhood features, and further computing through a third artificial neural network to obtain the semantic classification label of each point.

In step S1, the point cloud data is represented in the seven-dimensional form (x, y, z, intensity, r, g, b), where x, y and z are the coordinates of a point along the x, y and z axes of three-dimensional space; intensity is the lidar reflection intensity; and r, g and b are the red, green and blue components of the point's color information.

In step S1, feature extraction on the point cloud data is specifically performed as follows:

as shown in FIG. 2, features of the point cloud data are extracted through a first artificial neural network, which comprises a shared multilayer perceptron and a two-branch structure connected to each other;

the shared multilayer perceptron comprises a first network layer, a second network layer and a third network layer connected in sequence, with scales of 7, 10 and 64 respectively;

the two-branch structure comprises a first branch network and a second branch network, both connected to the shared multilayer perceptron.

In this embodiment, the first artificial neural network, which extracts the single-point features and the point cloud global features, comprises a shared multilayer perceptron and a two-branch structure. The output of the shared multilayer perceptron is the intermediate feature of the network layer, and the two branches respectively generate the single-point features and the point cloud global features of the point cloud data.

In the present embodiment, the dimension of the intermediate feature of the network layer output by the third network layer is (n × 64).

The first branch network is provided with a first multilayer perceptron;

the first multilayer perceptron comprises a fourth network layer and a fifth network layer connected to each other, with scales of 64 and 128 respectively;

the second branch network comprises a second multilayer perceptron and a first max pooling layer connected to each other;

the second multilayer perceptron comprises a sixth network layer and a seventh network layer connected to each other, with scales of 64 and 512 respectively; the first max pooling layer has a scale of n × 1, where n is the total number of points in the point cloud data.

In this embodiment, the branch network that generates the single-point features of the point cloud data comprises the first multilayer perceptron, which has two network layers of sizes 64 and 128, so the resulting single-point feature has size (n × 128). The branch network that generates the global features comprises the second multilayer perceptron and the first max pooling layer connected in series; the second multilayer perceptron has two network layers of sizes 64 and 512, and the output of its last network layer has dimension (n × 512); the pooling size of the max pooling layer is n × 1, so the resulting global feature has size 512.
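The feature sizes stated in this embodiment can be traced with an untrained forward pass. The sketch below assumes that each layer "scale" is its output width and that the input is the 7-dimensional point; weights are random placeholders, and `make_layer`, `build_mlp` and `run_mlp` are hypothetical helper names.

```python
import random
from typing import List

Vector = List[float]


def make_layer(n_in: int, n_out: int, rng: random.Random):
    """Random (untrained) weights and zero biases for one fully connected layer."""
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out


def build_mlp(n_in: int, widths: List[int], rng: random.Random):
    """Stack fully connected layers whose output widths are given by `widths`."""
    layers = []
    for n_out in widths:
        layers.append(make_layer(n_in, n_out, rng))
        n_in = n_out
    return layers


def run_mlp(x: Vector, layers) -> Vector:
    """Apply Relu(x . W^T + b) layer by layer to one point."""
    for W, b in layers:
        x = [max(0.0, sum(w * a for w, a in zip(row, x)) + bias)
             for row, bias in zip(W, b)]
    return x


rng = random.Random(0)
shared = build_mlp(7, [7, 10, 64], rng)         # first to third network layers
single_branch = build_mlp(64, [64, 128], rng)   # fourth and fifth network layers
global_branch = build_mlp(64, [64, 512], rng)   # sixth and seventh network layers

points = [[rng.random() for _ in range(7)] for _ in range(5)]      # n = 5 placeholder points
intermediate = [run_mlp(p, shared) for p in points]                # n x 64
single_point = [run_mlp(f, single_branch) for f in intermediate]   # n x 128
per_point_512 = [run_mlp(f, global_branch) for f in intermediate]  # n x 512
global_feature = [max(col) for col in zip(*per_point_512)]         # n x 1 pooling -> 512
```

The same shared-perceptron output feeds both branches, and it is also the intermediate feature that step S2 later groups by cluster.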

The fourth network layer and the sixth network layer share the same output formula, and their output F is specifically expressed as:

$$F = \mathrm{Relu}\left(x_{1\times i} \cdot W_{j\times i}^{T} + b_{1\times j}\right)$$

where $x_{1\times i}$ is the input point cloud feature, $W_{j\times i}$ is the weight matrix to be trained, $b_{1\times j}$ is the bias matrix, $T$ is the matrix transposition operation, $(i, j)$ is the position index in two-dimensional space, $\cdot$ is the matrix multiplication operation, $+$ is the matrix addition operation, and $\mathrm{Relu}(\cdot)$ is the activation function, which provides the nonlinear mapping.

The expression of the first max pooling layer is specifically:

$$\hat{x}_{j} = \max_{1 \le i \le r} x_{i,j}$$

where $\hat{x}_{j}$ is the calculated output of the first max pooling layer, $x_{i,j}$ is the input of the first max pooling layer, and $r$ is the size of the pooling kernel.

In step S2, the clustering algorithm is a K-means clustering algorithm or a mean shift clustering algorithm;

for the K-means clustering algorithm, the clustering parameter K is set to 10; for the mean shift clustering algorithm, the bandwidth size (Meanshift Radius) is set to 0.2 and the minimum number of points in a mean shift cluster (Meanshift MinPts) is set to 10;

step S2 specifically includes: clustering the point cloud data with the clustering algorithm, and grouping the intermediate features of the network layer by the point indexes in each clustering result to obtain the intermediate feature cluster subsets of the network layer.
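For the mean shift option, a flat-kernel sketch with the stated radius 0.2 and MinPts 10 is given below. How the patent applies MinPts is not specified; marking clusters with fewer than MinPts members as -1 is an assumption, as are the fixed iteration count, the mode-merging rule and the toy data.

```python
from collections import Counter
from typing import List, Sequence

Point = Sequence[float]


def dist2(a: Point, b: Point) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b))


def mean_shift_labels(xyz: List[Point], radius: float = 0.2,
                      min_pts: int = 10, iterations: int = 30) -> List[int]:
    """Flat-kernel mean shift on point coordinates.

    Returns one cluster id per point; clusters with fewer than min_pts
    members are marked -1 (this use of MinPts is an assumption).
    """
    r2 = radius * radius
    modes = [list(p) for p in xyz]
    for _ in range(iterations):
        shifted = []
        for m in modes:
            near = [p for p in xyz if dist2(p, m) <= r2]
            # Move each mode to the mean of the points inside its window.
            shifted.append([sum(c) / len(near) for c in zip(*near)] if near else m)
        modes = shifted

    centers: List[List[float]] = []
    labels: List[int] = []
    for m in modes:
        # Modes that converged within one radius of a known center share its id.
        match = next((i for i, c in enumerate(centers) if dist2(m, c) <= r2), None)
        if match is None:
            centers.append(m)
            match = len(centers) - 1
        labels.append(match)

    sizes = Counter(labels)
    return [lab if sizes[lab] >= min_pts else -1 for lab in labels]


# Two compact toy groups of 12 points each, plus one isolated point.
blob_a = [(0.01 * i, 0.0, 0.0) for i in range(12)]
blob_b = [(1.0 + 0.01 * i, 1.0, 1.0) for i in range(12)]
outlier = [(5.0, 5.0, 5.0)]
labels = mean_shift_labels(blob_a + blob_b + outlier)
```

Unlike K-means, the number of clusters is not fixed in advance; it follows from the radius and the data.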

Step S3 specifically includes:

extracting a high-dimensional neighborhood feature from each intermediate feature cluster subset of the network layer through a second artificial neural network, to obtain the high-dimensional multi-neighborhood features corresponding to the intermediate feature cluster subsets;

as shown in FIG. 3, the second artificial neural network is a multi-branch structure in which every branch has the same structure, comprising a third multilayer perceptron and a second max pooling layer connected to each other;

the third multilayer perceptron comprises an eighth network layer and a ninth network layer with scales of 64 and 512 respectively, and the second max pooling layer has a scale of $n_i \times 1$, where $n_i$ is the total number of points in the i-th feature cluster subset.

In this embodiment, each branch of the second artificial neural network processes one intermediate feature cluster subset and extracts its high-dimensional neighborhood feature; the high-dimensional neighborhood feature obtained by each branch has size 512.

Step S4 specifically includes:

concatenating the point cloud global feature with the high-dimensional multi-neighborhood feature to obtain point cloud spatial feature information of size 1024 (512 + 512), replicating this spatial feature information for every point and concatenating it with the single-point features (size 128) to obtain the point cloud features, and then performing high-dimensional mapping on the point cloud features through a third artificial neural network to obtain the semantic classification label of each point;

the scale of the point cloud features is n × 1152.

As shown in FIG. 4, the third artificial neural network includes a fourth multilayer perceptron and a normalized exponential function layer connected to each other;

the fourth multilayer perceptron comprises a tenth network layer, an eleventh network layer and a twelfth network layer which are connected in sequence; the scales of the tenth network layer, the eleventh network layer and the twelfth network layer are 1152, 256 and m respectively, and m is the number of classes of semantic segmentation;

the output p of the normalized exponential function layer is specifically expressed as:

$$p = \frac{e^{v_c}}{\sum_{n=1}^{m} e^{v_n}}$$

where $m$ is the number of semantic segmentation classes of the third artificial neural network, $v_n$ is the output value of the twelfth network layer for the n-th class, $n$ is the class ordinal, $v_c$ is the output value of the twelfth network layer for the c-th class, $c$ is the class currently being calculated, and the output $p$ lies in the range (0, 1).

In an embodiment of the invention, the third artificial neural network performs high-dimensional feature mapping and predicts the semantic labels.

The method is implemented as follows. Point cloud data in three-dimensional space acquired by a lidar is obtained, each point is processed into the 7-dimensional format, and the data is input into the hybrid model. The first artificial neural network extracts single-point features and point cloud global features from the input point cloud data, and the intermediate features of the network layer are stored for use with the subsequent clustering algorithm. A machine learning clustering algorithm then clusters the three-dimensional point cloud data based on the spatial information of the points, and the intermediate features of the network layer are grouped by the point indexes in each clustering result. Next, the second artificial neural network extracts the corresponding high-dimensional neighborhood feature from each group of clustered intermediate features. Finally, the global features, the single-point features and the multi-neighborhood features extracted from the intermediate feature cluster subsets are concatenated and fused, and the third artificial neural network computes the semantic classification label of each point, achieving semantic segmentation of the three-dimensional point cloud. In a specific implementation, labeled data can be used to train the network parameters of the system.

The beneficial effects of the invention are as follows: taking three-dimensional point cloud data as input, the three-dimensional point cloud semantic segmentation method based on hybrid-model multi-neighborhood features realizes semantic segmentation of the three-dimensional point cloud.

The method also uses a clustering algorithm to mine the feature relationships among neighborhood points in the three-dimensional point cloud data and uses a deep learning network model to extract multi-neighborhood features of the point cloud data. By extracting the single-point, global and multi-neighborhood features of the point cloud, the hybrid model fuses and exploits point cloud features of multiple dimensions, finally obtaining a high-precision three-dimensional point cloud semantic segmentation result.

The method can directly process unordered three-dimensional data without manual extraction of point cloud features in advance. A semantic segmentation network based on a hybrid model that fuses machine learning and deep learning algorithms extracts and uses point cloud features of different dimensions, so that the model mines more hidden spatial features and obtains a more accurate point cloud semantic segmentation result, realizing an autonomous, highly robust three-dimensional point cloud semantic segmentation prediction model.

In the description of the present invention, it is to be understood that the terms "center", "thickness", "upper", "lower", "horizontal", "top", "bottom", "inner", "outer", "radial", and the like, indicate orientations and positional relationships based on the orientations and positional relationships shown in the drawings, and are used merely for convenience in describing the present invention and for simplicity in description, and do not indicate or imply that the referenced devices or elements must have a particular orientation, be constructed and operated in a particular orientation, and thus, are not to be construed as limiting the present invention. Furthermore, the terms "first," "second," and "third" are used for descriptive purposes only and are not to be construed as indicating or implying relative importance or an implicit indication of the number of technical features. Thus, features defined as "first", "second", "third" may explicitly or implicitly include one or more of the features.

Claims (9)

1. A three-dimensional point cloud semantic segmentation method based on multi-neighborhood features of a hybrid model, characterized by comprising the following steps:

S1, collecting point cloud data in three-dimensional space through a laser radar, and performing feature extraction on the point cloud data to obtain point cloud global features, single-point features and intermediate features of a network layer;

S2, clustering the point cloud data through a clustering algorithm, storing the point indexes in each cluster point subset, and obtaining intermediate feature cluster subsets of the network layer through index classification;

S3, performing feature mapping on the intermediate feature cluster subsets of the network layer to obtain the high-dimensional multi-neighborhood features corresponding to each intermediate feature cluster subset;

S4, splicing and fusing the point cloud global features, the single-point features and the high-dimensional multi-neighborhood features, and further calculating through a third artificial neural network to obtain the semantic classification label of each point.

2. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood feature according to claim 1, wherein in the step S1, the point cloud data is represented in a seven-dimensional form (x, y, z, intensity, r, g, b), where x, y and z are the spatial coordinates of a point along the x axis, y axis and z axis of three-dimensional space, respectively; intensity is the reflection intensity value of the laser radar; and r, g and b are the red, green and blue components of the color information of the point cloud data, respectively.
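The seven-dimensional representation can be illustrated with a small NumPy sketch. The sample values, the helper name `to_seven_dim`, and the scaling of colors to [0, 1] are assumptions made for the example; the claim only fixes the order of the seven fields.

```python
import numpy as np

# Hypothetical raw attributes for n = 3 points.
xyz = np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 0.5], [-1.0, 0.3, 2.0]])
intensity = np.array([0.12, 0.80, 0.45])
rgb = np.array([[255, 0, 0], [0, 255, 0], [10, 20, 30]], dtype=np.uint8)

def to_seven_dim(xyz, intensity, rgb):
    """Stack per-point attributes into an (n, 7) array: x, y, z, intensity, r, g, b."""
    # Scaling colors to [0, 1] is an assumption, not something the claim specifies.
    colors = rgb.astype(np.float32) / 255.0
    return np.concatenate(
        [xyz.astype(np.float32), intensity.astype(np.float32)[:, None], colors],
        axis=1,
    )

points = to_seven_dim(xyz, intensity, rgb)  # shape (3, 7)
```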

3. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood feature according to claim 1, wherein in the step S1, the method for extracting the features of the point cloud data specifically comprises:

extracting the features of the point cloud data through a first artificial neural network; wherein the first artificial neural network comprises a shared multilayer perceptron and a two-branch structure which are connected with each other;

the shared multilayer perceptron comprises a first network layer, a second network layer and a third network layer which are sequentially connected, wherein the scales of the first network layer, the second network layer and the third network layer are respectively 7, 10 and 64;

the two-branch structure comprises a first branch network and a second branch network, and the first branch network and the second branch network are both connected with the shared multilayer perceptron.

4. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood characteristics according to claim 3, characterized in that the first branch network is provided with a first multilayer perceptron;

the first multilayer perceptron comprises a fourth network layer and a fifth network layer which are connected with each other, and the dimensions of the fourth network layer and the fifth network layer are 64 and 128 respectively;

the second branch network comprises a second multilayer perceptron and a first maximum pooling layer which are connected with each other;

the second multilayer perceptron comprises a sixth network layer and a seventh network layer which are connected with each other, and the scales of the sixth network layer and the seventh network layer are 64 and 512 respectively; the first maximum pooling layer has a scale of n × 1, where n is the total number of points in the point cloud data.

5. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood feature of claim 4, wherein the output formulas of the fourth network layer and the sixth network layer are the same, and the output F of the fourth network layer and the sixth network layer is specifically expressed as:

$$F = \mathrm{Relu}\left(x_{1\times i} \cdot W_{j\times i}^{T} + b_{1\times j}\right)$$

in the formula, $x_{1\times i}$ is the input point cloud feature, $W_{j\times i}$ is the weight matrix to be trained, $b_{1\times j}$ is the offset matrix, T is the matrix transposition operation, (i, j) are the position numbers in the two-dimensional space, · is the matrix multiplication operation, + is the matrix addition operation, and Relu(·) is the activation function;

the expression of the first maximum pooling layer is specifically:
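A minimal NumPy sketch of a shared layer followed by max pooling is given below for illustration. It assumes that the pooling takes the column-wise maximum over the n points, and it uses random placeholder weights rather than trained parameters; the function names are hypothetical.

```python
import numpy as np

def relu(a):
    return np.maximum(a, 0.0)

def shared_layer(x, W, b):
    """Apply F = Relu(x . W^T + b) to every point with the same weights.

    x: (n, i) point features, W: (j, i) weights, b: (1, j) offsets -> (n, j).
    """
    return relu(x @ W.T + b)

def max_pool_points(f):
    """Column-wise maximum over the n points: (n, j) -> (1, j)."""
    return f.max(axis=0, keepdims=True)

rng = np.random.default_rng(0)
n = 5
x = rng.normal(size=(n, 64))           # hypothetical 64-d features per point
W = rng.normal(size=(512, 64)) * 0.1   # untrained placeholder weights
b = np.zeros((1, 512))

F = shared_layer(x, W, b)   # (5, 512) per-point features
g = max_pool_points(F)      # (1, 512) feature of the whole point set
```

Because the maximum is taken over all points, `g` does not depend on the order in which the points are listed, which suits unordered point cloud input.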

6. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood characteristics according to claim 1, wherein in the step S2, the clustering algorithm is a K-means clustering algorithm or a mean shift clustering algorithm;

the clustering parameter K of the K-means clustering algorithm is set to 10; for the mean shift clustering algorithm, the bandwidth size (Meanshift Radius) is set to 0.2 and the minimum number of points in a mean shift cluster (Meanshift MinPts) is set to 10;

the step S2 specifically includes: clustering the point cloud data through the clustering algorithm, and classifying the intermediate features of the network layer by indexing the point numbers in each clustering result, to obtain the intermediate feature cluster subsets of the network layer.
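Step S2 can be sketched as follows, assuming K-means with K = 10. The plain Lloyd's iteration below is a stand-in for whatever K-means implementation is actually used, and the random coordinates and 64-dimensional intermediate features are hypothetical inputs.

```python
import numpy as np

def kmeans(xyz, k, iters=20, seed=0):
    """Plain Lloyd's k-means on (n, 3) coordinates; returns an (n,) array of cluster ids."""
    rng = np.random.default_rng(seed)
    centers = xyz[rng.choice(len(xyz), size=k, replace=False)]
    for _ in range(iters):
        # Squared distance from every point to every center: (n, k).
        d2 = ((xyz[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        assign = d2.argmin(axis=1)
        for c in range(k):
            members = xyz[assign == c]
            if len(members) > 0:  # keep the old center if a cluster empties
                centers[c] = members.mean(axis=0)
    return assign

rng = np.random.default_rng(1)
n, K = 200, 10
xyz = rng.uniform(size=(n, 3))          # spatial coordinates only
features = rng.normal(size=(n, 64))     # hypothetical stored intermediate features

assign = kmeans(xyz, K)
# Store the point indexes of each cluster, then use them to split the features.
index_subsets = [np.flatnonzero(assign == c) for c in range(K)]
feature_subsets = [features[idx] for idx in index_subsets]
```

Clustering runs on the coordinates, while the stored indexes are what carry the grouping over to the intermediate features.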

7. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood feature of claim 1, wherein the step S3 specifically comprises:

extracting high-dimensional neighborhood features from each intermediate feature cluster subset of the network layer through a second artificial neural network to obtain the high-dimensional multi-neighborhood features corresponding to each intermediate feature cluster subset;

the second artificial neural network is specifically a multi-branch structure, and each branch structure in the multi-branch structure is the same and comprises a third multilayer perceptron and a second maximum pooling layer which are connected with each other;

the third multilayer perceptron comprises an eighth network layer and a ninth network layer, wherein the scales of the eighth network layer and the ninth network layer are 64 and 512 respectively, and the second maximum pooling layer has a scale of $n_i \times 1$, where $n_i$ is the total number of points in the i-th feature cluster subset.
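One branch per cluster subset can be sketched as below. The sketch assumes that the stated scales are layer output widths, that the inputs are 64-dimensional, and that each branch has its own parameters; the weights are random placeholders and the helper names are hypothetical.

```python
import numpy as np

def mlp_branch(f, params):
    """Two shared layers followed by max pooling over the n_i points: (n_i, d) -> (512,)."""
    (W8, b8), (W9, b9) = params
    h = np.maximum(f @ W8.T + b8, 0.0)   # eighth network layer, width 64
    h = np.maximum(h @ W9.T + b9, 0.0)   # ninth network layer, width 512
    return h.max(axis=0)                 # second max pooling layer over n_i points

rng = np.random.default_rng(0)

def init(out_dim, in_dim):
    """Random placeholder weights and zero offsets for one layer."""
    return rng.normal(size=(out_dim, in_dim)) * 0.1, np.zeros(out_dim)

# Hypothetical cluster subsets with different sizes n_i.
subsets = [rng.normal(size=(n_i, 64)) for n_i in (30, 7, 112)]
# The branches share the same structure; separate weights per branch are an assumption.
branches = [(init(64, 64), init(512, 64)) for _ in subsets]

neighborhood = np.stack([mlp_branch(f, p) for f, p in zip(subsets, branches)])
```

Each subset, whatever its size, is reduced to a single 512-dimensional neighborhood feature.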

8. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood feature of claim 1, wherein the step S4 specifically comprises:

splicing the point cloud global features and the high-dimensional multi-neighborhood features to obtain point cloud spatial feature information of size 1024; copying the point cloud spatial feature information and splicing it with the single-point features to obtain point cloud features; and then performing high-dimensional mapping on the point cloud features through the third artificial neural network to obtain the semantic classification label of each point;

the scale of the point cloud features is n × 1152.
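The sizes in this claim are consistent with the layer widths given in claims 4 and 7: 512 (global) + 512 (neighborhood) = 1024, and 1024 + 128 (single-point) = 1152. The sketch below checks this arithmetic in NumPy. How the per-cluster features are matched to points is not stated in the claim, so pairing each point with the feature of its own cluster is an assumption, and all values are random placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)
n, K = 6, 3
single_point = rng.normal(size=(n, 128))   # first branch output, width 128
global_feat = rng.normal(size=(512,))      # second branch output after max pooling
neighborhood = rng.normal(size=(K, 512))   # one 512-d feature per cluster
assign = np.array([0, 0, 1, 2, 2, 1])      # hypothetical cluster id of each point

# Assumption: every point is paired with the neighborhood feature of its own cluster.
per_point_neighborhood = neighborhood[assign]        # (n, 512)
per_point_global = np.tile(global_feat, (n, 1))      # global feature copied to all n points
spatial = np.concatenate([per_point_global, per_point_neighborhood], axis=1)  # (n, 1024)
fused = np.concatenate([spatial, single_point], axis=1)                       # (n, 1152)
```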

9. The three-dimensional point cloud semantic segmentation method based on the hybrid model multi-neighborhood feature according to claim 8, wherein the third artificial neural network comprises a fourth multilayer perceptron and a normalized exponential function layer which are connected with each other;

the fourth multilayer perceptron comprises a tenth network layer, an eleventh network layer and a twelfth network layer which are connected in sequence; the scales of the tenth network layer, the eleventh network layer and the twelfth network layer are 1152, 256 and m respectively, and m is the number of classes of semantic segmentation;

the output p of the normalized exponential function layer is specifically expressed as:

$$p = \frac{e^{v_c}}{\sum_{n=1}^{m} e^{v_n}}$$

where m is the number of semantic segmentation classes of the third artificial neural network; $v_n$ is the output value of the twelfth network layer for the n-th class, with n the class ordinal; $v_c$ is the output value of the twelfth network layer for the c-th class, with c the class currently being calculated; and the output p lies in the range (0, 1).

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202111486128.XA CN114241226A (en) | 2021-12-07 | 2021-12-07 | Three-dimensional point cloud semantic segmentation method based on multi-neighborhood characteristics of hybrid model |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN114241226A (en) | 2022-03-25 |

Family

ID=80753691

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202111486128.XA (Pending), CN114241226A (en) | 2021-12-07 | 2021-12-07 | Three-dimensional point cloud semantic segmentation method based on multi-neighborhood characteristics of hybrid model |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN114241226A (en) |

Cited By (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114926649A (en) * | 2022-05-31 | 2022-08-19 | 中国第一汽车股份有限公司 | Data processing method, device and computer readable storage medium |
| CN116168046A (en) * | 2023-04-26 | 2023-05-26 | 山东省凯麟环保设备股份有限公司 | 3D point cloud semantic segmentation method, system, medium and device under complex environment |
| CN116958553A (en) * | 2023-07-27 | 2023-10-27 | 石河子大学 | Lightweight plant point cloud segmentation method based on non-parametric attention and point-level convolution |
| CN117649530A (en) * | 2024-01-30 | 2024-03-05 | 武汉理工大学 | Point cloud feature extraction method, system and equipment based on semantic level topological structure |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN110660062A (en) * | 2019-08-31 | 2020-01-07 | 南京理工大学 | Point cloud instance segmentation method and system based on PointNet |
| CN111489358A (en) * | 2020-03-18 | 2020-08-04 | 华中科技大学 | Three-dimensional point cloud semantic segmentation method based on deep learning |
| CN112907602A (en) * | 2021-01-28 | 2021-06-04 | 中北大学 | Three-dimensional scene point cloud segmentation method based on improved K-nearest neighbor algorithm |
| US20210350183A1 (en) * | 2019-02-25 | 2021-11-11 | Tencent Technology (Shenzhen) Company Limited | Point cloud segmentation method, computer-readable storage medium, and computer device |

2021-12-07: CN application CN202111486128.XA filed, published as CN114241226A (en); status: active, Pending

Non-Patent Citations (2)

| Title |
|---|
| RUJUN SONG et al.: "HyPNet: Hybrid Multi-features Network for Point Cloud Semantic Segmentation" * |
| 牛辰庚; 刘玉杰; 李宗民; 李华: "Three-dimensional object recognition and model segmentation method based on point cloud data" * |

Cited By (7)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114926649A (en) * | 2022-05-31 | 2022-08-19 | 中国第一汽车股份有限公司 | Data processing method, device and computer readable storage medium |
| CN116168046A (en) * | 2023-04-26 | 2023-05-26 | 山东省凯麟环保设备股份有限公司 | 3D point cloud semantic segmentation method, system, medium and device under complex environment |
| CN116168046B (en) * | 2023-04-26 | 2023-08-25 | 山东省凯麟环保设备股份有限公司 | 3D point cloud semantic segmentation method, system, medium and device under complex environment |
| CN116958553A (en) * | 2023-07-27 | 2023-10-27 | 石河子大学 | Lightweight plant point cloud segmentation method based on non-parametric attention and point-level convolution |
| CN116958553B (en) * | 2023-07-27 | 2024-04-16 | 石河子大学 | Lightweight plant point cloud segmentation method based on non-parametric attention and point-level convolution |
| CN117649530A (en) * | 2024-01-30 | 2024-03-05 | 武汉理工大学 | Point cloud feature extraction method, system and equipment based on semantic level topological structure |
| CN117649530B (en) * | 2024-01-30 | 2024-04-23 | 武汉理工大学 | Point cloud feature extraction method, system and equipment based on semantic level topological structure |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN111368896B (en) | | Hyperspectral remote sensing image classification method based on dense residual three-dimensional convolutional neural network |
| CN111275688B (en) | | Small target detection method based on context feature fusion screening of attention mechanism |
| CN114241226A (en) | | Three-dimensional point cloud semantic segmentation method based on multi-neighborhood characteristics of hybrid model |
| CN106250812B (en) | | A kind of model recognizing method based on quick R-CNN deep neural network |
| CN109559320A (en) | | Realize that vision SLAM semanteme builds the method and system of figure function based on empty convolution deep neural network |
| CN112907602B (en) | | Three-dimensional scene point cloud segmentation method based on improved K-nearest neighbor algorithm |
| CN108648233A (en) | | A kind of target identification based on deep learning and crawl localization method |
| CN112347970B (en) | | Remote sensing image ground object identification method based on graph convolution neural network |
| CN110046671A (en) | | A kind of file classification method based on capsule network |
| CN109190635A (en) | | Target tracking method, device and electronic equipment based on classification CNN |
| CN114821014B (en) | | Multi-mode and countermeasure learning-based multi-task target detection and identification method and device |
| US20190279011A1 (en) | | Data anonymization using neural networks |
| CN113743417A (en) | | Semantic segmentation method and semantic segmentation device |
| CN108509567B (en) | | Method and device for building digital culture content library |
| CN115965819A (en) | | Lightweight pest identification method based on Transformer structure |
| CN114187506B (en) | | Remote sensing image scene classification method of viewpoint-aware dynamic routing capsule network |
| CN112381730B (en) | | Remote sensing image data amplification method |
| CN114120270A (en) | | Point cloud target detection method based on attention and sampling learning |
| CN117456480B (en) | | Light vehicle re-identification method based on multi-source information fusion |
| CN113313091B (en) | | Density estimation method based on multiple attention and topological constraints under warehouse logistics |
| CN114708321B (en) | | Semantic-based camera pose estimation method and system |
| CN115830322A (en) | | Building semantic segmentation label expansion method based on weak supervision network |
| CN114283289A (en) | | Image classification method based on multi-model fusion |
| CN114821258B (en) | | Class activation mapping method and device based on feature map fusion |
| Briouya et al. | | Exploration of image and 3D data segmentation methods: an exhaustive survey |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | RJ01 | Rejection of invention patent application after publication | Application publication date: 20220325 |