CN113496148A - Multi-source data fusion method and system - Google Patents


- Publication number: CN113496148A (application CN202010197890.5A)

- Authority: CN (China)

- Prior art keywords: information sources, data sets, neural network, multiple information, image data


- Legal status: Pending (the status is an assumption by Google and is not a legal conclusion)


Classifications

- G06F18/214: Generating training patterns; bootstrap methods, e.g. bagging or boosting (under G06F18/00, Pattern recognition)

- G06F18/251: Fusion techniques of input or preprocessed data (under G06F18/00, Pattern recognition)


Abstract

The invention discloses a multi-source data fusion method and system. The method comprises: collecting image data sets of the same scene or the same target from multiple information sources, preprocessing the image data sets of the multiple information sources, and extracting their image spectral features; inputting the image spectral feature extraction results of each information source into the corresponding pre-trained neural network model to obtain the corresponding classification results; and fusing the corresponding classification results to obtain a final classification and recognition result. The scheme of the invention uses deep learning to select the features of multi-source remote sensing images automatically, so manual feature selection is not needed; this facilitates the engineering application of multi-source remote sensing image fusion and achieves excellent results in that field.

Description

[ technical field ]

The invention relates to computer application technology, and in particular to a multi-source data fusion method and system.

[ background of the invention ]

Global comprehensive observation data come from many sources, are of many types and scales, have unclear semantics, and are huge in volume, so multi-source data fusion technology has attracted wide attention in both military and civil fields. As data fusion technology and theory have matured and been applied successfully in military and other fields, research in this area has received increasing emphasis.

With the development of artificial intelligence technology and the improvement of computer hardware performance, neural networks and fuzzy theory have been applied to data fusion, where adaptively adjusting tracking parameters effectively prevents target loss; in addition, a fusion algorithm combining spline approximation theory with neural network principles determines the weights quickly through iterative weight correction, achieving higher operating speed and computational precision. Deep learning and related technologies have since made breakthrough progress in research on multi-source data fusion and similar problems. However, most deep learning models are built only for learning at the two-dimensional spatial semantic level and are poorly suited to multi-dimensional vector features. Moreover, the construction of multi-source heterogeneous data models on deep learning platforms remains unexplored.

At present, some artificial intelligence techniques are used to analyze multi-source data and have preliminarily achieved tasks such as intelligent identification of key elements and extraction of position information, but the reliability of information mining is low and execution efficiency struggles to meet requirements. In particular, comprehensive interpretation of the data remains at the target fusion level, the conversion from data to information is inefficient, and machine decision-making in place of humans is far from realized. Multi-source heterogeneous data fusion still faces the problems of multi-factor identification, data fusion, and intelligent comprehensive decision-making.

Processing and analyzing massive multi-source heterogeneous data with deep learning involves data in many forms, such as images, pictures, videos, and texts, serving different application purposes: for example, extracting ground-object information from remote sensing images, obtaining target position information from message information, and extracting facts such as entities, relations, and events of specified types from natural-language text. Each data form and application purpose requires a large amount of sample data, so a shortage of sample resources is inevitable, and producing training samples for each task is time-consuming and labor-intensive.

[ summary of the invention ]

Aspects of the present application provide a multi-source data fusion method, system, device, and storage medium.

One aspect of the application provides a multi-source data fusion method, which comprises: collecting image data sets of the same scene or the same target from multiple information sources, preprocessing the image data sets of the multiple information sources, and extracting image spectral features;

respectively inputting the image spectral feature extraction results of the image data sets of the multiple information sources into corresponding pre-trained neural network models to obtain corresponding classification results;

and fusing the corresponding classification results to obtain a final classification recognition result.

In the above aspect and any possible implementation, an implementation is further provided in which the multiple information sources include at least two of optical remote sensing, SAR remote sensing, and infrared remote sensing sources.

In the above aspect and any possible implementation, an implementation is further provided in which the preprocessing consists of image registration and normalization.

In the above aspect and any possible implementation, an implementation is further provided in which each information source corresponds to one pre-trained neural network model, and the pre-trained neural network model is a deep convolutional neural network model.

In the above aspect and any possible implementation, an implementation is further provided in which the weight of each data source is determined according to its classification accuracy, and weighted fusion is performed on the corresponding classification results.

In the above aspect and any possible implementation, an implementation is further provided in which the corresponding pre-trained neural network models are trained as follows: collecting image data sets of the same scene or the same target from multiple information sources, and manually annotating the image data set of each information source; preprocessing the image data sets of the multiple information sources and extracting image spectral features, and taking the feature extraction results and the corresponding manual labels as training data sets; and training a preset neural network model on each training data set to obtain the corresponding trained neural network model.

In the above aspect and any possible implementation, an implementation is further provided in which the method further comprises: obtaining the objective functions of the preset neural network models corresponding to the multiple information sources by an eigenfunction-based multi-task learning method.

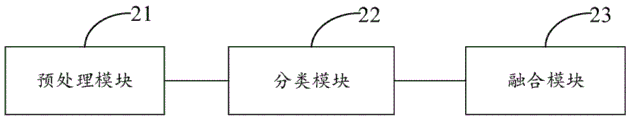

In a second aspect of the present disclosure, a multi-source data fusion system is provided, comprising: a preprocessing module, configured to acquire image data sets of the same scene or the same target from multiple information sources, and to preprocess the image data sets of the multiple information sources and extract their image spectral features; a classification module, configured to input the image spectral feature extraction results of the image data sets of the multiple information sources into the corresponding pre-trained neural network models to obtain the corresponding classification results; and a fusion module, configured to fuse the corresponding classification results into the final classification and recognition result.

In a third aspect of the disclosure, an electronic device is provided. The electronic device includes: a memory having a computer program stored thereon and a processor implementing the method as described above when executing the program.

In a fourth aspect of the present disclosure, a computer-readable storage medium is provided, having stored thereon a computer program which, when executed by a processor, implements the method according to the first aspect of the present disclosure.

It should be understood that the content described in this summary is not intended to identify key or essential features of the embodiments of the present disclosure, nor to limit the scope of the present disclosure. Other features of the present disclosure will become apparent from the following description.

[ description of the drawings ]

FIG. 1 shows a flow diagram of a multi-source data fusion method according to an embodiment of the present disclosure;

FIG. 2 illustrates a block diagram of a multi-source data fusion system according to an embodiment of the present disclosure;

FIG. 3 illustrates a block diagram of an exemplary electronic device capable of implementing embodiments of the present disclosure.

[ detailed description of the embodiments ]

In order to make the objects, technical solutions and advantages of the embodiments of the present application clearer, the technical solutions in the embodiments of the present application will be clearly and completely described below with reference to the drawings in the embodiments of the present application, and it is obvious that the described embodiments are some embodiments of the present application, but not all embodiments. All other embodiments, which can be derived by a person skilled in the art from the embodiments given herein without making any creative effort, shall fall within the protection scope of the present application.

Fig. 1 is a flowchart of an embodiment of the multi-source data fusion method according to the present invention. As shown in Fig. 1, the method includes the following steps:

Step S11: collect image data sets of the same scene or the same target from multiple information sources, and preprocess the image data sets of the multiple information sources and extract their image spectral features.

Step S12: input the image spectral feature extraction results of the image data sets of the multiple information sources into the corresponding pre-trained neural network models to obtain the corresponding classification results.

Step S13: fuse the corresponding classification results to obtain the final classification and recognition result.

In one preferred implementation of step S11,

Preferably, the multiple information sources include optical remote sensing, SAR remote sensing, infrared remote sensing, and the like.

The images of each information source are preprocessed and their spectral features extracted using a preset preprocessing and image spectral feature extraction model, which yields the spectral feature vector of the pixel at each position coordinate (i, j) in each source's image.

In some embodiments, the preprocessing includes image registration and normalization. Image registration is the process of overlaying and combining two or more images acquired at different times, from different viewing angles, or by different devices, that is, geometrically aligning two or more images. Registration accuracy directly affects the quality of the fusion result. The multi-source images may be registered pairwise, or multiple images may be registered simultaneously. Preferably, a Student's t mixture model (SMM) is used to model the points in the joint gray-level scatter plot of the multiple multi-source images, the Expectation Maximization (EM) algorithm is used to solve for the model parameters iteratively, and the geometric transformation parameters are then solved to register the multiple multi-source images jointly. This avoids the selection dependence and internal inconsistency of traditional pairwise registration algorithms, and registering multiple images simultaneously improves efficiency and reduces time cost.

In some embodiments, image spectral features are extracted from the preprocessed image of each source: taking the coordinate (i, j) of each pixel in the image to be fused as the center, all pixels in the surrounding $(2w+1) \times (2w+1)$ region are arranged into a vector, which serves as the spectral feature vector of the pixel at (i, j). Here w is the size of the feature window and can be chosen empirically according to the application; w is preferably 5 to 9, and most preferably 5.
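The window-based feature extraction can be sketched with NumPy as follows. This is a minimal illustration, not the applicants' implementation: the function name `spectral_feature_vectors` is hypothetical, multi-band images are assumed to be stored as `(H, W, B)` arrays, and border pixels are assumed to be handled by reflect padding, since the text does not say how image borders are treated.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def spectral_feature_vectors(image: np.ndarray, w: int = 5) -> np.ndarray:
    """Hypothetical helper: one (2w+1) x (2w+1) window vector per pixel.

    image: registered, normalized image of shape (H, W) or (H, W, B).
    Returns an array of shape (H, W, B * (2w+1)**2), where entry [i, j]
    is the spectral feature vector x_ij of the pixel at (i, j).
    """
    if image.ndim == 2:  # single-band image: add a band axis
        image = image[:, :, np.newaxis]
    height, width, bands = image.shape
    size = 2 * w + 1
    # Reflect-pad so border pixels also get a full window (assumption).
    padded = np.pad(image, ((w, w), (w, w), (0, 0)), mode="reflect")
    # Shape (height, width, bands, size, size): the window centred on each pixel.
    windows = sliding_window_view(padded, (size, size), axis=(0, 1))
    # Flatten every window (all bands, all positions) into one vector.
    return windows.reshape(height, width, bands * size * size)
```

With the most preferred w = 5, each pixel of a single-band image yields an 11 × 11 = 121-element vector; the centre element of each vector is the pixel itself.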

In one preferred implementation of step S12,

The spectral feature vectors extracted from the preprocessed image of each information source are input into the corresponding pre-trained neural network model to obtain the corresponding classification results.

In some embodiments, the pre-trained neural network model is a deep convolutional neural network model.

The deep convolutional neural network model includes an input layer, convolutional layers, pooling layers, fully connected layers, a softmax layer, and an output layer; preferably, it comprises 1 input layer, 5 convolutional layers, 3 pooling layers, 2 fully connected layers, 1 softmax layer, and 1 output layer. The specific structure is input layer -> convolutional layer -> pooling layer -> convolutional layer -> pooling layer -> fully connected layer -> softmax layer -> output layer.

In one preferred implementation of step S13,

The fusion is decision-level fusion: the single-source remote sensing images are classified separately, and the classification results are then combined according to a fusion rule to obtain the final classification result.

In some embodiments, based on the classification result of each data source's pre-trained neural network model, the membership degree of each pixel in each data source to each class is computed, and the membership degrees of the current pixel for the same class are then fused across all data sources. The weight of each data source is determined according to its classification accuracy; for example, the classification accuracy can reach 60% for SAR remote sensing and 80% for infrared remote sensing. Finally, the fused membership degrees of the current pixel for all classes are compared, and the pixel is assigned to the class with the largest fused membership degree (the maximum membership principle).
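The weighted decision-level fusion can be sketched as below. This assumes, which the text does not state, that each source's memberships are stored as an `(H, W, K)` array (for example the softmax output of its model) and that the weights are the classification accuracies normalized to sum to 1; `fuse_memberships` is a hypothetical name.

```python
import numpy as np


def fuse_memberships(memberships, accuracies):
    """Hypothetical helper for weighted decision-level fusion.

    memberships: list with one (H, W, K) array per information source,
        giving each pixel's membership degree in each of the K classes.
    accuracies: classification accuracy of each source; normalized to
        weights summing to 1 (an assumed weighting rule).
    Returns (labels, fused): labels is (H, W) with 0-based class indices.
    """
    acc = np.asarray(accuracies, dtype=float)
    weights = acc / acc.sum()
    stacked = np.stack(memberships, axis=0)  # (S, H, W, K)
    # fused[h, w, k] = sum over sources s of weights[s] * stacked[s, h, w, k]
    fused = np.tensordot(weights, stacked, axes=1)  # (H, W, K)
    labels = fused.argmax(axis=-1)  # maximum membership principle
    return labels, fused
```

Using the accuracies quoted above, a pixel with SAR memberships (0.7, 0.3) and infrared memberships (0.2, 0.8) gets weights 0.6/1.4 and 0.8/1.4, so its fused membership in the second class is (0.6 × 0.3 + 0.8 × 0.8) / 1.4 ≈ 0.586 and it is assigned to that class.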

The corresponding pre-trained neural network models are obtained by training as follows:

Step 21: collect image data sets of the same scene or the same target from multiple information sources, and manually annotate the image data set of each information source.

Step 22: preprocess the image data sets of the multiple information sources and extract image spectral features, and take the feature extraction results and the corresponding manual labels as training data sets.

Preferably, the preprocessing and image spectral feature extraction model is applied to the image data set of each information source, yielding an optical remote sensing image training data set, a SAR remote sensing image training data set, an infrared remote sensing image training data set, and so on.

Preferably, a training data set $\{(x_{ij}, y_{ij})\}$ is obtained, where $x_{ij}$ is the spectral feature vector of the pixel at position coordinate $(i, j)$ in the image, $y_{ij} \in \{1, 2, \ldots, K\}$ is the class of that pixel, and $K$ is a constant giving the total number of pixel classes.

Step 23: train the preset neural network model on each training data set to obtain the corresponding trained neural network model.

Preferably, the preset neural network model is a deep convolutional neural network model, and the trained neural network models obtained through the above steps correspond one-to-one to the information sources, namely: a model for optical remote sensing, a model for SAR remote sensing, and a model for infrared remote sensing.

In some embodiments, the preset neural network model is trained with the training data sets obtained in step 22 by stochastic gradient descent, that is, by optimizing the objective function; training is complete when the loss function of the whole neural network model approaches the local optimal solution, which is set manually in advance. Preferably, a deep convolutional neural network is trained on each of the optical remote sensing image training data set, the SAR remote sensing image training data set, and the infrared remote sensing image training data set obtained in step 22.
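The training loop can be illustrated with a small stand-in. To keep it short, this sketch swaps the deep convolutional network for a single-layer softmax classifier trained by mini-batch stochastic gradient descent on the cross-entropy loss, and it interprets the manually preset "local optimal solution" as a loss threshold `target_loss` at which training stops. The names `train_sgd` and `softmax` and all default hyperparameters are hypothetical; labels are assumed to be shifted from 1..K to 0..K-1.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_sgd(X, y, num_classes, target_loss=0.05, lr=0.5,
              batch_size=32, max_epochs=500, seed=0):
    """Hypothetical stand-in for step 23: mini-batch SGD on a softmax classifier.

    X: (n, d) spectral feature vectors; y: (n,) labels in 0..K-1.
    Stops once the mean training cross-entropy reaches target_loss
    (the manually preset stopping value) or after max_epochs.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[y]
    for epoch in range(1, max_epochs + 1):
        order = rng.permutation(n)  # reshuffle samples every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            probs = softmax(X[idx] @ W + b)
            grad = (probs - onehot[idx]) / len(idx)  # d(loss)/d(logits)
            W -= lr * X[idx].T @ grad
            b -= lr * grad.sum(axis=0)
        probs = softmax(X @ W + b)
        loss = -np.mean(np.log(probs[np.arange(n), y] + 1e-12))
        if loss <= target_loss:  # preset stopping criterion reached
            break
    return W, b, loss, epoch
```

In practice one such model would be trained per information source, each on its own training data set.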

In some embodiments, an eigenfunction-based multi-task learning method is used to obtain the objective functions of the preset neural network models corresponding to the various information sources. Preferably, soft parameter sharing is used: each task has its own model and its own parameters.
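Soft parameter sharing can be illustrated with a common generic formulation, which is an assumption here since the text does not give the coupling term: each task t keeps its own weights w_t, and a penalty mu * sum_t ||w_t - mean(w)||^2 pulls the tasks toward each other. The sketch uses linear regression tasks and plain gradient descent; `soft_shared_regression`, `mu`, `lr`, and `steps` are hypothetical.

```python
import numpy as np


def soft_shared_regression(tasks, mu=1.0, lr=0.05, steps=2000):
    """Hypothetical sketch of soft parameter sharing across T regression tasks.

    tasks: list of (X_t, y_t) pairs, one per task (information source).
    Minimizes sum_t ||X_t w_t - y_t||^2 / n_t + mu * sum_t ||w_t - w_mean||^2
    by gradient descent. Returns a (T, d) array with one weight vector per task.
    """
    d = tasks[0][0].shape[1]
    W = np.zeros((len(tasks), d))  # separate parameters for every task
    for _ in range(steps):
        mean_w = W.mean(axis=0)
        grads = np.empty_like(W)
        for t, (X, y) in enumerate(tasks):
            residual = X @ W[t] - y
            # Data term gradient plus the coupling term 2*mu*(w_t - w_mean);
            # the w_mean dependence cancels because sum_s (w_s - w_mean) = 0.
            grads[t] = 2 * X.T @ residual / len(y) + 2 * mu * (W[t] - mean_w)
        W -= lr * grads
    return W
```

With `mu=0` the tasks are learned independently; increasing `mu` lets related tasks share information while each still keeps its own parameters.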

In some embodiments, the objective function of the preset neural network model is obtained as follows:

Assume that there are $T$ different learning tasks ($T$ information sources, each corresponding to one learning task, i.e. one preset neural network model), and that each learning task $t$ has its own associated data set of $n$ samples $x_{ti}$.

First, compute the eigenvalue and eigenfunction pairs $\{\lambda_{ti}, \phi_{ti}\}$ of the $t$-th learning task, where $\lambda_{ti}$ is an eigenvalue and $\phi_{ti}$ is the corresponding eigenfunction. In addition, a Gram matrix is formed from the task's data set and the kernel function (activation function) $K$. The kernel function is preferably a sigmoid function or a hyperbolic tangent function, most preferably a sigmoid function. The sigmoid function compresses its output into the range (0, 1), whereas the hyperbolic tangent produces zero-centered output in the range (-1, 1).
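As an illustration only, one standard way to turn a Gram matrix into eigenvalue and eigenfunction pairs is the Nyström extension: eigendecompose the Gram matrix $G = V \Lambda V^\top$ and define $\phi_k(z) = \frac{\sqrt{n}}{\lambda_k} \sum_j V_{jk} K(z, x_j)$. The sketch below assumes a logistic-sigmoid kernel of scaled inner products with hypothetical parameters `a` and `c`; since such a kernel is not guaranteed to be positive semidefinite, it keeps the largest eigenvalues. The function names are hypothetical.

```python
import numpy as np


def sigmoid_kernel(A, B, a=1.0, c=0.0):
    """Hypothetical kernel: logistic sigmoid of scaled inner products."""
    return 1.0 / (1.0 + np.exp(-(a * (A @ B.T) + c)))


def kernel_eigenpairs(X, num_pairs, a=1.0, c=0.0):
    """Top eigenpairs of the Gram matrix, extended to eigenfunctions (Nystrom).

    X: (n, d) samples of one learning task.
    Returns (eigenvalues, eigenfunctions): eigenvalues are the Gram
    eigenvalues divided by n (largest first), and eigenfunctions(Z)
    evaluates the num_pairs eigenfunctions at the rows of Z.
    """
    n = X.shape[0]
    gram = sigmoid_kernel(X, X, a, c)            # (n, n) Gram matrix
    eigvals, eigvecs = np.linalg.eigh(gram)      # ascending order
    top = np.argsort(eigvals)[::-1][:num_pairs]  # keep the largest eigenvalues
    lam, V = eigvals[top], eigvecs[:, top]

    def eigenfunctions(Z):
        # phi_k(z) = sqrt(n) / lam_k * sum_j V[j, k] * K(z, x_j)
        return np.sqrt(n) * (sigmoid_kernel(Z, X, a, c) @ V) / lam

    return lam / n, eigenfunctions
```

On the training samples themselves the extension reproduces $\sqrt{n}\,V$, so the eigenfunctions are orthonormal with respect to the empirical distribution of the task's data.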

The eigenvalues and eigenfunctions are as follows:

Unlike single-task learning in the prior art, the multi-task learning in this embodiment learns multiple tasks simultaneously by exploiting the relationships between them. If some eigenfunctions are shared by multiple tasks, the eigenfunctions of different tasks can influence one another, and the shared eigenfunctions provide more information about the data distribution.

To prevent all tasks from learning the same result because the shared features carry too much weight, this embodiment establishes a unique set of eigenfunctions for each task, as follows:

where $C = [C_1, C_2, \ldots, C_T]$, and $d = d_1 + d_2 + \cdots + d_T$ is the total number of eigenfunctions over all tasks. Given the solution of the above objective function, including $C_0$, the $t$-th regression objective function can be written as follows:

where $x_{ti}$ is the $i$-th input of the $t$-th task and $f_t(x_{ti})$ is the prediction for input $x_{ti}$.

The learning method of the t-th objective function is as follows:

For the $n$ samples of the $t$-th task, initialize the parameter $C_t$ and set a convergence value $C_0$; iterate Formula 2 until $C_t < C_0$, which yields the $n$ eigenfunctions corresponding to the $n$ samples and hence the $t$-th objective function.

By applying the scheme of the invention, deep learning is used to select the features of multi-source remote sensing images automatically, so manual feature selection is not needed; this facilitates the engineering application of multi-source remote sensing image fusion and achieves excellent results in that field.

It should be noted that, for simplicity of description, the above-mentioned method embodiments are described as a series of acts or combination of acts, but those skilled in the art will recognize that the present application is not limited by the order of acts described, as some steps may occur in other orders or concurrently depending on the application. Further, those skilled in the art should also appreciate that the embodiments described in the specification are preferred embodiments and that the acts and modules referred to are not necessarily required in this application.

The above is a description of method embodiments, and the embodiments of the present invention are further described below by way of apparatus embodiments.

Fig. 2 is a block diagram of an embodiment of the multi-source data fusion system according to the present invention. As shown in Fig. 2, the system includes:

a preprocessing module 21, configured to collect image data sets of the same scene or the same target from multiple information sources, and to preprocess the image data sets of the multiple information sources and extract their image spectral features;

a classification module 22, configured to input the image spectral feature extraction results of the image data sets of the multiple information sources into the corresponding pre-trained neural network models to obtain the corresponding classification results; and

a fusion module 23, configured to fuse the corresponding classification results to obtain the final classification and recognition result.
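The three-module structure can be sketched as a small composition of pluggable callables. This is a hypothetical skeleton: the class name `MultiSourceFusionSystem`, its field names, and the convention that each classifier returns per-pixel memberships of shape `(H, W, K)` are assumptions for illustration, and the actual registration, feature extraction, CNN, and fusion rule would be supplied as the callables.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

import numpy as np

Array = np.ndarray


@dataclass
class MultiSourceFusionSystem:
    """Hypothetical skeleton of modules 21-23."""

    # Preprocessing module 21: registration, normalization, feature extraction.
    preprocess: Callable[[Array], Array]
    # Classification module 22: one pre-trained model per information source,
    # each returning per-pixel class memberships of shape (H, W, K).
    classifiers: Dict[str, Callable[[Array], Array]]
    # Fusion module 23: combines the per-source memberships into final labels.
    fuse: Callable[[List[Array]], Array]

    def run(self, images: Dict[str, Array]) -> Array:
        memberships = [
            self.classifiers[source](self.preprocess(image))
            for source, image in images.items()
        ]
        return self.fuse(memberships)
```

Keeping each module behind a plain callable mirrors the point made below that the division into units is only a logical one: the modules can be combined, split, or replaced without changing the pipeline.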

Those skilled in the art will clearly understand that, for convenience and brevity of description, the specific working process of the system described above may refer to the corresponding process in the foregoing method embodiments, and is not described here again.

In the several embodiments provided in the present application, it should be understood that the disclosed method and apparatus may be implemented in other ways. For example, the above-described apparatus embodiments are merely illustrative, and for example, the division of the units is only one logical division, and other divisions may be realized in practice, for example, a plurality of units or components may be combined or integrated into another system, or some features may be omitted, or not executed. In addition, the shown or discussed mutual coupling or direct coupling or communication connection may be an indirect coupling or communication connection through some interfaces, devices or units, and may be in an electrical, mechanical or other form.

The units described as separate parts may or may not be physically separate, and parts displayed as units may or may not be physical units, may be located in one place, or may be distributed on a plurality of network units. Some or all of the units can be selected according to actual needs to achieve the purpose of the solution of the embodiment.

In addition, functional units in the embodiments of the present application may be integrated into one processor, or each unit may exist alone physically, or two or more units are integrated into one unit. The integrated unit can be realized in a form of hardware, or in a form of hardware plus a software functional unit.

FIG. 3 shows a schematic block diagram of an electronic device 300 that may be used to implement embodiments of the present disclosure. The device 300 may be used to implement the multi-source data fusion system of FIG. 2. As shown, the device 300 includes a central processing unit (CPU) 301 that can perform various appropriate actions and processes according to computer program instructions stored in a read-only memory (ROM) 302 or loaded from a storage unit 308 into a random access memory (RAM) 303. The RAM 303 can also store various programs and data required for the operation of the device 300. The CPU 301, the ROM 302, and the RAM 303 are connected to one another via a bus 304. An input/output (I/O) interface 305 is also connected to the bus 304.

Various components in device 300 are connected to I/O interface 305, including: an input unit 306 such as a keyboard, a mouse, or the like; an output unit 307 such as various types of displays, speakers, and the like; a storage unit 308 such as a magnetic disk, optical disk, or the like; and a communication unit 309 such as a network card, modem, wireless communication transceiver, etc. The communication unit 309 allows the device 300 to exchange information/data with other devices via a computer network such as the internet and/or various telecommunication networks.

The CPU 301 performs the various methods and processes described above. For example, in some embodiments, the methods may be implemented as a computer software program tangibly embodied in a machine-readable medium, such as the storage unit 308. In some embodiments, part or all of the computer program may be loaded and/or installed onto the device 300 via the ROM 302 and/or the communication unit 309. When the computer program is loaded into the RAM 303 and executed by the CPU 301, one or more steps of the method described above may be performed. Alternatively, in other embodiments, the CPU 301 may be configured to perform the method in any other suitable manner (e.g., by means of firmware).

The functions described herein above may be performed, at least in part, by one or more hardware logic components. For example, without limitation, exemplary types of hardware logic components that may be used include: a Field Programmable Gate Array (FPGA), an Application Specific Integrated Circuit (ASIC), an Application Specific Standard Product (ASSP), a system on a chip (SOC), a complex programmable logic device (CPLD), and the like.

Program code for implementing the methods of the present disclosure may be written in any combination of one or more programming languages. The program code may be provided to a processor or controller of a general-purpose computer, special-purpose computer, or other programmable data processing apparatus, such that, when executed by the processor or controller, it causes the functions/operations specified in the flowcharts and/or block diagrams to be performed. The program code may execute entirely on the machine, partly on the machine, as a stand-alone software package partly on the machine and partly on a remote machine, or entirely on the remote machine or server.

In the context of this disclosure, a machine-readable medium may be a tangible medium that can contain or store a program for use by or in connection with an instruction execution system, apparatus, or device. The machine-readable medium may be a machine-readable signal medium or a machine-readable storage medium. A machine-readable medium may include, but is not limited to, an electronic, magnetic, optical, electromagnetic, infrared, or semiconductor system, apparatus, or device, or any suitable combination of the foregoing. More specific examples of a machine-readable storage medium would include an electrical connection based on one or more wires, a portable computer diskette, a hard disk, a Random Access Memory (RAM), a Read-Only Memory (ROM), an Erasable Programmable Read-Only Memory (EPROM or flash memory), an optical fiber, a portable Compact Disc Read-Only Memory (CD-ROM), an optical storage device, a magnetic storage device, or any suitable combination of the foregoing.

Further, while operations are depicted in a particular order, this should not be understood as requiring that such operations be performed in the particular order shown or in sequential order, or that all illustrated operations be performed, to achieve desirable results. Under certain circumstances, multitasking and parallel processing may be advantageous. Likewise, while several specific implementation details are included in the above discussion, these should not be construed as limitations on the scope of the disclosure. Certain features that are described in the context of separate embodiments can also be implemented in combination in a single implementation. Conversely, various features that are described in the context of a single implementation can also be implemented in multiple implementations separately or in any suitable subcombination.

Although the subject matter has been described in language specific to structural features and/or methodological acts, it is to be understood that the subject matter defined in the appended claims is not necessarily limited to the specific features or acts described above. Rather, the specific features and acts described above are disclosed as example forms of implementing the claims.

Claims (10)

1. A multi-source data fusion method, characterized by comprising the following steps:

collecting image data sets of multiple information sources for the same scene or the same target, preprocessing the image data sets of the multiple information sources, and extracting image spectral features from them;

inputting the image spectral feature extraction result of each information source's image data set into the corresponding pre-trained neural network model to obtain the corresponding classification results;

and fusing the corresponding classification results to obtain a final classification recognition result.
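The three steps of claim 1 can be illustrated with a minimal Python sketch. It assumes each image has already been preprocessed and is stored as nested lists indexed `[band][row][col]`, uses the per-band mean and standard deviation as hypothetical "spectral features", treats each pre-trained model as any callable that returns class probabilities, and uses an unweighted average as a placeholder fusion rule. None of these names (`spectral_features`, `classify_per_source`, `fuse_average`, `fuse_and_decide`) or choices come from the patent.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical types: an image is image[band][row][col]; a "model" maps a
# feature vector to a list of class probabilities.
Image = List[List[List[float]]]
Model = Callable[[Sequence[float]], List[float]]


def spectral_features(image: Image) -> List[float]:
    """Hypothetical spectral features: mean and standard deviation of each band."""
    feats: List[float] = []
    for band in image:
        values = [v for row in band for v in row]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        feats.extend([mean, var ** 0.5])
    return feats


def classify_per_source(images: Dict[str, Image],
                        models: Dict[str, Model]) -> Dict[str, List[float]]:
    """Send each source's features to the model trained for that source."""
    return {src: models[src](spectral_features(img)) for src, img in images.items()}


def fuse_average(probs: Dict[str, List[float]]) -> List[float]:
    """Placeholder fusion rule: unweighted mean of the class probabilities."""
    n = len(probs)
    return [sum(col) / n for col in zip(*probs.values())]


def fuse_and_decide(images: Dict[str, Image], models: Dict[str, Model]) -> int:
    """Return the index of the class with the highest fused probability."""
    fused = fuse_average(classify_per_source(images, models))
    return max(range(len(fused)), key=fused.__getitem__)


if __name__ == "__main__":
    band = [[1.0, 3.0], [1.0, 3.0]]
    images = {"optical": [band], "sar": [band]}
    models = {"optical": lambda f: [0.7, 0.3], "sar": lambda f: [0.4, 0.6]}
    print(fuse_and_decide(images, models))  # 0
```

With the toy models in the example, the averaged probabilities are about `[0.55, 0.45]`, so class 0 is selected. Keeping one model per source in a dictionary mirrors the one-model-per-information-source structure of the claim.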

2. The method of claim 1, wherein

the multiple information sources comprise at least two of optical remote sensing, SAR remote sensing and infrared remote sensing information sources.

3. The method of claim 1, wherein

the preprocessing comprises image registration processing and normalization processing.
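Claim 3 names registration and normalization without specifying either. The sketch below assumes translation-only registration, found by an exhaustive integer-shift search that minimizes the mean squared difference over the overlapping pixels, and per-band min-max scaling to [0, 1], applied before registration so that bands from different sensors share a common scale. Both are illustrative choices rather than the patent's method; registering images from different sensors (e.g., SAR against optical) usually calls for more robust similarity measures such as mutual information, which this sketch does not implement. All function names are hypothetical.

```python
from typing import List, Tuple

Band = List[List[float]]  # one image band, indexed band[row][col]


def min_max_normalize(band: Band) -> Band:
    """Linearly rescale a band's pixel values into [0, 1]."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant band: nothing to scale, avoid division by zero
        return [[0.0 for _ in row] for row in band]
    return [[(v - lo) / (hi - lo) for v in row] for row in band]


def best_shift(ref: Band, mov: Band, max_shift: int = 2) -> Tuple[int, int]:
    """Integer (dy, dx) minimizing the mean squared difference between
    ref[y][x] and mov[y + dy][x + dx] over the overlapping pixels."""
    h, w = len(ref), len(ref[0])
    best, best_err = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err, n = 0.0, 0
            for y in range(h):
                for x in range(w):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < len(mov) and 0 <= xx < len(mov[0]):
                        err += (ref[y][x] - mov[yy][xx]) ** 2
                        n += 1
            if n and err / n < best_err:
                best, best_err = (dy, dx), err / n
    return best


def apply_shift(mov: Band, shift: Tuple[int, int], fill: float = 0.0) -> Band:
    """Resample mov onto the reference grid: out[y][x] = mov[y + dy][x + dx]."""
    dy, dx = shift
    h, w = len(mov), len(mov[0])
    return [[mov[y + dy][x + dx] if 0 <= y + dy < h and 0 <= x + dx < w else fill
             for x in range(w)] for y in range(h)]


def register_and_normalize(ref: Band, mov: Band, max_shift: int = 2) -> Tuple[Band, Band]:
    """Normalize both bands, then align mov to ref by the best integer shift."""
    ref_n, mov_n = min_max_normalize(ref), min_max_normalize(mov)
    return ref_n, apply_shift(mov_n, best_shift(ref_n, mov_n, max_shift))
```

For instance, a 5x5 reference band with a single bright pixel at (2, 2) and a moving band with that pixel at (3, 1) yield the shift (1, -1), and applying that shift reproduces the reference grid even if the moving band was recorded on a different intensity scale.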

4. The method of claim 1, wherein

each information source corresponds to one pre-trained neural network model, and the pre-trained neural network model is a deep convolutional neural network model.
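Claim 4 specifies a deep convolutional neural network per information source but gives no architecture. As a rough illustration of the building blocks only, the following pure-Python forward pass chains a valid 2-D convolution, ReLU, global average pooling, a dense layer, and softmax for a single-band input. It is a shallow toy with hand-set weights, not the patent's model; a practical implementation would use a deep-learning framework and stack many such layers. All names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def conv2d_valid(x: Matrix, k: Matrix) -> Matrix:
    """2-D cross-correlation without padding (the 'convolution' used in CNNs)."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]


def relu(m: Matrix) -> Matrix:
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in m]


def global_avg_pool(m: Matrix) -> float:
    """Average of all activations in one feature map."""
    flat = [v for row in m for v in row]
    return sum(flat) / len(flat)


def softmax(z: List[float]) -> List[float]:
    top = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - top) for v in z]
    s = sum(e)
    return [v / s for v in e]


def tiny_cnn_forward(x: Matrix, kernels: List[Matrix],
                     dense: List[List[float]], bias: List[float]) -> List[float]:
    """conv -> ReLU -> global average pool (one feature per kernel) -> dense -> softmax."""
    feats = [global_avg_pool(relu(conv2d_valid(x, k))) for k in kernels]
    logits = [sum(w * f for w, f in zip(row, feats)) + b for row, b in zip(dense, bias)]
    return softmax(logits)
```

In a deep network the pooled features would come from the last of many convolution layers; the per-source model in claim 4 would be one such network trained on that source's data.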

5. The method of claim 1, wherein

a weight of each information source is determined according to the classification accuracy of that information source, and the corresponding classification results are fused by weighting.
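Claim 5 states only that each source's weight is determined from its classification accuracy. One simple rule consistent with that wording, assumed here rather than taken from the patent, is to normalize the accuracies into weights, w_k = a_k / (a_1 + ... + a_K), and fuse the class probabilities as P(c) = w_1 p_1(c) + ... + w_K p_K(c). The accuracies would typically come from a held-out validation set; the function names are hypothetical.

```python
from typing import Dict, List


def accuracy_weights(acc: Dict[str, float]) -> Dict[str, float]:
    """Assumed rule: weight proportional to each source's validation accuracy."""
    total = sum(acc.values())
    if total <= 0:
        raise ValueError("accuracies must sum to a positive value")
    return {src: a / total for src, a in acc.items()}


def weighted_fusion(probs: Dict[str, List[float]], acc: Dict[str, float]) -> List[float]:
    """Fused class probabilities P(c) = sum_k w_k * p_k(c)."""
    w = accuracy_weights(acc)
    n_classes = len(next(iter(probs.values())))
    return [sum(w[s] * probs[s][c] for s in probs) for c in range(n_classes)]


def decide(fused: List[float]) -> int:
    """Index of the class with the largest fused probability."""
    return max(range(len(fused)), key=fused.__getitem__)
```

With validation accuracies 0.9, 0.6 and 0.5 for optical, SAR and infrared sources, the weights are 0.45, 0.30 and 0.25. If the sources output [0.2, 0.8], [0.9, 0.1] and [0.6, 0.4], the fused result is about [0.51, 0.49], so class 0 wins even though the most accurate single source preferred class 1.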

6. The method of claim 1, wherein the corresponding pre-trained neural network model is trained by:

respectively collecting image data sets of multiple information sources for the same scene or the same target, and manually annotating the image data set of each information source;

preprocessing the image data sets of the multiple information sources and extracting image spectral features from them, and taking the feature extraction results and the corresponding manual labels as training data sets;

and training a preset neural network model for each information source with the corresponding training data set to obtain the corresponding trained neural network model.
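The training procedure of claim 6 fits one model per information source on that source's extracted features and manual labels. The sketch below substitutes a linear softmax classifier (a single-layer neural network) trained by batch gradient descent on the mean cross-entropy for the deep convolutional network of claim 4, purely to keep the example short and dependency-free. The data layout `{source: (features, labels, n_classes)}`, the default learning rate and epoch count, and all function names are assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Params = Tuple[List[List[float]], List[float]]  # (weight matrix W, bias vector b)


def softmax(z: List[float]) -> List[float]:
    top = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - top) for v in z]
    s = sum(e)
    return [v / s for v in e]


def logits(params: Params, x: Sequence[float]) -> List[float]:
    W, b = params
    return [sum(wi * xi for wi, xi in zip(W[c], x)) + b[c] for c in range(len(W))]


def train_softmax(X: List[List[float]], y: List[int], n_classes: int,
                  lr: float = 0.25, epochs: int = 500) -> Params:
    """Batch gradient descent on the mean cross-entropy of a linear softmax model."""
    d, n = len(X[0]), len(X)
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        gW = [[0.0] * d for _ in range(n_classes)]
        gb = [0.0] * n_classes
        for x, label in zip(X, y):
            p = softmax(logits((W, b), x))
            for c in range(n_classes):
                g = p[c] - (1.0 if c == label else 0.0)  # dLoss/dlogit_c
                gb[c] += g / n
                for j in range(d):
                    gW[c][j] += g * x[j] / n
        for c in range(n_classes):
            b[c] -= lr * gb[c]
            for j in range(d):
                W[c][j] -= lr * gW[c][j]
    return W, b


def predict(params: Params, x: Sequence[float]) -> int:
    s = logits(params, x)
    return max(range(len(s)), key=s.__getitem__)


def train_per_source(datasets: Dict[str, Tuple[List[List[float]], List[int], int]]
                     ) -> Dict[str, Params]:
    """One independently trained model per information source."""
    return {src: train_softmax(X, y, k) for src, (X, y, k) in datasets.items()}
```

Because each source's model is trained only on its own data, a poorly performing source does not degrade the others; its lower accuracy can instead be reflected later through the fusion weights.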

7. The method of claim 6, further comprising:

obtaining the objective functions of the preset neural network models corresponding to the respective information sources by a multi-task learning method based on a characteristic function.
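Claim 7 does not define the characteristic-function-based multi-task objective, so the following is a swapped-in, commonly used multi-task formulation rather than the patent's: a weighted sum of per-source losses plus a coupling term that pulls each source's parameters toward their mean, J = sum_k alpha_k * L_k + lambda * sum_k ||theta_k - theta_bar||^2. It assumes all per-source models share one architecture, so their parameters can be flattened into vectors of equal length; the function names are hypothetical.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean negative log-likelihood of the true labels for one source (task)."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def multitask_objective(task_losses: Sequence[float],
                        task_params: Sequence[Sequence[float]],
                        task_weights: Sequence[float],
                        lam: float) -> float:
    """J = sum_k alpha_k * L_k + lam * sum_k ||theta_k - theta_bar||^2.

    task_params[k] is the flattened parameter vector of source k's model;
    all vectors are assumed to have the same length (shared architecture).
    """
    K, dim = len(task_params), len(task_params[0])
    theta_bar = [sum(p[j] for p in task_params) / K for j in range(dim)]
    coupling = sum((p[j] - theta_bar[j]) ** 2 for p in task_params for j in range(dim))
    data_term = sum(a * l for a, l in zip(task_weights, task_losses))
    return data_term + lam * coupling
```

Setting `lam = 0` reduces the objective to independent per-source training; larger values force the per-source models to share more structure.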

8. A multi-source data fusion system, comprising:

the preprocessing module is used for acquiring image data sets of multiple information sources for the same scene or the same target, preprocessing the image data sets of the multiple information sources, and extracting image spectral features from them;

the classification module is used for inputting the image spectral feature extraction result of each information source's image data set into the corresponding pre-trained neural network model to obtain the corresponding classification results;

and the fusion module is used for fusing the corresponding classification results to obtain the final classification recognition result.

9. An electronic device comprising a memory and a processor, the memory having stored thereon a computer program, wherein the processor, when executing the program, implements the method of any of claims 1-7.

10. A computer-readable storage medium, on which a computer program is stored, which program, when being executed by a processor, carries out the method according to any one of claims 1 to 7.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010197890.5A (CN113496148A) | 2020-03-19 | 2020-03-19 | Multi-source data fusion method and system |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010197890.5A (CN113496148A) | 2020-03-19 | 2020-03-19 | Multi-source data fusion method and system |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN113496148A | 2021-10-12 |

Family ID: 77993607

Family Applications (1)

| Application Number | Status | Title | Priority Date | Filing Date |
|---|---|---|---|---|
| CN202010197890.5A (CN113496148A) | Pending | Multi-source data fusion method and system | 2020-03-19 | 2020-03-19 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN113496148A |


Citations (8)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN102592134A * | 2011-11-28 | 2012-07-18 | 北京航空航天大学 | Multi-stage decision fusion and classification method for hyperspectral and infrared data |
| CN103020605A * | 2012-12-28 | 2013-04-03 | 北方工业大学 | Bridge identification method based on decision-level fusion |
| CN105930772A * | 2016-04-13 | 2016-09-07 | 武汉大学 | Urban impervious surface extraction method based on fusion of SAR and optical remote sensing images |
| CN106295714A * | 2016-08-22 | 2017-01-04 | 中国科学院电子学研究所 | Multi-source remote sensing image fusion method based on deep learning |
| CN108960300A * | 2018-06-20 | 2018-12-07 | 北京工业大学 | Urban land use information analysis method based on a deep neural network |
| CN109993220A * | 2019-03-23 | 2019-07-09 | 西安电子科技大学 | Multi-source remote sensing image classification method based on a dual-path attention fusion neural network |
| CN110136154A * | 2019-05-16 | 2019-08-16 | 西安电子科技大学 | Remote sensing image semantic segmentation method based on fully convolutional network and morphological scale-space |
| CN110261329A * | 2019-04-29 | 2019-09-20 | 北京航空航天大学 | Mineral identification method based on full-spectral-coverage hyperspectral remote sensing data |



Non-Patent Citations (2)

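| Title |
|---|
| 于秀兰, 钱国蕙: "Comparison of fusion classification at different levels for TM and SAR remote sensing images" (TM和SAR遥感图像的不同层次融合分类比较), 遥感技术与应用 (Remote Sensing Technology and Application), no. 03, 30 September 1999, pp. 38-44 * |
| 贾永红, 李德仁: "Research on pixel-level fusion classification and decision-level classification fusion methods for multi-source remote sensing images" (多源遥感影像像素级融合分类与决策级分类融合法的研究), 武汉大学学报(信息科学版) (Geomatics and Information Science of Wuhan University), no. 05, 25 October 2001, pp. 430-434 * |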

Cited By (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114529489A * | 2022-03-01 | 2022-05-24 | 中国科学院深圳先进技术研究院 | Multi-source remote sensing image fusion method, device, equipment and storage medium |
| WO2023164929A1 * | 2022-03-01 | 2023-09-07 | 中国科学院深圳先进技术研究院 | Multi-source remote sensing image fusion method and apparatus, device and storage medium |
| CN115131412A * | 2022-05-13 | 2022-09-30 | 国网浙江省电力有限公司宁波供电公司 | Image processing method in multispectral image fusion process |
| CN115131412B * | 2022-05-13 | 2024-05-14 | 国网浙江省电力有限公司宁波供电公司 | Image processing method in multispectral image fusion process |
| CN117392539A * | 2023-10-13 | 2024-01-12 | 哈尔滨师范大学 | River water body identification method based on deep learning, electronic equipment and storage medium |
| CN117392539B * | 2023-10-13 | 2024-04-09 | 哈尔滨师范大学 | River water body identification method based on deep learning, electronic equipment and storage medium |


Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
|  | PB01 | Publication |  |
|  | PB01 | Publication |  |
|  | SE01 | Entry into force of request for substantive examination |  |
|  | SE01 | Entry into force of request for substantive examination |  |