CN112598600B - Image moire correction method, electronic device and medium therefor - Google Patents


- Publication number

- CN112598600B

- Authority

- CN

- China

- Prior art keywords

- pixel point

- moire

- pixel

- brightness

- detected


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active



Classifications


- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T5/00—Image enhancement or restoration

- G06T5/70—Denoising; Smoothing


- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20212—Image combination

- G06T2207/20216—Image averaging

Landscapes

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Image Processing (AREA)

Abstract

The application relates to the technical field of image processing and discloses an image moire correction method, an electronic device, and a medium therefor. The method comprises the following steps: acquiring an image to be corrected; determining, based on the luminance component values of the pixel points in the image to be corrected, whether each pixel point is located in a moire area; and, when a pixel point is located in the moire area, performing moire correction on that pixel point. The method detects the luminance change among several consecutive pixel points in a given direction in the image to be corrected to determine whether a luminance step occurs among them, and determines that the pixel point to be detected is in a moire area when the number of pixel points with a step is greater than a step threshold. This allows the electronic device to identify and correct moire in the image to be corrected more accurately.

Description

The present application claims priority to Chinese patent application No. 202011064866.0, entitled "Image moire correction method and electronic device and medium thereof", filed with the Chinese Patent Office on 30 September 2020, the entire contents of which are incorporated herein by reference.

Technical Field

The present disclosure relates to the field of image processing, and in particular, to a method for correcting moire fringes in an image, an electronic device and a medium thereof.

Background

When the sampling frequency of the image sensor of an electronic device is lower than the spatial frequency of the captured scene, high-frequency image information is folded (aliased) into the lower-frequency pixel space, and moire may appear in the captured image, degrading image quality. Moire areas are also often accompanied by many false colors. In the pixel space of an image containing a moire area, the frequency at the photosensitive elements corresponding to the pixel points in the moire area is significantly higher than that at the surrounding pixel points. For example, in an image in YUV (luminance-chrominance) format, the Y (luminance) component of the pixel points in the moire area is significantly higher than that of the surrounding pixel points.

Currently, most manufacturers use an optical low-pass filter in the camera to suppress moire, at the cost of reduced image sharpness. A commonly used moire removal method operates on the raw Bayer image obtained after capture. Because the Bayer image is the raw image produced by the image sensor of the electronic device, such as a CCD (charge-coupled device) or CMOS (complementary metal oxide semiconductor) sensor, this method cannot be used when the raw Bayer image is unavailable. Therefore, a moire correction method that supports common image formats is needed.

Disclosure of Invention

An object of the present application is to provide an image moire correction method, an electronic device, and a medium therefor. With this method, the electronic device detects the luminance change among several consecutive pixel points in a given direction around a pixel point to be detected in the image to be corrected, determines whether a luminance step occurs among these pixel points, and determines that the pixel point to be detected is located in a moire area when the number of pixel points with a step is greater than a step threshold. The electronic device can therefore identify moire areas in the image to be corrected more accurately.

A first aspect of the present application provides an image moire correction method, including: acquiring an image to be corrected; determining whether the pixel points are located in a moire area or not based on the brightness component values of the pixel points in the image to be corrected; and under the condition that the pixel points are positioned in the moire area, performing moire correction on the pixel points.

That is, in the embodiments of the present application, the image to be corrected may, for example, be in YUV (luminance-chrominance) format, and the luminance component value of a pixel point may be its Y (luminance) component.

In a possible implementation of the first aspect, determining whether a pixel point is located in a moire area based on a luminance component of each pixel point in an image to be corrected includes:

selecting, from the image to be corrected, the pixel points included in a detection window for the pixel point to be detected, where the detection window contains the pixel point to be detected and consists of M × M pixel points, M being an odd number greater than 1;

calculating the number of pixel points with brightness steps in the detection window based on the brightness value of each pixel point in the detection window;

and, when the number of pixel points with a brightness step in the detection window exceeds a preset number, determining that the pixel point to be detected is located in the moire area.

In one possible implementation of the first aspect described above, the detection window is a sliding window.

That is, in the embodiments of the present application, the detection window may be, for example, the 5 × 5 sliding window in fig. 6, which contains 25 pixel points. The preset number may be a step threshold. For example, if 10 pixel points in the detection window have a brightness step, that is, the step count of the detection window step_hv is 10, and the preset step threshold is 5, the pixel point to be detected is determined to be located in the moire area.
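The window-level decision above can be sketched in Python. This is a minimal illustration, assuming a per-pixel boolean step map (`step_flags`) has already been computed; the function names and the defaults `m=5` and `step_threshold=5` are hypothetical and only mirror the example values. Positions falling outside the image are skipped here for brevity rather than filled in.

```python
def count_steps_in_window(step_flags, row, col, m=5):
    """Return step_hv: the number of pixels flagged as having a brightness
    step inside the M x M window centred on (row, col)."""
    if m <= 1 or m % 2 == 0:
        raise ValueError("M must be an odd number greater than 1")
    half = m // 2
    rows, cols = len(step_flags), len(step_flags[0])
    step_hv = 0
    for r in range(row - half, row + half + 1):
        for c in range(col - half, col + half + 1):
            # Positions outside the image are ignored in this sketch.
            if 0 <= r < rows and 0 <= c < cols and step_flags[r][c]:
                step_hv += 1
    return step_hv


def in_moire_area(step_flags, row, col, m=5, step_threshold=5):
    """The pixel is in a moire area when step_hv exceeds the threshold."""
    step_hv = count_steps_in_window(step_flags, row, col, m)
    return step_hv > step_threshold, step_hv


# Usage: a 5 x 5 map whose first 10 positions carry a step.
flags = [[r * 5 + c < 10 for c in range(5)] for r in range(5)]
result = in_moire_area(flags, 2, 2)  # (True, 10)
```

Because the comparison is strict, a window with exactly `step_threshold` steps is not classified as moire, matching "exceeds a preset number".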

In a possible implementation of the first aspect, the pixel point to be detected is located at the center of the detection window.

For example, the pixel point to be detected may be the pixel point Y22 in fig. 6.

In a possible implementation of the first aspect, whether a pixel has a brightness step is determined by:

when, in at least one detection direction in the detection window, the change direction of the brightness value from the first pixel point to the second pixel point differs from the change direction from the second pixel point to the third pixel point, determining that a brightness step occurs at the second pixel point, where the first, second, and third pixel points are three consecutive adjacent pixel points in the detection window, and the change direction of the brightness value is either an increase or a decrease.

For example, whether a step occurs may be determined by determining whether the luminance change direction from the first pixel point to the second pixel point is opposite to the luminance change direction from the second pixel point to the third pixel point, and if the luminance change directions are opposite, it is determined that a step occurs.
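A minimal sketch of this opposite-direction test, assuming a luminance plane stored as a list of rows and checking only the horizontal and vertical directions through an interior pixel. `change_direction`, `has_step`, and `step_at` are hypothetical helper names, and noise handling is omitted.

```python
def change_direction(a, b):
    """Direction of the luminance change from a to b: +1 rise, -1 fall, 0 none."""
    return (b > a) - (b < a)


def has_step(y1, y2, y3):
    """True when the change y1 -> y2 and the change y2 -> y3 point in
    opposite directions, i.e. y2 is a local peak or valley."""
    return change_direction(y1, y2) * change_direction(y2, y3) < 0


def step_at(y, r, c):
    """Check the horizontal (left, centre, right) and vertical (up, centre,
    down) triples around interior pixel (r, c); a step in at least one
    direction counts as a step at (r, c)."""
    horizontal = has_step(y[r][c - 1], y[r][c], y[r][c + 1])
    vertical = has_step(y[r - 1][c], y[r][c], y[r + 1][c])
    return horizontal or vertical
```

A flat segment followed by a rise (direction 0 then +1) gives a product of 0, so it is not counted as a step.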

In a possible implementation of the first aspect, whether the luminance change direction from the first pixel point to the second pixel point is the same as that from the second pixel point to the third pixel point is determined as follows. For example, the first, second, and third pixel points may be Y21, Y22, and Y23 in fig. 6, or Y12, Y22, and Y32 in fig. 6.

Calculating the brightness value step sign diffH_r1 of the first pixel point and the second pixel point by the following formula:

wherein diffH1 can be calculated by the following formula:

$$\mathrm{diffH1} = \operatorname{sign}(\mathrm{diff1}) \cdot \mathrm{diffH}_{a1}$$

where sign(diff1) is the sign bit of the luminance value difference diff1 between the first pixel point and the second pixel point, and diffH_a1 can be calculated by the following formula:

$$\mathrm{diffH}_{a1} = \operatorname{abs}(\mathrm{diff1}) - \mathrm{tn}$$

where abs(diff1) is the absolute value of the difference between the brightness values of the first pixel point and the second pixel point, and tn is a noise model calibration parameter;

calculating the brightness value step sign diffH_r2 of the second pixel point and the third pixel point by the following formula:

wherein diffH2 can be calculated by the following formula:

$$\mathrm{diffH2} = \operatorname{sign}(\mathrm{diff2}) \cdot \mathrm{diffH}_{a2}$$

where sign(diff2) is the sign bit of the luminance value difference diff2 between the second pixel point and the third pixel point, and diffH_a2 can be calculated by the following formula:

$$\mathrm{diffH}_{a2} = \operatorname{abs}(\mathrm{diff2}) - \mathrm{tn}$$

where abs(diff2) is the absolute value of the difference between the luminance values of the second pixel point and the third pixel point;

and, when the product of diffH_r1 and diffH_r2 is negative, determining that the brightness change direction from the first pixel point to the second pixel point differs from the brightness change direction from the second pixel point to the third pixel point.

In a possible implementation of the first aspect, the sign bit is -1 when the difference between the luminance values of the first pixel point and the second pixel point is negative, and 1 when that difference is non-negative; likewise, the sign bit is -1 when the difference between the luminance values of the second pixel point and the third pixel point is negative, and 1 when that difference is non-negative.
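The sign-bit rule and the noise-aware step signs can be sketched as follows. The formulas for diffH_r1 and diffH_r2 themselves are not reproduced in the text, so the mapping used here is an assumption: diffH_r is taken to be the sign bit when abs(diff) exceeds tn (diffH_a > 0) and 0 otherwise, so that differences within the noise level do not count as a change. The ordering diff1 = Y2 - Y1 and diff2 = Y3 - Y2 is also assumed; reversing both differences leaves the sign of the product unchanged. Function names are hypothetical.

```python
def sign_bit(d):
    """Sign bit as defined in the text: -1 for a negative difference, 1 otherwise."""
    return -1 if d < 0 else 1


def step_sign(d, tn):
    """Noise-aware step sign diffH_r for one luminance difference d.

    diffH_a = abs(d) - tn and diffH = sign_bit(d) * diffH_a follow the text.
    ASSUMPTION: the diffH -> diffH_r mapping is not given in the text; here
    diffH_r is the sign of diffH when the difference exceeds tn, else 0.
    """
    diffH_a = abs(d) - tn
    if diffH_a <= 0:
        return 0  # within the noise level: treated as "no change"
    diffH = sign_bit(d) * diffH_a
    return 1 if diffH > 0 else -1


def opposite_directions(y1, y2, y3, tn):
    """True when diffH_r1 * diffH_r2 is negative."""
    diffH_r1 = step_sign(y2 - y1, tn)  # diff1: first -> second (assumed order)
    diffH_r2 = step_sign(y3 - y2, tn)  # diff2: second -> third (assumed order)
    return diffH_r1 * diffH_r2 < 0
```

With tn = 3, a 10-level peak counts as a step, while a 2-level ripple is absorbed by the noise term and does not.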

In a possible implementation of the first aspect, the noise model calibration parameter is used to remove a noise signal in an absolute value of a difference between luminance values of the first pixel point and the second pixel point and an absolute value of a difference between luminance values of the second pixel point and the third pixel point.

For example, taking Y23 and Y22 in fig. 6, the noise model calibration parameter represents the noise magnitude of the Y components of Y23 and Y22. The noise model calibration parameter is adjusted according to the Y component values of the pixels in the sliding window and can change as those values change.

In a possible implementation of the first aspect, it is determined that a brightness step is generated at the second pixel point when the brightness value of the first pixel point is smaller than the brightness value of the second pixel point, the absolute value of the difference between the brightness values of the first pixel point and the second pixel point is greater than the first threshold, the brightness value of the third pixel point is smaller than the brightness value of the second pixel point, and the absolute value of the difference between the brightness values of the third pixel point and the second pixel point is greater than the second threshold.

For example, taking Y21, Y22, and Y23 in fig. 6, when the Y component falls from Y21 to Y22 and rises from Y22 to Y23, a luminance step is determined to occur at Y22.
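The threshold form of the condition stated in the implementation above (middle pixel brighter than both neighbors by more than a threshold on each side) can be written as a single predicate. This sketch covers only that peak case; `peak_step`, `first_threshold`, and `second_threshold` are hypothetical names, and the threshold values in the checks are illustrative.

```python
def peak_step(y1, y2, y3, first_threshold, second_threshold):
    """Step at y2 when y2 exceeds y1 by more than first_threshold and
    exceeds y3 by more than second_threshold."""
    return (
        y1 < y2 and abs(y1 - y2) > first_threshold
        and y3 < y2 and abs(y3 - y2) > second_threshold
    )
```

Using separate thresholds for the two sides lets each comparison be tuned independently, for example to match a noise estimate that differs between neighbors.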

In a possible implementation of the first aspect, performing moire correction on a pixel point when the pixel point is located in a moire area includes:

calculating a texture evaluation index of the detection window based on the brightness values of all pixel points in the detection window, where the texture evaluation index describes the distribution of the brightness values of the pixel points in the detection window.

For example, in fig. 6, the texture evaluation index of a sliding window centered on Y22 is calculated.

In one possible implementation of the first aspect, the texture evaluation index of the detection window is calculated as follows:

calculating the brightness value step sign texH_r3 between each pixel point in the detection window and the fourth pixel point by the following formula:

wherein, the texH3 can be calculated by the following formula:

$$\mathrm{texH3} = \operatorname{sign}(\mathrm{tex3}) \cdot \mathrm{texH}_{a3}$$

where sign(tex3) is the sign bit of the difference between the brightness values of each pixel point and the fourth pixel point, and texH_a3 can be calculated by the following formula:

$$\mathrm{texH}_{a3} = \operatorname{abs}(\mathrm{tex3}) - \mathrm{tn}$$

where abs(tex3) is the absolute value of the difference between the brightness values of each pixel point and the fourth pixel point, and tn is a noise model calibration parameter;

and summing the brightness value step signs texH_r3 between each pixel point in the detection window and the fourth pixel point, the sum being recorded as the texture evaluation index of the detection window.
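A sketch of the texture evaluation index under two stated assumptions. First, the text does not define the "fourth pixel point" precisely; here it is hypothetically taken as the pixel two columns to the right (skipping one pixel), and pairs that would leave the window are ignored. Second, the texH_r3 formula is not reproduced, so it is assumed to return the sign of tex3 when abs(tex3) exceeds tn and 0 otherwise. `tex_sign`, `texture_index`, and `offset` are hypothetical names.

```python
def tex_sign(tex3, tn):
    """Assumed texH_r3: sign bit of tex3 when abs(tex3) - tn > 0, else 0."""
    texH_a3 = abs(tex3) - tn
    if texH_a3 <= 0:
        return 0
    return -1 if tex3 < 0 else 1


def texture_index(window, tn, offset=2):
    """step_tex: sum of texH_r3 over the window.

    HYPOTHETICAL: each pixel is compared with the pixel `offset` columns to
    its right; pairs that would fall outside the window are skipped.
    """
    step_tex = 0
    for row in window:
        for c in range(len(row) - offset):
            tex3 = row[c + offset] - row[c]
            step_tex += tex_sign(tex3, tn)
    return step_tex
```

Under these assumptions a steady gradient accumulates a large index, while a period-2 pattern (pixel equal to its every-other neighbor) contributes nothing.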

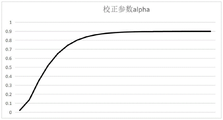

In a possible implementation of the first aspect, a correction parameter for moire correction of the pixel point to be detected is calculated from the number of pixel points with a brightness step in the detection window and the texture evaluation index of the detection window, using the following formulas:

$$\mathrm{moire} = \frac{\mathrm{step\_hv}}{\mathrm{step\_tex}}$$

$$\alpha = \frac{k_1}{1 + e^{-a\,(\mathrm{moire} - b)}} - k_2$$

where step_hv is the number of pixel points with a brightness step in the detection window, step_tex is the texture evaluation index of the detection window, alpha is the correction parameter, and a, b, k1, and k2 are tuning parameters.
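The two formulas above translate directly into a few lines of Python. This is a sketch, not a reference implementation: `correction_alpha` is a hypothetical name, the parameter values in the checks are illustrative, and the function does not guard against step_tex = 0, which the text does not address.

```python
import math


def correction_alpha(step_hv, step_tex, a, b, k1, k2):
    """moire = step_hv / step_tex; alpha = k1 / (1 + exp(-a * (moire - b))) - k2.

    a sets the steepness of the logistic curve and b its midpoint;
    k1 and k2 scale and shift the output.
    """
    moire = step_hv / step_tex
    return k1 / (1.0 + math.exp(-a * (moire - b))) - k2
```

With k1 = 1 and k2 = 0, alpha is a logistic function of the moire score that stays in the open interval (0, 1) and equals 0.5 when moire = b.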

In a possible implementation of the first aspect, the luminance value of the pixel point to be detected is corrected by the following formula:

$$\mathrm{CorY} = \beta \cdot \mathrm{med} + (1 - \beta) \cdot \mathrm{mean}$$

$$Y_{\mathrm{out}} = \alpha \cdot \mathrm{CorY} + (1 - \alpha) \cdot Y$$

where med and mean are the median and the mean of the brightness values of the pixel point to be detected and of the pixel points lying in at least one direction through it, with the pixel point to be detected as the center; beta is an adjustment parameter, CorY is the correction component of the brightness value of the pixel point to be detected, Y is the brightness value of the pixel point to be detected, and Yout is its corrected brightness value.
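The median/mean blend can be sketched as follows, assuming that "at least one direction" means the horizontal and vertical lines through the pixel to be detected (a cross-shaped neighborhood, consistent with the cross median filtering mentioned in the detailed description). `cross_neighbourhood` and `correct_pixel` are hypothetical names; alpha and beta are passed in as plain numbers.

```python
from statistics import mean, median


def cross_neighbourhood(window):
    """Pixels on the centre row and centre column of an odd-sized square
    window; the centre pixel is included exactly once."""
    m = len(window)
    h = m // 2
    values = list(window[h])                               # centre row
    values += [window[r][h] for r in range(m) if r != h]   # centre column
    return values


def correct_pixel(window, alpha, beta):
    """Yout = alpha * CorY + (1 - alpha) * Y, with
    CorY = beta * med + (1 - beta) * mean over the cross neighbourhood."""
    y = window[len(window) // 2][len(window) // 2]
    values = cross_neighbourhood(window)
    cor_y = beta * median(values) + (1 - beta) * mean(values)
    return alpha * cor_y + (1 - alpha) * y
```

Setting beta close to 1 favors the median, which suppresses an isolated bright or dark center pixel without averaging it into its neighbors; alpha = 0 leaves the pixel unchanged.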

In one possible implementation of the first aspect, in a case that a distance between the pixel point to be detected and at least one boundary of the image to be corrected is less than M, the partial region of the detection window is located outside the boundary of the image to be corrected.

In a possible implementation of the first aspect, the partial region is filled with the luminance values of pixel points in the detection window, mirrored about an axis that passes through the pixel point to be detected and is parallel to the boundary.

For example, in fig. 13a and 13b, Y22 is located at the left boundary of the image to be corrected. In the sliding window centered on Y22, Y22 and the pixel points Y02, Y12, Y32, and Y42 in the same column therefore have no adjacent pixel points on their left. In this case, the pixel points on the right side can be mirrored about the column formed by Y02, Y12, Y22, Y32, and Y42 to fill in the missing pixel points.
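Mirror padding of this kind can be sketched by reflecting out-of-range indices about the boundary pixel, so the boundary row or column itself is not duplicated (index -1 maps to 1, -2 to 2). The helper names are hypothetical, and the sketch assumes a luminance plane stored as a list of rows.

```python
def reflect_index(i, n):
    """Mirror an out-of-range index about the first/last pixel without
    repeating it: -1 -> 1, -2 -> 2, n -> n - 2."""
    if n == 1:
        return 0
    while i < 0 or i >= n:
        if i < 0:
            i = -i
        if i >= n:
            i = 2 * (n - 1) - i
    return i


def window_with_mirror(y, row, col, m=5):
    """M x M window centred on (row, col); positions outside the image are
    filled by mirroring about the boundary pixel."""
    half = m // 2
    rows, cols = len(y), len(y[0])
    return [
        [y[reflect_index(r, rows)][reflect_index(c, cols)]
         for c in range(col - half, col + half + 1)]
        for r in range(row - half, row + half + 1)
    ]
```

For a pixel on the left border, each window row becomes, for example, `[2, 1, 0, 1, 2]`, i.e. the right-hand neighbors copied to the left. NumPy's `np.pad(..., mode="reflect")` uses the same edge-excluding reflection if the plane is held as an array.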

In one possible implementation of the first aspect described above, the moire correction method is implemented by an image signal processor of the electronic device, and the image to be corrected is an image taken by a camera of the electronic device.

In one possible implementation of the first aspect, the image to be corrected is in a luminance-chrominance color space format, such as YUV.

In one possible implementation of the first aspect, the detection direction is a horizontal direction or a vertical direction or a diagonal direction in the region to be corrected.

In one possible implementation of the first aspect, the detection window is determined in the image to be corrected by a preset step size.

In a possible implementation of the first aspect, when the next detection window is determined in the image to be corrected and that window also contains the second pixel point, the earlier determination that a step occurs at the second pixel point is reused for the next detection window.

For example, as shown in fig. 6 to 8, the detection window moves from being centered on Y22 to being centered on Y23, and for Y22, the detection window may use the previous detection result to determine that a step is generated at Y22.
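One way to realize this reuse is to memoize the per-pixel step test, so that when the window slides from Y22 to Y23 only the newly exposed column is evaluated. The sketch assumes horizontal and vertical checks without noise handling and treats image-border pixels as step-free; `make_step_counter` and the call counter are hypothetical and exist only to make the reuse visible.

```python
from functools import lru_cache


def make_step_counter(y, m=5):
    """Return (step_hv, calls): step_hv(row, col) counts step pixels in the
    M x M window, and calls["n"] counts how many step tests actually ran."""
    rows, cols = len(y), len(y[0])
    half = m // 2
    calls = {"n": 0}

    @lru_cache(maxsize=None)
    def step_at(r, c):
        calls["n"] += 1
        if not (0 < r < rows - 1 and 0 < c < cols - 1):
            return False  # border pixels lack a full neighbourhood here
        mid = y[r][c]
        horizontal = (mid - y[r][c - 1]) * (y[r][c + 1] - mid) < 0
        vertical = (mid - y[r - 1][c]) * (y[r + 1][c] - mid) < 0
        return horizontal or vertical

    def step_hv(row, col):
        return sum(
            step_at(r, c)
            for r in range(max(0, row - half), min(rows, row + half + 1))
            for c in range(max(0, col - half), min(cols, col + half + 1))
        )

    return step_hv, calls


# Usage on a 7 x 7 checkerboard, where every interior pixel is a step.
board = [[10 if (r + c) % 2 == 0 else 50 for c in range(7)] for r in range(7)]
step_hv, calls = make_step_counter(board)
```

For a whole image, computing the full step map once and summing it per window (for example with a summed-area table) achieves the same effect without a cache.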

A second aspect of the present application provides a readable medium, in which instructions are stored, and when the instructions are executed by an electronic device, the electronic device executes the image moire correction method provided in the foregoing first aspect.

A third aspect of the present application provides an electronic device comprising:

a memory storing instructions; and

a processor configured to read and execute the instructions in the memory, so that the electronic device performs the image moire correction method provided in the foregoing first aspect.

In one possible implementation of the above third aspect, the processor comprises an image signal processor.

Drawings

FIG. 1 illustrates a scene schematic of moire correction, according to some embodiments of the present application;

fig. 2a shows a schematic diagram of a step occurring at a pixel point to be detected in an image to be corrected according to some embodiments of the present application;

fig. 2b is a schematic diagram illustrating another example of a step occurring in a pixel point to be detected in an image to be corrected according to some embodiments of the present application;

fig. 2c shows a schematic diagram of an image to be corrected in which no step occurs in a pixel point to be detected according to some embodiments of the present application;

FIG. 3 is a block diagram of a mobile phone capable of implementing the Moire correction method according to an embodiment of the present application;

fig. 4 is a block diagram illustrating an image signal processor ISP capable of implementing the moire correction method according to an embodiment of the present application;

FIG. 5 illustrates a flow chart of Moire correction of an image according to an embodiment of the present application;

FIG. 6 shows a schematic view of a sliding window according to an embodiment of the present application;

fig. 7a shows a schematic diagram of a pixel to be detected located at a boundary of a sliding window according to an embodiment of the present application;

fig. 7b shows a schematic diagram of padding neighboring pixels of a boundary pixel according to an embodiment of the present application;

FIG. 8 is a schematic diagram illustrating a sliding window sliding to a next pixel point to be detected according to a step length according to an embodiment of the present application;

fig. 9 is a schematic diagram illustrating luminance components of neighboring pixels of the pixel Y22 to be detected according to an embodiment of the application;

FIG. 10 shows a schematic of an image of a correction parameter for moire correction, in accordance with an embodiment of the present application;

FIG. 11 shows a schematic view of another sliding window, according to an embodiment of the present application;

FIG. 12 illustrates a flow chart for Moire correction of an image, according to an embodiment of the present application;

fig. 13a is a schematic diagram illustrating a pixel point to be detected located at a boundary of a sliding window according to an embodiment of the present application;

fig. 13b is a schematic diagram illustrating padding of neighboring pixels of a boundary pixel according to an embodiment of the present application;

FIG. 14 illustrates a structural block diagram of an electronic device, according to some embodiments of the present application;

FIG. 15 illustrates a schematic diagram of a structure of an SOC based electronic device, according to some embodiments of the present application.

DETAILED DESCRIPTION OF EMBODIMENT(S) OF INVENTION

Illustrative embodiments of the present application include, but are not limited to, an image moire correction method, and an electronic device and medium therefor.

FIG. 1 is a schematic view of a moire correction scenario according to an embodiment of the present application.

As shown in fig. 1, when the electronic device 100 photographs the target object 101, moire may appear in the captured image 102 for the reasons described above. The moire correction technique disclosed in the present application detects the brightness change among several consecutive pixel points in a given direction around the pixel point to be detected in the image 102 to determine whether a brightness step occurs among them, and thereby whether the pixel point to be detected is in a moire area.

For example, figs. 2a to 2c show cases in which a step does or does not occur between a pixel point to be detected and the pixel points to its left and right. In the horizontal direction, whether a step occurs can be determined by checking whether the brightness change direction from the pixel point on the left to the pixel point to be detected is opposite to the brightness change direction from the pixel point to be detected to the pixel point on the right. If the directions are opposite, that is, the brightness rises and then falls, or falls and then rises, at the pixel point to be detected, a step occurs. The vertical direction is handled in the same way: a step occurs if the brightness change direction from the pixel point above to the pixel point to be detected is opposite to the change direction from the pixel point to be detected to the pixel point below.

For example, an increase in brightness from one pixel point to the next is recorded as +1, a decrease as -1, and no change as 0. As shown in fig. 2a, when the brightness change direction from the pixel point on the left to the pixel point to be detected is +1 and the change direction from the pixel point to be detected to the pixel point on the right is -1, the brightness rises and then falls, which indicates that a step occurs at the pixel point to be detected; the pixel point to be detected is therefore in the moire area.

Fig. 2b shows the case in which the brightness falls and then rises. Here, the brightness change direction from the pixel point on the left to the pixel point to be detected is -1, and the change direction from the pixel point to be detected to the pixel point on the right is +1. This indicates that a step occurs at the pixel point to be detected, so it is in the moire area.

Fig. 2c shows a case in which the brightness first stays unchanged and then rises: the brightness change direction from the pixel point on the left to the pixel point to be detected is 0, and the change direction from the pixel point to be detected to the pixel point on the right is +1. No step occurs at the pixel point to be detected, so it is not in the moire area.

Then, when a pixel point is determined to be in the moire area, moire correction is performed on it; for example, correcting the image 102 in fig. 1 yields the image 103.

Specifically, the moire correction method of the present application includes: counting the number of steps in the sliding window around the pixel point to be detected; calculating the sum of the brightness change direction values between each pixel point in the sliding window and the pixel points located every other row and column from it, and using this sum as the texture evaluation index of the sliding window; determining a correction parameter from the step count and the texture evaluation index; and correcting the pixel point to be detected according to the correction parameter to eliminate moire. The method determines whether a step occurs at the pixel point to be detected by checking whether the brightness change directions between it and its adjacent pixel points in the sliding window are opposite, detects moire from the number of steps, and then corrects the detected moire.

With this method, moire in the image to be corrected can be detected and corrected more accurately. Besides images in YUV format, the method can also be applied to images in other formats. In addition, because cross-shaped median filtering is used, moire is suppressed while details in the image are better preserved than with conventional low-pass filtering.

In the embodiments of the present application, the image to be corrected is in YUV format, a common color space. The Y component represents luminance (luma), that is, the brightness component of the image to be corrected, while the U and V components represent chrominance (chroma) and describe the color and saturation of the image, that is, the two chrominance components of the image to be corrected.

It is to be understood that although the present application uses the YUV format as an example, the technical solution is also applicable to any color space that has a luminance component. For example, the image to be corrected may be in YCbCr format, where Y is the luminance component, Cb is the blue-difference chrominance component, and Cr is the red-difference chrominance component.

The image to be corrected may be captured by the electronic device 100 through its own camera, or captured by another device and then sent to the electronic device 100 for moire correction. After acquiring the image to be corrected, the electronic device 100 converts it to YUV format if it is not already in that format.
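As an illustration of the conversion step, the sketch below converts an RGB image to YUV with NumPy. The choice of the BT.601 analog YUV equations (Y = 0.299R + 0.587G + 0.114B, U = 0.492(B - Y), V = 0.877(R - Y)) and the function name rgb_to_yuv_bt601 are assumptions for illustration only; the present application does not specify a conversion matrix, and only the Y component is used by the detection and correction steps.

```python
import numpy as np


def rgb_to_yuv_bt601(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB array to YUV (H x W x 3).

    Assumption: BT.601 luma weights with analog YUV chroma scaling; the
    source text does not say which RGB-to-YUV matrix is used.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance (Y)
    u = 0.492 * (b - y)                    # blue-difference chroma (U)
    v = 0.877 * (r - y)                    # red-difference chroma (V)
    return np.stack([y, u, v], axis=-1)
```

Usage: `luma = rgb_to_yuv_bt601(image)[..., 0]` gives the Y plane that the sliding window traverses.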

It is understood that, in the embodiments of the present application, the electronic device 100 may be any device capable of capturing and processing images, for example a device with a camera such as a mobile phone, computer, laptop computer, tablet computer, television, game console, display device, outdoor display screen, vehicle-mounted terminal, or digital camera. For convenience of explanation, the mobile phone 100 is used as an example in the following description.

Fig. 3 shows a block diagram of a mobile phone 100 capable of implementing the moire correction method according to an embodiment of the present application. Specifically, as shown in fig. 3, the mobile phone 100 may include a processor 110, an RF circuit 120, a wireless communication module 125, a display 130, a camera 140, an image signal processor (ISP) 141, an external memory interface 150, an internal memory 151, an audio module 160, a sensor module 170, an input unit 180, and a power supply 190.

It is to be understood that the exemplary structure of the embodiments of the present application does not constitute a specific limitation to the mobile phone 100. In other embodiments of the present application, the handset 100 may include more or fewer components than shown, or combine certain components, or split certain components, or a different arrangement of components. The illustrated components may be implemented in hardware, software, or a combination of software and hardware.

Processor 110 may include one or more processing units. For example, the processor 110 may include an application processor (AP), a modem processor, a graphics processing unit (GPU), an image signal processor (ISP), a controller, a video codec, a digital signal processor (DSP), a data processing unit (DPU), a baseband processor, and/or a neural-network processing unit (NPU). The different processing units may be separate devices or may be integrated into one or more processors.

A memory may also be provided in the processor 110 for storing instructions and data. In some embodiments, the memory in the processor 110 is a cache, which may hold instructions or data that the processor 110 has just used or uses repeatedly. If the processor 110 needs to use those instructions or data again, it can fetch them directly from this memory. Avoiding repeated accesses reduces the waiting time of the processor 110 and thus improves system efficiency.

The RF circuit 120 may be used to receive and transmit signals during message transmission or a call. In particular, it receives downlink information from a base station and passes it to the one or more processors 110, and it transmits uplink data to the base station. In general, the RF circuit 120 includes, but is not limited to, an antenna, at least one amplifier, a tuner, one or more oscillators, a subscriber identity module (SIM) card, a transceiver, a coupler, a low noise amplifier (LNA), a duplexer, and the like. The RF circuit 120 may also communicate with networks and other devices via wireless communication, which may use any communication standard or protocol, including but not limited to Global System for Mobile communications (GSM), General Packet Radio Service (GPRS), Code Division Multiple Access (CDMA), Wideband Code Division Multiple Access (WCDMA), Long Term Evolution (LTE), e-mail, and Short Messaging Service (SMS).

The wireless communication module 125 can provide wireless communication solutions for the mobile phone 100, including wireless local area networks (WLAN) (such as Wi-Fi networks), Bluetooth (BT), global navigation satellite system (GNSS), frequency modulation (FM), near field communication (NFC), infrared (IR), and the like. The wireless communication module 125 may be one or more devices integrating at least one communication processing module. It receives electromagnetic waves via an antenna, performs frequency modulation and filtering on the received signals, and sends the processed signals to the processor 110. It can also receive a signal to be transmitted from the processor 110, frequency-modulate and amplify it, and convert it into electromagnetic waves radiated through the antenna. In some embodiments, the wireless communication module 125 can implement multicarrier techniques of the above Wi-Fi-based communication protocols, enabling the mobile phone 100 to achieve ultra-wideband transmission over existing Wi-Fi protocols.

The mobile phone 100 may implement the camera function through the camera 140, the ISP 141, the video codec, the GPU, the display 130, the application processor, and the like.

The ISP 141 processes data fed back by the camera 140. For example, when a photo is taken, the shutter opens and light passes through the lens onto the camera's photosensitive element, which converts the optical signal into an electrical signal and passes it to the ISP 141, where it is processed into an image visible to the naked eye. The ISP 141 may also apply algorithmic optimization to the noise, brightness, and skin tones of the image, and may optimize parameters of the shooting scene such as exposure and color temperature. In some embodiments of the present application, moire patterns produced by the camera 140 in captured images may be processed by the ISP 141. The photosensitive element here may be the image sensor 142. An analog-to-digital converter 143 (not shown) may receive the electrical signals of the image area from the image sensor 142 and convert them into digital pixel data.

It should be noted that in some embodiments of the present application, the ISP141 may be disposed inside the camera 140, and in other embodiments of the present application, the ISP141 may also be disposed outside the camera 140, for example, in the processor 110, which is not limited herein.

The camera 140 is used to capture still images or video. An optical image of an object is formed through the lens and projected onto the photosensitive element, which may be a charge-coupled device (CCD) or a complementary metal-oxide-semiconductor (CMOS) phototransistor. The photosensitive element converts the optical signal into an electrical signal and passes it to the ISP, which converts it into a digital image signal. The ISP 141 outputs the digital image signal to the DSP, which converts it into an image signal in a standard format such as RGB or YUV. In some embodiments, the mobile phone 100 may include 1 or N cameras 140, where N is a positive integer greater than 1.

The digital signal processor processes digital signals; besides digital image signals, it can process other digital signals. For example, when the mobile phone 100 performs frequency bin selection, the digital signal processor performs a Fourier transform or a similar operation on the frequency bin energy.

Video codecs are used to compress or decompress digital video. The mobile phone 100 may support one or more video codecs, so that it can play or record video in a variety of encoding formats, such as Moving Picture Experts Group (MPEG)-1, MPEG-2, MPEG-3, and MPEG-4.

The external memory interface 150 may be used to connect an external memory card, such as a Micro SD card, to extend the storage capability of the mobile phone 100.

The internal memory 151 may be used to store computer-executable program code, which includes instructions. The internal memory 151 may include a program storage area and a data storage area. In some embodiments of the present application, the processor 110 performs various functional applications and data processing by executing instructions stored in the internal memory 151 and/or instructions stored in a memory provided in the processor. For example, the internal memory 151 may store the image on which moire correction is to be performed.

The mobile phone 100 further includes an audio module 160, which may include a speaker, a receiver, a microphone, an earphone interface, an application processor, and the like, to implement audio functions such as music playback and recording.

The handset 100 also includes a sensor module 170, wherein the sensor module 170 may include a pressure sensor, a gyroscope sensor, an air pressure sensor, a magnetic sensor, an acceleration sensor, a distance sensor, a proximity light sensor, a fingerprint sensor, a temperature sensor, a touch sensor, an ambient light sensor, and the like.

The input unit 180 may be used to receive input numeric or character information and generate keyboard, mouse, joystick, optical or trackball signal inputs related to user settings and function control.

The mobile phone 100 also includes a power supply 190 (for example, a battery) that powers the various components. Preferably, the power supply 190 is logically coupled to the processor 110 through a power management system, so that charging, discharging, and power consumption are managed by the power management system. The power supply 190 may further include one or more DC or AC power sources, a recharging system, a power failure detection circuit, a power converter or inverter, a power status indicator, and other such components.

It is understood that the structure shown in fig. 3 is only one specific structure for implementing the functions of the mobile phone 100 in the technical solution of the present application. Other structures are possible, and a mobile phone with a different structure that implements similar functions is also applicable to the technical solution of the present application; no limitation is imposed herein.

It is to be understood that the moire correction of the image in the present application may be performed by the ISP 141 of the camera of the mobile phone 100 or by the processor 110 of the mobile phone 100; alternatively, the mobile phone 100 may send the image to a server, which performs the correction. No limitation is imposed herein.

For convenience of explanation, the ISP 141 in the mobile phone 100 is used as an example below. Fig. 4 is a schematic structural diagram of the ISP 141. As shown in fig. 4, the ISP 141 includes a moire detection unit 141A, a moire correction unit 141B, and a color correction unit 141C, which operate as follows:

The analog-to-digital converter 143 receives the pixel voltages of the image area from the image sensor 142 and converts them into digital pixel data. Based on the differences between the luminance component of the pixel to be detected and those of its surrounding pixels, the moire detection unit 141A determines whether a luminance step occurs in at least one direction through the pixel to be detected, and thereby whether that pixel is in a moire area. The moire correction unit 141B determines a correction parameter from the luminance steps in at least one direction through the pixel to be detected and from the texture evaluation index, and performs moire correction on pixels to be detected that lie in the moire area. The color correction unit 141C performs false-color suppression on the image data output by the moire correction unit 141B and sends the data through the I/O interface to the CPU for processing, yielding the image that is finally displayed.

Based on the structures shown in fig. 3 and fig. 4, the moire correction scheme of the embodiments of the present application is described in detail below; the scheme can be implemented by the moire detection unit 141A and the moire correction unit 141B in fig. 4.

Fig. 5 shows a flowchart of moire correction of an image. As shown in fig. 5, the method includes the following steps:

S501: the moire detection unit 141A acquires an image to be corrected.

It is understood that the image to be corrected may be an image captured by the camera 140 in real time, or may be an image stored in the internal memory 151 or the external memory of the mobile phone 100.

S502: the moire detection unit 141A determines whether the pixel point to be detected is in a moire area. If yes, S504 is executed to perform moire correction on the pixel point to be detected. Otherwise, S503 is executed, and the moire detection unit 141A proceeds to detect the next pixel point.

It can be understood that the moire detection unit 141A may traverse the image to be corrected with a sliding window to obtain each pixel point to be detected. Fig. 6 is a schematic diagram of the sliding window in an embodiment of the present application. The sliding window may have a size of m × m, where m is an odd number greater than or equal to 3; fig. 6 shows the case m = 5. Because both the length and the width of the window are odd, the center of the window is a single pixel, which can conveniently serve as the pixel point to be detected.

For example, when a 5 × 5 sliding window traverses the image to be corrected, each position of the window yields the Y components of 25 pixels, forming a 5 × 5 block of Y components, and the center of the window is taken as the pixel point to be detected. As shown in fig. 6, Y22 denotes the current pixel point to be detected, that is, the Y component of the pixel in row 2, column 2 of the 5 × 5 window (rows and columns numbered from 0). The Y component is the luminance in YUV format.
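The traversal can be sketched as follows, assuming the Y plane is held in a NumPy array; the helper name iter_windows is hypothetical. It visits every position where the full m × m window fits inside the image, in row-major order with a step of 1, and yields the window whose center element window[m // 2, m // 2] corresponds to Y22 when m = 5. Border pixels, which require mirroring, are not handled by this sketch.

```python
import numpy as np


def iter_windows(luma: np.ndarray, m: int = 5):
    """Yield (row, col, window) for each m x m window fully inside `luma`.

    (row, col) is the image position of the pixel to be detected, which is
    window[m // 2, m // 2]. The window slides by 1 pixel, row by row.
    """
    if m < 3 or m % 2 == 0:
        raise ValueError("m must be an odd integer >= 3")
    h, w = luma.shape
    r = m // 2
    for row in range(r, h - r):
        for col in range(r, w - r):
            yield row, col, luma[row - r:row + r + 1, col - r:col + r + 1]
```

The returned window is a view into the image, so no pixel data is copied while scanning.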

In some embodiments, as described above, the moire detection unit 141A may determine whether the pixel point to be detected is in a moire area by checking whether luminance steps occur between adjacent pixels along some direction through the pixel point to be detected, within the sliding window centered on it (for example, the horizontal direction, the vertical direction, a direction at 45 degrees to the horizontal, or any other direction). Specifically, for the horizontal direction, whether a step occurs at the pixel point to be detected can be determined by checking whether the luminance change direction from the left adjacent pixel to the pixel point to be detected is opposite to the luminance change direction from the pixel point to be detected to the right adjacent pixel; if the two directions are opposite, a step occurs. The vertical direction is handled in the same way: a step occurs if the luminance change direction from the upper adjacent pixel to the pixel point to be detected is opposite to the change direction from the pixel point to be detected to the lower adjacent pixel, that is, if the luminance first increases and then decreases, or first decreases and then increases.

The process of determining the moire area is described below using the horizontal and vertical directions as examples. The following steps and formulas determine whether steps occur between adjacent pixel points in the horizontal and vertical directions of the pixel point to be detected, and thereby whether the pixel point to be detected is in the moire area.

The method for determining whether the pixel point to be detected is in the moire area by the moire detection unit 141A includes:

a) The moire detection unit 141A calculates a difference value of Y components of adjacent pixel points of the pixel point to be detected in the horizontal and vertical directions.

Taking Y22 as the current pixel point to be detected, as shown in fig. 6, there are five pixel points Y20, Y21, Y22, Y23, and Y24 in the horizontal direction through Y22, so the Y-component differences between Y21 and Y20, Y22 and Y21, Y23 and Y22, and Y24 and Y23 need to be calculated. Similarly, in the vertical direction, the Y-component differences between Y12 and Y02, Y22 and Y12, Y32 and Y22, and Y42 and Y32 need to be calculated.

Continuing with Y22, and taking the pairs Y21/Y22 and Y22/Y23 as examples, the Y-component difference between Y22 and its right horizontal neighbor Y23 can be calculated by the following formula (one):

$$\mathrm{diff}_{23-22} = Y_{23} - Y_{22} \qquad \text{(one)}$$

where $\mathrm{diff}_{23-22}$ is the difference in the Y component between Y23 and Y22 in the horizontal direction.

Likewise, $\mathrm{diff}_{22-21} = Y_{22} - Y_{21}$ is the difference in the Y component between Y22 and Y21 in the horizontal direction.

b) The moire detection unit 141A removes noise from the difference of the Y components of the adjacent pixel points.

Using the following formula (two), the moire detection unit 141A subtracts a preset noise model calibration parameter from the absolute value of the Y-component difference between Y22 and Y23:

$$\mathrm{diffHa}_{23-22} = \mathrm{abs}(\mathrm{diff}_{23-22}) - tn \qquad \text{(two)}$$

where abs(·) denotes the absolute value, and tn is a preset noise model calibration parameter representing the noise level of the pixel luminance values, that is, of the Y component. tn adapts to the Y values of the pixels in the sliding window and can change as those values change: when the pixels in the window belong to high-frequency image information, that is, when their Y values are larger, tn can be increased accordingly. For example, when the average Y value of the pixels in the sliding window is 100, tn may be 80; when the average is 80, tn may be 60.

It can be understood that tn may take different values depending on the average brightness of the block, and may also take different values depending on the frequency characteristics of the region containing the pixel (for example, edges, flat regions, or textured regions).
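The text gives no formula for tn, so the following is only an illustration of choosing tn from the mean luminance of the window. The calibration table, the linear interpolation between its points, and the name noise_threshold are hypothetical; only the two (mean Y, tn) pairs (80, 60) and (100, 80) come from the example above. A real table would come from sensor noise calibration and could also depend on whether the region is an edge, flat, or textured.

```python
import numpy as np

# Hypothetical calibration table. Only the two (mean Y, tn) pairs come from
# the example in the text; a real table would be measured per sensor.
MEAN_Y_POINTS = [80.0, 100.0]
TN_POINTS = [60.0, 80.0]


def noise_threshold(window: np.ndarray) -> float:
    """Pick tn from the mean Y value of the sliding window.

    Linear interpolation between table points; means outside the table
    are clamped to the nearest endpoint (np.interp behaviour).
    """
    mean_y = float(np.mean(window))
    return float(np.interp(mean_y, MEAN_Y_POINTS, TN_POINTS))
```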

Similarly, for Y22 and Y21, the noise in their Y-component difference is removed by $\mathrm{diffHa}_{22-21} = \mathrm{abs}(\mathrm{diff}_{22-21}) - tn$.

c) The moire detection unit 141A converts the noise-reduced Y-component difference between the pixel point to be detected and its adjacent pixel point into a signed value that encodes their change direction in the Y component.

The moire detection unit 141A applies the sign operation to the difference between the Y components of the pixel point to be detected Y22 and its adjacent pixel point Y23, and multiplies the result by the value obtained in step b):

$$\mathrm{diffH}_{23-22} = \mathrm{sign}(\mathrm{diff}_{23-22}) \cdot \mathrm{diffHa}_{23-22} \qquad \text{(three)}$$

where the function sign extracts the sign of $\mathrm{diff}_{23-22}$: when $\mathrm{diff}_{23-22} \ge 0$, $\mathrm{sign}(\mathrm{diff}_{23-22}) = 1$; when $\mathrm{diff}_{23-22} < 0$, $\mathrm{sign}(\mathrm{diff}_{23-22}) = -1$.

Similarly, for Y22 and Y21, $\mathrm{diffH}_{22-21} = \mathrm{sign}(\mathrm{diff}_{22-21}) \cdot \mathrm{diffHa}_{22-21}$.

d) The moire detection unit 141A obtains the change direction of the pixel point to be detected and the adjacent pixel point thereof on the Y component.

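The sign operation is applied again to the value $\mathrm{diffH}_{23-22}$ obtained in step c), as in the following formula (four), and the change direction between Y22 and Y23 in the Y component is determined from the result (here sign returns 1, -1, or 0 for a positive, negative, or zero argument):

$$\mathrm{diffHr}_{23-22} = \mathrm{sign}(\mathrm{diffH}_{23-22}) \qquad \text{(four)}$$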

When $\mathrm{diffHr}_{23-22}$ is greater than 0, the Y component increases from Y22 to Y23; when it is less than 0, the Y component decreases from Y22 to Y23; when it equals 0, the Y component does not change from Y22 to Y23.

Similarly, the moire detection unit 141A acquires the change direction of Y22 and Y21 in the Y component.

Next, using the Y values of Y21, Y22, and Y23 shown in fig. 6, formulas (one) to (four) are applied to determine whether a step occurs at Y22. With Y23 = 1.5, Y22 = 1, Y21 = 2.5, and tn = 0.4, for Y23 and Y22:

$\mathrm{diff}_{23-22} = Y_{23} - Y_{22} = 0.5$; $\mathrm{diffHa}_{23-22} = \mathrm{abs}(0.5) - 0.4 = 0.1$;

$\mathrm{diffH}_{23-22} = \mathrm{sign}(0.5) \cdot 0.1 = 0.1$; $\mathrm{diffHr}_{23-22} = 1$.

For Y22 and Y21, formulas (one) to (four) give, in the same way:

$\mathrm{diff}_{22-21} = Y_{22} - Y_{21} = -1.5$; $\mathrm{diffHa}_{22-21} = \mathrm{abs}(-1.5) - 0.4 = 1.1$;

$\mathrm{diffH}_{22-21} = \mathrm{sign}(-1.5) \cdot 1.1 = -1.1$; $\mathrm{diffHr}_{22-21} = -1$.
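Formulas (one) to (four) can be sketched as a single function, here called change_direction (a hypothetical name), which reproduces the worked example for Y21, Y22, and Y23. Two points are assumptions rather than statements of the text: formula (four) is taken to use a three-valued sign (1, 0, -1), as suggested by the description of diffHr equal to 0; and a negative diffHa (a difference smaller than the noise level tn) is clamped to 0 so that it reads as "no change". Without the clamp, a difference slightly below tn would flip the reported direction.

```python
def sign_nonneg(x: float) -> int:
    """sign() as defined for formula (three): 1 if x >= 0, otherwise -1."""
    return 1 if x >= 0 else -1


def sign3(x: float) -> int:
    """Three-valued sign assumed for formula (four): 1, 0 or -1."""
    return int(x > 0) - int(x < 0)


def change_direction(y_from: float, y_to: float, tn: float) -> int:
    """Direction of the Y change from y_from to y_to, formulas (one)-(four)."""
    diff = y_to - y_from                  # formula (one)
    diff_ha = max(abs(diff) - tn, 0.0)    # formula (two); clamp at 0 is an assumption
    diff_h = sign_nonneg(diff) * diff_ha  # formula (three)
    return sign3(diff_h)                  # formula (four)


# Worked example from the text: Y21 = 2.5, Y22 = 1, Y23 = 1.5, tn = 0.4
d_22_21 = change_direction(2.5, 1.0, 0.4)  # diffHr_{22-21}
d_23_22 = change_direction(1.0, 1.5, 0.4)  # diffHr_{23-22}
step_at_y22 = d_22_21 * d_23_22 < 0        # opposite directions -> step
```

Here d_22_21 is -1, d_23_22 is 1, and step_at_y22 is True, matching the values computed above.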

e) The moire detection unit 141A determines whether a step occurs at the pixel point to be detected by checking whether the Y-component change direction from its left neighbor to it is opposite to the change direction from it to its right neighbor. Each time a step occurs, it increments the step count step_hv, which may be a variable stored in the moire detection unit 141A.

Taking Y22 as an example, $\mathrm{diffHr}_{22-21} = -1$ indicates that the Y component decreases from Y21 to Y22, and $\mathrm{diffHr}_{23-22} = 1$ indicates that it increases from Y22 to Y23. Whether a step occurs at Y22 is determined from the product of $\mathrm{diffHr}_{22-21}$ and $\mathrm{diffHr}_{23-22}$: when the product of the change directions between the pixel point to be detected and its two adjacent pixel points in the horizontal direction is negative, a step occurs at that pixel point. Here the step is the jump of the change direction from -1 to 1 across Y22, that is, a "falling edge + rising edge" in the Y component from Y21 through Y22 to Y23. It can be understood that a step also occurs at Y22 when the change direction jumps from 1 to -1, that is, when a "rising edge + falling edge" appears in the Y component from Y21 through Y22 to Y23.

It will be appreciated that if a step occurs at Y22, the value of the Y component changes significantly from Y21 to Y22 and again, in the opposite direction, from Y22 to Y23. Each detected step increments the step count by 1.

Besides the step check at Y22 (using the pairs Y21/Y22 and Y22/Y23), the horizontal direction through Y22 also requires checking for a step at Y21 (using the pairs Y20/Y21 and Y21/Y22) and at Y23 (using the pairs Y22/Y23 and Y23/Y24); each step found increments the step count by 1.

Similarly, in the vertical direction through Y22 (that is, using Y12 and Y32), the change directions $\mathrm{diffHr}_{22-12}$ and $\mathrm{diffHr}_{32-22}$ are calculated through steps a) to e) above. If the Y component from Y12 through Y22 to Y32 also shows a "falling edge + rising edge" or a "rising edge + falling edge", Y22 also has a step in the vertical direction, and the step count is incremented by 1.

Besides the step check at Y22 (using the pairs Y12/Y22 and Y22/Y32), the vertical direction through Y22 also requires checking for a step at Y12 (using the pairs Y02/Y12 and Y12/Y22) and at Y32 (using the pairs Y22/Y32 and Y32/Y42); each step found increments the step count by 1.

f) The moire detection unit 141A determines whether the number of steps is greater than 0, and if so, it indicates that the pixel point to be detected is in a moire area.

For example, based on the values of $\mathrm{diffHr}_{22-21}$ and $\mathrm{diffHr}_{23-22}$ above, at least one step occurs in the horizontal direction of Y22, so the moire detection unit 141A determines that Y22 is in the moire area and sends the step count to the moire correction unit 141B.
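Steps e) and f) can be sketched for one m × m window as follows; the function names are hypothetical. The sketch checks the center row and center column, which for m = 5 means steps at Y21, Y22, Y23 and at Y12, Y22, Y32, sums them into step_hv, and marks the pixel as being in a moire area when step_hv > 0. As in the previous sketch, clamping sub-noise differences to "no change" is an assumption not stated in the text.

```python
import numpy as np


def change_direction(y_from, y_to, tn: float) -> int:
    """Formulas (one)-(four); sub-noise differences are clamped to 0 (assumption)."""
    diff = float(y_to) - float(y_from)
    diff_ha = max(abs(diff) - tn, 0.0)
    diff_h = (1.0 if diff >= 0 else -1.0) * diff_ha
    return int(diff_h > 0) - int(diff_h < 0)


def count_steps_1d(line, tn: float) -> int:
    """Count steps at the interior pixels of a 1-D run of Y values.

    A step at pixel k means the change direction from k-1 to k and the one
    from k to k+1 are opposite, i.e. their product is negative.
    """
    dirs = [change_direction(line[k], line[k + 1], tn) for k in range(len(line) - 1)]
    return sum(1 for a, b in zip(dirs, dirs[1:]) if a * b < 0)


def detect_moire(window, tn: float):
    """Return (step_hv, in_moire_area) for the center pixel of an m x m window."""
    window = np.asarray(window, dtype=float)
    c = window.shape[0] // 2
    step_hv = count_steps_1d(window[c, :], tn) + count_steps_1d(window[:, c], tn)
    return step_hv, step_hv > 0
```

For a center row of [2.0, 2.5, 1.0, 1.5, 2.0] with tn = 0.4 and a flat center column, the directions are [1, -1, 1, 1], so steps are found at Y21 and Y22 and step_hv is 2.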

In some embodiments of the present application, when the pixel point to be detected lies on the boundary of the image to be corrected, as shown in fig. 7a, taking Y00 as an example and assuming Y00 is the first pixel of the image, the horizontal and vertical lines centered on Y00 have no pixels on one side, and therefore no corresponding Y components. In this case, as shown in fig. 7b, the pixels on the existing side of Y00 are mirrored to the missing side, and formulas (one) to (four) are then used to determine whether a step occurs in the Y component between adjacent pixels in the horizontal and vertical directions of Y00. For example, since Y00 has adjacent pixels Y01 and Y02 only on its right in the horizontal direction, Y01 and Y02 are mirrored about Y00 to serve as the pixels on its left. Similarly, in the vertical direction, Y10 and Y20 are mirrored about Y00 to serve as the pixels above it.
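The boundary handling described above corresponds to NumPy's "reflect" padding mode, which mirrors about the edge pixel without repeating it. The sketch below assumes the whole Y plane is padded once before traversal; the name mirror_pad is hypothetical. After padding by m // 2, the window for Y00 is simply padded[0:m, 0:m].

```python
import numpy as np


def mirror_pad(luma: np.ndarray, m: int = 5) -> np.ndarray:
    """Pad the Y plane by m // 2 pixels on every side by mirroring.

    mode="reflect" does not repeat the border pixel, so for the first pixel
    Y00 the two pixels to its left become Y02, Y01 and the two pixels above
    it become Y20, Y10, as described in the text.
    """
    return np.pad(luma, m // 2, mode="reflect")
```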

S503: the moire detection unit 141A continues to detect the next pixel point to be detected.

After the pixel point to be detected has been corrected, or if it is not in a moire area, the sliding window slides horizontally by a step of 1 to the next pixel point to be detected, and moire detection is performed on that pixel. Taking Y22 as an example, as shown in fig. 8, after Y22 is corrected, the sliding window slides by a step of 1 to the next pixel point to be detected, Y23.

It will be appreciated that in some embodiments, the sliding window may also be slid in the vertical direction by a step size of 1.

It can be understood that, besides the detection method of S502, a step at the pixel point to be detected may also be determined as follows, taking the horizontal direction as an example: the luminance values of the left and right adjacent pixels are both smaller than, or both greater than, the luminance value of the pixel point to be detected; the absolute difference between the luminance values of the left adjacent pixel and the pixel point to be detected is greater than a first threshold; and the absolute difference between the luminance values of the right adjacent pixel and the pixel point to be detected is greater than a second threshold.
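This alternative test can be sketched for one direction as below. The function name and the parameter names t1 (first threshold) and t2 (second threshold) are hypothetical, and the threshold values used in the usage example are illustrative only; the text does not give them.

```python
def is_step_by_threshold(left: float, center: float, right: float,
                         t1: float, t2: float) -> bool:
    """Alternative step test along one direction (e.g. horizontal).

    A step is declared when both neighbours are darker than the center, or
    both are brighter, and each absolute difference exceeds its threshold
    (t1 for the left neighbour, t2 for the right neighbour).
    """
    both_lower = left < center and right < center
    both_higher = left > center and right > center
    return ((both_lower or both_higher)
            and abs(left - center) > t1
            and abs(right - center) > t2)
```

For example, with Y21 = 2.5, Y22 = 1, Y23 = 1.5 and t1 = t2 = 0.4, both neighbours are brighter than Y22 and both differences exceed 0.4, so a step is reported; a monotonic run such as 1.0, 1.5, 2.0 never is.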

S504: the moire correction unit 141B performs moire correction on the pixel point to be detected.

In some embodiments, the moire correction unit 141B performs a moire correction process on the pixel point to be detected, including:

a) The moire correction unit 141B calculates the Y-component differences between pixel points two positions apart (every other pixel) in the horizontal and vertical directions of the pixel point to be detected.

For the pixel point to be detected in the sliding window, the change-direction values derived from the Y-component differences between pixels two positions apart in its horizontal and vertical directions are summed, and the sum is used as the texture evaluation index step_tex of the sliding window.

For example, in the sliding window shown in fig. 6, with Y22 as the current pixel point to be detected, there are five pixel points Y20, Y21, Y22, Y23, and Y24 in the horizontal direction through Y22, so the Y-component differences between Y20 and Y22, between Y21 and Y23, and between Y22 and Y24 need to be calculated. Similarly, in the vertical direction, the Y-component differences between Y02 and Y22, between Y12 and Y32, and between Y22 and Y42 need to be calculated, and the results are summed.

Taking the differences between Y22 and the pixels two positions away from it as an example, and continuing with fig. 9, the moire correction unit 141B needs to calculate the difference between Y24 and Y22 in the horizontal direction and the difference between Y42 and Y22 in the vertical direction. The difference between Y24 and Y22 can be calculated by the following formula (five):

$$\mathrm{tex}_{24-22} = Y_{24} - Y_{22} \qquad \text{(five)}$$

Next, the moire correction unit 141B removes a noise signal from the difference of the Y components of Y24 and Y22. This step is the same as step b) in S502 described above.

The noise signal can be removed from the difference of the Y components of Y24 and Y22 by the following formula (six).

$$\mathrm{texHa}_{24-22} = \mathrm{abs}(\mathrm{tex}_{24-22}) - tn \qquad \text{(six)}$$

Then, the moire correction unit 141B restores the sign of the result, converting the Y-component difference between Y24 and Y22 into a signed value that encodes the change direction between Y22 and Y24. This step is the same as step c) in S502 described above and uses formula (seven):

$$\mathrm{texH}_{24-22} = \mathrm{sign}(\mathrm{tex}_{24-22}) \cdot \mathrm{texHa}_{24-22} \qquad \text{(seven)}$$

The moire correction unit 141B then obtains the change direction in the Y component between Y22 and Y24. This step is similar to step d) in S502 described above: the sign operation is applied again to $\mathrm{texH}_{24-22}$ by the following formula (eight):

$$\mathrm{texHr}_{24-22} = \mathrm{sign}(\mathrm{texH}_{24-22}) \qquad \text{(eight)}$$

Finally, the change-direction value $\mathrm{texHr}_{24-22}$ of Y24 and Y22 in the Y component is accumulated into the texture evaluation index step_tex of the sliding window.

For example, with Y24 = 2.0, Y22 = 1, Y20 = 1.5, and tn = 0.4, for Y24 and Y22:

$\mathrm{tex}_{24-22} = Y_{24} - Y_{22} = 1$; $\mathrm{texHa}_{24-22} = \mathrm{abs}(1) - 0.4 = 0.6$;

$\mathrm{texH}_{24-22} = \mathrm{sign}(1) \cdot 0.6 = 0.6$; $\mathrm{texHr}_{24-22} = 1$.

Thus, for Y24 and Y22, $\mathrm{texHr}_{24-22} = 1$, and the accumulated texture evaluation index is step_tex = 1.

It will be appreciated that in addition to between Y24 and Y22, texHr between Y22 and Y20, and Y23 and Y21 needs to be calculated 22-20 And texHr 23-21 。

Similarly, for Y42 and Y22, the value $texHr_{42-22}$ of the direction of change of the Y component is calculated and then accumulated into the texture evaluation index step_tex of the sliding window.

In addition to the pair Y42 and Y22, $texHr_{22-02}$ between Y22 and Y02 and $texHr_{32-12}$ between Y32 and Y12 also need to be calculated.

These interlaced differences are not limited to the current pixel point Y22 to be detected. For the other pixel points in the sliding window, the differences of the Y components of their interlaced pixel points in the horizontal and vertical directions also need to be calculated and counted into the texture evaluation index step_tex of the sliding window. For example, for Y00, the differences between Y02 and Y00 and between Y20 and Y00 need to be calculated.

In some embodiments of the present application, if a pixel point in the sliding window has no interlaced pixel point within the sliding window in the horizontal or vertical direction, the corresponding difference of the Y component is not calculated. For example, for Y04 in fig. 6, there is no pixel point two columns further along in the horizontal direction within the sliding window, so the direction of change of the Y component in the horizontal direction is not calculated for Y04.
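The accumulation of step_tex over a 5 × 5 sliding window, including the rule that a pair is skipped when its interlaced partner lies outside the window, might look like the sketch below. The window is assumed to be a list of rows with `window[r][c]` holding Yrc, and the function names are hypothetical. Two points are assumptions the text does not settle: each pixel is paired with the pixel two columns to its right and two rows below it (matching the Y00 example), and the absolute value of each texHr is accumulated so that rising and falling changes both count as texture.

```python
from typing import List

Window = List[List[float]]


def _sign(x: float) -> int:
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def _tex_hr(y_a: float, y_b: float, tn: float) -> int:
    """Formulas (five) to (eight) collapsed: direction of change for tex_{a-b}."""
    tex = y_a - y_b
    return _sign(_sign(tex) * (abs(tex) - tn))


def texture_index(window: Window, tn: float) -> int:
    """Accumulate step_tex over every interlaced pair that lies inside the window."""
    size = len(window)
    step_tex = 0
    for r in range(size):
        for c in range(size):
            # Horizontal partner two columns to the right (Y02 for Y00);
            # skipped when it falls outside the window (e.g. for Y04).
            if c + 2 < size:
                # Assumption: |texHr| is accumulated, so rises and falls both count.
                step_tex += abs(_tex_hr(window[r][c + 2], window[r][c], tn))
            # Vertical partner two rows below (Y20 for Y00).
            if r + 2 < size:
                step_tex += abs(_tex_hr(window[r + 2][c], window[r][c], tn))
    return step_tex


flat = [[1.0] * 5 for _ in range(5)]
ramp = [[float(c) for c in range(5)] for _ in range(5)]   # brightness rises to the right
print(texture_index(flat, 0.4), texture_index(ramp, 0.4))  # 0 15
```

In a 5 × 5 window this visits 15 horizontal and 15 vertical pairs; in the ramp, only the 15 horizontal pairs exceed tn.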

b) After calculating the texture evaluation index over all pixel points in the sliding window, the moire correction unit 141B calculates the correction parameter of the pixel point to be detected from the step count of the pixel point to be detected and the texture evaluation index.

The moire correction unit 141B may calculate the correction parameter alpha of the pixel point to be detected with formula (nine), using the step count step_hv of the pixel point to be detected, obtained from the moire detection unit 141A, and the texture evaluation index step_tex of the sliding window, which it has calculated itself.

$$moire = \frac{step\_hv}{step\_tex}$$

$$alpha = \frac{k_1}{1 + e^{-a \cdot (moire - b)}} - k_2 \qquad \text{(nine)}$$

For the correction parameter alpha, a, b, k1 and k2 are tuning parameters. The exponential term $e^{-a(moire-b)}$ gives formula (nine) the shape of a shifted and scaled logistic (sigmoid) curve in moire. The curve of alpha is shown in fig. 10: by adjusting a, b, k1 and k2, the calculated alpha can be kept within (0, 1) and the curve made smooth. The process of adjusting a, b, k1 and k2 may include the following. First, a set of moire training data and the corresponding target alpha values are prepared, for example 100 moire values in the range [1, 20] and 100 corresponding alpha values in the range (0, 1). Then, the moire training data are substituted into the formula and compared with the target alpha values to obtain 100 candidate sets of a, b, k1 and k2. Finally, the median of each of a, b, k1 and k2 over the 100 sets is taken as the parameter value in formula (nine); the median is representative of the values obtained from the moire training data and the alpha targets. The above steps may be repeated several times with different moire training data and alpha targets to obtain more accurate values of a, b, k1 and k2.
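Formula (nine) and the final median step of the parameter tuning can be sketched as follows. The values of a, b, k1 and k2 are tuning inputs that the text does not fix, so any values passed in are hypothetical. How each of the 100 candidate sets is fitted is not specified, so only the per-parameter median is shown. Raising an error when step_tex is zero is also an assumption, since the text does not cover that case.

```python
import math
from statistics import median
from typing import Sequence, Tuple

Params = Tuple[float, float, float, float]  # (a, b, k1, k2)


def correction_parameter(step_hv: float, step_tex: float,
                         a: float, b: float, k1: float, k2: float) -> float:
    """Formula (nine): alpha as a scaled logistic curve of moire = step_hv / step_tex."""
    if step_tex <= 0:
        # Assumption: the text does not say how an empty texture index is handled.
        raise ValueError("step_tex must be positive")
    moire = step_hv / step_tex
    return k1 / (1.0 + math.exp(-a * (moire - b))) - k2


def median_parameters(candidates: Sequence[Params]) -> Params:
    """Take the median of each of a, b, k1, k2 over the candidate sets."""
    return tuple(median(values) for values in zip(*candidates))


# Hypothetical parameters: with k1 = 1 and k2 = 0, alpha = 0.5 exactly when moire == b.
print(correction_parameter(4, 4, a=1.0, b=1.0, k1=1.0, k2=0.0))  # 0.5
```

For a > 0 the curve rises with moire, so windows with many steps relative to their texture receive a larger alpha; k1 and k2 then scale and shift the output range.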

c) The moire correction unit 141B performs moire correction on the pixel point to be detected in the sliding window by using the correction parameter.

In the embodiment of the present application, the moire correction unit 141B may calculate the corrected median of the pixel to be detected by using a cross median filtering method.

Continuing with fig. 9 and taking the pixel point Y22 to be detected as an example, the cross median filtering method is used to calculate the corrected median $med_{22}$ of Y22.

For example, as shown in fig. 9, with Y22 as the center, the Y components of Y02, Y12, Y32 and Y42 in the vertical direction and of Y20, Y21, Y23 and Y24 in the horizontal direction within the sliding window are obtained together with Y22 itself. The median of these 9 Y components, i.e. the corrected median $med_{22}$ of Y22, is 2.

Meanwhile, the moire correction unit 141B calculates the mean value of the pixel point to be detected in the same cross filtering manner.

For example, to calculate the mean value $mean_{22}$ of Y22 in the sliding window in the cross filtering manner, the Y components of Y02, Y12, Y32 and Y42 and of Y20, Y21, Y23 and Y24 around Y22 are obtained together with Y22, and the mean of these 9 Y components, $mean_{22}$, is calculated as 1.89.

Then, the moire correction unit 141B calculates a corrected Y component from the corrected median and the mean of the pixel point to be detected; for example, the corrected Y component $CorY_{22}$ of Y22 is calculated by the following formula (ten).

$$CorY_{22} = beta \cdot med_{22} + (1 - beta) \cdot mean_{22} \qquad \text{(ten)}$$

Here beta is a tuning parameter. For example, when the corrected median $med_{22}$ of Y22 is 2, the mean $mean_{22}$ of Y22 is 1.89 and beta is 0.5, the corrected Y component $CorY_{22}$ of Y22 is approximately 1.94.

Finally, the moire correction unit 141B corrects the pixel point to be detected based on the correction parameter and the corrected Y component.

For example, the moire correction unit 141B calculates the output Y component $Yout_{22}$ of Y22 using formula (eleven).

$$Yout_{22} = alpha \cdot CorY_{22} + (1 - alpha) \cdot Y_{22} \qquad \text{(eleven)}$$

For example, when the corrected Y component $CorY_{22}$ of Y22 is 1.94 and alpha is 0.4, the calculated output Y component $Yout_{22}$ of Y22 is 1.37.
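The cross filtering and formulas (ten) and (eleven) for the center pixel of a window can be combined into one sketch. The window layout (a list of rows with `window[r][c]` holding Yrc), the function names and the example values are hypothetical. The 5 × 5 example is merely chosen so that it reproduces med22 = 2 and mean22 ≈ 1.89 from the text, because the actual pixel values of fig. 9 are not listed here. `radius=2` gives the 9-pixel cross used above, and `radius=1` gives the 5-pixel cross of a 3 × 3 window.

```python
from statistics import mean, median
from typing import List, Tuple

Window = List[List[float]]


def cross_values(window: Window, radius: int) -> List[float]:
    """Y values on the cross centered on the window's middle pixel (center counted once)."""
    mid = len(window) // 2
    vertical = [window[mid + d][mid] for d in range(-radius, radius + 1)]
    horizontal = [window[mid][mid + d] for d in range(-radius, radius + 1) if d != 0]
    return vertical + horizontal


def correct_center(window: Window, alpha: float, beta: float,
                   radius: int = 2) -> Tuple[float, float, float, float]:
    """Return (med, mean, CorY, Yout) for the center pixel of the window."""
    values = cross_values(window, radius)
    med = median(values)
    avg = mean(values)
    cor_y = beta * med + (1 - beta) * avg           # formula (ten)
    mid = len(window) // 2
    y_in = window[mid][mid]
    y_out = alpha * cor_y + (1 - alpha) * y_in      # formula (eleven)
    return med, avg, cor_y, y_out


# Hypothetical window: only the cross through the center is used.
example = [
    [0, 0, 1, 0, 0],
    [0, 0, 2, 0, 0],
    [1, 2, 2, 2, 2],
    [0, 0, 3, 0, 0],
    [0, 0, 2, 0, 0],
]
print(correct_center(example, alpha=0.4, beta=0.5))  # med = 2, mean ≈ 1.89, CorY ≈ 1.94
```

Because formula (eleven) is a blend, alpha = 0 leaves the pixel unchanged and alpha = 1 replaces it entirely by the filtered value CorY.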

In another embodiment of the present application, a window of a different size may be used to calculate the median and the mean of the pixel point to be detected.

For example, as shown in fig. 11, the corrected median $med_{22}$ and the mean $mean_{22}$ of Y22 are calculated with a 3 × 3 window. In fig. 11, with Y22 as the center, the Y components of Y12 and Y32 in the vertical direction and of Y21 and Y23 in the horizontal direction within the sliding window are obtained together with Y22, and the median of these 5 Y components, $med_{22}$, is calculated as 1.5. Similarly, $mean_{22}$ is calculated as 1.6.

S505: the color correction unit 141C performs pseudo color suppression on the corrected pixel point to be detected.

Taking Y22 as an example, after the moire correction unit 141B performs moire correction on the Y component of the pixel point Y22 to be detected in the sliding window, the color correction unit 141C performs pseudo color suppression on the U component and the V component corresponding to the pixel point Y22 to be detected by using the correction parameter alpha.

For example, the saturation represented by the U component and the V component is suppressed based on the above correction parameter alpha =0.4 calculated in S504.

For U22 corresponding to Y22 in the sliding window, the saturation of the U component can be suppressed by the following formula (twelve):

$$Uout_{22} = (U_{22} - 128) \cdot (1 - alpha) \cdot C \qquad \text{(twelve)}$$

where 128 is the middle value of the U component and represents zero saturation, and C is a tuning parameter. For example, with the correction parameter alpha = 0.4, U22 = 156 and C = 4, $Uout_{22} = (156 - 128) \times (1 - 0.4) \times 4 = 67.2$. Similarly, when the V component corresponding to Y22 is V22 = 180, $Vout_{22} = (V_{22} - 128) \times (1 - alpha) \times C = (180 - 128) \times (1 - 0.4) \times 4 = 124.8$.
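Formula (twelve), in the multiplicative form used by the worked example, can be checked numerically with the sketch below. The function name and the `neutral` argument are illustrative. Whether the result is offset back by 128 before being stored is not stated in the text, so it is returned as is. Evaluating the worked example this way gives (156 - 128) × 0.6 × 4 = 67.2 for U22 and (180 - 128) × 0.6 × 4 = 124.8 for V22.

```python
def suppress_chroma(value: float, alpha: float, c: float, neutral: float = 128.0) -> float:
    """Formula (twelve): scale the chroma offset from the neutral value 128 by (1 - alpha) * C.

    The same function is applied to the U and the V component.
    """
    return (value - neutral) * (1 - alpha) * c


# Worked example: alpha = 0.4, C = 4, U22 = 156, V22 = 180
u_out = suppress_chroma(156, alpha=0.4, c=4)   # ≈ 67.2
v_out = suppress_chroma(180, alpha=0.4, c=4)   # ≈ 124.8
print(u_out, v_out)
```

A larger alpha (a stronger moire response) shrinks the factor (1 - alpha) and therefore the remaining chroma offset.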

After the pixel point to be detected is corrected, step S503 is executed again, and the sliding window slides horizontally by a step length of 1 to the next pixel point to be detected.
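The scan order, one pixel at a time along each row, can be sketched as a generator over the Y plane. The function name is hypothetical. Skipping border pixels whose 5 × 5 window would extend past the image is an assumption, since the text does not say how borders are handled (padding the image would be an alternative).

```python
from typing import Iterator, List, Tuple

Window = List[List[float]]


def sliding_windows(y_plane: List[List[float]], size: int = 5) -> Iterator[Tuple[int, int, Window]]:
    """Yield (row, col, window) for each pixel to be detected, sliding right by 1.

    Assumption: pixels whose size x size window would leave the image are skipped.
    """
    r = size // 2
    height = len(y_plane)
    width = len(y_plane[0]) if height else 0
    for row in range(r, height - r):
        for col in range(r, width - r):   # horizontal slide with a step length of 1
            window = [line[col - r: col + r + 1] for line in y_plane[row - r: row + r + 1]]
            yield row, col, window


image = [[10 * r + c for c in range(7)] for r in range(6)]
for row, col, window in sliding_windows(image):
    print(row, col, window[2][2])   # the window center is the pixel to be detected
```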

The values used in S501 to S505 above are exemplary; other values may be used when applying the method of the present application.

In another embodiment of the present application, whether the brightness of the captured pixel point to be detected has a step may also be determined by detecting the brightness changes between the pixel point to be detected and its adjacent pixel points in a diagonal direction. For example, a step occurs if the luminance change direction from the pixel point on the upper left of the pixel point to be detected to the pixel point to be detected is opposite to the luminance change direction from the pixel point to be detected to the pixel point on its lower right, that is, if the luminance first increases and then decreases, or first decreases and then increases.

In another embodiment of the present application, after the mobile phone 100 detects the moire pixel through the moire detection unit 141A, the moire pixel may be corrected by using other methods.

For example, the Y component of the moire pixel is decomposed into moire layer content and image layer content; the moire layer is then filtered to separate and remove the moire from the moire pixel, and the image layer content is then recombined into the Y component of the moire pixel.

The filtering of the moire layer may use the median filtering described above or Gaussian filtering.

In the moire correction method shown in fig. 5, whether the pixel point in the sliding window is in the moire area is determined by whether the brightness of adjacent pixel points has a step in the horizontal and vertical directions through the pixel point Y22 to be detected. The following describes how to determine whether there is a brightness step between adjacent pixel points in the two diagonal directions through Y22.

As shown in fig. 6, when the moire detection unit 141A determines whether the pixel point Y22 to be detected is in the moire area, the five pixel points Y00, Y11, Y22, Y33 and Y44 lie on the diagonal from the upper left to the lower right through Y22. The moire detection unit 141A needs to calculate the differences of the Y components among Y00, Y11 and Y22, among Y11, Y22 and Y33, and among Y22, Y33 and Y44.

Taking Y11, Y22 and Y33 as an example, the direction of change $diffHr_{33-22}$ of Y33 and Y22 in the Y component and the direction of change $diffHr_{22-11}$ of Y22 and Y11 in the Y component can be calculated by the above formulas (one) to (four). If the product of $diffHr_{33-22}$ and $diffHr_{22-11}$ is negative, a rising edge and a falling edge occur in the Y component from Y11 through Y22 to Y33, that is, a step occurs at Y22. Similarly, in the diagonal direction from the upper left to the lower right of Y22, whether a step occurs across Y00, Y11 and Y22 and across Y22, Y33 and Y44 also needs to be calculated, and each step found is added to the step count step_hv.

Thereafter, similarly, in the diagonal direction from the lower left to the upper right, the moire detection unit 141A uses the above formulas (one) to (four) to calculate the differences of the Y components among Y40, Y31 and Y22, among Y31, Y22 and Y13, and among Y22, Y13 and Y04. It then determines whether a step occurs across each of these three groups and, if so, adds it to the step count step_hv.

Here, the moire correction unit 141B may calculate the texture evaluation index step_tex of the sliding window in the same manner as in S504, that is, by accumulating the values of the directions of change between the interlaced Y components of every pixel point in the sliding window centered on the pixel point Y22 to be detected.

The method described in step S502 above determines a 5 × 5 sliding window centered on the pixel point Y22 to be detected, decides whether the region of the sliding window is a moire region by checking whether there is a brightness step between the pixel points in each direction through Y22, and if so, performs moire correction on Y22. The embodiments of the present application also provide another method for determining whether a pixel point to be detected in the image is in a moire area. In this method, the moire detection unit 141A checks each pixel point in the image to be corrected to determine whether any pixel point in the image needs moire correction. To decide whether a pixel point needs moire correction, it is necessary to determine whether the pixel point is located in a moire area. In some embodiments, this may be determined as follows.

First, a sliding window containing the pixel point to be detected is determined, with that pixel point as its center. Then, the number of step pixel points in the sliding window is counted. If the number exceeds a step threshold, the sliding window contains pixel points in a moire area, that is, the pixel point to be detected at the center is in the moire area, and moire correction is performed. If the number does not exceed the step threshold, the pixel points in the sliding window are not in a moire area, that is, the pixel point to be detected at the center is not in the moire area, and no moire correction is needed. This scheme is explained below with reference to fig. 12. As shown in fig. 12, the method includes the following steps.
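The threshold decision described above can be sketched as a small function. How a single step pixel is recognized is not defined in this passage, so it is left as a caller-supplied predicate; the predicate, the function name and the threshold value in the example are all hypothetical.

```python
from typing import Callable, List

Window = List[List[float]]
StepPredicate = Callable[[Window, int, int], bool]


def center_in_moire_area(window: Window, is_step_pixel: StepPredicate,
                         step_threshold: int) -> bool:
    """Count step pixels in the window; the center pixel is in a moire area
    (and should be corrected) only if the count exceeds the step threshold."""
    count = sum(
        1
        for r in range(len(window))
        for c in range(len(window[r]))
        if is_step_pixel(window, r, c)
    )
    return count > step_threshold


# Toy predicate for illustration only: treat any non-zero pixel as a step pixel.
def toy_predicate(win: Window, r: int, c: int) -> bool:
    return win[r][c] > 0


demo = [[0.0] * 5 for _ in range(5)]
demo[0][0] = demo[2][2] = demo[4][4] = 1.0
print(center_in_moire_area(demo, toy_predicate, step_threshold=2))  # True
```

Note that the comparison is strict: a count equal to the threshold does not trigger correction, matching "if the number exceeds a step threshold".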

S1201: The moire detection unit 141A determines a sliding window containing the pixel point to be detected in the image to be corrected.