CN112489088A - Twin network visual tracking method based on memory unit - Google Patents


- Publication number

- CN112489088A (application CN202011473954.6A)

- Authority

- CN

- China

- Prior art keywords

- target

- tracking

- target template

- memory unit

- video


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending


Classifications

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

- G06T7/246—Analysis of motion using feature-based methods, e.g. the tracking of corners or segments

- G06T7/251—Analysis of motion using feature-based methods, e.g. the tracking of corners or segments involving models

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/049—Temporal neural networks, e.g. delay elements, oscillating neurons or pulsed inputs

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

- G06T7/223—Analysis of motion using block-matching

- G06T7/238—Analysis of motion using block-matching using non-full search, e.g. three-step search

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10016—Video; Image sequence

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20081—Training; Learning

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20084—Artificial neural networks [ANN]

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- General Physics & Mathematics (AREA)

- General Health & Medical Sciences (AREA)

- General Engineering & Computer Science (AREA)

- Biophysics (AREA)

- Computational Linguistics (AREA)

- Data Mining & Analysis (AREA)

- Evolutionary Computation (AREA)

- Artificial Intelligence (AREA)

- Molecular Biology (AREA)

- Computing Systems (AREA)

- Biomedical Technology (AREA)

- Life Sciences & Earth Sciences (AREA)

- Mathematical Physics (AREA)

- Software Systems (AREA)

- Health & Medical Sciences (AREA)

- Multimedia (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Image Analysis (AREA)

Abstract

A twin network visual tracking method based on a memory unit belongs to the technical field of target tracking and comprises the following steps:

- step 1, building a tracking model;
- step 2, obtaining the initial target template feature;
- step 3, obtaining the position of the tracked target in the current video frame;
- step 4, cropping the region where the target is located as the new target template, using the position found in step 3, and inputting it into the target template branch of the tracking model to obtain a new target template feature.

The next frame of the video is then read as the current frame and the method returns to step 3 for the next iteration. This repeats until all frames of the video have been read. The method effectively handles occlusion, background clutter, drastic changes in target appearance and similar problems in visual tracking. It also improves the robustness of the tracking model in complex environments.

Description

Technical Field

The invention belongs to the technical field of target tracking, and particularly relates to a twin network visual tracking method based on a memory unit.

Background

Target tracking plays an important role in computer vision. It is widely applied in intelligent transportation, security, sports, medical treatment, robot navigation, human-computer interaction and other fields, and has great commercial value. The task of visual tracking is to select a region of interest in an image sequence as the tracking target. The tracker must then obtain accurate information about that target, such as its position, shape and motion trajectory, in the subsequent consecutive frames.

In terms of technical development, visual tracking research can be divided into three stages:

- the first stage: classical tracking methods represented by Kalman filtering, mean filtering, particle filtering and optical flow;
- the second stage: detection-based visual tracking methods represented by the TLD model, and correlation-filter visual tracking methods represented by the CSK algorithm;
- the third stage: visual tracking methods based on deep learning.

However, in a visual tracking task only the annotation of the target in the first frame is available. The training process therefore lacks sufficient prior knowledge to guarantee the accuracy of the tracking model. Visual tracking also faces challenges such as illumination change, severe occlusion of the tracked target, background clutter, drastic changes in target shape, and motion blur.

A visual tracking model based on a twin (Siamese) network converts the tracking problem into an image-patch similarity matching problem. The model takes two inputs: the target template image and the search image cropped from the current video frame. The search image usually covers a larger area. Both images pass through the backbone network to obtain their respective features. The template features are then used as a convolution kernel and cross-correlated with the search image features, producing a similarity score map. The position of the maximum in the score map is the position of the target in the current frame of the video.
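The matching step above can be illustrated with a minimal NumPy sketch. The sketch assumes:

- channel-first feature maps of shape (C, H, W);
- a plain "valid" cross-correlation summed over channels, in the style of SiamFC-type trackers.

It ignores the backbone network entirely, and the function names `xcorr_score_map` and `peak_location` are hypothetical.

```python
import numpy as np

# Hypothetical helpers: a sketch of Siamese matching, not the patent's implementation.

def xcorr_score_map(template_feat: np.ndarray, search_feat: np.ndarray) -> np.ndarray:
    """Slide the template feature over the search feature ('valid' positions only).

    template_feat: (C, hz, wz), used as the correlation kernel
    search_feat:   (C, hx, wx), with hx >= hz and wx >= wz
    Returns a (hx - hz + 1, wx - wz + 1) similarity score map.
    """
    c, hz, wz = template_feat.shape
    c_s, hx, wx = search_feat.shape
    if c != c_s:
        raise ValueError("template and search features need the same channel count")
    score = np.empty((hx - hz + 1, wx - wz + 1))
    for row in range(score.shape[0]):
        for col in range(score.shape[1]):
            window = search_feat[:, row:row + hz, col:col + wz]
            # Inner product over all channels and spatial positions of the window
            score[row, col] = np.sum(window * template_feat)
    return score

def peak_location(score: np.ndarray) -> tuple:
    """(row, col) of the maximum response, i.e. the predicted target offset."""
    row, col = np.unravel_index(np.argmax(score), score.shape)
    return int(row), int(col)
```

As a sanity check, cut the template feature out of a random search feature at offset (3, 5). The peak should then land at that offset, because a window's inner product with itself dominates its products with unrelated windows.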

However, a conventional twin network tracking model uses only the initial frame as the target template. Neither the network parameters nor the target template are updated after offline training is completed. Because the network parameters are fixed, tracking performance drops sharply when the model encounters unseen scenes or targets. Because the target template is fixed, the tracker drifts when the target undergoes severe appearance changes or heavy occlusion in the video sequence. Both effects reduce the robustness and tracking accuracy of the model.

Disclosure of Invention

To address these problems of the conventional twin network tracking model, the invention focuses on updating the target template. A DWConv-LSTM memory unit updates the template in both time and space. This improves the robustness of the model while achieving better tracking accuracy, giving the method considerable practical value.

In the technical scheme of the invention, the backbone network of the tracking model is a twin network based on residual modules. The model has two branches:

- the upper branch is the target template branch, in which the target template passes through the backbone network and the memory unit to obtain robust target features;
- the lower branch is the search image branch, in which the search image is processed at multiple scales to adapt to changes of target scale during tracking. This yields three search images of different scales, whose features are extracted by the backbone network.

The features of the three search images are each cross-correlated with the target template features, yielding three similarity score maps. The score map containing the largest response is selected, and the predicted target position is the average position of the K largest responses on that map. A new target template is then cropped at the detected target position and fed into the target template branch. There, the DWConv-LSTM memory unit learns how the target template changes in time and space.

The invention discloses a twin network visual tracking method based on a memory unit, which comprises the following specific steps:

step 1: building a tracking model;

the tracking model has two branches, a target template branch and a search image branch. The target template branch consists of a backbone network and a DWConv-LSTM memory unit. The search image branch consists of a backbone network only. The backbone networks of the two branches form a twin network built from residual modules with shared weights;

the DWConv-LSTM memory unit is essentially a long short-term memory (LSTM) network combined with depthwise separable convolution. A conventional LSTM describes temporal information well, but its internal structure is fully connected. It therefore learns a large amount of information that is useless for describing the temporal sequence, and, unlike a convolution, it cannot capture the spatial features of the target. The invention replaces the fully connected structure inside the LSTM with depthwise separable convolution. As a result, the target template feature output by the memory unit contains both temporal variation information and spatial feature information;
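To make the parameter saving concrete, the following NumPy sketch implements a generic depthwise separable convolution: a per-channel k × k depthwise filter followed by a 1 × 1 pointwise convolution. It also compares the weight count with that of a standard convolution. The channel counts, kernel size and function names are illustrative assumptions; this passage of the patent does not specify them.

```python
import numpy as np

# Hypothetical helpers illustrating depthwise separable convolution in general.

def conv2d_valid_single(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation of one channel x (H, W) with a kernel k (kh, kw)."""
    kh, kw = k.shape
    h, w = x.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for row in range(out.shape[0]):
        for col in range(out.shape[1]):
            out[row, col] = np.sum(x[row:row + kh, col:col + kw] * k)
    return out

def depthwise_separable_conv(x: np.ndarray, dw: np.ndarray, pw: np.ndarray) -> np.ndarray:
    """x: (C_in, H, W); dw: (C_in, k, k), one spatial kernel per input channel;
    pw: (C_out, C_in), the weights of the 1x1 pointwise convolution."""
    # Depthwise step: every channel is filtered on its own (spatial structure only)
    depth = np.stack([conv2d_valid_single(x[c], dw[c]) for c in range(x.shape[0])])
    # Pointwise step: a 1x1 convolution mixes channels independently at each pixel
    return np.einsum("oc,chw->ohw", pw, depth)

def param_counts(c_in: int, c_out: int, k: int) -> tuple:
    """Weight counts (biases ignored): standard k x k conv vs. depthwise separable conv."""
    standard = k * k * c_in * c_out
    separable = k * k * c_in + c_in * c_out
    return standard, separable
```

The result equals a standard convolution whose kernel is constrained to the factorized form `pw[o, c] * dw[c]`. That constraint is where the saving comes from. With 256 input and output channels and 3 × 3 kernels, `param_counts(256, 256, 3)` gives 589,824 weights for the standard convolution versus 67,840 for the separable one, roughly 8.7 times fewer.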

step 2: acquiring the initial target template feature F;

step 2.1: extract the feature $e_0$ of the initial target template image with the backbone network of the target template branch. Pass $e_0$ through a branch consisting of a 3 × 3 convolution and a 1 × 1 convolution to obtain the initial cell unit $c_0$ of the DWConv-LSTM memory unit. Pass $e_0$ through another branch, also consisting of a 3 × 3 convolution and a 1 × 1 convolution, to obtain the initial hidden layer state $h_0$ of the DWConv-LSTM memory unit;

Step 2.2: input $c_0$, $h_0$ and $e_0$ into the DWConv-LSTM memory unit to obtain the initial target template feature F;
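Step 2.1 can be sketched in NumPy as follows. This hedged illustration assumes:

- zero "same" padding, so that $c_0$ and $h_0$ keep the spatial size of $e_0$ (the element-wise LSTM updates require this);
- no activation between the 3 × 3 and 1 × 1 convolutions;
- hypothetical names for the helpers and parameters.

The patent does not state these details.

```python
import numpy as np

# Hypothetical sketch of step 2.1; padding and activation choices are assumptions.

def conv2d_same(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Multi-channel 2-D cross-correlation with zero 'same' padding.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k; b: (C_out,)
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H x W
    _, h, wd = x.shape
    out = np.empty((c_out, h, wd))
    for y in range(h):
        for col in range(wd):
            patch = xp[:, y:y + k, col:col + k]        # (C_in, k, k)
            # Sum over input channels and kernel taps for every output channel
            out[:, y, col] = np.tensordot(w, patch, axes=3) + b
    return out

def init_memory_state(e0, branch_c, branch_h):
    """Map the initial template feature e0 to the initial states (c0, h0).

    Each branch is a hypothetical (w3, b3, w1, b1) tuple: a 3x3 convolution
    followed by a 1x1 convolution, with separate weights for c0 and h0.
    """
    def run(branch):
        w3, b3, w1, b1 = branch
        return conv2d_same(conv2d_same(e0, w3, b3), w1, b1)
    return run(branch_c), run(branch_h)
```

Step 2.2 then feeds `e0` together with the returned `c0` and `h0` into the memory unit.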

step 3: acquiring the position of the tracked target in the current video frame;

step 3.1: cropping a search image from the current video frame and obtaining the features of the multi-scale search images;

assume the current frame is the t-th frame of the video (t = 1, 2, 3, …, n). Crop the search image $S_t$ from the current frame and build the multi-scale search image set S corresponding to $S_t$. Feed the multi-scale search images in S as one batch through the search image branch of the tracking model to obtain the corresponding set of features;

Step 3.2: acquiring a similarity score map;

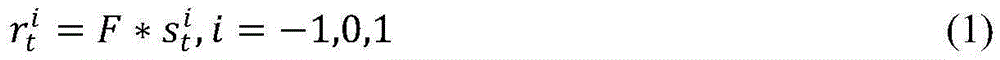

cross-correlate the target template feature F at the current time step with each of the multi-scale search image features obtained in step 3.1, according to formula (1). This yields the similarity score map set r. In formula (1), * denotes the convolution operator;

step 3.3: obtaining the position of the tracked target from the similarity score maps;

upsample each similarity score map in the set r to obtain the set R of upsampled similarity score maps. Find the upsampled map in R that contains the maximum value and denote it $R_t$. Compare all values in $R_t$ to obtain the K response points with the largest values, and average these K points to obtain a response point d. The position in the current video frame corresponding to d is the position of the tracked target;
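Step 3.3 can be sketched in NumPy as below. The following are assumptions made for illustration:

- the upsampling factor;
- the value of K;
- the use of nearest-neighbour upsampling via `np.kron` (SiamFC-style trackers often use bicubic upsampling instead);
- the function name `locate_target`.

The final mapping of d back to frame coordinates depends on the network stride and crop scale, and is not shown.

```python
import numpy as np

# Hypothetical sketch of step 3.3; upsampling method and K are assumptions.

def locate_target(score_maps: np.ndarray, upsample: int = 16, k: int = 5):
    """score_maps: (num_scales, h, w), the similarity maps r, one per search scale.

    Returns (scale_index, d), where d = (row, col) is the mean position of the
    K largest responses on the upsampled map R_t holding the global maximum.
    """
    # Nearest-neighbour upsampling: each score becomes an upsample x upsample block
    up = np.stack([np.kron(m, np.ones((upsample, upsample))) for m in score_maps])
    # R_t: the upsampled map that contains the overall maximum value
    best = int(np.argmax(up.reshape(len(up), -1).max(axis=1)))
    r_t = up[best]
    # Flat indices of the K largest responses (their mutual order is irrelevant)
    flat_idx = np.argpartition(r_t.ravel(), -k)[-k:]
    rows, cols = np.unravel_index(flat_idx, r_t.shape)
    d = (float(rows.mean()), float(cols.mean()))
    return best, d
```

Nearest-neighbour upsampling replicates each score into a block, so averaging the top K points mostly smooths the estimate inside the winning block. A smoother interpolation would spread the peak over neighbouring cells and make the averaging more informative.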

step 4: using the position of the tracked target in the current frame found in step 3, crop the region where the target is located as the target template. Input it into the target template branch of the tracking model to obtain a new target template feature F. Then read the next frame of the video as the current frame and return to step 3 for the next iteration. Repeat until all frames of the video have been read.
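The overall iteration of steps 2 to 4 can be summarized as a loop. In this hedged Python sketch, the model-specific parts are passed in as callables named `init_template`, `locate` and `update_template`; these names are hypothetical. The sketch therefore only fixes the control flow: the template feature F used in frame t is the one produced by the memory-unit update after the previous frame.

```python
from typing import Any, Callable, List, Sequence, Tuple

# Hypothetical control-flow sketch; the callables stand in for the real model parts.
Box = Tuple[float, float, float, float]  # (cx, cy, w, h) of the tracked target

def track_video(
    frames: Sequence[Any],
    init_box: Box,
    init_template: Callable[[Any, Box], Tuple[Any, Any]],
    locate: Callable[[Any, Any, Box], Box],
    update_template: Callable[[Any, Box, Any], Tuple[Any, Any]],
) -> List[Box]:
    """Run steps 2-4 over a whole video and return one box per frame.

    init_template(frame, box)          -> (F, state)   # step 2: initial F, c_0, h_0
    locate(frame, F, prev_box)         -> box          # step 3: multi-scale search
    update_template(frame, box, state) -> (F, state)   # step 4: crop + memory update
    """
    F, state = init_template(frames[0], init_box)
    box = init_box
    boxes: List[Box] = []
    for frame in frames:                 # t = 1, 2, ..., n (frame 1 is also tracked)
        box = locate(frame, F, box)      # search around the previous position
        boxes.append(box)
        F, state = update_template(frame, box, state)  # new F for the next frame
    return boxes
```

Injecting the three callables keeps the loop independent of the backbone and memory-unit details. This also makes the iteration order easy to test with stub functions.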

In the twin network visual tracking method based on the memory unit described above:

in step 3, the first frame of the video is taken as the current frame in the first iteration, i.e., t = 1. Each time the method returns from step 4 to step 3, the next frame of the video becomes the current frame, i.e., t = t + 1.

In step 4, when updating the target template feature F, the DWConv-LSTM memory unit takes three inputs: the cell unit $c_{t-1}$ and the hidden layer state $h_{t-1}$ output by the memory unit in the previous update, and the feature $e_t$ extracted by the backbone network of the target template branch. It updates the cell unit and the hidden layer state and outputs the target template feature F.

The benefits of the invention are:

the invention adds a DWConv-LSTM memory unit to the target template branch of the tracking model. This unit learns the temporal trend of the target's appearance changes, while its convolutions keep the target's spatial features stable. The invention therefore effectively addresses occlusion, background clutter, drastic changes in target shape and similar problems in visual tracking, and improves the robustness of the tracking model in complex environments. In addition, the depthwise separable convolutions inside the memory unit speed it up, so that the tracking model can run in real time. This gives the invention significant application value.

Drawings

FIG. 1 is a flow chart of a twin network visual tracking method based on memory units according to the present invention.

FIG. 2 is an overall architecture diagram of the tracking model of the present invention.

FIG. 3 is a diagram of the basic network structure of the DWConv-LSTM memory unit of the present invention.

FIG. 4 is a diagram illustrating an exemplary cropping of a target template image according to the present invention.

FIG. 5 is a schematic diagram of the process of obtaining the initial cell unit and the hidden layer state of the memory cell according to the present invention.

FIG. 6 is an example of cropping multi-scale search images from a video frame according to the present invention.

FIGS. 7 and 8 show tracking results of an embodiment of the present invention.

Detailed Description

The following detailed description of embodiments of the invention refers to the accompanying drawings.

In this embodiment, the algorithm is implemented with the TensorFlow deep learning framework on the Ubuntu 16.04 LTS operating system.

As shown in fig. 1, a twin network visual tracking method based on memory units includes the following steps:

step 1: building a tracking model;

as shown in FIG. 2, the tracking model has two branches, a target template branch and a search image branch. The target template branch consists of a backbone network and a DWConv-LSTM memory unit. The search image branch consists of a backbone network only. The backbone networks of the two branches form a twin network built from residual modules with shared weights;

FIG. 3 shows the network structure of the DWConv-LSTM memory unit. It mainly comprises three gating units, namely the forget gate $f_t$, the input gate $i_t$ and the output gate $o_t$, and one cell unit $c_t$. The gates are computed according to equations (2), (3) and (4), respectively, where:

- $W_i$, $W_f$ and $W_o$ are weight matrices;
- $b_i$, $b_f$ and $b_o$ are biases;
- $e_t$ is the feature extracted by the backbone network of the target template branch;
- $h_{t-1}$ is the hidden state at the previous time step;
- $*$ denotes the convolution operator;
- σ denotes the sigmoid activation function;

the update of the cell unit $c_t$, the update of the hidden layer state $h_t$ and the target template feature F are computed according to formulas (5), (6) and (7), respectively, where:

- tanh denotes the tanh activation function;
- $c_{t-1}$ is the cell unit at the previous time step;
- $*$ denotes the convolution operator;
- $W_c$ and $W_t$ are weight matrices;
- $b_c$ is a bias;
- $h_{t-1}$ is the hidden layer state at the previous time step;

$$h_t = o_t \odot \tanh(c_t) \tag{6}$$

$$F = W_t * h_t \tag{7}$$

the convolution of each gate is implemented with depthwise separable convolution. This helps capture spatial relationships among features and reduces the number of network parameters, which speeds up forward inference.
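Equations (2) to (5) are not reproduced in this text. The following NumPy sketch of one memory-unit update therefore assumes:

- the standard ConvLSTM form, with each gate applied to the channel-wise concatenation $[h_{t-1}, e_t]$;
- convolutions realized as depthwise separable convolutions, as described above;
- a 1 × 1 form for $W_t$ in equation (7);
- a 3 × 3 kernel size;
- hypothetical names for all helpers and parameters.

This is a sketch, not the patent's exact layer definition.

```python
import numpy as np

# Hypothetical DWConv-LSTM step in standard ConvLSTM form (an assumption, see above).

def dwsep_conv_same(x, dw, pw, b):
    """Depthwise separable convolution with zero 'same' padding.

    x: (C_in, H, W); dw: (C_in, k, k); pw: (C_out, C_in); b: (C_out,)
    """
    c_in, h, w = x.shape
    k = dw.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    depth = np.zeros((c_in, h, w))
    for dy in range(k):
        for dx in range(k):
            # Add one kernel tap at a time; each channel uses its own kernel
            depth += dw[:, dy, dx][:, None, None] * xp[:, dy:dy + h, dx:dx + w]
    # 1x1 pointwise convolution across channels, plus bias
    return np.einsum("oc,chw->ohw", pw, depth) + b[:, None, None]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dwconv_lstm_step(e_t, h_prev, c_prev, params):
    """One update of the memory unit; returns (F, h_t, c_t).

    params["i"], ["f"], ["o"], ["c"]: (dw, pw, b) tuples for the gates and the
    candidate cell content; params["t"]: (C, C) matrix used as a 1x1 W_t.
    """
    z = np.concatenate([h_prev, e_t], axis=0)        # assumed gate input [h_{t-1}, e_t]
    i_t = sigmoid(dwsep_conv_same(z, *params["i"]))  # input gate
    f_t = sigmoid(dwsep_conv_same(z, *params["f"]))  # forget gate
    o_t = sigmoid(dwsep_conv_same(z, *params["o"]))  # output gate
    g_t = np.tanh(dwsep_conv_same(z, *params["c"]))  # candidate cell content
    c_t = f_t * c_prev + i_t * g_t                   # cell update (assumed form of (5))
    h_t = o_t * np.tanh(c_t)                         # formula (6), element-wise
    F = np.einsum("oc,chw->ohw", params["t"], h_t)   # formula (7) with a 1x1 W_t
    return F, h_t, c_t
```

Applied frame after frame, the returned `h_t` and `c_t` become `h_prev` and `c_prev` for the next call. The recursion starts from the $c_0$ and $h_0$ of step 2.1.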

Step 2: acquiring an initial target template feature vector F;

step 2.1: given the width w and height h of the target to be tracked, compute the size Z of the region containing the target according to formula (8), where p is the extension length computed according to formula (9).

As shown in FIG. 4, a square region with side length $\sqrt{Z}$ is cropped from the original image as the template image. The region is centered at the given center position $(c_x, c_y)$ of the target. The template image is then resized to 127 × 127 to obtain the initial target template.

As shown in FIG. 5, the initial target template passes through the backbone network of the target template branch to obtain the feature $e_0$. $e_0$ then passes through two branches to obtain the cell unit $c_0$ and the hidden layer state $h_0$, respectively. Each branch consists of a 3 × 3 convolution and a 1 × 1 convolution.

$$Z = (w + 2p) \times (h + 2p) \tag{8}$$

$$p = \frac{w + h}{4} \tag{9}$$
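As a worked example of formulas (8) and (9), the sketch below computes the template crop for a given box. It takes the crop side as √Z, the side of the square whose area equals Z. This is an interpretation consistent with the description of a square template region, and the function name `template_crop` is hypothetical.

```python
import math
from typing import Tuple

# Hypothetical helper; the side length sqrt(Z) is an interpretation of formula (8).

def template_crop(cx: float, cy: float, w: float, h: float) -> Tuple[float, float, float, float]:
    """Return (x0, y0, x1, y1) of the square template region centred on (cx, cy)."""
    p = (w + h) / 4                    # extension length, formula (9)
    z = (w + 2 * p) * (h + 2 * p)      # region size Z, formula (8)
    side = math.sqrt(z)                # square whose area is Z (assumed)
    half = side / 2
    return (cx - half, cy - half, cx + half, cy + half)
```

For a 40 × 60 target, p = 25 and Z = 90 × 110 = 9900. The crop is therefore a square with a side of about 99.5 pixels, which is then resized to 127 × 127.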

Step 2.2: input $c_0$, $h_0$ and $e_0$ into the DWConv-LSTM memory unit to obtain the initial target template feature F.

Step 3: acquiring the position of the tracked target in the current video frame;

step 3.1: cropping a search image from the current video frame and obtaining the features of the multi-scale search images;

assume the current frame is the t-th frame of the video (t = 1, 2, 3, …, n). Compute the areas $A = \{A_{-1}, A_0, A_1\}$ of the regions to be cropped according to formula (10), where:

- p′ is the extension length, computed according to formula (11);
- w and h are the width and height of the given tracking target;
- k = 1.05, and $k^i$ denotes the i-th power of k.

Then, centered on the target center detected by the algorithm in the previous frame, crop three square regions with side lengths $\sqrt{A_{-1}}$, $\sqrt{A_0}$ and $\sqrt{A_1}$ from the current frame as the search images (see FIG. 6). Resize these images to 255 × 255 to obtain the multi-scale search image set S. Feed the images in S as one batch through the search image branch to obtain the corresponding set of multi-scale search image features;

$$A_i = k^i (w + 4p') \times k^i (h + 4p'), \quad i = -1, 0, 1 \tag{10}$$

$$p' = \frac{w + h}{4} \tag{11}$$
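Formulas (10) and (11) can be checked numerically with the sketch below. It returns the side lengths √A_i of the three square search regions, taking each square's area to be A_i; this is an interpretation rather than a stated formula. The value k = 1.05 follows the text, and the function name is hypothetical.

```python
import math
from typing import Dict

# Hypothetical helper; each square's area is taken to be A_i (an interpretation).

def search_region_sides(w: float, h: float, k: float = 1.05) -> Dict[int, float]:
    """Side length of the square search region for each scale index i in {-1, 0, 1}."""
    p_prime = (w + h) / 4                                   # formula (11)
    sides = {}
    for i in (-1, 0, 1):
        # formula (10): scaled width times scaled height
        area = (k ** i) * (w + 4 * p_prime) * (k ** i) * (h + 4 * p_prime)
        sides[i] = math.sqrt(area)                          # square with area A_i
    return sides
```

For a 40 × 60 target, p′ = 25 and A_0 = 140 × 160 = 22400. This gives sides of about 142.5, 149.7 and 157.1 pixels for i = −1, 0, 1, and each crop is then resized to 255 × 255.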

Step 3.2: acquiring a similarity score map;

cross-correlate the target template feature F at the current time step with each of the multi-scale search image features obtained in step 3.1, according to formula (1), to obtain the similarity score map set r;

Step 3.3: obtaining the position of the tracked target from the similarity score maps;

upsample each similarity score map in the set r to obtain the set R of upsampled similarity score maps. Find the upsampled map in R that contains the maximum value and denote it $R_t$. Compare all values in $R_t$ to obtain the K response points with the largest values, and average these K points to obtain a response point d. The position in the current video frame corresponding to d is the position of the tracked target;

step 4: using the position of the tracked target in the current frame found in step 3, crop the region where the target is located as the target template. Input it into the target template branch of the tracking model to obtain a new target template feature F. Then read the next frame of the video as the current frame and return to step 3 for the next iteration. Repeat until all frames of the video have been read.

To verify the effectiveness of the invention, the Bird2 video sequence from the OTB100 dataset was selected for testing. The result, shown in FIG. 7, indicates that the invention copes effectively with occlusion, shape change and similar problems in video tracking, and has good robustness. A real-world scene was also used to test the method, tracking the target with the same procedure as in the implementation steps above. The result, shown in FIG. 8, indicates that the invention tracks the target well and has practical value.

In conclusion, the twin network visual tracking method based on the memory unit learns the temporal trend of the target's appearance changes and keeps the target spatially stable. It runs in real time and improves the robustness of the tracking model in complex environments.

Claims (4)

1. A twin network visual tracking method based on a memory unit is characterized by comprising the following specific steps:

step 1: building a tracking model;

the tracking model has two branches, a target template branch and a search image branch. The target template branch consists of a backbone network and a DWConv-LSTM memory unit. The search image branch consists of a backbone network only. The backbone networks of the two branches form a twin network built from residual modules with shared weights;

step 2: acquiring the initial target template feature F;

step 3: acquiring the position of the tracked target in the current video frame;

step 4: using the position of the tracked target in the current frame found in step 3, crop the region where the target is located as the target template. Input it into the target template branch of the tracking model to obtain a new target template feature F. Then read the next frame of the video as the current frame and return to step 3 for the next iteration. Repeat until all frames of the video have been read.

2. The twin network visual tracking method based on a memory unit according to claim 1, wherein in step 1 the DWConv-LSTM memory unit is built by replacing the fully connected structure in the long short-term memory (LSTM) network with depthwise separable convolution, so that the target template feature output by the memory unit contains both temporal variation information and spatial feature information.

3. The twin network visual tracking method based on a memory unit according to claim 1, wherein step 2 of acquiring the initial target template feature F specifically comprises:

step 2.1: extract the feature $e_0$ of the initial target template image with the backbone network of the target template branch. Pass $e_0$ through a branch consisting of a 3 × 3 convolution and a 1 × 1 convolution to obtain the initial cell unit $c_0$ of the DWConv-LSTM memory unit. Pass $e_0$ through another branch, also consisting of a 3 × 3 convolution and a 1 × 1 convolution, to obtain the initial hidden layer state $h_0$ of the DWConv-LSTM memory unit;

Step 2.2: input $c_0$, $h_0$ and $e_0$ into the DWConv-LSTM memory unit to obtain the initial target template feature F.

4. The twin network visual tracking method based on a memory unit according to claim 1, wherein step 3 of acquiring the position of the tracked target in the current video frame specifically comprises:

step 3.1: cropping a search image from the current video frame and obtaining the features of the multi-scale search images;

crop the search image $S_t$ from the current video frame and build the multi-scale search image set S corresponding to $S_t$. Feed the multi-scale search images in S as one batch through the search image branch of the tracking model to obtain the corresponding set of features;

Step 3.2: acquiring a similarity score map;

cross-correlate the target template feature F at the current time step with each of the multi-scale search image features obtained in step 3.1, to obtain the similarity score map set r. In the cross-correlation formula, * denotes the convolution operator;

step 3.3: obtaining the position of the tracked target from the similarity score maps;

upsample each similarity score map in the set r to obtain the set R of upsampled similarity score maps. Find the upsampled map in R that contains the maximum value and denote it $R_t$. Compare all values in $R_t$ to obtain the K response points with the largest values, and average these K points to obtain a response point d. The position in the current video frame corresponding to d is the position of the tracked target.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202011473954.6A (CN112489088A) | 2020-12-15 | 2020-12-15 | Twin network visual tracking method based on memory unit |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202011473954.6A (CN112489088A) | 2020-12-15 | 2020-12-15 | Twin network visual tracking method based on memory unit |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN112489088A | 2021-03-12 |

Family

ID=74917829

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202011473954.6A (Pending, CN112489088A) | Twin network visual tracking method based on memory unit | 2020-12-15 | 2020-12-15 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN112489088A |


Citations (8)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN108734272A (en) * | 2017-04-17 | 2018-11-02 | 英特尔公司 | Convolutional neural networks optimize mechanism |
| US20190147610A1 (en) * | 2017-11-15 | 2019-05-16 | Uber Technologies, Inc. | End-to-End Tracking of Objects |
| CN110223324A (en) * | 2019-06-05 | 2019-09-10 | 东华大学 | A kind of method for tracking target of the twin matching network indicated based on robust features |
| CN110415271A (en) * | 2019-06-28 | 2019-11-05 | 武汉大学 | One kind fighting twin network target tracking method based on the multifarious generation of appearance |
| CN110728270A (en) * | 2019-12-17 | 2020-01-24 | 北京影谱科技股份有限公司 | Method, device and equipment for removing video character and computer readable storage medium |
| CN111144364A (en) * | 2019-12-31 | 2020-05-12 | 北京理工大学重庆创新中心 | Twin network target tracking method based on channel attention updating mechanism |
| CN111179314A (en) * | 2019-12-30 | 2020-05-19 | 北京工业大学 | Target tracking method based on residual dense twin network |
| CN111696137A (en) * | 2020-06-09 | 2020-09-22 | 电子科技大学 | Target tracking method based on multilayer feature mixing and attention mechanism |

2020

- 2020-12-15: application CN202011473954.6A (CN112489088A) filed in CN; status: active, pending


Non-Patent Citations (3)

| Title |
|---|
| ANFENG HE et al.: "A Twofold Siamese Network for Real-Time Object Tracking", CVPR 2018, pages 4834-4843 * |
| TIANYU YANG et al.: "Recurrent Filter learning for Visual Tracking", IEEE International Conference on Computer Vision Workshop, pages 1-4 * |
| GE Daohui et al.: "轻量级神经网络架构综述" (Survey of lightweight neural network architectures), Journal of Software (软件学报), vol. 13, no. 9, pages 2630-2631 * |

Cited By (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN115222771A (en) * | 2022-07-05 | 2022-10-21 | 北京建筑大学 | Target tracking method and device |
| CN115061574A (en) * | 2022-07-06 | 2022-09-16 | 陈伟 | Human-computer interaction system based on visual core algorithm |
| CN115588030A (en) * | 2022-09-27 | 2023-01-10 | 湖北工业大学 | Visual target tracking method and device based on twin network |
| CN115588030B (en) * | 2022-09-27 | 2023-09-12 | 湖北工业大学 | Visual target tracking method and device based on twin network |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN108154118B | | A kind of target detection system and method based on adaptive combined filter and multistage detection |
| CN111311666B | | Monocular vision odometer method integrating edge features and deep learning |
| CN112184752A | | Video target tracking method based on pyramid convolution |
| CN107358623B | | Relevant filtering tracking method based on significance detection and robustness scale estimation |
| CN108062525B | | Deep learning hand detection method based on hand region prediction |
| CN107016689A | | A kind of correlation filtering of dimension self-adaption liquidates method for tracking target |
| CN111311647B | | Global-local and Kalman filtering-based target tracking method and device |
| CN112750148B | | Multi-scale target perception tracking method based on twin network |
| CN113361542B | | Local feature extraction method based on deep learning |
| CN111046856B | | Parallel pose tracking and map creating method based on dynamic and static feature extraction |
| CN112232134B | | Human body posture estimation method based on hourglass network and attention mechanism |
| JP2008243187A | | Computer implemented method for tracking object in video frame sequence |
| CN112489088A | | Twin network visual tracking method based on memory unit |
| CN112364865B | | Method for detecting small moving target in complex scene |
| CN110555868A | | method for detecting small moving target under complex ground background |
| CN115375737B | | Target tracking method and system based on adaptive time and serialized space-time characteristics |
| CN111523463B | | Target tracking method and training method based on matching-regression network |
| Park et al. | | X-ray image segmentation using multi-task learning |
| CN111415370A | | Embedded infrared complex scene target real-time tracking method and system |
| Zhang et al. | | Dual-branch multi-information aggregation network with transformer and convolution for polyp segmentation |
| CN112991394B | | KCF target tracking method based on cubic spline interpolation and Markov chain |
| CN112070181B | | Image stream-based cooperative detection method and device and storage medium |
| CN116386089B | | Human body posture estimation method, device, equipment and storage medium under motion scene |
| CN110689559B | | Visual target tracking method based on dense convolutional network characteristics |
| Li et al. | | Spatiotemporal tree filtering for enhancing image change detection |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |