CN110807920B - Emotion determining and reporting method and device for vehicle driving, server, terminal and vehicle - Google Patents


- Publication number

- CN110807920B (application CN201911013257.XA)

- Authority

- CN

- China

- Prior art keywords

- data

- emotion

- vehicle

- curve

- road condition


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active


Classifications


- G—PHYSICS

- G08—SIGNALLING

- G08G—TRAFFIC CONTROL SYSTEMS

- G08G1/00—Traffic control systems for road vehicles

- G08G1/01—Detecting movement of traffic to be counted or controlled

- G08G1/0104—Measuring and analyzing of parameters relative to traffic conditions


- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00

- G10L25/48—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use

- G10L25/51—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination

- G10L25/63—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination for estimating an emotional state

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Health & Medical Sciences (AREA)

- Audiology, Speech & Language Pathology (AREA)

- Child & Adolescent Psychology (AREA)

- Hospice & Palliative Care (AREA)

- Computational Linguistics (AREA)

- Signal Processing (AREA)

- General Health & Medical Sciences (AREA)

- Human Computer Interaction (AREA)

- Psychiatry (AREA)

- Acoustics & Sound (AREA)

- Multimedia (AREA)

- Chemical & Material Sciences (AREA)

- Analytical Chemistry (AREA)

- General Physics & Mathematics (AREA)

- Traffic Control Systems (AREA)

Abstract

A method and a device for determining and reporting emotion in vehicle driving, a server, a terminal and a vehicle are provided. The emotion determining method comprises: receiving emotion data and vehicle operation data generated when a user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; and fusing the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data. The technical solution provided by the invention allows the user's driving experience of the vehicle to be evaluated more accurately.

Description

Technical Field

The invention relates to the technical field of vehicle networking, and in particular to a method and a device for determining and reporting emotion in vehicle driving, a server, a terminal and a vehicle.

Background

With the development of science and technology and rising living standards, automobiles have become increasingly integrated into people's lives. Test rides and test drives have become an indispensable step for automobile manufacturers and dealers when promoting new models.

Because a customer's purchase intention is closely related to the customer's driving experience, current test drives ask the test drivers to give a score, and their experience is analyzed based on that score. However, subjective scoring does not accurately reflect the user's objective assessment of the vehicle.

Disclosure of Invention

The technical problem solved by the invention is how to evaluate a user's vehicle driving experience more accurately.

In order to solve the above technical problem, an embodiment of the present invention provides an emotion determining method for vehicle driving, comprising: receiving emotion data and vehicle operation data generated when a user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; and fusing the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data.

Optionally, the emotion determining method further comprises: receiving road condition information generated when the user drives the vehicle. The emotion curve comprises peaks, troughs and their corresponding time periods, and the method further comprises: determining a time range in which the emotion curve goes from a peak to a trough, where the peak is directly connected to the trough; or determining a time range in which the emotion curve descends from the reference line to a trough, where the reference line is directly connected to the trough; extracting, from the road condition information, the road condition information within that time range; if the extracted road condition information shows an abnormal road condition, determining that the emotion curve within the time range is noise; and marking the noise. A trough represents a negative emotion and a peak represents a positive emotion.

Optionally, the emotion data is obtained by using an edge computing algorithm.

Optionally, fusing the emotion data and the vehicle operation data to generate the emotion curve comprises: determining the running state of the vehicle, the running state comprising an acceleration state, a deceleration state, a constant-speed cruise state, a braking state and a turning state; and establishing an association between the emotion data and the running state according to the time sequence, and generating the emotion curve according to that association, the emotion curve being marked with the running state.

In order to solve the above technical problem, an embodiment of the present invention further provides an emotion reporting method for vehicle driving, comprising: calculating emotion data of a user while the user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; recording vehicle operation data generated while the vehicle runs; and reporting the emotion data and the vehicle operation data to a server, so that the server generates an emotion curve according to the emotion data and the vehicle operation data, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data.

Optionally, the emotion reporting method further comprises: recording road condition information generated when the user drives the vehicle, and uploading the road condition information to the server.

Optionally, the emotion data is obtained by using an edge computing algorithm.

In order to solve the above technical problem, an embodiment of the present invention further provides an emotion determining apparatus for vehicle driving, comprising: a receiving module, configured to receive emotion data and vehicle operation data generated when a user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; and a fusion module, configured to fuse the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data.

In order to solve the above technical problem, an embodiment of the present invention further provides an emotion reporting apparatus for vehicle driving, comprising: a calculation module, configured to calculate emotion data of a user while the user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; a recording module, configured to record vehicle operation data generated while the vehicle runs; and a reporting module, configured to report the emotion data and the vehicle operation data to a server, so that the server generates an emotion curve according to the emotion data and the vehicle operation data, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data.

To solve the above technical problem, an embodiment of the present invention further provides a storage medium storing computer instructions which, when executed, perform the steps of the above method.

In order to solve the above technical problem, an embodiment of the present invention further provides a server, including a memory and a processor, where the memory stores computer instructions executable on the processor, and the processor executes the computer instructions to perform the steps of the above method.

In order to solve the foregoing technical problem, an embodiment of the present invention further provides a terminal, including a memory and a processor, where the memory stores computer instructions executable on the processor, and the processor executes the computer instructions to perform the steps of the foregoing method.

In order to solve the technical problem, an embodiment of the present invention further provides a vehicle, including the terminal.

Compared with the prior art, the technical scheme of the embodiment of the invention has the following beneficial effects:

The embodiment of the invention provides an emotion determining method for vehicle driving, comprising: receiving emotion data and vehicle operation data generated when a user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; and fusing the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data. In this embodiment, the emotion curve is generated from the user's emotion data and the vehicle dynamic data reported by the vehicle-mounted device, so as to describe the association between the emotions the user experiences while driving and the vehicle dynamic data. The emotion curve reflects the user's experience during driving (for example, a test drive) more clearly. Compared with traditional manual scoring, the embodiment allows the user's driving experience to be evaluated more accurately and objectively.

Further, the method also comprises: receiving road condition information generated when the user drives the vehicle. The emotion curve comprises peaks, troughs and their corresponding time periods, and the method further comprises: determining a time range in which the emotion curve goes from a peak to a trough, the peak being connected to the trough; extracting the road condition information within that time range; if the extracted road condition information shows an abnormal road condition, determining that the emotion curve within the time range is noise; and marking the noise. A trough represents a negative emotion and a peak represents a positive emotion. The embodiment can thus also mark abnormal road conditions and the noise they cause, providing a practical way to evaluate the user's real satisfaction with the vehicle more accurately.

Further, the emotion data is obtained by using an edge computing algorithm. Computing the emotion data at the edge reduces the volume of data reported by the vehicle-mounted device and also reduces the amount of data that must be stored.

Drawings

Fig. 1 is a schematic flow chart of an emotion determination method for vehicle driving according to an embodiment of the present invention;

Fig. 2 is a flowchart illustrating an emotion reporting method for vehicle driving according to an embodiment of the present invention;

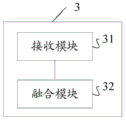

Fig. 3 is a schematic structural diagram of an emotion determining apparatus for vehicle driving according to an embodiment of the present invention;

Fig. 4 is a schematic structural diagram of an emotion reporting apparatus for vehicle driving according to an embodiment of the present invention.

Detailed Description

As described in the Background, in the prior art, evaluating a vehicle, or the user's driving satisfaction with it, through manual subjective scoring is strongly affected by subjective factors and has low accuracy.

Those skilled in the art will appreciate that manual scoring is a qualitative analysis rather than a quantitative one, and therefore lacks information that vehicle dealers would find valuable for further sales and marketing.

The embodiment of the invention provides an emotion determining method for vehicle driving, comprising: receiving emotion data and vehicle operation data generated when a user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; and fusing the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data.

In this embodiment, the emotion curve is generated from the user's emotion data and the vehicle dynamic data reported by the vehicle-mounted device, so as to describe the association between the emotions the user experiences while driving and the vehicle dynamic data. The emotion curve reflects the user's experience during driving (for example, a test drive) more clearly. Compared with traditional manual scoring, the embodiment allows the user's driving experience to be evaluated more accurately and objectively.

To make the above objects, features and advantages of the present invention clearer and easier to understand, embodiments are described in detail below with reference to the accompanying drawings.

Fig. 1 is a flowchart of an emotion determining method for vehicle driving according to an embodiment of the present invention. The vehicle may be an autonomous vehicle or a non-autonomous vehicle.

In particular implementations, the emotion determination method may be used to assess the emotion of a user driving a vehicle. The emotion determination method may be performed by a server. The server may be a single server or a server cluster.

Specifically, the emotion determination method may include the steps of:

Step S101: receive emotion data and vehicle operation data generated when a user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood;

Step S102: fuse the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data.

More specifically, the vehicle may include an in-vehicle device, which may be a terminal that includes a camera and is capable of connecting to a server (e.g., a cloud server).

In a specific implementation, a camera may be deployed inside the vehicle to capture the facial expressions and/or body language of the user driving the vehicle.

A camera may also be mounted outside the vehicle to record real-time road conditions while the vehicle is driven, thereby obtaining road condition information. The road condition information may include whether traffic is flowing smoothly, whether the road surface is flat, whether other vehicles are overtaking or cutting in, and whether there is an accident or road construction.

The vehicle may also include at least one sensor for monitoring vehicle operation data, such as the vehicle speed and the temperature and humidity inside the vehicle.

In step S101, vehicle dynamic data recorded by the vehicle-mounted device may be reported to a server. The vehicle dynamics data may include vehicle operating speed with time information (e.g., a timestamp). The vehicle operating speeds may be arranged in chronological order. Further, the vehicle dynamics data may also include turn data, acceleration data, deceleration data, braking data, hard acceleration or hard deceleration data, and the like.
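A minimal sketch of how the timestamped vehicle dynamics data might be represented and put into chronological order on the server. The `VehicleSample` fields and the `to_time_series` helper are hypothetical; the source does not define a record format.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VehicleSample:
    """One timestamped vehicle dynamics record (hypothetical schema)."""
    timestamp: float            # seconds since the start of the drive
    speed_kmh: float            # vehicle operating speed
    brake: bool = False         # whether the brake pedal is pressed
    steering_deg: float = 0.0   # steering angle; large values suggest turning


def to_time_series(samples: List[VehicleSample]) -> List[VehicleSample]:
    """Return samples in chronological order, dropping duplicate timestamps."""
    ordered = sorted(samples, key=lambda s: s.timestamp)  # stable sort
    result: List[VehicleSample] = []
    for s in ordered:
        if result and result[-1].timestamp == s.timestamp:
            continue  # keep the first record reported for a given timestamp
        result.append(s)
    return result
```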

The in-vehicle camera can analyze the facial expressions and/or body language of the user that it captures, and calculate the user's emotion data from them. In a specific implementation, the emotion data can be obtained by using an edge computing algorithm, which reduces the amount of data uploaded and stored.

The camera may then send the emotion data to the server.

In one non-limiting example, the emotion data may include a plurality of data points arranged in chronological order, each describing one of the following emotions: positive mood, calm mood, or negative mood. The user's satisfaction with the driving experience can be inferred from these emotions.
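The source does not say which edge computing algorithm is used. As one hypothetical illustration of how on-device processing can shrink the upload, the sketch below assumes the device has already classified each camera frame into an emotion label, and keeps only the points where the label changes. The uploaded series stays time-ordered but is far shorter than the raw frames. `compress_labels` is an illustrative name, not from the source.

```python
from typing import List, Tuple


def compress_labels(frame_labels: List[Tuple[float, str]]) -> List[Tuple[float, str]]:
    """Keep only the frames where the emotion label changes.

    frame_labels: (timestamp, label) pairs produced on the device, one per
    analysed camera frame, already in chronological order.
    """
    compressed: List[Tuple[float, str]] = []
    for timestamp, label in frame_labels:
        if not compressed or compressed[-1][1] != label:
            compressed.append((timestamp, label))
    return compressed
```

The receiver can recover the emotion at any time t by taking the last retained point at or before t.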
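A sketch of the emotion data points described above, assuming each point carries a timestamp and one of the three emotion categories. The `Emotion` enum, the `EmotionPoint` record and the raw field names `t` and `emotion` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Emotion(Enum):
    POSITIVE = "positive"
    CALM = "calm"
    NEGATIVE = "negative"


@dataclass(frozen=True)
class EmotionPoint:
    timestamp: float
    emotion: Emotion


def parse_emotion_data(raw: List[dict]) -> List[EmotionPoint]:
    """Validate raw records and return them as a time-ordered list.

    Emotion(value) raises ValueError for any label outside the three categories.
    """
    points = [EmotionPoint(float(r["t"]), Emotion(r["emotion"])) for r in raw]
    return sorted(points, key=lambda p: p.timestamp)
```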

Further, the in-vehicle device may upload the emotion data and the vehicle dynamics data at the same time.

Then, after receiving the emotion data and the vehicle dynamic data generated when the user drives the vehicle, the server may, in step S102, fuse the emotion data and the vehicle dynamic data to generate an emotion curve. The emotion curve may describe the association between the user's emotions while driving and the vehicle dynamic data, and may reflect how both the user's emotion and the vehicle dynamic data change over time.

In one non-limiting example, the horizontal axis (X-axis) of the emotion curve is time and the vertical axis (Y-axis) is the emotion value. The reference line of the emotion curve represents a calm emotion; positive emotions lie above the reference line and negative emotions below it.

Further, the emotion curve may mark vehicle speed events such as rapid acceleration, rapid deceleration or constant-speed cruise, together with the corresponding time range or time point. By combining the emotion value with this vehicle speed information, the user's emotion during acceleration, deceleration and constant-speed cruise can be determined quantitatively.
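One way to turn the labelled data points into the curve described here, assuming a simple three-level scale in which the calm reference line is 0, positive emotion is +1 and negative emotion is -1. The source only says the Y-axis is an emotion value; the numeric scale and the `to_curve` helper are assumptions.

```python
from typing import Dict, List, Tuple

# Hypothetical scale: the calm reference line sits at 0,
# positive emotion above it and negative emotion below it.
EMOTION_VALUE: Dict[str, int] = {"positive": 1, "calm": 0, "negative": -1}


def to_curve(points: List[Tuple[float, str]]) -> List[Tuple[float, int]]:
    """Convert (timestamp, emotion label) points into (x, y) curve points."""
    return [(t, EMOTION_VALUE[label]) for t, label in sorted(points)]
```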
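A minimal sketch of the quantitative step, assuming each fused point already carries a running-state label and an emotion value on the hypothetical +1/0/-1 scale. Averaging per state is an illustrative choice; the source does not specify how the emotion value and the speed information are combined.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def mean_emotion_by_state(samples: List[Tuple[str, int]]) -> Dict[str, float]:
    """Average emotion value per running state.

    samples: (running_state, emotion_value) pairs taken from the fused curve,
    where emotion_value is +1 (positive), 0 (calm) or -1 (negative).
    """
    totals: Dict[str, int] = defaultdict(int)
    counts: Dict[str, int] = defaultdict(int)
    for state, value in samples:
        totals[state] += value
        counts[state] += 1
    return {state: totals[state] / counts[state] for state in totals}
```

A value near -1 for a state such as braking would point to consistent dissatisfaction during that manoeuvre.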

In specific implementation, after the vehicle-mounted device directly reports the vehicle operation data to the server, the server may determine the vehicle operation state of the vehicle according to the received vehicle dynamic data, where the vehicle operation state includes a (rapid) acceleration state, a (rapid) deceleration state, a constant-speed cruise state, a braking state, a turning state, and the like.

As a variation, the vehicle-mounted device may further calculate an operating state of the vehicle according to a change in the vehicle speed, and report the operating state to the server.

The server can then establish the association between the emotion data and the running state according to the time sequence, and generate the emotion curve from that association; the emotion curve is marked with the running state.

Vehicle speed changes may include acceleration, deceleration, constant speed (that is, the vehicle speed stays within a preset range for a certain time), sudden braking, sudden acceleration, sudden deceleration, and the like. Sudden braking, sudden acceleration and sudden deceleration can be derived by calculating the change in speed. The emotion curve can mark key speed-change events such as sudden braking, sudden acceleration and sudden deceleration at the corresponding time periods and/or time points.

In a specific implementation, the emotion curve may include peaks, troughs and their corresponding time periods. The peaks and troughs represent changes in the user's emotion: troughs may represent negative emotions and peaks may represent positive emotions. A positive emotion may indicate that the user is happy or excited, and a negative emotion may indicate that the user is angry. The emotion curve may also include a reference line representing a calm emotion, which may indicate that the user is in a calm, even-tempered state.
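The time-sequence association can be sketched as looking up, for each emotion timestamp, the running-state interval that contains it. The interval format `(start, end, state)` and the `fuse` function are assumptions; a binary search with the standard-library `bisect` module keeps each lookup logarithmic in the number of intervals.

```python
import bisect
from typing import List, Optional, Tuple


def fuse(emotions: List[Tuple[float, int]],
         states: List[Tuple[float, float, str]]) -> List[Tuple[float, int, Optional[str]]]:
    """Attach to each (timestamp, emotion_value) point the running state whose
    [start, end) interval contains it, or None if no interval covers it.

    states must be sorted by start time and must not overlap.
    """
    starts = [s[0] for s in states]
    fused = []
    for t, value in sorted(emotions):
        i = bisect.bisect_right(starts, t) - 1  # last interval starting at or before t
        state = states[i][2] if i >= 0 and t < states[i][1] else None
        fused.append((t, value, state))
    return fused
```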
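A sketch of deriving running states from speed changes, under stated assumptions: speeds arrive as `(timestamp_s, speed_kmh)` pairs; the acceleration thresholds (0.5 and 3.0 m/s²) and the cruise criteria (speed held within a 4 km/h band for at least 10 s, above 10 km/h) are illustrative values not given in the source; both function names are hypothetical.

```python
from typing import List, Tuple

KMH_TO_MS = 1 / 3.6  # converts km/h to m/s


def classify_intervals(samples: List[Tuple[float, float]],
                       accel_thr: float = 0.5,
                       sudden_thr: float = 3.0) -> List[Tuple[float, float, str]]:
    """Label each interval between consecutive (timestamp_s, speed_kmh) samples.

    Thresholds are in m/s^2 and are illustrative, not taken from the source.
    """
    labels = []
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        a = (v1 - v0) * KMH_TO_MS / (t1 - t0)  # mean acceleration over the interval
        if a >= sudden_thr:
            state = "sudden_acceleration"
        elif a >= accel_thr:
            state = "acceleration"
        elif a <= -sudden_thr:
            state = "sudden_deceleration"
        elif a <= -accel_thr:
            state = "deceleration"
        else:
            state = "steady"
        labels.append((t0, t1, state))
    return labels


def cruise_segments(samples: List[Tuple[float, float]],
                    band_kmh: float = 4.0,
                    min_duration_s: float = 10.0,
                    min_speed_kmh: float = 10.0) -> List[Tuple[float, float]]:
    """Return (start, end) spans where the speed stays within a band_kmh-wide
    range for at least min_duration_s while at or above min_speed_kmh."""
    segments = []
    i, n = 0, len(samples)
    while i < n:
        lo = hi = samples[i][1]
        j = i
        # Greedily extend the window while the speed range stays inside the band.
        while j + 1 < n:
            v = samples[j + 1][1]
            if max(hi, v) - min(lo, v) > band_kmh:
                break
            lo, hi = min(lo, v), max(hi, v)
            j += 1
        if samples[j][0] - samples[i][0] >= min_duration_s and lo >= min_speed_kmh:
            segments.append((samples[i][0], samples[j][0]))
            i = j + 1
        else:
            i += 1
    return segments
```

Distinguishing sudden braking from other sudden deceleration would additionally need the brake signal from the vehicle operation data.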

In a specific implementation, the vehicle-mounted device can also send the road condition information captured by the camera to the server. After receiving the road condition information, the server may mark noise in the emotion curve.

Specifically, the server may analyze the emotion curve to determine a time range in which the curve reverses directly from a peak to a trough, that is, the peak is connected to the trough with no calm period in between. This indicates that the user's emotion switched abruptly from positive to negative. Alternatively, the server may analyze the emotion curve to determine a time range in which the curve descends from the reference line to a trough, the reference line being connected to the trough. This indicates that the user's emotion changed abruptly from calm to negative.

The server may then extract, from the road condition information, the portion within that time range. If the extracted road condition information indicates an abnormal road condition, the server may determine that the emotion curve within the time range is noise and mark it as such. Marking noise helps produce more accurate analyses of the user's emotions and of the user's satisfaction with the vehicle.

Further, if abrupt peaks and/or troughs appear at certain moments or within certain time periods of the emotion curve, the corresponding road condition information can be checked to analyze the user's emotional changes. For example, if a trough (a negative emotion) suddenly appears while the vehicle is cruising at constant speed, the road condition information and vehicle data are analyzed further; if they show that another vehicle cut into the lane and the vehicle braked suddenly at the same time, the emotional fluctuation should not be attributed to dissatisfaction with the constant-speed cruise or with the sudden braking. Using the emotion curve in this way makes the analysis of vehicle driving (for example, a test drive) more accurate and objective.
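A sketch of detecting the two kinds of drop on a curve sampled as `(timestamp, value)` with the hypothetical +1/0/-1 scale. Taking the range from the last point before the drop to the last point of the trough is an assumption about where the window should end; the function name is illustrative.

```python
from typing import List, Tuple


def find_drops(curve: List[Tuple[float, int]]) -> List[Tuple[float, float, str]]:
    """Find time ranges where the curve falls into a trough (-1).

    Returns (start, end, kind): start is the last timestamp before the drop,
    end is the last timestamp of the trough. kind is "peak_to_trough" when the
    drop comes straight from a positive value (no calm in between), or
    "reference_to_trough" when it comes from the calm reference line.
    """
    drops = []
    i, n = 1, len(curve)
    while i < n:
        prev_t, prev_v = curve[i - 1]
        if curve[i][1] == -1 and prev_v >= 0:
            j = i
            while j + 1 < n and curve[j + 1][1] == -1:
                j += 1  # extend to the end of the trough
            kind = "peak_to_trough" if prev_v == 1 else "reference_to_trough"
            drops.append((prev_t, curve[j][0], kind))
            i = j + 1
        else:
            i += 1
    return drops
```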
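The extraction and noise-marking step might look like the sketch below, assuming each road condition record has a timestamp and an `abnormal` flag produced by analysing the exterior camera footage (a hypothetical field), and that drop ranges arrive as `(start, end, kind)` tuples.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RoadEvent:
    timestamp: float
    description: str
    abnormal: bool  # e.g. accident, construction, lane cut-in (hypothetical flag)


def mark_noise(drops: List[Tuple[float, float, str]],
               road_events: List[RoadEvent]) -> List[Tuple[float, float, bool]]:
    """For each drop range, extract the road events inside [start, end] and
    mark the range as noise if any of them is abnormal."""
    marked = []
    for start, end, _kind in drops:
        window = [e for e in road_events if start <= e.timestamp <= end]
        marked.append((start, end, any(e.abnormal for e in window)))
    return marked
```

For example, a trough during constant-speed cruise that coincides with an abnormal lane cut-in event would come back marked as noise.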

Fig. 2 is a flowchart illustrating an emotion reporting method for vehicle driving according to an embodiment of the present invention. The emotion reporting method can be executed by a terminal, for example, an in-vehicle device.

Specifically, the emotion reporting method may include the following steps:

Step S201: calculate emotion data of a user while the user drives a vehicle, wherein the emotion data comprises a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood;

Step S202: record vehicle operation data generated while the vehicle runs;

Step S203: report the emotion data and the vehicle operation data to a server so that the server generates an emotion curve according to the emotion data and the vehicle operation data, wherein the emotion curve at least describes the association between the emotions the user experiences while driving and the vehicle dynamic data of the vehicle, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data.

More specifically, in step S201 the vehicle-mounted device may analyze the facial expressions and/or body language of the user captured by the camera to calculate the user's emotion data while driving.

The emotion data comprises a plurality of data points which are arranged according to a time sequence, and each data point is used for describing any one of the following emotions: positive mood, calm mood, negative mood.

In a specific implementation, the emotion data can be obtained by using an edge computing algorithm.

In step S202, the vehicle-mounted device may also record vehicle dynamic data generated while the vehicle is running.

In step S203, the vehicle-mounted device may report the emotion data and the vehicle dynamic data to a server, so that the server generates an emotion curve according to the emotion data and the vehicle operation data, where the emotion curve is at least used to describe an association relationship between an emotion generated by the user and a vehicle speed when the vehicle is driven.
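The report in step S203 could be serialised as a single JSON message carrying both data sets, consistent with uploading them at the same time. The message layout, field names and `build_report` function are hypothetical; the source specifies neither a format nor a transport.

```python
import json
from typing import List, Tuple


def build_report(vehicle_id: str,
                 emotions: List[Tuple[float, str]],
                 speeds: List[Tuple[float, float]]) -> str:
    """Serialise one upload containing both emotion data and vehicle operation
    data, each sorted by timestamp. Field names are hypothetical."""
    payload = {
        "vehicle_id": vehicle_id,
        "emotion_data": [{"t": t, "emotion": e} for t, e in sorted(emotions)],
        "vehicle_data": [{"t": t, "speed_kmh": v} for t, v in sorted(speeds)],
    }
    return json.dumps(payload, separators=(",", ":"))  # compact encoding
```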

In specific implementation, the vehicle-mounted device may further record road condition information generated when the user drives the vehicle, and upload the road condition information to the server.

After receiving the road condition information, the server can further analyze the generated emotion curve. For example, when an abrupt change such as a peak turning directly into a trough appears in the emotion curve, the cause of the emotion can be determined from the road condition information for the relevant time period, so that the user's driving experience can be evaluated more accurately and objectively.

Those skilled in the art will understand that steps S201 to S203 can be regarded as the terminal-side counterparts of steps S101 and S102 in the embodiment shown in Fig. 1, and the two methods complement each other in implementation principle and logic. Therefore, for the emotion reporting method and terminology on the vehicle-mounted device side, refer to the description of the embodiment shown in Fig. 1; details are not repeated here.

Therefore, by the technical scheme provided by the embodiment of the invention, the emotion data is combined with the vehicle running speed and the road condition information, so that the vehicle driving experience of the user can be more accurately and objectively evaluated.

Fig. 3 is a schematic diagram of the structure of an emotion determining apparatus for vehicle driving, in which the present invention is implemented. The emotion determining apparatus 3 for vehicle driving (hereinafter, referred to as emotion determining apparatus 3) may implement the method of fig. 1, and is executed by a server.

Specifically, the emotion determining apparatus 3 may include: a receiving module 31, configured to receive emotion data and vehicle operation data generated when a user drives a vehicle, where the emotion data includes a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; and a fusion module 32, configured to fuse the emotion data and the vehicle operation data to generate an emotion curve, where the emotion curve at least describes the association between the emotions generated by the user and the vehicle dynamic data of the vehicle while the vehicle is being driven, and the vehicle dynamic data includes vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data, and turning data.
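As an illustration only, the association built by the fusion module 32 between emotion data points and vehicle speed might be sketched as follows. This is a minimal sketch under assumptions the patent does not state: the three emotion categories are encoded as +1, 0 and -1 (so that peaks, the reference line and troughs correspond to positive, calm and negative mood), each emotion data point is paired with the vehicle speed sample nearest in time, and the names `EmotionPoint`, `SpeedSample`, `EMOTION_LEVEL` and `fuse` are hypothetical.

```python
from bisect import bisect_left
from dataclasses import dataclass

# Hypothetical numeric encoding (an assumption, not from the patent):
# peak = positive, reference line = calm, trough = negative.
EMOTION_LEVEL = {"positive": 1, "calm": 0, "negative": -1}


@dataclass
class EmotionPoint:
    t: float    # timestamp in seconds
    label: str  # "positive", "calm" or "negative"


@dataclass
class SpeedSample:
    t: float      # timestamp in seconds
    speed: float  # vehicle speed, e.g. in km/h


def fuse(emotions, speeds):
    """Pair every emotion point with the speed sample closest in time.

    Both lists must be sorted by timestamp and `speeds` must be non-empty.
    Returns (t, emotion_level, speed) tuples that form the emotion curve.
    """
    times = [s.t for s in speeds]
    curve = []
    for p in emotions:
        i = bisect_left(times, p.t)
        # The nearest sample is one of the neighbours at index i-1 or i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(speeds)]
        j = min(candidates, key=lambda k: abs(times[k] - p.t))
        curve.append((p.t, EMOTION_LEVEL[p.label], speeds[j].speed))
    return curve
```

Nearest-timestamp pairing is only one possible alignment; interpolating the speed between samples would be an equally reasonable choice when the two streams are sampled at different rates.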

In a specific implementation, the emotion determining apparatus 3 may further include an accepting module (not shown), configured to receive the road condition information generated when the user drives the vehicle. The emotion curve includes a reference line, peaks, troughs, and their corresponding time periods, and the emotion determining apparatus 3 may further include: a determining module (not shown), configured to determine the time range in which the emotion curve falls from a peak to a trough, where the peak is connected with the trough, or the time range in which the emotion curve falls from the reference line to a trough, where the reference line is connected with the trough; an intercepting module (not shown), configured to extract, from the road condition information, the portion that falls within that time range; a judging module (not shown), configured to judge the emotion curve within the time range to be noise if the extracted road condition information indicates an abnormal road condition; and a marking module (not shown), configured to mark the noise. A trough represents a negative emotion and a peak represents a positive emotion.
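The determining, intercepting, judging and marking steps above might be sketched as follows. This is a hypothetical illustration, not the patented implementation: it assumes the emotion curve is a time-sorted list of `(t, level)` pairs with level 1 for a peak, 0 for the reference line and -1 for a trough, interprets "connected" as two consecutive curve points, and assumes each road condition record carries a boolean `abnormal` flag. The names `RoadRecord`, `falling_ranges` and `mark_noise` are invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class RoadRecord:
    t: float        # timestamp in seconds
    abnormal: bool  # assumed flag, e.g. True for congestion or an accident


def falling_ranges(curve):
    """Return (start, end) time ranges in which the curve drops into a trough.

    curve: list of (t, level) sorted by t; level 1 = peak (positive),
    0 = reference line (calm), -1 = trough (negative). A range starts at a
    peak or reference-line point that is directly followed by a trough point.
    """
    ranges = []
    for (t0, a), (t1, b) in zip(curve, curve[1:]):
        if a in (1, 0) and b == -1:
            ranges.append((t0, t1))
    return ranges


def mark_noise(curve, road):
    """Return the falling ranges whose road condition records show an anomaly."""
    noise = []
    for start, end in falling_ranges(curve):
        # Extract the road condition records that lie inside the time range.
        window = [r for r in road if start <= r.t <= end]
        # An abnormal road condition explains the drop, so treat it as noise.
        if any(r.abnormal for r in window):
            noise.append((start, end))
    return noise
```

For example, with the curve `[(0, 0), (10, -1), (20, 1), (30, -1), (40, 0)]` there are two falling ranges, `(0, 10)` and `(20, 30)`; if only a record at t = 5 is abnormal, only `(0, 10)` is marked as noise.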

In a specific implementation, the emotion data may be obtained using an edge computing algorithm.

In a specific implementation, the fusion module 32 may include: a determining submodule (not shown), configured to determine the operating state of the vehicle according to the running speed of the vehicle, where the operating state includes an acceleration state, a deceleration state, a constant-speed cruise state, and a braking state; and a generating submodule (not shown), configured to establish an association between the emotion data and the operating state in time sequence and to generate the emotion curve from that association, where the emotion curve is marked with the operating state.
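A minimal sketch of the two submodules, under stated assumptions: the operating state is inferred only from the acceleration computed between consecutive speed samples, and braking is distinguished from ordinary deceleration by a deceleration threshold. The thresholds `CRUISE_TOL` and `BRAKE_DECEL` and all function names are hypothetical; the patent specifies neither thresholds nor how braking is detected, and a real system might instead read a brake signal from the vehicle.

```python
from bisect import bisect_right

# Hypothetical thresholds in m/s^2; the patent does not specify them.
CRUISE_TOL = 0.3   # |a| below this counts as constant-speed cruising
BRAKE_DECEL = 3.0  # deceleration at least this strong counts as braking


def classify(a):
    """Map a longitudinal acceleration (m/s^2) to an operating state."""
    if abs(a) < CRUISE_TOL:
        return "cruise"
    if a > 0:
        return "acceleration"
    if a <= -BRAKE_DECEL:
        return "braking"
    return "deceleration"


def states_from_speed(samples):
    """samples: list of (t, v) with strictly increasing t, v in m/s.

    Returns one (t_start, t_end, state) interval per pair of consecutive samples.
    """
    out = []
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        out.append((t0, t1, classify((v1 - v0) / (t1 - t0))))
    return out


def tag_emotions(emotions, intervals):
    """Attach the operating state in force at each emotion point's timestamp.

    emotions: list of (t, label); points outside every interval get None.
    """
    starts = [iv[0] for iv in intervals]
    tagged = []
    for t, label in emotions:
        i = bisect_right(starts, t) - 1
        state = intervals[i][2] if i >= 0 and t <= intervals[i][1] else None
        tagged.append((t, label, state))
    return tagged
```

Tagging each emotion point with the interval that contains it corresponds to "marking the emotion curve with the operating state"; at an interval boundary the later interval is chosen.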

For more details of the working principle and working mode of the emotion determining apparatus 3, reference may be made to the related description in fig. 1, and details are not repeated here.

Fig. 4 is a schematic structural diagram of an emotion reporting apparatus for vehicle driving according to an embodiment of the present invention. The emotion reporting apparatus 4 for vehicle driving (hereinafter referred to as emotion reporting apparatus 4) may implement the method of fig. 2 and may be executed by a terminal, for example a vehicle-mounted device.

Specifically, the emotion reporting apparatus 4 may include: a calculating module 41, configured to calculate emotion data of a user while the user drives a vehicle, where the emotion data includes a plurality of data points arranged in time sequence, and each data point describes one of the following emotions: positive mood, calm mood, or negative mood; a recording module 42, configured to record vehicle operation data generated while the vehicle runs; and a reporting module 43, configured to report the emotion data and the vehicle operation data to a server, so that the server generates an emotion curve from the emotion data and the vehicle operation data, where the emotion curve at least describes the association between the emotions generated by the user and the vehicle dynamic data of the vehicle while the vehicle is being driven, and the vehicle dynamic data includes vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data, and turning data.

In a specific implementation, the emotion reporting apparatus 4 may further include an uploading module (not shown), configured to record road condition information generated while the user drives the vehicle and to upload the road condition information to the server.
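On the terminal side, the reporting module 43 and the uploading module might bundle their data into a single message. The sketch below is hypothetical: the patent does not define a message format, so the JSON encoding, the field names (`vehicle_id`, `emotion_data`, `vehicle_operation_data`, `road_conditions`) and the function `build_report` are assumptions made for illustration, and the transport to the server is not shown.

```python
import json

ALLOWED_EMOTIONS = {"positive", "calm", "negative"}


def build_report(vehicle_id, emotions, operation, road):
    """Assemble one upload message for the server (hypothetical format).

    emotions:  list of (t, label), label in ALLOWED_EMOTIONS
    operation: list of (t, speed) vehicle operation samples
    road:      list of (t, description, abnormal) road condition records
    """
    for _, label in emotions:
        if label not in ALLOWED_EMOTIONS:
            raise ValueError(f"unknown emotion label: {label}")
    payload = {
        "vehicle_id": vehicle_id,
        # Sorting keeps every series in time sequence, as the method requires.
        "emotion_data": [{"t": t, "emotion": e} for t, e in sorted(emotions)],
        "vehicle_operation_data": [{"t": t, "speed": v} for t, v in sorted(operation)],
        "road_conditions": [
            {"t": t, "description": d, "abnormal": a} for t, d, a in sorted(road)
        ],
    }
    return json.dumps(payload)
```

Validating the emotion labels before upload keeps every reported data point within the three categories the method defines.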

In a specific implementation, the emotion data is obtained using an edge computing algorithm.

For more contents of the working principle and the working mode of the emotion reporting apparatus 4, reference may be made to the related description in fig. 2, which is not described herein again.

An embodiment of the present invention further provides a storage medium storing computer instructions which, when executed, perform the steps of the method shown in fig. 1 or the steps of the method shown in fig. 2. The storage medium may be a computer-readable storage medium, for example a non-volatile or non-transitory memory, and may also be an optical disc, a hard disk drive, a solid-state drive, or the like.

An embodiment of the present invention further provides a server including a memory and a processor, where the memory stores computer instructions executable on the processor, and the processor performs the steps of the method shown in fig. 1 when executing the computer instructions. In one embodiment, the server may be a cloud server.

An embodiment of the invention further provides a terminal including a memory and a processor, where the memory stores computer instructions executable on the processor, and the processor performs the steps of the method shown in fig. 2 when executing the computer instructions. In one embodiment, the terminal may be a vehicle-mounted device.

The embodiment of the invention also provides a vehicle which can comprise the terminal.

Although the present invention is disclosed above, the present invention is not limited thereto. Various changes and modifications may be effected therein by one skilled in the art without departing from the spirit and scope of the invention as defined in the appended claims.

Claims (11)

1. An emotion determination method for vehicle driving, characterized by comprising:

receiving emotion data and vehicle operation data generated when a user drives a vehicle, wherein the emotion data comprises a plurality of data points which are arranged according to a time sequence, and each data point is used for describing any one of the following emotions: positive mood, calm mood, negative mood;

fusing the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve is used for describing an association relation between the emotion generated by the user and vehicle dynamic data of the vehicle when the vehicle is driven, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data;

further comprising: receiving road condition information generated when the user drives the vehicle; wherein the emotion curve comprises a reference line, a peak, a trough and corresponding time periods, and the method further comprises the following steps:

determining a time range in which the emotion curve falls from the peak to the trough, wherein the peak is connected with the trough; or determining a time range in which the emotion curve falls from the reference line to the trough, wherein the reference line is connected with the trough;

extracting, from the road condition information, the road condition information within the time range;

if the intercepted road condition information shows that the road condition is abnormal, judging that the emotion curve in the time range is noise;

marking the noise points;

wherein the trough represents a negative emotion and the peak represents a positive emotion.

2. The emotion determination method of claim 1, wherein the emotion data is obtained using an edge computing algorithm.

3. The emotion determination method of claim 1, wherein the fusing the emotion data and vehicle operation data to generate an emotion curve comprises:

determining the running state of the vehicle, wherein the running state comprises an acceleration state, a deceleration state, a constant-speed cruise state, a braking state and a turning state;

establishing an association relation between the emotion data and the running state according to the time sequence, and generating the emotion curve according to the association relation;

wherein the emotion curve is marked with the running state.

4. An emotion reporting method for vehicle driving, comprising:

calculating emotion data of a user when the user drives a vehicle, wherein the emotion data comprises a plurality of data points which are arranged according to time sequence, and each data point is used for describing any one of the following emotions: positive mood, calm mood, negative mood;

recording vehicle operation data generated when the vehicle runs;

reporting the emotion data and the vehicle operation data to a server so that the server generates an emotion curve according to the emotion data and the vehicle operation data, wherein the emotion curve is used for describing an association relation between emotion generated by the user and vehicle dynamic data of the vehicle when the vehicle is driven, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data;

the emotion curve comprises a reference line, a peak, a trough and a corresponding time period;

the method further comprises the following steps: recording road condition information generated when the user drives the vehicle, and uploading the road condition information to the server, so that the server extracts, from the road condition information, the road condition information within a time range; if the extracted road condition information shows that the road condition is abnormal, judges that the emotion curve within the time range is a noise point; and marks the noise point;

wherein the time range refers to the time range in which the emotion curve falls from the peak to the trough, the peak being connected with the trough; or the time range in which the emotion curve falls from the reference line to the trough, the reference line being connected with the trough;

wherein the trough represents a negative emotion and the peak represents a positive emotion.

5. The emotion reporting method of claim 4, wherein the emotion data is obtained using an edge computing algorithm.

6. An emotion determining apparatus for vehicle driving, characterized by comprising:

the receiving module is used for receiving emotion data and vehicle operation data generated when a user drives a vehicle, the emotion data comprises a plurality of data points which are arranged according to time sequence, and each data point is used for describing any one of the following emotions: positive mood, calm mood, negative mood;

the fusion module is used for fusing the emotion data and the vehicle operation data to generate an emotion curve, wherein the emotion curve is used for describing the association relation between the emotion generated by the user and vehicle dynamic data of the vehicle when the vehicle is driven, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data;

wherein the receiving module is further used for receiving road condition information generated when the user drives the vehicle; the emotion curve comprises peaks, troughs and corresponding time periods;

and the emotion determining apparatus further comprises:

the determining module is used for determining the time range in which the emotion curve falls from the peak to the trough, wherein the peak is connected with the trough; or determining the time range in which the emotion curve falls from a reference line to the trough, wherein the reference line is connected with the trough;

the intercepting module is used for extracting, from the road condition information, the road condition information within the time range;

the judging module is used for judging that the emotion curve in the time range is noise if the intercepted road condition information shows that the road condition is abnormal;

the marking module is used for marking the noise points;

wherein the trough represents a negative emotion and the peak represents a positive emotion.

7. An emotion reporting apparatus for vehicle driving, comprising:

the calculation module is used for calculating and obtaining emotion data of a user when the user drives a vehicle, the emotion data comprises a plurality of data points which are arranged according to time sequence, and each data point is used for describing any one of the following emotions: positive mood, calm mood, negative mood;

the recording module is used for recording vehicle operation data generated when the vehicle runs;

the reporting module is used for reporting the emotion data and the vehicle operation data to a server so that the server generates an emotion curve according to the emotion data and the vehicle operation data, the emotion curve is used for describing an association relation between the emotion generated by the user and vehicle dynamic data of the vehicle when the vehicle is driven, and the vehicle dynamic data comprises vehicle speed data, acceleration data, deceleration data, constant-speed cruise data, brake data and turning data;

the emotion curve comprises a reference line, a peak, a trough and a corresponding time period;

the emotion reporting apparatus further comprises: the uploading module, used for recording the road condition information generated when the user drives the vehicle and uploading the road condition information to the server, so that the server extracts, from the road condition information, the road condition information within a time range; if the extracted road condition information shows that the road condition is abnormal, judges that the emotion curve within the time range is a noise point; and marks the noise point;

wherein the time range refers to the time range in which the emotion curve falls from the peak to the trough, the peak being connected with the trough; or the time range in which the emotion curve falls from the reference line to the trough, the reference line being connected with the trough;

wherein the trough represents a negative emotion and the peak represents a positive emotion.

8. A storage medium having stored thereon computer instructions, characterized in that the computer instructions are operative to perform the steps of the method of any one of claims 1 to 5.

9. A server comprising a memory and a processor, the memory having stored thereon computer instructions executable on the processor, wherein the processor, when executing the computer instructions, performs the steps of the method of any one of claims 1 to 3.

10. A terminal comprising a memory and a processor, the memory having stored thereon computer instructions executable on the processor, wherein the processor, when executing the computer instructions, performs the steps of the method of any of claims 4 to 5.

11. A vehicle characterized by comprising a terminal according to claim 10.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201911013257.XA (CN110807920B) | 2019-10-23 | 2019-10-23 | Emotion determining and reporting method and device for vehicle driving, server, terminal and vehicle |


Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN110807920A | 2020-02-18 |
| CN110807920B | 2020-10-30 |

Family

ID=69489076


Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN110807920B |

Families Citing this family (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114684152A * | 2022-02-25 | 2022-07-01 | 智己汽车科技有限公司 | Method, device, vehicle and medium for processing driving experience data |

Citations (8)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN202815927U * | 2012-10-19 | 2013-03-20 | 北京易卡互动信息技术有限公司 | Vehicle sales system |
| CN106166074A * | 2016-08-30 | 2016-11-30 | 西南交通大学 | The method of testing of driver's emotion control ability and system |
| CN106361357A * | 2016-08-30 | 2017-02-01 | 西南交通大学 | Testing method and system for driving ability |
| CN107341006A * | 2017-06-21 | 2017-11-10 | 广东欧珀移动通信有限公司 | Screen locking wallpaper recommends method and Related product |
| CN107424019A * | 2017-08-15 | 2017-12-01 | 京东方科技集团股份有限公司 | The art work based on Emotion identification recommends method, apparatus, medium and electronic equipment |
| CN108428338A * | 2017-02-15 | 2018-08-21 | 阿里巴巴集团控股有限公司 | Traffic analysis method, device and electronic equipment |
| CN109606386A * | 2018-12-12 | 2019-04-12 | 北京车联天下信息技术有限公司 | Cockpit in intelligent vehicle |
| CN109784185A * | 2018-12-18 | 2019-05-21 | 深圳壹账通智能科技有限公司 | Client's food and drink evaluation automatic obtaining method and device based on micro- Expression Recognition |

Family Cites Families (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP3347107B2 * | 1999-10-20 | 2002-11-20 | 株式会社 システム エイ.ブイ | POS system and security system using the same |
| US9710816B2 * | 2008-08-05 | 2017-07-18 | Ford Motor Company | Method and system of measuring customer satisfaction with purchased vehicle |
| US20140310277A1 * | 2013-04-15 | 2014-10-16 | Flextronics Ap, Llc | Suspending user profile modification based on user context |
| CN107463876A * | 2017-07-03 | 2017-12-12 | 珠海市魅族科技有限公司 | Information processing method and device, computer installation and storage medium |
| CN109242529A * | 2018-07-31 | 2019-01-18 | 上海博泰悦臻电子设备制造有限公司 | Vehicle, vehicle device equipment and the online method of investigation and study of user experience based on scene analysis |

- 2019-10-23: CN application CN201911013257.XA (CN110807920B), status: Active




Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |
| | GR01 | Patent grant | |