CN110796712A - Material processing method, device, electronic equipment and storage medium - Google Patents

Material processing method, device, electronic equipment and storage medium

- Publication number

- CN110796712A CN201911016784.6A

- Authority

- CN

- China

- Prior art keywords

- transformation

- pattern

- image

- image transformation

- processing information

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T11/00—2D [Two Dimensional] image generation

- G06T11/001—Texturing; Colouring; Generation of texture or colour

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/40—Information retrieval; Database structures therefor; File system structures therefor of multimedia data, e.g. slideshows comprising image and additional audio data

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F9/00—Arrangements for program control, e.g. control units

- G06F9/06—Arrangements for program control, e.g. control units using stored programs, i.e. using an internal store of processing equipment to receive or retain programs

- G06F9/44—Arrangements for executing specific programs

- G06F9/451—Execution arrangements for user interfaces

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T11/00—2D [Two Dimensional] image generation

- G06T11/60—Editing figures and text; Combining figures or text

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Software Systems (AREA)

- General Engineering & Computer Science (AREA)

- Human Computer Interaction (AREA)

- Multimedia (AREA)

- Data Mining & Analysis (AREA)

- Databases & Information Systems (AREA)

- Editing Of Facsimile Originals (AREA)

Abstract

The disclosure provides a material processing method, a device, an electronic device and a storage medium, and relates to the technical field of image processing. The material processing method comprises the following steps: providing a drawing interface; receiving a drawing operation performed on the drawing interface, and acquiring a custom pattern corresponding to the drawing operation; and performing image transformation on the custom pattern according to preset image transformation information to obtain a corresponding target material, wherein the target material is used for making multimedia resources. With this method, users can create their own materials, obtain richer material resources, and use the custom patterns to process multimedia resources, so that images or videos can be edited in more varied ways.

Description

Technical Field

The present disclosure relates to the field of image processing technologies, and in particular, to a method and an apparatus for processing a material, an electronic device, and a storage medium.

Background

Users often use stickers as a material when editing images or videos. Stickers are pictures, animations or video material containing images or text. The user can "paste" the sticker on the original picture or video while editing the picture or video, so that the edited picture or video becomes more colorful.

Taking short video production as an example, a user may edit a short video with stickers or with objects generated by virtual reality technology. However, these editing modes cannot fully meet users' needs, so the ways in which users can edit images or videos remain limited.

Disclosure of Invention

The disclosure provides a material processing method and device, an electronic device and a storage medium, which are used for at least solving the problem that the editing mode of a user on an image or a video is limited in the related art.

According to a first aspect of the embodiments of the present disclosure, there is provided a material processing method, including:

providing a drawing interface;

receiving drawing operation implemented on a drawing interface, and acquiring a custom pattern corresponding to the drawing operation;

and carrying out image transformation on the custom pattern according to preset image transformation information to obtain a corresponding target material, wherein the target material is used for manufacturing multimedia resources.

In one embodiment, the preset image transformation information at least comprises an image transformation mode, transformation processing information and superposition processing information;

performing image transformation on the custom pattern according to the preset image transformation information to obtain a corresponding target material comprises:

performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern;

and superposing the intermediate patterns according to the superposition processing information to obtain the target material.

In one embodiment, the transformation processing information includes at least reference transformation information and the number of transformations for the image transformation mode; the reference transformation information includes at least the image transformation mode used to generate a reference pattern and a corresponding reference transformation parameter;

performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern comprises:

transforming the custom pattern according to the reference transformation information to obtain a corresponding reference pattern;

and transforming the reference pattern according to the image transformation mode and the corresponding number of transformations to obtain a corresponding intermediate pattern.

In one embodiment, the superimposing processing information includes a position relation parameter between any two intermediate patterns, and the superimposing the intermediate patterns according to the superimposing processing information to obtain the target material includes:

setting a superposition position corresponding to the intermediate pattern according to the position relation parameter;

and superposing the intermediate patterns according to corresponding superposition positions to obtain the target material.

In one embodiment, the image transformation mode at least comprises one of rotation transformation, scaling transformation, mirror image transformation or affine transformation;

if the image transformation mode comprises mirror transformation, the transformation processing information further comprises a mirror reference line of the mirror transformation;

if the image transformation mode comprises rotation transformation, the transformation processing information further comprises the position of a rotation center point and/or the rotation angle of the rotation transformation;

if the image transformation mode comprises scaling transformation, the transformation processing information further comprises a scaling ratio of the scaling transformation;

if the image transformation mode comprises affine transformation, the transformation processing information further comprises the translation direction and the displacement distance of the affine transformation.

In one embodiment, if the image transformation mode includes at least two of rotation transformation, scaling transformation, mirror transformation, or affine transformation, the transformation processing information further includes the transformation order between the different image transformation modes and an initial transformation pattern for each image transformation mode, where the initial transformation pattern is the custom pattern or an intermediate pattern obtained through another image transformation mode;

performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern comprises:

transforming, in the transformation order, each corresponding initial transformation pattern using the corresponding image transformation mode and transformation processing information to obtain the corresponding intermediate pattern.

In one embodiment, the method further comprises:

and selecting the intermediate patterns meeting preset conditions, and superposing according to the superposition processing information to obtain the target material.
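The text does not say what the preset conditions are. As a purely illustrative sketch, the selection step could look like the following, assuming a pattern is stored as a list of (x, y) points and assuming the condition is that the pattern lies entirely inside a canvas. The names `within_canvas` and `select_patterns`, the point-list representation, and the canvas size are all hypothetical.

```python
def within_canvas(pattern, width, height):
    """Hypothetical preset condition: every point lies inside the canvas."""
    return all(0 <= x <= width and 0 <= y <= height for x, y in pattern)


def select_patterns(intermediate_patterns, condition):
    """Keep only the intermediate patterns that satisfy the condition."""
    return [p for p in intermediate_patterns if condition(p)]


patterns = [
    [(10, 10), (20, 30)],   # fully inside a 100 x 100 canvas
    [(90, 90), (120, 95)],  # partly outside the canvas
]
selected = select_patterns(patterns, lambda p: within_canvas(p, 100, 100))
```

Only the selected patterns would then be passed on to the superposition step.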

In one embodiment, the method further comprises:

acquiring the preset image transformation information in response to a configuration operation performed on the drawing interface.

According to a second aspect of the embodiments of the present disclosure, there is provided a material processing apparatus including:

a providing unit configured to perform providing a drawing interface;

a receiving unit configured to receive a drawing operation performed on the drawing interface and acquire a custom pattern corresponding to the drawing operation;

and the image transformation unit is configured to perform image transformation on the custom pattern according to preset image transformation information to obtain a corresponding target material, and the target material is used for manufacturing multimedia resources.

In one embodiment, the preset image transformation information at least comprises an image transformation mode, transformation processing information and superposition processing information;

the image transformation unit configured to perform:

performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern;

and superposing the intermediate patterns according to the superposition processing information to obtain the target material.

In one embodiment, the transformation processing information includes at least reference transformation information and the number of transformations for the image transformation mode; the reference transformation information includes at least the image transformation mode used to generate a reference pattern and a corresponding reference transformation parameter;

the image transformation unit configured to perform:

transforming the custom pattern according to the reference transformation information to obtain a corresponding reference pattern;

and transforming the reference pattern according to the image transformation mode and the corresponding number of transformations to obtain a corresponding intermediate pattern.

In one embodiment, the superposition processing information includes a positional relationship parameter between any two of the intermediate patterns, and the image transformation unit is configured to perform:

setting a superposition position corresponding to the intermediate pattern according to the position relation parameter;

and superposing the intermediate patterns according to corresponding superposition positions to obtain the target material.

In one embodiment, the image transformation mode at least comprises one of rotation transformation, scaling transformation, mirror image transformation or affine transformation;

if the image transformation mode comprises mirror transformation, the transformation processing information further comprises a mirror reference line of the mirror transformation;

if the image transformation mode comprises rotation transformation, the transformation processing information further comprises the position of a rotation center point and/or the rotation angle of the rotation transformation;

if the image transformation mode comprises scaling transformation, the transformation processing information further comprises a scaling ratio of the scaling transformation;

if the image transformation mode comprises affine transformation, the transformation processing information further comprises the translation direction and the displacement distance of the affine transformation.

In one embodiment, if the image transformation mode includes at least two of rotation transformation, scaling transformation, mirror transformation, or affine transformation, the transformation processing information further includes the transformation order between the different image transformation modes and an initial transformation pattern for each image transformation mode, where the initial transformation pattern is the custom pattern or an intermediate pattern obtained through another image transformation mode;

the image transformation unit configured to perform: transforming, in the transformation order, each corresponding initial transformation pattern using the corresponding image transformation mode and transformation processing information to obtain the corresponding intermediate pattern.

In one embodiment, the image transformation unit is configured to perform: and selecting the intermediate patterns meeting preset conditions, and superposing according to the superposition processing information to obtain the target material.

In one embodiment, the apparatus further comprises:

an acquisition unit configured to acquire the preset image transformation information in response to a configuration operation performed on the drawing interface.

According to a third aspect of the embodiments of the present disclosure, there is provided an electronic apparatus including: at least one processor; and a memory communicatively coupled to the at least one processor; wherein the memory stores instructions executable by the at least one processor to enable the at least one processor to perform the method of the first aspect.

According to a fourth aspect of embodiments of the present disclosure, there is provided a computer storage medium having stored thereon computer-executable instructions for performing the method of the first aspect.

The technical scheme provided by the embodiment of the disclosure at least brings the following beneficial effects:

according to the method, after a drawing interface is provided, drawing operation implemented on the drawing interface is received, a user-defined pattern corresponding to the drawing operation is obtained, and finally image transformation is carried out on the user-defined pattern according to preset image transformation information to obtain a corresponding target material, wherein the target material is used for manufacturing multimedia resources. By the method, the user can participate in the production of the material in a simple and convenient mode, obtain richer material resources and process the multimedia resources, so that the user can edit the image or video more variously.

It is to be understood that both the foregoing general description and the following detailed description are exemplary and explanatory only and are not restrictive of the disclosure.

Drawings

The accompanying drawings, which are incorporated in and constitute a part of this specification, illustrate embodiments consistent with the present disclosure and, together with the description, serve to explain the principles of the disclosure and are not to be construed as limiting the disclosure.

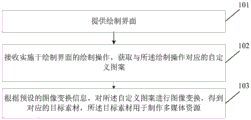

Fig. 1 is a schematic flow chart of a material processing method according to an embodiment of the present disclosure;

fig. 2 is a schematic diagram of a pattern provided in an embodiment of the disclosure;

FIG. 3 is a schematic diagram of a mirror transformation provided by an embodiment of the present disclosure;

FIG. 4 is a schematic diagram of a fiducial pattern provided by an embodiment of the present disclosure;

FIG. 5 is a schematic diagram of target materials provided by embodiments of the present disclosure;

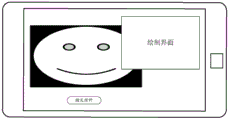

FIG. 6 is a schematic view of an operation interface provided by the embodiment of the present disclosure;

FIG. 7 is a pictorial illustration of a graphical interface provided by an embodiment of the present disclosure;

FIG. 8 is a pictorial illustration of a graphical interface provided by an embodiment of the present disclosure;

fig. 9 is a drawing interface diagram of a material processing method according to an embodiment of the present disclosure;

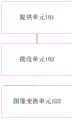

fig. 10 is a schematic structural diagram of a material processing apparatus according to an embodiment of the present disclosure;

fig. 11 is a schematic structural diagram of an electronic device according to an embodiment of the present disclosure.

Detailed Description

In order to make the technical solutions of the present disclosure better understood by those of ordinary skill in the art, the technical solutions in the embodiments of the present disclosure will be clearly and completely described below with reference to the accompanying drawings.

It should be noted that the terms "first," "second," and the like in the description and claims of the present disclosure and in the above-described drawings are used for distinguishing between similar elements and not necessarily for describing a particular sequential or chronological order. It is to be understood that such descriptions are interchangeable under appropriate circumstances such that the embodiments of the disclosure can be practiced in sequences other than those illustrated or described herein. The implementations described in the exemplary embodiments below are not intended to represent all implementations consistent with the present disclosure. Rather, they are merely examples of apparatus and methods consistent with certain aspects of the present disclosure, as detailed in the appended claims.

In the related art, when editing an image or video, a user generally selects a sticker material resource bundled with the image or video editing application. The number of materials available in this way is limited, and the user can only change the size or position of a sticker material, not its content. For example, if the image in a sticker material is a semicircle and the user wants a circular sticker, the user cannot edit the selected semicircular sticker into a circle.

In addition, the related art mentions that a user can scribble freely on an image or video, that is, draw on it by touching a touch screen with a finger or by using a mouse. Although this lets the user edit the image, such graffiti is usually neither fine nor attractive, and it is difficult for an ordinary user to produce attractive graffiti by hand. For this reason, the inventor proposes a material processing method to solve the above problems.

Referring to fig. 1, a method for processing materials according to an embodiment of the present disclosure includes:

In step 101, a drawing interface is provided. The drawing interface is a human-computer interaction interface that receives the drawing operations performed by a user and responds to them. A drawing operation may be a gesture operation or an operation performed with an external device such as a mouse. The user can scribble freely on the drawing interface by sliding touch or by dragging the mouse to obtain a custom pattern.

In step 102, the drawing operation performed on the drawing interface is received, and the custom pattern corresponding to the drawing operation is acquired.

Users differ in age, region, and educational background, and not every user is able to draw material suitable for making multimedia resources. In view of this, in step 103, image transformation is performed on the custom pattern according to preset image transformation information to obtain the corresponding target material. That is, whatever kind of custom pattern the user obtains by scribbling, and whether or not it is attractive, it can be processed according to the preset image transformation information to obtain the target material. For example, even a single line drawn by the user, which can hardly be called attractive or even a pattern, can be processed in this way.

For example, when producing a short video, a user may scribble on the drawing interface to obtain some messy lines; after image transformation according to the image transformation information, the messy lines are processed into a pattern. As shown in fig. 2, the pattern on the left is the user's scribble on the drawing interface, and the pattern on the right is the result of the material processing method. The resulting pattern is the target material, which may be used as a photo frame for each frame image of the short video.

Note that the target material may be pasted at any position in the image designated by the user. The target material may also be added to a newly created transparent layer so that the user can process it as a separate layer; the choice depends on the user's own requirements and is not specifically limited in this disclosure.

In addition, the target material can also be used for making video or audio-visual data, and the present disclosure is applicable as long as the purpose of editing images or videos can be achieved by using the target material.

For ease of understanding, some key terms used in the present disclosure are described first. Note that these definitions only illustrate the possible meanings of the terms and do not limit the embodiments of the present disclosure. The preset image transformation information includes at least an image transformation mode, transformation processing information, and superposition processing information, wherein:

Image transformation mode: the way in which a pattern can be transformed, such as rotation transformation, scaling transformation, mirror transformation, affine transformation, and the like.

Transformation processing information: the number of transformations for each image transformation mode and the reference transformation information. The reference transformation information specifies which image transformation modes are used to transform the custom pattern into a reference pattern, from which the intermediate pattern is obtained by further transformation. For example: the custom pattern undergoes 10 image transformations to obtain reference pattern 1, of which 3 are mirror transformations and 7 are rotation transformations; the custom pattern undergoes 5 image transformations to obtain reference pattern 2, of which 4 are rotation transformations and 1 is a scaling transformation.

Superposition processing information: the way in which the intermediate patterns are superposed, that is, whether the intermediate patterns are superposed directly or superposed after a position transformation. For example: rotating the intermediate pattern through 360 degrees about the selected rotation center yields target material 1; mirroring the intermediate pattern, superposing the mirrored image with the intermediate pattern, and rotating the result yields target material 2.

Based on the preset image transformation information, step 103 may be carried out as follows: image transformation is performed on the custom pattern according to the image transformation mode and the transformation processing information to obtain intermediate patterns, and the intermediate patterns are then superposed according to the superposition processing information to obtain the target material.

In this way, it is clear which specific image transformation information the target material is based on.
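The two-stage flow of step 103 can be illustrated with a minimal sketch. It assumes a pattern is a list of (x, y) points, covers only the rotation mode, and treats superposition as simply stacking the strokes. The class `PresetTransformInfo`, its fields, and the function names are hypothetical and are not taken from the disclosure.

```python
import math
from dataclasses import dataclass


@dataclass
class PresetTransformInfo:
    """Hypothetical container for the preset image transformation information."""
    mode: str            # image transformation mode, e.g. "rotation"
    angle_deg: float     # transformation processing information: angle per step
    times: int           # transformation processing information: number of transformations
    keep_original: bool  # superposition processing information: include the custom pattern


def rotate(pattern, angle_deg, center=(0.0, 0.0)):
    """Rotate every point of a pattern about a center point."""
    a = math.radians(angle_deg)
    cx, cy = center
    return [
        (cx + (x - cx) * math.cos(a) - (y - cy) * math.sin(a),
         cy + (x - cx) * math.sin(a) + (y - cy) * math.cos(a))
        for x, y in pattern
    ]


def make_target_material(custom_pattern, info):
    """Transform the custom pattern into intermediate patterns, then superpose them."""
    if info.mode != "rotation":
        raise ValueError("only the rotation mode is sketched here")
    intermediates = []
    current = custom_pattern
    for _ in range(info.times):
        current = rotate(current, info.angle_deg)
        intermediates.append(current)
    layers = [custom_pattern] if info.keep_original else []
    return layers + intermediates  # superposition modeled as a stack of strokes


info = PresetTransformInfo(mode="rotation", angle_deg=90.0, times=3, keep_original=True)
material = make_target_material([(1.0, 0.0), (2.0, 0.0)], info)
```

Here a single short stroke becomes four strokes arranged around the origin, which is the kind of regular pattern the method aims to produce from a rough scribble.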

It should be noted that an intermediate pattern is the pattern obtained after each image transformation. If only one image transformation is performed, the custom pattern is transformed directly; if multiple image transformations are performed, each transformation of the custom pattern yields an intermediate pattern.

Whichever way the intermediate pattern is obtained, the image transformation mode includes at least one of rotation transformation, scaling transformation, mirror transformation, or affine transformation. If the image transformation mode includes mirror transformation, the transformation processing information further includes a mirror reference line of the mirror transformation. The mirror reference line is the axis of symmetry about which the mirror transformation, which is an axisymmetric transformation, is performed. As shown in fig. 3, the pattern obtained by mirroring the custom pattern about mirror reference line 1 differs from the pattern obtained by mirroring it about mirror reference line 2, so the mirror reference line must be acquired for the mirror transformation in order to determine the result of the transformation.
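The dependence of the result on the mirror reference line can be sketched as follows. This assumes a pattern is a list of (x, y) points and that the reference line is given by two distinct points on it; both the representation and the function name `mirror` are illustrative assumptions.

```python
def mirror(pattern, line_a, line_b):
    """Reflect each point across the line through line_a and line_b."""
    ax, ay = line_a
    dx, dy = line_b[0] - ax, line_b[1] - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        raise ValueError("the mirror reference line needs two distinct points")
    mirrored = []
    for x, y in pattern:
        # Project the point onto the line, then step the same distance beyond it.
        t = ((x - ax) * dx + (y - ay) * dy) / length_sq
        foot_x, foot_y = ax + t * dx, ay + t * dy
        mirrored.append((2 * foot_x - x, 2 * foot_y - y))
    return mirrored


pattern = [(1.0, 2.0), (3.0, 4.0)]
across_vertical = mirror(pattern, (0.0, 0.0), (0.0, 1.0))    # reference line x = 0
across_horizontal = mirror(pattern, (0.0, 0.0), (1.0, 0.0))  # reference line y = 0
```

The same pattern produces two different mirrored patterns, one per reference line.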

If the image transformation mode includes rotation transformation, the transformation processing information further includes the position of the rotation center point and/or the rotation angle of the rotation transformation. During rotation transformation, the pattern may be rotated about a reference center point, rotated directly by a preset angle on the basis of the original pattern, or rotated by a preset angle about a selected center point to obtain the target pattern. In actual image transformation, the specific rotation mode can be chosen according to actual requirements and is not specifically limited here.
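A minimal sketch of rotation with a configurable center point and angle, assuming a pattern is a list of (x, y) points and that positive angles rotate counterclockwise. The function name and the default center at the origin are assumptions made for illustration.

```python
import math


def rotate(pattern, angle_deg, center=(0.0, 0.0)):
    """Rotate every point by angle_deg (counterclockwise) about the center point."""
    a = math.radians(angle_deg)
    cx, cy = center
    return [
        (cx + (x - cx) * math.cos(a) - (y - cy) * math.sin(a),
         cy + (x - cx) * math.sin(a) + (y - cy) * math.cos(a))
        for x, y in pattern
    ]


pattern = [(2.0, 1.0)]
about_origin = rotate(pattern, 90.0)                    # approximately (-1, 2)
about_point = rotate(pattern, 90.0, center=(1.0, 1.0))  # approximately (1, 2)
```

The same angle gives different results for different center points, which is why both values belong to the transformation processing information.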

If the image transformation mode includes scaling transformation, the transformation processing information further includes a scaling ratio of the scaling transformation. The scaling ratio can be set as required, for example reducing the pattern to 20% of the original pattern or enlarging it to 120% of the original pattern. The specific scaling ratio can be chosen according to actual requirements and is not specifically limited here.

If the image transformation mode includes affine transformation, the transformation processing information further includes a translation direction and a displacement distance of the affine transformation. For example, when affine transformation is applied to a pattern, the translation direction in the transformation processing information may be the positive direction of the X coordinate axis. The direction and distance can be set according to actual requirements and are not specifically limited here.

Furthermore, it should be noted that the rotation transformation and the scaling transformation may also be implemented by affine transformation. Affine transformation can also implement transformation of translation direction and displacement distance. The affine transformation may also implement a combination transformation of a plurality of image transformations, such as rotation, scaling, translation, displacement, and the like, and which image transformations are specifically adopted may be selected according to actual requirements of a user, which is not specifically limited herein.
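To illustrate how one affine transformation can combine several image transformations, the sketch below uses 3 x 3 homogeneous matrices in plain Python. The helper names are hypothetical, the pattern is assumed to be a list of (x, y) points, and the values (20% scaling, 90 degree rotation, 5 units along the positive X axis) are example values only.

```python
import math


def matmul(a, b):
    """Multiply two 3 x 3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def translation(tx, ty):
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def scaling(ratio):
    return [[ratio, 0.0, 0.0], [0.0, ratio, 0.0], [0.0, 0.0, 1.0]]


def rotation(angle_deg):
    a = math.radians(angle_deg)
    return [[math.cos(a), -math.sin(a), 0.0],
            [math.sin(a), math.cos(a), 0.0],
            [0.0, 0.0, 1.0]]


def apply(matrix, pattern):
    """Apply an affine matrix to every (x, y) point of a pattern."""
    return [(matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
             matrix[1][0] * x + matrix[1][1] * y + matrix[1][2])
            for x, y in pattern]


# Scale to 20%, rotate by 90 degrees, then move 5 units along the positive X axis.
# The rightmost matrix is applied first.
combined = matmul(translation(5.0, 0.0), matmul(rotation(90.0), scaling(0.2)))
result = apply(combined, [(10.0, 0.0)])  # approximately [(5, 2)]
```

Because the matrices are multiplied once up front, the combined transformation costs the same per point as a single one.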

Different image transformation modes correspond to different transformation processing information, which makes it easier to obtain patterns that meet requirements during image processing.

How to acquire the intermediate pattern by the first and second modes will be described in detail below with reference to the determined image transformation mode.

Mode one: acquiring the intermediate pattern based on a reference pattern

When the intermediate pattern is acquired in this mode, the transformation processing information in the preset image transformation information includes at least reference transformation information and the number of transformations for the image transformation mode. The reference transformation information includes at least the image transformation mode used to generate the reference pattern and the corresponding reference transformation parameters, which indicate which image transformations are performed to obtain the reference pattern and how many times each is performed. For example: if the custom pattern undergoes 5 rotation transformations to obtain the reference pattern, the reference transformation parameter is 5 rotation transformations; if the custom pattern undergoes 1 mirror transformation and 1 rotation transformation and is then superposed with the custom pattern to obtain the reference pattern, the reference transformation parameters are 1 mirror transformation, 1 rotation transformation, and superposition with the custom pattern. Any parameter that indicates how the custom pattern is transformed into the reference pattern is a reference transformation parameter and is applicable to the present disclosure; no specific limitation is imposed here.

The custom pattern is transformed according to the reference transformation information to obtain the corresponding reference pattern. For example, the custom pattern in fig. 4 is copied, the copy is mirrored about the determined mirror reference line, and the result is superposed with the custom pattern to obtain reference pattern 1 shown in fig. 4. Here curve 1 is the custom pattern drawn by the user, and curve 2 is obtained by flipping curve 1 about the mirror reference line; the reference transformation information is mirror transformation, and the reference transformation parameters are 1 mirror transformation and superposition. As another example, the custom pattern in fig. 4 is copied, scaled by 20%, and rotated to obtain reference pattern 2. Here curve 1 is the custom pattern, and curve 3 is obtained by copying curve 1 and then scaling and rotating the copy; the reference transformation information is scaling transformation and rotation transformation, and the reference transformation parameters are 1 scaling transformation with a ratio of 20% and 1 rotation transformation. The reference pattern is then transformed according to the image transformation mode and the corresponding number of transformations to obtain the corresponding intermediate pattern.

Obtaining the intermediate pattern in this way is simple to operate and enriches the available pattern editing modes.
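The mirror-and-superimpose example for reference pattern 1 can be sketched as follows. This is only a minimal illustration under stated assumptions: a pattern is represented as a list of polylines, the mirror reference line is taken to be vertical, and the names `mirror_curve` and `make_reference_pattern` are hypothetical and do not appear in the disclosure.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Curve = List[Point]      # one polyline drawn by the user
Pattern = List[Curve]    # a pattern is a set of superimposed curves


def mirror_curve(curve: Curve, line_x: float) -> Curve:
    """Reflect a curve about the vertical mirror reference line x = line_x."""
    return [(2 * line_x - x, y) for x, y in curve]


def make_reference_pattern(custom: Curve, line_x: float) -> Pattern:
    """Copy the custom curve, mirror the copy once, and superimpose both.

    This corresponds to the reference transformation parameters
    "1 mirror transformation plus superimposition".
    """
    return [list(custom), mirror_curve(custom, line_x)]


# Curve 1 is the custom pattern; curve 2 is its mirror image.
curve_1 = [(0.0, 0.0), (1.0, 2.0), (0.0, 4.0)]
reference_pattern_1 = make_reference_pattern(curve_1, line_x=0.0)
```

Representing the reference pattern as a list of curves keeps the original and the mirrored copy separately addressable, which is convenient if later steps need to treat them as two intermediate patterns.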

Second, obtaining the intermediate pattern based on an initial transformation pattern

When the intermediate pattern is obtained in this manner, if the image transformation mode includes at least two of rotation transformation, scaling transformation, mirror transformation, or affine transformation, the transformation processing information further includes the transformation order between the different image transformation modes and an initial transformation pattern for each image transformation mode. The initial transformation pattern is either the custom pattern or an intermediate pattern obtained through a different image transformation mode. The transformation order is the order in which the image transformation modes are executed, and the initial transformation pattern is the pattern as it exists before a given image transformation mode is executed, so the initial transformation pattern differs from one point in the sequence to another. For reference pattern 1 in FIG. 4, the pattern before the mirror transformation, that is, the custom pattern, is the initial transformation pattern. For reference pattern 2 in FIG. 4, the pattern before the rotation transformation, that is, curve 1 scaled by 20%, is the initial transformation pattern (not shown in the figure; it is described briefly here only to aid understanding).

The corresponding initial transformation patterns are then transformed in the transformation order, using the corresponding image transformation mode and transformation processing information in turn, to obtain the corresponding intermediate patterns.

Obtaining the intermediate pattern in this way adds pattern editing modes and makes the generated target materials more diverse.
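The ordered application of several image transformation modes can be illustrated with a short sketch. It assumes that each step takes the output of the previous step as its initial transformation pattern, and that "scaled by 20%" means scaling to a factor of 0.2; both are assumptions, and the names `scale`, `rotate`, `MODES`, and `apply_in_order` are hypothetical.

```python
import math
from typing import Any, Dict, List, Tuple

Point = Tuple[float, float]
Curve = List[Point]


def scale(curve: Curve, factor: float, center: Point = (0.0, 0.0)) -> Curve:
    """Scale a curve about a center point."""
    cx, cy = center
    return [(cx + (x - cx) * factor, cy + (y - cy) * factor) for x, y in curve]


def rotate(curve: Curve, angle_deg: float, center: Point = (0.0, 0.0)) -> Curve:
    """Rotate a curve counterclockwise about a center point."""
    cx, cy = center
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    return [
        (cx + (x - cx) * cos_a - (y - cy) * sin_a,
         cy + (x - cx) * sin_a + (y - cy) * cos_a)
        for x, y in curve
    ]


MODES = {"scale": scale, "rotate": rotate}


def apply_in_order(custom: Curve, steps: List[Tuple[str, Dict[str, Any]]]) -> List[Curve]:
    """Apply each (mode, parameters) step in the given transformation order.

    The pattern fed into a step is that step's initial transformation
    pattern; the list of all intermediate results is returned.
    """
    initial = custom
    results = []
    for mode, params in steps:
        initial = MODES[mode](initial, **params)
        results.append(initial)
    return results


# Scale first, then rotate, as in the description of reference pattern 2.
intermediates = apply_in_order(
    [(10.0, 0.0)],
    [("scale", {"factor": 0.2}), ("rotate", {"angle_deg": 90.0})],
)
```

Swapping the two steps generally gives the same result only when both use the same center point, which is one reason the transformation order is recorded explicitly.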

The target material can be obtained from the intermediate patterns. In this case, the superimposition processing information includes a positional relationship parameter between any two intermediate patterns, which represents the positional relationship between them. As shown in FIG. 4, curve 1 and curve 2 can be regarded as two intermediate patterns, and their symmetric arrangement about the mirror reference line can be regarded as their positional relationship parameter. In practical applications, the positional relationship parameter may further include the relative angle change, displacement, and the like of the intermediate patterns.

The superimposition position of each intermediate pattern is set according to the positional relationship parameter, and the intermediate patterns are then superimposed at the corresponding superimposition positions to obtain the target material. For reference pattern 1 in FIG. 4, curve 1 and curve 2 are symmetric about the mirror reference line, and the three lines intersect at point A and point B. Reference pattern 1 as a whole is copied and the copy is rotated by a certain angle θ (for example, 36°) about point B as the rotation center; it is then copied again and that copy is rotated by 2θ, and so on. Repeating the copy and rotation 3 times yields the intermediate pattern shown in FIG. 5, and repeating it n times, where n × θ = 360° (exactly one full turn), yields target material 1 shown in FIG. 5. Target material 2 shown in FIG. 5 can be obtained by adjusting θ to 18°.

Note that because reference pattern 2 shown in FIG. 4 contains only a single intermediate pattern, no positional relationship parameter exists for it; target material 3 shown in FIG. 5 can be obtained by applying multiple rotation transformations to the image shown in FIG. 4.

Obtaining the target material in this way provides more diverse editing modes.
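The repeated copy-and-rotate step, with n × θ = 360°, can be sketched as below. This is an illustrative sketch only: the pattern representation and the names `rotate_curve` and `rotational_superposition` are hypothetical, and the rotation center stands in for point B.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Curve = List[Point]
Pattern = List[Curve]


def rotate_curve(curve: Curve, center: Point, angle_deg: float) -> Curve:
    """Rotate a curve counterclockwise about the given center."""
    cx, cy = center
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    return [
        (cx + (x - cx) * cos_a - (y - cy) * sin_a,
         cy + (x - cx) * sin_a + (y - cy) * cos_a)
        for x, y in curve
    ]


def rotational_superposition(pattern: Pattern, center: Point, theta_deg: float) -> Pattern:
    """Superimpose copies of the pattern rotated by 0, theta, 2*theta, ...

    k = 0 is the original pattern; the copy rotated by n * theta = 360
    degrees would coincide with it, so n distinct placements are kept.
    """
    if theta_deg <= 0:
        raise ValueError("theta_deg must be positive")
    n = round(360.0 / theta_deg)
    if not math.isclose(n * theta_deg, 360.0):
        raise ValueError("theta_deg must divide 360 degrees evenly")
    material: Pattern = []
    for k in range(n):
        for curve in pattern:
            material.append(rotate_curve(curve, center, k * theta_deg))
    return material


reference_pattern = [[(0.0, 0.0), (1.0, 2.0)], [(0.0, 0.0), (-1.0, 2.0)]]
material_1 = rotational_superposition(reference_pattern, (0.0, 0.0), 36.0)  # 10 placements
material_2 = rotational_superposition(reference_pattern, (0.0, 0.0), 18.0)  # 20 placements
```

Halving θ from 36° to 18° doubles the number of placements, which is why the second material is denser than the first.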

In one embodiment, the intermediate patterns that meet a preset condition can be selected and superimposed according to the superimposition processing information to obtain the target material. Note that the intermediate pattern produced from a user-drawn custom pattern by image transformation may not yield an attractive result. The material processing method can therefore pre-evaluate the intermediate pattern, prompt the user that certain parts of the intermediate pattern may be unfavorable for generating the target material, and suggest operations so that the user can select the intermediate patterns that meet the preset condition and generate the target material from them. For example, the intermediate pattern shown in FIG. 6 consists of curve 1 and curve 2. After analyzing the intermediate pattern, the terminal prompts: 1. curve 1 and curve 2 cannot be combined, and deleting curve 2 is suggested; 2. keeping the lines in the dashed box is suggested. The user can then select the operable part of the intermediate pattern according to the prompts to generate the target material.

Generating the target material in this way allows the intermediate pattern to be reprocessed based on user operations, making editing more flexible.
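The selection step can be sketched as a filter that also produces prompts for the user. The disclosure does not specify the preset condition, so the condition used here (every point lies inside a canvas) is purely an assumed placeholder, and the names `inside_canvas` and `select_and_report` are hypothetical.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Curve = List[Point]


def inside_canvas(curve: Curve, width: float = 100.0, height: float = 100.0) -> bool:
    """Assumed example condition: every point lies within the canvas."""
    return all(0 <= x <= width and 0 <= y <= height for x, y in curve)


def select_and_report(
    patterns: List[Curve], condition: Callable[[Curve], bool]
) -> Tuple[List[Curve], List[str]]:
    """Keep the patterns that meet the condition and build prompts for the rest."""
    kept: List[Curve] = []
    prompts: List[str] = []
    for index, curve in enumerate(patterns, start=1):
        if condition(curve):
            kept.append(curve)
        else:
            prompts.append(
                f"curve {index} does not meet the preset condition; consider deleting it"
            )
    return kept, prompts


candidates = [[(10.0, 10.0), (20.0, 20.0)], [(-5.0, 10.0), (20.0, 20.0)]]
kept, prompts = select_and_report(candidates, inside_canvas)
```

Passing the condition as a callable keeps the filter independent of whatever rule an implementation actually adopts.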

In one embodiment, the preset image transformation information can be acquired in response to a configuration operation performed on the drawing interface. The configuration can be performed through a toolbox or an input box. In the toolbox case, the drawing interface includes an image transformation tool set in which each image transformation tool corresponds to one image transformation, and each image transformation has corresponding configuration items; the terminal applies the image transformation to the copied custom pattern according to the user's configuration of the configuration items for the selected image transformation. As shown in FIG. 7, the image transformation tool set is displayed as a toolbar on the left side of the drawing interface, and the right side of the drawing interface is the drawing area. The drawing area in FIG. 7 displays a custom pattern and also contains a movable candidate graphics bar, from which the user can select a pattern according to preference to modify the pattern displayed in the drawing area. The toolbar includes a mirror transformation tool, a rotation transformation tool, a scaling transformation tool, and an affine transformation tool, which correspond to mirror transformation, rotation transformation, scaling transformation, and affine transformation, respectively. In the input box case, the configuration information is entered directly in the input box. As shown in FIG. 8, the image transformation information may be entered in the input box, for example: 1. perform a mirror transformation based on the suggested mirror reference line; 2. rotate by 30 degrees about the center point selected in the figure.

Acquiring the image transformation information in this way is more intuitive, makes operation easier, and helps improve the user experience.

In one embodiment, the multimedia resource may be acquired by an image acquisition device, and the drawing interface is displayed in a new layer created above the layer of the multimedia resource. Note that the custom material can be edited directly in image or video editing software, that is, edited on a layer of that software. FIG. 9 shows a video editing interface: by selecting a designated control, the user can pull down a drawing interface and edit material on it, and the custom-edited material can be saved in the corresponding software application after editing.

In addition, existing editable sticker materials in the image or video editing software can be re-edited through the drawing interface to obtain new materials, which makes user operation simpler and more convenient.

Referring to fig. 10, a schematic structural diagram of a material processing apparatus according to an embodiment of the present disclosure is shown, the apparatus including: a providing unit 101, a receiving unit 102, and an image transforming unit 103.

The providing unit 101 is configured to provide a drawing interface; the receiving unit 102 is configured to receive a drawing operation performed on the drawing interface and acquire the custom pattern corresponding to the drawing operation; and the image transformation unit 103 is configured to perform image transformation on the custom pattern according to preset image transformation information to obtain a corresponding target material, the target material being used to produce multimedia resources.

In one embodiment, the preset image transformation information at least comprises an image transformation mode, transformation processing information and superposition processing information;

the image transformation unit configured to perform:

performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern;

and superposing the intermediate patterns according to the superposition processing information to obtain the target material.

In one embodiment, the transformation processing information includes at least reference transformation information and the number of transformations of the image transformation mode; the reference transformation information includes at least the image transformation mode used to generate the reference pattern and the corresponding reference transformation parameters;

the image transformation unit is configured to perform:

transforming the custom pattern according to the reference transformation information to obtain a corresponding reference pattern;

and transforming the reference pattern according to the image transformation mode and the corresponding number of image transformations to obtain a corresponding intermediate pattern.

In one embodiment, the superimposition processing information includes a positional relationship parameter between any two of the intermediate patterns, and the image conversion unit is configured to perform:

setting a superposition position corresponding to the intermediate pattern according to the position relation parameter;

and superposing the intermediate patterns according to corresponding superposition positions to obtain the target material.

In one embodiment, the image transformation mode comprises at least one of rotation transformation, scaling transformation, mirror transformation, or affine transformation;

if the image transformation mode comprises mirror transformation, the transformation processing information further comprises a mirror reference line of the mirror transformation;

if the image transformation mode comprises rotation transformation, the transformation processing information further comprises the position of the rotation center point and/or the rotation angle of the rotation transformation;

if the image transformation mode comprises scaling transformation, the transformation processing information further comprises a scaling ratio of the scaling transformation;

if the image transformation mode comprises affine transformation, the transformation processing information further comprises the translation direction and the displacement distance of the affine transformation.
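The per-mode parameters listed above can be checked with a small validation sketch. It reads the mirror reference line as belonging to the mirror transformation, and it treats "position and/or rotation angle" as requiring at least one of the two. The dictionary keys (`reference_line`, `center`, `angle_deg`, `ratio`, `direction`, `distance`) and the function name `missing_parameters` are hypothetical.

```python
from typing import Any, Dict, List


def missing_parameters(mode: str, info: Dict[str, Any]) -> List[str]:
    """Return the names of required parameters absent from the processing info."""
    if mode == "mirror":
        return [] if "reference_line" in info else ["reference_line"]
    if mode == "rotation":
        # "position and/or rotation angle": at least one must be present.
        if "center" in info or "angle_deg" in info:
            return []
        return ["center or angle_deg"]
    if mode == "scaling":
        return [] if "ratio" in info else ["ratio"]
    if mode == "affine":
        return [key for key in ("direction", "distance") if key not in info]
    raise ValueError(f"unknown image transformation mode: {mode}")


rotation_ok = missing_parameters("rotation", {"angle_deg": 36})
affine_gap = missing_parameters("affine", {"direction": (1, 0)})
```

Returning the missing names, rather than a boolean, lets a drawing interface tell the user which configuration item still needs a value.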

In one embodiment, if the image transformation manner includes any at least two of rotation transformation, scaling transformation, mirror transformation, or affine transformation, the transformation processing information further includes transformation orders between different image transformation manners and an initial transformation pattern for each image transformation manner, where the initial transformation pattern includes the custom pattern or an intermediate pattern transformed by other different image transformation manners;

the image transformation unit is configured to perform: transforming the corresponding initial transformation patterns in the transformation order, using the corresponding image transformation mode and transformation processing information in turn, to obtain the corresponding intermediate patterns.

In one embodiment, the image transformation unit is configured to perform: and selecting the intermediate patterns meeting preset conditions, and superposing according to the superposition processing information to obtain the target material.

In one embodiment, the apparatus further comprises:

an acquisition unit configured to acquire the preset image transformation information in response to a configuration operation performed on the drawing interface.

Having described the material processing method and apparatus in the exemplary embodiments of the present disclosure, an electronic device of another exemplary embodiment of the present disclosure is next described.

As will be appreciated by one skilled in the art, aspects of the present disclosure may be embodied as a system, method or program product. Accordingly, various aspects of the present disclosure may be embodied in the form of: an entirely hardware embodiment, an entirely software embodiment (including firmware, microcode, etc.) or an embodiment combining hardware and software aspects that may all generally be referred to herein as a "circuit," "module," or "system."

In some possible implementations, an electronic device in accordance with the present disclosure may include at least one processor and at least one memory. The memory stores program code which, when executed by the processor, causes the processor to perform the steps of the material processing methods according to the various exemplary embodiments of the present disclosure described above in this specification. For example, the processor may perform steps 101-103 as shown in FIG. 1.

The electronic device 130 according to this embodiment of the present disclosure is described below with reference to fig. 11. The electronic device 130 shown in fig. 11 is only an example, and should not bring any limitation to the functions and the scope of use of the embodiments of the present disclosure.

As shown in fig. 11, the electronic device 130 is embodied in the form of a general purpose computing apparatus. The components of the electronic device 130 may include, but are not limited to: the at least one processor 131, the at least one memory 132, and a bus 133 that connects the various system components (including the memory 132 and the processor 131).

The memory 132 may include readable media in the form of volatile memory, such as Random Access Memory (RAM) 1321 and/or cache memory 1322, and may further include Read Only Memory (ROM) 1323.

The electronic device 130 may also communicate with one or more external devices 134 (e.g., keyboard, pointing device, etc.), with one or more devices that enable target objects to interact with the electronic device 130, and/or with any devices (e.g., router, modem, etc.) that enable the electronic device 130 to communicate with one or more other computing devices. Such communication may occur via input/output (I/O) interfaces 135. Also, the electronic device 130 may communicate with one or more networks (e.g., a Local Area Network (LAN), a Wide Area Network (WAN), and/or a public network, such as the internet) via the network adapter 136. As shown, network adapter 136 communicates with other modules for electronic device 130 over bus 133. It should be understood that although not shown in the figures, other hardware and/or software modules may be used in conjunction with electronic device 130, including but not limited to: microcode, device drivers, redundant processors, external disk drive arrays, RAID systems, tape drives, and data backup storage systems, among others.

In some possible embodiments, the various aspects of the material display method provided by the present disclosure may also be implemented in the form of a program product including program code for causing a computer device to perform the steps in the material processing method according to various exemplary embodiments of the present disclosure described above in this specification when the program product is run on the computer device, for example, the computer device may perform the steps 101-104 as shown in fig. 1.

The program product may employ any combination of one or more readable media. The readable medium may be a readable signal medium or a readable storage medium. A readable storage medium may be, for example, but not limited to, an electronic, magnetic, optical, electromagnetic, infrared, or semiconductor system, apparatus, or device, or any combination of the foregoing. More specific examples (a non-exhaustive list) of the readable storage medium include: an electrical connection having one or more wires, a portable disk, a hard disk, a Random Access Memory (RAM), a read-only memory (ROM), an erasable programmable read-only memory (EPROM or flash memory), an optical fiber, a portable compact disc read-only memory (CD-ROM), an optical storage device, a magnetic storage device, or any suitable combination of the foregoing.

The program product for material display of the embodiments of the present disclosure may employ a portable compact disc read only memory (CD-ROM) and include program code, and may be run on a computing device. However, the program product of the present disclosure is not limited thereto, and in this document, a readable storage medium may be any tangible medium that can contain, or store a program for use by or in connection with an instruction execution system, apparatus, or device.

A readable signal medium may include a propagated data signal with readable program code embodied therein, for example, in baseband or as part of a carrier wave. Such a propagated data signal may take any of a variety of forms, including, but not limited to, electro-magnetic, optical, or any suitable combination thereof. A readable signal medium may also be any readable medium that is not a readable storage medium and that can communicate, propagate, or transport a program for use by or in connection with an instruction execution system, apparatus, or device.

Program code embodied on a readable medium may be transmitted using any appropriate medium, including but not limited to wireless, wireline, optical fiber cable, RF, etc., or any suitable combination of the foregoing.

Program code for carrying out operations for the present disclosure may be written in any combination of one or more programming languages, including an object oriented programming language such as Java, C++ or the like and conventional procedural programming languages, such as the "C" programming language or similar programming languages. The program code may execute entirely on the target object computing device, partly on the target object apparatus, as a stand-alone software package, partly on the target object computing device and partly on a remote computing device, or entirely on the remote computing device or server. In the case of a remote computing device, the remote computing device may be connected to the target object electronic equipment through any kind of network, including a Local Area Network (LAN) or a Wide Area Network (WAN), or may be connected to external electronic equipment (e.g., through the internet using an internet service provider).

It should be noted that although several units or sub-units of the apparatus are mentioned in the above detailed description, such division is merely exemplary and not mandatory. Indeed, the features and functions of two or more units described above may be embodied in one unit, in accordance with embodiments of the present disclosure. Conversely, the features and functions of one unit described above may be further divided so as to be embodied by a plurality of units.

Further, while the operations of the disclosed methods are depicted in the drawings in a particular order, this does not require or imply that these operations must be performed in the particular order shown, or that all of the illustrated operations must be performed, to achieve desirable results. Additionally or alternatively, certain steps may be omitted, multiple steps combined into one step execution, and/or one step broken down into multiple step executions.

As will be appreciated by one skilled in the art, embodiments of the present disclosure may be provided as a method, system, or computer program product. Accordingly, the present disclosure may take the form of an entirely hardware embodiment, an entirely software embodiment or an embodiment combining software and hardware aspects. Furthermore, the present disclosure may take the form of a computer program product embodied on one or more computer-usable storage media (including, but not limited to, disk storage, CD-ROM, optical storage, and so forth) having computer-usable program code embodied therein.

The present disclosure is described with reference to flowchart illustrations and/or block diagrams of methods, apparatus (systems), and computer program products according to embodiments of the disclosure. It will be understood that each flow and/or block of the flow diagrams and/or block diagrams, and combinations of flows and/or blocks in the flow diagrams and/or block diagrams, can be implemented by computer program instructions. These computer program instructions may be provided to a processor of a general purpose computer, special purpose computer, embedded processor, or other programmable data processing apparatus to produce a machine, such that the instructions, which execute via the processor of the computer or other programmable data processing apparatus, create means for implementing the functions specified in the flowchart flow or flows and/or block diagram block or blocks.

These computer program instructions may also be stored in a computer-readable memory that can direct a computer or other programmable data processing apparatus to function in a particular manner, such that the instructions stored in the computer-readable memory produce an article of manufacture including instruction means which implement the function specified in the flowchart flow or flows and/or block diagram block or blocks.

These computer program instructions may also be loaded onto a computer or other programmable data processing apparatus to cause a series of operational steps to be performed on the computer or other programmable apparatus to produce a computer implemented process such that the instructions which execute on the computer or other programmable apparatus provide steps for implementing the functions specified in the flowchart flow or flows and/or block diagram block or blocks.

Other embodiments of the disclosure will be apparent to those skilled in the art from consideration of the specification and practice of the disclosure disclosed herein. This disclosure is intended to cover any variations, uses, or adaptations of the disclosure following, in general, the principles of the disclosure and including such departures from the present disclosure as come within known or customary practice within the art to which the disclosure pertains. It is intended that the specification and examples be considered as exemplary only, with a true scope and spirit of the disclosure being indicated by the following claims.

It will be understood that the present disclosure is not limited to the precise arrangements described above and shown in the drawings and that various modifications and changes may be made without departing from the scope thereof. The scope of the present disclosure is limited only by the appended claims.

Claims (10)

1. A method for processing material, comprising:

providing a drawing interface;

receiving a drawing operation performed on the drawing interface, and acquiring a custom pattern corresponding to the drawing operation;

and performing image transformation on the custom pattern according to preset image transformation information to obtain a corresponding target material, wherein the target material is used to produce multimedia resources.

2. The method according to claim 1, wherein the preset image transformation information at least comprises an image transformation mode, transformation processing information and superimposition processing information;

wherein performing image transformation on the custom pattern according to the preset image transformation information to obtain the corresponding target material comprises:

performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern;

and superposing the intermediate patterns according to the superposition processing information to obtain the target material.

3. The method according to claim 2, wherein the transformation processing information includes at least reference transformation information and a number of transformations of the image transformation mode; the reference transformation information includes at least the image transformation mode used to generate the reference pattern and a corresponding reference transformation parameter;

wherein performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern comprises:

transforming the custom pattern according to the reference transformation information to obtain a corresponding reference pattern;

and transforming the reference pattern according to the image transformation mode and the corresponding number of image transformations to obtain a corresponding intermediate pattern.

4. The method according to claim 2, wherein the superimposition processing information includes a positional relationship parameter between any two of the intermediate patterns, and the superimposing the intermediate patterns according to the superimposition processing information to obtain the target material includes:

setting a superposition position corresponding to the intermediate pattern according to the position relation parameter;

and superposing the intermediate patterns according to corresponding superposition positions to obtain the target material.

5. The method of claim 2, wherein the image transformation mode comprises at least one of a rotation transformation, a scaling transformation, a mirror transformation, or an affine transformation;

if the image transformation mode comprises mirror transformation, the transformation processing information further comprises a mirror reference line of the mirror transformation;

if the image transformation mode comprises rotation transformation, the transformation processing information further comprises the position of the rotation center point and/or the rotation angle of the rotation transformation;

if the image transformation mode comprises scaling transformation, the transformation processing information further comprises a scaling ratio of the scaling transformation;

if the image transformation mode comprises affine transformation, the transformation processing information further comprises the translation direction and the displacement distance of the affine transformation.

6. The method according to claim 2, wherein if the image transformation mode includes at least two of a rotation transformation, a scaling transformation, a mirror transformation, or an affine transformation, the transformation processing information further includes a transformation order between different image transformation modes and an initial transformation pattern for each image transformation mode, the initial transformation pattern including the custom pattern or an intermediate pattern obtained through a different image transformation mode;

wherein performing image transformation on the custom pattern according to the image transformation mode and the transformation processing information to obtain an intermediate pattern comprises:

transforming the corresponding initial transformation patterns in the transformation order, using the corresponding image transformation mode and transformation processing information in turn, to obtain the corresponding intermediate patterns.

7. The method of claim 2, further comprising:

selecting the intermediate patterns that meet a preset condition, and superimposing them according to the superimposition processing information to obtain the target material.

8. A material processing apparatus, comprising:

a providing unit configured to provide a drawing interface;

a receiving unit configured to receive a drawing operation performed on the drawing interface and acquire a custom pattern corresponding to the drawing operation;

and an image transformation unit configured to perform image transformation on the custom pattern according to preset image transformation information to obtain a corresponding target material, wherein the target material is used to produce multimedia resources.

9. An electronic device, comprising: at least one processor; and a memory communicatively coupled to the at least one processor; wherein the memory stores instructions executable by the at least one processor to enable the at least one processor to perform the method of any one of claims 1-7.

10. A computer storage medium having computer-executable instructions stored thereon for performing the method of any one of claims 1-7.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201911016784.6A CN110796712A (en) | 2019-10-24 | 2019-10-24 | Material processing method, device, electronic equipment and storage medium |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201911016784.6A CN110796712A (en) | 2019-10-24 | 2019-10-24 | Material processing method, device, electronic equipment and storage medium |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN110796712A (en) | 2020-02-14 |

Family

ID=69441157

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN201911016784.6A Pending CN110796712A (en) | Material processing method, device, electronic equipment and storage medium | 2019-10-24 | 2019-10-24 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN110796712A (en) |

Cited By (8)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112083865A (en) * | 2020-09-24 | 2020-12-15 | Oppo广东移动通信有限公司 | Image generation method and device, terminal and readable storage medium |
| CN112634404A (en) * | 2020-06-28 | 2021-04-09 | 西安诺瓦星云科技股份有限公司 | Layer fusion method, device and system |
| CN112822544A (en) * | 2020-12-31 | 2021-05-18 | 广州酷狗计算机科技有限公司 | Video material file generation method, video synthesis method, device and medium |
| CN112843723A (en) * | 2021-02-03 | 2021-05-28 | 北京字跳网络技术有限公司 | Interaction method, interaction device, electronic equipment and storage medium |
| CN113345054A (en) * | 2021-05-28 | 2021-09-03 | 上海哔哩哔哩科技有限公司 | Virtual image decorating method, detection method and device |
| CN113722025A (en) * | 2020-05-26 | 2021-11-30 | Oppo(重庆)智能科技有限公司 | Dial pattern generation method and device, watch, electronic device, and computer-readable storage medium |
| CN113806306A (en) * | 2021-08-04 | 2021-12-17 | 北京字跳网络技术有限公司 | Media file processing method, device, equipment, readable storage medium and product |
| CN117216312A (en) * | 2023-11-06 | 2023-12-12 | 长沙探月科技有限公司 | Method and device for generating questioning material, electronic equipment and storage medium |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20090060391A1 (en) * | 2007-09-04 | 2009-03-05 | Fuji Xerox Co., Ltd. | Image processing apparatus, image processing method and computer-readable medium |
| CN101584210A (en) * | 2007-12-07 | 2009-11-18 | 索尼株式会社 | Image processing device, dynamic image reproduction device, and processing method and program used in the devices |
| CN102194215A (en) * | 2010-03-19 | 2011-09-21 | 索尼公司 | Image processing apparatus, method and program |
| CN106815799A (en) * | 2016-12-19 | 2017-06-09 | 浙江画之都文化创意股份有限公司 | A kind of self adaptation artistic pattern forming method based on chaology |
| CN108241645A (en) * | 2016-12-23 | 2018-07-03 | 腾讯科技(深圳)有限公司 | Image processing method and device |

2019

- 2019-10-24 CN CN201911016784.6A patent/CN110796712A/en active Pending

Cited By (14)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN113722025A (en) * | 2020-05-26 | 2021-11-30 | Oppo(重庆)智能科技有限公司 | Dial pattern generation method and device, watch, electronic device, and computer-readable storage medium |
| CN113722025B (en) * | 2020-05-26 | 2024-10-29 | Oppo(重庆)智能科技有限公司 | Dial pattern generation method and device, watch, electronic device, and computer-readable storage medium |
| CN112634404A (en) * | 2020-06-28 | 2021-04-09 | 西安诺瓦星云科技股份有限公司 | Layer fusion method, device and system |
| CN112083865A (en) * | 2020-09-24 | 2020-12-15 | Oppo广东移动通信有限公司 | Image generation method and device, terminal and readable storage medium |
| CN115167735A (en) * | 2020-09-24 | 2022-10-11 | Oppo广东移动通信有限公司 | Image generation method and device, terminal and readable storage medium |
| CN112822544A (en) * | 2020-12-31 | 2021-05-18 | 广州酷狗计算机科技有限公司 | Video material file generation method, video synthesis method, device and medium |
| CN112822544B (en) * | 2020-12-31 | 2023-10-20 | 广州酷狗计算机科技有限公司 | Video material file generation method, video synthesis method, device and medium |
| CN112843723B (en) * | 2021-02-03 | 2024-01-16 | 北京字跳网络技术有限公司 | Interaction method, interaction device, electronic equipment and storage medium |
| CN112843723A (en) * | 2021-02-03 | 2021-05-28 | 北京字跳网络技术有限公司 | Interaction method, interaction device, electronic equipment and storage medium |
| CN113345054A (en) * | 2021-05-28 | 2021-09-03 | 上海哔哩哔哩科技有限公司 | Virtual image decorating method, detection method and device |
| CN113806306A (en) * | 2021-08-04 | 2021-12-17 | 北京字跳网络技术有限公司 | Media file processing method, device, equipment, readable storage medium and product |
| CN113806306B (en) * | 2021-08-04 | 2024-01-16 | 北京字跳网络技术有限公司 | Media file processing method, device, equipment, readable storage medium and product |
| CN117216312B (en) * | 2023-11-06 | 2024-01-26 | 长沙探月科技有限公司 | Method and device for generating questioning material, electronic equipment and storage medium |
| CN117216312A (en) * | 2023-11-06 | 2023-12-12 | 长沙探月科技有限公司 | Method and device for generating questioning material, electronic equipment and storage medium |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN110796712A (en) | | Material processing method, device, electronic equipment and storage medium |
| JP2024505995A (en) | | Special effects exhibition methods, devices, equipment and media |
| CN106909385A (en) | | The operating method of visual page editing machine and visual page editing machine |
| CN106303723A (en) | | Method for processing video frequency and device |
| US20170316091A1 (en) | | Authoring tools for synthesizing hybrid slide-canvas presentations |
| US20150121189A1 (en) | | Systems and Methods for Creating and Displaying Multi-Slide Presentations |
| US10649618B2 (en) | | System and method for creating visual representation of data based on generated glyphs |
| CN110503581B (en) | | Unity 3D-based visual training system |
| US9159168B2 (en) | | Methods and systems for generating a dynamic multimodal and multidimensional presentation |
| CA2963850A1 (en) | | Systems and methods for creating and displaying multi-slide presentations |
| EP3680861A1 (en) | | System for parametric generation of custom scalable animated characters on the web |
| EP4273808A1 (en) | | Method and apparatus for publishing video, device, and medium |
| US20190227701A1 (en) | | Facilitating the prototyping and previewing of design element state transitions in a graphical design environment |
| CN109343924A (en) | | Activiti flow chart redraws method, apparatus, computer equipment and storage medium processed |
| US20160291694A1 (en) | | Haptic authoring tool for animated haptic media production |
| CN110286971A (en) | | Processing method and system, medium and calculating equipment |
| Schwab et al. | | Scalable scalable vector graphics: Automatic translation of interactive svgs to a multithread vdom for fast rendering |
| CN113191184A (en) | | Real-time video processing method and device, electronic equipment and storage medium |
| KR102268013B1 (en) | | Method, apparatus and computer readable recording medium of providing authoring platform for authoring augmented reality contents |
| US10685470B2 (en) | | Generating and providing composition effect tutorials for creating and editing digital content |
| JP2019532385A (en) | | System for configuring or modifying a virtual reality sequence, configuration method, and system for reading the sequence |
| US20170060601A1 (en) | | Method and system for interactive user workflows |
| US11068145B2 (en) | | Techniques for creative review of 3D content in a production environment |
| CN113126863B (en) | | Object selection implementation method and device, storage medium and electronic equipment |
| CN111782309B (en) | | Method and device for displaying information and computer readable storage medium |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |