Early Rumor Detection Using Neural Hawkes Process with a New Benchmark Dataset (Accepted at NAACL 2022)

Note

This is the official repository of HEARD, an EArly Rumor Detection (EARD) model, and BEARD, a benchmark dataset for early rumor detection.

- BEARD is the first EARD-oriented dataset. It was built by collecting early-stage information relevant to claims from the fact-checking website Snopes, and contains 1,198 rumors and non-rumors reported between March 2015 and January 2021, with around 3.3 million relevant posts.

- HEARD is a novel EARD model based on the Neural Hawkes Process that automatically determines an optimal time point for stable early detection.

To install requirements:

pip install -r requirements.txt

To train HEARD with default hyperparameters, run this command:

python -u Main.py

After training, the script automatically reports accuracy, precision, recall, early rate (ER) and SEA.

You can also set the hyperparameters and data directory in config.json to train on your own dataset with custom hyperparameters.

By setting evaluate_only in config.json to true, you can run the same command, python -u Main.py, to test a trained model without training.
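If you prefer to flip the flag from a script, a minimal sketch might look like the following. It assumes config.json is a plain JSON object and touches only the evaluate_only key; the helper name set_evaluate_only is hypothetical and not part of this repository.

```python
import json
from pathlib import Path


def set_evaluate_only(config_path, value=True):
    """Set the evaluate_only flag in a JSON config file, leaving other keys unchanged.

    Hypothetical helper: it assumes the file holds a single JSON object.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    # Only this key is assumed to exist; key order of the rest is preserved.
    config["evaluate_only"] = value
    # Note: the file is rewritten with 4-space indentation.
    path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return config
```

For example, call set_evaluate_only("config.json", True) and then run python -u Main.py as usual.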

| Dataset | Class | #instance | #post | #AvgLen (hrs) |
|---|---|---|---|---|
|  | rumor | 498 | 182,499 | 2,538 |
|  | non-rumor | 494 | 48,064 | 1,456 |
| PHEME | rumor | 1,972 | 31,230 | 10 |
|  | non-rumor | 3,830 | 71,210 | 19 |
| BEARD | rumor | 531 | 2,644,807 | 1,432 |
|  | non-rumor | 667 | 657,925 | 1,683 |

HEARD achieves the following performance on general classification metrics (accuracy and F1 score) and EARD-specific metrics (early rate and SEA):

| Dataset | Acc | F1 | ER | SEA |
|---|---|---|---|---|
|  | 0.716 | 0.714 | 0.348 | 0.789 |
| PHEME | 0.823 | 0.805 | 0.284 | 0.841 |
| BEARD | 0.789 | 0.788 | 0.490 | 0.765 |

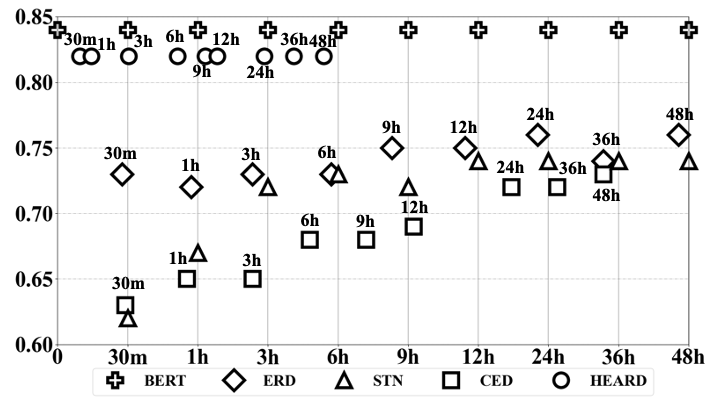

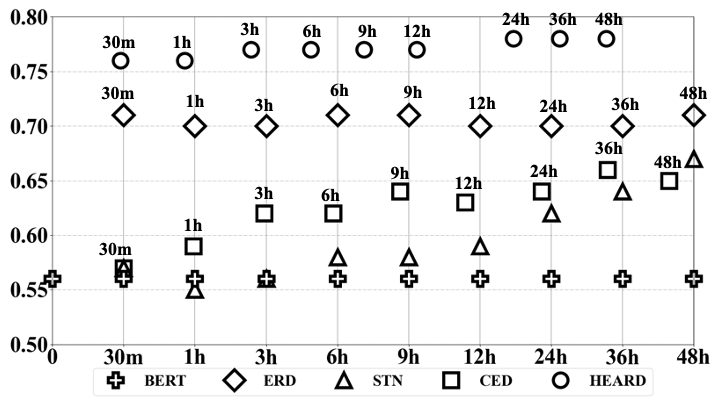

Results of Early Detection Accuracy Over Time:

This section aims to help users obtain the public BEARD dataset, which consists of claims, queries and relevant tweets (in the form of tweet IDs), and prepare the input data for our HEARD model.

Relevant files can be found in BEARD.zip.

This file provides the claim information and the search queries we used for Twitter search to gather the dataset. Each key-value pair maps an instance id to its claim content, search queries and claim publish time. The data format is:

{

"instance id": {"claim": claim, "seed query":[sub_query,sub_query...],"time":time }

}

Rumor instance ids start with "S", while non-rumor instance ids start with "N".

This file contains the tweet ids for all 1,198 instances. The data format is as follows:
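Because the label is encoded in the id prefix, it can be recovered directly from the keys of the claim file. The sketch below is illustrative: the function names are hypothetical, and it returns "1"/"0" strings to match the label convention of the HEARD input format described later in this section.

```python
def label_from_instance_id(instance_id):
    """Return "1" for a rumor id (prefix "S") and "0" for a non-rumor id (prefix "N")."""
    if instance_id.startswith("S"):
        return "1"
    if instance_id.startswith("N"):
        return "0"
    raise ValueError(f"unexpected instance id prefix: {instance_id!r}")


def split_by_label(claims):
    """Split a dict keyed by instance id into sorted rumor and non-rumor id lists."""
    rumors = sorted(i for i in claims if label_from_instance_id(i) == "1")
    non_rumors = sorted(i for i in claims if label_from_instance_id(i) == "0")
    return rumors, non_rumors
```

In practice you would pass the dictionary obtained with json.load on the claim file from BEARD.zip.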

{

"instance id": {"eid": instance id, "post_ids":[id,id...] }

}

Note that we cannot release the specific content of tweets due to the terms of use of Twitter data. Users can download the content via the Twitter API or by following this blog.
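Before hydrating the tweets, it can help to gather every post id from the tweet-id file and split the ids into request-sized batches. The sketch below assumes a lookup endpoint that accepts at most 100 ids per request, as the Twitter API v2 tweet lookup does; it makes no API calls, and the helper names are hypothetical.

```python
def collect_post_ids(tweet_id_data):
    """Gather unique post ids across all instances, keeping first-seen order."""
    seen = {}
    for instance in tweet_id_data.values():
        for post_id in instance["post_ids"]:
            # Store ids as strings so large numeric ids are never altered.
            seen.setdefault(str(post_id), None)
    return list(seen)


def chunk_ids(ids, size=100):
    """Yield consecutive lists of at most `size` ids, one list per lookup request."""
    for start in range(0, len(ids), size):
        yield ids[start:start + size]
```

Each yielded batch can then be sent to whichever hydration method you use.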

After obtaining the tweet content, users need to prepare the input data for the HEARD model. Users can follow BERTweet [code] to pre-process the text and this example to generate tf-idf vectors. Alternatively, users can refer to our example code in data_process.py.

After the above steps, each input instance fed into the HEARD model should have the following format:
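For readers who want a dependency-free picture of the tf-idf step, here is a simplified sketch. It is a stand-in, not the linked example or data_process.py: it treats the concatenated text of one interval as one document, uses a crude regex tokenizer instead of BERTweet normalization, keeps the 1,000 most frequent terms, and applies the smoothed idf log((1 + n) / (1 + df)) + 1 without L2 normalization. The function names are hypothetical; scikit-learn's TfidfVectorizer with max_features=1000 is the more common choice in practice.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and keep alphanumeric runs (a crude stand-in for BERTweet pre-processing)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_vectors(interval_texts, dim=1000):
    """Return one tf-idf vector of length `dim` per interval text.

    The vocabulary is the `dim` terms with the highest total count (ties broken
    alphabetically); vectors are zero-padded when the vocabulary is smaller.
    """
    docs = [Counter(tokenize(text)) for text in interval_texts]
    totals = Counter()
    for doc in docs:
        totals.update(doc)
    vocab = sorted(totals, key=lambda term: (-totals[term], term))[:dim]
    index = {term: i for i, term in enumerate(vocab)}
    n_docs = len(docs)
    # Document frequency: number of intervals that contain each term.
    df = Counter(term for doc in docs for term in doc)
    vectors = []
    for doc in docs:
        vec = [0.0] * dim
        length = sum(doc.values()) or 1  # avoid division by zero for empty intervals
        for term, count in doc.items():
            if term in index:
                idf = math.log((1 + n_docs) / (1 + df[term])) + 1  # smoothed idf
                vec[index[term]] = (count / length) * idf
        vectors.append(vec)
    return vectors
```

The resulting list has one row per interval, which is the shape expected for merge_vecs below.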

{

"eid": {

"label": "1", # 1 for rumor, 0 for non-rumor

"merge_seqs": {

"merge_times": [[timestamp,timestamp,...], [timestamp,timestamp,...], ...],

"merge_tids": [[post_id,post_id,...], [post_id,post_id,...], ...],

"merge_vecs": [[...], [...], ...], # a tf-idf vector of length 1000 for each interval, so merge_vecs has shape [num of intervals, 1000]

}}

...

}
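A quick structural check can catch formatting mistakes before training. The sketch below is hypothetical (validate_instance is not part of this repository); beyond the documented format, it assumes that within each interval the timestamps and post ids are aligned one-to-one.

```python
def validate_instance(eid, instance, dim=1000):
    """Check one HEARD input instance against the documented format.

    Returns the number of intervals; raises ValueError on the first mismatch.
    """
    if instance["label"] not in ("0", "1"):
        raise ValueError(f"{eid}: label must be '1' (rumor) or '0' (non-rumor)")
    seqs = instance["merge_seqs"]
    times, tids, vecs = seqs["merge_times"], seqs["merge_tids"], seqs["merge_vecs"]
    if not (len(times) == len(tids) == len(vecs)):
        raise ValueError(f"{eid}: merge_times, merge_tids and merge_vecs need one entry per interval")
    for i, (interval_times, interval_tids, vec) in enumerate(zip(times, tids, vecs)):
        # Assumption: one timestamp per post id within each interval.
        if len(interval_times) != len(interval_tids):
            raise ValueError(f"{eid}: interval {i} has mismatched timestamps and post ids")
        if len(vec) != dim:
            raise ValueError(f"{eid}: interval {i} vector has length {len(vec)}, expected {dim}")
    return len(vecs)
```

Looping over the loaded input dictionary and calling validate_instance(eid, instance) for each entry reports the first malformed instance.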

This file contains the tree structure of each conversation of each instance. The data format is as follows:

{

"instance id": {"tree": {root1 id: {reply1 id: {...}, reply2 id: {...}, ...}, ...}, "parent_mappings": {root1 id: {child1 id: parent1 id, ...}, ...}}

}

An instance may consist of multiple trees, each representing one conversation made up of a root post and its replies. "parent_mappings" maps each child post to its parent post, i.e., the post it replies to.
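To illustrate how the nested "tree" and the flat "parent_mappings" relate, the following sketch rebuilds a child-to-parent mapping per root from the nested tree. It is an illustration under assumptions, not the code used to build the release: the function name is hypothetical, and the released mappings may differ in details such as whether a root post maps to itself.

```python
def parent_mappings_from_tree(tree):
    """Rebuild {root id: {child id: parent id}} from a nested {root id: {reply id: {...}}} tree."""
    mappings = {}
    for root_id, replies in tree.items():
        child_to_parent = {}
        # Iterative depth-first walk, so deep reply chains do not hit the recursion limit.
        stack = [(root_id, replies)]
        while stack:
            parent_id, children = stack.pop()
            for child_id, grandchildren in children.items():
                child_to_parent[child_id] = parent_id
                stack.append((child_id, grandchildren))
        mappings[root_id] = child_to_parent
    return mappings
```

For a tree {"r": {"a": {"c": {}}, "b": {}}}, posts "a" and "b" map to "r", and "c" maps to "a".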

This file contains the timestamp of each post. The data format is as follows:

{

"instance id": {post id: timestamp}

}
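These timestamps make it possible to order posts and restrict an instance to its early stage. The sketch below assumes timestamps are Unix epoch seconds, which the format above does not specify, so convert first if your copy uses another representation. Both helper names are hypothetical.

```python
def posts_within(timestamps, hours, start=None):
    """Return post ids in chronological order that fall within `hours` of `start`.

    `timestamps` maps post id -> Unix time in seconds (an assumption).
    `start` defaults to the earliest post of the instance.
    """
    ordered = sorted(timestamps.items(), key=lambda item: item[1])
    if not ordered:
        return []
    origin = ordered[0][1] if start is None else start
    cutoff = origin + hours * 3600
    return [post_id for post_id, ts in ordered if origin <= ts <= cutoff]


def span_hours(timestamps):
    """Time between the earliest and latest post of an instance, in hours."""
    values = list(timestamps.values())
    return (max(values) - min(values)) / 3600 if values else 0.0
```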

This folder contains the BERT embeddings of each post. The folder structure is as follows:

- BEARD_emb
  - instance id
    - post_id1.json
    - post_id2.json
    - ...

The data format of each post_id.json file is as follows:

{

"pooler_output":pooler_output

}
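Given this layout, the embeddings of one instance can be loaded into a dictionary keyed by post id. This is a minimal sketch: the function name is hypothetical, and it assumes pooler_output is stored as a JSON list of floats and that each file name (without .json) is the post id.

```python
import json
from pathlib import Path


def load_instance_embeddings(emb_root, instance_id):
    """Load {post id: pooler_output} from <emb_root>/<instance id>/<post id>.json files."""
    folder = Path(emb_root) / instance_id
    embeddings = {}
    for path in sorted(folder.glob("*.json")):
        with path.open(encoding="utf-8") as f:
            # path.stem drops the ".json" suffix, leaving the post id.
            embeddings[path.stem] = json.load(f)["pooler_output"]
    return embeddings
```

For example, load_instance_embeddings("BEARD_emb", instance_id) returns the vectors for every post of that instance.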

If you use this code in your research, please cite our paper.

@inproceedings{zeng-gao-2022-early,

title = "{E}arly Rumor Detection Using Neural {H}awkes Process with a New Benchmark Dataset",

author = "Zeng, Fengzhu and

Gao, Wei",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jul,

year = "2022",

address = "Seattle, United St