Created by Charles R. Qi, Li (Eric) Yi, Hao Su, Leonidas J. Guibas from Stanford University.

If you find our work useful in your research, please consider citing:

@article{qi2017pointnetplusplus,
  title={PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space},
  author={Qi, Charles R and Yi, Li and Su, Hao and Guibas, Leonidas J},
  journal={arXiv preprint arXiv:1706.02413},
  year={2017}
}

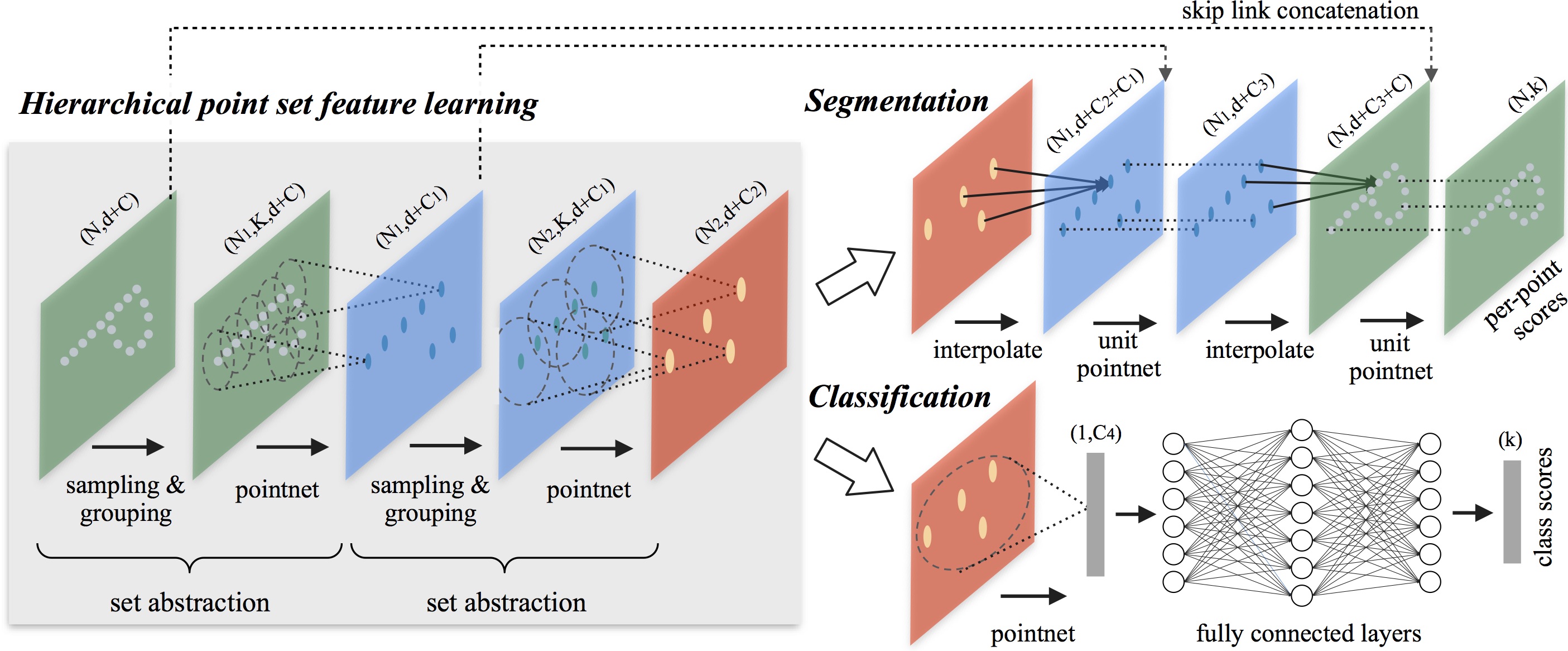

This work is based on our NIPS'17 paper. You can find the arXiv version of the paper here or check the project webpage for a quick overview. PointNet++ is a follow-up project that builds on and extends PointNet; it is version 2.0 of the PointNet architecture.

PointNet (the v1 model) either transforms the features of individual points independently or processes global features of the entire point set. In many cases, however, there are well-defined distance metrics, such as Euclidean distance for 3D point clouds collected by 3D sensors or geodesic distance for manifolds like isometric shape surfaces, and it is desirable to respect the localities of such point sets. We therefore propose PointNet++, which learns hierarchical features with increasing scales of context, much like convolutional neural networks. We also observe one problem that differs markedly from conv nets on images: non-uniform densities in natural point clouds. We thus further propose special layers that are able to learn how to make use of points in regions with different densities.
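To make the idea of hierarchical features over local regions concrete, here is a minimal NumPy sketch of the sampling and grouping steps described in the paper (farthest point sampling followed by a ball query). It is an illustration only, not the repository's implementation, which uses compiled TF operators. The function names, the radii and the point counts are assumptions chosen for the example, and the learned per-region PointNet is omitted.

```python
import numpy as np


def farthest_point_sampling(points, n_samples, start=0):
    """Pick n_samples indices, each new one farthest from those already chosen."""
    chosen = np.empty(n_samples, dtype=np.int64)
    # Squared distance from every point to its nearest chosen point so far.
    min_dist = np.full(points.shape[0], np.inf)
    idx = start
    for i in range(n_samples):
        chosen[i] = idx
        dist = np.sum((points - points[idx]) ** 2, axis=1)
        min_dist = np.minimum(min_dist, dist)
        idx = int(np.argmax(min_dist))
    return chosen


def ball_query(points, centroids, radius, max_neighbors):
    """For each centroid, return indices of up to max_neighbors points within radius."""
    groups = []
    for center in centroids:
        dist = np.linalg.norm(points - center, axis=1)
        groups.append(np.flatnonzero(dist <= radius)[:max_neighbors])
    return groups


def abstraction_level(points, n_centroids, radius, max_neighbors):
    """One sampling + grouping level; a real network would run a PointNet per group."""
    centroids = points[farthest_point_sampling(points, n_centroids)]
    groups = ball_query(points, centroids, radius, max_neighbors)
    # Express each neighborhood in coordinates relative to its centroid.
    local_regions = [points[g] - c for g, c in zip(groups, centroids)]
    return centroids, local_regions


# Hypothetical input: 1024 random points in the unit cube.
rng = np.random.default_rng(0)
cloud = rng.random((1024, 3))

# Two levels with fewer centroids and a larger radius at the second level.
level1, regions1 = abstraction_level(cloud, n_centroids=128, radius=0.2, max_neighbors=32)
level2, regions2 = abstraction_level(level1, n_centroids=32, radius=0.4, max_neighbors=16)
print(level1.shape, level2.shape)  # (128, 3) (32, 3)
```

Each level keeps fewer centroids and looks at a larger radius, which is one simple way to obtain increasing scales of context. Handling non-uniform density (for example by grouping at several radii per centroid) is not covered by this sketch.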

In this repository we release code and data for our PointNet++ classification and segmentation networks, as well as a few utility scripts for training, testing, data processing and visualization.

Install TensorFlow. The code is tested under the TF1.2 GPU version and Python 2.7 (version 3 should also work) on Ubuntu 14.04. A few Python libraries, such as cv2 and h5py, are also required for data processing and visualization. Access to GPUs is highly recommended.

The TF operators are included under tf_ops; you need to compile them first (check tf_xxx_compile.sh under each ops subfolder). Update the nvcc and python paths if necessary. The code is tested under TF1.2.0. If you are using an earlier version, you may need to remove the -D_GLIBCXX_USE_CXX11_ABI=0 flag from the g++ command in order to compile correctly.
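When adjusting the paths in the compile scripts, it can help to check which TensorFlow installation Python actually sees. The sketch below is a small helper, not part of the repository; the name tf_build_info is an assumption. It relies on tf.sysconfig.get_include(), and on tf.sysconfig.get_compile_flags(), which is only available in newer TensorFlow releases and is therefore looked up defensively.

```python
def tf_build_info():
    """Return TensorFlow's version, header path and compile flags, or None if TF is absent."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    # get_compile_flags is missing in old releases, so fall back to an empty list.
    get_flags = getattr(tf.sysconfig, "get_compile_flags", None)
    return {
        "version": tf.__version__,
        "include": tf.sysconfig.get_include(),
        "compile_flags": get_flags() if get_flags is not None else [],
    }


info = tf_build_info()
if info is None:
    print("TensorFlow is not importable from this Python interpreter.")
else:
    print("TensorFlow version:", info["version"])
    print("Header directory:", info["include"])
    print("Suggested compile flags:", " ".join(info["compile_flags"]))
```

Where compile flags are reported, they typically include the -D_GLIBCXX_USE_CXX11_ABI setting that TensorFlow itself was built with, which can be compared against the flag in the g++ command.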

There is also a handy point cloud visualization tool under utils. Run sh compile_render_balls_so.sh to compile it, then try the demo with python show3d_balls.py. The original code is from here.

You can get our sampled point clouds of ModelNet40 (XYZ and normal from mesh, 10k points per shape) at this OneDrive link. The ShapeNetPart dataset (XYZ, normal and part labels) can be found here. Uncompress them to the data folder such that it becomes:

data/modelnet40_normal_resampled

data/shapenetcore_partanno_segmentation_benchmark_v0_normal

so that training and testing scripts can successfully locate them.
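A quick way to confirm the layout before training is to check that both folders exist. The following is a minimal sketch, assuming it is run from the repository root; the helper name missing_datasets is hypothetical and not part of the released code.

```python
import os

# Folder names taken from the layout described above.
EXPECTED_DIRS = (
    "modelnet40_normal_resampled",
    "shapenetcore_partanno_segmentation_benchmark_v0_normal",
)


def missing_datasets(data_root="data"):
    """Return the expected dataset folders that are not present under data_root."""
    return [
        name
        for name in EXPECTED_DIRS
        if not os.path.isdir(os.path.join(data_root, name))
    ]


missing = missing_datasets()
if missing:
    print("Missing dataset folders under data/:", ", ".join(missing))
else:
    print("Both dataset folders were found.")
```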

To train a model to classify point clouds sampled from ModelNet40 shapes:

cd classification

python train.py

To train a model to segment object parts for ShapeNet models:

cd part_seg

python train.py

TBA

Our code is released under the MIT License (see the LICENSE file for details).

- PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation by Qi et al. (CVPR 2017 Oral Presentation). Code and data released on GitHub.

- Frustum PointNets for 3D Object Detection from RGB-D Data by Qi et al. (arXiv). A novel framework for 3D object detection with RGB-D data. The proposed method achieved first place on the KITTI 3D object detection benchmark in all categories (last checked on 11/30/2017). Code and data release TBD.