- TensorFlow: 1.14.0 (Tested)

- TensorFlow: 2.0 (Tested)

This is a Keras implementation of Attention (currently only Bahdanau Attention is supported). It also supports multiple joint values as the input with a single query.

    data (sample data for the examples)
    |--- en.model
    |--- en.vocab
    |--- fr.model
    |--- fr.vocab
    └--- lstm_weights.h5
    layers
    └--- attention.py (Attention implementation)
    examples
    |--- colab
    |    └--- LSTM.ipynb (Jupyter notebook to be run on Google Colab)
    |--- nmt
    |    |--- model.py (NMT model defined with Attention)
    |    └--- train.py (Code for training/inferring/plotting attention with NMT model)
    └--- nmt_bidirectional
         |--- model.py (NMT bidirectional model defined with Attention)
         └--- train.py (Code for training/inferring/plotting attention with NMT model)
    results (created by train_nmt.py to store model)

Use it just like any other tensorflow.python.keras.layers object.

from attention_keras.layers.attention import AttentionLayer

attn_layer = AttentionLayer(name='attention_layer')

attn_out, attn_states = attn_layer({"values": encoder_outputs, "query": decoder_outputs})

Or, for multiple joint values:

from attention_keras.layers.attention import AttentionLayer

attn_layer = AttentionLayer(name='attention_layer')

attn_out, attn_states = attn_layer({"values": [encoder1_outputs, encoder2_outputs],
                                    "query": decoder_outputs})

Here,

- encoder_outputs - Sequence of encoder outputs returned by the RNN/LSTM/GRU (i.e. with return_sequences=True)
- decoder_outputs - The same, for the decoder
- attn_out - Output context vector sequence for the decoder. This is to be concatenated with the output of the decoder (refer to model/nmt.py for more details)
- attn_states - Energy values, in case you would like to generate the heat map of attention (refer to model.train_nmt.py for usage)
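To make the roles of these four tensors concrete, here is a minimal NumPy sketch of Bahdanau-style (additive) attention for a single example. It is not the code in layers/attention.py: the function name `additive_attention`, the weight names `W_v`, `W_q`, `v`, and all sizes are assumptions chosen for illustration, and the sketch returns softmax-normalised weights, which may differ from what the layer stores in attn_states.

```python
import numpy as np

def additive_attention(values, query, W_v, W_q, v):
    """Additive attention sketch (hypothetical helper, single example, no batch axis).

    values: (enc_steps, enc_dim) encoder outputs
    query:  (dec_steps, dec_dim) decoder outputs
    Returns (context, weights) with shapes
    (dec_steps, enc_dim) and (dec_steps, enc_steps).
    """
    proj_v = values @ W_v  # (enc_steps, units)
    proj_q = query @ W_q   # (dec_steps, units)
    # Score every decoder step against every encoder step via broadcasting.
    scores = np.tanh(proj_q[:, None, :] + proj_v[None, :, :]) @ v  # (dec_steps, enc_steps)
    # Softmax over encoder steps, shifted by the row maximum for numerical stability.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    # Each context vector is a weighted sum of the encoder outputs.
    context = weights @ values  # (dec_steps, enc_dim)
    return context, weights

# Random stand-ins for real encoder/decoder outputs and learned weights.
rng = np.random.default_rng(0)
enc_steps, dec_steps, enc_dim, dec_dim, units = 5, 3, 4, 6, 8
encoder_outputs = rng.normal(size=(enc_steps, enc_dim))
decoder_outputs = rng.normal(size=(dec_steps, dec_dim))
W_v = rng.normal(size=(enc_dim, units))
W_q = rng.normal(size=(dec_dim, units))
v = rng.normal(size=(units,))

attn_out, attn_states = additive_attention(encoder_outputs, decoder_outputs, W_v, W_q, v)

# The context sequence is concatenated with the decoder outputs along the feature axis.
decoder_concat = np.concatenate([decoder_outputs, attn_out], axis=-1)
```

In this sketch attn_out has one context vector per decoder step, and attn_states has one row per decoder step and one column per encoder step, which is the matrix a heat map would display.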

An example of attention weights can be seen in model.train_nmt.py

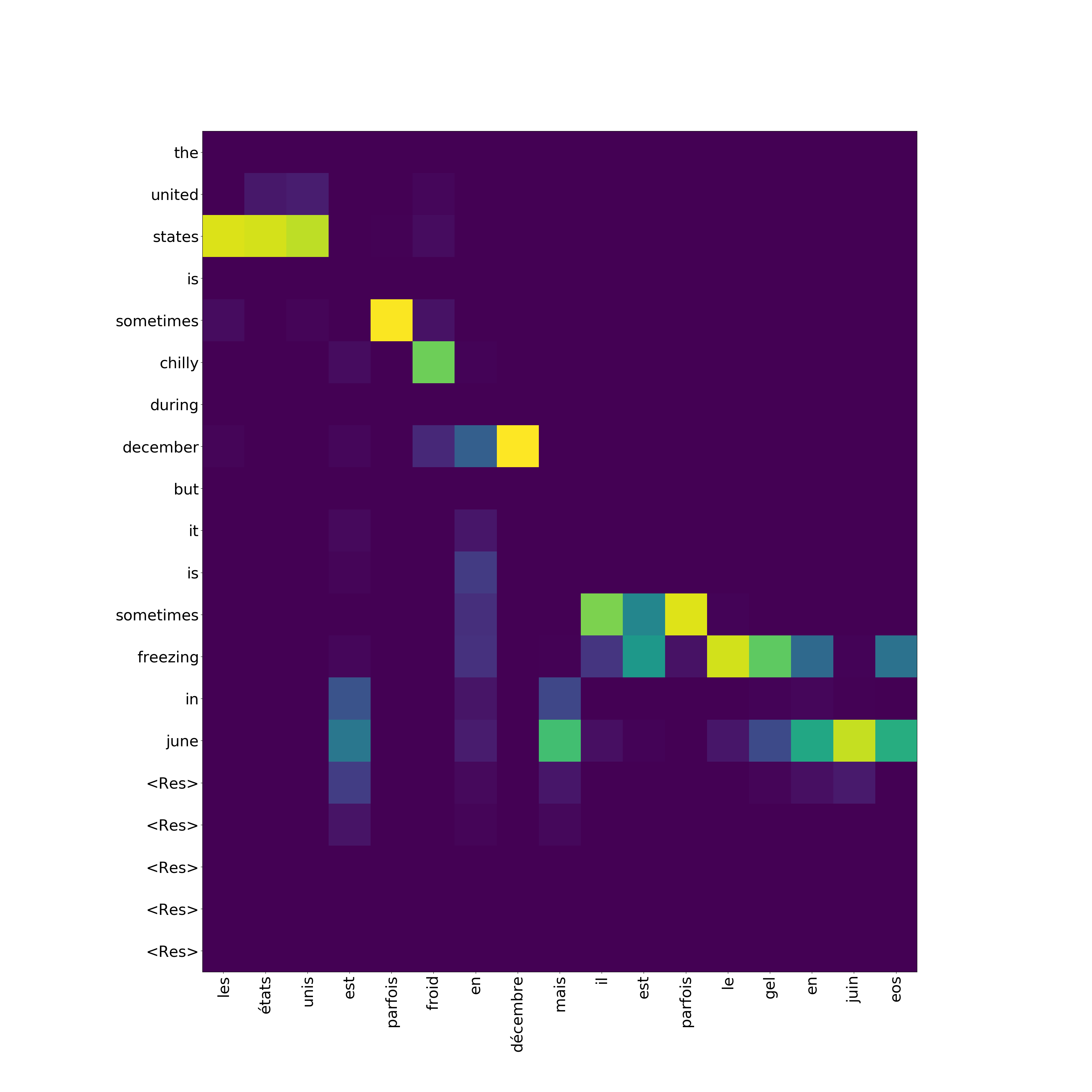

After the model is trained, the attention result should look like the plot below.

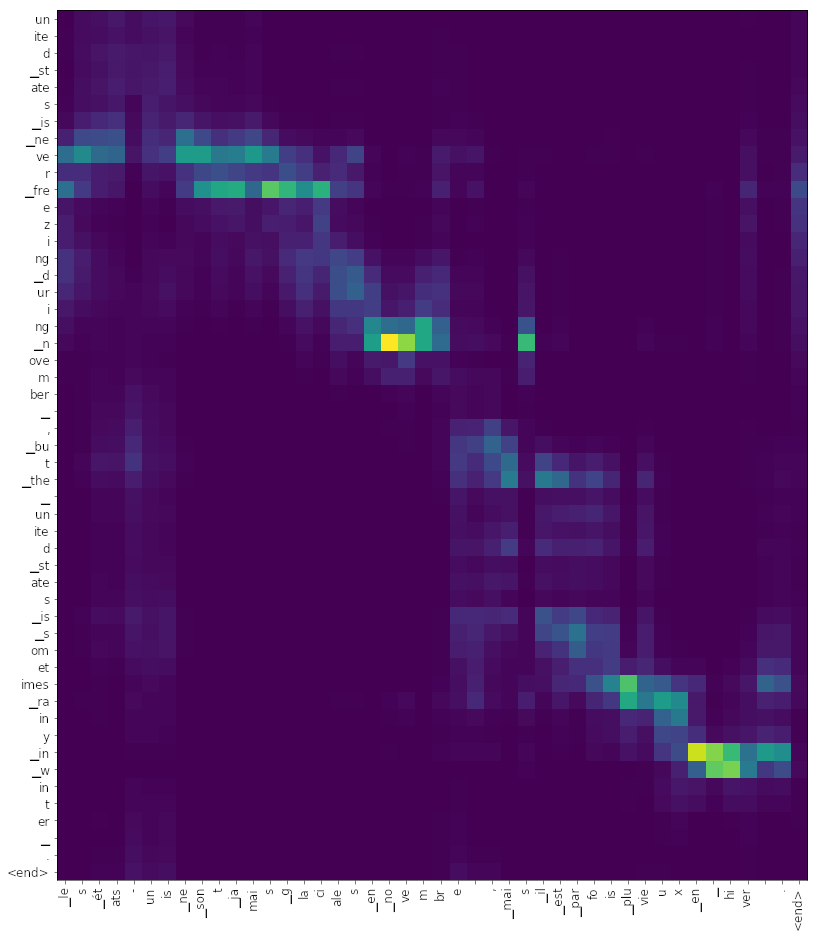

The same plot but for a model trained with sub-words as tokens.
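A heat map of this kind shows, for each decoder step, how much weight every encoder position received. As a rough numerical companion to the plot, the sketch below picks the most strongly attended source token for each target token. The helper `strongest_alignments`, the placeholder tokens, and the weight values are all made up for illustration; they are not output from the models in this repository.

```python
import numpy as np

def strongest_alignments(attn_weights, source_tokens, target_tokens):
    """Pair each target token with the source token that has the largest weight.

    attn_weights: (len(target_tokens), len(source_tokens)) attention matrix.
    """
    attn_weights = np.asarray(attn_weights)
    best = attn_weights.argmax(axis=1)  # index of the strongest source position per row
    return [(tgt, source_tokens[i]) for tgt, i in zip(target_tokens, best)]

# Hypothetical toy inputs: 3 decoder steps attending over 4 encoder steps.
source = ["src0", "src1", "src2", "src3"]
target = ["tgt0", "tgt1", "tgt2"]
weights = [
    [0.7, 0.1, 0.1, 0.1],
    [0.2, 0.6, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]

pairs = strongest_alignments(weights, source, target)
```

Taking the argmax discards the rest of each row, so it is only a quick summary; the full matrix is still what the heat map displays.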

To run the example, download small_vocab_en.txt and small_vocab_fr.txt from the Udacity deep learning repository and place them in the data folder.

There is also an LSTM version of the same example in Colab; just follow the instructions in the notebook.

If you have improvements (e.g. other attention mechanisms), contributions are welcome!

The original credit goes to thushv89