Coach is a Python reinforcement learning research framework containing implementations of many state-of-the-art algorithms.

It exposes a set of easy-to-use APIs for experimenting with new RL algorithms, and allows simple integration of new environments to solve. Basic RL components (algorithms, environments, neural network architectures, exploration policies, ...) are well decoupled, so that extending and reusing existing components is fairly painless.

Training an agent to solve an environment is as easy as running:

python coach.py -p CartPole_DQN -r

Note: Coach has been tested on Ubuntu 16.04 LTS only.

Coach's installer sets up everything needed to start running Coach on top of OpenAI Gym environments. To install, run the following command and then follow the instructions printed on screen:

./install.sh

The installer creates a virtual environment and installs Coach's dependencies into it, to avoid changes to the user's system.

To activate and deactivate Coach's virtual environment:

source coach_env/bin/activate

deactivate

In addition to OpenAI Gym, several other environments have been tested and are supported. To install them, follow the instructions in the Supported Environments section below.

By default, Coach's installer installs Intel-Optimized TensorFlow, which does not support GPUs. To run Coach on a GPU, a GPU-enabled TensorFlow build must be installed instead. This can be done by overriding the installed TensorFlow version:

pip install tensorflow-gpu

Coach supports both the TensorFlow and neon deep learning frameworks.

Switching between TensorFlow and neon backends is possible by using the -f flag.

Using TensorFlow (default): -f tensorflow

Using neon: -f neon

There are several available presets in presets.py.

To list all the available presets use the -l flag.

To run a preset, use:

python coach.py -r -p <preset_name>

For example:

- CartPole environment using Policy Gradients:

python coach.py -r -p CartPole_PG

- Pendulum using Clipped PPO:

python coach.py -r -p Pendulum_ClippedPPO -n 8

- MountainCar using A3C:

python coach.py -r -p MountainCar_A3C -n 8

- Doom basic level using a Dueling network with the Double DQN algorithm:

python coach.py -r -p Doom_Basic_Dueling_DDQN

- Doom health gathering level using Mixed Monte Carlo:

python coach.py -r -p Doom_Health_MMC

It is easy to create new presets for different levels or environments by following the same pattern as in presets.py.
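When launching several presets from a script (for example, to sweep over the examples above), it can help to build the command line in one place. The following is a minimal sketch that uses only the flags described in this section (-p, -n, -f, -l) plus -r, which every example above passes; we assume, as in Coach's command-line help, that -r enables rendering. The helper names (coach_command, run_presets, LIST_PRESETS) are hypothetical and not part of Coach.

```python
import shlex
import subprocess
from typing import List, Optional, Sequence

FRAMEWORKS = ("tensorflow", "neon")  # the two backends accepted by -f
LIST_PRESETS = ["python", "coach.py", "-l"]  # lists all available presets


def coach_command(preset: str, workers: Optional[int] = None,
                  framework: str = "tensorflow", render: bool = True) -> List[str]:
    """Build a coach.py argument list from the documented flags (hypothetical helper)."""
    if framework not in FRAMEWORKS:
        raise ValueError(f"unsupported framework: {framework!r}")
    cmd = ["python", "coach.py"]
    if render:
        cmd.append("-r")  # assumption: -r toggles environment rendering
    cmd += ["-p", preset, "-f", framework]
    if workers is not None:
        if workers < 1:
            raise ValueError("workers must be a positive integer")
        cmd += ["-n", str(workers)]  # number of parallel workers
    return cmd


def run_presets(presets: Sequence[str], **kwargs) -> None:
    """Run several presets one after another, stopping at the first failure."""
    for preset in presets:
        cmd = coach_command(preset, **kwargs)
        print("running:", shlex.join(cmd))
        subprocess.run(cmd, check=True)
```

For instance, `shlex.join(coach_command("Pendulum_ClippedPPO", workers=8))` yields `python coach.py -r -p Pendulum_ClippedPPO -f tensorflow -n 8`. Passing `-f` explicitly is redundant for TensorFlow, which is the default, but keeps the chosen backend visible in logs.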

Training an agent to solve an environment can be tricky at times.

To help debug the training process, Coach outputs several signals per trained algorithm, which track algorithmic performance.

While Coach trains an agent, a CSV file containing the relevant training signals is saved to the 'experiments' directory. Coach's dashboard can then be used to visualize these signals dynamically and track algorithmic behavior.
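The training signals can also be inspected without the dashboard. The sketch below reads one column from such a CSV file and smooths it with a trailing moving average. The column name "Training Reward" and the sample data are hypothetical; check the header row of your own file under 'experiments' for the actual signal names.

```python
import csv
import io
from collections import deque
from typing import Deque, Iterable, List, TextIO


def read_signal(f: TextIO, column: str) -> List[float]:
    """Return the non-empty numeric values of one column from a CSV stream."""
    values = []
    for row in csv.DictReader(f):
        cell = (row.get(column) or "").strip()
        if cell:  # some signals are only logged on certain rows
            values.append(float(cell))
    return values


def moving_average(values: Iterable[float], window: int) -> List[float]:
    """Trailing moving average; the first points average over what is available."""
    if window < 1:
        raise ValueError("window must be >= 1")
    buf: Deque[float] = deque(maxlen=window)
    total = 0.0
    out = []
    for v in values:
        if len(buf) == window:
            total -= buf[0]  # this value is evicted by the append below
        buf.append(v)
        total += v
        out.append(total / len(buf))
    return out


# Hypothetical sample standing in for a real file from 'experiments'.
sample = io.StringIO("Episode #,Training Reward\n1,10\n2,\n3,20\n4,30\n")
rewards = read_signal(sample, "Training Reward")  # [10.0, 20.0, 30.0]
smoothed = moving_average(rewards, window=2)      # [10.0, 15.0, 25.0]
```

For a real run, open the file with `open(path, newline="")` and pass the file object to `read_signal`.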

To use the dashboard, run:

python dashboard.py

Framework documentation, algorithmic descriptions and instructions on how to contribute a new agent or environment can be found here.

Since the introduction of A3C in 2016, many algorithms have been shown to benefit from running multiple instances in parallel on many CPU cores. So far, these algorithms include A3C, DDPG, PPO and NAF, and this is most probably only the beginning.

Parallelizing an algorithm using Coach is straightforward.

The following method of NetworkWrapper parallelizes an algorithm seamlessly:

network.train_and_sync_networks(current_states, targets)

Once a parallel run is started, train_and_sync_networks applies the gradients from each local worker's network to the main global network, allowing training to proceed in parallel.
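To make the idea concrete, here is a toy, framework-free sketch of the general pattern described above: several workers each compute a gradient on their local copy of the parameters, apply it to a shared global model, and then re-sync their local copy from the result. It is not Coach's NetworkWrapper implementation; GlobalNetwork, worker and train_parallel are hypothetical names, the "network" is a single weight fitted to y = 3x, and threads stand in for the separate worker processes a real parallel run would typically use.

```python
import random
import threading
from typing import List, Tuple


class GlobalNetwork:
    """Toy stand-in for the global network: a single weight w in y = w * x."""

    def __init__(self, lr: float = 0.05):
        self.w = 0.0
        self.lr = lr
        self._lock = threading.Lock()

    def apply_gradients(self, grad: float) -> float:
        """Apply one worker's gradient and return the updated global weight."""
        with self._lock:
            self.w -= self.lr * grad
            return self.w


def local_gradient(w: float, batch: List[Tuple[float, float]]) -> float:
    """Gradient of the mean of 0.5 * (w * x - y)**2 with respect to w."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)


def worker(global_net: GlobalNetwork, seed: int, steps: int) -> None:
    rng = random.Random(seed)
    local_w = global_net.w  # initial sync of the local copy
    for _ in range(steps):
        batch = [(x, 3.0 * x) for x in (rng.uniform(-1.0, 1.0) for _ in range(8))]
        grad = local_gradient(local_w, batch)        # computed on the local copy
        local_w = global_net.apply_gradients(grad)   # push to global, pull back result


def train_parallel(num_workers: int = 4, steps: int = 500) -> float:
    net = GlobalNetwork()
    threads = [threading.Thread(target=worker, args=(net, i, steps))
               for i in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return net.w
```

Calling `train_parallel()` returns a weight very close to 3.0. The local copy may be slightly stale when its gradient is computed, since other workers can update the global weight in the meantime; asynchronous methods such as A3C accept this staleness in exchange for not having workers wait on each other.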

Beyond that, a parallel run only requires passing the -n flag with the number of workers. For instance, the following command runs 16 workers that together train an agent on the MuJoCo Hopper environment:

python coach.py -p Hopper_A3C -n 16

- OpenAI Gym:

Installed by default by Coach's installer.

- ViZDoom:

Follow the instructions described in the ViZDoom repository:

https://github.com/mwydmuch/ViZDoom

Additionally, Coach assumes that the environment variable VIZDOOM_ROOT points to the ViZDoom installation directory.

- Roboschool:

Follow the instructions described in the roboschool repository.

- GymExtensions:

Follow the instructions described in the GymExtensions repository:

https://github.com/Breakend/gym-extensions

Additionally, add the installation directory to the PYTHONPATH environment variable.

- PyBullet:

Follow the instructions described in the Quick Start Guide (basically just 'pip install pybullet').
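After installing the environments above, a quick sanity check can save a failed run. The sketch below reports whether a virtual environment such as Coach's appears to be active, whether each optional environment package can be imported, and whether VIZDOOM_ROOT points at an existing directory. The import names in OPTIONAL_MODULES (for example gym_extensions) are assumptions about how each package is imported; adjust them if your installation differs. Because PYTHONPATH is read when the interpreter starts, the GymExtensions check only reflects a newly set PYTHONPATH when the script is run from a fresh shell.

```python
import importlib.util
import os
import sys
from typing import Dict, Mapping, Optional

# Assumed top-level import names for each supported environment package.
OPTIONAL_MODULES = {
    "OpenAI Gym": "gym",
    "ViZDoom": "vizdoom",
    "Roboschool": "roboschool",
    "GymExtensions": "gym_extensions",
    "PyBullet": "pybullet",
}


def in_virtualenv() -> bool:
    """True inside a venv (base_prefix differs) or a legacy virtualenv (real_prefix)."""
    return hasattr(sys, "real_prefix") or sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def vizdoom_root_ok(env: Optional[Mapping[str, str]] = None) -> bool:
    """Check that VIZDOOM_ROOT is set and points at an existing directory."""
    env = os.environ if env is None else env
    root = env.get("VIZDOOM_ROOT", "")
    return bool(root) and os.path.isdir(root)


def check_setup() -> Dict[str, bool]:
    """Report which optional environments this interpreter can import."""
    report = {name: importlib.util.find_spec(module) is not None
              for name, module in OPTIONAL_MODULES.items()}
    report["virtualenv active"] = in_virtualenv()
    report["VIZDOOM_ROOT valid"] = vizdoom_root_ok()
    return report
```

Running `for name, ok in check_setup().items(): print(f"{name:20} {'ok' if ok else 'missing'}")` after `source coach_env/bin/activate` prints one line per check.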

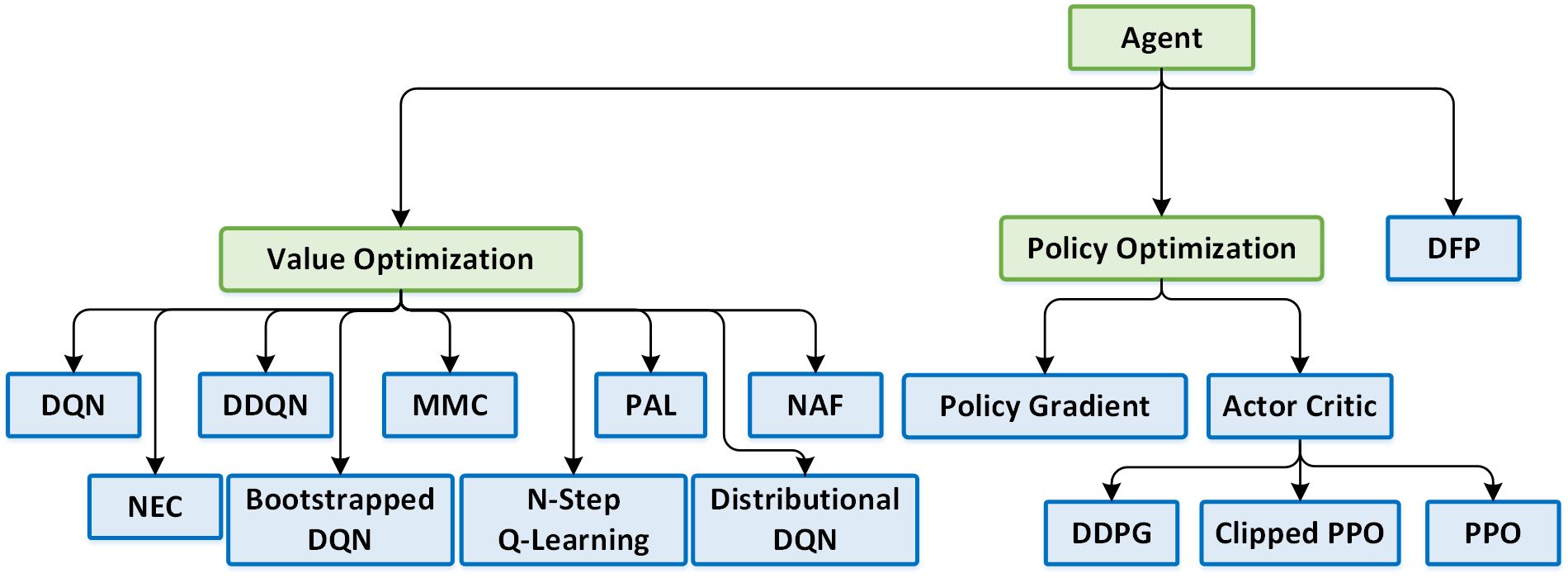

- Deep Q Network (DQN)

- Double Deep Q Network (DDQN)

- Dueling Q Network

- Mixed Monte Carlo (MMC)

- Persistent Advantage Learning (PAL)

- Distributional Deep Q Network

- Bootstrapped Deep Q Network

- N-Step Q Learning | Distributed

- Neural Episodic Control (NEC)

- Normalized Advantage Functions (NAF) | Distributed

- Policy Gradients (PG) | Distributed

- Actor Critic / A3C | Distributed

- Deep Deterministic Policy Gradients (DDPG) | Distributed

- Proximal Policy Optimization (PPO)

- Clipped Proximal Policy Optimization | Distributed

- Direct Future Prediction (DFP) | Distributed

Coach is released as reference code for research purposes. It is not an official Intel product, and its level of quality and support may not match that of an official product. Additional algorithms and environments are planned to be added to the framework. Feedback and contributions from the open source and RL research communities are more than welcome.