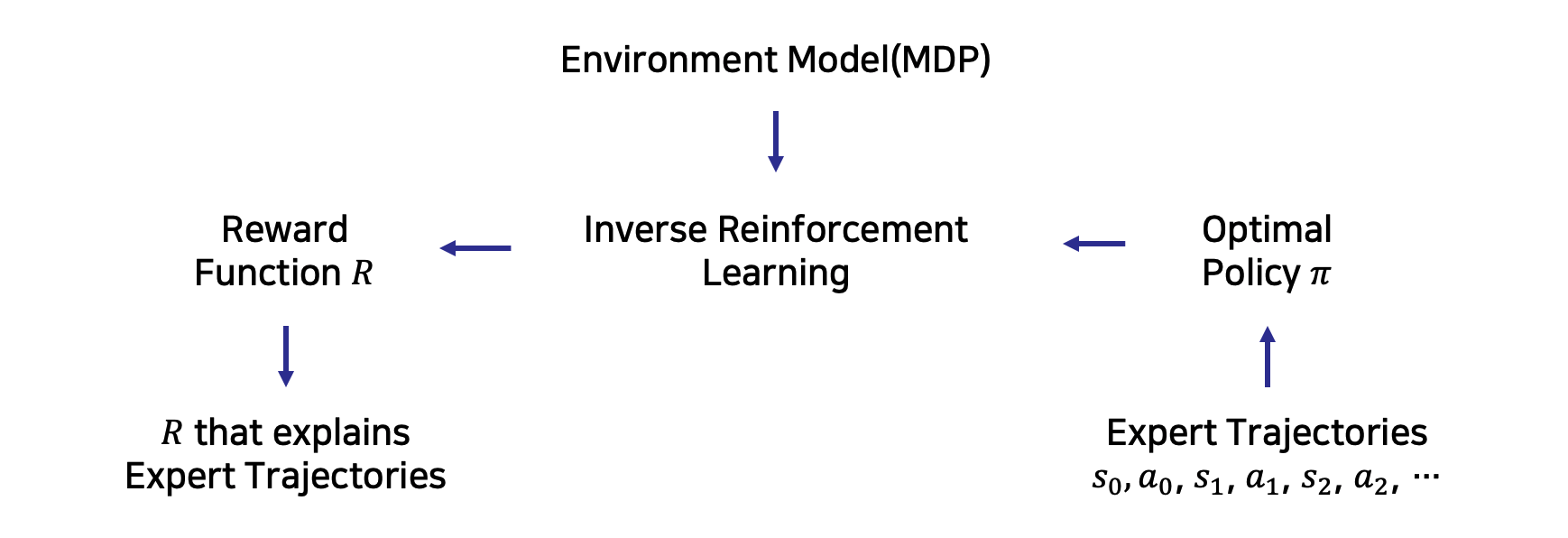

This repository contains PyTorch (v0.4.1) implementations of the following Inverse Reinforcement Learning (IRL) algorithms:

- Apprenticeship Learning via Inverse Reinforcement Learning (APP) [2]

- Maximum Entropy Inverse Reinforcement Learning (MaxEnt) [4]

- Generative Adversarial Imitation Learning (GAIL) [5]

- Variational Discriminator Bottleneck: Improving Imitation Learning, Inverse RL, and GANs by Constraining Information Flow (VAIL) [6]

We have implemented these IRL algorithms and trained agents with them in the following environments: MountainCar-v0 (APP and MaxEnt) and Hopper-v2 (GAIL and VAIL).

For reference, reviews (in Korean) of the IRL papers cited here are available in Let's do Inverse RL Guide.

We have implemented APP and MaxEnt using Q-learning as the RL step in the MountainCar-v0 environment.
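Because the Q-learning step is tabular, the continuous MountainCar-v0 state (position, velocity) has to be discretized before it can index a Q-table. The following is a minimal sketch, assuming a uniform 20 x 20 grid over Gym's observation bounds and a reward supplied by the IRL step instead of the environment; `N_BINS`, `discretize`, and `q_update` are hypothetical names, not the repository's code.

```python
# Observation bounds of Gym's MountainCar-v0: (position, velocity).
POS_MIN, POS_MAX = -1.2, 0.6
VEL_MIN, VEL_MAX = -0.07, 0.07
N_BINS = 20      # hypothetical grid resolution per dimension
N_ACTIONS = 3    # push left, no push, push right


def _bin(x, lo, hi, n_bins):
    """Clip x into [lo, hi] and map it to an integer bin in [0, n_bins - 1]."""
    x = min(max(x, lo), hi)
    return min(int((x - lo) / (hi - lo) * n_bins), n_bins - 1)


def discretize(position, velocity, n_bins=N_BINS):
    """Flatten the (position bin, velocity bin) pair into one Q-table row index."""
    pos_bin = _bin(position, POS_MIN, POS_MAX, n_bins)
    vel_bin = _bin(velocity, VEL_MIN, VEL_MAX, n_bins)
    return pos_bin * n_bins + vel_bin


def make_q_table(n_bins=N_BINS, n_actions=N_ACTIONS):
    """Zero-initialized table with one row per discretized state."""
    return [[0.0] * n_actions for _ in range(n_bins * n_bins)]


def q_update(q_table, s, a, irl_reward, s_next, alpha=0.1, gamma=0.99):
    """One tabular Q-learning step; the reward comes from the IRL reward model."""
    td_target = irl_reward + gamma * max(q_table[s_next])
    q_table[s][a] += alpha * (td_target - q_table[s][a])
```

Swapping the environment reward for the learned reward is the only IRL-specific part; the rest is ordinary Q-learning.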

The expert demonstrations are stored in expert_demo.npy in lets-do-irl/mountaincar/app/expert_demo or lets-do-irl/mountaincar/maxent/expert_demo.

The expert demonstrations have shape (20, 130, 3): (number of demonstrations, length of each demonstration, state and action at each step).
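Assuming the 3 values per step are (position, velocity, action), which matches MountainCar-v0's 2-dimensional observation plus one discrete action, the array can be split into state and action sequences as sketched here. `split_demonstrations` is a hypothetical helper; it accepts nested lists, so with NumPy you could pass `np.load(path).tolist()`.

```python
def split_demonstrations(demos):
    """Split demos of shape (n_demos, horizon, 3) into states and actions.

    Assumes each step is stored as [position, velocity, action].
    """
    states, actions = [], []
    for trajectory in demos:
        states.append([(step[0], step[1]) for step in trajectory])
        actions.append([int(step[2]) for step in trajectory])
    return states, actions


# Tiny stand-in for the real (20, 130, 3) array: 2 demos, 3 steps each.
fake_demos = [
    [[-0.5, 0.0, 2], [-0.49, 0.01, 2], [-0.47, 0.02, 1]],
    [[-0.6, 0.0, 0], [-0.61, -0.01, 0], [-0.63, -0.02, 2]],
]
states, actions = split_demonstrations(fake_demos)
print(len(states), len(states[0]), actions[0])
```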

To make your own demonstrations, run make_expert.py in lets-do-irl/mountaincar/app/expert_demo or lets-do-irl/mountaincar/maxent/expert_demo.

Navigate to the lets-do-irl/mountaincar/app folder.

Train the agent with APP without rendering:

python train.py

To test APP, run the agent with the saved model app_q_table.npy in the app/results folder:

python test.py
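APP alternates between solving the RL problem (here, Q-learning) and updating linear reward weights so that the learner's feature expectations approach the expert's. The following plain-Python sketch shows the projection variant of that update described in [2]; it is not necessarily the exact update in app/train.py, and `feature_expectations` and `projection_step` are hypothetical names.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def feature_expectations(trajectories, features, gamma=0.99):
    """Empirical mu = average over trajectories of sum_t gamma^t * phi(s_t)."""
    mu = None
    for traj in trajectories:
        for t, state in enumerate(traj):
            phi = features(state)
            if mu is None:
                mu = [0.0] * len(phi)
            for k, value in enumerate(phi):
                mu[k] += (gamma ** t) * value / len(trajectories)
    return mu


def projection_step(mu_expert, mu_bar, mu_new):
    """One iteration of the projection method.

    mu_bar is the current projected point and mu_new the feature expectations
    of the most recently trained policy. Returns the new projected point, the
    new reward weights w = mu_expert - mu_bar_next, and the margin t = ||w||.
    """
    d = [m - b for m, b in zip(mu_new, mu_bar)]
    denom = dot(d, d)
    step = dot(d, [e - b for e, b in zip(mu_expert, mu_bar)]) / denom if denom > 0 else 0.0
    mu_bar_next = [b + step * di for b, di in zip(mu_bar, d)]
    w = [e - b for e, b in zip(mu_expert, mu_bar_next)]
    t = dot(w, w) ** 0.5
    return mu_bar_next, w, t
```

The next Q-learning round would use w · φ(s) as its reward, and training can stop once the margin t drops below a chosen threshold.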

Navigate to the lets-do-irl/mountaincar/maxent folder.

Train the agent with MaxEnt without rendering:

python train.py

To test MaxEnt, run the agent with the saved model maxent_q_table.npy in the maxent/results folder:

python test.py
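With a linear reward r(s) = θ · φ(s), the gradient of the MaxEnt log-likelihood is the expert's feature expectations minus the learner's expected feature counts [4]. A minimal sketch of one gradient-ascent step, assuming one-hot features over discretized states; the helper names are hypothetical, not the repository's functions.

```python
def one_hot(index, size):
    """One-hot feature vector phi(s) for a discretized state index."""
    phi = [0.0] * size
    phi[index] = 1.0
    return phi


def linear_reward(theta, phi):
    """r(s) = theta . phi(s); with one-hot features this is just theta[s]."""
    return sum(t * p for t, p in zip(theta, phi))


def maxent_step(theta, expert_fe, learner_fe, learning_rate=0.05):
    """Gradient ascent on the MaxEnt log-likelihood for a linear reward.

    gradient = expert feature expectations - learner's expected feature counts
    """
    grad = [e - l for e, l in zip(expert_fe, learner_fe)]
    return [th + learning_rate * g for th, g in zip(theta, grad)]
```

States the expert visits more often than the learner have their reward raised, and the reverse; each step is followed by another round of Q-learning under the updated reward.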

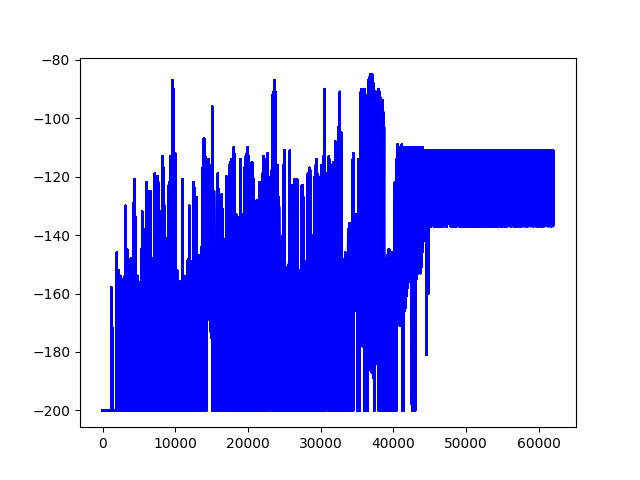

We have trained agents with two different IRL algorithms in the MountainCar-v0 environment.

| Algorithms | Scores / Episodes | GIF |
|---|---|---|
| APP |  |  |
| MaxEnt |  |  |

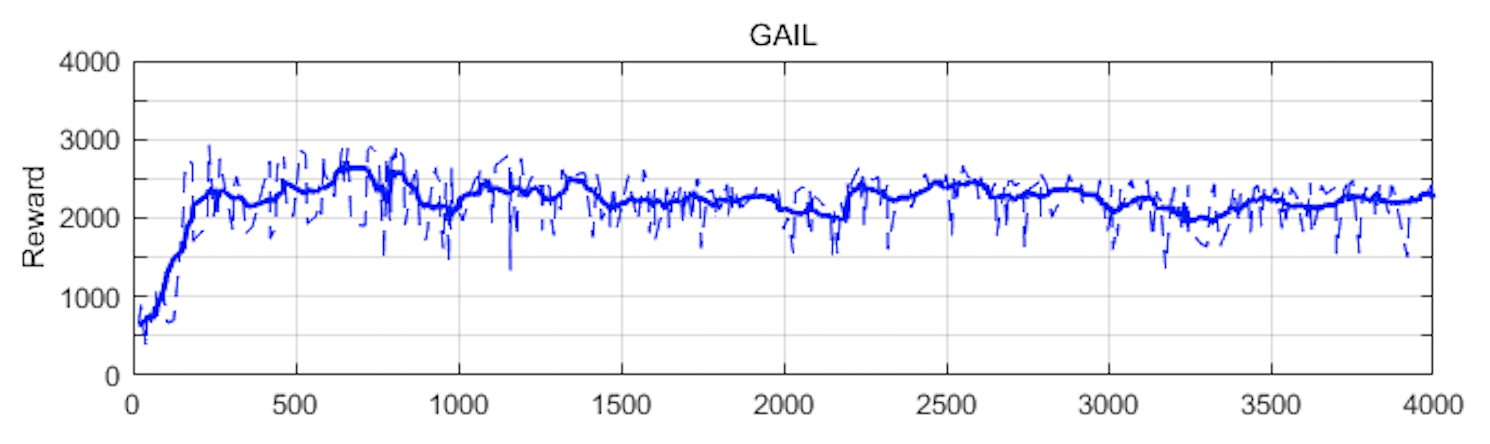

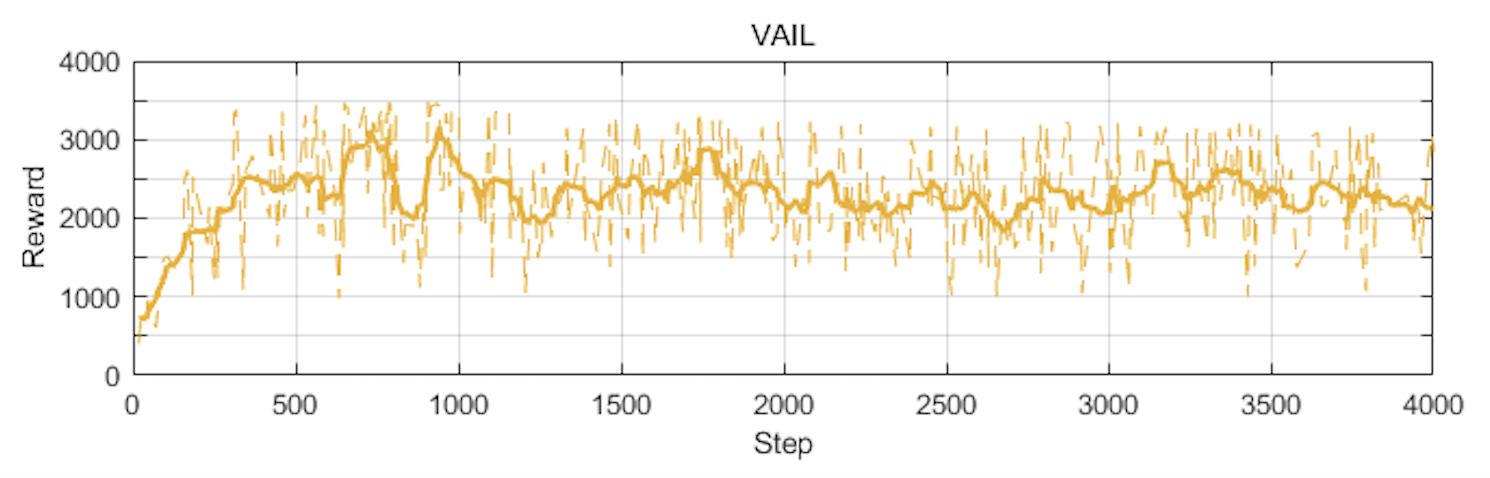

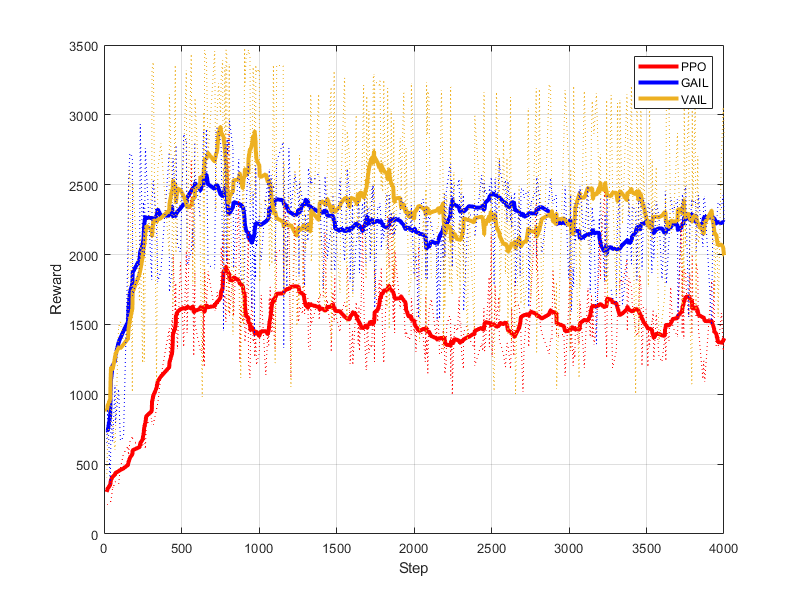

We have implemented GAIL and VAIL using PPO as the RL step in the Hopper-v2 environment.
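In GAIL, a discriminator D(s, a) learns to tell learner state-action pairs from expert ones, and PPO then maximizes a surrogate reward derived from D [5]. This sketch assumes the labeling convention learner = 1, expert = 0, under which -log D(s, a) is high for expert-like pairs; with the opposite convention the reward would be -log(1 - D(s, a)). All names are illustrative.

```python
import math

EPS = 1e-8  # guards against log(0)


def bce(prob, label):
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, EPS), 1.0 - EPS)
    return -(label * math.log(prob) + (1.0 - label) * math.log(1.0 - prob))


def discriminator_loss(d_learner, d_expert):
    """Assumed convention: learner pairs labeled 1, expert pairs labeled 0."""
    loss_learner = sum(bce(p, 1.0) for p in d_learner) / len(d_learner)
    loss_expert = sum(bce(p, 0.0) for p in d_expert) / len(d_expert)
    return loss_learner + loss_expert


def gail_reward(d_value):
    """Surrogate reward -log D(s, a): large when D rates (s, a) as expert-like (near 0)."""
    return -math.log(max(d_value, EPS))
```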

The expert demonstrations are stored in expert_demo.p in lets-do-irl/mujoco/gail/expert_demo or lets-do-irl/mujoco/vail/expert_demo.

The expert demonstrations have shape (50000, 14): (number of state-action pairs, state and action concatenated in each pair).
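Hopper-v2 has an 11-dimensional observation and a 3-dimensional action, which accounts for the 14 columns. Assuming each row stores the state first and the action last, rows can be split as sketched here. `split_state_action` and `load_demo` are hypothetical helpers, and `load_demo` assumes the pickle holds a row-iterable 2-D array (for example a NumPy array, which needs NumPy installed to unpickle).

```python
import pickle

STATE_DIM = 11   # Hopper observation size
ACTION_DIM = 3   # Hopper action size


def split_state_action(rows, state_dim=STATE_DIM, action_dim=ACTION_DIM):
    """Split rows laid out as [state..., action...] into state and action lists."""
    states, actions = [], []
    for row in rows:
        if len(row) != state_dim + action_dim:
            raise ValueError(f"expected {state_dim + action_dim} columns, got {len(row)}")
        states.append(list(row[:state_dim]))
        actions.append(list(row[state_dim:]))
    return states, actions


def load_demo(path):
    """Read expert_demo.p; assumes it contains a row-iterable 2-D array."""
    with open(path, "rb") as f:
        return pickle.load(f)
```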

The demonstrations we used score between about 2200 and 2600 on average.

To make your own demonstrations, run main.py in the lets-do-irl/mujoco/ppo folder.

A detailed implementation story (in Korean) of PPO is also available in PG Travel implementation story.
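The policy term at the core of PPO is the clipped surrogate objective. A minimal sketch for a batch of probability ratios r = π_new(a|s) / π_old(a|s) and advantage estimates A; `ppo_clipped_objective` is a hypothetical name, and a full PPO loss typically also includes a value-function term, omitted here.

```python
def ppo_clipped_objective(ratios, advantages, clip_eps=0.2):
    """Mean over a batch of min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratios:     pi_new(a|s) / pi_old(a|s) for each sample
    advantages: advantage estimates for the same samples
    PPO maximizes this value, so the policy loss is its negative.
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)
```

With a positive advantage the objective stops rewarding ratios above 1 + ε; with a negative advantage it stops rewarding ratios below 1 - ε, which keeps each update close to the old policy.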

Navigate to the lets-do-irl/mujoco/gail folder.

Train the agent with GAIL without rendering:

python main.py

To continue training from a saved checkpoint, run:

python main.py --load_model ckpt_4000_gail.pth.tar

- Note that the `ckpt_4000_gail.pth.tar` file should be in the `mujoco/gail/save_model` folder.

To test GAIL, run the agent with the saved model ckpt_4000_gail.pth.tar in the mujoco/gail/save_model folder:

python test.py --load_model ckpt_4000_gail.pth.tar

- Note that the `ckpt_4000_gail.pth.tar` file should be in the `mujoco/gail/save_model` folder.

Navigate to the lets-do-irl/mujoco/vail folder.

Train the agent with VAIL without rendering:

python main.py

To continue training from a saved checkpoint, run:

python main.py --load_model ckpt_4000_vail.pth.tar

- Note that the `ckpt_4000_vail.pth.tar` file should be in the `mujoco/vail/save_model` folder.

To test VAIL, run the agent with the saved model ckpt_4000_vail.pth.tar in the mujoco/vail/save_model folder:

python test.py --load_model ckpt_4000_vail.pth.tar

- Note that the `ckpt_4000_vail.pth.tar` file should be in the `mujoco/vail/save_model` folder.
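VAIL [6] passes the discriminator input through a stochastic encoder and limits the information it carries with a KL penalty against a standard normal prior, adjusting the Lagrange multiplier β by dual gradient ascent. A sketch of those three pieces, assuming a diagonal Gaussian encoder; `i_c` (the information constraint) and `alpha_beta` (the β step size) are illustrative defaults, not values taken from this repository.

```python
import math


def gaussian_kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) for one encoded sample."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def update_beta(beta, mean_kl, i_c=0.5, alpha_beta=1e-5):
    """Dual step on the multiplier: beta <- max(0, beta + alpha_beta * (KL - I_c))."""
    return max(0.0, beta + alpha_beta * (mean_kl - i_c))


def vdb_discriminator_loss(bce_loss, mean_kl, beta, i_c=0.5):
    """Discriminator loss plus the bottleneck penalty beta * (KL - I_c)."""
    return bce_loss + beta * (mean_kl - i_c)
```

When the average KL exceeds I_c, β grows and squeezes the encoder harder; when it falls below, β shrinks toward zero, so the discriminator cannot become overconfident too quickly.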

Training results are automatically saved in the logs folder. TensorboardX is a TensorBoard-like visualization tool for PyTorch.

Navigate to the lets-do-irl/mujoco/gail or lets-do-irl/mujoco/vail folder.

tensorboard --logdir logs

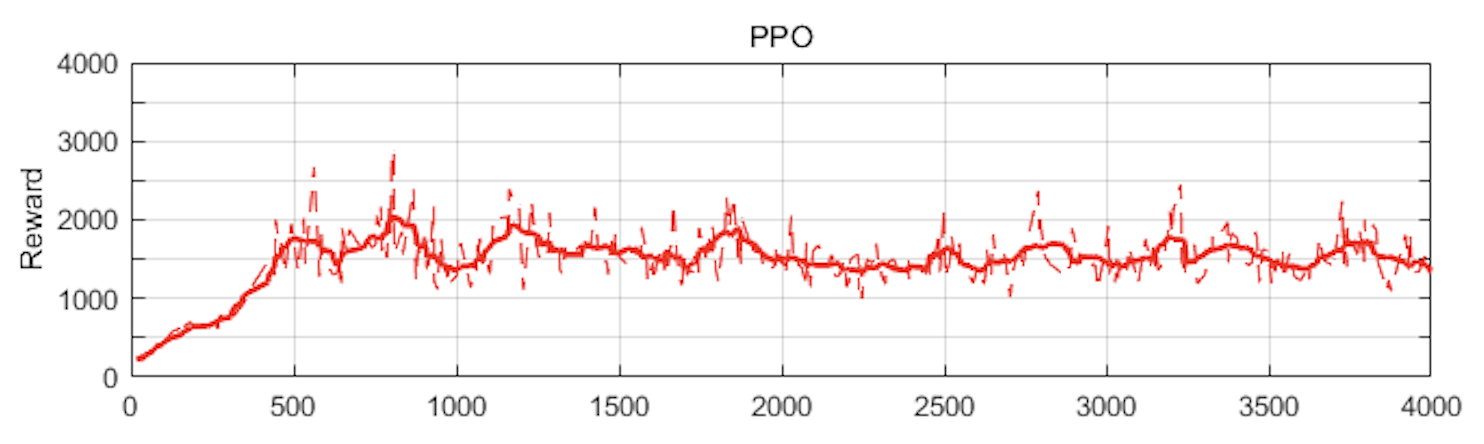

We have trained agents with two different IRL algorithms in the Hopper-v2 environment.

| Algorithms | Scores / Iterations (total sample size: 2048) |
|---|---|
| PPO (for comparison) |  |
| GAIL |  |
| VAIL |  |
| Total |  |

We referenced code from the following repositories:

- Implementation of APP

- Implementation of MaxEnt

- PyTorch implementation of Policy Gradient algorithms (REINFORCE, NPG, TRPO, PPO)

- PyTorch implementation of GAIL

Dongmin Lee (project manager) : Github, Facebook

Seungje Yoon : Github, Facebook