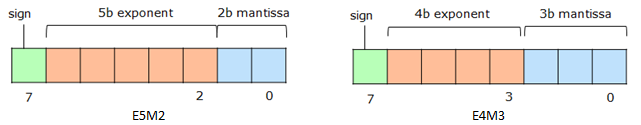

This repository provides PyTorch tools to emulate the new FP8 formats defined by the joint specification from ARM, Intel, and NVIDIA. The toolkit supports two binary formats, E5M2 and E4M3, emulated on top of existing floating-point hardware from Intel (FP32) and NVIDIA (FP16).

The following table shows the binary formats and their numeric ranges (S is the sign bit; bit patterns are written as sign.exponent.mantissa):

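| | E5M2 | E4M3 |
|---|---|---|
| Exponent Bias | 15 | 7 |
| Infinities | S.11111.00<sub>2</sub> | N/A |
| NaNs | S.11111.{01, 10, 11}<sub>2</sub> | S.1111.111<sub>2</sub> |
| Zeros | S.00000.00<sub>2</sub> | S.0000.000<sub>2</sub> |
| Max normal | S.11110.11<sub>2</sub> = 1.75 * 2<sup>15</sup> = 57344.0 | S.1111.110<sub>2</sub> = 1.75 * 2<sup>8</sup> = 448.0 |
| Min normal | S.00001.00<sub>2</sub> = 2<sup>-14</sup> ≈ 6.10e-05 | S.0001.000<sub>2</sub> = 2<sup>-6</sup> ≈ 1.56e-02 |
| Max subnormal | S.00000.11<sub>2</sub> = 0.75 * 2<sup>-14</sup> ≈ 4.58e-05 | S.0000.111<sub>2</sub> = 0.875 * 2<sup>-6</sup> ≈ 1.37e-02 |
| Min subnormal | S.00000.01<sub>2</sub> = 2<sup>-16</sup> ≈ 1.53e-05 | S.0000.001<sub>2</sub> = 2<sup>-9</sup> ≈ 1.95e-03 |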
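The encodings in the table can be checked with a few lines of plain Python. The sketch below is not part of the toolkit; `decode_fp8` is a hypothetical helper written only for illustration. It decodes an 8-bit pattern using the bias, NaN, and infinity rules listed above.

```python
import math

def decode_fp8(bits: int, fmt: str) -> float:
    """Decode an 8-bit pattern into a float for 'e5m2' or 'e4m3' (illustrative helper)."""
    exp_bits, man_bits, bias = {"e5m2": (5, 2, 15), "e4m3": (4, 3, 7)}[fmt]
    sign = -1.0 if (bits >> 7) & 1 else 1.0
    exp = (bits >> man_bits) & ((1 << exp_bits) - 1)
    man = bits & ((1 << man_bits) - 1)
    exp_max = (1 << exp_bits) - 1
    man_max = (1 << man_bits) - 1

    if fmt == "e5m2" and exp == exp_max:
        # All-ones exponent: infinity when the mantissa is 0, NaN otherwise.
        return sign * math.inf if man == 0 else math.nan
    if fmt == "e4m3" and exp == exp_max and man == man_max:
        # E4M3 has no infinities; only S.1111.111 encodes NaN.
        return math.nan
    if exp == 0:
        # Zero and subnormals: no implicit leading 1, fixed exponent 1 - bias.
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    # Normal numbers: implicit leading 1.
    return sign * (1 + man / (1 << man_bits)) * 2.0 ** (exp - bias)

if __name__ == "__main__":
    print(decode_fp8(0b0_11110_11, "e5m2"))  # max normal, E5M2
    print(decode_fp8(0b0_1111_110, "e4m3"))  # max normal, E4M3
    print(decode_fp8(0b0_0000_111, "e4m3"))  # max subnormal, E4M3
```

Note that E4M3 reuses the all-ones exponent for normal values, which is why its maximum (448.0) sits one mantissa step below the NaN encoding.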

Follow the instructions below to install the FP8 Emulation Toolkit in a Python virtual environment. Alternatively, the installation can be performed in a Docker environment.

Install or upgrade the following packages on your Linux machine.

- Python >= 3.8.5

- CUDA >= 11.1

- gcc >= 8.4.0

Make sure these are the versions found on your `$PATH`.
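A quick way to see what is visible on `$PATH` is a small script like the one below. This is only a sketch, not something shipped with the toolkit: `check_prerequisites` is a hypothetical helper, and it assumes that `nvcc` is the CUDA binary to look for. It checks the Python version and whether the tools are present, but it does not verify the gcc or CUDA version numbers.

```python
import shutil
import sys

def check_prerequisites(tools=("gcc", "nvcc")):
    """Return a dict mapping each requirement to True (satisfied) or False."""
    # The Python version check applies to the interpreter running this script.
    status = {"python>=3.8.5": sys.version_info >= (3, 8, 5)}
    for tool in tools:
        # shutil.which returns None when the executable is not on $PATH.
        status[tool] = shutil.which(tool) is not None
    return status

if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

If a tool is reported missing, run `gcc --version` or `nvcc --version` by hand after fixing `$PATH` to confirm the version requirement.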

- CPU >= Icelake Xeon

- GPU >= V100

```bash
# create and activate a virtual environment
$ python3 -m venv ~/py-venv
$ cd ~/py-venv
$ source bin/activate
$ pip3 install --upgrade pip

# fetch the toolkit and install it with its dependencies
$ git clone https://github.com/IntelLabs/FP8-Emulation-Toolkit.git
$ cd FP8-Emulation-Toolkit
$ pip3 install -r requirements.txt
$ python setup.py install
```

You can experiment with the emulated FP8 formats by integrating them into standard deep learning flows. Please check the examples folder for code samples. The following example demonstrates the post-training quantization flow for converting pre-trained models to use FP8 for inference.

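```python
# import the emulator
from mpemu import mpt_emu

...

# layers exempt from e4m3 conversion
list_exempt_layers = ["conv1", "fc"]

# A positional argument cannot follow a keyword argument in Python.
# `hw_patch` is assumed to be the keyword for the "None" argument;
# confirm it against the signature of mpt_emu.quantize_model.
model, emulator = mpt_emu.quantize_model(model, dtype="e4m3_rne", hw_patch="None",
                                         list_exempt_layers=list_exempt_layers)

# calibrate the model for a few batches of training data
# (evaluate() is a helper not shown here; replace the placeholder with an integer)
evaluate(model, criterion, train_loader, device,
         num_batches=<num_calibration_batches>, train=True)

# Fuse BatchNorm layers and quantize the model
model = emulator.fuse_layers_and_quantize_model(model)

# Evaluate the quantized model
evaluate(model, criterion, test_loader, device)
```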

An example demonstrating post-training quantization can be found here.
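To give a feel for what a data type such as `e4m3_rne` does to an individual value, the following pure-Python sketch rounds a float to the nearest representable FP8 value. It is not the toolkit's implementation. It assumes that the `_rne` suffix means round-to-nearest-even and that out-of-range values saturate to the largest normal number; `quantize_fp8` and its parameters are hypothetical names used only here.

```python
import math

def quantize_fp8(x: float, man_bits: int = 3, bias: int = 7,
                 max_normal: float = 448.0) -> float:
    """Round x to the nearest FP8 value (defaults describe E4M3), ties to even."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = math.copysign(1.0, x)
    # Assumption: values beyond the representable range saturate to max_normal.
    mag = min(abs(x), max_normal)
    # frexp gives mag = m * 2**e with 0.5 <= m < 1, so floor(log2(mag)) == e - 1.
    _, e = math.frexp(mag)
    # Subnormals all share the exponent of the smallest normal number (1 - bias).
    exponent = max(e - 1, 1 - bias)
    # Spacing between neighbouring representable values in this binade.
    step = 2.0 ** (exponent - man_bits)
    # Python's round() rounds exact halves to the nearest even integer.
    return sign * round(mag / step) * step

if __name__ == "__main__":
    for value in (0.3, 1.0625, 1.1875, 1000.0, 0.001):
        print(value, "->", quantize_fp8(value))
    # E5M2 uses 2 mantissa bits, bias 15, and a maximum normal value of 57344.
    print(quantize_fp8(0.3, man_bits=2, bias=15, max_normal=57344.0))
```

Saturating before rounding keeps large inputs from landing on the E4M3 NaN encoding, which sits one step above 448.0.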