What I have accomplished cannot be reversed

The English documentation was generated by GPT-3.5.

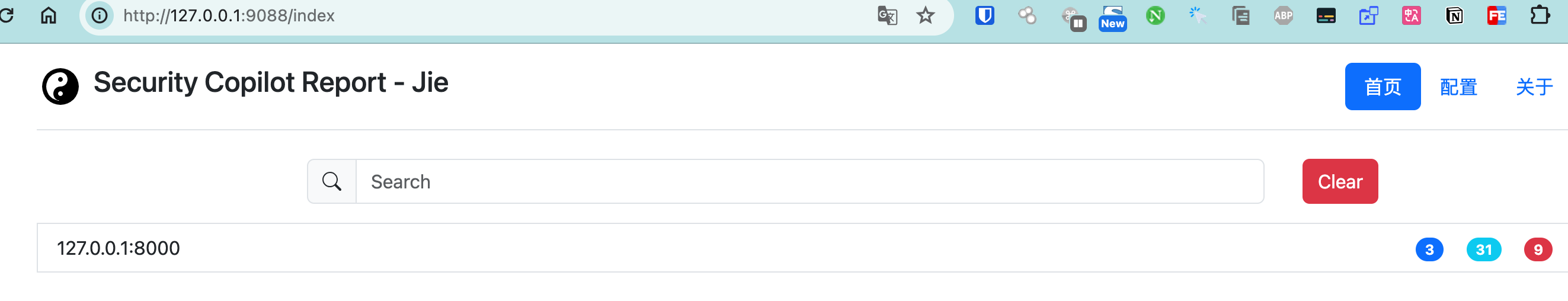

Analyze and scan traffic by using active crawler mode or passive proxy.

Please read the documentation carefully before use.

- nmap

- masscan

- chromium

Make sure the programs above are installed on your machine before using Jie.

If you do not want to install nmap and masscan, use `-nps` to skip port scanning and turn off this check.

Three built-in crawler modes are available:

| Mode | Corresponding Parameter |
|---|---|
| crawlergo crawler (headless browser mode) | `--craw c` |
| katana crawler, default (standard crawling mode, using Go's standard HTTP library to handle requests and responses) | `--craw k` |
| katana crawler (headless browser mode) | `--craw kh` |

In headless mode, specify `--show` to display the browser while it crawls.

In active mode, specifying `--copilot` enters Security Copilot mode: the program does not exit after the scan finishes, so you can conveniently view the results page in the web interface.

```bash
./Jie web -t https://public-firing-range.appspot.com/ -p xss -o vulnerability_report.html --copilot
```

If no username and password are specified for the web interface, credentials of the form `yhy/<random password>` are generated automatically and printed in the logs. For example, the following was generated automatically:

```
INFO [cmd:webscan.go(glob):55] Security Copilot web report authorized:yhy/3TxSZw8t8w
```

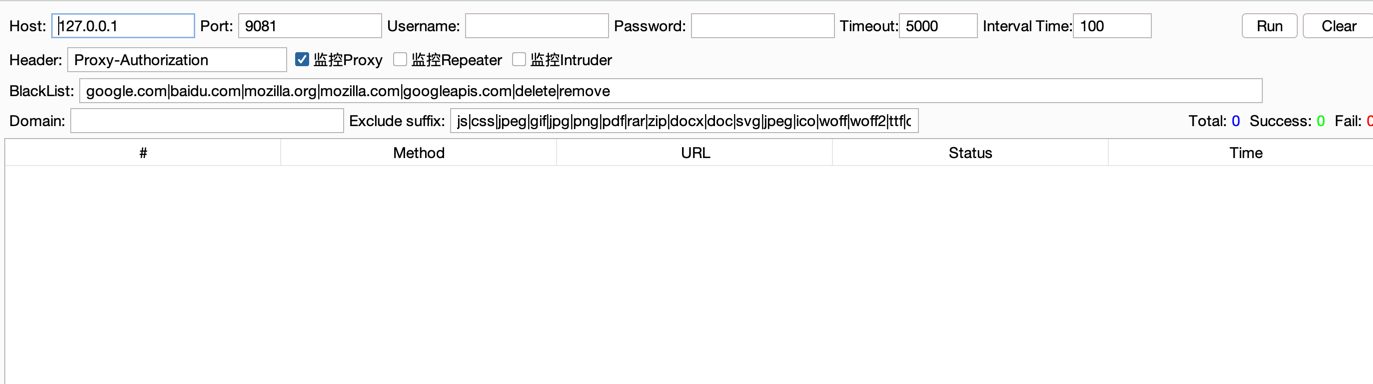

Passive proxy is implemented through go-mitmproxy.

Why is it called Security Copilot? In my view, this is not just a vulnerability scanner but a comprehensive assistant.

With the scanner running as a proxy, browse through the website once. Even if no vulnerabilities are found, it should still give me an overview of the site (fingerprints, CDN, port information, sensitive information, API paths, subdomains, etc.) to support further manual exploration and help uncover vulnerabilities, rather than just finishing the scan and leaving everything to be re-evaluated manually.

HTTPS websites require a certificate to be installed when using the passive proxy. The HTTPS certificate logic is compatible with mitmproxy: the certificate is generated automatically the first time the command is run and is saved at `~/.mitmproxy/mitmproxy-ca-cert.pem`.

Install this root certificate. For the installation steps, see "About Certificates" in the (Python) mitmproxy documentation.

```bash
./Jie web --listen :9081 --web 9088 --user yhy --pwd 123 --debug
```

This listens for proxy traffic on port 9081 and serves the web interface (Security Copilot) on port 9088.

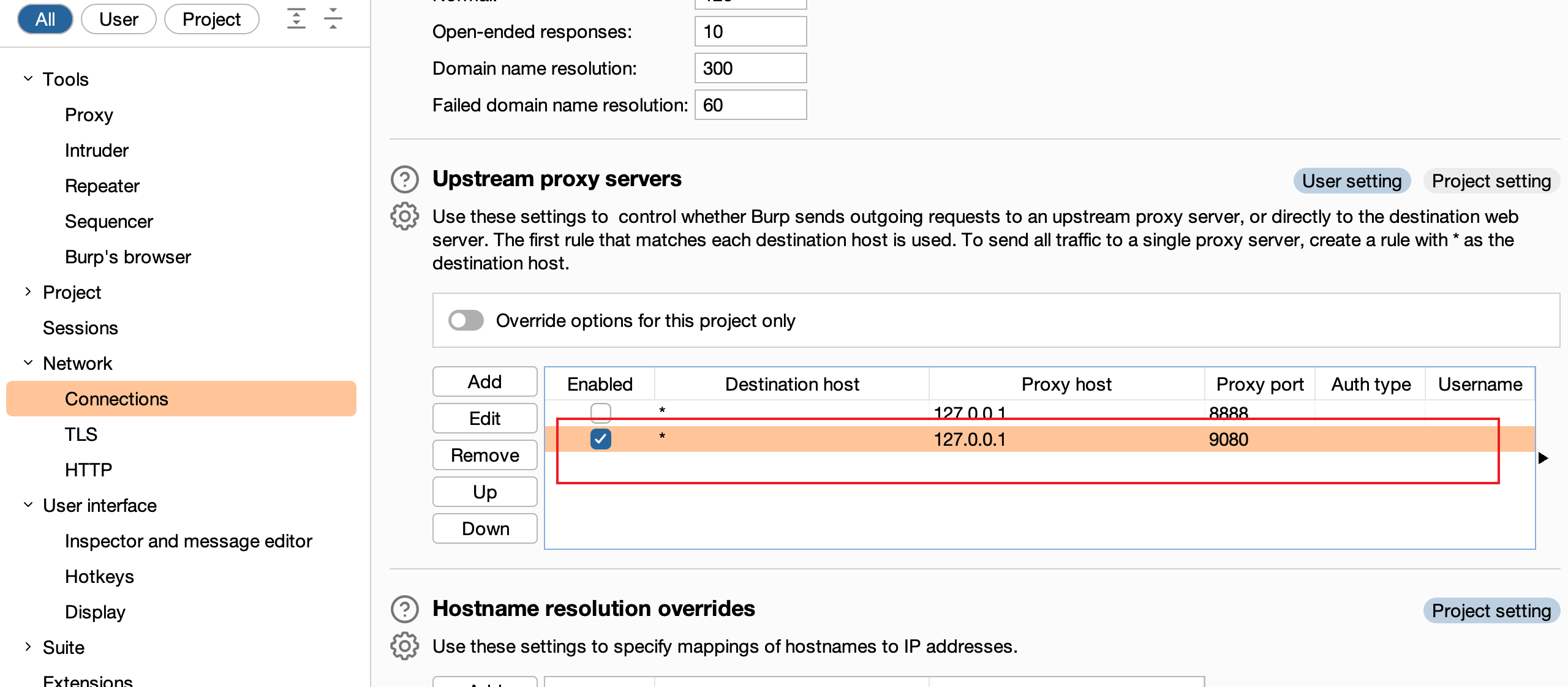

Set your browser's proxy to port 9081, or chain it with Burp by using Jie as Burp's upstream proxy.

Some settings can be changed in `Jie_config.yaml` or on the configuration page at https://127.0.0.1:9088/ (changes made in the web interface are written to the configuration file in real time).

```
./Jie web -h
Flags:
      --copilot           Blocking program, go to the default port 9088 to view detailed scan information.
                          In active mode, this flag keeps the program running after the scan finishes, so you can view the results on the web port.
  -h, --help              help for web
      --listen string     use proxy resource collector, value is proxy addr, (example: 127.0.0.1:9080).
                          Proxy address to listen on in passive mode; default is 127.0.0.1:9080.
      --np                not run plugin.
                          Disable all plugins.
  -p, --plugin strings    Vulnerable Plugin, (example: --plugin xss,csrf,sql,dir ...)
                          Plugins to enable; specify 'all' to enable all plugins.
      --poc strings       specify the nuclei poc to run, separated by ','(example: test.yml,./test/*).
                          Paths to custom nuclei vulnerability templates.
      --pwd string        Security Copilot web report authorized pwd.
                          Web UI login password; a random password is generated if not specified.
      --show              specifies whether to show the browser in headless mode.
                          Whether to show the browser during active scanning.
      --user string       Security Copilot web report authorized user, (example: yhy).] (default "yhy")
                          Web UI login username; default is yhy.
      --web string        Security Copilot web report port, (example: 9088)]. (default "9088")
                          Web UI port; default is 9088.

Global Flags:
      --debug             debug
  -f, --file string       target file
  -o, --out string        output report file(eg:vulnerability_report.html)
      --proxy string      proxy, (example: --proxy https://127.0.0.1:8080)
  -t, --target string     target
```

Download the program for your platform from https://github.com/yhy0/Jie/releases/latest. The entire build process runs automatically on GitHub Actions, so feel free to use it.

Simply run `make` to compile, or build manually:

```bash
export CGO_ENABLED=1; go build -ldflags "-s -w" -o Jie main.go
```

Freely choose which scanner to use via the three monitoring switches. Note: JavaScript and CSS requests should also go through the scanner so that information can be collected from them.

When Jie is set as Burp's upstream proxy, traffic from the Intruder and Repeater modules also passes through the scanner.

This means all traffic from manual testing is scanned as well, which may not be desirable, so enable it only as needed.

Based on the traffic collected passively or actively, each plugin decides internally whether a target has already been scanned. (TODO: should the scanning plugins run in a particular order?)
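One way to make "already scanned" independent of where the traffic came from is a shared record keyed by plugin and a normalized request. The sketch below is an assumption for illustration, not Jie's actual bookkeeping: the hypothetical `dedupKey` keeps the method, scheme, host, path and sorted parameter names (dropping values), so `?id=1` and `?id=2` count as the same target.

```go
package main

import (
	"fmt"
	"net/url"
	"sort"
	"strings"
	"sync"
)

// dedupKey (hypothetical) normalizes a request to method + scheme://host/path +
// sorted parameter names, so requests differing only in parameter values match.
func dedupKey(method, rawURL string) (string, error) {
	u, err := url.Parse(rawURL)
	if err != nil {
		return "", err
	}
	names := make([]string, 0)
	for name := range u.Query() {
		names = append(names, name)
	}
	sort.Strings(names)
	return method + " " + u.Scheme + "://" + u.Host + u.Path + "?" + strings.Join(names, "&"), nil
}

// scanRecord remembers which (plugin, key) pairs have already been scanned.
// It is shared by passive and active collection, so the traffic source does not matter.
type scanRecord struct {
	mu   sync.Mutex
	seen map[string]struct{}
}

func newScanRecord() *scanRecord {
	return &scanRecord{seen: make(map[string]struct{})}
}

// firstTime reports whether plugin has not handled key yet, and marks it as handled.
func (r *scanRecord) firstTime(plugin, key string) bool {
	r.mu.Lock()
	defer r.mu.Unlock()
	k := plugin + "|" + key
	if _, ok := r.seen[k]; ok {
		return false
	}
	r.seen[k] = struct{}{}
	return true
}

func main() {
	rec := newScanRecord()
	k1, _ := dedupKey("GET", "http://example.com/item?id=1&cat=2") // e.g. seen by the proxy
	k2, _ := dedupKey("GET", "http://example.com/item?cat=9&id=5") // e.g. found by the crawler
	fmt.Println(k1)                       // GET http://example.com/item?cat&id
	fmt.Println(rec.firstTime("xss", k1)) // true
	fmt.Println(rec.firstTime("xss", k2)) // false: same parameters, already scanned
	fmt.Println(rec.firstTime("sql", k2)) // true: different plugin
}
```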

- Website fingerprint information

- Aggregated display of URLs requested by each website

- Website domain information: CDN/WAF/cloud provider, DNS resolution records

- JWT secret brute-forcing (TODO: automatically generate a dictionary based on the domain name)

- Sensitive information

- Active path scanning (bbscan rules with an added fingerprint field: a rule that specifies a fingerprint is only used when that fingerprint is detected, so, for example, Spring Boot rules are not scanned against PHP websites)

- Port information

- Collect domain names, IPs, APIs
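The JWT brute-forcing item boils down to a dictionary attack on HMAC-signed tokens. A minimal sketch, assuming an HS256 token and a caller-supplied word list; it ignores the token's `alg` header, does not implement the domain-based dictionary from the TODO, and the names `bruteForceHS256` and `signHS256` are hypothetical rather than Jie's API.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/base64"
	"errors"
	"fmt"
	"strings"
)

// bruteForceHS256 tries each candidate secret against an HS256-signed JWT and
// returns the first one whose HMAC-SHA256 over "header.payload" matches the signature.
func bruteForceHS256(token string, candidates []string) (string, error) {
	parts := strings.Split(token, ".")
	if len(parts) != 3 {
		return "", errors.New("not a compact JWS token")
	}
	sig, err := base64.RawURLEncoding.DecodeString(parts[2])
	if err != nil {
		return "", err
	}
	signingInput := []byte(parts[0] + "." + parts[1])
	for _, secret := range candidates {
		mac := hmac.New(sha256.New, []byte(secret))
		mac.Write(signingInput)
		if hmac.Equal(mac.Sum(nil), sig) {
			return secret, nil
		}
	}
	return "", errors.New("secret not found in dictionary")
}

// signHS256 builds an HS256 token so the example is self-contained.
func signHS256(headerJSON, payloadJSON, secret string) string {
	enc := base64.RawURLEncoding
	input := enc.EncodeToString([]byte(headerJSON)) + "." + enc.EncodeToString([]byte(payloadJSON))
	mac := hmac.New(sha256.New, []byte(secret))
	mac.Write([]byte(input))
	return input + "." + enc.EncodeToString(mac.Sum(nil))
}

func main() {
	token := signHS256(`{"alg":"HS256","typ":"JWT"}`, `{"sub":"admin"}`, "secret123")
	secret, err := bruteForceHS256(token, []string{"admin", "password", "secret123"})
	fmt.Println(secret, err) // secret123 <nil>
}
```

`hmac.Equal` is used for the comparison out of habit; timing safety does not matter for an offline attack, but it is the idiomatic way to compare MACs.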

Some scans use the collected fingerprint information to identify the language environment, so that, for example, Java scanning plugins are not run against PHP websites.

The `scan` directory is the plugin library; plugins in each subdirectory handle a different scope:

- PerFile: For each URL, including parameters, etc.

- PerFolder: For each directory of the URL; every directory is requested separately

- PerServer: For each domain, meaning a target is only scanned once

| Plugin | Description | Default On | Scope |
|---|---|---|---|
| xss | Semantic analysis, prototype pollution, DOM pollution point propagation analysis | true | PerFile |
| sql | Currently only implements some simple SQL injection detection | true | PerFile |
| sqlmap | Forwards traffic to sqlmap through the specified sqlmap API for injection detection | false | PerFile |
| ssrf | | true | PerFile |
| jsonp | | true | PerFile |
| cmd | Command execution | true | PerFile |
| xxe | | true | PerFile |
| fastjson | When a request is detected as JSON, it is checked for fastjson using an adapted version of @a1phaboy's FastjsonScan; jackson is not implemented yet | true | PerFile |
| bypass403 | 403 bypass detection based on dontgo403 | true | PerFile |
| crlf | CRLF injection | true | PerFolder |
| iis | Short filename guessing for newer IIS versions (iis7.5-10.x-ShortNameFuzz) | false | PerFolder |
| nginx-alias-traversal | Directory traversal caused by Nginx misconfiguration | true | PerFolder |
| log4j | log4j vulnerability detection; currently only tests request headers | true | PerFolder |
| bbscan | Directory scanning with bbscan rules | true | PerFolder; PerServer (for rules that specify the root directory) |
| portScan | Uses naabu to scan the top 1000 ports, then fingerprintx to identify services | false | PerServer |
| brute | If service brute-forcing is enabled, it runs after port scanning detects a service | | PerServer |
| nuclei | Integrated nuclei | false | PerServer |
| archive | Uses https://web.archive.org/ to obtain historical URLs (with parameters) and then scans them | true | PerServer |
| poc | POC module written in Go. It relies on fingerprint recognition, and scanning only occurs when the corresponding fingerprint is recognized. No longer implemented as a plugin | false | PerServer |
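The "Default On" column and the `-p`/`--plugin` flag (which also accepts `all`) interact when deciding which plugins run. The sketch below is a hypothetical model of that resolution, not Jie's implementation; the precedence (no `-p` means use the defaults, an explicit list replaces them) and rejecting unknown names are assumptions.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// resolvePlugins is a hypothetical sketch of turning -p/--plugin values into an
// enabled map. Assumptions: no -p values means "use the Default On column",
// "all" enables everything, and an unknown name is an error.
func resolvePlugins(flagValues []string, defaults map[string]bool) (map[string]bool, error) {
	enabled := make(map[string]bool, len(defaults))
	if len(flagValues) == 0 {
		for name, on := range defaults {
			enabled[name] = on
		}
		return enabled, nil
	}
	// An explicit list starts from "everything off".
	for name := range defaults {
		enabled[name] = false
	}
	for _, v := range flagValues {
		name := strings.TrimSpace(v)
		if name == "all" {
			for n := range defaults {
				enabled[n] = true
			}
			continue
		}
		if _, ok := defaults[name]; !ok {
			return nil, fmt.Errorf("unknown plugin %q", name)
		}
		enabled[name] = true
	}
	return enabled, nil
}

func main() {
	// A few rows of the table above: plugin name -> Default On.
	defaults := map[string]bool{"xss": true, "sql": true, "sqlmap": false, "nuclei": false, "portScan": false}
	enabled, err := resolvePlugins([]string{"xss", "nuclei"}, defaults)
	if err != nil {
		panic(err)
	}
	var names []string
	for name, on := range enabled {
		if on {
			names = append(names, name)
		}
	}
	sort.Strings(names)
	fmt.Println(strings.Join(names, ",")) // nuclei,xss
}
```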

TODO: add multiple user cookies for authorization (access control) testing. (Testing this with a dedicated Burp plugin seems better, so it may not be needed here.)

Jie can also be used as a library, for example:

```go
package main

import (
	"net/url"

	"github.com/logrusorgru/aurora"
	"github.com/yhy0/Jie/SCopilot"
	"github.com/yhy0/Jie/conf"
	"github.com/yhy0/Jie/crawler"
	"github.com/yhy0/Jie/pkg/mode"
	"github.com/yhy0/Jie/pkg/output"
	"github.com/yhy0/logging"
)

/**
  @author: yhy
  @since: 2023/12/28
  @desc: //TODO
**/

func lib() {
	logging.Logger = logging.New(conf.GlobalConfig.Debug, "", "Jie", true)
	conf.Init()
	conf.GlobalConfig.Http.Proxy = ""
	conf.GlobalConfig.WebScan.Craw = "k"
	conf.GlobalConfig.WebScan.Poc = nil
	conf.GlobalConfig.Reverse.Host = "https://dig.pm/"
	conf.GlobalConfig.Passive.WebPort = "9088"
	conf.GlobalConfig.Passive.WebUser = "yhy"
	conf.GlobalConfig.Passive.WebPass = "123456" // Remember to change to a strong password

	// Enable all plugins
	for k := range conf.Plugin {
		// if k == "nuclei" || k == "poc" {
		//     continue
		// }
		conf.Plugin[k] = true
	}

	// Start the Security Copilot web interface
	if conf.GlobalConfig.Passive.WebPort != "" {
		go SCopilot.Init()
	}

	// Initialize crawler
	crawler.NewCrawlergo(false)

	// Consume scan results
	go func() {
		for v := range output.OutChannel {
			// Show in SCopilot
			if conf.GlobalConfig.Passive.WebPort != "" {
				parse, err := url.Parse(v.VulnData.Target)
				if err != nil {
					logging.Logger.Errorln(err)
					continue
				}
				msg := output.SCopilotData{
					Target: v.VulnData.Target,
				}
				if v.Level == "Low" {
					// Low-severity findings are shown as informational messages
					msg.InfoMsg = []output.PluginMsg{
						{
							Url:      v.VulnData.Target,
							Plugin:   v.Plugin,
							Result:   []string{v.VulnData.Payload},
							Request:  v.VulnData.Request,
							Response: v.VulnData.Response,
						},
					}
				} else {
					msg.VulMessage = append(msg.VulMessage, v)
				}
				output.SCopilot(parse.Host, msg)
			}
			// Print each result to the console once
			logging.Logger.Infoln(aurora.Red(v.PrintScreen()).String())
		}
	}()

	// Start an active scan against the target
	mode.Active("https://testphp.vulnweb.com/", nil)
}

// Entry point: run the library example
func main() {
	lib()
}
```

This part is still under development; even I have to read the code for help information. Detailed documentation will be written once it is finished.

Because most vulnerability exploitation tools are written in Java and require different Java versions, setting up their environments is cumbersome and frustrating, so Jie has been redefined.

Jie: A comprehensive and powerful vulnerability scanning and exploitation tool.

The current version (1.0.0) supports exploitation of the following vulnerabilities:

```
A Powerful security assessment and utilization tools

Usage:
  Jie [command]

Available Commands:
  apollo      apollo scan && exp
  fastjson    fastjson scan && exp
  help        Help about any command
  log4j       log4j scan && exp
  other       other scan && exp bb:BasicBrute、swagger:Swagger、nat:NginxAliasTraversal、dir:dir)
  s2          Struts2 scan && exp
  shiro       Shiro scan && exp
  web         Run a web scan task
  weblogic    WebLogic scan && exp

Flags:
      --debug           debug
  -f, --file string     target file
  -h, --help            help for Jie
  -o, --out string      output report file(eg:vulnerability_report.html)
      --proxy string    proxy, (example: --proxy https://127.0.0.1:8080)
  -t, --target string   target

Use "Jie [command] --help" for more information about a command.
```

For example, exploiting the Shiro key vulnerability:

```bash
# Without -m, it defaults to brute-forcing the key and the exploitation chain
Jie shiro -t https://127.0.0.1

# Exploitation
Jie shiro -t https://127.0.0.1 -m exp -k 213123 -g CCK2 -e spring -km CBC --cmd whoami
```

More exploits will be supported later.

Jie stitches together various tools written by other researchers; some of them are credited in the descriptions of the corresponding scanning and exploitation features. If anything is missing, please contact me and I will add it.

https://github.com/lqqyt2423/go-mitmproxy

Semantic analysis, prototype pollution, DOM pollution point propagation analysis

https://github.com/w-digital-scanner/w13scan

https://github.com/ac0d3r/xssfinder

https://github.com/kleiton0x00/ppmap

Only the detection-related code was extracted from sqlmap.

Detection through fingerprint recognition

TODO: stop embedding nuclei's yml files; download and update them online from the official source instead.

https://github.com/projectdiscovery/nuclei

Some POCs in xray are written incorrectly, which causes parsing problems and would need to be fixed. For example, in `response.status == 200 && response.headers["content-type"] == "text/css" && response.body.bcontains(b"$_GET['css']")`, `content-type` should be `Content-Type`, but it also seems there is a parsing problem.

Therefore xray's POCs are not used, only nuclei's yml files. Since the POCs would need to be organized anyway to prevent duplicate scanning, the nuclei-templates POCs are enough.

https://github.com/wrenchonline/glint

https://github.com/veo/vscan

https://github.com/mazen160/secrets-patterns-db

https://github.com/pingc0y/URLFinder

https://github.com/a1phaboy/FastjsonScan

https://github.com/w-digital-scanner/w13scan

https://github.com/SleepingBag945/dddd

This code is distributed under the AGPL-3.0 license. See LICENSE in this directory.

Thanks to the open-source projects and blog posts of many experts in the community, and to JetBrains for supporting this project with its excellent IDEs.