
- Added a local large model node, currently compatible with GLM and LLAMA. However, LLAMA cannot be used for tool invocations because the native LLAMA does not include this functionality. To load a model locally, provide the absolute paths to the tokenizer and model folders in the node.

- Introduced a code interpreter tool.

- Enabled file loading with the option to input absolute paths.

- Expanded the components available for the large model node, giving you more choices.

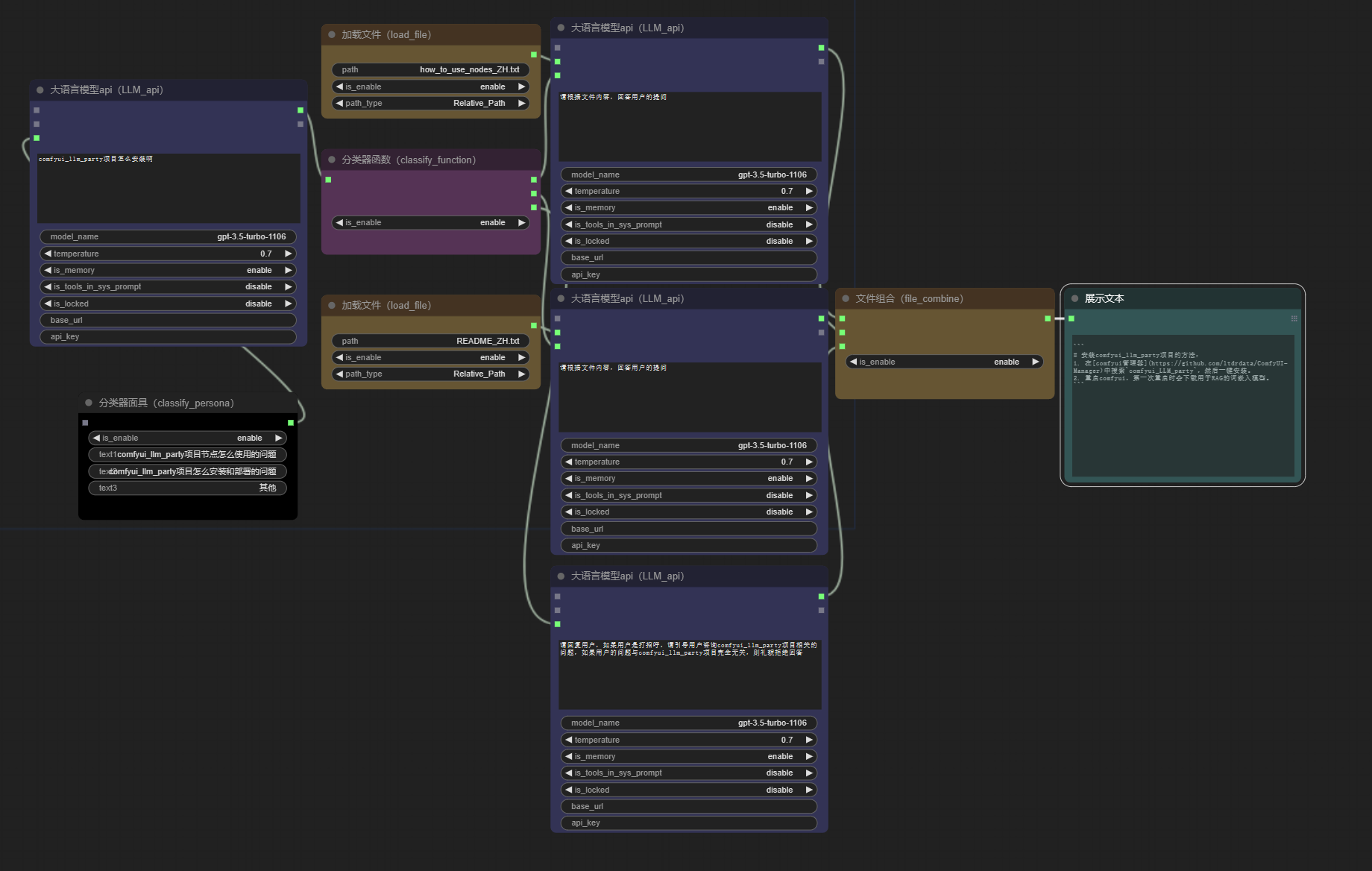

ComfyUI is an extremely minimalist UI, primarily used for AI drawing and other workflows based on the SD model. This project aims to develop a complete set of nodes for building LLM workflows on top of comfyui. It allows users to quickly and conveniently build their own LLM workflows and easily integrate them into their existing SD workflows. (The image shows an intelligent customer service workflow built with the nodes of this project. For more example workflows, please check the workflow folder.)

Building a Modular AI with ComfyUI×LLM: A Step-by-Step Tutorial (Super Easy!)

- You can right-click in the comfyui interface, select `llm` from the context menu, and you will find the nodes for this project. See: how to use nodes.

- Supports OpenAI API driving and a custom `base_url`, allowing the use of a proxy API to drive LLM nodes. If you are using other large model interfaces, you can convert them to the OpenAI API format using openai-style-api. Please select the LLM_local node for local deployment. Currently, both GLM and LLAMA have been adapted, but LLAMA cannot be used for tool invocations because the native LLAMA does not include this functionality.

- The `base_url` must end with `/v1/`.

- Supports importing various file types into LLM nodes. With RAG technology, the LLM can answer questions based on file content. Currently supported file types include: .docx, .xlsx, .csv, .txt, .py, .js, .java, .c, .cpp, .html, .css, .sql, .r, .swift

- The tool combine node allows multiple tools to be passed into the LLM node, and the file combine node allows multiple files to be passed into the LLM node.

- Supports Google search and single web page search, enabling LLM to perform online queries.

- Through the start_dialog node and the end_dialog node, a loopback link can be established between two LLMs, meaning the two LLMs act as each other’s input and output!

- It is recommended to use the show_text node from ComfyUI-Custom-Scripts in conjunction with the LLM node for output display.
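
Two of the constraints listed above (the `base_url` suffix and the supported file types) are easy to check before wiring up a workflow. The following is a minimal sketch, not code from this project: the helper names `normalize_base_url` and `is_supported_file` are hypothetical, and appending `/v1` when it is missing is an assumption that may not suit every proxy API.

```python
from pathlib import Path

# File extensions listed above as supported by the LLM nodes.
SUPPORTED_EXTENSIONS = {
    ".docx", ".xlsx", ".csv", ".txt", ".py", ".js", ".java",
    ".c", ".cpp", ".html", ".css", ".sql", ".r", ".swift",
}


def normalize_base_url(base_url: str) -> str:
    """Return base_url trimmed of whitespace and ending with /v1/.

    Assumption: if the URL does not already end with /v1, it is appended.
    """
    url = base_url.strip().rstrip("/")
    if not url.endswith("/v1"):
        url += "/v1"
    return url + "/"


def is_supported_file(path: str) -> bool:
    """Check a file's extension against the supported list (case-insensitive)."""
    return Path(path).suffix.lower() in SUPPORTED_EXTENSIONS


if __name__ == "__main__":
    print(normalize_base_url("https://api.openai.com/v1"))
    print(is_supported_file("report.docx"))
```

Running such a check first can surface a malformed `base_url` or an unsupported file before the node itself reports an error.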

Baidu Cloud Download (Recommended! Includes a compressed package of comfyui with the environment setup completed, and a folder for this project. After downloading the former, there’s no need for further environment configuration!)

Or install using one of the following methods:

- Search for comfyui_LLM_party in the comfyui manager and install it with one click.

- Restart comfyui.

- Navigate to the `custom_nodes` subfolder under the ComfyUI root folder.

- Clone this repository with `git clone https://github.com/heshengtao/comfyui_LLM_party.git`.

- Click `CODE` in the upper right corner.

- Click `download zip`.

- Unzip the downloaded package into the `custom_nodes` subfolder under the ComfyUI root folder.

- Navigate to the `comfyui_LLM_party` project folder.

- Enter `pip install -r requirements.txt` in the terminal to deploy the third-party libraries required by the project into the comfyui environment. Please ensure you are installing within the comfyui environment and pay attention to any `pip` errors in the terminal.

- If you are using the comfyui launcher, you need to enter `path_in_launcher_configuration\python_embeded\python.exe path_in_launcher_configuration\python_embeded\Scripts\pip.exe install -r requirements.txt` in the terminal to install. The `python_embeded` folder is usually at the same level as your `ComfyUI` folder.
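
One common way to make sure the packages land in the intended environment is to run pip through a specific interpreter with `python -m pip`. The sketch below is not part of this project and is a different technique from the commands above. The helper names are hypothetical, and it assumes the script is executed with the same Python interpreter that comfyui uses.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_pip_command(requirements: Path, python: str = sys.executable) -> List[str]:
    """Build a pip install command that runs under the given interpreter."""
    return [python, "-m", "pip", "install", "-r", str(requirements)]


def install_requirements(project_dir: Path) -> None:
    """Install requirements.txt from project_dir; raises if pip reports an error."""
    requirements = project_dir / "requirements.txt"
    if not requirements.is_file():
        raise FileNotFoundError(requirements)
    # check=True turns a non-zero pip exit code into CalledProcessError,
    # so installation errors are not silently ignored.
    subprocess.run(build_pip_command(requirements), check=True)


if __name__ == "__main__":
    # Assumes the current working directory is the comfyui_LLM_party folder.
    install_requirements(Path.cwd())
```

Because the command is built from `sys.executable`, the packages are installed for whichever interpreter runs the script, which helps avoid installing into a different Python by accident.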

Configure the APIKEY using one of the following methods:

- Open the `config.ini` file in the `comfyui_LLM_party` project folder.

- Enter your `openai_api_key` and `base_url` in `config.ini`.

- If you want to use the Google search tool, enter your `google_api_key` and `cse_id` in `config.ini`.
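
To illustrate how the four keys named above might be read from an INI file, here is a minimal sketch using Python's standard `configparser`. The section name `API_KEYS`, the placeholder values, and the `load_keys` helper are assumptions made for illustration; check the `config.ini` shipped with the project for its actual layout.

```python
import configparser

# Hypothetical file contents: the section name and values are placeholders.
SAMPLE_CONFIG = """
[API_KEYS]
openai_api_key = sk-your-key
base_url = https://api.openai.com/v1/
google_api_key = your-google-key
cse_id = your-cse-id
"""

KEY_NAMES = ("openai_api_key", "base_url", "google_api_key", "cse_id")


def load_keys(text: str, section: str = "API_KEYS") -> dict:
    """Parse INI text and return the four keys, using "" for any that are missing."""
    # interpolation=None keeps "%" characters in keys or URLs literal.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return {name: parser.get(section, name, fallback="") for name in KEY_NAMES}


if __name__ == "__main__":
    keys = load_keys(SAMPLE_CONFIG)
    print(keys["base_url"])
```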

- Open the comfyui interface.

- Create a new Large Language Model (LLM) node and directly enter your `openai_api_key` and `base_url` in the node.

- Create a new Google Search Tool (google_tool) node and directly enter your `google_api_key` and `cse_id` in the node.

- More common utility nodes, such as: code interpreters, text-to-speech output, recognition of text information in images, etc.

- Allow an LLM to internally call a subordinate LLM, giving the assistant its own assistant.

- New nodes that can connect with the numerous SD nodes in comfyui, expanding the possibilities for LLM and SD, and providing related workflows.