Many real-world scenarios demand interpretable NLP models. Despite the variety of interpretation methods, it is often hard to guarantee that their interpretations truly reflect the model's decision. Rationale extraction, in contrast, first extracts the relevant part of the input (the rationale) and then feeds it as the only input to the predictor. This ensures that the rationale is strictly faithful.

In this project, I implemented FRESH (Jain et al., 2020) for rationale extraction in sentiment classification, using KR3: Korean Restaurant Review with Ratings. Discontiguous (Top-k) rationales attained performance comparable to the original paper, easily outperforming the random baseline. Unfortunately, this wasn't true for contiguous rationales. It is also worth noting that rationale extraction would likely do better in more suitable settings; this is discussed in the section Limits.

Presentation slides used in the class.

As deep learning revolutionizes the field of NLP, researchers are also calling for interpretability. Interpretability is often used to confirm other important desiderata that are hard to codify, such as fairness, trust, and causality (Doshi-Velez and Kim, 2017). The term "interpretability" should be used with caution, though, as it is not a monolithic concept but in fact reflects several distinct ideas (Lipton, 2018). Two major aspects of interpretability often discussed in NLP are faithfulness and plausibility (Wiegreffe and Pinter, 2019; Jacovi and Goldberg, 2020; DeYoung et al., 2020). Faithfulness refers to how accurately the interpretation reflects the true reasoning process of the model, while plausibility refers to how convincing the interpretation is to humans (Jacovi and Goldberg, 2020).

Interpretation can take many forms, but the most common in NLP is the rationale. A rationale is a text snippet from the input text. It is analogous to a saliency map in computer vision.

Some works use attention (Bahdanau et al., 2015) to obtain rationales for interpretation.

However, it is argued that attention cannot provide a faithful interpretation (Jain and Wallace, 2019; Brunner et al., 2020).

Some works use post-hoc methods (Ribeiro et al., 2015; Sundararajan et al., 2017), but these methods also do not ensure faithfulness (Lipton, 2018; Adebayo et al., 2022).

Meanwhile, some claim that strictly faithful interpretation is impossible.

> Explanations must be wrong. They cannot have perfect fidelity with respect to the original model.
>
> Rudin, 2019

> ...we believe strictly faithful interpretation is a 'unicorn' which will likely never be found.
>
> Jacovi and Goldberg, 2020

This is where rationale extraction comes in. Instead of attributing the model's decision to a certain part of the input, this framework first extracts the important part of the input and then feeds it as the only input to the prediction model. In this way, no matter how complex the prediction model is, we can guarantee that the extracted rationale is fully faithful to the model's prediction. Hence, this framework is known to be faithful-by-construction (Jain et al., 2020).

Extracting the rationale, which is essentially assigning a binary mask to every input token, is non-differentiable. To tackle this problem, reinforcement learning was initially proposed (Lei et al., 2016), while more recent works employed reparameterization (Bastings et al., 2019; Paranjape et al., 2020). Another work bypassed the problem by decoupling extraction from prediction, achieving competitive performance while being easy to use (Jain et al., 2020).

Following up on my previous project, KR3: Korean Restaurant Review with Ratings, I applied rationale extraction to sentiment classification.

⚠️ Please note that this dataset and task are probably not the ideal setting for rationale extraction (see the section Limits for more details).

I followed the FRESH (Faithful Rationale Extraction from Saliency tHresholding) framework proposed by Jain et al., 2020.

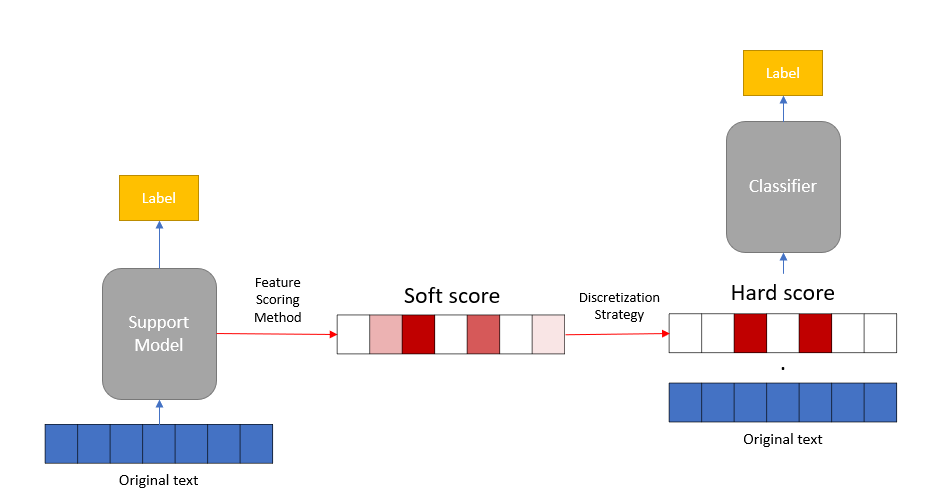

FRESH can be divided into three parts.

- Train a support model end-to-end on the original text.

- Use a feature scoring method to obtain soft (continuous) scores, and then discretize them with a selected strategy.

- Train a classifier on the labels and the extracted rationales.

Since FRESH is a framework, many design choices can be made. The following are some of the choices in the paper, with the choices made in this project marked in bold.

- Support Model: **BERT**

- Feature Scoring Method: **attention**, gradient

- Strategy: **contiguous**, **Top-k**

- Classifier: **BERT**
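The two discretization strategies can be sketched as follows. This is a minimal illustration, not the code in `fresh_extract.py`: it assumes that Top-k keeps the highest-scoring tokens wherever they occur and that contiguous keeps the single fixed-length span with the largest total score, which is how I read Jain et al., 2020. The function names, the `ratio` parameter, and the toy scores are all hypothetical.

```python
from typing import List


def top_k_mask(scores: List[float], ratio: float) -> List[int]:
    """Keep the k highest-scoring tokens, wherever they are (discontiguous)."""
    k = max(1, round(len(scores) * ratio))
    # Indices sorted by descending score; ties are broken by position.
    top = sorted(range(len(scores)), key=lambda i: (-scores[i], i))[:k]
    keep = set(top)
    return [1 if i in keep else 0 for i in range(len(scores))]


def contiguous_mask(scores: List[float], ratio: float) -> List[int]:
    """Keep the one span of k consecutive tokens with the largest total score."""
    n = len(scores)
    k = min(max(1, round(n * ratio)), n)
    # Slide a window of width k over the scores, tracking the best start.
    window = sum(scores[:k])
    best_sum, best_start = window, 0
    for start in range(1, n - k + 1):
        window += scores[start + k - 1] - scores[start - 1]
        if window > best_sum:
            best_sum, best_start = window, start
    return [1 if best_start <= i < best_start + k else 0 for i in range(n)]


# Toy attention scores for a 10-token input (made up for illustration).
scores = [0.05, 0.30, 0.02, 0.03, 0.10, 0.20, 0.22, 0.02, 0.04, 0.02]
print(top_k_mask(scores, 0.2))       # [0, 1, 0, 0, 0, 0, 1, 0, 0, 0]
print(contiguous_mask(scores, 0.2))  # [0, 0, 0, 0, 0, 1, 1, 0, 0, 0]
```

With the same 20% budget, Top-k picks the two individually strongest tokens, while contiguous gives up the single strongest token in exchange for a span whose total score is higher.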

Of the two aspects of interpretability, faithfulness doesn't have to be evaluated, because the framework is faithful by construction. The other aspect, plausibility, requires human evaluation, which I couldn't afford. The results below therefore only show the performance of the model, which is also crucial. If the prediction is faithful but wrong, what's the point?

- All performance metrics are macro F1, so higher is better.

- All metrics attributed to Jain et al., 2020 are taken from the paper as reported, i.e. not reproduced.
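For reference, macro F1 is the unweighted mean of the per-class F1 scores. The sketch below is a plain-Python illustration of that definition, not the evaluation code used in this project; the function name and the toy labels are hypothetical.

```python
from typing import Hashable, Sequence


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of per-class F1 over all labels seen in either sequence."""
    labels = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for label in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores) if f1_scores else 0.0


# Toy example: 1 = positive review, 0 = negative review.
y_true = [1, 1, 1, 0]
y_pred = [1, 1, 0, 0]
print(round(macro_f1(y_true, y_pred), 3))  # 0.733
```

Because each class counts equally, macro F1 does not let a majority class hide poor performance on a minority class.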

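With the Top-k strategy, attention-based rationales clearly outperformed random ones (.82 vs .74). However, for contiguous rationales, there was no significant difference (.75 vs .74).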

| Method | F1 |
|---|---|
| Full text | .93 |
| Top-k; attention | .82 |
| Contiguous; attention | .75 |
| Top-k; random | .74 |
| Contiguous; random | .74 |

- For Top-k rationales, the drop from full text on KR3 (.93 to .82) is similar to that on SST (.90 to .81). For contiguous rationales, however, the drop on KR3 is larger (.93 to .75, vs .90 to .81 on SST).

- It's worth noting that for Movies the drop was marginal, though its rationale ratio is higher.

- SST and Movies were chosen from the evaluation datasets in Jain et al., 2020 because they are sentiment classification datasets, like KR3.

| Dataset (rationale ratio) | Jain - SST (20%) | Jain - Movies (30%) | Ours - KR3 (20%) |
|---|---|---|---|
| Full text | .90 | .95 | .93 |
| Top-k; attention | .81 | .93 | .82 |
| Contiguous; attention | .81 | .94 | .75 |
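The drops discussed here can be read off the table with a little arithmetic. The snippet below just subtracts each rationale score from the full-text score; the numbers are copied from the table, and the dictionary layout and function name are only for illustration.

```python
from typing import Dict

# Macro F1 values copied from the comparison table.
results: Dict[str, Dict[str, float]] = {
    "Jain - SST (20%)": {"full": 0.90, "top_k": 0.81, "contiguous": 0.81},
    "Jain - Movies (30%)": {"full": 0.95, "top_k": 0.93, "contiguous": 0.94},
    "Ours - KR3 (20%)": {"full": 0.93, "top_k": 0.82, "contiguous": 0.75},
}


def drops(row: Dict[str, float]) -> Dict[str, float]:
    """Drop in macro F1 relative to full text, rounded to two decimals."""
    return {
        strategy: round(row["full"] - score, 2)
        for strategy, score in row.items()
        if strategy != "full"
    }


for dataset, row in results.items():
    print(dataset, drops(row))
```

This gives drops of .09/.09 for SST, .02/.01 for Movies, and .11/.18 for KR3 (Top-k/contiguous), so the contiguous drop on KR3 is about twice the SST one.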

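- A classifier trained only on the text remaining after the rationale is removed still reaches .74, the same as the random baseline. This isn’t desirable, because it shows that the rationales are not necessary for the prediction.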

- I suspect this is largely due to the nature of the task, i.e. information is scattered all over the text.

| Method | F1 |
|---|---|
| Full text | .93 |
| Remaining after Top-k | .74 |
| Remaining after Contiguous | .74 |
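To make the "remaining" rows concrete: given a binary mask over tokens, the rationale is the masked-in part and the remainder is its complement. The sketch below assumes the remainder is simply every token with mask value 0; the helper name, the tokens, and the mask are hypothetical and not taken from the project scripts.

```python
from typing import List, Sequence, Tuple


def split_by_mask(tokens: Sequence[str], mask: Sequence[int]) -> Tuple[List[str], List[str]]:
    """Split tokens into (rationale, remainder) according to a 0/1 mask."""
    if len(tokens) != len(mask):
        raise ValueError("tokens and mask must have the same length")
    rationale = [tok for tok, m in zip(tokens, mask) if m == 1]
    remainder = [tok for tok, m in zip(tokens, mask) if m == 0]
    return rationale, remainder


# Hypothetical mask over a fragment of the translated review.
tokens = ["I", "couldn't", "enjoy", "eating", "because", "I", "felt", "sick"]
mask = [0, 1, 1, 0, 0, 0, 1, 1]
rationale, remainder = split_by_mask(tokens, mask)
print(rationale)  # ["couldn't", 'enjoy', 'felt', 'sick']
print(remainder)  # ['I', 'eating', 'because', 'I']
```

If the rationale were truly necessary, a classifier trained on the remainder should do markedly worse than one trained on random tokens, which is not what the table shows.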

Order of Python scripts:

1. `fresh_support_model.py`
2. `fresh_extract.py`
3. `fresh_tokenize.py`
4. `fresh_train.py`

Training logs: Weights & Biases

- Of the original 14345 batches, only 11967 went through extraction successfully with the contiguous strategy (cf. 14171 batches for Top-k).

- The reduced dataset size for the contiguous strategy might have contributed to its poor performance.

- Why don't you fix the bug? -> in fact... (see the next section)
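To put the batch counts above in proportion, here is a small calculation of how much of the data survived extraction under each strategy. The counts are the ones reported above; the function name is just for illustration.

```python
TOTAL_BATCHES = 14345
extracted = {"contiguous": 11967, "top_k": 14171}


def retention(kept: int, total: int) -> float:
    """Fraction of batches that went through extraction successfully."""
    return kept / total


for strategy, kept in extracted.items():
    lost = TOTAL_BATCHES - kept
    print(f"{strategy}: kept {retention(kept, TOTAL_BATCHES):.1%}, lost {lost} batches")
```

This prints roughly 83.4% retained (2378 batches lost) for contiguous and 98.8% retained (174 lost) for Top-k, so the contiguous classifier saw about one sixth less training data.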

Settings I'd prefer for rationale extraction are

- Long text (vs short text)

- Text-based task (vs requires common sense/world knowledge)

- Information needed for the task compressed in a narrow region (vs scattered all over)

Examples of more ideal settings would be

- BoolQ (Clark et al., 2019)

- FEVER (Thorne et al., 2018)

- Evidence Inference (Lehman et al., 2019)

- ECtHR (Chalkidis et al., 2021)

🍒 Cherry-picked example to show a long review. Bold text marks the extracted rationales. The Korean text is the original review. The English version was first machine-translated with Naver Papago, and I then highlighted the rationales myself.

(Top-k; negative)

SNS에서 인기가 제일 많아서 처음 샤로수길 방문하면서 식사를 했습니다. 웨이팅에 이름 올리고 40분 정도 기다려서 식사했습니다. 처음 비주얼은 와 처음 몇 숟가락은 외에의 맛인데... 중간부터는 속이 느글거려 먹는 걸 즐기지 못했습니다. 그냥 한번 이런 맛이 있구나 정도지 두 번은 가고 싶지 않습니다. 물론 개인 취향은 있겠지만, 같이 동행한 중학생과 초등학생 딸이 맛이 없었다고 하네요. 먼 길 가서 기대한 점심 식사인데... 소셜은 역시 걸러서 들어야 하나 봅니다.

(Contiguous; negative)

SNS에서 인기가 제일 많아서 처음 샤로수길 방문하면서 식사를 했습니다. 웨이팅에 이름 올리고 40분 정도 기다려서 식사했습니다. 처음 비주얼은 와 처음 몇 숟가락은 외에의 맛인데... 중간부터는 속이 느글거려 먹는 걸 즐기지 못했습니다. 그냥 한번 이런 맛이 있구나 정도지 두 번은 가고 싶지 않습니다. 물론 개인 취향은 있겠지만, 같이 동행한 중학생과 초등학생 딸이 맛이 없었다고 하네요. 먼 길 가서 기대한 점심 식사인데... 소셜은 역시 걸러서 들어야 하나 봅니다.

(Top-k; negative)

It was the most popular on SNS, so I had a meal while visiting Sharosu-gil for the first time. I put my name on the waiting list and waited for about 40 minutes to eat. Wow, the first few spoons taste like something else. I couldn't enjoy eating because I felt sick from the middle. I don't want to go there twice. Of course, there will be personal preferences, but the middle school and elementary school daughter who accompanied them said it was not delicious. It's a long-awaited lunch. I think you should listen to social media.

(Contiguous; negative)

It was the most popular on SNS, so I had a meal while visiting Sharosu-gil for the first time. I put my name on the waiting list and waited for about 40 minutes to eat. Wow, the first few spoons taste like something else. I couldn't enjoy eating because I felt sick from the middle. I don't want to go there twice. Of course, there will be personal preferences, but the middle school and elementary school daughter who accompanied them said it was not delicious. It's a long-awaited lunch. I think you should listen to social media.

This work was done in the class Data Science Capstone Project at Seoul National University. I thank Professor Wen-Syan Li for the support and the Seoul National University Graduate School of Data Science for the compute resources.