Automatic extrinsic calibration of a lidar and a camera by maximizing the mutual information between lidar intensity and image intensity. This is a reimplementation of the paper *Automatic Targetless Extrinsic Calibration of a 3D Lidar and Camera by Maximizing Mutual Information*.

- Reimplementation of the paper above

- Tested on real lidar and camera data

- Tested on Ubuntu 20.04

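Install the dependencies:

```bash
sudo apt-get install \
    libopencv-dev \
    libpcl-dev \
    rapidjson-dev
```

Build the default target and the examples:

```bash
make default -j`nproc`

# build examples
make apps -j`nproc`
```

To run the calibration on the sample data:

- Download Livox lidar data together with images from here (if you are interested, you can read more about Livox's low-cost lidars).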

- Extract the sample data and create two files, `images.txt` and `point_clouds.txt`, that list the absolute paths to the image files and the PCD files respectively, one path per line.

- Create a camera info file containing the camera intrinsic matrix; a sample is provided here.

- Create an initial guess of the lidar-to-camera transformation, given as a translation (tx, ty, tz) and a rotation (roll, pitch, yaw); a sample is provided here.

- Fill in the absolute paths to the above files in `calibration_handler_param.json`.

- Run (after `make apps`):

  ```bash
  ./build/examples/sensors_calib_app ./data/samples/calibration_handler_param.json
  ```

When the optimization finishes, the final transformation is printed.

The projection results are also saved: the images projected onto the point clouds (`cloud*.pcd`) and the point clouds projected onto the images (`img*.png`).

- Here are the sample results:

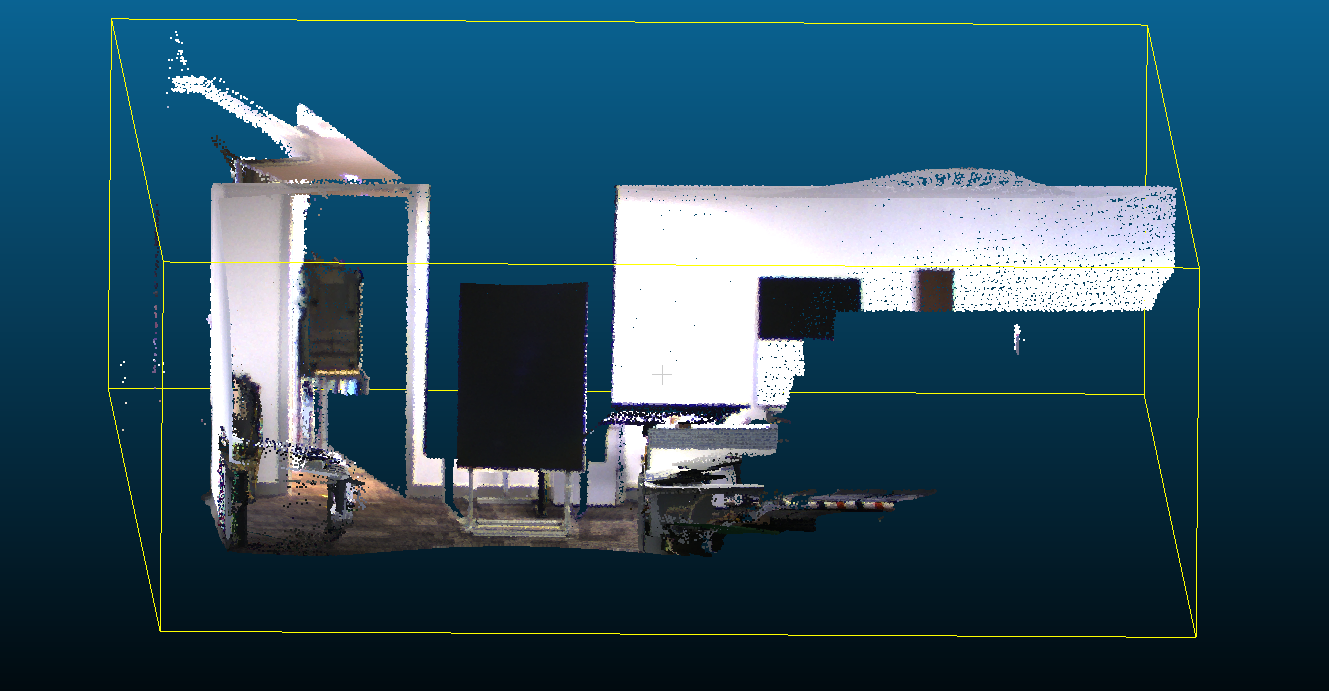

projected cloud:

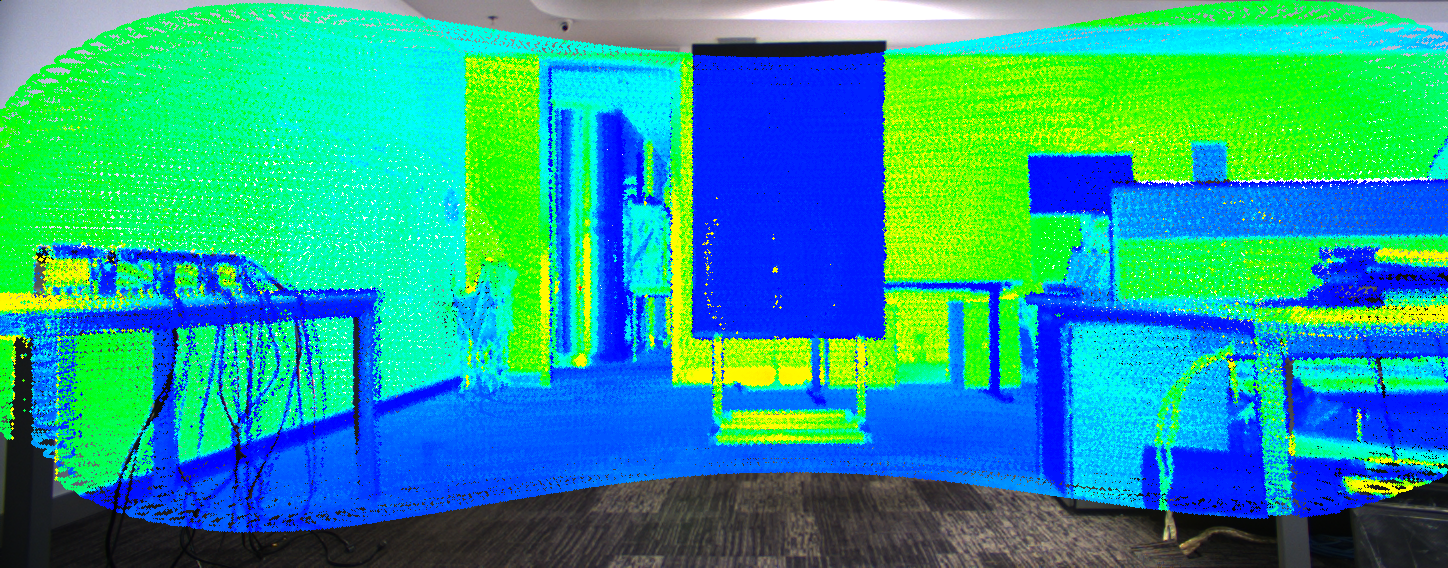

projected image:

- Automatic Targetless Extrinsic Calibration of a 3D Lidar and Camera by Maximizing Mutual Information, AAAI 2012

- Automatic Calibration of Lidar and Camera Images using Normalized Mutual Information, ICRA 2013

- Accurate Extrinsic Calibration between Monocular Camera and Sparse 3D Lidar Points without Markers, IV 2017