Codebase for the paper "Order Matters: Agent-by-agent Policy Optimization". Please note that this repo is currently being restructured and may contain bugs, so its performance may differ from the results reported in the paper.

Centralized training with decentralized execution (CTDE) MARL algorithms for the StarCraft II Multi-Agent Challenge (SMAC), PettingZoo, the Multi-agent Particle Environment, Multi-agent MuJoCo, and Google Research Football (coming later).

This repo is heavily based on https://github.com/marlbenchmark/on-policy.

Implemented algorithms include:

- MAPPO

- CoPPO (without advantage-mix / credit assignment)

- HAPPO

- A2PO
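MAPPO, for example, optimizes PPO's clipped surrogate objective for each agent's policy. As a rough, textbook illustration (not code taken from this repo), the sketch below computes the mean clipped objective from per-sample log-probabilities and advantages in plain Python. The function name `clipped_surrogate` and the default `clip_eps=0.2` are assumptions chosen for illustration.

```python
import math
from typing import List


def clipped_surrogate(
    new_logp: List[float],
    old_logp: List[float],
    advantages: List[float],
    clip_eps: float = 0.2,  # assumed default; not taken from this repo's configs
) -> float:
    """Mean PPO clipped surrogate objective (to be maximized).

    For each sample, r = exp(new_logp - old_logp) is the probability ratio
    between the policy being updated and the behavior policy, and the
    per-sample objective is min(r * A, clip(r, 1 - eps, 1 + eps) * A).
    """
    assert len(new_logp) == len(old_logp) == len(advantages) > 0
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the min makes the objective pessimistic: large ratio changes
        # cannot increase it beyond what the clipped ratio allows.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Ratio 1.5 with a positive advantage is clipped to 1.2: 1.2 * 2.0 = 2.4.
print(clipped_surrogate([math.log(1.5)], [0.0], [2.0]))
```

With a negative advantage the clipping works in the other direction: a ratio of 0.5 is treated as 0.8, so the update gains nothing from pushing that action's probability further down.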

Parameter sharing is recommended for SMAC, while separate parameters are recommended for the other environments.
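The difference between the two settings can be sketched in plain Python: with shared parameters every agent uses one policy object, so an update computed from any agent's data changes the policy of all agents; with separate parameters each agent keeps its own copy. The `LinearPolicy` class and `build_policies` helper below are hypothetical illustrations, not this repo's API.

```python
from typing import Dict, List


class LinearPolicy:
    """Toy policy (hypothetical): one weight vector scoring an observation."""

    def __init__(self, obs_dim: int) -> None:
        self.weights: List[float] = [0.0] * obs_dim

    def score(self, obs: List[float]) -> float:
        return sum(w * o for w, o in zip(self.weights, obs))


def build_policies(n_agents: int, obs_dim: int, share_params: bool) -> Dict[int, LinearPolicy]:
    """Map each agent id to a policy object (hypothetical helper).

    share_params=True: every agent id points to the same object.
    share_params=False: each agent id gets an independent object.
    """
    if share_params:
        shared = LinearPolicy(obs_dim)
        return {agent_id: shared for agent_id in range(n_agents)}
    return {agent_id: LinearPolicy(obs_dim) for agent_id in range(n_agents)}


shared_policies = build_policies(3, obs_dim=2, share_params=True)
shared_policies[0].weights[0] = 1.0  # stand-in for an update from agent 0's data
print(shared_policies[2].score([5.0, 0.0]))  # 5.0: agent 2 sees the same weights

separate_policies = build_policies(3, obs_dim=2, share_params=False)
separate_policies[0].weights[0] = 1.0
print(separate_policies[2].score([5.0, 0.0]))  # 0.0: agent 2 is unaffected
```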

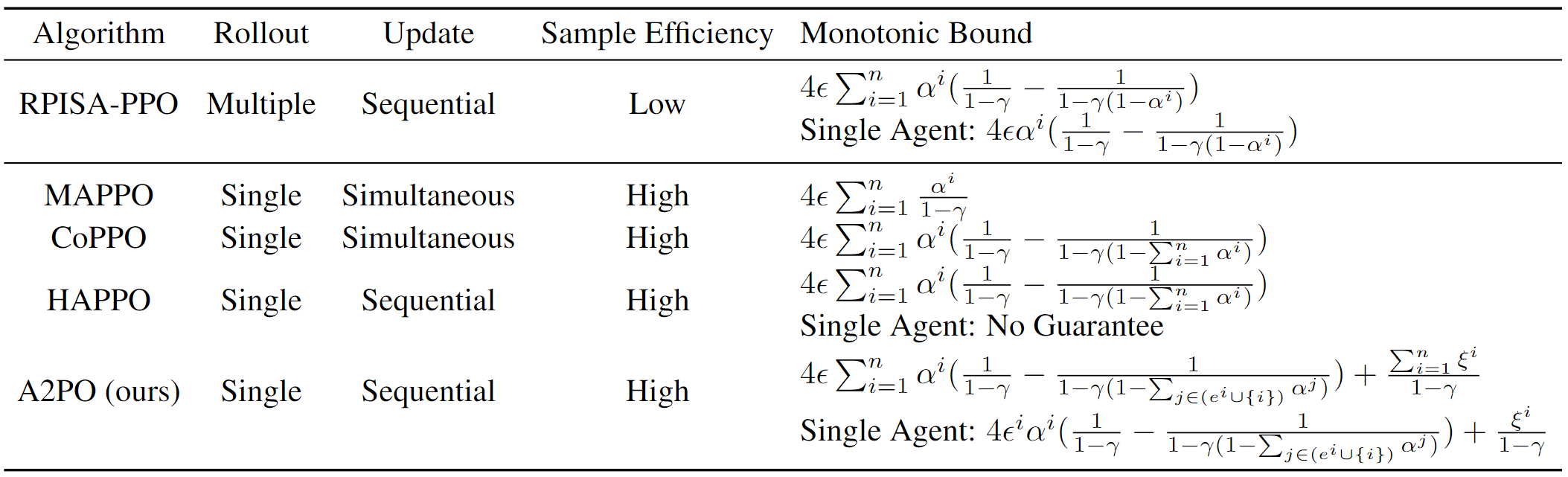

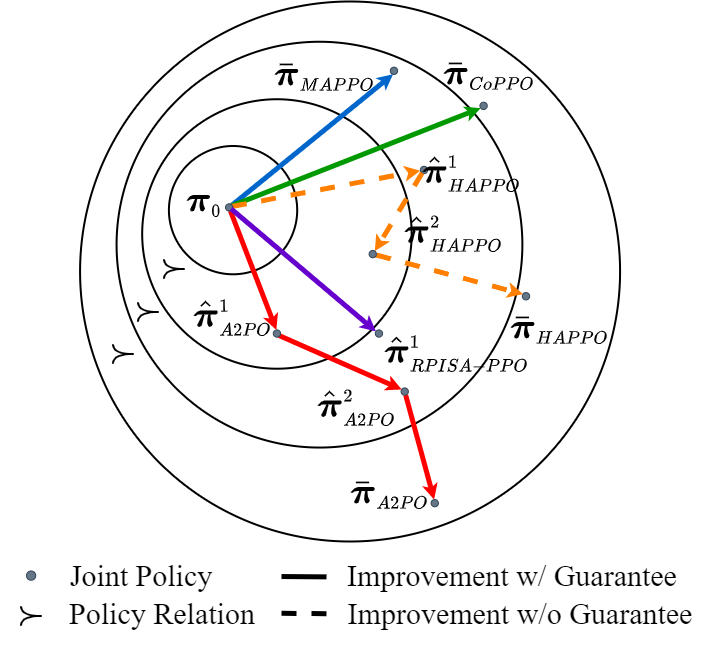

Monotonic Bounds

Cooperative Break Through

Serve, Pass and Shoot

# create conda environment

conda create -n co-marl python==3.8

conda activate co-marl

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch -y

pip install psycopg2-binary setproctitle absl-py pysc2 gym tensorboardX

# sudo apt install build-essential -y

pip install Cython

pip install sacred aim
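Before installing the package itself, it can help to confirm that the dependencies above are visible to the active environment. The script below is a hypothetical helper, not part of this repo; it uses only the standard library (`importlib.util.find_spec`) and checks each package by its import name, which for some packages differs from the pip/conda name.

```python
import importlib.util
from typing import Dict, Iterable

# Import names for the packages installed above. Several differ from their
# pip/conda names: absl-py -> absl, psycopg2-binary -> psycopg2.
REQUIRED_MODULES = (
    "torch",
    "torchvision",
    "torchaudio",
    "psycopg2",
    "setproctitle",
    "absl",
    "pysc2",
    "gym",
    "tensorboardX",
    "Cython",
    "sacred",
    "aim",
)


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Report, for each top-level module name, whether Python can locate it.

    find_spec only locates the module without importing it, so it will not
    catch errors raised at import time (e.g. a CUDA or driver mismatch).
    """
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    missing = [name for name, found in check_modules(REQUIRED_MODULES).items() if not found]
    print("missing:", ", ".join(missing) if missing else "none")
```

Run it with `python` inside the `co-marl` environment; an empty "missing" list means every module can be located, though an explicit import is still the stronger test.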

# install on-policy package

pip install -e .

or

bash install.sh

bash install_sc2.sh

bash install_pettingzoo.sh

bash install_mujoco.sh

bash install_grf.sh

Here we use training on MMM2 (a hard task in SMAC) as an example:

sh run_scripts/SMAC/MMM2.sh