
Data-Juicer is a data-centric text processing system to make data higher-quality, juicier, and more digestible for LLMs. This project is being actively updated and maintained, and we will periodically enhance and add more features and data recipes. We welcome you to join us in promoting LLM data development and research!

- Broad Range of Operators: Equipped with 50+ core operators (OPs), including Formatters, Mappers, Filters, Deduplicators, and beyond.

- Specialized Toolkits: Feature-rich specialized toolkits such as Text Quality Classifier, Dataset Splitter, Analysers, Evaluators, and more that elevate your dataset handling capabilities.

- Systematic & Reusable: Empowering users with a systematic library of reusable config recipes and OPs, designed to function independently of specific datasets, models, or tasks.

- Data-in-the-loop: Allowing detailed data analyses with an automated report generation feature for a deeper understanding of your dataset. Coupled with real-time, multi-dimensional automatic evaluation, it supports a feedback loop at multiple stages of the LLM development process.

- Comprehensive Processing Recipes: Offering tens of pre-built data processing recipes for pre-training, SFT, English (en), Chinese (zh), and more scenarios.

- User-Friendly Experience: Designed for simplicity, with comprehensive documentation, easy start guides and demo configs, and intuitive configuration in which OPs can simply be added to or removed from existing configs.

- Flexible & Extensible: Accommodating most types of data formats (e.g., jsonl, parquet, csv, ...) and allowing flexible combinations of OPs. Feel free to implement your own OPs for customizable data processing.

- Enhanced Efficiency: Providing a fast data processing pipeline that requires less memory, optimized for maximum productivity.

- Python 3.8 is recommended

- gcc >= 5 (with at least C++14 support)

- Run the following commands to install the latest data_juicer version in editable mode:

cd <path_to_data_juicer>
pip install -v -e .[all]

- Or install only some of the optional dependencies:

cd <path_to_data_juicer>
pip install -v -e . # install minimal dependencies
pip install -v -e .[tools] # install the dependencies for tools

The dependency options are listed below:

| Tag | Description |
|---|---|
| . | Install minimal dependencies for basic Data-Juicer. |
| .[all] | Install all optional dependencies (all of the following). |
| .[dev] | Install dependencies for developing the package as contributors. |
| .[tools] | Install dependencies for dedicated tools, such as quality classifiers. |

- Installation check:

import data_juicer as dj
print(dj.__version__)

- Run the process_data.py tool with your config as the argument to process your dataset.

python tools/process_data.py --config configs/demo/process.yaml

- Note: Some operators rely on third-party models or resources that are not stored locally on your computer, so their first run may be slow while they download these resources into a cache directory.

The default download cache directory is ~/.cache/data_juicer. You can change the cache location by setting the shell environment variable DATA_JUICER_CACHE_HOME to another directory, and you can change DATA_JUICER_MODELS_CACHE or DATA_JUICER_ASSETS_CACHE in the same way:

# cache home
export DATA_JUICER_CACHE_HOME="/path/to/another/directory"
# cache models
export DATA_JUICER_MODELS_CACHE="/path/to/another/directory/models"
# cache assets
export DATA_JUICER_ASSETS_CACHE="/path/to/another/directory/assets"

- Run the analyze_data.py tool with your config as the argument to analyse your dataset.

python tools/analyze_data.py --config configs/demo/analyser.yaml

- Note: The analyser only computes stats for Filter ops, so any Mapper or Deduplicator ops in the config are ignored during analysis.
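Because only Filter ops contribute stats, it can help to see which entries of a config the analyser would actually report on. The sketch below is a hypothetical helper, not the analyser's implementation: it assumes ops follow the naming convention visible in names like language_id_score_filter (suffixes _filter, _mapper, _deduplicator), and the op list and argument values are illustrative.

```python
from typing import Any, Dict, List

# Illustrative `process` list in the shape used by Data-Juicer configs:
# each entry maps one op name to its arguments (values here are made up).
process: List[Dict[str, Dict[str, Any]]] = [
    {"clean_email_mapper": {}},
    {"language_id_score_filter": {"lang": "en", "min_score": 0.8}},
    {"document_deduplicator": {}},
    {"text_length_filter": {"min_len": 10}},
]


def ops_with_stats(process_list: List[Dict[str, Dict[str, Any]]]) -> List[str]:
    """Return the op names whose stats an analysis pass would report.

    Hypothetical helper: relies on the `*_filter` / `*_mapper` /
    `*_deduplicator` naming convention to pick out Filter ops by suffix.
    """
    names = [name for op in process_list for name in op]
    return [name for name in names if name.endswith("_filter")]


print(ops_with_stats(process))
# → ['language_id_score_filter', 'text_length_filter']
```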

- Run the app.py tool to visualize your dataset in your browser.

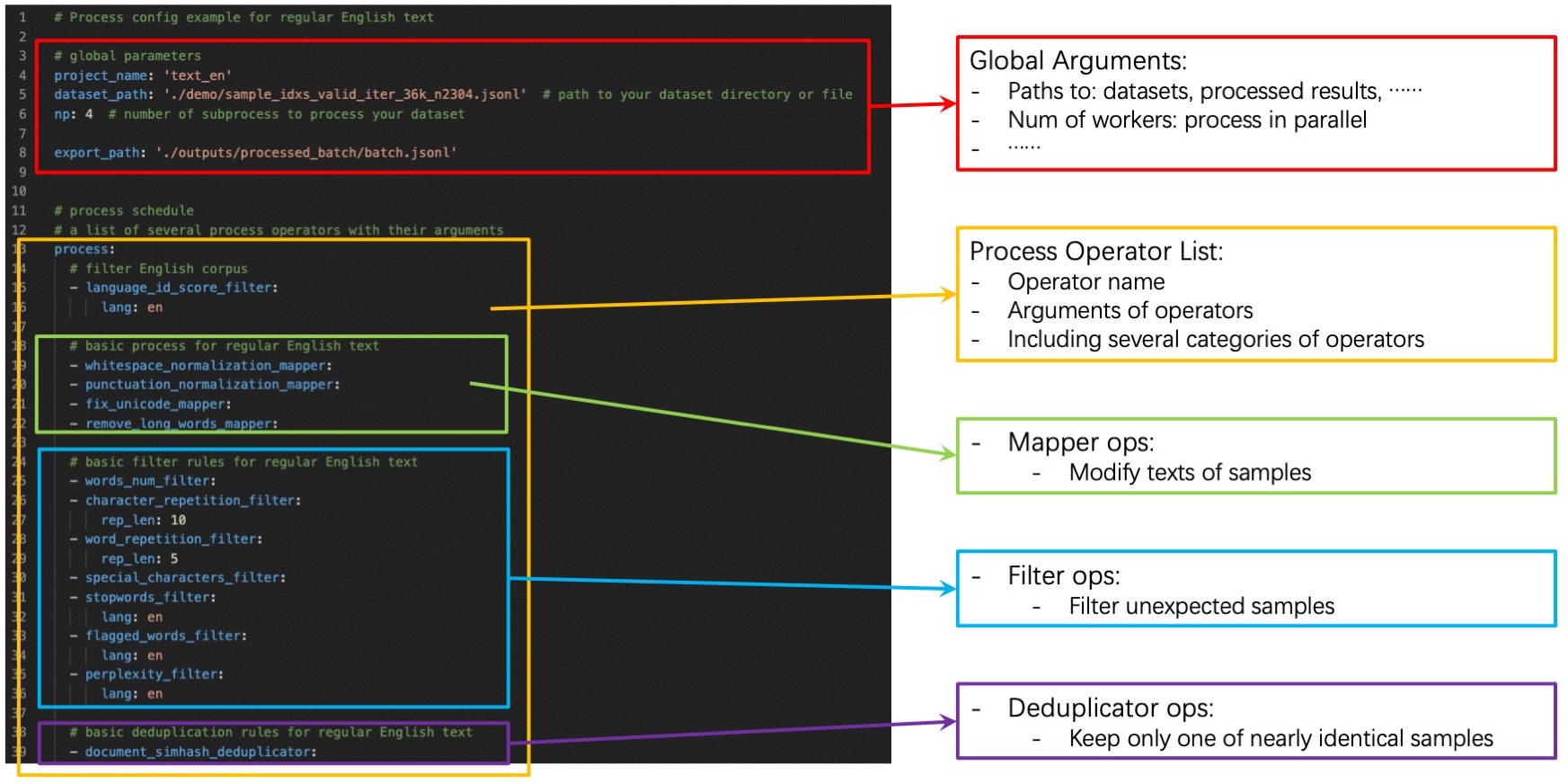

streamlit run app.py

- Config files specify some global arguments and an operator list for the data process. You need to set:

- Global arguments: input/output dataset path, number of workers, etc.

- Operator list: the operators, with their arguments, used to process the dataset.
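As a sketch of that layout, the same structure can be written as a Python dict. The key names (project_name, dataset_path, export_path, np, process) are assumed from the demo configs and should be checked against config_all.yaml; the paths are placeholders, and the summarize helper is hypothetical, listing the global arguments followed by the ops in execution order.

```python
from typing import Any, Dict, List

# Illustrative config mirroring the layout of the demo YAML files:
# global arguments at the top level, ops in an ordered `process` list.
# Key names are assumed from the demo configs; values are placeholders.
config: Dict[str, Any] = {
    "project_name": "demo-process",
    "dataset_path": "./demos/data/demo-dataset.jsonl",  # input dataset
    "export_path": "./outputs/demo-process/demo-processed.jsonl",  # output
    "np": 4,  # number of worker processes
    "process": [
        {"language_id_score_filter": {"lang": "zh"}},
        {"text_length_filter": {"min_len": 10}},
    ],
}


def summarize(cfg: Dict[str, Any]) -> List[str]:
    """List the global arguments first, then each op in execution order."""
    lines = [
        f"{key} = {value!r}" for key, value in cfg.items() if key != "process"
    ]
    for step, op in enumerate(cfg.get("process", []), start=1):
        for name, args in op.items():
            lines.append(f"step {step}: {name} {args}")
    return lines


for line in summarize(config):
    print(line)
```

Keeping ops in a list rather than a dict preserves their execution order and allows the same op to appear more than once.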

- You can build up your own config files in either of two ways:

- ➖: Modify our example config file config_all.yaml, which includes all ops with their default arguments. You just need to remove the ops you won't use and refine some of their arguments.

- ➕: Build up your own config file from scratch. You can refer to our example config file config_all.yaml, the op documents, and the advanced Build-Up Guide for developers.

- Besides the yaml files, you can also specify one or more parameters on the command line, which will override the corresponding values in the yaml files.
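An override such as --language_id_score_filter.lang=en can be pictured as a nested update of the loaded config. This is a minimal sketch of those semantics only, under stated assumptions: the apply_override helper is hypothetical, it keeps values as strings, and it updates only the first op with a matching name. Data-Juicer's own command-line parsing may differ, for example by converting values to their declared types.

```python
import copy
from typing import Any, Dict


def apply_override(cfg: Dict[str, Any], flag: str) -> Dict[str, Any]:
    """Return a copy of `cfg` with one command-line override applied.

    Hypothetical helper: `--key=value` sets a global argument and
    `--op_name.arg=value` sets one argument of the first matching op in
    the `process` list. Values stay strings (no type parsing).
    """
    key, value = flag.lstrip("-").split("=", 1)
    new_cfg = copy.deepcopy(cfg)  # leave the loaded config untouched
    if "." not in key:
        new_cfg[key] = value  # a global argument such as np or export_path
        return new_cfg
    op_name, arg = key.split(".", 1)
    for op in new_cfg.get("process", []):
        if op_name in op:
            # An op listed in YAML without arguments (`- op_name:`) maps to None.
            args = op[op_name] or {}
            args[arg] = value
            op[op_name] = args
            return new_cfg
    raise KeyError(f"op {op_name!r} not found in the process list")


base = {
    "dataset_path": "./demos/data/demo-dataset.jsonl",
    "process": [{"language_id_score_filter": {"lang": "zh", "min_score": 0.8}}],
}
updated = apply_override(base, "--language_id_score_filter.lang=en")
print(updated["process"][0])
# → {'language_id_score_filter': {'lang': 'en', 'min_score': 0.8}}
```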


python xxx.py --config configs/demo/process.yaml --language_id_score_filter.lang=en

- Our formatters support some common input dataset formats for now:

- Multi-sample in one file: jsonl/json, parquet, csv/tsv, etc.

- Single-sample in one file: txt, code, docx, pdf, etc.

- However, data from different sources is complicated and diverse, for example:

- Raw arXiv data downloaded from S3 includes thousands of tar files containing even more gzip files, and the desired tex files are embedded in those gzip files, so they are hard to obtain directly.

- Some crawled data includes different kinds of files (pdf, html, docx, etc.), and extra information such as tables and charts is hard to extract.

- It's impossible for Data-Juicer to handle every kind of data, so issues and PRs that add support for new data types are welcome!
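To illustrate why the arXiv layout above needs preprocessing, here is a minimal sketch using Python's standard tarfile and gzip modules. It assumes each .gz member of an outer tar is either a gzipped tarball of one paper's source tree or a single gzipped LaTeX file, and it skips any member that does not end in .gz. The tex_from_arxiv_tar name is hypothetical; this is not the implementation in tools/preprocess.

```python
import gzip
import io
import tarfile
from typing import Dict


def tex_from_arxiv_tar(tar_path: str) -> Dict[str, str]:
    """Collect LaTeX sources from a tar archive whose members are gzip files.

    Assumption: each .gz member is either a gzipped tarball of one paper's
    source tree or a single gzipped .tex file. Other members are skipped.
    Returns {descriptive name: decoded LaTeX text}.
    """
    docs: Dict[str, str] = {}
    with tarfile.open(tar_path) as outer:
        for member in outer.getmembers():
            if not (member.isfile() and member.name.endswith(".gz")):
                continue
            raw = gzip.decompress(outer.extractfile(member).read())
            try:
                # Case 1: the gzip wraps an (uncompressed) tarball.
                inner = tarfile.open(fileobj=io.BytesIO(raw), mode="r:")
            except tarfile.ReadError:
                # Case 2: not a tarball, so treat it as a single LaTeX file.
                docs[member.name] = raw.decode("utf-8", errors="ignore")
                continue
            with inner:
                for sub in inner.getmembers():
                    if sub.isfile() and sub.name.endswith(".tex"):
                        text = inner.extractfile(sub).read()
                        docs[f"{member.name}/{sub.name}"] = text.decode(
                            "utf-8", errors="ignore"
                        )
    return docs
```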

- Thus, we provide some common preprocessing tools in tools/preprocess for you to preprocess these data.

- You are welcome to contribute new preprocessing tools for the community.

- We highly recommend preprocessing complicated data into jsonl or parquet files.
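Following that recommendation, a minimal sketch of turning a directory of plain-text files into a jsonl file with one sample per line. It assumes the config reads text from a field named "text" (adjust this if your config uses a different text key); the txt_dir_to_jsonl name and the "meta" field are hypothetical.

```python
import json
from pathlib import Path


def txt_dir_to_jsonl(src_dir: str, out_path: str) -> int:
    """Write every .txt file under `src_dir` as one JSON line.

    Assumption: downstream processing reads the sample text from a "text"
    field; the "meta" field recording the source file is a hypothetical
    extra. Returns the number of samples written.
    """
    count = 0
    with open(out_path, "w", encoding="utf-8") as out:
        for path in sorted(Path(src_dir).rglob("*.txt")):
            text = path.read_text(encoding="utf-8", errors="ignore")
            record = {"text": text, "meta": {"source": str(path)}}
            # ensure_ascii=False keeps non-ASCII text (e.g. Chinese) readable
            out.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count
```

The resulting file can then be given as dataset_path in a config.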

- Overview

- Operator Zoo

- Configs

- Developer Guide

- Dedicated Toolkits

- Third-parties (LLM Ecosystems)

- API references

- Recipes for data process in BLOOM

- Recipes for data process in RedPajama

- Refined recipes for pretraining data

- Refined recipes for SFT data

- Introduction to Data-Juicer [ModelScope]

- Data Visualization:

- Basic Statistics [ModelScope]

- Lexical Diversity [ModelScope]

- Operator Effect [ModelScope]

- Data Processing:

- Scientific Literature (e.g. ArXiv) [ModelScope]

- Programming Code (e.g. TheStack) [ModelScope]

- Chinese Instruction Data (e.g. Alpaca-CoT) [ModelScope]

- Tool Pool:

- Quality Classifier for CommonCrawl [ModelScope]

- Auto Evaluation on HELM [ModelScope]

- Data Sampling and Mixture [ModelScope]

- Data Process Loop [ModelScope]

- Data Process HPO [ModelScope]

Data-Juicer is released under Apache License 2.0.

We greatly welcome contributions of new features, bug fixes, and discussions. Please refer to the How-to Guide for Developers.

Our paper is coming soon!