This repository contains resources referenced in the paper Instruction Tuning for Large Language Models: A Survey.

If you find this repository helpful, please cite the following:

    @article{zhang2023instruction,
      title={Instruction Tuning for Large Language Models: A Survey},
      author={Zhang, Shengyu and Dong, Linfeng and Li, Xiaoya and Zhang, Sen and Sun, Xiaofei and Wang, Shuhe and Li, Jiwei and Hu, Runyi and Zhang, Tianwei and Wu, Fei and others},
      journal={arXiv preprint arXiv:2308.10792},
      year={2023}
    }

Stay tuned! More related work will be added!

- [12 Mar, 2024] We added work (papers and projects) related to large multimodal models.
- [11 Mar, 2024] We added work (papers and projects) related to synthetic data generation and image-text generation.
- [07 Sep, 2023] The repository was created.
- [21 Aug, 2023] We released the first version of the paper.

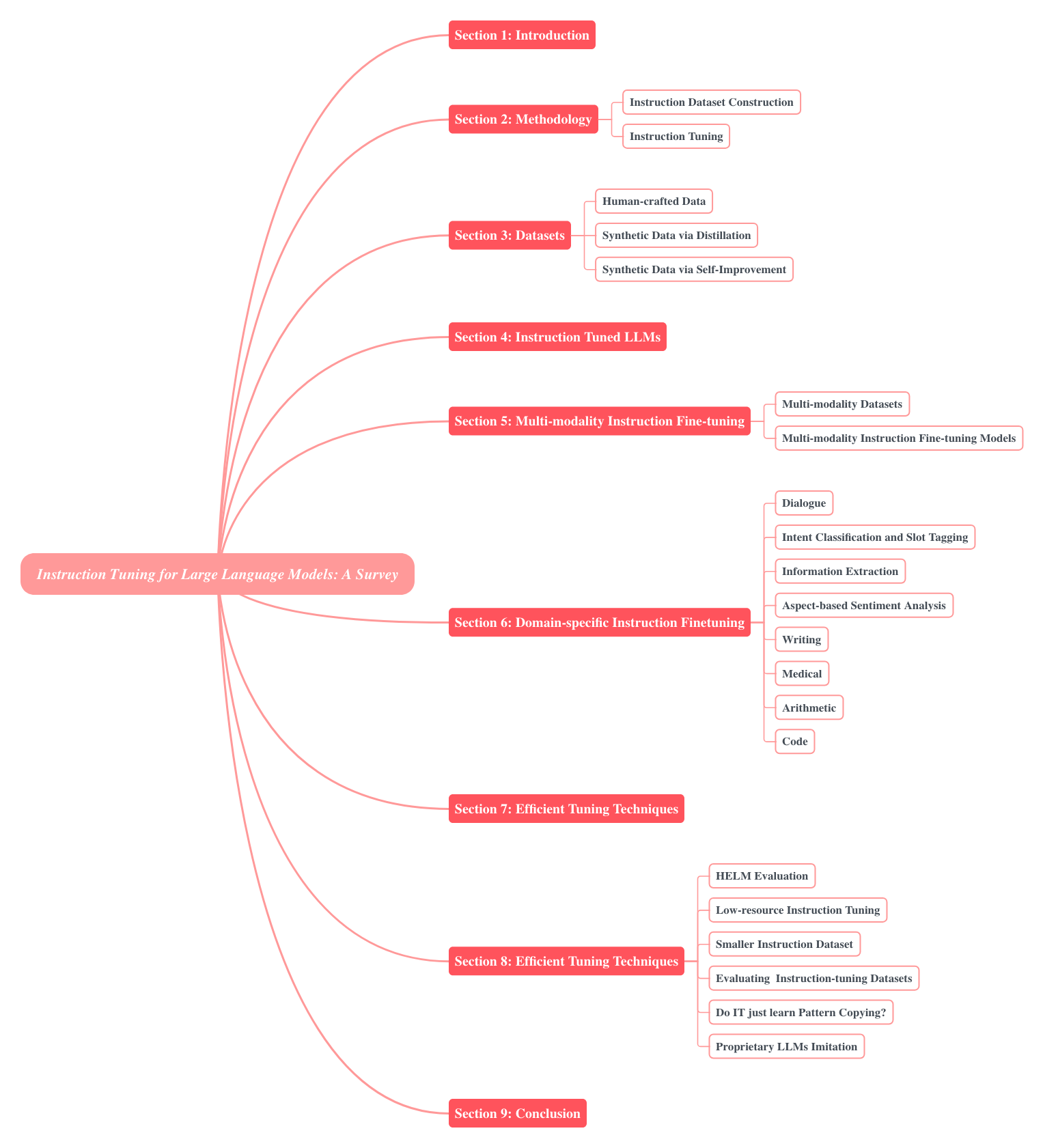

- Overview

- Instruction Tuning

- Multi-modality Instruction Tuning

- Domain-specific Instruction Tuning

- Efficient Tuning Techniques

- References

- Contact

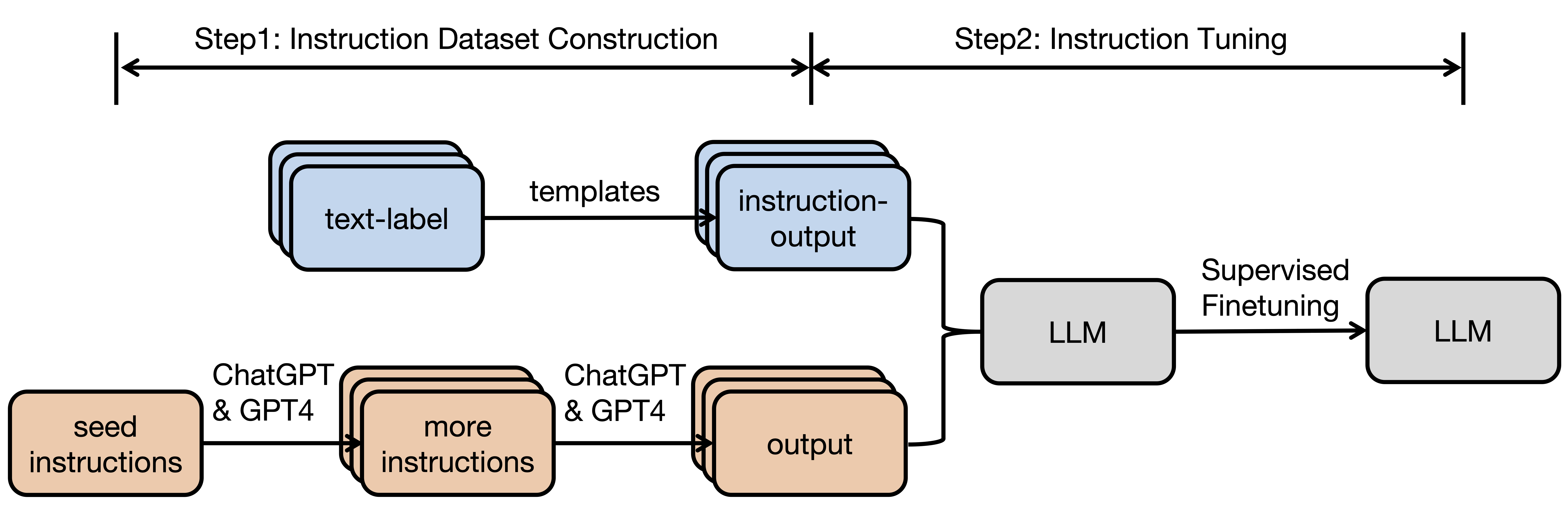

Instruction tuning (IT) refers to the process of further training large language models (LLMs) on a dataset consisting of (instruction, output) pairs in a supervised fashion, which bridges the gap between the next-word prediction objective of LLMs and the users' objective of having LLMs adhere to human instructions. The general pipeline of instruction tuning is shown in the following:
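To make the definition concrete, here is a minimal Python sketch of how one (instruction, output) pair could become a supervised training example. It is an illustration under stated assumptions, not the survey's method: the prompt template, the whitespace tokenizer `toy_tokenize`, and the helpers `build_example` and `masked_nll` are hypothetical, and `IGNORE_INDEX = -100` simply mirrors the default `ignore_index` of PyTorch's `CrossEntropyLoss`. Prompt tokens are masked out of the labels, so the next-word prediction loss is computed only on the output tokens.

```python
from typing import Dict, List

# Mirrors the default ignore_index of PyTorch's CrossEntropyLoss (assumption).
IGNORE_INDEX = -100

# Hypothetical prompt template; each IT dataset defines its own format.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def toy_tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    """Hypothetical whitespace tokenizer that assigns a new id to each unseen token."""
    ids = []
    for tok in text.split():
        if tok not in vocab:
            vocab[tok] = len(vocab)
        ids.append(vocab[tok])
    return ids


def build_example(instruction: str, output: str, vocab: Dict[str, int]) -> Dict[str, List[int]]:
    """Turn one (instruction, output) pair into input ids and labels.

    Prompt positions are labeled IGNORE_INDEX, so only the output tokens
    contribute to the supervised next-word prediction loss.
    """
    prompt_ids = toy_tokenize(TEMPLATE.format(instruction=instruction), vocab)
    output_ids = toy_tokenize(output, vocab)
    input_ids = prompt_ids + output_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + output_ids
    return {"input_ids": input_ids, "labels": labels}


def masked_nll(target_logprobs: List[float], labels: List[int]) -> float:
    """Mean negative log-likelihood over supervised (non-ignored) positions.

    target_logprobs[i] is assumed to be the model's log-probability of
    labels[i]; the causal one-position shift is assumed to be applied already.
    """
    kept = [-lp for lp, y in zip(target_logprobs, labels) if y != IGNORE_INDEX]
    if not kept:
        raise ValueError("no supervised positions in this example")
    return sum(kept) / len(kept)


if __name__ == "__main__":
    vocab: Dict[str, int] = {}
    ex = build_example("Translate to French: cat", "chat", vocab)
    # Prompt positions carry IGNORE_INDEX; only the output token is supervised.
    print(ex["labels"])
```

The model is still trained with ordinary next-word prediction; masking the prompt is one common way to restrict supervision to the desired output. Real pipelines use a subword tokenizer and usually apply the one-position shift inside the model's loss computation.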

In the paper, we make a systematic review of the literature, including the general methodology of IT, the construction of IT datasets, the training of IT models, and applications to different modalities, domains and applications, along with an analysis of aspects that influence the outcome of IT (e.g., generation of instruction outputs, size of the instruction dataset, etc.). We also review the potential pitfalls of IT and criticism against it, point out current deficiencies of existing strategies, and suggest some avenues for fruitful research. The typology of the paper is as follows:

| Type | Dataset Name | Paper | Project | # of Instructions | # of Lang | Construction | Open Source |
|---|---|---|---|---|---|---|---|
| Human-Crafted | UnifiedQA [1] | paper | project | 750K | En | human-crafted | Yes |
| | UnifiedSKG [2] | paper | project | 0.8M | En | human-crafted | Yes |
| | Natural Instructions [3] | paper | project | 193K | En | human-crafted | Yes |
| | Super-Natural Instructions [4] | paper | project | 5M | 55 Lang | human-crafted | Yes |
| | P3 [5] | paper | project | 12M | En | human-crafted | Yes |
| | xP3 [6] | paper | project | 81M | 46 Lang | human-crafted | Yes |
| | Flan 2021 [7] | paper | project | 4.4M | En | human-crafted | Yes |
| | COIG [8] | paper | project | - | - | - | Yes |
| | InstructGPT [9] | paper | - | 13K | Multi | human-crafted | No |
| | Dolly [10] | paper | project | 15K | En | human-crafted | Yes |
| | LIMA [11] | paper | project | 1K | En | human-crafted | Yes |
| | ChatGPT [12] | paper | - | - | Multi | human-crafted | No |
| | OpenAssistant [13] | paper | project | 161,443 | Multi | human-crafted | Yes |
| Synthetic Data (Distillation) | OIG [14] | - | project | 43M | En | ChatGPT (no technical report) | Yes |
| | Unnatural Instructions [3] | paper | project | 240K | En | InstructGPT-generated | Yes |
| | InstructWild [15] | - | project | 104K | - | ChatGPT-Generated | Yes |
| | Evol-Instruct / WizardLM [16] | paper | project | 52K | En | ChatGPT-Generated | Yes |
| | Alpaca [17] | - | project | 52K | En | InstructGPT-generated | Yes |
| | LogiCoT [18] | paper | project | - | En | GPT-4-Generated | Yes |
| | GPT-4-LLM [19] | paper | project | 52K | En&Zh | GPT-4-Generated | Yes |
| | Vicuna [20] | - | project | 70K | En | Real User-ChatGPT Conversations | No |
| | Baize v1 [21] | paper | project | 111.5K | En | ChatGPT-Generated | Yes |
| | UltraChat [22] | paper | project | 675K | En&Zh | GPT-3/4-Generated | Yes |
| | Guanaco [23] | - | project | 534,530 | Multi | GPT (Unknown Version)-Generated | Yes |
| | Orca [24] | paper | project | 1.5M | En | GPT-3.5/4-Generated | Yes |
| | ShareGPT | - | project | 90K | Multi | Real User-ChatGPT Conversations | Yes |
| | WildChat | - | project | 150K | Multi | Real User-ChatGPT Conversations | Yes |
| | WizardCoder [25] | paper | - | - | Code | LLaMA 2-Generated | No |
| | Magicoder [26] | paper | project | 75K/110K | Code | GPT-3.5-Generated | Yes |
| | WaveCoder [27] | paper | - | - | Code | GPT-4-Generated | No |
| | Phi-1 [28] | paper | project | 6B Tokens | Code Q and A | GPT-3.5-Generated | Yes |
| | Phi-1.5 [29] | paper | - | - | Code Q and A | GPT-3.5-Generated | No |
| | Nectar [30] | paper | project | ~183K | En | GPT-4-Generated | Yes |
| Synthetic Data (Self-Improvement) | Self-Instruct [31] | paper | project | 52K | En | InstructGPT-generated | Yes |
| | Instruction Backtranslation [32] | paper | - | 502K | En | LLaMA-Generated | No |
| | SPIN [33] | paper | project | 49.8K | En | Zephyr-Generated | Yes |

| Model Name | # Params | Paper | Project | Base Model | Self-built Train Set | Train Set Name | Train Set Size |
|---|---|---|---|---|---|---|---|
| InstructGPT [9] | 175B | paper | - | GPT-3 [36] | Yes | - | - |
| BLOOMZ [34] | 176B | paper | project | BLOOM [37] | No | xP3 | - |
| FLAN-T5 [35] | 11B | paper | project | T5 [38] | No | FLAN 2021 | - |
| Alpaca [17] | 7B | - | project | LLaMA [39] | Yes | - | 52K |
| Vicuna [20] | 13B | - | project | LLaMA [39] | Yes | - | 70K |
| GPT-4-LLM [19] | 7B | paper | project | LLaMA [39] | Yes | - | 52K |
| Claude [40] | - | paper | - | - | Yes | - | - |
| WizardLM [16] | 7B | paper | project | LLaMA [39] | Yes | Evol-Instruct | 70K |
| ChatGLM2 [41] | 6B | paper | project | GLM [41] | Yes | - | 1.1 Tokens |
| LIMA [11] | 65B | paper | project | LLaMA [39] | Yes | - | 1K |
| OPT-IML [42] | 175B | paper | project | OPT [43] | No | - | - |
| Dolly 2.0 [44] | 12B | - | project | Pythia [45] | No | - | 15K |
| Falcon-Instruct [46] | 40B | paper | project | Falcon [46] | No | - | - |
| Guanaco [23] | 7B | - | project | LLaMA [39] | Yes | - | 586K |
| Minotaur [47] | 15B | - | project | Starcoder Plus [48] | No | - | - |
| Nous-Hermes [49] | 13B | - | project | LLaMA [39] | No | - | 300K+ |
| TÜLU [50] | 6.7B | paper | project | OPT [43] | No | Mixed | - |
| YuLan-Chat [51] | 13B | - | project | LLaMA [39] | Yes | - | 250K |
| MOSS [52] | 16B | - | project | - | Yes | - | - |
| Airoboros [53] | 13B | - | project | LLaMA [39] | Yes | - | - |
| UltraLM [22] | 13B | paper | project | LLaMA [39] | Yes | - | - |

| Dataset Name | Paper | Project | Modality Pair | # Instances | # Tasks |
|---|---|---|---|---|---|
| MultiInstruct [54] | paper | project | Image-Text | 5K to 5M per task | 62 |
| PMC-VQA [55] | paper | project | Image-Text | 227K | 9 |
| LAMM [56] | paper | project | Image-Text | 186K | 9 |
| | | | Point Cloud-Text | 10K | 3 |
| Vision-Flan [57] | paper | project | Multi-Pairs | ~1M | 200+ |
| ALLaVA [58] | paper | project | Image-Text | 1.4M | 2 |
| ShareGPT4V [59] | paper | project | Image-Text | 1.2M | 2 |

| Model Name | # Params | Paper | Project | Modality | Base Model | Base Model # Params | Self-built Train Set | Train Set Size |
|---|---|---|---|---|---|---|---|---|
| InstructPix2Pix [60] | 983M | paper | project | Image-Text | Stable Diffusion [62] | 983M | Yes | 450K |
| LLaVA [61] | 13B | paper | project | Image-Text | CLIP [63] | 400M | Yes | 158K |
| | | | | | LLaMA [39] | 7B | | |
| Video-LLaMA [64] | - | paper | project | Image-Text-Video-Audio | BLIP-2 [65] | - | No | - |
| | | | | | ImageBind [66] | - | | |
| | | | | | Vicuna [20] | 7B/13B | | |
| InstructBLIP [67] | 12B | paper | project | Image-Text-Video | BLIP-2 [65] | - | No | - |
| Otter [68] | - | paper | project | Image-Text-Video | OpenFlamingo [69] | 9B | Yes | 2.8M |
| MultiModal-GPT [70] | - | paper | project | Image-Text-Video | OpenFlamingo [69] | 9B | No | - |

| Domain | Model Name | # Params | Paper | Project | Base Model | Train Size |
|---|---|---|---|---|---|---|
| Medical | Radiology-GPT [71] | 7B | paper | project | Alpaca [17] | 122K |
| | ChatDoctor [72] | 7B | paper | project | LLaMA [39] | 122K |
| | ChatGLM-Med [73] | 6B | - | project | ChatGLM [41] | - |
| Writing | Writing-Alpaca [74] | 7B | paper | - | LLaMA [39] | - |
| | CoEdIT [75] | 11B | paper | project | FLAN-T5 [7] | 82K |
| | CoPoet [76] | 11B | paper | project | T5 [38] | - |
| Code Generation | WizardCoder [25] | 15B | paper | project | StarCoder [48] | 78K |
| Sentiment Analysis | IT-MTL [77] | 220M | paper | project | T5 [38] | - |
| Arithmetic | Goat [78] | 7B | paper | project | LLaMA [39] | 1.0M |
| Information Extraction | InstructUIE [79] | 11B | paper | project | FLAN-T5 [7] | 1.0M |

| Name | Paper | Project |
|---|---|---|
| LoRA [80] | paper | project |
| HINT [81] | paper | project |
| QLoRA [82] | paper | project |
| LOMO [83] | paper | project |
| Delta-tuning [84] | paper | project |

[1] Daniel Khashabi, Sewon Min, Tushar Khot, Ashish Sabharwal, Oyvind Tafjord, Peter Clark, and Hannaneh Hajishirzi. Unifiedqa: Crossing format boundaries with a single qa system. arXiv preprint arXiv:2005.00700, 2020. Paper

[2] Tianbao Xie, Chen Henry Wu, Peng Shi, Ruiqi Zhong, Torsten Scholak, Michihiro Yasunaga, Chien-Sheng Wu, Ming Zhong, Pengcheng Yin, Sida I. Wang, Victor Zhong, Bailin Wang, Chengzu Li, Connor Boyle, Ansong Ni, Ziyu Yao, Dragomir R. Radev, Caiming Xiong, Lingpeng Kong, Rui Zhang, Noah A. Smith, Luke Zettlemoyer, and Tao Yu. Unifiedskg: Unifying and multi-tasking structured knowledge grounding with text-to-text language models. In Conference on Empirical Methods in Natural Language Processing, 2022. Paper

[3] Swaroop Mishra, Daniel Khashabi, Chitta Baral, and Hannaneh Hajishirzi. Cross-task generalization via natural language crowdsourcing instructions. In ACL, 2022. Paper

[3] Or Honovich, Thomas Scialom, Omer Levy, and Timo Schick. Unnatural instructions: Tuning language models with (almost) no human labor. arXiv preprint arXiv:2212.09689, 2022. Paper

[4] Yizhong Wang, Swaroop Mishra, Pegah Alipoormolabashi, Yeganeh Kordi, Amirreza Mirzaei, Anjana Arunkumar, Arjun Ashok, Arut Selvan Dhanasekaran, Atharva Naik, David Stap, et al. Super-naturalinstructions: Generalization via declarative instructions on 1600+ tasks. In EMNLP, 2022. Paper

[5] Victor Sanh, Albert Webson, Colin Raffel, Stephen H Bach, Lintang Sutawika, Zaid Alyafeai, Antoine Chaffin, Arnaud Stiegler, Teven Le Scao, Arun Raja, et al. Multitask prompted training enables zero-shot task generalization. arXiv preprint arXiv:2110.08207, 2021. Paper

[6] Niklas Muennighoff, Thomas Wang, Lintang Sutawika, Adam Roberts, Stella Biderman, Teven Le Scao, M Saiful Bari, Sheng Shen, Zheng-Xin Yong, Hailey Schoelkopf, et al. Crosslingual generalization through multitask finetuning. arXiv preprint arXiv:2211.01786, 2022. Paper

[7] Shayne Longpre, Le Hou, Tu Vu, Albert Webson, Hyung Won Chung, Yi Tay, Denny Zhou, Quoc V Le, Barret Zoph, Jason Wei, et al. The flan collection: Designing data and methods for effective instruction tuning. arXiv preprint arXiv:2301.13688, 2023. Paper

[8] Ge Zhang, Yemin Shi, Ruibo Liu, Ruibin Yuan, Yizhi Li, Siwei Dong, Yu Shu, Zhaoqun Li, Zekun Wang, Chenghua Lin, Wen-Fen Huang, and Jie Fu. Chinese open instruction generalist: A preliminary release. ArXiv, abs/2304.07987, 2023. Paper

[9] Long Ouyang, Jeffrey Wu, Xu Jiang, Diogo Almeida, Carroll Wainwright, Pamela Mishkin, Chong Zhang, Sandhini Agarwal, Katarina Slama, Alex Ray, et al. Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems, 35:27730–27744, 2022. Paper

[10] Mike Conover, Matt Hayes, Ankit Mathur, Xiangrui Meng, Jianwei Xie, Jun Wan, Sam Shah, Ali Ghodsi, Patrick Wendell, Matei Zaharia, et al. Free dolly: Introducing the world’s first truly open instruction-tuned llm, 2023. Paper

[11] Chunting Zhou, Pengfei Liu, Puxin Xu, Srini Iyer, Jiao Sun, Yuning Mao, Xuezhe Ma, Avia Efrat, Ping Yu, L. Yu, Susan Zhang, Gargi Ghosh, Mike Lewis, Luke Zettlemoyer, and Omer Levy. Lima: Less is more for alignment. ArXiv, abs/2305.11206, 2023. Paper

[12] OpenAI. Introducing chatgpt. Blog post openai.com/blog/chatgpt, 2022. Paper

[13] Andreas Köpf, Yannic Kilcher, Dimitri von Rütte, Sotiris Anagnostidis, Zhi-Rui Tam, Keith Stevens, Abdullah Barhoum, Nguyen Minh Duc, Oliver Stanley, Richárd Nagyfi, et al. Openassistant conversations–democratizing large language model alignment. arXiv preprint arXiv:2304.07327, 2023. Paper

[14] LAION.ai. Oig: the open instruction generalist dataset, 2023.

[15] Fuzhao Xue, Kabir Jain, Mahir Hitesh Shah, Zangwei Zheng, and Yang You. Instruction in the wild: A user-based instruction dataset. github.com/XueFuzhao/InstructionWild, 2023.

[16] Can Xu, Qingfeng Sun, Kai Zheng, Xiubo Geng, Pu Zhao, Jiazhan Feng, Chongyang Tao, and Daxin Jiang. Wizardlm: Empowering large language models to follow complex instructions, 2023. Paper

[17] Rohan Taori, Ishaan Gulrajani, Tianyi Zhang, Yann Dubois, Xuechen Li, Carlos Guestrin, Percy Liang, and Tatsunori B Hashimoto. Alpaca: A strong, replicable instruction-following model. Stanford Center for Research on Foundation Models. https://crfm.stanford.edu/2023/03/13/alpaca.html, 3(6):7, 2023.

[18] Hanmeng Liu, Zhiyang Teng, Leyang Cui, Chaoli Zhang, Qiji Zhou, and Yue Zhang. Logicot: Logical chain-of-thought instruction-tuning data collection with gpt-4. ArXiv, abs/2305.12147, 2023. Paper

[19] Baolin Peng, Chunyuan Li, Pengcheng He, Michel Galley, and Jianfeng Gao. Instruction tuning with gpt-4. arXiv preprint arXiv:2304.03277, 2023. Paper

[20] Wei-Lin Chiang, Zhuohan Li, Zi Lin, Ying Sheng, Zhanghao Wu, Hao Zhang, Lianmin Zheng, Siyuan Zhuang, Yonghao Zhuang, Joseph E Gonzalez, et al. Vicuna: An open-source chatbot impressing gpt-4 with 90% chatgpt quality. See https://vicuna.lmsys.org (accessed 14 April 2023), 2023.

[21] Canwen Xu, Daya Guo, Nan Duan, and Julian McAuley. Baize: An open-source chat model with parameter-efficient tuning on self-chat data. arXiv preprint arXiv:2304.01196, 2023. Paper

[22] Ning Ding, Yulin Chen, Bokai Xu, Yujia Qin, Zhi Zheng, Shengding Hu, Zhiyuan Liu, Maosong Sun, and Bowen Zhou. Enhancing chat language models by scaling high-quality instructional conversations. arXiv preprint arXiv:2305.14233, 2023. Paper

[23] JosephusCheung. Guanaco: Generative universal assistant for natural-language adaptive context-aware omnilingual outputs, 2021.

[24] Subhabrata Mukherjee, Arindam Mitra, Ganesh Jawahar, Sahaj Agarwal, Hamid Palangi, and Ahmed Awadallah. 2023. Orca: Progressive learning from complex explanation traces of gpt-4. arXiv preprint arXiv:2306.02707. Paper

[25] Ziyang Luo, Can Xu, Pu Zhao, Qingfeng Sun, Xiubo Geng, Wenxiang Hu, Chongyang Tao, Jing Ma, Qingwei Lin, and Daxin Jiang. 2023. Wizardcoder: Empowering code large language models with evol-instruct. Paper

[26] Yuxiang Wei, Zhe Wang, Jiawei Liu, Yifeng Ding, and Lingming Zhang. 2023. Magicoder: Source code is all you need. arXiv preprint arXiv:2312.02120. Paper

[27] Zhaojian Yu, Xin Zhang, Ning Shang, Yangyu Huang, Can Xu, Yishujie Zhao, Wenxiang Hu, and Qiufeng Yin. 2023. Wavecoder: Widespread and versatile enhanced instruction tuning with refined data generation. arXiv preprint arXiv:2312.14187. Paper

[28] Suriya Gunasekar, Yi Zhang, Jyoti Aneja, Caio César Teodoro Mendes, Allie Del Giorno, Sivakanth Gopi, Mojan Javaheripi, Piero Kauffmann, Gustavo de Rosa, Olli Saarikivi, et al. 2023. Textbooks are all you need. arXiv preprint arXiv:2306.11644. Paper

[29] Yuanzhi Li, Sébastien Bubeck, Ronen Eldan, Allie Del Giorno, Suriya Gunasekar, and Yin Tat Lee. 2023. Textbooks are all you need ii: phi-1.5 technical report. arXiv preprint arXiv:2309.05463. Paper

[30] Banghua Zhu, Evan Frick, Tianhao Wu, Hanlin Zhu, and Jiantao Jiao. 2023. Starling-7b: Improving llm helpfulness & harmlessness with rlaif. Paper

[31] Yizhong Wang, Yeganeh Kordi, Swaroop Mishra, Alisa Liu, Noah A Smith, Daniel Khashabi, and Hannaneh Hajishirzi. Self-instruct: Aligning language model with self generated instructions. arXiv preprint arXiv:2212.10560, 2022. Paper

[32] Xian Li, Ping Yu, Chunting Zhou, Timo Schick, Luke Zettlemoyer, Omer Levy, Jason Weston, and Mike Lewis. 2023. Self-alignment with instruction backtranslation. arXiv preprint arXiv:2308.06259. Paper

[33] Zixiang Chen, Yihe Deng, Huizhuo Yuan, Kaixuan Ji, and Quanquan Gu. 2024. Self-play fine-tuning converts weak language models to strong language models. arXiv preprint arXiv:2401.01335. Paper

[34] Niklas Muennighoff, Thomas Wang, Lintang Sutawika, Adam Roberts, Stella Biderman, Teven Le Scao, M Saiful Bari, Sheng Shen, Zheng-Xin Yong, Hailey Schoelkopf, et al. 2022. Crosslingual generalization through multitask finetuning. arXiv preprint arXiv:2211.01786. Paper

[35] Hyung Won Chung, Le Hou, S. Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Dasha Valter, Sharan Narang, Gaurav Mishra, Adams Wei Yu, Vincent Zhao, Yanping Huang, Andrew M. Dai, Hongkun Yu, Slav Petrov, Ed Huai hsin Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei. Scaling instruction-finetuned language models. ArXiv, abs/2210.11416, 2022. Paper

[36] Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, T. J. Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeff Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. Language models are few-shot learners. ArXiv, abs/2005.14165, 2020. Paper

[37] Teven Le Scao, Angela Fan, Christopher Akiki, Ellie Pavlick, Suzana Ilić, Daniel Hesslow, Roman Castagné, et al. Bloom: A 176b-parameter open-access multilingual language model. arXiv preprint arXiv:2211.05100, 2022. Paper

[38] Colin Raffel, Noam M. Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J. Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. ArXiv, abs/1910.10683, 2019. Paper

[39] Hugo Touvron, Thibaut Lavril, Gautier Izacard, Xavier Martinet, Marie-Anne Lachaux, Timothée Lacroix, Baptiste Rozière, Naman Goyal, Eric Hambro, Faisal Azhar, Aurélien Rodriguez, Armand Joulin, Edouard Grave, and Guillaume Lample. Llama: Open and efficient foundation language models. ArXiv, abs/2302.13971, 2023. Paper

[40] Yuntao Bai, Saurav Kadavath, Sandipan Kundu, Amanda Askell, Jackson Kernion, Andy Jones, Anna Chen, Anna Goldie, Azalia Mirhoseini, Cameron McKinnon, et al. Constitutional ai: Harmlessness from ai feedback. arXiv preprint arXiv:2212.08073, 2022. Paper

[41] Zhengxiao Du, Yujie Qian, Xiao Liu, Ming Ding, Jiezhong Qiu, Zhilin Yang, and Jie Tang. Glm: General language model pretraining with autoregressive blank infilling. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 320–335, 2022. Paper

[42] Srinivas Iyer, Xiaojuan Lin, Ramakanth Pasunuru, Todor Mihaylov, Daniel Simig, Ping Yu, Kurt Shuster, Tianlu Wang, Qing Liu, Punit Singh Koura, Xian Li, Brian O’Horo, Gabriel Pereyra, Jeff Wang, Christopher Dewan, Asli Celikyilmaz, Luke Zettlemoyer, and Veselin Stoyanov. Opt-iml: Scaling language model instruction meta learning through the lens of generalization. ArXiv, abs/2212.12017, 2022. Paper

[43] Susan Zhang, Stephen Roller, Naman Goyal, Mikel Artetxe, Moya Chen, Shuohui Chen, Christopher Dewan, Mona T. Diab, Xian Li, Xi Victoria Lin, Todor Mihaylov, Myle Ott, Sam Shleifer, Kurt Shuster, Daniel Simig, Punit Singh Koura, Anjali Sridhar, Tianlu Wang, and Luke Zettlemoyer. 2022. Opt: Open pre-trained transformer language models. ArXiv, abs/2205.01068. Paper

[44] Mike Conover, Matt Hayes, Ankit Mathur, Xiangrui Meng, Jianwei Xie, Jun Wan, Sam Shah, Ali Ghodsi, Patrick Wendell, Matei Zaharia, et al. Free dolly: Introducing the world’s first truly open instruction-tuned llm, 2023.

[45] Stella Rose Biderman, Hailey Schoelkopf, Quentin G. Anthony, Herbie Bradley, Kyle O’Brien, Eric Hallahan, Mohammad Aflah Khan, Shivanshu Purohit, USVSN Sai Prashanth, Edward Raff, Aviya Skowron, Lintang Sutawika, and Oskar van der Wal. Pythia: A suite for analyzing large language models across training and scaling. ArXiv, abs/2304.01373, 2023. Paper

[46] Ebtesam Almazrouei, Hamza Alobeidli, Abdulaziz Alshamsi, Alessandro Cappelli, Ruxandra Cojocaru, Merouane Debbah, Etienne Goffinet, Daniel Heslow, Julien Launay, Quentin Malartic, Badreddine Noune, Baptiste Pannier, and Guilherme Penedo. Falcon-40B: an open large language model with state-of-the-art performance. 2023. Paper

[47] OpenAccess AI Collective. software: huggingface.co/openaccess-ai-collective/minotaur-15b, 2023.

[48] Raymond Li, Loubna Ben Allal, Yangtian Zi, Niklas Muennighoff, Denis Kocetkov, Chenghao Mou, Marc Marone, Christopher Akiki, Jia Li, Jenny Chim, et al. Starcoder: may the source be with you! arXiv preprint arXiv:2305.06161, 2023. Paper

[49] NousResearch. software: huggingface.co/NousResearch/Nous-Hermes-13b, 2023.

[50] Yizhong Wang, Hamish Ivison, Pradeep Dasigi, Jack Hessel, Tushar Khot, Khyathi Raghavi Chandu, David Wadden, Kelsey MacMillan, Noah A. Smith, Iz Beltagy, and Hanna Hajishirzi. How far can camels go? exploring the state of instruction tuning on open resources. ArXiv, abs/2306.04751, 2023. Paper

[51] YuLan-Chat-Team. Yulan-chat: An open-source bilingual chatbot. github.com/RUC-GSAI/YuLan-Chat, 2023.

[52] Sun Tianxiang and Qiu Xipeng. Moss. Blog post txsun1997.github.io/blogs/moss.html, 2023.

[53] Jon Durbin. Airoboros. software: github.com/jondurbin/airoboros, 2023.

[54] Zhiyang Xu, Ying Shen, and Lifu Huang. Multiinstruct: Improving multi-modal zero-shot learning via instruction tuning. ArXiv, abs/2212.10773, 2022. Paper

[55] Xiaoman Zhang, Chaoyi Wu, Ziheng Zhao, Weixiong Lin, Ya Zhang, Yanfeng Wang, and Weidi Xie. Pmc-vqa: Visual instruction tuning for medical visual question answering. ArXiv, abs/2305.10415, 2023. Paper

[56] Zhenfei Yin, Jiong Wang, Jianjian Cao, Zhelun Shi, Dingning Liu, Mukai Li, Lu Sheng, Lei Bai, Xiaoshui Huang, Zhiyong Wang, Wanli Ouyang, and Jing Shao. Lamm: Language-assisted multi-modal instruction-tuning dataset, framework, and benchmark. ArXiv, abs/2306.06687, 2023. Paper

[57] Zhiyang Xu, Chao Feng, Rulin Shao, Trevor Ashby, Ying Shen, Di Jin, Yu Cheng, Qifan Wang, and Lifu Huang. 2024. Vision-flan: Scaling human-labeled tasks in visual instruction tuning. arXiv preprint arXiv:2402.11690. Paper

[58] Guiming Hardy Chen, Shunian Chen, Ruifei Zhang, Junying Chen, Xiangbo Wu, Zhiyi Zhang, Zhihong Chen, Jianquan Li, Xiang Wan, and Benyou Wang. 2024. Allava: Harnessing gpt4v-synthesized data for a lite vision-language model. arXiv preprint arXiv:2402.11684. Paper

[59] Lin Chen, Jisong Li, Xiaoyi Dong, Pan Zhang, Conghui He, Jiaqi Wang, Feng Zhao, and Dahua Lin. 2023. Sharegpt4v: Improving large multi-modal models with better captions. arXiv preprint arXiv:2311.12793. Paper

[60] Tim Brooks, Aleksander Holynski, and Alexei A. Efros. Instructpix2pix: Learning to follow image editing instructions. ArXiv, abs/2211.09800, 2022. Paper

[61] Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee. Visual instruction tuning. ArXiv, abs/2304.08485, 2023. Paper

[62] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 10684–10695, 2022. Paper

[63] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning, 2021. Paper

[64] Hang Zhang, Xin Li, and Lidong Bing. Video-llama: An instruction-tuned audio-visual language model for video understanding. arXiv preprint arXiv:2306.02858, 2023. Paper

[65] Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. BLIP-2: bootstrapping language-image pre-training with frozen image encoders and large language models. In ICML, 2023. Paper

[66] Rohit Girdhar, Alaaeldin El-Nouby, Zhuang Liu, Mannat Singh, Kalyan Vasudev Alwala, Armand Joulin, and Ishan Misra. Imagebind: One embedding space to bind them all. In CVPR, 2023. Paper

[67] Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale Fung, and Steven Hoi. Instructblip: Towards general-purpose vision-language models with instruction tuning. ArXiv, abs/2305.06500, 2023. Paper

[68] Bo Li, Yuanhan Zhang, Liangyu Chen, Jinghao Wang, Jingkang Yang, and Ziwei Liu. Otter: A multi-modal model with in-context instruction tuning. ArXiv, abs/2305.03726, 2023. Paper

[69] Anas Awadalla, Irena Gao, Joshua Gardner, Jack Hessel, Yusuf Hanafy, Wanrong Zhu, Kalyani Marathe, Yonatan Bitton, Samir Gadre, Jenia Jitsev, et al. Openflamingo, 2023.

[70] Tao Gong, Chengqi Lyu, Shilong Zhang, Yudong Wang, Miao Zheng, Qianmengke Zhao, Kuikun Liu, Wenwei Zhang, Ping Luo, and Kai Chen. Multimodal-gpt: A vision and language model for dialogue with humans. ArXiv, abs/2305.04790, 2023. Paper

[71] Zheng Liu, Aoxiao Zhong, Yiwei Li, Longtao Yang, Chao Ju, Zihao Wu, Chong Ma, Peng Shu, Cheng Chen, Sekeun Kim, Haixing Dai, Lin Zhao, Dajiang Zhu, Jun Liu, Wei Liu, Dinggang Shen, Xiang Li, Quanzheng Li, and Tianming Liu. Radiology-gpt: A large language model for radiology. 2023. Paper

[72] Yunxiang Li, Zihan Li, Kai Zhang, Ruilong Dan, and You Zhang. Chatdoctor: A medical chat model fine-tuned on llama model using medical domain knowledge. ArXiv, abs/2303.14070, 2023. Paper

[73] Haochun Wang, Chi Liu, Sendong Zhao, Bing Qin, and Ting Liu. Chatglm-med. github.com/SCIR-HI/Med-ChatGLM, 2023.

[74] Yue Zhang, Leyang Cui, Deng Cai, Xinting Huang, Tao Fang, and Wei Bi. 2023. Multi-task instruction tuning of llama for specific scenarios: A preliminary study on writing assistance. ArXiv, abs/2305.13225. Paper

[75] Vipul Raheja, Dhruv Kumar, Ryan Koo, and Dongyeop Kang. 2023. Coedit: Text editing by task-specific instruction tuning. ArXiv, abs/2305.09857. Paper

[76] Tuhin Chakrabarty, Vishakh Padmakumar, and Hengxing He. 2022. Help me write a poem: Instruction tuning as a vehicle for collaborative poetry writing. ArXiv, abs/2210.13669. Paper

[77] Siddharth Varia, Shuai Wang, Kishaloy Halder, Robert Vacareanu, Miguel Ballesteros, Yassine Benajiba, Neha Ann John, Rishita Anubhai, Smaranda Muresan, and Dan Roth. 2022. Instruction tuning for few-shot aspect-based sentiment analysis. ArXiv, abs/2210.06629. Paper

[78] Tiedong Liu and Bryan Kian Hsiang Low. Goat: Fine-tuned llama outperforms gpt-4 on arithmetic tasks. arXiv preprint arXiv:2305.14201, 2023. Paper

[79] Xiao Wang, Wei Zhou, Can Zu, Han Xia, Tianze Chen, Yuan Zhang, Rui Zheng, Junjie Ye, Qi Zhang, Tao Gui, Jihua Kang, J. Yang, Siyuan Li, and Chunsai Du. Instructuie: Multi-task instruction tuning for unified information extraction. ArXiv, abs/2304.08085, 2023. Paper

[80] Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685. Paper

[81] Hamish Ivison, Akshita Bhagia, Yizhong Wang, Hannaneh Hajishirzi, and Matthew E. Peters. 2022. Hint: Hypernetwork instruction tuning for efficient zero-shot generalisation. ArXiv, abs/2212.10315. Paper

[82] Tim Dettmers, Artidoro Pagnoni, Ari Holtzman, and Luke Zettlemoyer. 2023. Qlora: Efficient finetuning of quantized llms. arXiv preprint arXiv:2305.14314. Paper

[83] Kai Lv, Yuqing Yang, Tengxiao Liu, Qi jie Gao, Qipeng Guo, and Xipeng Qiu. 2023. Full parameter fine-tuning for large language models with limited resources. Paper

[84] Weize Chen, Jing Yi, Weilin Zhao, Xiaozhi Wang, Zhiyuan Liu, Haitao Zheng, Jianfei Chen, Y. Liu, Jie Tang, Juanzi Li, and Maosong Sun. 2023. Parameter-efficient fine-tuning of large-scale pre-trained language models. Nature Machine Intelligence, 5:220–235. Paper

If you have any questions or suggestions, please feel free to create an issue or send an e-mail to the authors.