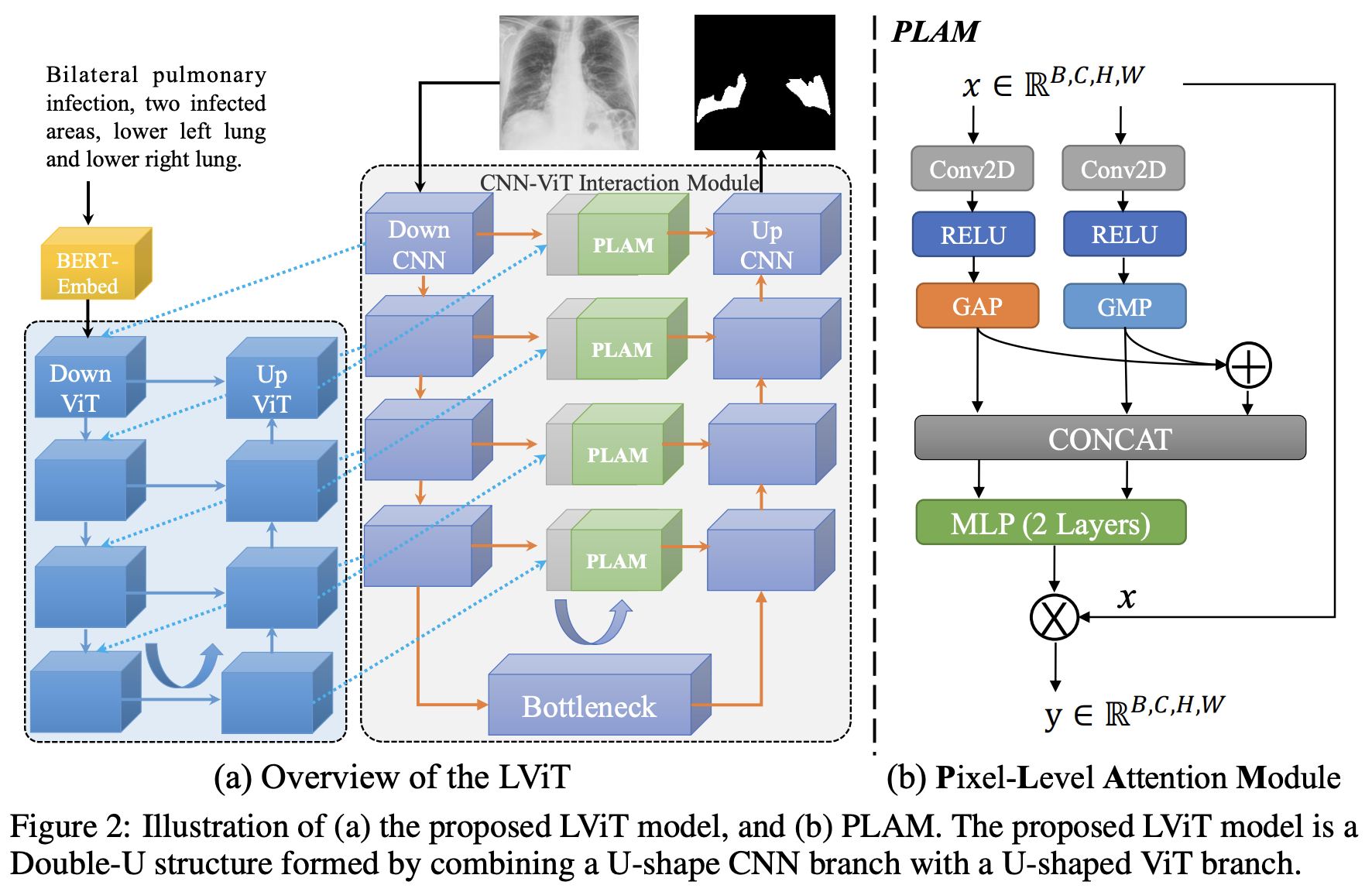

This repo is the official implementation of "LViT: Language meets Vision Transformer in Medical Image Segmentation" Paper Link

Install the dependencies from requirements.txt using:

pip install -r requirements.txt

The original data can be downloaded from the following links:

- QaTa-COV19 Dataset - Link (Original)
- MoNuSeg Dataset - Link (Original)

(Note: The text annotations of the QaTa-COV19 dataset will be released in the future.)

Then prepare the datasets in the following format for easy use of the code:

    datasets
    ├── Covid19
    │   ├── Test_Folder
    │   │   ├── Test_text.xlsx
    │   │   ├── img
    │   │   └── labelcol
    │   ├── Train_Folder
    │   │   ├── Train_text.xlsx
    │   │   ├── img
    │   │   └── labelcol
    │   └── Val_Folder
    │       ├── Val_text.xlsx
    │       ├── img
    │       └── labelcol
    └── MoNuSeg
        ├── Test_Folder
        │   ├── Test_text.xlsx
        │   ├── img
        │   └── labelcol
        ├── Train_Folder
        │   ├── Train_text.xlsx
        │   ├── img
        │   └── labelcol
        └── Val_Folder
            ├── Val_text.xlsx
            ├── img
            └── labelcol
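Before training, it can help to confirm that the folders match the layout above. The following is a minimal sketch, not part of the repository: the helper name `missing_dataset_paths` and the default root `datasets` are assumptions made for illustration, and only the folder and file names are taken from the tree.

```python
from pathlib import Path

# Folder and file names are copied from the layout above.
# The helper itself is hypothetical and not part of the LViT code.
DATASETS = ("Covid19", "MoNuSeg")
SPLITS = {
    "Train_Folder": "Train_text.xlsx",
    "Val_Folder": "Val_text.xlsx",
    "Test_Folder": "Test_text.xlsx",
}


def missing_dataset_paths(root="datasets"):
    """Return the expected paths that do not exist under `root`."""
    root = Path(root)
    missing = []
    for dataset in DATASETS:
        for split, text_file in SPLITS.items():
            split_dir = root / dataset / split
            expected = [
                split_dir / text_file,   # text annotations
                split_dir / "img",       # input images
                split_dir / "labelcol",  # segmentation masks
            ]
            missing.extend(str(p) for p in expected if not p.exists())
    return missing


if __name__ == "__main__":
    for path in missing_dataset_paths():
        print("missing:", path)
```

Running the script from the directory that contains `datasets` prints one line per missing entry and nothing if the layout is complete.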

For pre-training, you can replace LViT with U-Net and run:

python train_model.py

You can train to get your own model. Note that using the pre-trained model from step 2.1 gives better performance; alternatively, you can simply change the model_name from LViT to LViT_pretrain in the config.

python train_model.py

Here, we provide pre-trained weights on QaTa-COV19 and MoNuSeg. If you do not want to train the models yourself, you can download them from the following links:

(Note: the pre-trained model will be released in the future.)

- QaTa-COV19:

- MoNuSeg:

First, set the session name in Config.py to the one used in the training phase. Then run:

python test_model.py

This produces the Dice and IoU scores and the visualization results.

| Dataset | Model Name | Dice (%) | IoU (%) |
|---|---|---|---|
| QaTa-COV19 | U-Net | 79.02 | 69.46 |
| QaTa-COV19 | LViT-T | 83.66 | 75.11 |
| MoNuSeg | U-Net | 76.45 | 62.86 |
| MoNuSeg | LViT-T | 80.36 | 67.31 |
| MoNuSeg | LViT-T w/o pretrain | 79.98 | 66.83 |
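For readers unfamiliar with the two metrics in the table, the sketch below computes them for a single pair of binary masks using the standard definitions, Dice = 2|A∩B| / (|A| + |B|) and IoU = |A∩B| / |A∪B|. It is an illustration only: the function name `dice_and_iou` is hypothetical, masks are assumed to be flattened to sequences of 0/1, and the evaluation in test_model.py may differ in details such as smoothing terms or how scores are averaged over images.

```python
def dice_and_iou(pred, target):
    """Return (Dice, IoU) for two binary masks given as flat sequences of 0/1."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")

    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    pred_sum = sum(1 for p in pred if p)
    target_sum = sum(1 for t in target if t)
    union = pred_sum + target_sum - intersection

    # Assumption: two empty masks count as perfect agreement.
    if union == 0:
        return 1.0, 1.0

    dice = 2 * intersection / (pred_sum + target_sum)
    iou = intersection / union
    return dice, iou


if __name__ == "__main__":
    # One overlapping pixel, two foreground pixels in each mask.
    dice, iou = dice_and_iou([1, 1, 0, 0], [1, 0, 1, 0])
    print(f"Dice={dice:.4f} IoU={iou:.4f}")
```

The table reports both values as percentages, so the outputs of this sketch would be multiplied by 100 for comparison.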

In our code, we carefully set the random seed and set cuDNN to 'deterministic' mode to eliminate randomness. However, some factors can still cause different training results, e.g., the CUDA version, the GPU type, and the number of GPUs. Our experiments used two NVIDIA V100 (32G) GPUs with CUDA 11.2. In addition, the upsampling operation is a significant source of nondeterminism in multi-GPU setups. See https://pytorch.org/docs/stable/notes/randomness.html for more details.
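The seeding described above typically looks like the following sketch. This is not the repository's exact code: the function name `seed_everything` and the default seed are assumptions, and the NumPy and PyTorch parts are skipped when those packages are not installed so that the snippet runs anywhere.

```python
import os
import random


def seed_everything(seed=0):
    """Seed the common RNGs; return True if PyTorch was found and configured."""
    random.seed(seed)
    # Only affects Python processes started after this point.
    os.environ["PYTHONHASHSEED"] = str(seed)

    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass

    try:
        import torch
    except ImportError:
        return False

    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)  # safe to call without a GPU
    # Ask cuDNN for deterministic kernels and disable its auto-tuner,
    # which may otherwise pick different algorithms between runs.
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False
    return True


if __name__ == "__main__":
    seed_everything(42)
    print(random.random())
```

Even with these settings, some CUDA operations have no deterministic implementation, which is consistent with the remaining run-to-run differences noted above. The PyTorch randomness notes also describe `torch.use_deterministic_algorithms(True)`, which raises an error when such an operation is used.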