A Knowledge Graph Checking Benchmark of AI Agent for Biomedical Science.

[Paper] [Project Page] [HuggingFace]

Pursuing artificial intelligence for biomedical science, a.k.a. the AI Scientist, is drawing increasing attention; one common approach is to build a copilot agent driven by Large Language Models (LLMs).

However, such systems are typically evaluated either by direct Question-Answering (QA) of the LLM itself or through biomedical experiments. How to precisely benchmark biomedical agents from an AI Scientist perspective remains largely unexplored. To this end, we draw inspiration from one of the most important abilities of scientists, understanding the literature, and introduce BioKGBench.

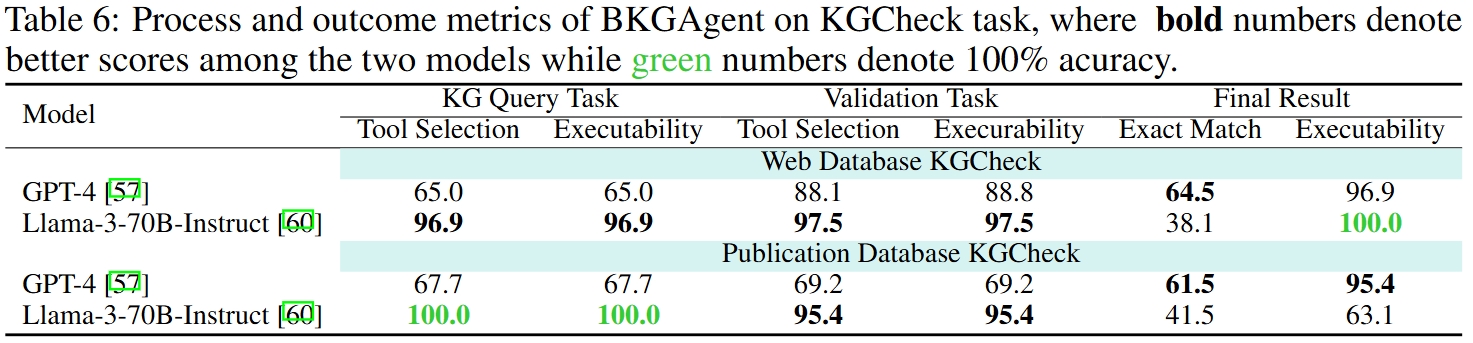

In contrast to traditional evaluation benchmarks that focus only on factual QA, where LLMs are known to suffer from hallucination, we first disentangle Understanding Literature into two atomic abilities: i) understanding the unstructured text of research papers, by performing scientific claim verification, and ii) interacting with structured knowledge through Knowledge-Graph Question-Answering (KGQA), as a form of literature grounding. We then formulate a novel agent task, dubbed KGCheck, which uses KGQA and domain-based Retrieval-Augmented Generation (RAG) to identify factual errors in existing large-scale knowledge graph databases. We collect over two thousand samples for the two atomic tasks and 225 high-quality annotated samples for the agent task. Surprisingly, we find that state-of-the-art agents, both those designed for daily scenarios and biomedical ones, either fail or perform poorly on our benchmark. We then introduce a simple yet effective baseline, dubbed BKGAgent. On a widely used dataset, we discover over 90 factual errors, which demonstrates the effectiveness of our approach and provides substantial value to both the research community and practitioners in the biomedical domain.

    BioKGBench
    |-- assets
    |   `-- img
    |-- config
    |   |-- kg_config.yml              # config for building the KG and connecting to Neo4j
    |   `-- llm_config.yml             # config for the LLM
    |-- data
    |   |-- bioKG                      # dataset for building the KG
    |   |-- kgcheck                    # dataset for the KGCheck experiment
    |   |-- kgqa                       # dataset for the KGQA experiment
    |   `-- scv                        # dataset for the SCV experiment
    `-- tasks
        |-- KGCheck                    # KGCheck task
        |-- KGQA                       # KGQA task
        |-- SCV                        # SCV task
        `-- utils
            |-- agent_fucs             # agent functions
            |-- embedding              # embedding model starter for the SCV task
            |-- kg                     # KG builder and KG connector
            |-- constant_.py           # constant variables
            `-- threadpool_concurrency_.py  # thread-pool concurrency method

Dataset (must be downloaded from Hugging Face)

- bioKG: The knowledge graph used in the dataset.
- KGCheck: Given a knowledge graph and a scientific claim, the agent needs to check whether the claim is supported by the knowledge graph. The agent can interact with the knowledge graph by asking questions and receiving answers.
  - Dev: 20 samples
  - Test: 205 samples
  - corpus: 51 samples
- KGQA: Given a knowledge graph and a question, the agent needs to answer the question based on the knowledge graph.
  - Dev: 60 samples
  - Test: 638 samples
- SCV: Given a scientific claim and a research paper, the agent needs to check whether the claim is supported by the research paper.
  - Dev: 120 samples
  - Test: 1265 samples
  - corpus: 5664 samples
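The commands later in this README read the splits as JSON (data/kgcheck/dev.json) and JSON Lines (data/scv/dev.jsonl). A minimal sketch for loading a split and comparing its size with the counts listed above, assuming a .json split holds a top-level list of samples and a .jsonl split holds one sample per line; the per-sample schema is not documented here, so the sketch never touches individual fields:

```python
import json
from pathlib import Path


def parse_split(text, jsonl):
    """Parse the contents of a split file into a list of samples.

    Assumption: .json splits hold a top-level JSON list, and .jsonl splits
    hold one JSON object per line. No sample schema is assumed.
    """
    if jsonl:
        # Skip blank lines, e.g. a trailing newline at the end of the file.
        return [json.loads(line) for line in text.splitlines() if line.strip()]
    data = json.loads(text)
    if not isinstance(data, list):
        raise ValueError(f"expected a top-level JSON list, got {type(data).__name__}")
    return data


def load_split(path):
    """Read a split file from disk and parse it according to its extension."""
    path = Path(path)
    text = path.read_text(encoding="utf-8")
    return parse_split(text, jsonl=path.suffix == ".jsonl")


if __name__ == "__main__":
    # Expected sizes come from the dataset list above; the file paths are
    # assumptions based on the commands used later in this README.
    expected = {"data/kgcheck/dev.json": 20, "data/scv/dev.jsonl": 120}
    for file, n in expected.items():
        if not Path(file).exists():
            print(f"{file}: not found (download the dataset first)")
            continue
        print(f"{file}: {len(load_split(file))} samples, expected {n}")
```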


[2024-06-06] BioKGBench v0.1.0 is released.

This project provides a conda-based environment setup; you can reproduce the environment with the following commands:

conda create -n agent4s-biokg python=3.10

conda activate agent4s-biokg

pip install -r requirements.txt


Important Note about KG

- Building this knowledge graph (KG) locally requires at least 26 GB of disk space.

- We provide the TSV files for constructing the KG in the data/bioKG directory. These files are parsed from the following databases. The databases have their own licenses, and use of the KG and data files still requires compliance with these data use restrictions. Please visit the data sources directly for more information:

| Source type | Source | URL | Reference |
|---|---|---|---|
| Database | UniProt | https://www.uniprot.org/ | PubMed |
| Database | TISSUES | https://tissues.jensenlab.org/ | PubMed |
| Database | STRING | https://string-db.org/ | PubMed |
| Database | SMPDB | https://smpdb.ca/ | PubMed |
| Database | SIGNOR | https://signor.uniroma2.it/ | PubMed |
| Database | Reactome | https://reactome.org/ | PubMed |
| Database | Intact | https://www.ebi.ac.uk/intact/ | PubMed |
| Database | HGNC | https://www.genenames.org/ | PubMed |
| Database | DisGeNET | https://www.disgenet.org/ | PubMed |
| Database | DISEASES | https://diseases.jensenlab.org/ | PubMed |
| Ontology | Disease Ontology | https://disease-ontology.org/ | PubMed |
| Ontology | Brenda Tissue Ontology | https://www.brenda-enzymes.org/ontology.php?ontology_id=3 | PubMed |
| Ontology | Gene Ontology | https://geneontology.org/ | PubMed |
| Ontology | Protein Modification Ontology | https://www.ebi.ac.uk/ols/ontologies/mod | PubMed |
| Ontology | Molecular Interactions Ontology | https://www.ebi.ac.uk/ols/ontologies/mi | PubMed |
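Before building the KG, you may want to confirm that the 26 GB disk requirement noted above is met. A minimal standard-library sketch; checking the current directory is an assumption, since Docker may store the Neo4j database on a different filesystem:

```python
import shutil

# Minimum free space stated in this README for building the KG locally.
REQUIRED_GB = 26


def has_enough_space(path=".", required_gb=REQUIRED_GB):
    """Return (ok, free_gb) for the filesystem that contains `path`.

    Uses binary gigabytes (1024**3 bytes), which is slightly stricter than
    26 * 10**9 bytes.
    """
    free_gb = shutil.disk_usage(path).free / 1024**3
    return free_gb >= required_gb, free_gb


if __name__ == "__main__":
    ok, free_gb = has_enough_space(".")
    print(f"{free_gb:.1f} GB free: {'enough' if ok else 'not enough'} for the KG build")
```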

Obtaining the dataset:

The dataset can be found in the [release]. It is divided into three parts, KGCheck, KGQA, and SCV, and each part is split into Dev and Test sets.

[🤗 BioKGBench-Dataset]

git lfs install

GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/datasets/AutoLab-Westlake/BioKGBench-Dataset ./data

cd data

git lfs pull
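Because the clone above uses GIT_LFS_SKIP_SMUDGE=1, large files remain small Git LFS pointer files until git lfs pull completes. A minimal sketch for checking the download, assuming the Hugging Face dataset unpacks into the bioKG, kgcheck, kgqa, and scv sub-folders shown in the repository layout:

```python
from pathlib import Path

# Every Git LFS pointer file starts with this line until `git lfs pull`
# replaces it with the real content.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"

# Assumed sub-folders of ./data, taken from the repository layout above.
EXPECTED_DIRS = ("bioKG", "kgcheck", "kgqa", "scv")


def is_lfs_pointer(path):
    """Return True if `path` is still an un-pulled Git LFS pointer file."""
    with open(path, "rb") as f:
        return f.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX


def check_data(root="data"):
    """Return (missing_dirs, pointer_files) for the downloaded dataset."""
    root = Path(root)
    missing = [name for name in EXPECTED_DIRS if not (root / name).is_dir()]
    if not root.is_dir():
        return missing, []
    # Skip the clone's own .git directory, which is not part of the dataset.
    pointers = [
        path
        for path in root.rglob("*")
        if path.is_file() and ".git" not in path.parts and is_lfs_pointer(path)
    ]
    return missing, pointers


if __name__ == "__main__":
    missing, pointers = check_data("data")
    if missing:
        print(f"missing folders: {missing}")
    if pointers:
        print(f"{len(pointers)} files are still LFS pointers; run `git lfs pull` in ./data")
    if not missing and not pointers:
        print("dataset looks complete")
```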

Building the Knowledge Graph:

Start Neo4j

By default, the data is downloaded to the data/bioKG folder, and Neo4j is started with Docker:

docker run -d --name neo4j -p 7474:7474 -p 7687:7687 -v $PWD/data/bioKG:/var/lib/neo4j/import neo4j:4.2.3

Config

Modify the configuration file kg_config.yml in the config folder, then build the KG:

python -m tasks.utils.kg.graphdb_builder.builder
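The builder can only connect once Neo4j accepts connections, and a freshly started container may need a few seconds. A minimal sketch that polls a port until it becomes reachable; it only checks TCP reachability on the ports published by the docker run command (7474 for the HTTP browser, 7687 for Bolt), not credentials or database readiness:

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Poll until a TCP connection to host:port succeeds or `timeout` expires.

    Returns True once the port accepts connections, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a connection; success means a
            # process is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, wait_for_port("localhost", 7687, timeout=60) returns True once the Bolt port is reachable, after which the builder command above can be run.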

Running the Baselines:

Config

Modify the configuration file llm_config.yml in the config folder.

KGCheck:

Run the experiment, where --data_file is the path of the dataset file:

python -m tasks.KGCheck.team --data_file data/kgcheck/dev.json

Evaluate, where --history_file is the path of the log file and --golden_answer_file is the path of the golden answer file:

python -m tasks.KGCheck.evalutation.evaluate --history_file results/kgcheck/log_1718880808.620556.txt --golden_answer_file data/kgcheck/dev.json
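Judging from the example above, each KGCheck run writes a log whose name embeds a Unix timestamp. A minimal sketch for picking the newest log and building the evaluation command; the file-name pattern is inferred from that single example, and the module path is copied verbatim from the command above:

```python
import re
from pathlib import Path

# Assumed log-name pattern, inferred from "log_1718880808.620556.txt".
LOG_PATTERN = re.compile(r"^log_(\d+(?:\.\d+)?)\.txt$")


def latest_log(names):
    """Return the log file name with the largest embedded timestamp, or None."""
    best_name, best_ts = None, float("-inf")
    for name in names:
        match = LOG_PATTERN.match(name)
        if match and float(match.group(1)) > best_ts:
            best_name, best_ts = name, float(match.group(1))
    return best_name


def evaluate_command(results_dir="results/kgcheck", golden="data/kgcheck/dev.json"):
    """Build the evaluation command for the newest KGCheck log, or None if none exist."""
    log = latest_log(p.name for p in Path(results_dir).glob("log_*.txt"))
    if log is None:
        return None
    return [
        "python", "-m", "tasks.KGCheck.evalutation.evaluate",
        "--history_file", str(Path(results_dir) / log),
        "--golden_answer_file", golden,
    ]
```

The returned list can be passed to subprocess.run(cmd, check=True); keeping the command as a list avoids shell-quoting issues with file paths.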

KGQA:

In this section, we build on the evaluation framework of AgentBench. First, modify the configuration files under tasks/KGQA/configs:

- You can modify the data path in the tasks/KGQA/configs/tasks/kg.yaml file. The default path is data/kgqa/test.json.
- In the configuration files under tasks/KGQA/configs/agents, configure your agent. We provide template configuration files for OpenAI models, ChatGLM, and models deployed locally with vLLM. Fill in the corresponding API key for API-based LLMs, or the address of your locally deployed model for open-source (OSS) LLMs.
- Fill in the corresponding IP and port in tasks/KGQA/configs/assignments/definition.yaml, and modify the task configuration in tasks/KGQA/configs/assignments/default.yaml. By default, gpt-3.5-turbo-0613 will be evaluated.

Then start the task server. Make sure the ports you use are available.

python -m tasks.KGQA.start_task -a

If the terminal shows ".... 200 OK", you can open another terminal and start the assigner:

python -m tasks.KGQA.assigner
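To check that the ports you configured are available before starting the task server, a minimal sketch that tries to bind each one. The port numbers you pass in should be the ones from tasks/KGQA/configs/assignments/definition.yaml; none are assumed here:

```python
import socket


def port_is_free(port, host="0.0.0.0"):
    """Return True if `port` on `host` can currently be bound, i.e. nothing holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Typically EADDRINUSE: another process already owns the port.
            return False
        return True
```

The check is advisory: another process can still take a port between the check and the server start, so a "200 OK" from the task server remains the real confirmation.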


SCV:

Start the embedding API server:

python -m tasks.SCV.embedding.webapi

Start the SCV task:

python -m tasks.SCV.scv_lc -d data/scv/dev.jsonl

Analyze the results:

python -m tasks.SCV.analysis -r results/svc/dev_1718903779.497523_answer_Qwen1.5-72B-Chat.jsonl
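tasks.SCV.analysis already summarizes a run. For a quick independent cross-check of an answer file, a minimal sketch that computes accuracy and a confusion count; the field names label and prediction are hypothetical and must be matched to the keys in the actual JSONL records:

```python
import json
from collections import Counter


def score_answers(lines, gold_key="label", pred_key="prediction"):
    """Compute accuracy and (gold, predicted) confusion counts from JSONL lines.

    gold_key and pred_key are hypothetical field names; adjust them to the
    answer files actually written under results/.
    """
    confusion = Counter()
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)
        confusion[(record[gold_key], record[pred_key])] += 1
    total = sum(confusion.values())
    correct = sum(n for (gold, pred), n in confusion.items() if gold == pred)
    accuracy = correct / total if total else 0.0
    return accuracy, confusion
```

Because it accepts any iterable of lines, an open file works directly: with open(path, encoding="utf-8") as f: accuracy, confusion = score_answers(f).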

BioKGBench is an open-source project for agent evaluation created by researchers from AutoLab and CAIRI Lab at Westlake University. We encourage researchers interested in LLM agents and related fields to contribute to this project!

If you find our work helpful, please use the following citation.

    @misc{lin2024biokgbenchknowledgegraphchecking,
      title={BioKGBench: A Knowledge Graph Checking Benchmark of AI Agent for Biomedical Science},
      author={Xinna Lin and Siqi Ma and Junjie Shan and Xiaojing Zhang and Shell Xu Hu and Tiannan Guo and Stan Z. Li and Kaicheng Yu},
      year={2024},
      eprint={2407.00466},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2407.00466},
    }

To request new features, ask for help, or report bugs related to BioKGBench, please open a GitHub issue or pull request with the tag new features, help wanted, or enhancement. Feel free to contact us by email if you have any questions.

- Xinna Lin, Westlake University

- Siqi Ma, Westlake University

- Junjie Shan, Westlake University

- Xiaojing Zhang, Westlake University

- Support pip installation