This project implements GPU kernels in CUDA/Triton for Allreduce, PagedAttention, and Activation-aware Weight Quantization (AWQ).

This is an implementation of a one-pass allreduce, in which every rank reads from and writes to the other ranks. It is largely a stripped-down version of vllm-project/vllm#2192: I rewrote parts from scratch and copy-pasted a fair bit of the rest. It's also similar to pytorch/pytorch#114001, which is itself inspired by FasterTransformer. Writing the code taught me a lot about CUDA/MPI/etc.
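To make the "one-pass" idea concrete, here is a minimal CPU-only sketch in plain Python. It assumes that one-pass means each rank reads every peer's input buffer once and reduces locally; the function name `one_pass_allreduce` and the list-of-lists layout are hypothetical and are not taken from the kernel in this repo.

```python
def one_pass_allreduce(rank_buffers):
    """Simulate a one-pass sum-allreduce over in-memory rank buffers.

    rank_buffers[r] stands in for the input buffer owned by rank r.
    Assumption: every rank can read every peer's buffer directly.
    """
    world_size = len(rank_buffers)
    length = len(rank_buffers[0])
    assert all(len(buf) == length for buf in rank_buffers)

    results = []
    for rank in range(world_size):
        # Each rank does the full reduction itself: a single pass over
        # all peers' data, with no separate reduce-scatter/all-gather.
        out = [0.0] * length
        for peer in range(world_size):
            for i, value in enumerate(rank_buffers[peer]):
                out[i] += value
        results.append(out)
    return results


if __name__ == "__main__":
    buffers = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(one_pass_allreduce(buffers))
```

In this sketch every rank ends up with the same summed vector. The trade-off is that each rank reads `world_size` full buffers, so the approach tends to suit small messages where latency matters more than bandwidth.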

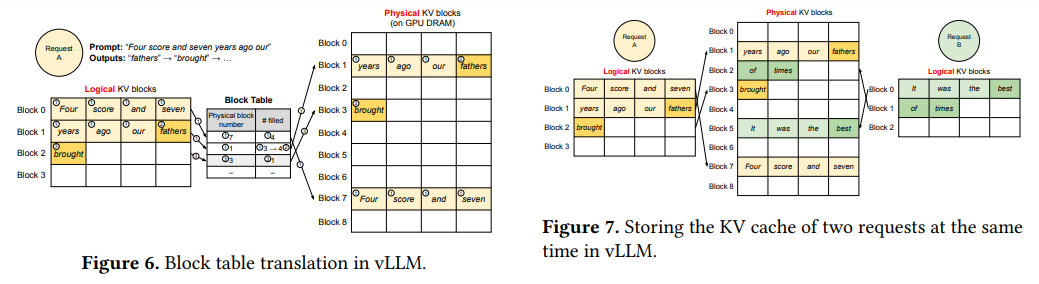

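PagedAttention stores the key and value (KV) vectors of earlier tokens in a cache instead of recomputing them at every decoding step. The cache is split into fixed-size blocks that do not have to be contiguous in memory.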
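A rough sketch of how a paged KV cache can be organized, in plain Python. It assumes fixed-size blocks and a per-sequence block table that maps logical token positions to physical blocks; the class `PagedKVCache` and its methods are hypothetical names for illustration, not this project's API.

```python
class PagedKVCache:
    """Toy paged KV cache: fixed-size blocks plus a per-sequence block table."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        # Physical storage: num_blocks blocks, each holding block_size slots.
        self.blocks = [[None] * block_size for _ in range(num_blocks)]
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.seq_lens = {}      # seq_id -> number of cached tokens

    def append(self, seq_id, kv):
        """Cache the KV entry for the next token of a sequence."""
        table = self.block_tables.setdefault(seq_id, [])
        pos = self.seq_lens.get(seq_id, 0)
        if pos % self.block_size == 0:
            # The current block is full (or this is the first token):
            # grab any free block, which need not be adjacent to the last.
            if not self.free_blocks:
                raise MemoryError("KV cache is out of free blocks")
            table.append(self.free_blocks.pop(0))
        block_id = table[pos // self.block_size]
        self.blocks[block_id][pos % self.block_size] = kv
        self.seq_lens[seq_id] = pos + 1

    def gather(self, seq_id):
        """Return the cached KV entries of a sequence in token order."""
        table = self.block_tables[seq_id]
        return [
            self.blocks[table[p // self.block_size]][p % self.block_size]
            for p in range(self.seq_lens[seq_id])
        ]


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=4, block_size=2)
    for t in range(3):
        cache.append("a", ("a", t))
        cache.append("b", ("b", t))
    print(cache.block_tables)
    print(cache.gather("a"))
```

An attention kernel over such a cache has to follow the block table for every token it reads, which is the indirection a PagedAttention kernel needs to handle efficiently.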

The PagedAttention kernel is not faster than the existing CUDA kernel because Triton has limitations that prevent it from doing the necessary tensor operations. See

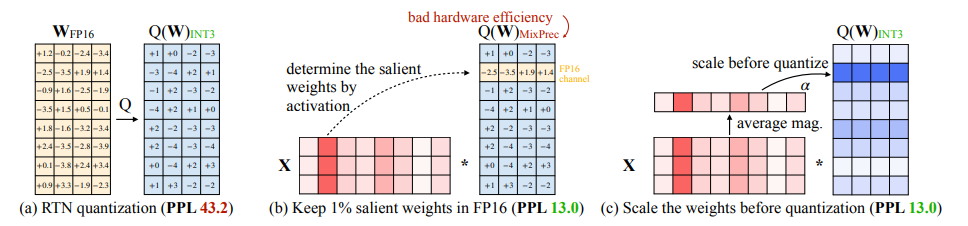

AWQ is a quantization method. This kernel implements fast inference using the quantized weights.

Roughly, the AWQ kernel dequantizes a weight matrix with the formula `scale * (weight - zero_point)` and then performs a standard FP16 matmul.
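The dequantize-then-multiply step can be sketched in plain Python. This is only an illustration under assumptions: 4-bit weights packed eight per 32-bit word with the lowest nibble first, and one scale and zero point per group of `group_size` weights. The real AWQ packing order may differ, and the helper names (`pack_int4`, `unpack_int4`, `dequantize_row`, `dot`) are hypothetical.

```python
def pack_int4(values):
    """Pack eight unsigned 4-bit values into one 32-bit word, lowest nibble first."""
    assert len(values) == 8
    word = 0
    for i, v in enumerate(values):
        word |= (v & 0xF) << (4 * i)
    return word


def unpack_int4(word):
    """Inverse of pack_int4: extract the eight 4-bit values from a word."""
    return [(word >> (4 * i)) & 0xF for i in range(8)]


def dequantize_row(packed_words, scales, zeros, group_size):
    """Apply scale * (weight - zero_point) with one scale/zero per group."""
    weights = []
    for word in packed_words:
        weights.extend(unpack_int4(word))
    return [
        scales[i // group_size] * (w - zeros[i // group_size])
        for i, w in enumerate(weights)
    ]


def dot(x, w):
    """Plain dot product, standing in for the FP16 matmul that follows."""
    return sum(a * b for a, b in zip(x, w))


if __name__ == "__main__":
    word = pack_int4([0, 1, 2, 3, 4, 5, 6, 7])
    row = dequantize_row([word], scales=[0.5], zeros=[4], group_size=8)
    print(row)
    print(dot([1.0] * 8, row))
```

A fused kernel would do the unpacking and dequantization on the fly for each tile of the weight matrix, so the full-precision weights never need to be written back to memory.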

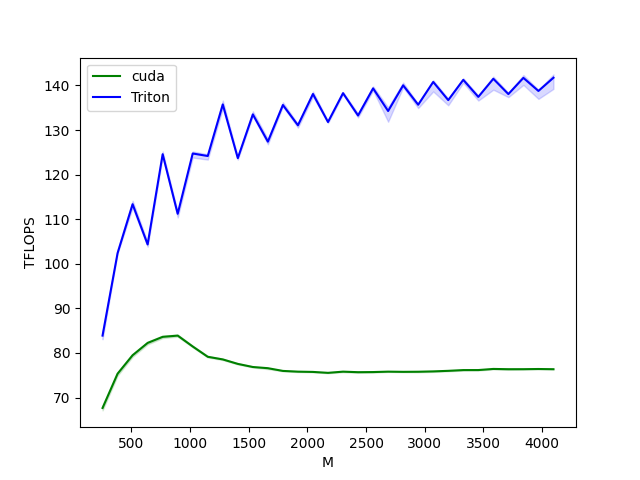

The AWQ kernel is much faster than the existing CUDA implementation and also simpler: ~50 lines of Triton versus ~300 lines of C plus inline assembly.

Here's a performance comparison:

Credit to

- The Triton matmul tutorial

- GPTQ-Triton for discovering a few clever tricks I used in this kernel and making me realize that using Triton for quantization inference was possible