RangeViT: Towards Vision Transformers for 3D Semantic Segmentation in Autonomous Driving [arXiv]

Angelika Ando, Spyros Gidaris, Andrei Bursuc, Gilles Puy, Alexandre Boulch and Renaud Marlet

CVPR 2023

If you use our RangeViT code in your research, please consider citing:

    @inproceedings{RangeViT,
      title={RangeViT: Towards Vision Transformers for 3D Semantic Segmentation in Autonomous Driving},
      author={Ando, Angelika and Gidaris, Spyros and Bursuc, Andrei and Puy, Gilles and Boulch, Alexandre and Marlet, Renaud},
      booktitle={CVPR},
      year={2023}
    }

Results of RangeViT on the nuScenes validation set and on the SemanticKITTI test set with different weight initializations.

In particular, we initialize RangeViT's backbone with ViTs (a) pre-trained on supervised ImageNet21k classification and fine-tuned on supervised image segmentation on Cityscapes with Segmenter (entry Cityscapes), (b) pre-trained on supervised ImageNet21k classification (entry IN21k), (c) pre-trained with the DINO self-supervised approach on ImageNet1k (entry DINO), and (d) trained from scratch (entry Random). The Cityscapes pre-trained ViT encoder weights can be downloaded from here.

| Train data | Test data | Pre-trained weights | mIoU (%) | Download | Config |
|---|---|---|---|---|---|
| nuScenes train set | nuScenes val set | Cityscapes | 75.2 | RangeViT model | config |
| nuScenes train set | nuScenes val set | IN21k | 74.8 | RangeViT model | config |
| nuScenes train set | nuScenes val set | DINO | 73.3 | RangeViT model | config |
| nuScenes train set | nuScenes val set | Random | 72.4 | RangeViT model | config |
| SemanticKITTI train+val set | SemanticKITTI test set | Cityscapes | 64.0 | RangeViT model | config |
| SemanticKITTI train set | SemanticKITTI val set | Cityscapes | 60.8 | RangeViT model | config |

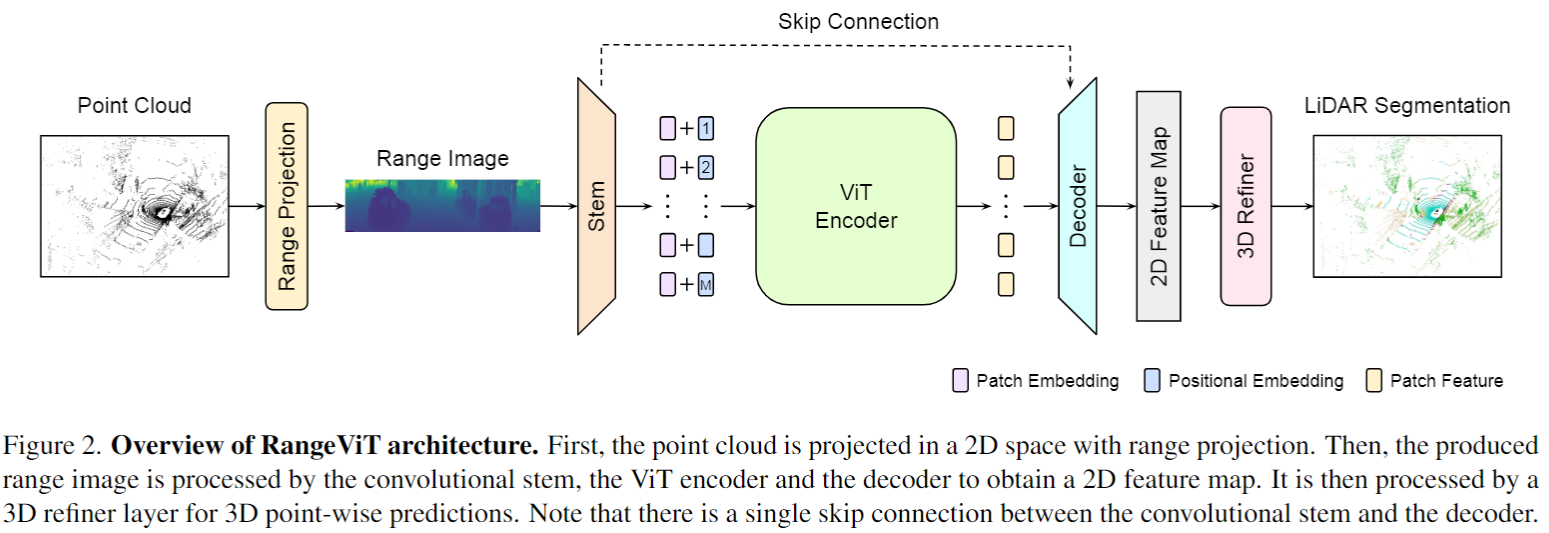

Note that the positional embeddings are initialized with the corresponding pre-trained weights or randomly when training from scratch. The convolutional stem, the decoder and the 3D refiner layer are always randomly initialized.
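As a rough illustration of the initialization scheme in the note above, the sketch below labels each parameter as either pre-trained or randomly initialized. The parameter-name prefixes (`encoder.`, `stem.`, `decoder.`, `refiner.`) and the helper `init_sources` are hypothetical and chosen only for this example; the module names in the RangeViT code may differ.

```python
# Hypothetical parameter-name prefixes, chosen only for illustration;
# the real module names in the RangeViT code may differ.
PRETRAINED_PREFIXES = ("encoder.blocks.", "encoder.pos_embed")


def init_sources(param_names, from_scratch=False):
    """Map each parameter name to 'pretrained' or 'random'."""
    sources = {}
    for name in param_names:
        # Only the ViT encoder blocks and the positional embeddings may take
        # image-pretrained weights; the stem, the decoder and the 3D refiner
        # are always random, and so is everything when training from scratch.
        use_pretrained = (not from_scratch) and name.startswith(PRETRAINED_PREFIXES)
        sources[name] = "pretrained" if use_pretrained else "random"
    return sources


names = [
    "stem.conv1.weight",
    "encoder.pos_embed",
    "encoder.blocks.0.attn.qkv.weight",
    "decoder.head.weight",
    "refiner.weight",
]
for name, source in init_sources(names).items():
    print(f"{name}: {source}")
```

Calling `init_sources(names, from_scratch=True)` marks every parameter as random, which corresponds to the Random entry of the table.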

Please install PyTorch and then install the nuScenes devkit with

    pip install nuscenes-devkit

Finally, install the requirements with

    pip install -r requirements.txt

To train on nuScenes or on SemanticKITTI, use (and modify if needed) the config file config_nusc.yaml or config_kitti.yaml, respectively. For instance, to train on nuScenes, run the following command:

    python -m torch.distributed.launch --nproc_per_node=4 --master_port=63545 \
        --use_env main.py 'config_nusc.yaml' \
        --data_root '<path_to_nuscenes_dataset>' \
        --save_path '<path_to_log>' \
        --pretrained_model '<path_to_image_pretrained_model.pth>'

The --pretrained_model argument specifies the image-pretrained ViT encoder used to initialize the ViT encoder of RangeViT. For instance, to use the ImageNet21k-pretrained ViT-S encoder, set --pretrained_model "timmImageNet21k". For the other initialization cases, you will need to download the pretrained weights; see the Results section above for where to download them. Note that the peak learning rate of RangeViT is 0.0008 for all ViT-encoder initialization cases except the DINO initialization, for which it is 0.0002.
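The learning-rate rule above can be written as a small lookup, which may help avoid training with the wrong value when switching between initializations. This is only a sketch: the helper `peak_learning_rate` is hypothetical, the entry names are taken from the results table, and how the value is passed to training (for example, which key of the config file holds it) is not specified here.

```python
# Peak learning rates as stated in the text; entry names follow the results table.
PEAK_LR = {
    "Cityscapes": 0.0008,
    "IN21k": 0.0008,
    "DINO": 0.0002,  # the only initialization with a lower peak learning rate
    "Random": 0.0008,
}


def peak_learning_rate(init_entry):
    """Return the peak learning rate for a given weight-initialization entry."""
    if init_entry not in PEAK_LR:
        raise ValueError(f"unknown initialization entry: {init_entry!r}")
    return PEAK_LR[init_entry]


for entry in PEAK_LR:
    print(f"{entry}: peak lr = {peak_learning_rate(entry)}")
```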

Similarly, to train on SemanticKITTI, run the following command:

    python -m torch.distributed.launch --nproc_per_node=4 --master_port=63545 \
        --use_env main.py 'config_kitti.yaml' \
        --data_root '<path_to_semantic_kitti_dataset>/dataset/sequences/' \
        --save_path '<path_to_log>' \
        --pretrained_model '<path_to_image_pretrained_model.pth>'

The same config files can be used for evaluating the pre-trained RangeViT models. For instance, to evaluate on the nuScenes validation set, run the following command:

    python -m torch.distributed.launch --nproc_per_node=1 --master_port=63545 \
        --use_env main.py 'config_nusc.yaml' \
        --data_root '<path_to_nuscenes_dataset>' \
        --save_path '<path_to_log>' \
        --checkpoint '<path_to_pretrained_rangevit_model.pth>' \
        --val_only

To evaluate on the SemanticKITTI validation set, run the following command (add the --test_split and --save_eval_results arguments to evaluate on the test split and save the prediction results):

    python -m torch.distributed.launch --nproc_per_node=1 --master_port=63545 \
        --use_env main.py 'config_kitti.yaml' \
        --data_root '<path_to_semantic_kitti_dataset>' \
        --save_path '<path_to_log>' \
        --checkpoint '<path_to_pretrained_rangevit_model.pth>' \
        --val_only
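When switching between validation and test-split evaluation, it can be convenient to assemble the command programmatically. The sketch below builds the same argument list as the command above and optionally appends `--test_split` and `--save_eval_results`. The helper `build_eval_command` is hypothetical, and it assumes both extra arguments are plain switches that take no value and are added alongside `--val_only`, as the description above suggests.

```python
import shlex


def build_eval_command(config, data_root, save_path, checkpoint,
                       test_split=False, save_eval_results=False,
                       master_port=63545):
    """Return the evaluation command as a list of arguments."""
    cmd = [
        "python", "-m", "torch.distributed.launch",
        "--nproc_per_node=1", f"--master_port={master_port}",
        "--use_env", "main.py", config,
        "--data_root", data_root,
        "--save_path", save_path,
        "--checkpoint", checkpoint,
        "--val_only",
    ]
    # Assumption: both options are value-less switches.
    if test_split:
        cmd.append("--test_split")
    if save_eval_results:
        cmd.append("--save_eval_results")
    return cmd


cmd = build_eval_command(
    "config_kitti.yaml",
    "<path_to_semantic_kitti_dataset>",
    "<path_to_log>",
    "<path_to_pretrained_rangevit_model.pth>",
    test_split=True,
    save_eval_results=True,
)
# shlex.join quotes the placeholder paths so the line can be pasted into a shell.
print(shlex.join(cmd))
```

The returned list can also be passed directly to `subprocess.run(cmd, check=True)` once the placeholder paths are replaced with real ones.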