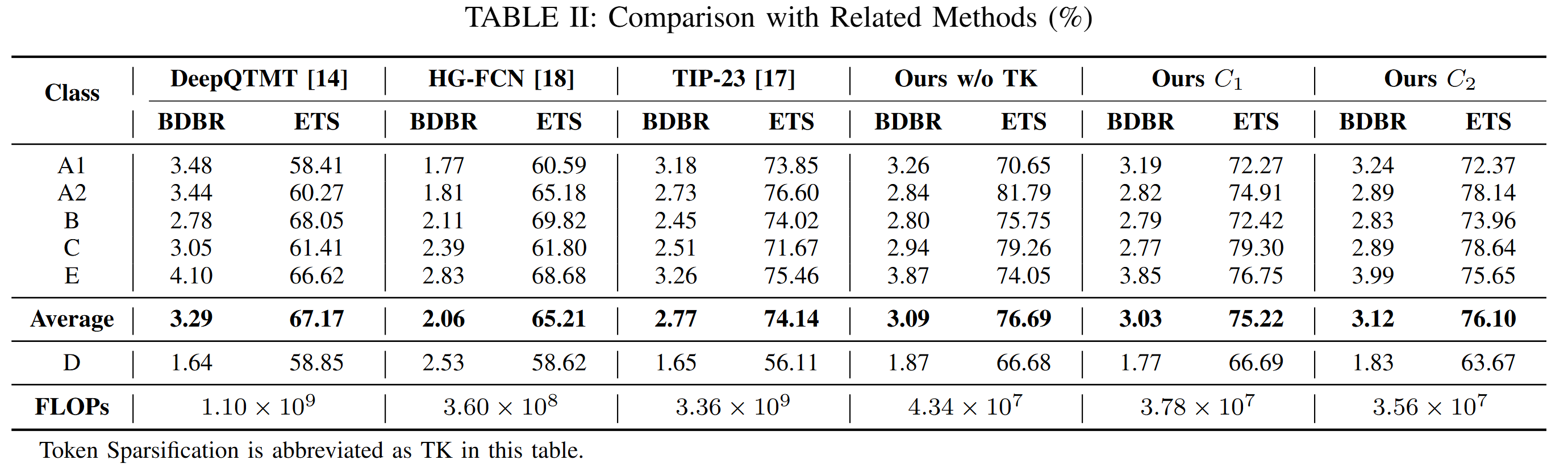

As one of the key aspects of Versatile Video Coding (VVC), the quad-tree with nested multi-type tree (QTMT) partition structure improves rate-distortion (RD) performance, but at the cost of considerable encoding complexity. To reduce the complexity of QTMT partitioning in VVC intra-frame coding, researchers proposed a partition map-based fast block partitioning algorithm, which achieves substantial encoding time savings with high coding efficiency. However, it suffers from high inference overhead due to its over-parameterized neural network. To deploy this algorithm efficiently, we first propose a lightweight neural network based on the hierarchical vision transformer that predicts the partition map effectively with restricted computational complexity, thereby reducing the inference complexity uniformly. Next, we introduce token sparsification to select the most informative tokens using a predefined pruning ratio, achieving content-adaptive computation reduction and parallel-friendly inference. Experimental results demonstrate that the proposed method reduces FLOPs by 98.94% with a negligible BDBR increase compared to the original method.
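The token sparsification idea can be illustrated with a minimal PyTorch sketch. It assumes every token already carries a scalar informativeness score; how the proposed network produces those scores is not described here, so the `scores` input and the `sparsify_tokens` function are hypothetical. Because the pruning ratio is fixed in advance, every sample keeps the same number of tokens and the batch stays a dense tensor (parallel-friendly), while the choice of *which* tokens survive depends on content.

```python
import torch


def sparsify_tokens(tokens: torch.Tensor, scores: torch.Tensor, prune_ratio: float):
    """Keep the most informative tokens of every sample in a batch.

    tokens:      (B, N, C) token embeddings
    scores:      (B, N) informativeness score per token (hypothetical input)
    prune_ratio: fraction of tokens to drop, fixed before inference
    Returns the kept tokens (B, k, C) and their original indices (B, k).
    """
    B, N, C = tokens.shape
    # Same k for every sample: the output stays a dense, batchable tensor.
    k = max(1, N - int(round(N * prune_ratio)))
    # Content-adaptive choice: which k tokens survive depends on the scores.
    top_idx = scores.topk(k, dim=1).indices
    # Restore the original (raster) order of the surviving tokens.
    top_idx, _ = top_idx.sort(dim=1)
    # Gather along the token dimension, broadcasting indices over channels.
    kept = tokens.gather(1, top_idx.unsqueeze(-1).expand(-1, -1, C))
    return kept, top_idx


if __name__ == "__main__":
    torch.manual_seed(0)
    x = torch.randn(2, 16, 8)  # 2 samples, 16 tokens (a 4x4 grid), 8 channels
    s = torch.rand(2, 16)      # stand-in scores; a real model would predict them
    kept, idx = sparsify_tokens(x, s, prune_ratio=0.75)
    print(kept.shape)  # torch.Size([2, 4, 8])
```

The sketch only covers selection; what happens to the dropped tokens afterwards (discarding them or restoring them for the final partition-map prediction) is not modeled.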

- 2024.08.03: Our paper was accepted by MMSP 2024 as an oral paper.

- PyTorch >= 1.13.1

- CUDA >= 11.3

- Other required packages are listed in `pip_opt.sh`
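A quick way to check the two version requirements is a helper that compares versions numerically; a plain string comparison would wrongly rank `1.9` above `1.13`. This is a hypothetical convenience script (`version_tuple` and `meets_minimum` are illustrative names), not part of the repository; it only relies on `torch.__version__` and `torch.version.cuda`.

```python
import re


def version_tuple(version: str) -> tuple:
    """Turn a version string such as '1.13.1+cu117' or '11.3' into (1, 13, 1)."""
    # Keep only the leading dotted numeric part; drop local tags like '+cu117'.
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed: str, required: str) -> bool:
    """Compare versions numerically, padding the shorter one with zeros."""
    a, b = version_tuple(installed), version_tuple(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        print("PyTorch >= 1.13.1:", meets_minimum(torch.__version__, "1.13.1"))
        # torch.version.cuda is None for CPU-only builds.
        cuda = torch.version.cuda
        print("CUDA >= 11.3:", cuda is not None and meets_minimum(cuda, "11.3"))
```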

Put the JVET CTC test video sequences in the `[input_dir]` folder, then run inference:

```bash
python Inference_QBD.py --C_ratio 0.125 --jobID DySA_C0.125_QP --inputDir [input_dir] --outDir [output_dir] --batchSize 200 --startSqeID 0 --SeqNum 22 --checkpoints_dir [model_zoo_dir]
```
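The command above processes 22 sequences starting from sequence 0; 22 is the number of JVET CTC SDR sequences in classes A1 to E. To spread inference over several parallel jobs (for example one per GPU), a small launcher can split that range. This sketch assumes `--startSqeID` is the index of the first sequence a job handles and `--SeqNum` is how many consecutive sequences it processes; that reading is inferred from the flag names, not documented here. `inference_commands` is a hypothetical helper, and whether parallel jobs can safely share one `--jobID` is not stated.

```python
import shlex


def inference_commands(num_jobs, total_seqs=22, c_ratio=0.125,
                       input_dir="[input_dir]", out_dir="[output_dir]",
                       ckpt_dir="[model_zoo_dir]", batch_size=200):
    """Split sequences [0, total_seqs) into contiguous chunks, one command each.

    Assumes --startSqeID is the first sequence index handled by a job and
    --SeqNum is the number of consecutive sequences that job processes.
    """
    base, extra = divmod(total_seqs, num_jobs)
    cmds, start = [], 0
    for job in range(num_jobs):
        count = base + (1 if job < extra else 0)  # spread the remainder
        if count == 0:
            continue
        cmds.append([
            "python", "Inference_QBD.py",
            "--C_ratio", str(c_ratio),
            "--jobID", f"DySA_C{c_ratio}_QP",
            "--inputDir", input_dir, "--outDir", out_dir,
            "--batchSize", str(batch_size),
            "--startSqeID", str(start), "--SeqNum", str(count),
            "--checkpoints_dir", ckpt_dir,
        ])
        start += count
    return cmds


if __name__ == "__main__":
    for cmd in inference_commands(num_jobs=4):
        print(shlex.join(cmd))
        # To launch for real (e.g. one job per GPU): subprocess.Popen(cmd)
```

Returning argument lists instead of shell strings avoids quoting problems when the commands are later passed to `subprocess`.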

The output directory for the predicted partition maps (`[output_dir]`) and the checkpoint directory (`[model_zoo_dir]`) can be changed as needed.

In the experiments, we use video sequences from CDVL at multiple resolutions {3840x2160, 1920x1080, 1280x720} and a subset of the DIV2K image dataset at resolution 1920x1280 to construct the training and validation datasets.
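When building training samples from frames of these sizes, it helps to know how many coding tree units (CTUs) each frame yields. The sketch below assumes frames are tiled into 128x128 CTUs, the CTU size used in the VTM common test conditions; whether the training pipeline here keeps the partial CTUs at the right and bottom borders is not stated, so the helper reports them separately. `ctu_grid` is a hypothetical name.

```python
import math

CTU_SIZE = 128  # VTM common-test-condition CTU size (assumed for the datasets)


def ctu_grid(width: int, height: int, ctu: int = CTU_SIZE):
    """Return (columns, rows, full_ctus, border_ctus) for one frame.

    Border CTUs are the partial ones along the right/bottom edges when the
    frame size is not a multiple of the CTU size.
    """
    cols = math.ceil(width / ctu)
    rows = math.ceil(height / ctu)
    full = (width // ctu) * (height // ctu)
    return cols, rows, full, cols * rows - full


if __name__ == "__main__":
    for w, h in [(3840, 2160), (1280, 720), (1920, 1280)]:
        print(f"{w}x{h}:", ctu_grid(w, h))
```

For example, a 3840x2160 frame gives a 30x17 grid: 480 full CTUs plus 30 partial CTUs along the bottom edge, because 2160 is not a multiple of 128.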

Example training command:

```bash
python Train_QBD_Dy.py --aux_loss --C_ratio 0.0625 --jobID DyLight_SA_C0.0625 --isLuma --post_test --classification --inputDir /data/fengxm/VVC_Intra/ --outDir /model/fengxm/AVS3/pmp_intra/sa --model_type DyLight_SA --lr 5e-4 --dr 20 --epoch 60 --qp 22 --batchSize 1200 --train_num_workers 8 --predID 2
```

Other arguments and hyperparameters can also be changed from the command line.

For example, the following command trains a model from scratch:

```bash
python Train_QBD_Dy.py \
    --isLuma \
    --post_test \
    --classification \
    --aux_loss \
    --C_ratio 0.0625 \
    --jobID DyLight_SA_C0.0625 \
    --inputDir /data/fengxm/VVC_Intra/ \
    --outDir /model/fengxm/AVS3/pmp_intra/sa \
    --model_type DyLight_SA \
    --lr 5e-4 \
    --dr 20 \
    --epoch 60 \
    --qp 22 \
    --batchSize 1200 \
    --train_num_workers 8 \
    --predID 2
```

If you find our work useful for your research, please consider citing this paper and our previous paper:

```bibtex
@article{feng2023partition,
  title={Partition map prediction for fast block partitioning in VVC intra-frame coding},
  author={Feng, Aolin and Liu, Kang and Liu, Dong and Li, Li and Wu, Feng},
  journal={IEEE Transactions on Image Processing},
  year={2023},
  publisher={IEEE},
  volume={32},
  pages={2237-2251},
  doi={10.1109/TIP.2023.3266165}
}
```

If you have any questions, please feel free to reach out at [email protected].
