by Kaisiyuan Wang, Qianyi Wu, Linsen Song, Zhuoqian Yang, Wayne Wu, Chen Qian, Ran He, Yu Qiao, Chen Change Loy.

This repository is for our ECCV 2020 paper, MEAD: A Large-scale Audio-visual Dataset for Emotional Talking-face Generation.

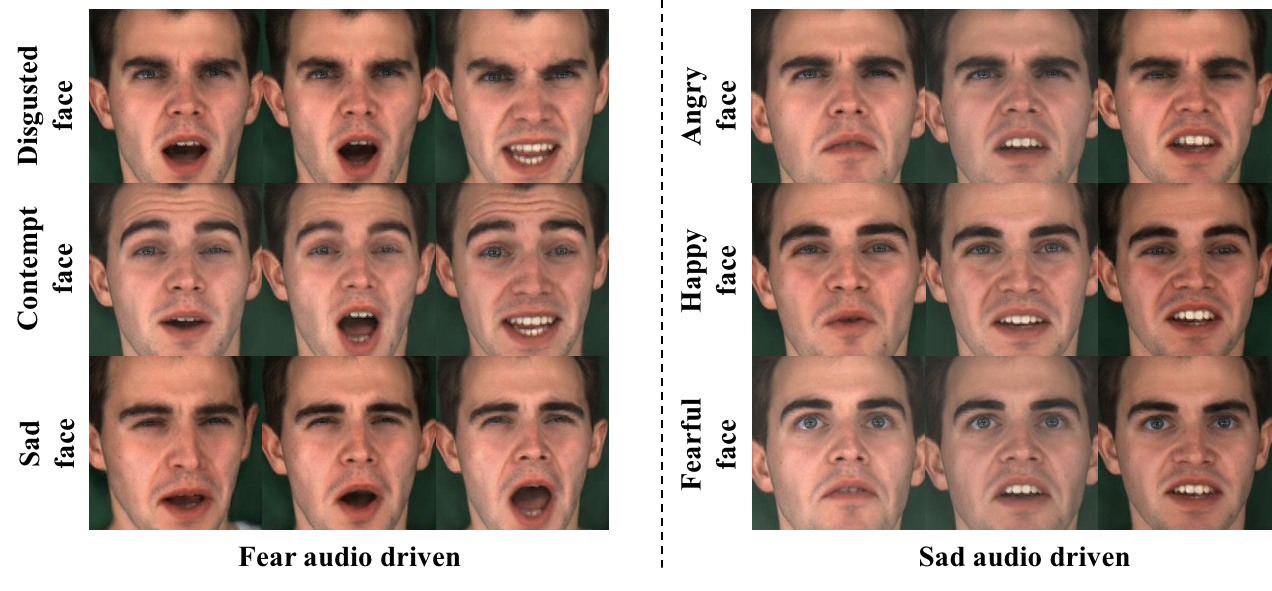

To address the challenge of realistic and natural emotional talking-face generation, we built the Multi-view Emotional Audio-visual Dataset (MEAD), a talking-face video corpus featuring 60 actors and actresses talking with 8 different emotions at 3 different intensity levels. High-quality audio-visual clips are captured from 7 different view angles in a strictly controlled environment. Together with the dataset, we also release an emotional talking-face generation baseline that enables the manipulation of both emotion and its intensity. For more specific information about the dataset, please refer to here.

This repository is based on PyTorch, so please follow the official installation instructions here. The code is tested with PyTorch 1.0 and Python 3.6 on Ubuntu 16.04.

Please refer to Section 6, "Speech Corpus of MEAD", in the supplementary material. The speech corpus is divided into 3 parts: common, generic, and emotion-related. For each intensity level, we use the last 10 sentences of the neutral category and the last 6 sentences of each of the other seven emotion categories as the testing set. Note that all sentences in the testing set come from the "emotion-related" part. If you are trying to manipulate the emotion category, you can use all 40 sentences of the neutral category as input samples.
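The split rule described above can be sketched in a few lines of Python. This is only an illustration, not the repository's own data loader: the function name `split_sentences`, the zero-padded sentence IDs, and the assumption that the IDs are already sorted in corpus order are all hypothetical.

```python
from typing import List, Tuple

# Emotion names as listed in this README.
EMOTIONS = ["angry", "disgust", "contempt", "fear",
            "happy", "sad", "surprised", "neutral"]


def split_sentences(sentence_ids: List[str], emotion: str) -> Tuple[List[str], List[str]]:
    """Return (train, test) sentence IDs for one emotion at one intensity level.

    Assumes `sentence_ids` is already in corpus order, so the held-out
    sentences are simply the last ones in the list.
    """
    if emotion not in EMOTIONS:
        raise ValueError(f"unknown emotion: {emotion}")
    # Last 10 sentences for neutral, last 6 for every other emotion.
    n_test = 10 if emotion == "neutral" else 6
    if len(sentence_ids) < n_test:
        raise ValueError("not enough sentences to build a testing set")
    return sentence_ids[:-n_test], sentence_ids[-n_test:]


# Hypothetical IDs for the 40 neutral sentences mentioned above.
neutral_ids = [f"{i:03d}" for i in range(1, 41)]
train, test = split_sentences(neutral_ids, "neutral")
print(len(train), len(test))  # 30 10
```

The actual file naming inside each actor folder may differ, so the IDs should be adapted to whatever the downloaded data uses.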

- Download the dataset from here. We package the audio-visual data of each actor in a single folder named "MXXX" or "WXXX", where "M" and "W" indicate actor and actress, respectively.

- Because MEAD requires different modules to achieve different functions, we separate the training into three stages. In each stage, the corresponding configuration (.yaml file) should be set up accordingly and used as shown below:

cd Audio2Landmark

python train.py --config config.yaml

cd Neutral2Emotion

python train.py --config config.yaml

cd Refinement

python train.py --config config.yaml
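If you prefer to launch the three stages from a single script, the commands above could be wrapped roughly as follows. This is a convenience sketch, not part of the repository: `build_commands` and `run_all` are hypothetical helpers, and running the stages back to back in this order is an assumption based only on the order in which they are listed here.

```python
import subprocess
import sys
from pathlib import Path
from typing import List, Tuple

# Stage directories in the order they appear in this README.
STAGES = ["Audio2Landmark", "Neutral2Emotion", "Refinement"]


def build_commands(repo_root: str, config: str = "config.yaml") -> List[Tuple[Path, List[str]]]:
    """Pair each stage directory with its training command."""
    root = Path(repo_root)
    return [
        (root / stage, [sys.executable, "train.py", "--config", config])
        for stage in STAGES
    ]


def run_all(repo_root: str, dry_run: bool = True) -> None:
    """Print each stage command and, unless dry_run is set, execute it."""
    for workdir, cmd in build_commands(repo_root):
        print(f"[{workdir.name}] {' '.join(cmd)}")
        if not dry_run:
            # cwd= replaces the manual `cd`; check=True stops at the first failing stage.
            subprocess.run(cmd, cwd=workdir, check=True)


# Dry run: only prints the commands, nothing is trained.
run_all(".", dry_run=True)
```

Call `run_all(".", dry_run=False)` from the repository root to actually start training once each stage's configuration file has been set up.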

- First, download the pretrained models and put them in the models folder.

- Second, download the demo audio data.

- Run the following command to generate a talking sequence with a specific emotion:

cd Refinement

python demo.py --config config_demo.yaml

You can try different emotions by replacing the emotion number with any other integer from 0 to 7:

- 0: angry
- 1: disgust
- 2: contempt
- 3: fear
- 4: happy
- 5: sad
- 6: surprised
- 7: neutral

In addition, you can try compound emotions by setting two different emotions at the same time.
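To avoid mixing up the indices, the emotion table above can be kept as a small lookup in Python. This is a sketch for convenience only: `emotion_index` and `compound_indices` are hypothetical helpers, and how the demo configuration actually expects one or two emotion values is not specified here.

```python
from typing import Tuple

# Index-to-emotion table, copied from the list in this README.
EMOTION_BY_INDEX = {
    0: "angry", 1: "disgust", 2: "contempt", 3: "fear",
    4: "happy", 5: "sad", 6: "surprised", 7: "neutral",
}
INDEX_BY_EMOTION = {name: idx for idx, name in EMOTION_BY_INDEX.items()}


def emotion_index(name: str) -> int:
    """Look up the integer index for an emotion name (case-insensitive)."""
    key = name.strip().lower()
    if key not in INDEX_BY_EMOTION:
        raise ValueError(f"unknown emotion: {name!r}")
    return INDEX_BY_EMOTION[key]


def compound_indices(first: str, second: str) -> Tuple[int, int]:
    """Return the index pair for a compound of two different emotions."""
    a, b = emotion_index(first), emotion_index(second)
    if a == b:
        raise ValueError("a compound emotion needs two different emotions")
    return a, b


print(emotion_index("happy"))                   # 4
print(compound_indices("happy", "surprised"))   # (4, 6)
```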

- The results are stored in the outputs folder.

If you find this code useful for your research, please cite our paper:

@inproceedings{kaisiyuan2020mead,

author = {Wang, Kaisiyuan and Wu, Qianyi and Song, Linsen and Yang, Zhuoqian and Wu, Wayne and Qian, Chen and He, Ran and Qiao, Yu and Loy, Chen Change},

title = {MEAD: A Large-scale Audio-visual Dataset for Emotional Talking-face Generation},

booktitle = {ECCV},

month = {August},

year = {2020}

}