This repository contains resources and research artifacts for the paper "Environment Texture Optimization for Augmented Reality" that will appear in Proceedings of ACM IMWUT 2024. You can find the code required to implement MoMAR here.

To create the semi-synthetic VI-SLAM datasets that we used to study the effect of environment texture on AR pose tracking performance, we used our previously published game engine-based emulator, Virtual-Inertial SLAM. For more information on this tool, implementation code and instructions, and examples of the types of projects it can support, please visit the Virtual-Inertial SLAM GitHub repository.

Our MoMAR system provides situated visualizations of AR user motion patterns to inform environment designers where texture patches (e.g., posters or mats) should be placed in an environment. The premise of MoMAR is that distinct texture is required to support accurate pose tracking in regions users view while performing certain challenging motions with their AR device, but that fine, low-contrast textures (e.g., a carpet) are sufficient where users are slowly inspecting virtual content. Our code supports two visualization modes, illustrated in the image below: 1) highlighting environment regions AR users face when they are focused on virtual content (left), and 2) highlighting environment regions AR users face when they are performing challenging device motions (right).

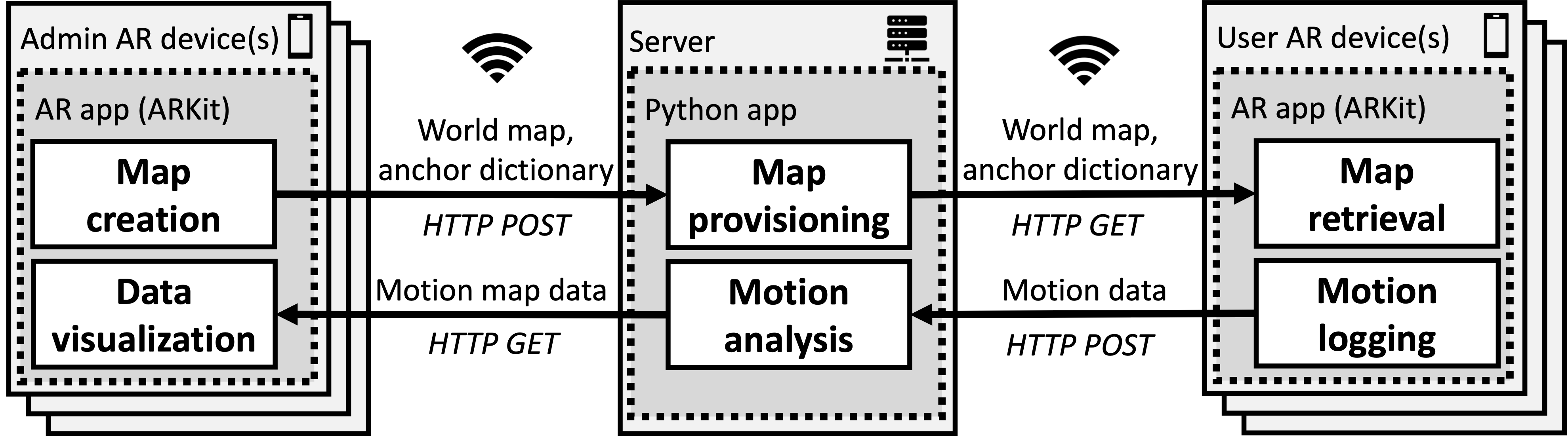
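As a rough illustration of the two modes, the Python sketch below labels individual motion samples by device speed: slow samples as 'inspection' (the user is likely focused on virtual content) and fast rotations or translations as 'challenging'. This is a simplified proxy written for this README, not MoMAR's classification method; the MotionSample fields and all threshold values are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only (not taken from MoMAR).
MAX_INSPECT_ANGULAR_SPEED = 0.5      # rad/s
MAX_INSPECT_LINEAR_SPEED = 0.2       # m/s
MIN_CHALLENGING_ANGULAR_SPEED = 1.5  # rad/s
MIN_CHALLENGING_LINEAR_SPEED = 1.0   # m/s


@dataclass
class MotionSample:
    """One hypothetical logged sample of AR device motion."""
    timestamp: float      # seconds
    angular_speed: float  # rad/s, magnitude of device angular velocity
    linear_speed: float   # m/s, magnitude of device linear velocity


def classify(sample: MotionSample) -> str:
    """Label a sample 'challenging', 'inspection', or 'other' by speed alone."""
    if (sample.angular_speed >= MIN_CHALLENGING_ANGULAR_SPEED
            or sample.linear_speed >= MIN_CHALLENGING_LINEAR_SPEED):
        return "challenging"
    if (sample.angular_speed <= MAX_INSPECT_ANGULAR_SPEED
            and sample.linear_speed <= MAX_INSPECT_LINEAR_SPEED):
        return "inspection"
    return "other"  # in between: counted in neither visualization mode


if __name__ == "__main__":
    samples = [
        MotionSample(0.0, 0.1, 0.05),
        MotionSample(0.1, 2.0, 0.3),
        MotionSample(0.2, 0.8, 0.4),
    ]
    print([classify(s) for s in samples])  # ['inspection', 'challenging', 'other']
```

In practice, the environment region each sample is associated with (what the device is facing) would then be aggregated per label to drive the left and right visualizations.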

The system architecture for MoMAR is shown below. To create a persistent AR experience, an administrator uses the map creation module on an admin AR device to generate a world map and place one or more spatial anchors within that space. These data are then transferred to and stored on the server. When a user starts a new session, the map retrieval module on the user AR device requests the map and anchor data from the map provisioning module on the server. These data are then used to localize the new session within the saved world map. Upon successful localization, the motion logging module on the user AR device is activated, which periodically sends device motion data to the server while the session is active. The motion analysis module on the server can be run on demand or periodically to analyze all user motion data, or those from a specified time range, to produce the motion map data. Finally, the data visualization module on the admin AR device is used to request motion map data from the server and display a motion map using situated visualizations.
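The data flow above can be mimicked in memory to make the module boundaries explicit. The sketch below is not the MoMAR server code: MockMoMARServer and its method names are hypothetical, the world map is treated as an opaque byte string, and each motion sample is assumed to carry a precomputed 'region' label.

```python
import time
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MockMoMARServer:
    """Hypothetical in-memory stand-in for MoMAR's server-side modules."""
    maps: dict = field(default_factory=dict)        # map ID -> map + anchors
    motion_log: list = field(default_factory=list)  # (timestamp, sample) pairs

    def store_map(self, map_id: str, world_map: bytes, anchors: dict) -> None:
        """Store data uploaded after map creation on the admin device."""
        self.maps[map_id] = {"world_map": world_map, "anchors": dict(anchors)}

    def provide_map(self, map_id: str) -> dict:
        """Map provisioning: answer a user device's map retrieval request."""
        return self.maps[map_id]

    def log_motion(self, sample: dict, timestamp: Optional[float] = None) -> None:
        """Motion logging: record one sample sent by a localized user device."""
        ts = time.time() if timestamp is None else timestamp
        self.motion_log.append((ts, sample))

    def analyze(self, start: Optional[float] = None,
                end: Optional[float] = None) -> Counter:
        """Motion analysis: count samples per region, optionally in [start, end]."""
        return Counter(
            sample["region"]
            for ts, sample in self.motion_log
            if (start is None or ts >= start) and (end is None or ts <= end)
        )


if __name__ == "__main__":
    server = MockMoMARServer()
    # Admin device: map creation, then upload of map and anchor data
    server.store_map("lab", b"<serialized world map>", {"AnchorA": (0.0, 0.0, 1.0)})
    # User device: map retrieval for localization
    print(sorted(server.provide_map("lab")["anchors"]))  # ['AnchorA']
    # User device: periodic motion logging after localization
    for ts, region in [(10.0, "wall-north"), (20.0, "wall-north"), (30.0, "floor")]:
        server.log_motion({"region": region}, timestamp=ts)
    # Server: motion analysis over all data, then over a time range
    print(dict(server.analyze()))                      # {'wall-north': 2, 'floor': 1}
    print(dict(server.analyze(start=15.0, end=30.0)))  # {'wall-north': 1, 'floor': 1}
```

Keeping analysis separate from logging mirrors the architecture: samples are only appended while sessions are active, and aggregation happens later, on demand or periodically.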

Our current MoMAR implementation is for ARKit (iOS) AR devices. The required code is provided in three parts: for the admin AR device, the server, and the user AR device, found in the repository folders 'admin-AR-device', 'server', and 'user-AR-device', respectively. The implementation resources consist of the following:

Admin AR device: the C# scripts PlaceAnchorOnPlane.cs and ARWorldMapController.cs, which implement the 'Map creation' module in MoMAR, and the C# script DataVisualization.cs, which implements the 'Data visualization' module.

Server: a Python script MoMAR-Server.py, which implements the 'Map provisioning' module and handles HTTP POST and GET requests between the AR devices and the server, and a Python script motionAnalysis.py, which implements the 'Motion analysis' module.

User AR device: the C# scripts RenderAnchorContent.cs and ARWorldMapController.cs, which implement the 'Map retrieval' module in MoMAR (RenderAnchorContent.cs also provides a framework for rendering virtual content based on the contents of the anchor dictionary), and the C# script MotionLog.cs, which implements the 'Motion logging' module.

Prerequisites: one or more iOS or iPadOS devices running iOS/iPadOS 15 or above, and an edge server with Python 3.8 or above and the FastAPI (https://fastapi.tiangolo.com/lo/) and uvicorn Python packages installed. To build the apps for the AR devices, Unity 2021.3 or later is required, with the AR Foundation framework v4.2 or later installed.
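Before starting the server, a short check like the following can confirm the Python-side prerequisites. This helper is not part of the repository; it checks the Python version and looks for FastAPI and for uvicorn, which is used to launch the server in the Server steps.

```python
import importlib.util
import sys

# Minimum Python version stated in the prerequisites.
MIN_PYTHON = (3, 8)
# FastAPI for the server app, uvicorn to run it.
REQUIRED_PACKAGES = ("fastapi", "uvicorn")


def python_ok(version_info=sys.version_info) -> bool:
    """Return True if the interpreter meets the minimum Python version."""
    return tuple(version_info[:2]) >= MIN_PYTHON


def missing_packages(names=REQUIRED_PACKAGES) -> list:
    """Return the names of required packages that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    version = ".".join(map(str, sys.version_info[:3]))
    status = "OK" if python_ok() else f"too old (need {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+)"
    print("Python", version, status)
    missing = missing_packages()
    print("Missing packages:", ", ".join(missing) if missing else "none")
```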

Tested with an iPhone 13 (iOS 16), an iPhone 13 Pro Max (iOS 15), an iPhone 14 Pro Max (iOS 17), an iPad Pro 2nd gen. (iPadOS 17), and an iPad Pro 4th gen. (iPadOS 16) as AR devices, and a desktop PC with an Intel i7-9700K CPU and an Nvidia GeForce RTX 2060 GPU as an edge server (Python 3.8).

Admin AR device:

- Create a Unity project with the AR Foundation template.

- Add the AR Anchor Manager script (provided with the AR Foundation template) to the AR Session Origin GameObject.

- Add the PlaceAnchorOnPlane.cs script (in the admin-AR-device folder) to the AR Session Origin GameObject. Insert the IP address of your edge server on line 365.

- Add UI canvas buttons to handle placing different anchors, and link each button to the appropriate method in PlaceAnchorOnPlane.cs (e.g., PlaceAnchorA()).

- Add the DataVisualization.cs script (in the admin-AR-device folder) to the AR Session Origin GameObject. Insert the IP address of your edge server on line 65 and line 96.

- Create a new GameObject 'ARWorldMapController'. Drag the AR Session GameObject to the appropriate slot in the Inspector. Add the ARWorldMapController.cs script (in the admin-AR-device folder, adapted from the example in the AR Foundation template) to the ARWorldMapController GameObject. Insert the IP address of your edge server on line 179 and line 289.

- Set the Build platform to iOS, and click Build.

- Load your built project in Xcode, sign it using your Apple Developer ID, and run it on your admin AR device.
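The setup steps refer to fixed line numbers (e.g., line 365 of PlaceAnchorOnPlane.cs), which can drift if a script is edited or updated. The hypothetical helper below lists lines in the C# scripts that look like server addresses, so you can confirm where to insert your edge server's IP. It assumes the address appears as an http(s) URL or a dotted IPv4 literal; that is our assumption, not something the repository guarantees, and the script name is illustrative.

```python
import re
import sys
from pathlib import Path

# Assumed heuristic: an http(s) URL or a dotted IPv4 literal marks a server address.
ADDRESS_PATTERN = re.compile(r"https?://|\b\d{1,3}(?:\.\d{1,3}){3}\b")


def find_address_lines_in_text(text):
    """Return (line_number, stripped_line) pairs whose text looks like an address."""
    return [
        (number, line.strip())
        for number, line in enumerate(text.splitlines(), start=1)
        if ADDRESS_PATTERN.search(line)
    ]


def find_address_lines(path):
    """Read a script (tolerating a UTF-8 BOM) and scan it for address lines."""
    return find_address_lines_in_text(Path(path).read_text(encoding="utf-8-sig"))


if __name__ == "__main__":
    # Usage (hypothetical file name): python find_server_lines.py PlaceAnchorOnPlane.cs ...
    for script in sys.argv[1:]:
        for number, line in find_address_lines(script):
            print(f"{script}:{number}: {line}")
```

The same check applies to the user AR device scripts (RenderAnchorContent.cs, MotionLog.cs, ARWorldMapController.cs).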

Server:

- Create a folder on the server where MoMAR files will be located.

- Download the server folder in the repository to your new MoMAR folder.

- In Terminal or Command Prompt, navigate to your MoMAR folder.

- Start the server using the following command: `uvicorn server.MoMAR-Server:app --host 0.0.0.0`. The server will now handle the setup and loading of your persistent AR experience and capture motion log data, which is appended to a new file in your MoMAR folder, 'motionlog.txt'.

- When you wish to run motion analysis (required before data visualization), run the motionAnalysis.py script, which will create the 'motionData.csv' file. This file is downloaded by the data visualization module on the admin AR device.
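The motion analysis step can be pictured as filtering logged samples to a time range and aggregating them spatially. The sketch below assumes a hypothetical 'timestamp,x,y,z' line format (a point the device was facing) and a 0.5 m voxel grid; the real formats of 'motionlog.txt' and 'motionData.csv' are defined by MoMAR-Server.py and motionAnalysis.py and may differ, so treat this only as an outline of the idea.

```python
import csv
import math
from collections import Counter
from typing import Iterable, Optional

VOXEL_SIZE = 0.5  # metres; hypothetical grid resolution


def parse_line(line: str):
    """Parse a hypothetical 'timestamp,x,y,z' log line into floats."""
    t, x, y, z = (float(v) for v in line.strip().split(","))
    return t, x, y, z


def voxel_counts(lines: Iterable[str], start: Optional[float] = None,
                 end: Optional[float] = None, size: float = VOXEL_SIZE) -> Counter:
    """Count logged points per voxel index, optionally within [start, end]."""
    counts = Counter()
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        t, x, y, z = parse_line(line)
        if (start is not None and t < start) or (end is not None and t > end):
            continue  # outside the requested time range
        key = (math.floor(x / size), math.floor(y / size), math.floor(z / size))
        counts[key] += 1
    return counts


def write_motion_csv(counts: Counter, path: str, size: float = VOXEL_SIZE) -> None:
    """Write voxel centres and counts as CSV rows: x, y, z, count."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["x", "y", "z", "count"])
        for (i, j, k), n in sorted(counts.items()):
            writer.writerow([(i + 0.5) * size, (j + 0.5) * size, (k + 0.5) * size, n])


if __name__ == "__main__":
    demo_log = ["0.0,0.1,1.2,2.0", "1.0,0.2,1.3,2.1", "5.0,3.0,0.0,0.0"]
    print(dict(voxel_counts(demo_log)))  # {(0, 2, 4): 2, (6, 0, 0): 1}
```

A finer grid gives sharper visualizations but needs more samples per voxel to be meaningful; the resolution here is only a placeholder.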

User AR device:

- Create a Unity project with the AR Foundation template.

- Add the AR Anchor Manager script (provided with the AR Foundation template) to the AR Session Origin GameObject.

- Add the RenderAnchorContent.cs script (in the user-AR-device folder) to the AR Session Origin GameObject. Add code to declare and instantiate the specific GameObjects and Colliders you wish to render as indicated in the script. Insert the IP address of your edge server on line 66.

- Drag the Prefabs you wish to render to the appropriate places in the inspector for the RenderAnchorContent.cs script.

- Add the MotionLog.cs script (in the user-AR-device folder) to the AR Session Origin GameObject. Insert the IP address of your edge server on line 131.

- Create a new GameObject 'ARWorldMapController'. Drag the AR Session GameObject to the appropriate slot in the Inspector. Add the ARWorldMapController.cs script (in the user-AR-device folder, adapted from the example in the AR Foundation template) to the ARWorldMapController GameObject. Insert the IP address of your edge server on line 179 and line 289.

- Set the Build platform to iOS, and click Build.

- Load your built project in Xcode, sign it using your Apple Developer ID, and run it on your user AR device.
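MotionLog.cs periodically sends device motion data to the server while the session is active. The Python sketch below illustrates one way such a client could buffer pose samples and emit them in batches; MotionBatcher, the JSON field names, and the batch size are hypothetical and not taken from MotionLog.cs, and the HTTP POST itself is left out.

```python
import json
from typing import List, Optional


class MotionBatcher:
    """Hypothetical client-side buffer mimicking periodic motion logging.

    Samples are buffered and serialized as one JSON payload every
    `batch_size` samples; a real client would POST each payload to the server.
    """

    def __init__(self, session_id: str, batch_size: int = 10):
        if batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        self.session_id = session_id
        self.batch_size = batch_size
        self._buffer: List[dict] = []

    def add(self, timestamp: float, position, rotation) -> Optional[str]:
        """Buffer one pose sample; return a JSON payload when a batch is full."""
        self._buffer.append({
            "t": timestamp,
            "position": list(position),  # x, y, z in metres
            "rotation": list(rotation),  # quaternion x, y, z, w
        })
        if len(self._buffer) >= self.batch_size:
            return self.flush()
        return None

    def flush(self) -> Optional[str]:
        """Serialize and clear buffered samples (None if nothing is buffered)."""
        if not self._buffer:
            return None
        payload = json.dumps({"session": self.session_id, "samples": self._buffer})
        self._buffer = []
        return payload


if __name__ == "__main__":
    batcher = MotionBatcher("session-1", batch_size=2)
    for i in range(5):
        payload = batcher.add(i * 0.1, (0.0, 1.5, float(i)), (0.0, 0.0, 0.0, 1.0))
        if payload:
            print("send:", payload)  # a real client would POST this
    leftover = batcher.flush()  # send remaining samples when the session ends
    if leftover:
        print("send:", leftover)
```

Batching reduces the number of requests per session at the cost of a short delay before samples reach the server; sending every sample individually is the other end of that trade-off.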

If you use MoMAR in an academic work, please cite:

@inproceedings{MoMAR,

title={Environment texture optimization for augmented reality},

author={Scargill, Tim and Janamsetty, Ritvik and Fronk, Christian and Eom, Sangjun and Gorlatova, Maria},

booktitle={Proceedings of ACM IMWUT 2024},

year={2024}

}

The authors of this repository are Tim Scargill and Maria Gorlatova. Contact information for the authors:

- Tim Scargill (timothyjames.scargill AT duke.edu)

- Maria Gorlatova (maria.gorlatova AT duke.edu)

This work was supported in part by NSF grants CNS-1908051, CNS-2112562, CSR-2312760 and IIS-2231975, NSF CAREER Award IIS-2046072, a Meta Research Award and a Cisco Research Award.