Clone of the official kNN-VC, a simple kNN-based voice conversion method.

# Python >=3.10

pip install "torch>=2" "torchaudio>=2" numpy

No kNN-VC install is needed. torch.hub handles everything 😉
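The quickstart that follows expects 16 kHz waveforms for both the source and the reference utterances. As a minimal sketch, assuming your inputs are uncompressed PCM WAV files, the standard-library `wave` module can confirm the sample rate before any paths are handed to kNN-VC. The helper names `wav_sample_rate` and `is_16khz` and the demo file name are hypothetical and not part of kNN-VC.

```python
import wave

EXPECTED_SR = 16_000  # sample rate the quickstart inputs are expected to have


def wav_sample_rate(path: str) -> int:
    """Return the sample rate stored in the header of a PCM WAV file."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def is_16khz(path: str) -> bool:
    """True if the WAV file at `path` is sampled at 16 kHz."""
    return wav_sample_rate(path) == EXPECTED_SR


if __name__ == "__main__":
    import os
    import tempfile

    # Demo only: write one second of 16-bit mono silence at 16 kHz, then check it.
    demo_path = os.path.join(tempfile.mkdtemp(), "demo_16k.wav")
    with wave.open(demo_path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        wav_file.setframerate(EXPECTED_SR)
        wav_file.writeframes(b"\x00\x00" * EXPECTED_SR)
    print(is_16khz(demo_path))
```

If a file reports a different rate, resample it first, for example with `torchaudio.functional.resample`.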

import torch, torchaudio

src_wav_path = '<path to arbitrary 16kHz waveform>.wav'

ref_wav_paths = ['<path to arbitrary 16kHz waveform from target speaker>.wav', '<path to 2nd utterance from target speaker>.wav', ...]

knn_vc = torch.hub.load('tarepan/knn-vc-official', 'knn_vc', prematched=True, trust_repo=True, pretrained=True)

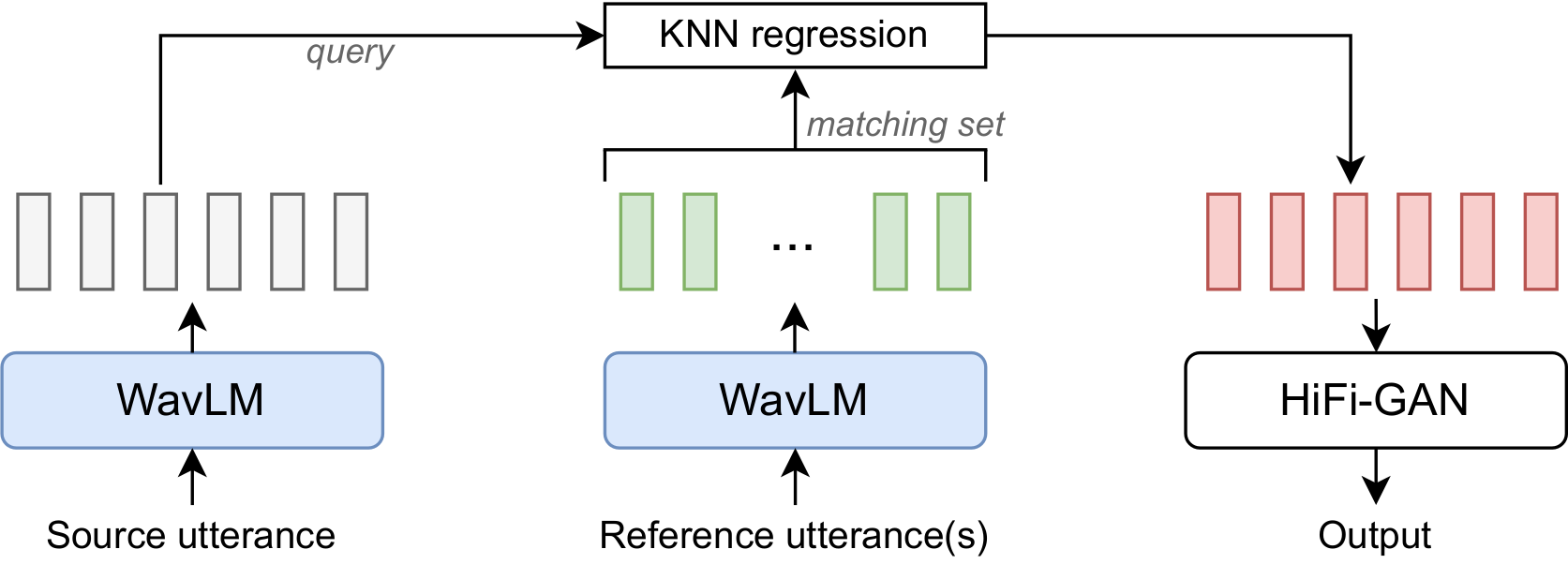

query_seq = knn_vc.get_features(src_wav_path)

matching_set = knn_vc.get_matching_set(ref_wav_paths)

out_wav = knn_vc.match(query_seq, matching_set, topk=4)

# out_wav is a (T,) tensor: the converted 16kHz output waveform, using k=4 for kNN.

Options:

- knn_vc.match
  - topk: int - Top K, the number of nearest neighbors used for kNN matching
- torch.hub.load
  - prematched: bool - Whether to use the prematched model or the non-prematched model
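To make the topk option concrete, here is a minimal NumPy sketch of the kNN regression step described in the paper: each source frame is replaced by the average of its topk nearest frames in the matching set, measured by cosine distance. This is an illustration only, not this repo's implementation. The function name `knn_regress` and the toy 2-dimensional features are hypothetical (real WavLM features are much higher-dimensional), and vocoding the matched features into a waveform is left out.

```python
import numpy as np


def knn_regress(query_seq: np.ndarray, matching_set: np.ndarray, topk: int = 4) -> np.ndarray:
    """Replace each query frame by the mean of its topk nearest matching-set frames.

    query_seq:    (T_query, D) features of the source utterance
    matching_set: (T_match, D) features pooled from the reference utterances
    returns:      (T_query, D) converted features
    """
    # L2-normalise so that a dot product equals cosine similarity
    q = query_seq / np.linalg.norm(query_seq, axis=1, keepdims=True)
    m = matching_set / np.linalg.norm(matching_set, axis=1, keepdims=True)
    cos_dist = 1.0 - q @ m.T  # (T_query, T_match)

    # Indices of the topk smallest distances for every query frame
    nearest = np.argsort(cos_dist, axis=1)[:, :topk]  # (T_query, topk)

    # Uniform average over the selected (un-normalised) matching-set frames
    return matching_set[nearest].mean(axis=1)


if __name__ == "__main__":
    matching_set = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    query_seq = np.array([[2.0, 0.1]])
    # With topk=2 the result is the mean of the frames [1, 0] and [1, 1]
    print(knn_regress(query_seq, matching_set, topk=2))
```

A larger topk averages over more reference frames, which smooths the converted features. A smaller topk stays closer to individual reference frames.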

Under the releases tab of this repo we provide three checkpoints:

- Encoder: WavLM (taken from official WavLM)

- Vocoder 1: HiFiGAN w/ raw-WavLM-L6

- Vocoder 2: HiFiGAN w/ prematched-WavLM-L6

For the HiFiGAN models we provide both the generator inference checkpoint and full training checkpoint with optimizer states.

For performance, see the paper.

- Install librosa, tensorboard, matplotlib, fastprogress and scipy.

- Precompute WavLM features of the vocoder dataset. We provide a utility for this for the LibriSpeech dataset in prematch_dataset.py:

usage: prematch_dataset.py [-h] --librispeech_path LIBRISPEECH_PATH [--seed SEED] --out_path OUT_PATH [--device DEVICE] [--topk TOPK] [--matching_layer MATCHING_LAYER] [--synthesis_layer SYNTHESIS_LAYER] [--prematch] [--resume]

e.g. (prematch):

python prematch_dataset.py --librispeech_path /path/to/librispeech/root --out_path /path/where/you/want/outputs/to/go --topk 4 --matching_layer 6 --synthesis_layer 6 --prematch

- Train HiFiGAN until 2.5M steps:

python -m hifigan.train --audio_root_path /path/to/librispeech/root/ --feature_root_path /path/to/the/output/of/previous/step/ --input_training_file data_splits/wavlm-hifigan-train.csv --input_validation_file data_splits/wavlm-hifigan-valid.csv --checkpoint_path /path/where/you/want/to/save/checkpoint --fp16 False --config hifigan/config_v1_wavlm.json --stdout_interval 25 --training_epochs 1800 --fine_tuning
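The two commands above can also be assembled from Python, which helps when scripting the pipeline. The following is a minimal sketch under stated assumptions: it uses only the flags shown above, the helper names `prematch_cmd` and `train_cmd` are hypothetical, and the paths are the same placeholders as in the commands. It prints the commands rather than running them.

```python
import shlex
import sys


def prematch_cmd(librispeech_root: str, feature_out: str, topk: int = 4, layer: int = 6) -> list[str]:
    """Build the argv for prematch_dataset.py, mirroring the prematch example above."""
    return [
        sys.executable, "prematch_dataset.py",
        "--librispeech_path", librispeech_root,
        "--out_path", feature_out,
        "--topk", str(topk),
        "--matching_layer", str(layer),
        "--synthesis_layer", str(layer),
        "--prematch",
    ]


def train_cmd(librispeech_root: str, feature_root: str, checkpoint_dir: str) -> list[str]:
    """Build the argv for the HiFiGAN training command shown above."""
    return [
        sys.executable, "-m", "hifigan.train",
        "--audio_root_path", librispeech_root,
        "--feature_root_path", feature_root,  # output directory of the prematch step
        "--input_training_file", "data_splits/wavlm-hifigan-train.csv",
        "--input_validation_file", "data_splits/wavlm-hifigan-valid.csv",
        "--checkpoint_path", checkpoint_dir,
        "--fp16", "False",
        "--config", "hifigan/config_v1_wavlm.json",
        "--stdout_interval", "25",
        "--training_epochs", "1800",
        "--fine_tuning",
    ]


if __name__ == "__main__":
    # Placeholder paths, as in the commands above
    features = "/path/where/you/want/outputs/to/go"
    steps = [
        prematch_cmd("/path/to/librispeech/root", features),
        train_cmd("/path/to/librispeech/root", features, "/path/where/you/want/to/save/checkpoint"),
    ]
    for cmd in steps:
        print(shlex.join(cmd))
```

Passing each list to `subprocess.run(cmd, check=True)` from the repo root would execute the steps in order. Reusing one `features` variable keeps the prematch output path and the training feature path in sync.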

- training
  - xx [iter/sec] @ NVIDIA A100 on Paperspace Gradient Notebook (ConvTF32+/AMP+)
  - takes about xx days for the whole training
- inference
  - z.z [sec/sample] @ xx

- HuBERT-Base works well (issue #10)

@misc{2305.18975,

Author = {Matthew Baas and Benjamin van Niekerk and Herman Kamper},

Title = {Voice Conversion With Just Nearest Neighbors},

Year = {2023},

Eprint = {arXiv:2305.18975},

}