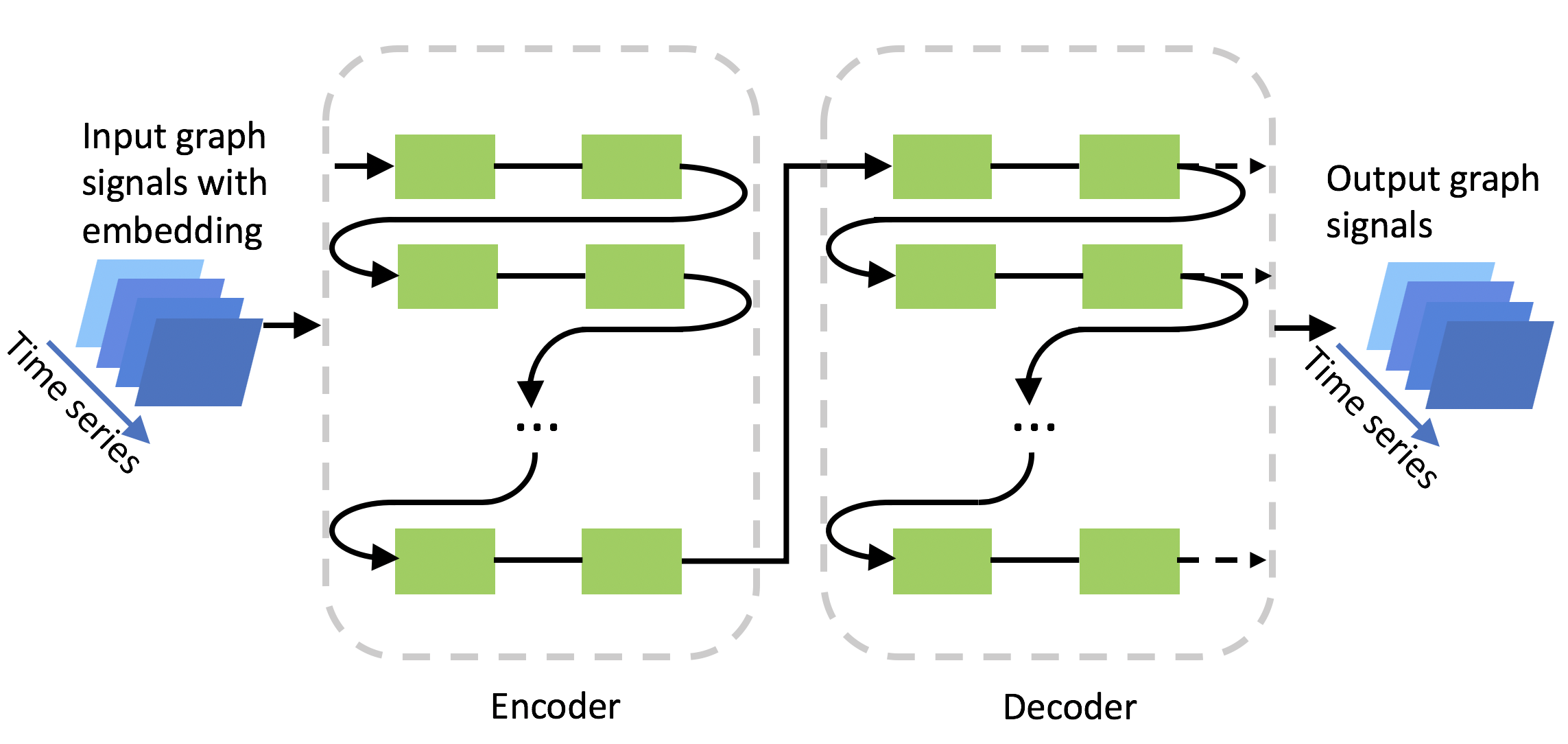

This project inherits the main structure of DCRNN as described in Li et al. (ICLR 2018). Broadly, we investigate how much prior knowledge of distances is needed. The edge weights of the adjacency matrix are either:

+ Distance-smoothed, as in the paper

+ Binary values indicating connectivity

For each of the two cases, we then ask:

+ How do fully connected RNN cells with graph embedding knowledge compare to the DCRNN 1-hop and 2-hop models?

+ Will an attention mechanism added to the diffusion process learn meaningful attention weights, e.g. a mapping from distance?
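For readers unfamiliar with the hop terminology, here is a minimal sketch of K-hop diffusion over a weighted graph. It assumes only the forward random walk and omits the learned filter weights that a DCRNN cell applies to each hop. The function names are hypothetical and are not taken from this repository.

```python
import numpy as np

def random_walk_matrix(adj):
    """Row-normalize the adjacency matrix into a transition matrix."""
    out_degree = adj.sum(axis=1, keepdims=True)
    # Rows without outgoing edges stay all-zero instead of dividing by zero.
    safe_degree = np.where(out_degree > 0, out_degree, 1.0)
    return adj / safe_degree

def diffusion_features(adj, x, max_hop):
    """Stack [X, P X, ..., P^K X], where P is the transition matrix."""
    p = random_walk_matrix(adj)
    feats = [x]
    for _ in range(max_hop):
        feats.append(p @ feats[-1])
    return np.stack(feats)

# Toy directed 3-cycle: 0 -> 1 -> 2 -> 0, one feature per node.
adj = np.array([[0.0, 1.0, 0.0],
                [0.0, 0.0, 1.0],
                [1.0, 0.0, 0.0]])
x = np.array([[1.0], [2.0], [3.0]])
hops = diffusion_features(adj, x, max_hop=2)
```

With `max_hop=1` each node only sees its direct neighbors; with `max_hop=2` it also sees neighbors of neighbors.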

The current implementation only supports sensor ids in Los Angeles (see data/sensor_graph/sensor_info_201206.csv). We prepare the distance-smoothed graph edge weight matrix as follows:

python -m scripts.gen_adj_mx --sensor_ids_filename=data/sensor_graph/graph_sensor_ids.txt --normalized_k=0.1 --output_pkl_filename=data/sensor_graph/adj_mx_la.pkl.pkl

and the plain adjacency matrix (binary, indicating connectivity) as

python -m scripts.gen_adj_binary --sensor_ids_filename=data/sensor_graph/graph_sensor_ids.txt --output_pkl_filename=data/sensor_graph/adj_bin_la.pkl.pkl

In /figures/ProduceEdgeList.ipynb, we inspect the dimensions, directedness and other features of the adjacency matrix of the LA highway sensor system.
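The two weighting schemes can be sketched as follows. This is an illustration, not the code in the scripts above. It assumes a thresholded Gaussian kernel for the distance-smoothed case, in the spirit of the DCRNN paper, and it assumes that "connected" means a finite road distance is recorded for the pair. Both function names are hypothetical.

```python
import numpy as np

def distance_smoothed_adjacency(dist_mx, normalized_k=0.1):
    """Gaussian-kernel edge weights; weights below normalized_k are zeroed."""
    std = dist_mx[np.isfinite(dist_mx)].std()
    adj = np.exp(-np.square(dist_mx / std))  # infinite distance -> weight 0
    adj[adj < normalized_k] = 0.0
    return adj

def binary_adjacency(dist_mx):
    """1 where a finite distance is recorded between two sensors, else 0."""
    return np.isfinite(dist_mx).astype(np.float32)

# Toy 3-sensor example; np.inf marks pairs with no recorded road distance.
dist_mx = np.array([[0.0, 100.0, np.inf],
                    [100.0, 0.0, 400.0],
                    [np.inf, 400.0, 0.0]])
adj_d = distance_smoothed_adjacency(dist_mx)
adj_b = binary_adjacency(dist_mx)
```

In this toy case the 400-unit edge survives in the binary matrix but is thresholded away in the distance-smoothed one. That gap is the extra prior knowledge the distance-smoothed variant carries.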

The traffic data file for Los Angeles, i.e., df_highway_2012_4mon_sample.h5, is available here, and should be put into the data/METR-LA folder.

In addition, the sensor locations are available at data/sensor_graph/graph_sensor_locations.csv.

python -m scripts.generate_training_data --output_dir=data/METR-LA

The generated train/val/test datasets will be saved as data/METR-LA/{train,val,test}.npz.
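As a rough picture of what such a generation step typically does, the sketch below slices a time series into sliding input/target windows and splits them chronologically. The 12-step history and horizon and the 70/10/20 split are assumptions for illustration, and the function names are hypothetical. The actual script may differ.

```python
import numpy as np

def make_windows(data, history=12, horizon=12):
    """Slice a (time, nodes, features) array into (input, target) pairs."""
    xs, ys = [], []
    for t in range(history, data.shape[0] - horizon + 1):
        xs.append(data[t - history:t])   # the past `history` steps
        ys.append(data[t:t + horizon])   # the next `horizon` steps
    return np.stack(xs), np.stack(ys)

def chronological_split(x, y, train_frac=0.7, val_frac=0.1):
    """Split samples in time order into train/val/test without shuffling."""
    n = x.shape[0]
    n_train, n_val = int(n * train_frac), int(n * val_frac)
    bounds = {"train": (0, n_train),
              "val": (n_train, n_train + n_val),
              "test": (n_train + n_val, n)}
    return {name: (x[a:b], y[a:b]) for name, (a, b) in bounds.items()}

# Toy series: 40 time steps, 2 sensors, 1 feature.
data = np.arange(40 * 2, dtype=np.float32).reshape(40, 2, 1)
x, y = make_windows(data)
splits = chronological_split(x, y)
```

Each split could then be written with `np.savez_compressed`, giving one .npz file per split.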

Following the instructions in embeddings.ipynb, we produce node2vec graph embeddings of a designated dimension, which are later appended to the input feature matrix and fed to the fully connected neural network.
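Appending embeddings to the input features can be pictured as a concatenation along the feature axis. This is a minimal sketch under assumed array shapes. `attach_embeddings` is a hypothetical helper, not a function from this repository.

```python
import numpy as np

def attach_embeddings(x, embeddings):
    """Append each node's embedding to its feature vector.

    x:          (batch, num_nodes, input_dim)
    embeddings: (num_nodes, embed_dim)
    returns:    (batch, num_nodes, input_dim + embed_dim)
    """
    batch = x.shape[0]
    # Repeat the same per-node embedding for every sample in the batch.
    tiled = np.broadcast_to(embeddings, (batch,) + embeddings.shape)
    return np.concatenate([x, tiled], axis=-1)

# Toy example: 4 samples, 3 sensors, 2 input features, 8-dim embeddings.
x = np.zeros((4, 3, 2), dtype=np.float32)
embeddings = np.arange(3 * 8, dtype=np.float32).reshape(3, 8)
augmented = attach_embeddings(x, embeddings)
```

The embedding is constant over time, so it gives the fully connected cell a fixed per-node description of graph position alongside the changing traffic readings.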

Activate the Python 2 environment for node2vec with source activate cs224w.

Then either generate a single node2vec embedding with

python n2v-main.py --input METR-LA.txt --output LA-n2v-temp.txt --dimensions 8 --p 1 --weighted --directed

or run sh generate-hyperparam-grid.sh to generate embedding files for a grid of hyperparameters.

Before you proceed, make sure the embedding files are in the data/embeddings directory and the traffic data have been generated in the data/METR-LA directory.

The following describes training on a single GPU.

The arguments passed to dcrnn_train.py depend on one another as follows:

- --config_filename: the configuration file where hyperparameters are recorded;

- --use_cpu_only: flag to use cpu only for training;

- --weightType: distance-smoothed or binary adjacency;

- --att: flag to use attention;

- --no-att: flag not to use attention;

- fc: flag to use fully connected cells;

- --gEmbedFile: if fc, need to specify which embedding file to use;

- graphConv: flag to use graph convolution;

- --hop: if graphConv, need to specify hop of neighborhood.

Therefore, this is a combination of {distance-aware, binary}, {fully connected, graph convolution}, and {attention, no-attention}.
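The dependency between these arguments can be expressed with argparse sub-commands. The sketch below is a hypothetical reconstruction for illustration only, not the parser in dcrnn_train.py. It assumes `--use_cpu_only` is a boolean switch, that `--weightType` accepts `d` and `a`, and that `fc` and `graphConv` act as sub-commands.

```python
import argparse

def build_parser():
    """Hypothetical parser mirroring the option structure listed above."""
    parser = argparse.ArgumentParser(description="Sketch of training options")
    parser.add_argument("--config_filename", type=str)
    parser.add_argument("--use_cpu_only", action="store_true")
    parser.add_argument("--weightType", choices=["d", "a"])
    # --att and --no-att write to the same destination.
    parser.add_argument("--att", dest="att", action="store_true")
    parser.add_argument("--no-att", dest="att", action="store_false")
    parser.set_defaults(att=False)

    # Each cell type brings its own required option.
    cells = parser.add_subparsers(dest="cell", required=True)
    fc = cells.add_parser("fc")
    fc.add_argument("--gEmbedFile", required=True)
    graph_conv = cells.add_parser("graphConv")
    graph_conv.add_argument("--hop", type=int, required=True)
    return parser

parser = build_parser()
gc_args = parser.parse_args(
    ["--config_filename", "data/model/dcrnn_la.yaml",
     "--weightType", "d", "--no-att", "graphConv", "--hop", "1"])
fc_args = parser.parse_args(
    ["--config_filename", "data/model/dcrnn_la.yaml",
     "--weightType", "a", "--no-att", "fc", "--gEmbedFile", "LA-n2v-14-0.1-1"])
```

Structured this way, `--hop` is only accepted after `graphConv` and `--gEmbedFile` only after `fc`, so invalid combinations are rejected at parse time.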

For distance-aware training, you could run

python dcrnn_train.py --config_filename data/model/dcrnn_la.yaml --weightType d --no-att graphConv --hop 1

python dcrnn_train.py --config_filename data/model/dcrnn_la.yaml --weightType d --no-att fc --gEmbedFile LA-n2v-14-0.1-1

and for binary adjacency

python dcrnn_train.py --config_filename data/model/dcrnn_la.yaml --weightType a --no-att graphConv --hop 2

python dcrnn_train.py --config_filename data/model/dcrnn_la.yaml --weightType a --no-att fc --gEmbedFile LA-n2v-14-0.1-1

Each epoch takes about 5 min on a single GTX 1080 Ti (for DCRNN).

The preferred way to examine results is to use the information recorded in the training logs.

See /figures/plot.ipynb for the visualization of results.

If you find this repository useful in your research, please cite the following papers:

@inproceedings{li2018dcrnn_traffic,

title={Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting},

author={Li, Yaguang and Yu, Rose and Shahabi, Cyrus and Liu, Yan},

booktitle={International Conference on Learning Representations (ICLR '18)},

year={2018}

}

@inproceedings{grover2016node2vec,

title={node2vec: Scalable feature learning for networks},

author={Grover, Aditya and Leskovec, Jure},

booktitle={Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining},

pages={855--864},

year={2016},

organization={ACM}

}

@inproceedings{wang2016structural,

title={Structural deep network embedding},

author={Wang, Daixin and Cui, Peng and Zhu, Wenwu},

booktitle={Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining},

pages={1225--1234},

year={2016},

organization={ACM}

}