BayesFlow is a Python library for simulation-based Amortized Bayesian Inference with neural networks. It provides users with:

- A user-friendly API for rapid Bayesian workflows

- A rich collection of neural network architectures

- Multi-Backend Support: PyTorch, TensorFlow, JAX, and NumPy

BayesFlow is designed to be a flexible and efficient tool, enabling rapid statistical inference after a potentially longer simulation-based training phase.
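The trade-off between a slow training phase and fast inference can be illustrated with a toy sketch. It does not use the BayesFlow API or a neural network: the Gaussian model, the linear least-squares map, and all names (`simulate`, `fit_linear_map`, `amortized_posterior_mean`) are assumptions chosen only because the exact posterior mean is known for this model. It uses the standard library alone, so it runs without any backend.

```python
import random
from statistics import fmean

N_OBS = 10        # observations per simulated data set
N_SIMS = 20_000   # number of simulated (parameter, data) pairs


def simulate(rng):
    """Draw one parameter from the prior and one data set from the simulator."""
    theta = rng.gauss(0.0, 1.0)                           # prior: theta ~ N(0, 1)
    data = [rng.gauss(theta, 1.0) for _ in range(N_OBS)]  # x_i ~ N(theta, 1)
    return theta, data


def fit_linear_map(summaries, thetas):
    """Least-squares fit of theta on a one-dimensional data summary."""
    mean_s, mean_t = fmean(summaries), fmean(thetas)
    cov = sum((s - mean_s) * (t - mean_t) for s, t in zip(summaries, thetas))
    var = sum((s - mean_s) ** 2 for s in summaries)
    slope = cov / var
    return slope, mean_t - slope * mean_s


# Training phase (slow, done once): simulate pairs and fit the map.
rng = random.Random(0)
pairs = [simulate(rng) for _ in range(N_SIMS)]
slope, intercept = fit_linear_map(
    [fmean(data) for _, data in pairs],
    [theta for theta, _ in pairs],
)


def amortized_posterior_mean(data):
    """Inference phase (fast): one evaluation of the fitted map, no refitting."""
    return slope * fmean(data) + intercept


# For this conjugate toy model the exact posterior mean is
# N_OBS / (N_OBS + 1) * mean(data), which gives a reference to compare against.
observed = [rng.gauss(0.5, 1.0) for _ in range(N_OBS)]
exact = N_OBS / (N_OBS + 1) * fmean(observed)
print(f"amortized: {amortized_posterior_mean(observed):.3f}, exact: {exact:.3f}")
```

Once the map is fitted, every new data set costs a single function call. The simulation cost is paid up front and spread over all later inferences, which is the sense in which the inference is amortized.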

First, install your machine learning backend of choice. Note that BayesFlow will not run without a backend.
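To see which backend packages are already importable in the current environment, a small check like the following may help. It is only a sketch: the helper `installed_backends` is hypothetical, and the module names are assumed to be the usual import names of the four backends listed above.

```python
from importlib.util import find_spec

# Assumed import names for the backends mentioned in this README.
BACKEND_MODULES = {
    "PyTorch": "torch",
    "TensorFlow": "tensorflow",
    "JAX": "jax",
    "NumPy": "numpy",
}


def installed_backends():
    """Return the backends whose top-level module can be located.

    find_spec only looks the module up; it does not import it.
    """
    return [
        name
        for name, module in BACKEND_MODULES.items()
        if find_spec(module) is not None
    ]


print("Installed backends:", installed_backends() or "none")
```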

Once installed, set the appropriate backend environment variable. For example, to use PyTorch:

export KERAS_BACKEND=torch

If you use conda, you can instead set this individually for each environment:

conda env config vars set KERAS_BACKEND=torch

We recommend installing with conda (or mamba).

conda install -c conda-forge bayesflow

Alternatively, install with pip:

pip install bayesflow

To install from a clone of the repository instead, use the stable version:

git clone https://github.com/stefanradev93/bayesflow

cd bayesflow

conda env create --file environment.yaml --name bayesflow

Development version:

git clone https://github.com/stefanradev93/bayesflow

cd bayesflow

git checkout dev

conda env create --file environment.yaml --name bayesflow

Check out some of our walk-through notebooks:

- Quickstart amortized posterior estimation

- Tackling strange bimodal distributions

- Detecting model misspecification in posterior inference

- Principled Bayesian workflow for cognitive models

- Posterior estimation for ODEs

- Posterior estimation for SIR-like models

- Model comparison for cognitive models

- Hierarchical model comparison for cognitive models

Documentation is available at https://bayesflow.org. Please use the BayesFlow Forums for any BayesFlow-related questions and discussions, and GitHub Issues for bug reports and feature requests.

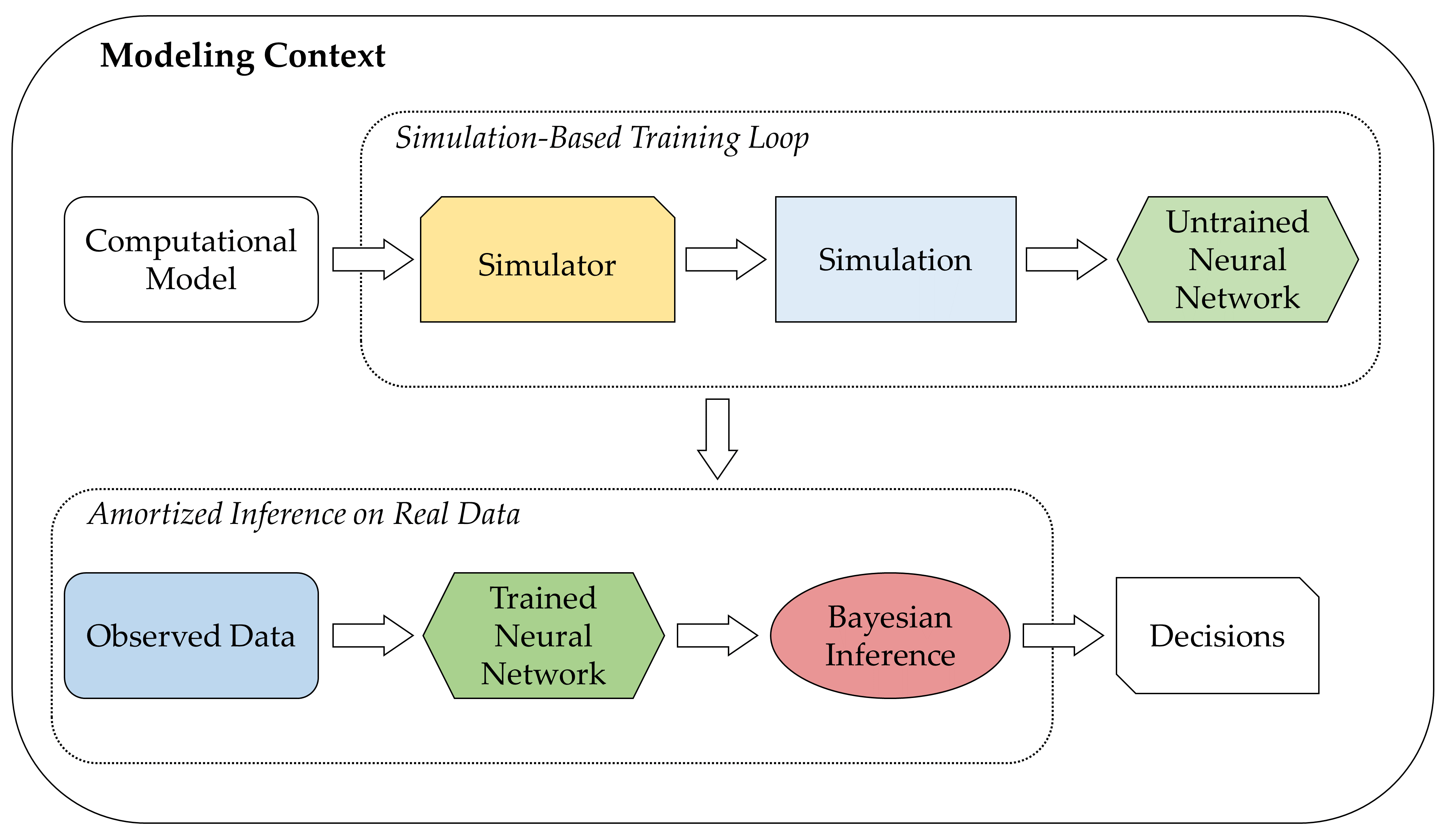

A cornerstone idea of amortized Bayesian inference is to employ generative neural networks for parameter estimation, model comparison, and model validation when working with intractable simulators whose behavior as a whole is too complex to be described analytically. The figure above presents a high-level overview of neurally bootstrapped Bayesian inference.

- Radev, S. T., D’Alessandro, M., Mertens, U. K., Voss, A., Köthe, U., & Bürkner, P.-C. (2021). Amortized Bayesian Model Comparison with Evidential Deep Learning. IEEE Transactions on Neural Networks and Learning Systems. doi:10.1109/TNNLS.2021.3124052, available for free at: https://arxiv.org/abs/2004.10629

- Schmitt, M., Radev, S. T., & Bürkner, P.-C. (2022). Meta-Uncertainty in Bayesian Model Comparison. In International Conference on Artificial Intelligence and Statistics, 11-29, PMLR, available for free at: https://arxiv.org/abs/2210.07278

- Elsemüller, L., Schnuerch, M., Bürkner, P.-C., & Radev, S. T. (2023). A Deep Learning Method for Comparing Bayesian Hierarchical Models. ArXiv preprint, available for free at: https://arxiv.org/abs/2301.11873

- Radev, S. T., Schmitt, M., Pratz, V., Picchini, U., Köthe, U., & Bürkner, P.-C. (2023). JANA: Jointly amortized neural approximation of complex Bayesian models. Proceedings of the Thirty-Ninth Conference on Uncertainty in Artificial Intelligence, 216, 1695-1706. (arXiv)(PMLR)

This project is currently managed by researchers from Rensselaer Polytechnic Institute, TU Dortmund University, and Heidelberg University. It is partially funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation, Project 528702768). The project is further supported by Germany's Excellence Strategy -- EXC-2075 - 390740016 (Stuttgart Cluster of Excellence SimTech) and EXC-2181 - 390900948 (Heidelberg Cluster of Excellence STRUCTURES), as well as the Informatics for Life initiative funded by the Klaus Tschira Foundation.

You can cite BayesFlow along the lines of:

- We approximated the posterior with neural posterior estimation and learned summary statistics (NPE; Radev et al., 2020), as implemented in the BayesFlow software for amortized Bayesian workflows (Radev et al., 2023a).

- We approximated the likelihood with neural likelihood estimation (NLE; Papamakarios et al., 2019) without hand-crafted summary statistics, as implemented in the BayesFlow software for amortized Bayesian workflows (Radev et al., 2023a).

- We performed simultaneous posterior and likelihood estimation with jointly amortized neural approximation (JANA; Radev et al., 2023b), as implemented in the BayesFlow software for amortized Bayesian workflows (Radev et al., 2023a).

- Radev, S. T., Schmitt, M., Schumacher, L., Elsemüller, L., Pratz, V., Schälte, Y., Köthe, U., & Bürkner, P.-C. (2023a). BayesFlow: Amortized Bayesian workflows with neural networks. The Journal of Open Source Software, 8(89), 5702. (arXiv)(JOSS)

- Radev, S. T., Mertens, U. K., Voss, A., Ardizzone, L., & Köthe, U. (2020). BayesFlow: Learning complex stochastic models with invertible neural networks. IEEE Transactions on Neural Networks and Learning Systems, 33(4), 1452-1466. (arXiv)(IEEE TNNLS)

- Radev, S. T., Schmitt, M., Pratz, V., Picchini, U., Köthe, U., & Bürkner, P.-C. (2023b). JANA: Jointly amortized neural approximation of complex Bayesian models. Proceedings of the Thirty-Ninth Conference on Uncertainty in Artificial Intelligence, 216, 1695-1706. (arXiv)(PMLR)

BibTeX:

@article{bayesflow_2023_software,
  title = {{BayesFlow}: Amortized {B}ayesian workflows with neural networks},
  author = {Radev, Stefan T. and Schmitt, Marvin and Schumacher, Lukas and Elsemüller, Lasse and Pratz, Valentin and Schälte, Yannik and Köthe, Ullrich and Bürkner, Paul-Christian},
  journal = {Journal of Open Source Software},
  volume = {8},
  number = {89},
  pages = {5702},
  year = {2023}
}

@article{bayesflow_2020_original,
  title = {{BayesFlow}: Learning complex stochastic models with invertible neural networks},
  author = {Radev, Stefan T. and Mertens, Ulf K. and Voss, Andreas and Ardizzone, Lynton and K{\"o}the, Ullrich},
  journal = {IEEE Transactions on Neural Networks and Learning Systems},
  volume = {33},
  number = {4},
  pages = {1452--1466},
  year = {2020}
}

@inproceedings{bayesflow_2023_jana,
  title = {{JANA}: Jointly amortized neural approximation of complex {B}ayesian models},
  author = {Radev, Stefan T. and Schmitt, Marvin and Pratz, Valentin and Picchini, Umberto and K\"othe, Ullrich and B\"urkner, Paul-Christian},
  booktitle = {Proceedings of the Thirty-Ninth Conference on Uncertainty in Artificial Intelligence},
  pages = {1695--1706},
  year = {2023},
  volume = {216},
  series = {Proceedings of Machine Learning Research},
  publisher = {PMLR}
}