Code and pre-trained models for our paper, “Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation”, accepted by AAAI-2020.

This repo also serves as Part B of our paper "Multi-Person Pose Estimation using Body Parts" (under review). Part A is available at this link.

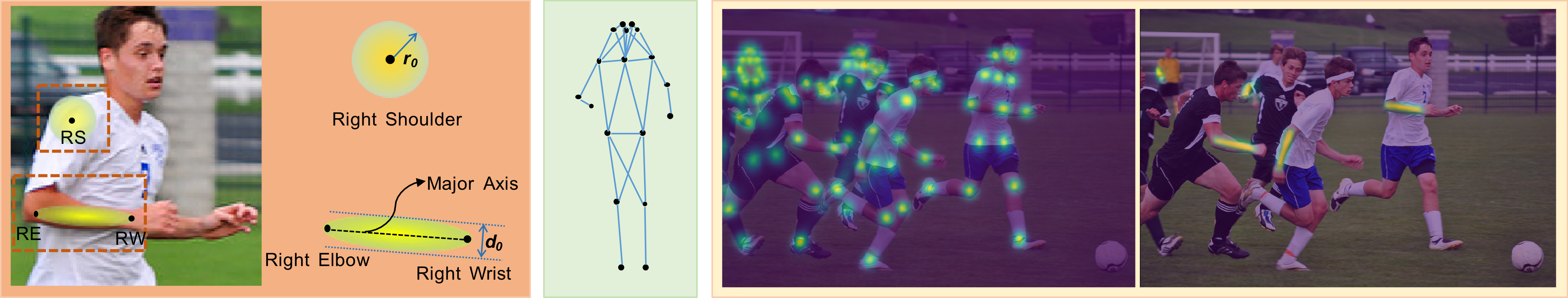

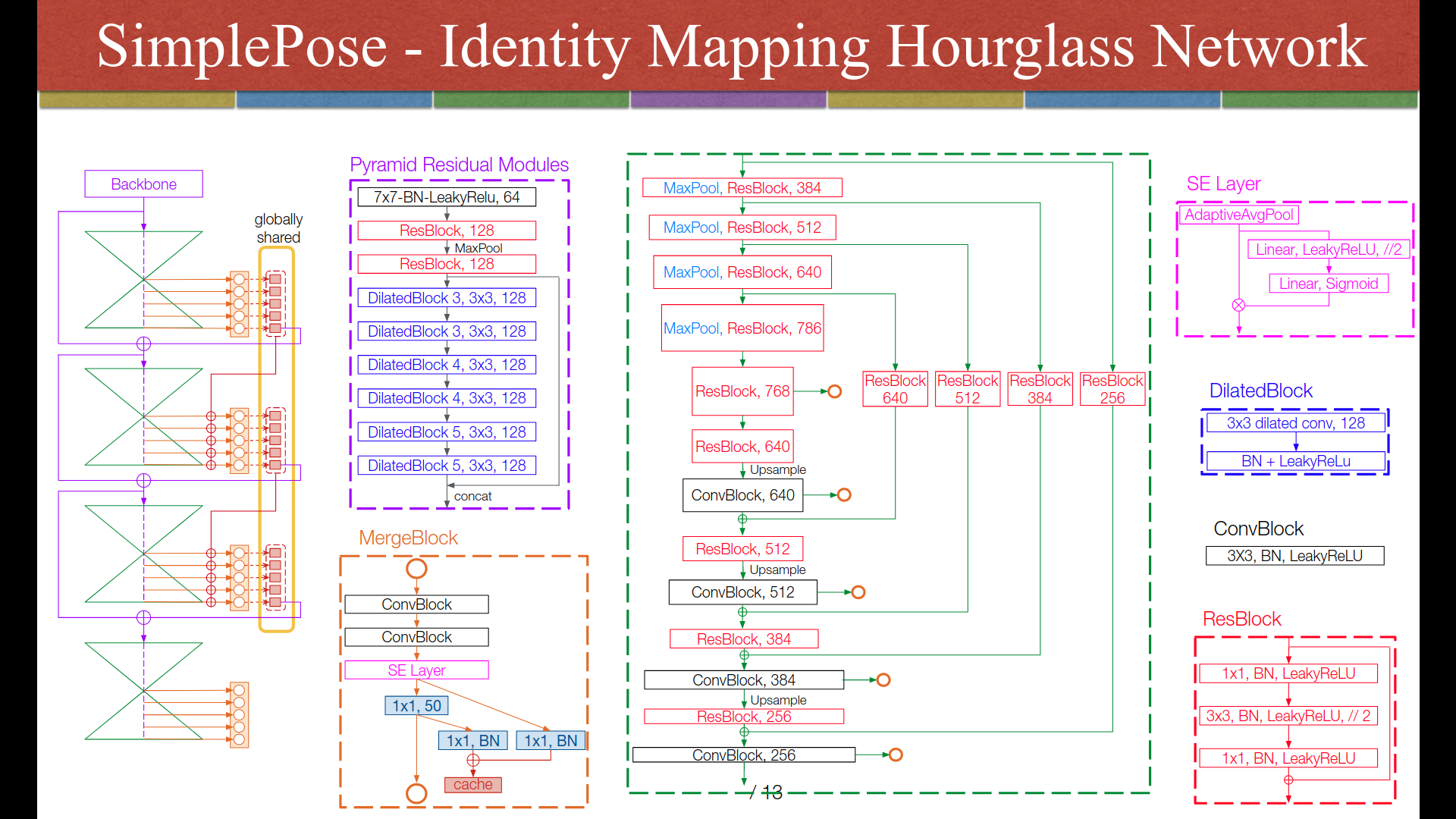

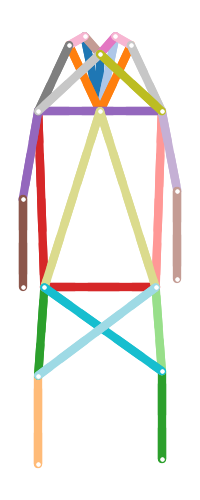

A bottom-up approach for the problem of multi-person pose estimation.

- human score calculation:

- changed

1 - 1.0 / scoretoscore / joint count. - This increased

0.3 %AP overall (minival 2017).

- changed

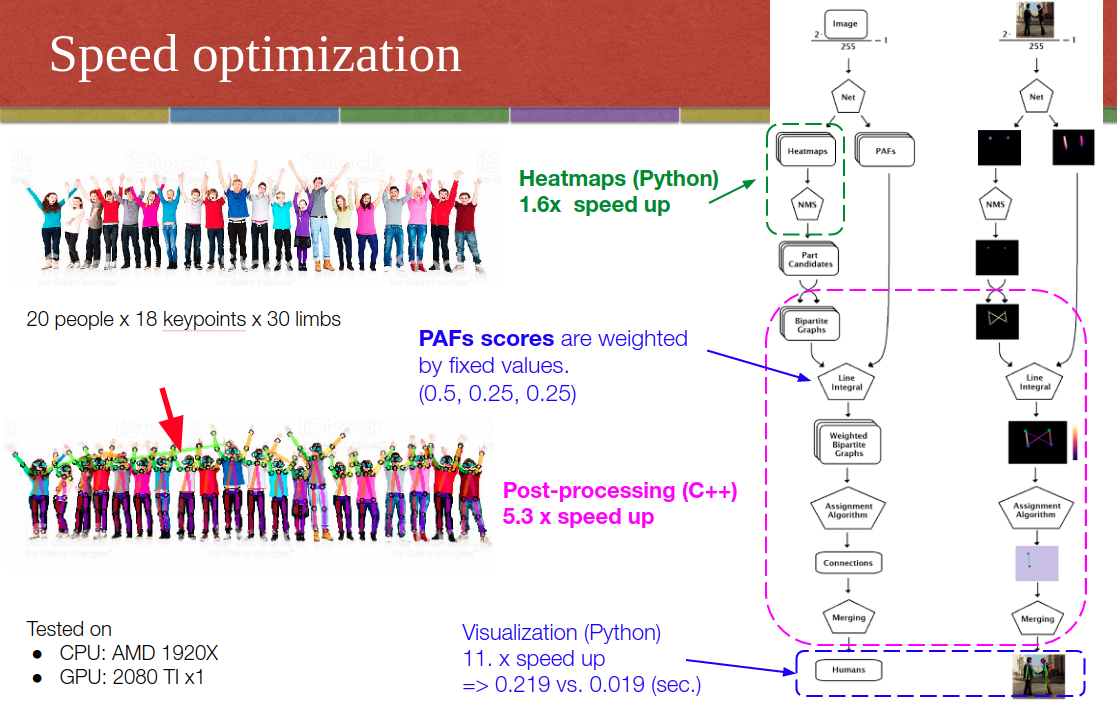

- add C++ acceleration for post-processing.

- results of sorting in C++ is different from Python

- this gives different results accordingly
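To make the score-calculation change concrete, here is a minimal sketch of the two formulas from the changelog. It assumes `score` is the total score the grouping step accumulates for one person and that the joint count is the number of keypoints assigned to that person; the function names are hypothetical and the repository code remains the reference. It also shows one *possible* (unconfirmed) source of the C++/Python difference: Python's `sorted` is stable, so equal scores keep their input order, while C++ `std::sort` makes no stability guarantee.

```python
# Hypothetical sketch: function names and the meaning of `total_score` are assumptions.


def person_score_original(total_score: float) -> float:
    """Original formula from the changelog: 1 - 1.0 / score."""
    return 1 - 1.0 / total_score


def person_score_refactored(total_score: float, joint_count: int) -> float:
    """Refactored formula from the changelog: score / joint count."""
    return total_score / joint_count


# Two example people: (accumulated score, number of assigned joints).
people = {"A": (10.0, 17), "B": (8.0, 10)}
for name, (total, joints) in people.items():
    print(name, round(person_score_original(total), 3),
          round(person_score_refactored(total, joints), 3))
# A 0.9 0.588
# B 0.875 0.8

# Python's sorted() is stable (also with reverse=True): ties keep input order.
candidates = [("a", 0.9), ("b", 0.7), ("c", 0.9)]
ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
print([name for name, _ in ranked])  # ['a', 'c', 'b']
```

With these example numbers the original formula ranks the 17-joint person first, while the per-joint average ranks the 10-joint person first, which illustrates how the change can reorder detections.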

| Changes | Input size | C++ | MS | Flip | AP | AP(M) | AP(L) | AR | AR(M) | AR(L) | fps |
|---|---|---|---|---|---|---|---|---|---|---|---|
| original | 512 | | | v | 65.8 | 59.0 | 75.8 | 69.9 | 61.2 | 82.3 | 2.2 |
| refactored | 512 | | | v | 65.8 | 59.0 | 75.9 | 69.9 | 61.2 | 82.5 | 3.3 |
| refactored + score calc | 512 | | | v | 66.1 | 59.8 | 76.2 | 69.9 | 61.2 | 82.6 | |
| refactored + score calc | 512 | v | | v | 65.8 | 59.6 | 75.4 | 69.8 | 61.0 | 82.1 | 7.3 |

- Tested on GeForce RTX 2080 Ti x 1

- Training

- Evaluation

- Demo

- Implemented in PyTorch with automatic mixed precision (using NVIDIA Apex).

- Supports training on multiple GPUs (over 90% GPU utilization on each card).

- Fast data preparation and augmentation during training (about 40 samples per second on a single CPU process, and many more when wrapped in a PyTorch `DataLoader`).

- Focal L2 loss.

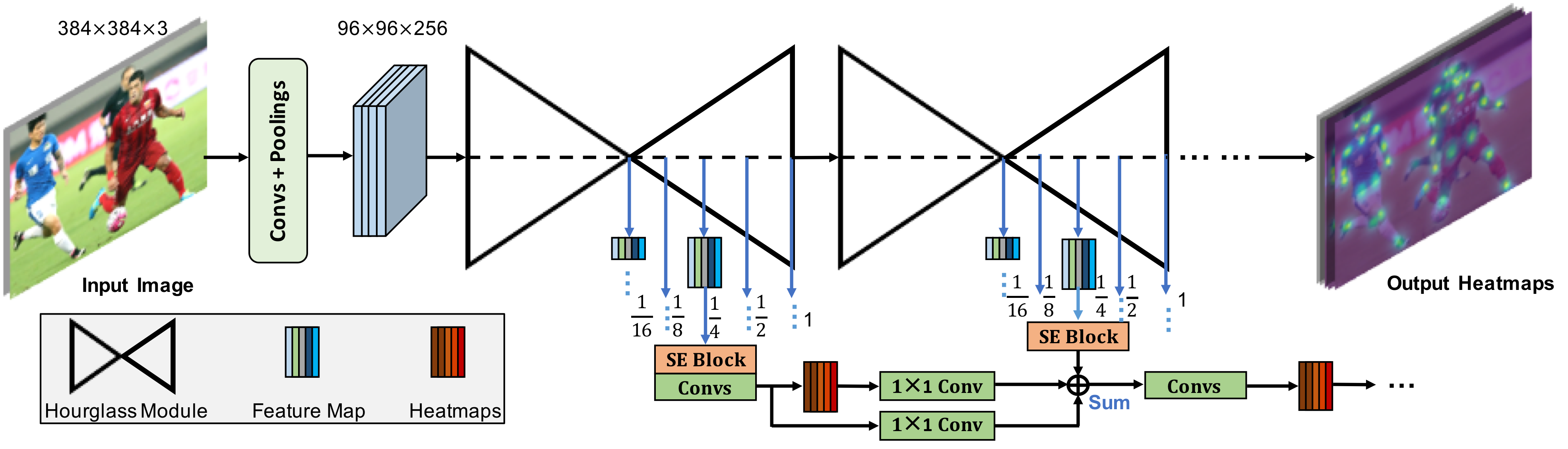

- Multi-scale supervision.

- This project can also serve as a detailed, hands-on example for PyTorch beginners.
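The focal L2 loss and multi-scale supervision listed above can be sketched in plain Python as follows. This is an illustrative sketch, not the repository's implementation (the real losses live in `loss_model.py` / `loss_model_parrel.py`): it assumes the focal factor is `(1 - s_t) ** gamma`, where `s_t` is the prediction at positive pixels (target at or above a small threshold, assumed here to be 0.01) and `1 - prediction` elsewhere, and it treats multi-scale supervision as a weighted sum of per-scale losses. Heatmaps are flattened to lists of floats to keep the example dependency-free.

```python
from typing import Optional, Sequence


def focal_l2_loss(pred: Sequence[float], target: Sequence[float],
                  mask: Optional[Sequence[float]] = None,
                  gamma: float = 2.0, pos_thresh: float = 0.01) -> float:
    """Masked squared error, each term down-weighted by (1 - s_t) ** gamma."""
    if mask is None:
        mask = [1.0] * len(pred)  # no masked-out (unannotated) regions
    total = 0.0
    for p, t, m in zip(pred, target, mask):
        # s_t: how "correct" the prediction is for this pixel (assumed definition)
        s_t = p if t >= pos_thresh else 1.0 - p
        # clamp so (1 - s_t) stays non-negative for predictions outside [0, 1]
        s_t = min(max(s_t, 0.0), 1.0)
        total += (1.0 - s_t) ** gamma * (p - t) ** 2 * m
    return total


def multi_scale_loss(preds_per_scale: Sequence[Sequence[float]],
                     targets_per_scale: Sequence[Sequence[float]],
                     weights: Optional[Sequence[float]] = None,
                     gamma: float = 2.0) -> float:
    """Weighted sum of the focal L2 loss over every supervised output scale."""
    if weights is None:
        weights = [1.0] * len(preds_per_scale)
    return sum(w * focal_l2_loss(p, t, gamma=gamma)
               for w, p, t in zip(weights, preds_per_scale, targets_per_scale))


print(focal_l2_loss([0.9], [1.0]))  # easy peak: tiny loss
print(focal_l2_loss([0.1], [1.0]))  # hard peak: most of the L2 loss kept
```

A well-predicted peak (prediction 0.9, target 1.0) contributes 0.01 × 0.01 = 0.0001 instead of the plain L2 value 0.01, while a badly missed peak (prediction 0.1) keeps most of its weight (0.81 × 0.81 = 0.6561), so training focuses on hard pixels. Setting `gamma=0` recovers plain masked L2.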

- Install packages: Python 3.6, PyTorch > 1.0, NVIDIA Apex, and the other required packages.

- Download the COCO dataset.

- Download the pre-trained models (default configuration: the model snapshotted at epoch 52, provided below).

  Download link: BaiduCloud

  Alternatively, for the default configuration only, download the pre-trained model without the optimizer checkpoint via: GoogleDrive

- Compile the C++ files: `cd utils/pafprocess && sh make.sh`

- Change the paths in the code according to your environment.

Run the demo: `python demo_image.py`

The speed of our system is tested on the MS-COCO test-dev dataset.

- Inference speed of our 4-stage IMHN with 512 × 512 input on one 2080TI GPU: 38.5 FPS (100% GPU-Util).

- Processing speed of the keypoint-assignment step, implemented in pure Python and run as a single process on an Intel Xeon E5-2620 CPU: 5.2 FPS (not yet well accelerated).

The corresponding code is currently pure Python without multiprocessing.
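The two speed figures above describe separate stages, so end-to-end throughput is lower than either. A back-of-the-envelope sketch, assuming the stages either run strictly one after another for each image or are perfectly overlapped across images, and ignoring image loading, resizing, and flip testing:

```python
from typing import Sequence


def sequential_fps(stage_fps: Sequence[float]) -> float:
    """Stages run back to back on each image, so per-image times add up."""
    return 1.0 / sum(1.0 / fps for fps in stage_fps)


def pipelined_fps(stage_fps: Sequence[float]) -> float:
    """Stages overlap on different images, so the slowest stage sets the pace."""
    return min(stage_fps)


stages = [38.5, 5.2]  # network inference, keypoint assignment (figures above)
print(f"sequential: {sequential_fps(stages):.1f} FPS")  # sequential: 4.6 FPS
print(f"pipelined:  {pipelined_fps(stages):.1f} FPS")   # pipelined:  5.2 FPS
```

Under these assumptions the pipeline runs at roughly 4.6 FPS sequentially and at most 5.2 FPS even with perfect overlap, so the post-processing, not the network, is the bottleneck.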

Run the evaluation: `python evaluate.py`

Results on MSCOCO 2017 minival skeletons with the refactored Python code (focal L2 loss with gamma=2):

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.661

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.859

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.716

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.598

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.762

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.699

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.873

Average Recall (AR) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.742

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.612

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.825

- Runs at about 3 fps with the official pretrained model, post-processing included.

Results on MSCOCO 2017 minival skeletons with C++ post-processing (focal L2 loss with gamma=2):

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.658

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.856

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.713

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.596

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.754

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.698

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.872

Average Recall (AR) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.740

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.610

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.824

- Runs at about 7 fps with the official pretrained model, post-processing included.

Results on MSCOCO 2017 test-dev skeletons (focal L2 loss with gamma=2):

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.685

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.867

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.749

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.664

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.719

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.728

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.892

Average Recall (AR) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.782

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.688

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.784
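The AP/AR listings above are the standard summary printed by the COCO keypoint evaluation (OKS-based, `maxDets=20`). A minimal sketch of producing such numbers, assuming detections are collected as 17 `(x, y, confidence)` triples per person: the result dictionaries follow the public COCO results format, while the helper names are hypothetical and `evaluate.py` may organize this differently. `pycocotools` is imported only inside `run_coco_eval`, so the formatting helpers work without it installed.

```python
import json
from typing import List, Sequence, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)


def to_coco_result(image_id: int, keypoints: Sequence[Keypoint],
                   score: float) -> dict:
    """Convert one detected person into a COCO keypoint result entry."""
    if len(keypoints) != 17:
        raise ValueError("COCO person keypoints expect exactly 17 joints")
    flat: List[float] = []
    for x, y, c in keypoints:
        # COCO's OKS only uses x and y from detections; the third value is
        # kept for format compatibility.
        flat.extend([float(x), float(y), float(c)])
    return {"image_id": image_id, "category_id": 1,  # 1 = "person" in COCO
            "keypoints": flat, "score": float(score)}


def save_results(results: Sequence[dict], path: str) -> None:
    """Write results as the JSON list that COCO.loadRes expects."""
    with open(path, "w") as f:
        json.dump(list(results), f)


def run_coco_eval(ann_file: str, results_file: str) -> None:
    """Print the 10-line AP/AR summary (requires pycocotools)."""
    from pycocotools.coco import COCO  # optional dependency, imported on use
    from pycocotools.cocoeval import COCOeval

    coco_gt = COCO(ann_file)
    coco_dt = coco_gt.loadRes(results_file)
    evaluator = COCOeval(coco_gt, coco_dt, "keypoints")
    evaluator.evaluate()
    evaluator.accumulate()
    evaluator.summarize()
```

For example, call `save_results([to_coco_result(image_id, person, person_score)], "results.json")` and then `run_coco_eval("person_keypoints_val2017.json", "results.json")`, where `image_id`, `person`, and `person_score` stand for your own detections and the annotation file is the standard COCO 2017 validation one.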

Before training, prepare the training data using `SimplePose/data/coco_masks_hdf5.py`.

Multiple GPUs are recommended to speed up training, but several training options are supported.

- Most of the code is already provided; you can train the model with:

- `train.py`: a single training process on one GPU only.

- `train_parallel.py`: a single training process on multiple GPUs using `DataParallel`.

- `train_distributed.py` (recommended): multiple training processes on multiple GPUs using distributed training:

`python -m torch.distributed.launch --nproc_per_node=4 train_distributed.py`

Note: `loss_model_parrel.py` is used by `train.py` and `train_parallel.py`, while `loss_model.py` is used by `train_distributed.py` and `train_distributed_SWA.py`. They differ in how the batch size is divided; please refer to the code for the different choices.

For distributed training, the effective batch size = `batch_size_in_config` × number of GPUs (`world_size`, to be precise). For the other scripts, the effective batch size = `batch_size_in_config`. The difference comes from the different mechanisms of data-parallel training and distributed training.
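The batch-size rule above can be written out as a small helper. This is a hypothetical illustration (the function and argument names are made up, apart from `batch_size_in_config`): with `torch.distributed.launch`, each of the `world_size` processes loads its own batch of `batch_size_in_config` samples, whereas `DataParallel` takes a single batch and splits it across the GPUs.

```python
def effective_batch_size(batch_size_in_config: int, num_gpus: int,
                         distributed: bool) -> int:
    """Samples consumed per optimizer step, summed over all GPUs."""
    if distributed:
        # One process per GPU (num_gpus == world_size), each loading a full
        # batch of batch_size_in_config samples.
        return batch_size_in_config * num_gpus
    # A single process loads one batch; DataParallel splits it across GPUs.
    return batch_size_in_config


def per_gpu_batch_size(batch_size_in_config: int, num_gpus: int,
                       distributed: bool) -> float:
    """Average samples per GPU per step (DataParallel may split unevenly)."""
    return effective_batch_size(batch_size_in_config, num_gpus, distributed) / num_gpus


print(effective_batch_size(8, 4, distributed=True))   # 32
print(effective_batch_size(8, 4, distributed=False))  # 8
print(per_gpu_batch_size(8, 4, distributed=False))    # 2.0
```

So with 4 GPUs and `batch_size_in_config = 8`, `train_distributed.py` trains with a global batch of 32, while `train_parallel.py` trains with a global batch of 8 (about 2 per GPU); matching the distributed run with `train_parallel.py` would mean setting the config value to 32.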

- Realtime Multi-Person Pose Estimation version 1

- Realtime Multi-Person Pose Estimation version 2

- Realtime Multi-Person Pose Estimation version 3

- Realtime Multi-Person Pose Estimation by tensorboy

- Associative Embedding

- NVIDIA/apex

Please kindly cite this paper in your publications if it helps your research.

@inproceedings{li2019simple,

title={Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation},

author={Jia Li and Wen Su and Zengfu Wang},

booktitle = {arXiv preprint arXiv:1911.10529},

year={2019}

}