Minimal implementation of the paper *Deep Encoder, Shallow Decoder* (Kasai et al., 2020) using PyTorch `nn.Transformer` and Hugging Face tokenizers.
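The paper's premise can be sketched without any framework. In autoregressive greedy decoding the encoder runs once per source sentence, while the decoder runs once per generated token, so decoder depth dominates sequential latency. The loop below is a minimal sketch of that control flow, not this repository's inference code; `encode`, `decode_step`, the toy stand-ins, and the BOS/EOS ids are all hypothetical.

```python
from typing import Callable, List, Sequence

# Hypothetical special-token ids; the real tokenizers define their own.
BOS_ID, EOS_ID = 1, 2


def greedy_decode(
    src_ids: Sequence[int],
    encode: Callable[[Sequence[int]], object],
    decode_step: Callable[[object, List[int]], int],
    max_len: int = 64,
) -> List[int]:
    """Greedy autoregressive decoding (max_len counts the BOS token).

    The encoder runs once per source sentence; the decoder runs once per
    generated token, which is why a shallow decoder reduces latency.
    """
    memory = encode(src_ids)              # one pass over the whole source
    out = [BOS_ID]
    while len(out) < max_len:
        next_id = decode_step(memory, out)  # one decoder pass per new token
        out.append(next_id)
        if next_id == EOS_ID:
            break
    return out


# Hypothetical toy stand-ins: the "encoder" reverses the source and the
# "decoder" copies it one token at a time, then emits EOS.
def toy_encode(src):
    return list(reversed(src))


def toy_decode_step(memory, prefix):
    i = len(prefix) - 1                   # tokens generated so far
    return memory[i] if i < len(memory) else EOS_ID


print(greedy_decode([5, 6, 7], toy_encode, toy_decode_step))  # → [1, 7, 6, 5, 2]
```

In a real model, `decode_step` would run the decoder stack over the prefix and take the argmax of the output logits; with a single decoder layer, each of those per-token calls is cheap.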

| | Korean ➡️ English | English ➡️ Korean |
|---|---|---|
| BLEU | 35.82 | - |
| Example | 🔗 Translation Result | - |
| CPU Inference Time\* | 4.5 sentences / sec | - |

\*Tested on a 2.4 GHz 8-core Intel Core i9.
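The BLEU row above is produced by the repository's `metrics.py`, whose exact implementation (library, tokenization, smoothing) is not described here. As a from-scratch sketch of what corpus-level BLEU-4 measures, assuming one reference per sentence, whitespace tokenization, and no smoothing, the function below combines clipped n-gram precisions with a brevity penalty. Its scores should not be expected to reproduce 35.82 exactly.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count the n-grams of length n in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[str], references: List[str], max_n: int = 4) -> float:
    """Corpus-level BLEU on a 0-100 scale (single reference, whitespace tokens, no smoothing)."""
    matches = [0] * max_n   # clipped n-gram matches per order
    totals = [0] * max_n    # hypothesis n-grams per order
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    # Geometric mean of the modified n-gram precisions.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter than the references overall.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)


print(corpus_bleu(["the cat sat on"], ["the cat sat on the mat"]))
```

Every n-gram in the example hypothesis matches, so the score is reduced only by the brevity penalty, `exp(1 - 6/4)`. Because precisions are pooled over the whole corpus before the geometric mean, corpus BLEU is not the average of sentence-level BLEU scores.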

```python
from transformers import PreTrainedTokenizerFast

from model import DeepShallowConfig, DeepShallowModel  # defined in this repository's model.py

# source and target tokenizers
korean_tokenizer = PreTrainedTokenizerFast.from_pretrained("snoop2head/Deep-Shallow-Ko")
english_tokenizer = PreTrainedTokenizerFast.from_pretrained("snoop2head/Deep-Shallow-En")

# Korean(source) -> English(target) translation with pretrained model
config = DeepShallowConfig.from_pretrained("snoop2head/Deep-Shallow-Ko2En")
model = DeepShallowModel.from_pretrained("snoop2head/Deep-Shallow-Ko2En", config=config)

# English(source) -> Korean(target) translation with pretrained model
# (load only the direction you need; this reassigns `config` and `model`)
config = DeepShallowConfig.from_pretrained("snoop2head/Deep-Shallow-En2Ko")
model = DeepShallowModel.from_pretrained("snoop2head/Deep-Shallow-En2Ko", config=config)
```

Edit `config.yaml` to set the source and target languages and the number of inference samples to output.

```bash
python inference.py  # writes the translation output as a CSV file to the result folder
python metrics.py    # computes the BLEU score from the CSV output of inference.py
```

For custom training, change the arguments in `config.yaml`.

```bash
python train.py  # trains with the arguments in config.yaml
```

| Model Hyperparameter | Value |
|---|---|
| Encoder embedding dimension | 512 |
| Encoder feedforward network dimension | 2048 |
| Decoder embedding dimension | 512 |
| Decoder feedforward network dimension | 2048 |
| # Encoder Attention Heads | 8 |
| # Decoder Attention Heads | 8 |
| # Encoder Layers | 12 |
| # Decoder Layers | 1 |
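The table pairs a 12-layer encoder with a single decoder layer. As a rough sketch of where the weights sit, assuming the model uses `nn.Transformer`'s standard encoder and decoder layers with biases enabled, the functions below count the parameters of one `nn.TransformerEncoderLayer` and one `nn.TransformerDecoderLayer` at the dimensions above. Token embeddings, the output projection, and the final LayerNorms that `nn.Transformer` adds are deliberately excluded, so the totals are not the full model size.

```python
def mha_params(d: int) -> int:
    """Multi-head attention with bias: packed Q/K/V projection plus output projection."""
    return (3 * d * d + 3 * d) + (d * d + d)


def ffn_params(d: int, ff: int) -> int:
    """Position-wise feedforward network d -> ff -> d, with biases."""
    return (d * ff + ff) + (ff * d + d)


def layer_norm_params(d: int) -> int:
    """LayerNorm weight and bias."""
    return 2 * d


def encoder_layer_params(d: int, ff: int) -> int:
    # Self-attention + feedforward + 2 LayerNorms.
    return mha_params(d) + ffn_params(d, ff) + 2 * layer_norm_params(d)


def decoder_layer_params(d: int, ff: int) -> int:
    # Self-attention + cross-attention + feedforward + 3 LayerNorms.
    return 2 * mha_params(d) + ffn_params(d, ff) + 3 * layer_norm_params(d)


d_model, d_ff = 512, 2048
enc = 12 * encoder_layer_params(d_model, d_ff)
dec = 1 * decoder_layer_params(d_model, d_ff)
print(f"encoder stack: {enc:,}  decoder stack: {dec:,}")
# → encoder stack: 37,828,608  decoder stack: 4,204,032
```

Under these assumptions the encoder stack holds about nine times as many parameters as the decoder stack. Only the single decoder layer, however, runs once per generated token.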

| Training Hyperparameter | Value |
|---|---|
| Train Batch Size | 256 |
| # Train Steps | 190K |
| Optimizer | AdamW |
| Learning Rate | 5e-4 |
| Weight decay rate | 1e-2 |
| Learning Rate Scheduler | Cosine Annealing |
| # Warm-up Steps | 4700 |
| Dropout rate | 0.1 |
| AdamW betas | (0.9, 0.98) |
| Loss function | CrossEntropy |
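The table gives the peak learning rate, the warm-up length, and a cosine annealing scheduler, but not the exact shape of the schedule. The sketch below assumes linear warm-up from 0 to the peak, followed by a single cosine decay to 0 at the last training step. This is the same shape as Hugging Face's `get_cosine_schedule_with_warmup`. It computes the learning rate at each step:

```python
import math

PEAK_LR = 5e-4         # Learning Rate
WARMUP_STEPS = 4_700   # Warm-up Steps
TOTAL_STEPS = 190_000  # Train Steps (190K)


def lr_at(step: int) -> float:
    """Assumed schedule: linear warm-up to PEAK_LR, then cosine annealing to 0 at TOTAL_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / max(1, TOTAL_STEPS - WARMUP_STEPS)
    progress = min(progress, 1.0)  # stay at 0 after the final step
    return PEAK_LR * 0.5 * (1.0 + math.cos(math.pi * progress))


for s in (0, 2_350, 4_700, 97_350, 190_000):
    print(s, lr_at(s))
```

With PyTorch, the multiplier `lr_at(step) / PEAK_LR` could be passed to `torch.optim.lr_scheduler.LambdaLR` on an AdamW optimizer created with `lr=5e-4`.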

The datasets, consisting of Korean-English sentence pairs, were provided by AIHub.

| Dataset Information | Value |
|---|---|
| Train set size (# pairs) | 4.8 million |
| Valid set size (# pairs) | 0.1 million |
| Test set size (# pairs) | 0.1 million |
| Tokenizer | WordPiece |
| Source Vocab Size | 10000 |
| Target Vocab Size | 10000 |
| Max token length | 64 |
| Positional encoding maximum length | 64 |
| Source Language | Korean (ko) |
| Target Language | English (en) |
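Both the maximum token length and the positional-encoding length are 64, so every tokenized sentence must fit in 64 positions. Below is a minimal sketch of truncating and padding token ids to that length while building the matching attention mask. The pad id of 0 is a hypothetical placeholder; in practice it would come from the tokenizer's `pad_token_id`.

```python
from typing import List, Sequence, Tuple

MAX_LEN = 64  # max token length / positional encoding maximum length
PAD_ID = 0    # hypothetical; use tokenizer.pad_token_id in practice


def pad_or_truncate(
    ids: Sequence[int], max_len: int = MAX_LEN, pad_id: int = PAD_ID
) -> Tuple[List[int], List[int]]:
    """Return (ids, attention_mask), both exactly max_len long; mask is 1 for real tokens."""
    ids = list(ids[:max_len])                              # drop tokens past max_len
    mask = [1] * len(ids) + [0] * (max_len - len(ids))     # 1 = real token, 0 = padding
    ids += [pad_id] * (max_len - len(ids))                 # right-pad to max_len
    return ids, mask


print(pad_or_truncate([5, 6, 7], max_len=5))  # → ([5, 6, 7, 0, 0], [1, 1, 1, 0, 0])
```

`PreTrainedTokenizerFast` can do the same when called with `padding="max_length", truncation=True, max_length=64`. Note that `nn.Transformer`'s boolean `src_key_padding_mask` uses the opposite convention: `True` marks padding positions to be ignored.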

Sample rows from the parallel corpus of source-language and target-language pairs:

| Index | source_language | target_language |
|---|---|---|
| 1 | 귀여움이 주는 위로가 언제까지 계속될 수는 없다. | Comfort from being cute can't last forever. |
| 2 | 즉, 변환 처리부(114A)는 백색-포화 영역에 해당하는 영역에서의 불필요한 휘도 ... | In other words, the conversion processor 114A ... |
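The on-disk format that the repository's data loader expects is not specified here. The sketch below assumes the corpus is stored as a UTF-8 CSV with one pair per row and hypothetical column names `source_language` and `target_language` (passed as parameters so they can be changed). Under those assumptions, the pairs can be read with Python's standard `csv` module:

```python
import csv
import io
from typing import Iterator, TextIO, Tuple


def read_pairs(
    f: TextIO, src_col: str = "source_language", tgt_col: str = "target_language"
) -> Iterator[Tuple[str, str]]:
    """Yield (source, target) sentence pairs from a CSV with one pair per row."""
    for row in csv.DictReader(f):
        yield row[src_col].strip(), row[tgt_col].strip()


sample = io.StringIO(
    "source_language,target_language\n"
    "귀여움이 주는 위로가 언제까지 계속될 수는 없다.,Comfort from being cute can't last forever.\n"
)
print(list(read_pairs(sample)))
```

For a real file, open it with `open(path, newline="", encoding="utf-8")` so that the `csv` module handles quoted fields and Korean text correctly.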

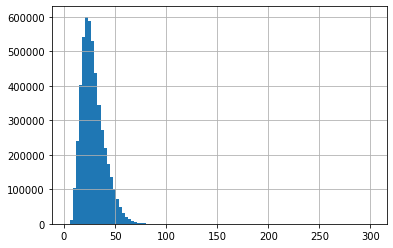

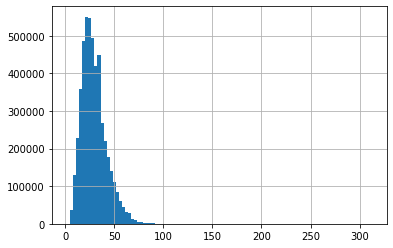

Maximum Token Length was selected as 62 which is 95% percentile of the target dataset.

| Source Language(Korean) Token Length Distribution | Target Language(English) Token Length Distribution |

|---|---|

|

|
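The text does not say which percentile definition was used to arrive at 62. The sketch below uses the nearest-rank method, which is one of several common definitions; `numpy.percentile`, for example, interpolates by default and can return slightly different values. It is applied here to a made-up list of per-sentence token counts:

```python
import math
from typing import Sequence


def percentile_nearest_rank(values: Sequence[int], p: float) -> int:
    """Nearest-rank percentile: the smallest value such that at least p% of values are <= it."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


token_lengths = [12, 18, 25, 31, 40, 47, 55, 58, 61, 70]  # made-up example lengths
print(percentile_nearest_rank(token_lengths, 95))  # → 70
```

With the real data, `values` would hold the token count of each target sentence, for example `len(english_tokenizer(s)["input_ids"])` for each sentence `s`.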

- Knowledge distillation is yet to be applied.
- Package the seq2seq Transformer model for the Hugging Face Hub.
- Make the machine translation model class similar to `MarianMTModel` so that it can be trained with the Hugging Face `Trainer`.
- Get the BLEU score on the test set.
- Compare BLEU scores against Google Translate, Papago, and Pororo on the test set (or on an out-of-domain test set).
- Enable batch inference on GPU.
- For tasks such as Named Entity Recognition or Relation Extraction, keep entity markers wrapped around their entities after translation.
- Inference with beam search.

```bibtex
@article{Kasai2020DeepES,
  title={Deep Encoder, Shallow Decoder: Reevaluating the Speed-Quality Tradeoff in Machine Translation},
  author={Jungo Kasai and Nikolaos Pappas and Hao Peng and J. Cross and Noah A. Smith},
  journal={ArXiv},
  year={2020},
  volume={abs/2006.10369}
}

@inproceedings{opennmt,
  author    = {Guillaume Klein and Yoon Kim and Yuntian Deng and Jean Senellart and Alexander M. Rush},
  title     = {OpenNMT: Open-Source Toolkit for Neural Machine Translation},
  booktitle = {Proc. ACL},
  year      = {2017},
  url       = {https://doi.org/10.18653/v1/P17-4012},
  doi       = {10.18653/v1/P17-4012}
}
```