Deployments coming soon!

- FAISS - Vector database

- Google Colab - Development and inference using a T4 GPU

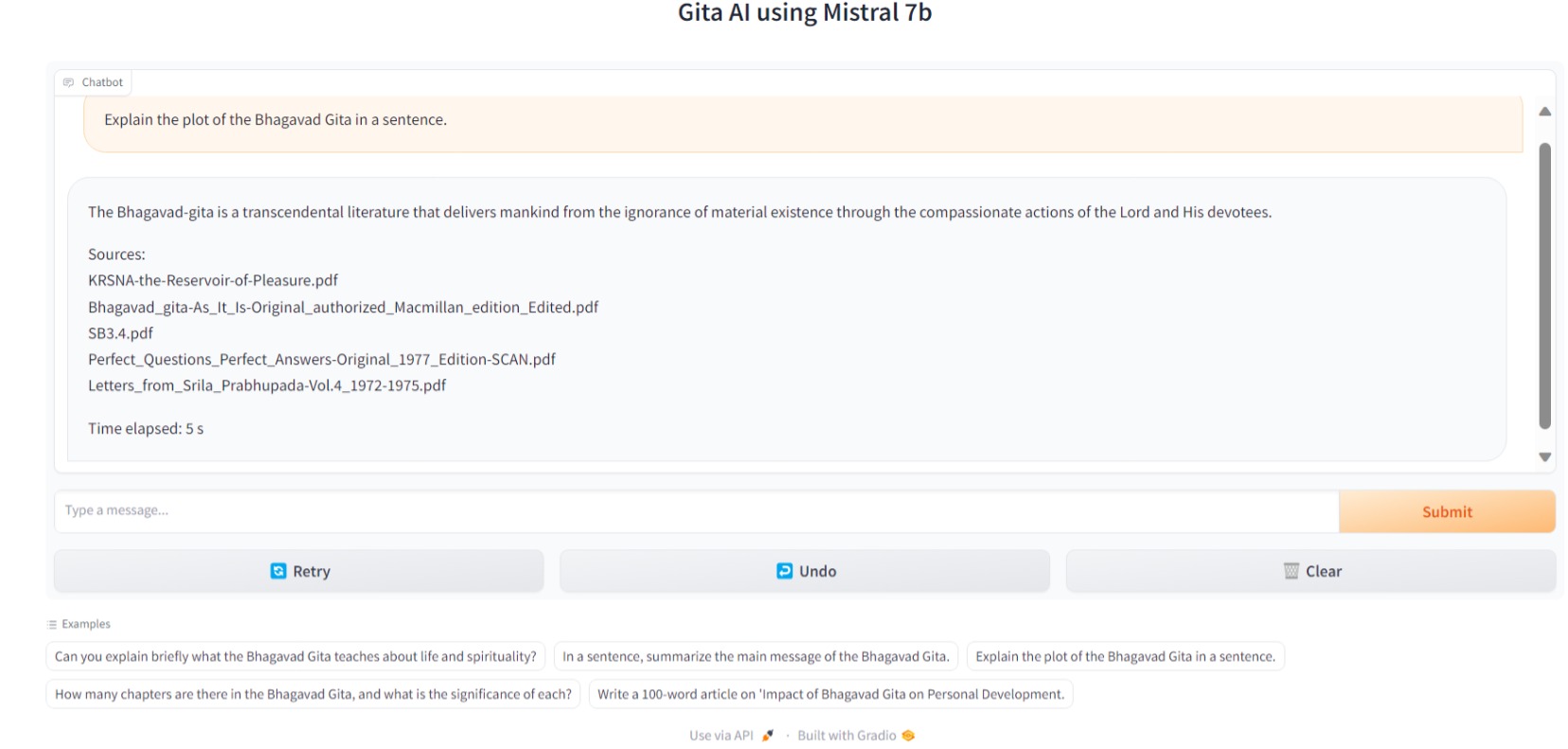

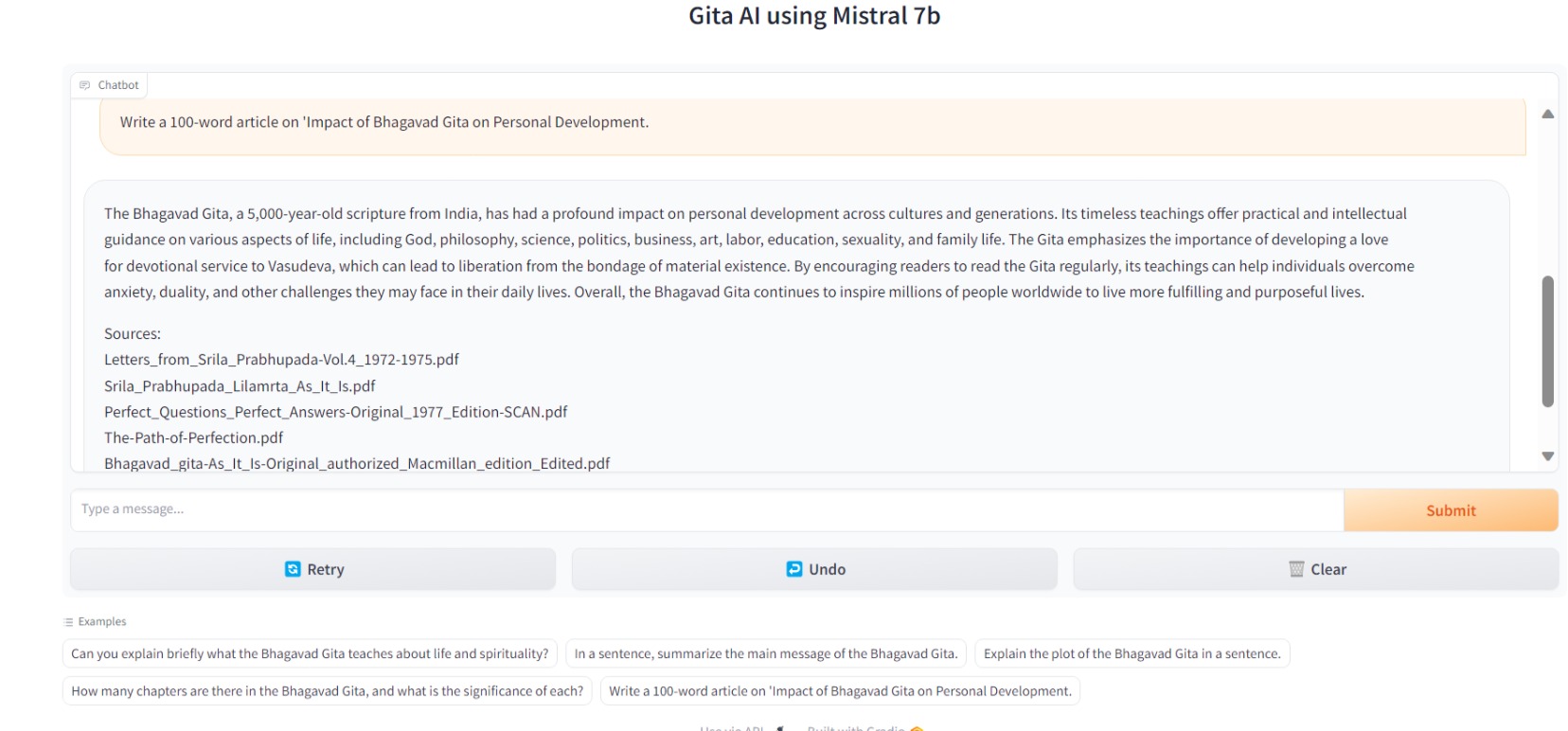

- Gradio - Web UI for inference on the free-tier Colab T4 GPU

- HuggingFace - Transformers, Sentence Transformers (for creating vector embeddings), quantized Mistral7b model

- LangChain - Retrieval-augmented generation (RAG) using the RetrievalQA chain
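The stack above combines into a retrieve-then-generate loop. As a rough, library-free sketch of what a RetrievalQA chain does with its default "stuff" chain type (the README does not say which chain type is used, so this is an assumption), the code below ranks stored chunks by cosine similarity to a query vector and pastes the top k into a single prompt. The toy 3-dimensional vectors, the `retrieve`/`build_prompt` helpers and the prompt template are hypothetical stand-ins; in the project itself the embeddings come from Sentence Transformers, the search from FAISS, and the orchestration from LangChain.

```python
# Conceptual sketch of a "stuff"-style RetrievalQA step: retrieve the k most
# similar chunks, then stuff them into one prompt for the LLM.
# Toy vectors, helper names and the template are hypothetical placeholders.
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query_vec: Sequence[float],
             docs: List[Tuple[str, Sequence[float]]],
             k: int = 2) -> List[str]:
    """Return the texts of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Hypothetical prompt template; the notebook's actual template may differ.
TEMPLATE = (
    "Use the following context to answer the question.\n"
    "Context:\n{context}\n\n"
    "Question: {question}\nAnswer:"
)


def build_prompt(question: str, contexts: List[str]) -> str:
    """'Stuff' all retrieved chunks into a single prompt string."""
    return TEMPLATE.format(context="\n\n".join(contexts), question=question)


if __name__ == "__main__":
    docs = [
        ("chunk about duty", [0.9, 0.1, 0.0]),
        ("chunk about devotion", [0.1, 0.9, 0.0]),
        ("chunk about transliteration", [0.0, 0.1, 0.9]),
    ]
    top = retrieve([1.0, 0.2, 0.0], docs, k=2)
    print(build_prompt("What is said about duty?", top))
```

Stuffing keeps the chain to a single LLM call, at the cost that all retrieved chunks must fit in the model's context window together with the question.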

| Colab | Info |
|---|---|
| Creating FAISS vector database from Kaggle dataset | |
| Mistral7b (4bit) RAG Inference of Bhagavad Gita using Gradio | |

- Store the vector database in your Google Drive at the path "vectorstore/db_faiss". The db_faiss folder contains two files: index.faiss and index.pkl.

- Mount Google Drive to load the vector embeddings for inference in the "Mistral7b (4bit) RAG Inference of Bhagavad Gita using Gradio" notebook.
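A small pre-flight check can save a confusing load error. The sketch below assumes the store was written with LangChain's `FAISS.save_local()`, which by default produces `index.faiss` and `index.pkl`, and reports which of the two files are missing. The Drive path is an assumption: after `drive.mount("/content/drive")` in Colab, My Drive appears under `/content/drive/MyDrive`, so adjust the folder to wherever you placed "vectorstore/db_faiss". The helper name `missing_store_files` is hypothetical.

```python
# Sanity-check that the FAISS store folder holds the two files written by
# LangChain's FAISS.save_local() (index.faiss and index.pkl) before loading it.
# The default Drive location below is an assumption about where the store lives.
from pathlib import Path
from typing import List

EXPECTED_FILES = ("index.faiss", "index.pkl")


def missing_store_files(db_path: str) -> List[str]:
    """Return the expected file names absent from db_path (an empty list means OK)."""
    folder = Path(db_path)
    return [name for name in EXPECTED_FILES if not (folder / name).is_file()]


if __name__ == "__main__":
    # In Colab, after drive.mount("/content/drive"), My Drive is under /content/drive/MyDrive.
    db_path = "/content/drive/MyDrive/vectorstore/db_faiss"  # hypothetical location
    missing = missing_store_files(db_path)
    if missing:
        print(f"Missing in {db_path}: {', '.join(missing)}")
    else:
        print("Vector store looks complete; it can be loaded with FAISS.load_local().")
```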

- Uses a bitsandbytes configuration (load_in_4bit) for 4-bit quantization. There is some loss in precision, but performance is almost on par with the base Mistral7b model.

- Uses a HuggingFace pipeline for the "text-generation" task.

- Uses AutoTokenizer and AutoModelForCausalLM from "transformers" to tokenize input and load the Mistral7b model from the HuggingFace Hub.
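In transformers, 4-bit loading is usually requested with `BitsAndBytesConfig(load_in_4bit=True)` passed as `quantization_config` to `AutoModelForCausalLM.from_pretrained`. To make the "loss in precision" concrete, the sketch below does a simplified symmetric absmax int4 round trip on a few made-up weights. This is not the bitsandbytes algorithm (which uses FP4 or NF4 data types with block-wise scaling); it only shows that each weight is snapped to one of 15 levels, so the reconstruction error per weight is at most half a quantization step. The function names are hypothetical.

```python
# Simplified illustration of why 4-bit weights lose a little precision.
# Plain symmetric "absmax" int4 quantization, NOT the FP4/NF4 scheme that
# bitsandbytes uses; it only demonstrates the round-trip error idea.
from typing import List, Tuple

QMAX = 7  # signed 4-bit ints span [-8, 7]; use +/-7 for a symmetric range


def quantize_int4(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-7, 7] using one shared absmax scale."""
    absmax = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = absmax / QMAX
    codes = [max(-QMAX, min(QMAX, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int4(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the 4-bit codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.12, -0.53, 0.98, -0.07, 0.44]  # made-up example weights
    codes, s = quantize_int4(w)
    w_hat = dequantize_int4(codes, s)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print("codes:", codes)
    print("max abs error:", max_err, "bound (scale/2):", s / 2)
```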

- Dataset: see the Kaggle Dataset.

- It contains 150 books with 37,486 pages in total.

- Embeddings are created with sentence-transformers/all-MiniLM-L6-v2 from HuggingFace.

- The vector database is stored in Google Drive.
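The README does not state how the pages were split before embedding. Splitting is commonly needed because all-MiniLM-L6-v2, per its model card, truncates inputs longer than 256 word pieces. The sketch below is a hypothetical fixed-window character splitter with overlap; it is simpler than LangChain's `RecursiveCharacterTextSplitter`, and the `chunk_size`/`chunk_overlap` values are illustrative, not the ones used in the notebook.

```python
# Hypothetical chunking step: the README does not say how pages were split,
# so chunk_size and chunk_overlap below are illustrative, not the notebook's values.
from typing import List


def chunk_text(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> List[str]:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    page = "Sample page text. " * 30  # placeholder for one page of a book
    pieces = chunk_text(page, chunk_size=200, chunk_overlap=40)
    print(len(pieces), "chunks; first chunk starts with:", repr(pieces[0][:30]))
```

The overlap repeats the tail of each chunk at the head of the next, so text near a boundary appears in two consecutive chunks, which reduces the chance that a retrieved chunk is cut mid-thought.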