Data extraction with ML and LLM

Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images. It handles forms, invoices, receipts, and other unstructured data sources. Sparrow is built on a modular architecture of independent services and pipelines. One of its key features is a pluggable architecture: you can integrate and run data extraction pipelines built with tools and frameworks such as LlamaIndex, Haystack, or Unstructured. Sparrow can run local LLM data extraction pipelines through Ollama or Apple MLX. Sparrow also provides an API that processes your data and transforms it into structured output, ready to be integrated into custom workflows.
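One way to picture the pluggable idea is a registry that maps an agent name to an implementation, with llamaindex as the fallback. The sketch below is purely illustrative: the Agent protocol, the register decorator, the ingest/run method names, and both stub classes are hypothetical and are not taken from Sparrow's code. Real agents would call LlamaIndex or Haystack where the stubs just return strings.

```python
from typing import Callable, Dict, Optional, Protocol, Type


class Agent(Protocol):
    """Hypothetical interface that every pluggable agent implements."""

    def ingest(self, data_dir: str) -> str: ...

    def run(self, fields: str, types: str) -> str: ...


# Registry mapping an agent name (as selected with --agent) to its class.
AGENTS: Dict[str, Type] = {}
DEFAULT_AGENT = "llamaindex"


def register(name: str) -> Callable[[Type], Type]:
    """Class decorator that adds an agent implementation to the registry."""
    def decorator(cls: Type) -> Type:
        AGENTS[name] = cls
        return cls
    return decorator


@register("llamaindex")
class LlamaIndexAgent:
    # Stub: a real agent would build and query a LlamaIndex pipeline here.
    def ingest(self, data_dir: str) -> str:
        return f"llamaindex ingested {data_dir}"

    def run(self, fields: str, types: str) -> str:
        return f"llamaindex extracting {fields}"


@register("haystack")
class HaystackAgent:
    # Stub: a real agent would build and query a Haystack pipeline here.
    def ingest(self, data_dir: str) -> str:
        return f"haystack ingested {data_dir}"

    def run(self, fields: str, types: str) -> str:
        return f"haystack extracting {fields}"


def get_agent(name: Optional[str] = None) -> Agent:
    """Return an instance of the named agent, falling back to the default."""
    key = name or DEFAULT_AGENT
    if key not in AGENTS:
        raise ValueError(f"unknown agent {key!r}; available: {sorted(AGENTS)}")
    return AGENTS[key]()
```

In this design, adding another framework means registering one more class, and callers keep choosing it by name, much like ./sparrow.sh ingest --agent haystack does on the command line.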

Sparrow Agents - with Sparrow you can build independent LLM agents and invoke them from your system through the API.

- sparrow-data-ocr - OCR service, providing optical character recognition as part of Sparrow.

- sparrow-ml-llm - LLM RAG pipeline, Sparrow service for data extraction and document processing.

- sparrow-ui - Dashboard UI for LLM RAG pipeline.

Sparrow implementation with Donut ML model - sparrow-donut

- Install a local Weaviate DB with Docker:

docker compose up -d

- Install the requirements:

pip install -r requirements.txt

- Install Ollama and pull the LLM model specified in config.yml

Follow the install steps outlined here:

- Sparrow OCR services install steps
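Before ingesting, it can help to confirm that Weaviate and Ollama are actually reachable. A minimal preflight sketch, assuming Weaviate is exposed on its usual REST port 8080 (check the port mapping in your docker-compose file) and Ollama runs on its default port 11434. It uses Weaviate's /v1/.well-known/ready endpoint and Ollama's /api/tags model list; the helper names are hypothetical and not part of Sparrow.

```python
import json
import urllib.request

# Assumed endpoints: Weaviate's REST port 8080 (check your docker-compose
# port mapping) and Ollama's default port 11434.
WEAVIATE_READY_URL = "http://127.0.0.1:8080/v1/.well-known/ready"
OLLAMA_TAGS_URL = "http://127.0.0.1:11434/api/tags"


def is_ready(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET on url answers with HTTP 200, else False."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # covers URLError, HTTPError (e.g. 503) and timeouts
        return False


def model_is_pulled(tags: dict, model: str) -> bool:
    """Look for a model name in an Ollama /api/tags payload.

    Ollama reports names with a tag (e.g. "<name>:latest"), so a bare
    model name is compared as "<model>:latest".
    """
    wanted = model if ":" in model else f"{model}:latest"
    names = {m.get("name", "") for m in tags.get("models", [])}
    return wanted in names


def ollama_has_model(model: str, timeout: float = 2.0) -> bool:
    """Fetch the local model list from Ollama and check for model."""
    try:
        with urllib.request.urlopen(OLLAMA_TAGS_URL, timeout=timeout) as resp:
            return model_is_pulled(json.load(resp), model)
    except (OSError, ValueError):  # unreachable server or malformed JSON
        return False


if __name__ == "__main__":
    import sys

    print("Weaviate ready:", is_ready(WEAVIATE_READY_URL))
    # Pass the model name from config.yml as the first argument.
    if len(sys.argv) > 1:
        print(f"Ollama has {sys.argv[1]}:", ollama_has_model(sys.argv[1]))
```

Run it with the model name from config.yml as its only argument; both checks return False instead of raising, so the script only reports what is missing.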

- Copy text PDF files to the data folder, or use the sample data provided in the data folder.

- Run the script to convert text to vector embeddings and save them in Weaviate. By default, it uses the LlamaIndex agent:

./sparrow.sh ingest

You can specify agent name explicitly, for example:

./sparrow.sh ingest --agent haystack

./sparrow.sh ingest --agent llamaindex

- Run the script to process data with LLM RAG and return the answer. By default, it uses the llamaindex agent. You can specify other agents (see the ingest example), such as haystack:

./sparrow.sh "invoice_number, invoice_date, client_name, client_address, client_tax_id, seller_name, seller_address, seller_tax_id, iban, names_of_invoice_items, gross_worth_of_invoice_items, total_gross_worth" \
  "int, str, str, str, str, str, str, str, str, List[str], List[float], str"
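The two quoted arguments appear to pair up by position: the n-th type describes the n-th field, so both lists must have the same length. A minimal sketch of that pairing, plus a check of a returned answer against it, is below. parse_schema and mismatches are hypothetical helpers, not part of Sparrow, and only the type names used in this README are handled.

```python
from typing import Any, Callable, Dict, List

# Checks for the scalar type names used in this README (an assumption:
# any other type name is reported as a mismatch). Ints pass as floats.
_SCALAR_CHECKS: Dict[str, Callable[[Any], bool]] = {
    "int": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "float": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "str": lambda v: isinstance(v, str),
}


def parse_schema(fields: str, types: str) -> Dict[str, str]:
    """Pair comma-separated field names with type names by position.

    Splitting on commas means a type such as Dict[str, int] is not supported.
    """
    names = [f.strip() for f in fields.split(",")]
    kinds = [t.strip() for t in types.split(",")]
    if len(names) != len(kinds):
        raise ValueError(f"{len(names)} fields but {len(kinds)} types")
    return dict(zip(names, kinds))


def _matches(value: Any, kind: str) -> bool:
    """Check one value against a type name such as "int" or "List[float]"."""
    if kind.startswith("List[") and kind.endswith("]"):
        inner = kind[len("List["):-1]
        return isinstance(value, list) and all(_matches(v, inner) for v in value)
    check = _SCALAR_CHECKS.get(kind)
    return check is not None and check(value)


def mismatches(answer: Dict[str, Any], schema: Dict[str, str]) -> List[str]:
    """Return fields that are missing from answer or have the wrong type."""
    return [
        name
        for name, kind in schema.items()
        if name not in answer or not _matches(answer[name], kind)
    ]
```

With the full 12-field schema from the command above, every value in the answer below matches its declared type. Note that total_gross_worth is declared as str, so "$212,09" passes as text; turning it into a number would be a separate parsing step.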

Answer:

{
  "invoice_number": 61356291,
  "invoice_date": "09/06/2012",
  "client_name": "Rodriguez-Stevens",
  "client_address": "2280 Angela Plain, Hortonshire, MS 93248",
  "client_tax_id": "939-98-8477",
  "seller_name": "Chapman, Kim and Green",
  "seller_address": "64731 James Branch, Smithmouth, NC 26872",
  "seller_tax_id": "949-84-9105",
  "iban": "GB50ACIE59715038217063",
  "names_of_invoice_items": [
    "Wine Glasses Goblets Pair Clear Glass",
    "With Hooks Stemware Storage Multiple Uses Iron Wine Rack Hanging Glass",
    "Replacement Corkscrew Parts Spiral Worm Wine Opener Bottle Houdini",
    "HOME ESSENTIALS GRADIENT STEMLESS WINE GLASSES SET OF 4 20 FL OZ (591 ml) NEW"
  ],
  "gross_worth_of_invoice_items": [
    66.0,
    123.55,
    8.25,
    14.29
  ],
  "total_gross_worth": "$212,09"
}

FastAPI Endpoint for Local LLM RAG

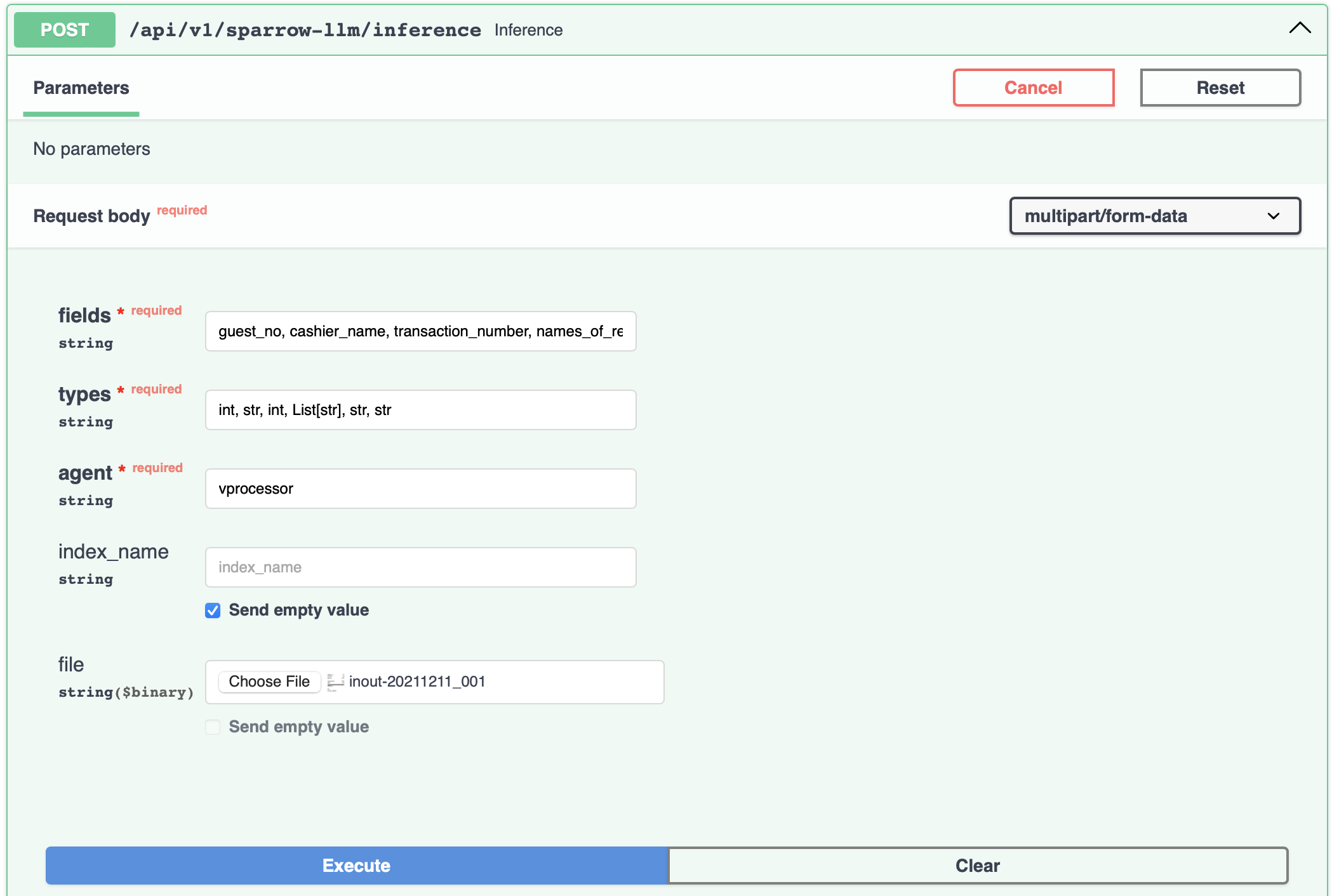

Sparrow lets you run a local LLM RAG pipeline as an API using FastAPI, which provides a convenient way to interact with Sparrow services. You can pass the name of the agent plugin to use for inference; by default, the llamaindex agent is used.

To set this up:

- Start the Endpoint

Launch the endpoint by executing the following command in your terminal:

python api.py

- Access the Endpoint Documentation

You can view detailed documentation for the API by navigating to:

http://127.0.0.1:8000/api/v1/sparrow-llm/docs

For visual reference, the screenshot at the start of this section shows the FastAPI endpoint.

Example API call with curl:

curl -X 'POST' \
  'http://127.0.0.1:8000/api/v1/sparrow-llm/inference' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
  "fields": "invoice_number",
  "types": "int",
  "agent": "llamaindex"
}'
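To call the endpoint from Python instead of curl, the request above can be reproduced with the standard library. A minimal sketch, assuming the server from python api.py is running locally on port 8000 and replies with a JSON body. build_inference_request and run_inference are hypothetical helper names, and the 300-second timeout is an arbitrary allowance for slow local LLM inference.

```python
import json
import urllib.request

# Default address used in this README; adjust if api.py runs elsewhere.
INFERENCE_URL = "http://127.0.0.1:8000/api/v1/sparrow-llm/inference"


def build_inference_request(
    fields: str, types: str, agent: str = "llamaindex"
) -> urllib.request.Request:
    """Build the same POST request as the curl example, without sending it."""
    body = json.dumps({"fields": fields, "types": types, "agent": agent}).encode("utf-8")
    return urllib.request.Request(
        INFERENCE_URL,
        data=body,
        method="POST",
        headers={"accept": "application/json", "Content-Type": "application/json"},
    )


def run_inference(
    fields: str, types: str, agent: str = "llamaindex", timeout: float = 300.0
):
    """Send the request and return the decoded JSON response as-is."""
    request = build_inference_request(fields, types, agent)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)
```

For example, run_inference("invoice_number, invoice_date", "int, str", agent="haystack") sends the same kind of request with the haystack agent. The sketch does not assume anything about the shape of the response and simply returns the parsed JSON.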

Follow the steps outlined here:

- Sparrow OCR services usage steps

Request:

./sparrow.sh "invoice_number, invoice_date, client_name, client_address, client_tax_id, seller_name, seller_address, seller_tax_id, iban, names_of_invoice_items, gross_worth_of_invoice_items, total_gross_worth" \
  "int, str, str, str, str, str, str, str, str, List[str], List[float], str"

Response:

Sparrow is available for free commercial use. This offer applies to organizations with gross revenue below $5 million USD in the past 12 months.

For businesses exceeding this revenue limit and seeking to bypass GPL license restrictions for inference, please contact me at [email protected] to discuss dual licensing options. The same applies if you are looking for custom workflows to automate business processes, consulting options, and support/maintenance options.

Licensed under the Apache License, Version 2.0. Copyright 2020-2024 Katana ML, Andrej Baranovskij. Copy of the license.