A toolbox for vocal computing built with Pion, whisper.cpp, and Coqui TTS. Build your own personal, self-hosted J.A.R.V.I.S powered by WebRTC


Project S.A.T.U.R.D.A.Y is a toolbox for vocal computing. It provides tools to build elegant vocal interfaces to modern LLMs. The goal of this project is to foster a community of like-minded individuals who want to bring forth the technology we have been promised in sci-fi movies for decades. It aims to be highly modular and flexible while staying decoupled from specific AI models, which allows for seamless upgrades when new AI technology is released.

Project S.A.T.U.R.D.A.Y is composed of tools. A tool is an abstraction that encapsulates a specific part of the vocal computing stack. There are 2 main constructs that comprise a tool:

- Engine - An engine encapsulates the domain-specific functionality of a tool. This logic should remain the same regardless of the inference backend used. For example, in the STT tool the engine contains the Voice Activity Detection algorithm along with some custom buffering logic. This allows the backend to be changed easily without rewriting the engine.

- Backend - A backend is what actually runs the AI inference. It is usually a thin wrapper, but it allows for more flexibility and easier upgrades. A backend can also be written to talk to an HTTP server, which makes it easy to interoperate with other languages.

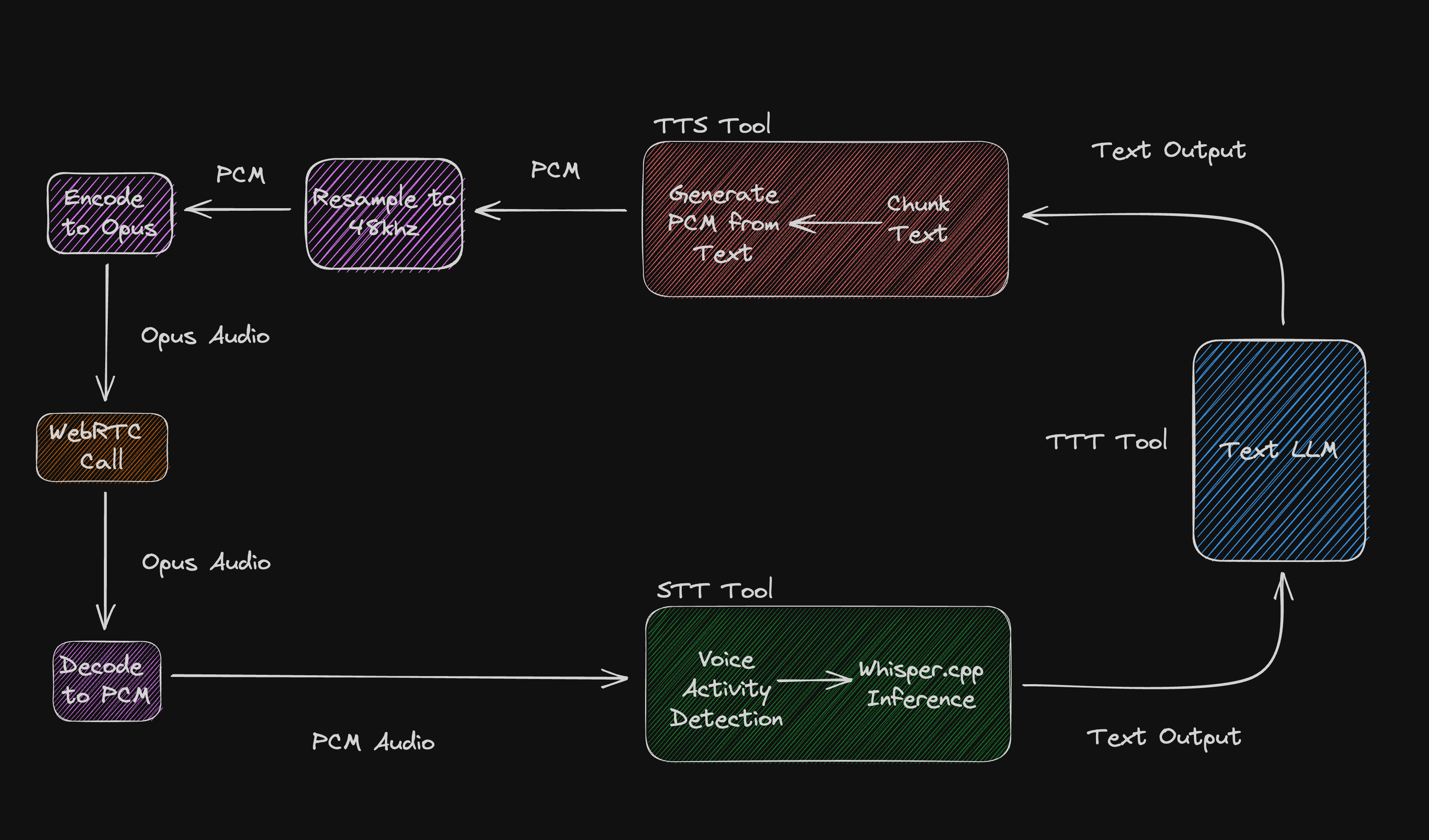

This project contains 3 main kinds of tools: STT, TTT and TTS.

STT tools are the ears of the system and perform Speech-to-Text inference on incoming audio.

TTT tools are the brains of the system and perform Text-to-Text inference once the audio has been transformed into text.

TTS tools are the mouth of the system and perform Text-to-Speech inference on the text provided by the TTT tool.
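The ears → brain → mouth flow can be sketched as three small Go interfaces chained together. The interface and method names and the fake implementations are hypothetical stand-ins so the sketch runs without any models; real tools would wrap actual models (for example whisper.cpp for STT and Coqui TTS for TTS in the demo).

```go
package main

import (
	"fmt"
	"strings"
)

// Hypothetical interfaces for the three tool kinds; not the project's actual API.
type STT interface {
	Transcribe(audio []float32) (string, error) // ears
}

type TTT interface {
	Generate(prompt string) (string, error) // brain
}

type TTS interface {
	Synthesize(text string) ([]byte, error) // mouth
}

// Pipeline chains ears -> brain -> mouth.
type Pipeline struct {
	Ears  STT
	Brain TTT
	Mouth TTS
}

// Handle turns one utterance of audio into synthesized speech.
func (p Pipeline) Handle(audio []float32) ([]byte, error) {
	text, err := p.Ears.Transcribe(audio)
	if err != nil {
		return nil, fmt.Errorf("stt: %w", err)
	}
	reply, err := p.Brain.Generate(text)
	if err != nil {
		return nil, fmt.Errorf("ttt: %w", err)
	}
	speech, err := p.Mouth.Synthesize(reply)
	if err != nil {
		return nil, fmt.Errorf("tts: %w", err)
	}
	return speech, nil
}

// Fake implementations so the sketch runs without any models.
type fakeSTT struct{}

func (fakeSTT) Transcribe(audio []float32) (string, error) {
	return fmt.Sprintf("heard %d samples", len(audio)), nil
}

type fakeTTT struct{}

func (fakeTTT) Generate(prompt string) (string, error) {
	return "You said: " + prompt, nil
}

type fakeTTS struct{}

func (fakeTTS) Synthesize(text string) ([]byte, error) {
	// A real TTS tool returns audio; the upper-cased text is only a placeholder.
	return []byte(strings.ToUpper(text)), nil
}

func main() {
	p := Pipeline{Ears: fakeSTT{}, Brain: fakeTTT{}, Mouth: fakeTTS{}}
	out, err := p.Handle(make([]float32, 16000))
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(string(out))
}
```

Wrapping each stage's error with its tool name makes it easy to tell which part of the stack failed.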

Here is a diagram of how the main demo currently works.

The demo that comes in this repo is your own personal, self-hosted J.A.R.V.I.S-like assistant.

DISCLAIMER: I have only tested this on M1 Pro and M1 Max processors. The demo does a lot of local inference, so it requires quite a bit of processing power. Your mileage may vary on other operating systems and hardware. If you run into problems, please open an issue.

Running the demo requires Golang, Python, Make and a C compiler.

There are 3 processes that need to be running for the demo:

- RTC - The RTC server hosts the web page and a WebRTC server. Your browser connects to the WebRTC server when you load the page, and the client connects to it as well in order to listen to your audio.

- Client - The Client is where all of the magic happens. When it starts, it joins the RTC server and begins listening to your audio. When you start speaking, it buffers the incoming audio until you stop. Once you stop speaking, it runs STT inference on that audio, passes the transcript to the TTT tool to generate a response, and then passes that response to the TTS tool to turn it into speech. The client needs 2 system libraries: pkg-config and opus. On macOS these can be installed with brew:

      brew install opus pkg-config

- TTS - The TTS server is where text from the TTT tool is transformed into speech. In the demo this uses Coqui TTS. This tool needs 2 system libraries: mecab and espeak. On macOS they can be installed with brew:

      brew install mecab espeak

NOTE: For now, the order in which you start the processes matters. You MUST start the RTC server and the TTS server BEFORE you start the client.
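The client's buffering behavior (collect audio while you speak, hand it to STT once you stop) can be sketched as a small Go segmenter. This is an assumption-laden stand-in: it uses a simple RMS energy threshold as the voice activity detector, which is not necessarily the algorithm the STT engine uses, and the type, field and method names are hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

// Segmenter is a hypothetical buffering stage: it accumulates frames once
// speech is detected and emits the utterance after enough trailing silence.
// The RMS-energy threshold is a simple stand-in VAD, not the project's algorithm.
type Segmenter struct {
	Threshold     float64 // RMS level at or above which a frame counts as speech
	SilenceFrames int     // consecutive quiet frames that end an utterance

	buf      []float32 // audio collected for the current utterance
	speaking bool      // true once speech has started
	quiet    int       // consecutive quiet frames seen while speaking
}

// rms returns the root-mean-square amplitude of a frame.
func rms(frame []float32) float64 {
	if len(frame) == 0 {
		return 0
	}
	var sum float64
	for _, s := range frame {
		sum += float64(s) * float64(s)
	}
	return math.Sqrt(sum / float64(len(frame)))
}

// Push feeds one frame and returns a complete utterance when one ends, else nil.
func (s *Segmenter) Push(frame []float32) []float32 {
	voiced := rms(frame) >= s.Threshold
	if !s.speaking {
		if !voiced {
			return nil // still waiting for speech to start
		}
		s.speaking = true
	}
	s.buf = append(s.buf, frame...)
	if voiced {
		s.quiet = 0
		return nil
	}
	s.quiet++
	if s.quiet < s.SilenceFrames {
		return nil
	}
	// Enough silence: hand off the utterance and reset for the next one.
	utterance := s.buf
	s.buf, s.speaking, s.quiet = nil, false, 0
	return utterance
}

func main() {
	loud := []float32{0.5, -0.5, 0.5, -0.5}
	silent := []float32{0, 0, 0, 0}
	seg := &Segmenter{Threshold: 0.1, SilenceFrames: 2}

	frames := [][]float32{silent, loud, loud, silent, silent, silent}
	for i, f := range frames {
		if u := seg.Push(f); u != nil {
			fmt.Printf("frame %d: utterance of %d samples -> run STT\n", i, len(u))
		}
	}
}
```

The threshold and silence window here are arbitrary example values; a real implementation would likely also cap the buffer size and ignore very short bursts of noise.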

To start the RTC server, run the following from the root of the project:

    make rtc

FIRST TIME SETUP: The first time you run the TTS server, you will need to install its dependencies. Consider using a virtual environment for this.

    cd tts/servers/coqui-tts
    pip install -r requirements.txt

Then start the TTS server from the root of the project:

    make tts

The client requires whisper.cpp and cgo, but the make script should take care of this for you. To start the client, run the following from the root of the project:

    make client

The main item on the roadmap right now is getting TTT inference to run locally with something like llama.cpp. At the time of publishing, I do not have a good internet connection and cannot download the model weights needed to get this working.

The second largest item on my roadmap is continuing to improve the setup and configuration process.

The final thing on my roadmap is to continue building applications with S.A.T.U.R.D.A.Y. I hope more people will build along with me, as this is the #1 way to improve the project and uncover new features that need to be added.

Join the Discord to stay up to date!

This project is built with the following open source packages:

I am far from perfect, and there are bound to be bugs and things I've overlooked in the installation process. Please open issues and feel free to reach out if anything is unclear. We also have a Discord.

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Feature Branch:

      git checkout -b feature/AmazingFeature

- Commit your Changes:

      git commit -m 'Add some AmazingFeature'

- Push to the Branch:

      git push origin feature/AmazingFeature

- Open a Pull Request

Distributed under the MIT License.

If you like the project and want to support it financially, feel free to buy me a coffee.

GitHub @GRVYDEV · Twitter @grvydev · Email [email protected]