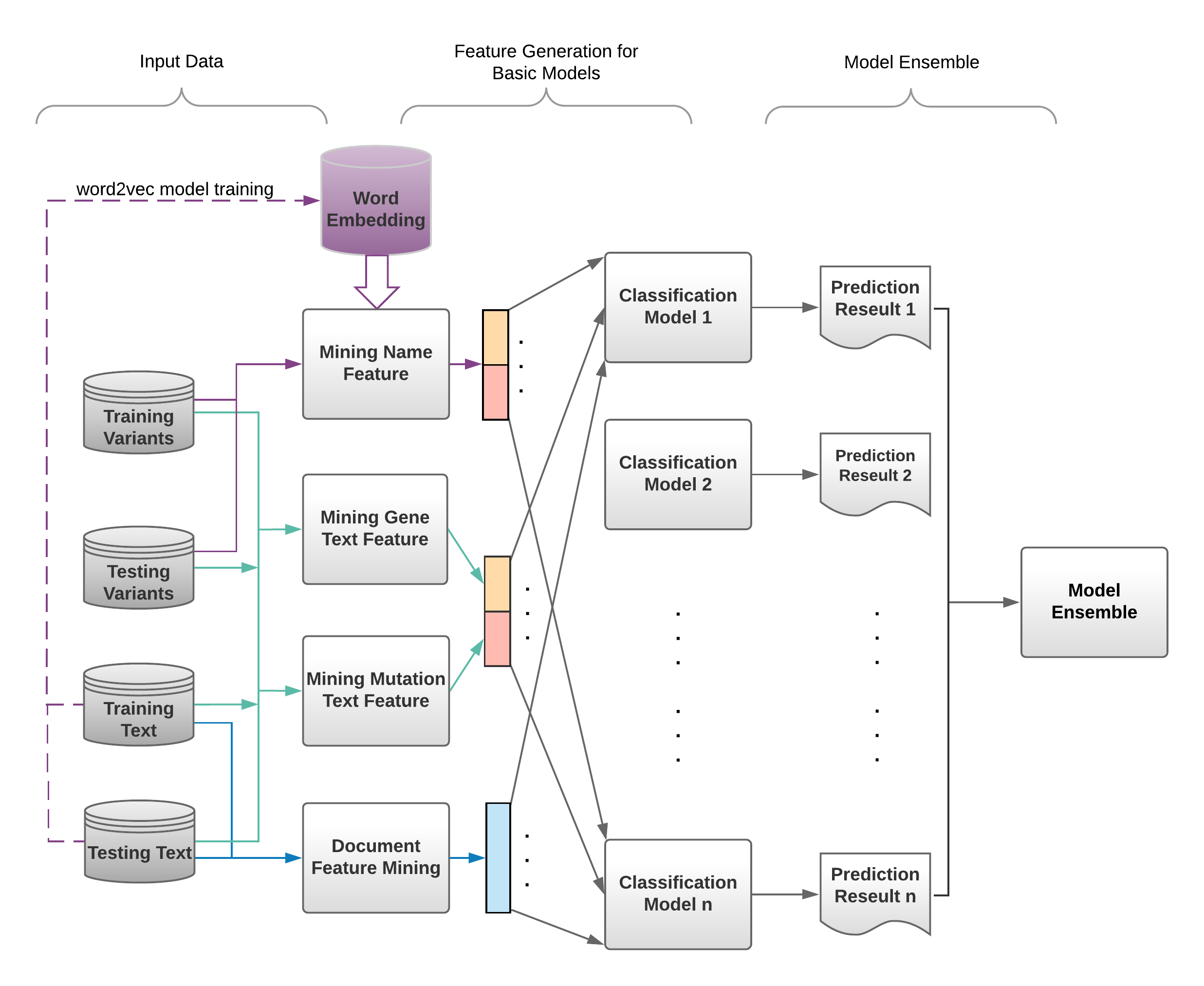

Our solution is the Top-1 winner of the NeurIPS competition on Classifying Clinically Actionable Genetic Mutations. For more details, please refer to Kaggle. This repository contains the slides we presented at NeurIPS 2017. The figure at the top of this page illustrates our proposed framework.

Please send questions and comments about the code to Xi Sheryl Zhang ([email protected]) and Dandi Chen ([email protected]).

- Environment Setting Up

- Directory Structure

- Dataset

- Demo

- Usage Example

- Parameters

- Reference

The following instructions are based on Ubuntu 16.04 and Python 3.6.

- Install Anaconda.

a) Download Anaconda to your working directory by typing 'wget https://repo.continuum.io/archive/Anaconda3-5.0.1-Linux-x86_64.sh' in the terminal.

b) Install Anaconda by typing 'bash Anaconda3-5.0.1-Linux-x86_64.sh' in your working directory, then follow the instructions on the screen.

c) Run the command 'source ~/.bashrc' in the terminal. After that, 'python' should start the Anaconda interpreter. To check, run the command 'python' in the terminal; it should print something similar to 'Python 3.6.3 |Anaconda, Inc.| (default, Oct 13 2017, 12:02:49)'.
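As a complement to reading the interpreter banner, the following minimal sketch reports which interpreter is active and whether it meets the Python 3.6 baseline assumed by these instructions. The helper name `meets_baseline` is illustrative and is not part of this repository.

```python
import sys

# Baseline stated in these instructions (Python 3.6).
REQUIRED = (3, 6)


def meets_baseline(version_info=sys.version_info, required=REQUIRED):
    """Return True if the given interpreter version is at least `required`."""
    return tuple(version_info[:2]) >= required


if __name__ == "__main__":
    # sys.executable should point inside the Anaconda install directory.
    print("Interpreter:", sys.executable)
    print("Version:", sys.version.split()[0])
    print("Meets baseline:", meets_baseline())
```

If the printed interpreter path is not inside the Anaconda directory, 'source ~/.bashrc' has probably not taken effect in the current shell.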

- Install NLTK data.

Follow the instructions at https://www.nltk.org/data.html to install NLTK data, or simply run the command 'python -m nltk.downloader all' in the Ubuntu terminal. If you install NLTK data to a custom path, make sure nltk.data.path is set correctly by adding that path so that NLTK can find the data. Example code ('ubuntu' in path.append() stands for the username):

from nltk.data import path

path.append('/home/ubuntu/software/nltk_data')
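The path registration described above can be wrapped so that it is safe to call more than once. This is a minimal sketch: `add_data_dir` is a hypothetical helper name, the directory is only an example, and the fallback to a plain list exists solely so the snippet runs where NLTK is not installed.

```python
import os


def add_data_dir(search_path, data_dir):
    """Append data_dir to an NLTK-style search path list if it is missing."""
    data_dir = os.path.expanduser(data_dir)
    if data_dir not in search_path:
        search_path.append(data_dir)
    return search_path


if __name__ == "__main__":
    try:
        # nltk.data.path is the list of directories NLTK searches for data.
        from nltk.data import path as nltk_path
    except ImportError:
        nltk_path = []  # NLTK not installed; demonstrate on a plain list
    add_data_dir(nltk_path, "~/software/nltk_data")  # example location
    print(nltk_path[-1])
```

Calling the helper before any code that loads NLTK resources keeps the custom directory visible for the rest of the process.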

- Install XGBoost and LightGBM.

Run the commands 'conda install -c conda-forge xgboost' and 'conda install -c conda-forge lightgbm' separately to install both XGBoost and LightGBM. Other install options for XGBoost and LightGBM can be found at https://xgboost.readthedocs.io/en/latest/build.html and https://github.com/Microsoft/LightGBM/blob/master/docs/Installation-Guide.rst.

- Install other necessary packages, including:

a) ahocorasick (https://pypi.python.org/pypi/pyahocorasick/#api-overview).

Run the command 'pip install pyahocorasick' directly. If the installation fails, install the build dependencies that pyahocorasick needs, such as gcc, by running 'sudo apt install gcc'. Before that, you may need to refresh the package lists by running 'sudo apt-get update'. Thus, the correct order for installing pyahocorasick is: first update the package lists, then install gcc, and finally install pyahocorasick.

b) gensim.

Please refer to https://radimrehurek.com/gensim/install.html for the installation guide, or run 'conda install -c anaconda gensim' in the terminal directly.

c) testfixtures.

Run 'conda install -c conda-forge testfixtures'.

d) jsonlines.

Run 'conda install -c conda-forge jsonlines'.

Notes:

- Anaconda must always be installed first. The other packages can be installed in any order.

- Installation commands summary:

wget https://repo.continuum.io/archive/Anaconda3-5.0.1-Linux-x86_64.sh

bash Anaconda3-5.0.1-Linux-x86_64.sh

source ~/.bashrc

python -m nltk.downloader all

conda install -c conda-forge xgboost

conda install -c conda-forge lightgbm

sudo apt-get update

sudo apt install gcc

pip install pyahocorasick

conda install -c anaconda gensim

conda install -c conda-forge testfixtures

conda install -c conda-forge jsonlines
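After running the commands above, a quick way to confirm that every package is visible to the active interpreter is to look up each import name without importing it. This is a minimal sketch: the helper name `missing_modules` is illustrative, and the module list is our reading of the packages named in this section.

```python
import importlib.util

# Import names of the packages installed above. Note that the
# pyahocorasick distribution is imported as "ahocorasick".
REQUIRED_MODULES = [
    "nltk",
    "xgboost",
    "lightgbm",
    "ahocorasick",
    "gensim",
    "testfixtures",
    "jsonlines",
]


def missing_modules(names=REQUIRED_MODULES):
    """Return the names that the current interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages were found.")
```

An empty result only shows that the packages can be located; it does not verify their versions.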

Please make sure your directories and files match the following structure before running the code.

.

├── classifier

│ ├── lightgbm.py

│ ├── logistic_regression.py

│ ├── multi_layer_perceptron.py

│ ├── random_forest.py

│ ├── svc.py

│ └── xgboost.py

├── cross_validation

│ └── nfold_cv.py

├── data

│ ├── 5fold_cv

│ ├── features

│ ├── intermediate

│ ├── models

│ │ ├── doc2vec

│ │ └── word2vec

│ ├── pre_define

│ │ ├── 5fold.map.pkl

│ │ ├── Actionable.txt

│ │ ├── chemical.tsv

│ │ ├── disease.tsv

│ │ ├── gene.tsv

│ │ ├── mutation.tsv

│ │ ├── nips.10k.dict.pkl

│ │ ├── pubmed.jsonl

│ │ ├── pubmed_stopword_list.txt

│ │ └── tree_feat_dict.pkl

│ ├── stage1_variants

│ ├── stage2_variants

│ ├── stage1_solution_filtered.csv

│ ├── stage1_test_368

│ ├── stage_2_private_solution.csv

│ ├── stage2_sample_submission.csv

│ ├── stage2_test_text.csv

│ ├── stage2_test_variants.csv

│ ├── test_text

│ ├── test_variants

│ ├── training_text

│ └── training_variants

├── ensemble

│ ├── feature_fusion.py

│ └── result_ensemble.py

├── feature

│ ├── document_mining.py

│ ├── name_mining.py

│ └── relation_mining.py

├── helper.py

├── ReadMe

├── demo.py

└── run.py

Note:

- 5fold.map.pkl is a predefined 5-fold cross-validation split for testing only.

- tree_feat_dict.pkl is a predefined Python dictionary that stores the recommended number of trees for each single feature. It is used as a classifier parameter by the random forest only.

- chemical.tsv, disease.tsv, gene.tsv, mutation.tsv contain bioentity names collected online.

- pubmed.jsonl and pubmed_stopword_list.txt are generated from PubMed.

- nips.10k.dict.pkl stores the top 10k words in the text data.

- Embedding learning takes time. Alternatively, get the pretrained word2vec/doc2vec models from here and copy them into the folder 'models'.

The input data can be found via Kaggle's page or data. Copy the input files into the folder 'data'. The necessary files in other folders, including 'features' and 'intermediate', will be generated the first time the code is run.
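Before the first run, it can help to confirm that the predefined files are in place. The sketch below checks the files listed under 'data/pre_define' in the structure above; the helper name `missing_pre_define` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Files listed under data/pre_define in the directory structure.
PRE_DEFINE_FILES = [
    "5fold.map.pkl",
    "Actionable.txt",
    "chemical.tsv",
    "disease.tsv",
    "gene.tsv",
    "mutation.tsv",
    "nips.10k.dict.pkl",
    "pubmed.jsonl",
    "pubmed_stopword_list.txt",
    "tree_feat_dict.pkl",
]


def missing_pre_define(root="."):
    """Return the predefined files that are absent under root/data/pre_define."""
    base = Path(root) / "data" / "pre_define"
    return [name for name in PRE_DEFINE_FILES if not (base / name).is_file()]


if __name__ == "__main__":
    # Run from the repository root (the directory that contains run.py).
    for name in missing_pre_define():
        print("Missing:", name)
```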

The index map of the nine predefined classes is:

CLASS_NUM_MAP = {'Likely Loss-of-function': 1, 'Likely Gain-of-function': 2, 'Neutral': 3,

'Loss-of-function': 4, 'Likely Neutral': 5, 'Inconclusive': 6, 'Gain-of-function': 7,

'Likely Switch-of-function': 8, 'Switch-of-function': 9}

Run demo.py with a gene name and a variation name to show the prediction results, as in the following example:

python demo.py -gene=TGFBR1 -variation=S387Y

The predicted results should be:

ID: 95 Gene: TGFBR1 Variation: S387Y

class prediction groundtruth

1 0.06485872604678475 0.0

2 0.0020891761251413538 0.0

3 0.0011311423530236504 0.0

4 0.9245424266930476 1.0

5 0.0017318023781274679 0.0

6 0.0017330807090426808 0.0

7 0.002021304704367886 0.0

8 0.0009614994703982653 0.0

9 0.0009308415200664855 0.0

The list of <gene, variation> pairs can be found in the file 'data/stage1_variants'.
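To read the 'class' column of the demo output as a class name, CLASS_NUM_MAP can be inverted. The sketch below does this for the TGFBR1 S387Y probabilities shown above; `NUM_CLASS_MAP` and `top_class` are illustrative names, not identifiers from the repository.

```python
CLASS_NUM_MAP = {
    'Likely Loss-of-function': 1, 'Likely Gain-of-function': 2, 'Neutral': 3,
    'Loss-of-function': 4, 'Likely Neutral': 5, 'Inconclusive': 6,
    'Gain-of-function': 7, 'Likely Switch-of-function': 8,
    'Switch-of-function': 9,
}

# Inverse lookup: class index -> class name.
NUM_CLASS_MAP = {num: name for name, num in CLASS_NUM_MAP.items()}


def top_class(probabilities):
    """Return (index, name) of the most probable class.

    `probabilities` is ordered by class index, starting at class 1.
    """
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return best + 1, NUM_CLASS_MAP[best + 1]


# Prediction column from the demo output for TGFBR1 S387Y.
demo_probs = [
    0.06485872604678475, 0.0020891761251413538, 0.0011311423530236504,
    0.9245424266930476, 0.0017318023781274679, 0.0017330807090426808,
    0.002021304704367886, 0.0009614994703982653, 0.0009308415200664855,
]

print(top_class(demo_probs))  # (4, 'Loss-of-function')
```

The result agrees with the groundtruth column, where class 4 is marked 1.0.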

XGBoost parameters: https://github.com/dmlc/xgboost/blob/master/doc/parameter.rst

LightGBM parameters: https://lightgbm.readthedocs.io/en/latest/Parameters.html
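For orientation, a nine-class configuration for both libraries might look like the sketch below. The parameter names come from the XGBoost and LightGBM documentation linked above, but the values are placeholders chosen for illustration and are not the settings used in this repository.

```python
NUM_CLASSES = 9

# Illustrative values only; consult the classifier scripts for the real ones.
xgb_params = {
    "objective": "multi:softprob",  # output one probability per class
    "num_class": NUM_CLASSES,
    "eval_metric": "mlogloss",      # multi-class log loss
    "eta": 0.1,
    "max_depth": 6,
}

lgb_params = {
    "objective": "multiclass",
    "num_class": NUM_CLASSES,
    "metric": "multi_logloss",
    "learning_rate": 0.1,
    "num_leaves": 31,
}


def to_zero_based(labels):
    """Shift class indices 1..9 to 0..8.

    Both libraries expect multi-class labels in the range [0, num_class).
    """
    return [label - 1 for label in labels]


print(to_zero_based([1, 4, 9]))  # [0, 3, 8]
```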

The source code contains four major sections: learning features, classification, ensembling and cross-validation.

To run all four sections, change to the directory that contains run.py and run 'python run.py'. To run only some of the sections, comment out the corresponding lines in run.py; for example, comment out the lines starting with 'ensemble' in main() to run the classification section only.

Note:

- pos_tagging_nmf() needs to be called after calling pos_tagging_feats() in helper.py.

- It is time-consuming to run all four sections at once. Without parallel settings, it may take several days to finish, depending on computation power.
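The idea of running only some of the four sections can be sketched as follows. This is not how run.py is written (it relies on commenting out lines in main()); the function names and the `run_sections` helper are hypothetical placeholders that only illustrate the order of the sections.

```python
# Placeholder stages standing in for the real work done by run.py.
def learn_features():
    print("learning features")


def classify():
    print("classification")


def ensemble():
    print("ensembling")


def cross_validate():
    print("cross-validation")


# Sections in the order listed in this document.
SECTIONS = [
    ("feature", learn_features),
    ("classifier", classify),
    ("ensemble", ensemble),
    ("cross_validation", cross_validate),
]


def run_sections(selected=None):
    """Run every section, or only those named in `selected`, in order."""
    executed = []
    for name, stage in SECTIONS:
        if selected is None or name in selected:
            stage()
            executed.append(name)
    return executed


if __name__ == "__main__":
    # Skip the slower stages while experimenting with features.
    run_sections({"feature", "classifier"})
```

Keeping the stages in one ordered list makes it harder to run a later section before the one it depends on.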

If you find this project useful in your research, please consider citing our paper:

@incollection{zhang2018multi,

title={Multi-view Ensemble Classification for Clinically Actionable Genetic Mutations},

author={Zhang, Xi and Chen, Dandi and Zhu, Yongjun and Che, Chao and Su, Chang and Zhao, Sendong and Min, Xu and Wang, Fei},

booktitle={The NIPS'17 Competition: Building Intelligent Systems},

pages={79--99},

year={2018},

publisher={Springer}

}

The paper can be accessed at: [Classifying Genetic Mutations](https://arxiv.org/pdf/1806.09737.pdf)