
- Our Philosophy

- A Feature Platform for ML Applications

- Highlights

- FAQ

- Download and Install

- QuickStart

- Use Cases

- Documentation

- Roadmap

- Contribution

- Community

- Publications

- The User List

OpenMLDB is an open-source machine learning database that provides a feature platform for computing consistent features for training and inference.

1. Our Philosophy

In artificial intelligence (AI) engineering, 95% of the time and effort is consumed by data-related workloads. To tackle this challenge, tech giants spend thousands of hours building in-house data and feature platforms to address engineering issues such as data leakage, feature backfilling, and efficiency. Small and medium-sized enterprises, by contrast, have to purchase expensive SaaS tools and data governance services.

OpenMLDB is an open-source machine learning database committed to solving these data and feature challenges, and it has been deployed in hundreds of real-world enterprise applications. Its open-source release prioritizes SQL-based feature engineering, offering a feature platform that provides consistent features for training and inference.

2. A Feature Platform for ML Applications

Real-time features are essential for many machine learning applications, such as real-time personalized recommendation and risk analytics. However, a feature engineering script developed by data scientists (a Python script in most cases) cannot be deployed directly into production for online inference, because it usually cannot meet engineering requirements such as low latency, high throughput, and high availability. Therefore, an engineering team needs to refactor and optimize the code using a database or C++ to ensure its efficiency and robustness. Because two teams and two toolchains are involved across the development and deployment life cycle, the two implementations must be verified for consistency, which usually costs a great deal of time and manpower.

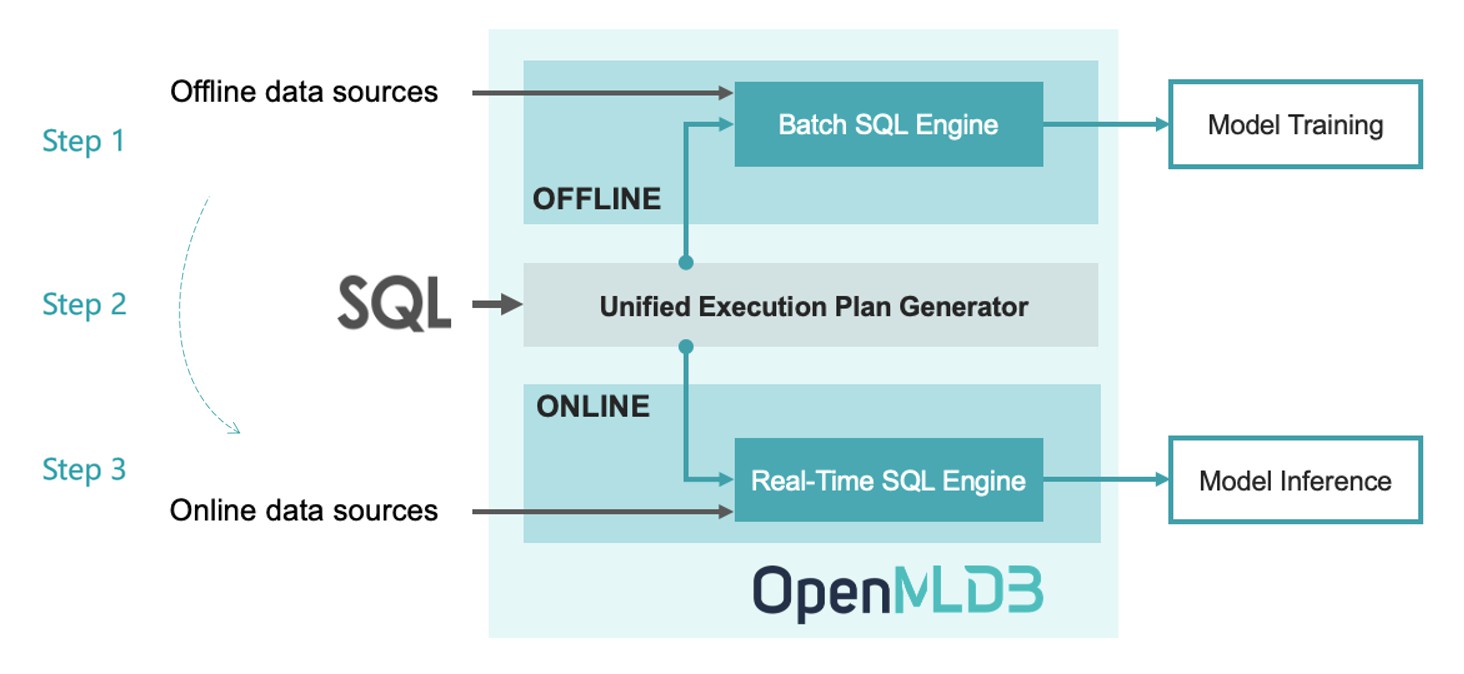

OpenMLDB is designed as a feature platform for ML applications to accomplish the mission of Development as Deployment, significantly reducing the cost of going from offline training to online inference. With OpenMLDB, the entire life cycle takes only three steps:

- Step 1: Offline development of feature engineering scripts in SQL

- Step 2: Online deployment of the SQL scripts with a single command

- Step 3: Online data source configuration to import real-time data

Once these three steps are done, the system is ready to serve real-time features, and it is highly optimized to achieve the low latency and high throughput required in production.

To achieve the goal of Development as Deployment, OpenMLDB is designed to provide consistent features for training and inference. The figure above shows the high-level architecture of OpenMLDB, which consists of four key components: (1) SQL as the unified programming language; (2) the real-time SQL engine for extra-low-latency services; (3) the batch SQL engine based on a tailored Spark distribution; and (4) the unified execution plan generator, which bridges the batch and real-time SQL engines to guarantee consistency.
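Why a single feature definition matters for consistency can be illustrated with a small, self-contained Python sketch. It is not OpenMLDB's API and does not model its execution plan generator; it assumes one hypothetical feature (the sum of a user's transaction amounts over the 10 seconds up to and including the current event), written once and then evaluated both in a batch pass over historical events and in an online pass that receives events one at a time. Because both paths call the same definition and only see rows whose timestamps are not later than the current row, they produce identical values and no future data leaks into the training features.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

# Hypothetical feature: sum of a user's amounts in the 10 s up to and including the current event.
WINDOW_MS = 10_000


@dataclass(frozen=True)
class Event:
    user: str
    ts: int  # event time in milliseconds
    amount: float


def window_sum(history, now_ts):
    """The single feature definition shared by the batch and online paths."""
    return sum(e.amount for e in history if now_ts - WINDOW_MS <= e.ts <= now_ts)


def batch_features(events):
    """Offline path: one feature value per historical event, in timestamp order.
    Each row only sees rows up to and including itself, so no future data leaks in."""
    ordered = sorted(events, key=lambda e: e.ts)
    values = []
    for i, e in enumerate(ordered):
        visible = [p for p in ordered[: i + 1] if p.user == e.user]
        values.append(window_sum(visible, e.ts))
    return values


class OnlineFeatureServer:
    """Online path: events arrive one at a time (assumed to be in timestamp order)."""

    def __init__(self):
        self.buffers = defaultdict(deque)  # per-user rows that may still be in the window

    def on_event(self, e):
        buf = self.buffers[e.user]
        buf.append(e)
        # Evict rows that have fallen out of the window; with ordered arrival they never re-enter it.
        while buf and buf[0].ts < e.ts - WINDOW_MS:
            buf.popleft()
        return window_sum(buf, e.ts)


events = [
    Event("alice", 1_000, 5.0),
    Event("bob", 2_000, 7.0),
    Event("alice", 8_000, 3.0),
    Event("alice", 15_000, 4.0),  # by now the alice event at 1_000 is outside the window
]

offline = batch_features(events)
server = OnlineFeatureServer()
online = [server.on_event(e) for e in sorted(events, key=lambda e: e.ts)]
print(offline)  # [5.0, 7.0, 8.0, 7.0]
print(online == offline)  # True
```

Evicting old rows keeps the online state small, while the shared `window_sum` keeps the two paths from drifting apart. In the architecture described above, OpenMLDB relies on its unified execution plan generator rather than a shared Python function to achieve the same guarantee.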

3. Highlights

Consistent Features for Training and Inference: Based on the unified execution plan generator, correct and consistent features are produced for offline training and online inference, providing hassle-free time travel without data leakage.

Real-Time Features with Ultra-Low Latency: The real-time SQL engine is built from scratch and specifically optimized for time-series data. It produces real-time features with a response time of only a few milliseconds, significantly outperforming other commercial in-memory database systems (Figures 9 & 10 of the VLDB 2021 paper).

Define Features as SQL: SQL is used as the unified programming language to define and manage features. It is further extended for feature engineering with syntax such as LAST JOIN and WINDOW UNION.

Production-Ready for ML Applications: Production-grade capabilities are integrated to support enterprise-grade ML applications, including distributed storage and computing, fault recovery, high availability, seamless scale-out, smooth upgrades, monitoring, heterogeneous memory support, and more.
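The intent of the LAST JOIN and WINDOW UNION extensions named above can be approximated in plain Python. This is a simplified, hypothetical model, not OpenMLDB's engine or SQL syntax: rows are dicts, `last_join` keeps at most one right-table row per left row (the latest by timestamp, optionally restricted to rows no later than the left row, as a point-in-time condition in the join would do), and `window_union_count` counts rows from the main table plus other tables that share the partition key and fall within a time range ending at the current row. In OpenMLDB the ordering column, join condition, and window frame are written in the SQL statement itself; the OpenMLDB SQL reference is the authority on exact semantics such as tie-breaking.

```python
def last_join(left, right, key, ts, point_in_time=True):
    """Simplified LAST JOIN model: attach at most one right row to each left row,
    the one with the largest `ts` among rows sharing `key`. With point_in_time=True,
    right rows later than the left row are ignored (like adding `left.ts >= right.ts`
    to the join condition)."""
    joined = []
    for lrow in left:
        candidates = [
            r for r in right
            if r[key] == lrow[key] and (not point_in_time or r[ts] <= lrow[ts])
        ]
        # None plays the role of NULL right-table columns when nothing matches.
        joined.append((lrow, max(candidates, key=lambda r: r[ts], default=None)))
    return joined


def window_union_count(main, others, key, ts, range_ms):
    """Simplified WINDOW UNION model: for each main-table row (assumed sorted by `ts`),
    count rows from the main table (up to the current row) and from `others` that share
    `key` and fall in [current ts - range_ms, current ts]."""
    counts = []
    for i, row in enumerate(main):
        pool = main[: i + 1] + others
        counts.append(sum(
            1 for r in pool
            if r[key] == row[key] and row[ts] - range_ms <= r[ts] <= row[ts]
        ))
    return counts


actions = [{"user": "u1", "ts": 100}, {"user": "u1", "ts": 300}]
profiles = [
    {"user": "u1", "ts": 50, "level": "bronze"},
    {"user": "u1", "ts": 200, "level": "silver"},
    {"user": "u1", "ts": 400, "level": "gold"},  # later than every action: never joined
]
levels = [m["level"] if m else None for _, m in last_join(actions, profiles, "user", "ts")]
print(levels)  # ['bronze', 'silver']

orders = [{"user": "u1", "ts": 1_000}, {"user": "u1", "ts": 5_000}]
refunds = [
    {"user": "u1", "ts": 900},
    {"user": "u1", "ts": 4_500},
    {"user": "u2", "ts": 4_800},  # different key: never counted for u1
]
window_counts = window_union_count(orders, refunds, "user", "ts", range_ms=1_000)
print(window_counts)  # [2, 2]
```

The quadratic scans keep the sketch short and easy to check; a real engine would rely on indexes and incremental state instead.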

4. FAQ

- What are the use cases of OpenMLDB?

At present, OpenMLDB is mainly positioned as a feature platform for ML applications, with particular strength in low-latency real-time features. Its Development as Deployment capability significantly reduces the cost of building machine learning applications. In addition, OpenMLDB contains an efficient and fully functional time-series database, which is used in finance, IoT, and other fields.

- How has OpenMLDB evolved?

OpenMLDB originated from a commercial product of 4Paradigm (a leading artificial intelligence service provider). In 2021, the core team abstracted and enhanced the commercial product, developed community-friendly features, and then made it publicly available as an open-source project to help more enterprises achieve digital transformation at low cost. Before being open-sourced, it had been successfully deployed in hundreds of real-world ML applications together with 4Paradigm's other commercial products.

Despite the similar name, OpenMLDB is unrelated to MLDB, a different open-source project in development since 2015.

- Is OpenMLDB a feature store?

OpenMLDB is more than a feature store that provides features for ML applications. Most feature stores on the market today serve online features by syncing features that were pre-computed offline, so they cannot produce low-latency real-time features. By comparison, OpenMLDB takes advantage of its optimized online SQL engine to efficiently produce real-time features in a few milliseconds.

- Why does OpenMLDB choose SQL to define and manage features?

SQL (with extensions) combines elegant syntax with powerful expressiveness. A SQL-based programming experience flattens the learning curve of OpenMLDB and makes collaboration and sharing easier.
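The contrast drawn in the feature-store answer above can be made concrete with a toy Python example. All names and numbers are hypothetical: a feature store that syncs a value computed offline at a fixed time keeps serving that snapshot, whereas evaluating the same window feature at request time over the latest data also counts events that arrived after the sync. The sketch only illustrates freshness; it says nothing about how OpenMLDB computes features internally.

```python
# Hypothetical feature: number of a card's transactions in the last minute.
WINDOW_MS = 60_000


def txn_count(txns, card, now_ts):
    """Window feature evaluated over whatever data is visible at now_ts."""
    return sum(1 for c, ts in txns if c == card and now_ts - WINDOW_MS <= ts <= now_ts)


txns = [("card-1", 10_000), ("card-1", 20_000)]

# Precomputed path: the feature is computed offline at sync time and copied
# into an online key-value lookup, where it stays until the next sync.
sync_ts = 30_000
synced = {"card-1": txn_count(txns, "card-1", sync_ts)}

# New transactions arrive after the sync (for example, a burst of card usage).
txns += [("card-1", 40_000), ("card-1", 41_000), ("card-1", 42_000)]

request_ts = 45_000
stale_value = synced["card-1"]                       # served from the synced snapshot
fresh_value = txn_count(txns, "card-1", request_ts)  # computed at request time
print(stale_value, fresh_value)  # 2 5
```

In a risk-analytics setting, the burst of three recent transactions is exactly the signal the snapshot misses, which is why request-time computation matters for real-time features.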

5. Download and Install

- Download: GitHub release, mirror site (China)

- Install and deploy: English, Chinese

7. Use Cases

We are building a list of real-world use cases based on OpenMLDB to demonstrate how it can fit into your business.

| Use Case | Tools | Brief Introduction |
|---|---|---|
| New York City Taxi Trip Duration | OpenMLDB, LightGBM | This is a Kaggle challenge to predict the total ride duration of taxi trips in New York City. You can read more details here. It demonstrates how to use the open-source tools OpenMLDB and LightGBM to easily build an end-to-end machine learning application. |
| Importing real-time data streams from Pulsar | OpenMLDB, Pulsar, OpenMLDB-Pulsar connector | Apache Pulsar is a cloud-native streaming platform. Based on the OpenMLDB-Pulsar connector, real-time data streams can be seamlessly imported from Pulsar into OpenMLDB as online data sources. |
| Importing real-time data streams from Kafka | OpenMLDB, Kafka, OpenMLDB-Kafka connector | Apache Kafka is a distributed event streaming platform. With the OpenMLDB-Kafka connector, real-time data streams can be imported from Kafka as online data sources for OpenMLDB. |
| Importing real-time data streams from RocketMQ | OpenMLDB, RocketMQ, OpenMLDB-RocketMQ connector | Apache RocketMQ is a distributed messaging and streaming platform. The OpenMLDB-RocketMQ connector is used to efficiently import real-time data streams from RocketMQ into OpenMLDB. |
| Building end-to-end ML pipelines in DolphinScheduler | OpenMLDB, DolphinScheduler, OpenMLDB task plugin | We demonstrate how to build an end-to-end machine learning pipeline based on OpenMLDB and DolphinScheduler (an open-source workflow scheduler platform), covering feature engineering, model training, and deployment. |
| Ad Tracking Fraud Detection | OpenMLDB, XGBoost | This demo uses OpenMLDB and XGBoost to detect click fraud in online advertising. |
| SQL-based ML pipelines | OpenMLDB, Byzer, OpenMLDB Plugin for Byzer | Byzer is a low-code open-source programming language for data pipelines, analytics, and AI. Byzer has integrated OpenMLDB to deliver the capability of building ML pipelines with SQL. |
| Building end-to-end ML pipelines in Airflow | OpenMLDB, Airflow, Airflow OpenMLDB Provider, XGBoost | Airflow is a popular workflow management and scheduling tool. This demo shows how to effectively schedule OpenMLDB tasks in Airflow through the provider package. |
| Precision marketing | OpenMLDB, OneFlow | OneFlow is a deep learning framework designed to be user-friendly, scalable, and efficient. This use case demonstrates how to use OpenMLDB for feature engineering and OneFlow for model training and inference to build a precision marketing application. |

8. Documentation

- Chinese documentation: https://openmldb.ai/docs/zh

- English documentation: https://openmldb.ai/docs/en/

9. Roadmap

Please refer to our public Roadmap page.

In addition, a few important features are on the development roadmap but have not been scheduled yet. We appreciate any feedback on these features.

- A cloud-native OpenMLDB

- Automatic feature extraction

- Optimization based on heterogeneous storage and computing resources

- A lightweight OpenMLDB for edge computing

10. Contribution

We really appreciate contributions from our community.

- If you are interested in contributing, please read our Contribution Guideline for more details.

- If you are a new contributor, you may get started with the list of issues labeled good first issue.

- If you have experience in OpenMLDB development, or want to tackle a challenge that may take 1-2 weeks, you may find the list of issues labeled call-for-contributions.

11. Community

- Website: https://openmldb.ai/en

- Email: [email protected]

- GitHub Issues and GitHub Discussions: GitHub Issues is used to report bugs and collect feature requests. GitHub Discussions is open to any discussion related to OpenMLDB.

- WeChat Groups (Chinese):

12. Publications

- PECJ: Stream Window Join on Disorder Data Streams with Proactive Error Compensation. Xianzhi Zeng, Shuhao Zhang, Hongbin Zhong, Hao Zhang, Mian Lu, Zhao Zheng, and Yuqiang Chen. International Conference on Management of Data (SIGMOD/PODS) 2024.

- Principles and Practices of Real-Time Feature Computing Platforms for ML. Hao Zhang, Jun Yang, Cheng Chen, Siqi Wang, Jiashu Li, and Mian Lu. Communications of the ACM 66, 7 (July 2023), 77–78.

- Scalable Online Interval Join on Modern Multicore Processors in OpenMLDB. Hao Zhang, Xianzhi Zeng, Shuhao Zhang, Xinyi Liu, Mian Lu, and Zhao Zheng. IEEE 39th International Conference on Data Engineering (ICDE) 2023. [code]

- FEBench: A Benchmark for Real-Time Relational Data Feature Extraction. Xuanhe Zhou, Cheng Chen, Kunyi Li, Bingsheng He, Mian Lu, Qiaosheng Liu, Wei Huang, Guoliang Li, Zhao Zheng, and Yuqiang Chen. International Conference on Very Large Data Bases (VLDB) 2023. [code]

- A System for Time Series Feature Extraction in Federated Learning. Siqi Wang, Jiashu Li, Mian Lu, Zhao Zheng, Yuqiang Chen, and Bingsheng He. 31st ACM International Conference on Information & Knowledge Management (CIKM) 2022. [code]

- Optimizing in-memory database engine for AI-powered on-line decision augmentation using persistent memory. Cheng Chen, Jun Yang, Mian Lu, Taize Wang, Zhao Zheng, Yuqiang Chen, Wenyuan Dai, Bingsheng He, Weng-Fai Wong, Guoan Wu, Yuping Zhao, and Andy Rudoff. International Conference on Very Large Data Bases (VLDB) 2021.

13. The User List

We are building a user list to collect feedback from the community. We would really appreciate it if you could share your use cases, comments, or any feedback on using OpenMLDB. We want to hear from you!