by Sahand Sharifzadeh1, Sina Moayed Baharlou2*, Max Berrendorf1*, Rajat Koner1, Volker Tresp1,3

1 Ludwig Maximilian University, Munich, Germany, 2 Sapienza University of Rome, Italy

3 Siemens AG, Munich, Germany

* Equal contribution

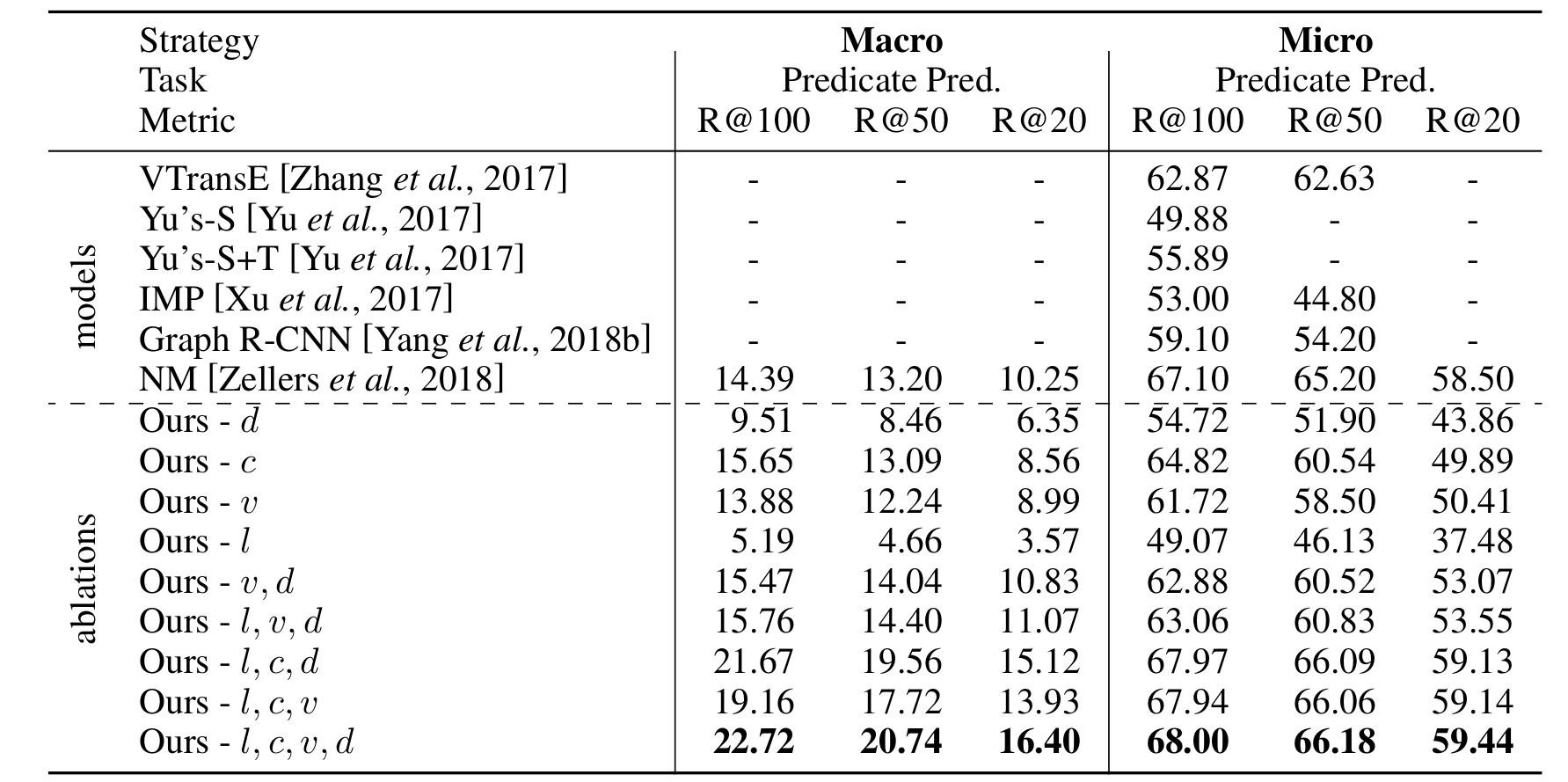

State-of-the-art visual relation detection methods mostly rely on object information extracted from RGB images such as predicted class probabilities, 2D bounding boxes and feature maps. Depth maps can additionally provide valuable information on object relations, e.g. helping to detect not only spatial relations, such as standing behind, but also non-spatial relations, such as holding. In this work, we study the effect of using different object information with a focus on depth maps. To enable this study, we release a new synthetic dataset of depth maps, VG-Depth, as an extension to Visual Genome (VG). We also note that given the highly imbalanced distribution of relations in VG, typical evaluation metrics for visual relation detection cannot reveal improvements of under-represented relations. To address this problem, we propose using an additional metric, calling it Macro Recall@K, and demonstrate its remarkable performance on VG. Finally, our experiments confirm that by effective utilization of depth maps within a simple, yet competitive framework, the performance of visual relation detection can be significantly improved.

- We perform an extensive study on the effect of using different sources of object information in visual relation detection. Our empirical evaluations on the VG dataset show that our model outperforms competing methods by a margin of up to 8 percentage points, even those using external language sources or contextualization.

- We release a new synthetic dataset, VG-Depth, to compensate for the lack of depth maps in Visual Genome.

- We propose Macro Recall@K as a competitive metric for evaluating visual relation detection performance on highly imbalanced datasets such as Visual Genome.
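To illustrate why a macro-averaged metric helps on imbalanced data, here is a minimal sketch that contrasts plain Recall@K with a per-predicate average. It is not the evaluation code of this repository: it assumes Macro Recall@K is the unweighted mean of per-predicate Recall@K, it pools all triples into a single list instead of evaluating image by image, and the function names and example triples are hypothetical.

```python
from collections import defaultdict


def recall_at_k(gt_triples, ranked_predictions, k):
    """Fraction of ground-truth triples found among the top-k predictions."""
    if not gt_triples:
        return 0.0
    top_k = set(ranked_predictions[:k])
    hits = sum(1 for triple in gt_triples if triple in top_k)
    return hits / len(gt_triples)


def macro_recall_at_k(gt_triples, ranked_predictions, k):
    """Unweighted mean of per-predicate recall@k (assumed definition)."""
    top_k = set(ranked_predictions[:k])
    hits = defaultdict(int)
    totals = defaultdict(int)
    for triple in gt_triples:
        predicate = triple[1]  # triples are (subject, predicate, object)
        totals[predicate] += 1
        if triple in top_k:
            hits[predicate] += 1
    if not totals:
        return 0.0
    return sum(hits[p] / totals[p] for p in totals) / len(totals)


# Hypothetical toy data: "on" is frequent, "holding" is rare.
gt = [
    ("man", "on", "street"),
    ("cup", "on", "table"),
    ("dog", "on", "grass"),
    ("man", "holding", "cup"),
]
# Predictions sorted by confidence; the rare relation is missed.
preds = [
    ("man", "on", "street"),
    ("cup", "on", "table"),
    ("dog", "on", "grass"),
    ("man", "wearing", "cup"),
]

print(recall_at_k(gt, preds, k=3))        # 0.75
print(macro_recall_at_k(gt, preds, k=3))  # 0.5
```

In this toy case the plain recall stays high because the frequent predicate dominates, while the macro average drops because the rare predicate is never recovered.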

For further information, please read our paper (arXiv:1905.00966).

@misc{sah2019improving,

title={Improving Visual Relation Detection using Depth Maps},

author={Sahand Sharifzadeh and Sina Moayed Baharlou and Max Berrendorf and Rajat Koner and Volker Tresp},

year={2019},

eprint={1905.00966},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

We release a new dataset called VG-Depth as an extension to Visual Genome. This dataset contains synthetically generated depth maps from Visual Genome images and can be downloaded from the following link: VG-Depth

The main requirements of the provided code are as follows:

- Python >= 3.6

- PyTorch >= 1.1

- TorchVision >= 0.2.0

- TensorboardX

- CUDA Toolkit 10.0

- Pandas

- Overrides

- Gdown
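Before running the setup, it can help to check which of these Python packages are already available. The following is a small sketch using only the standard library; the helper names are hypothetical, the import names (`torch`, `torchvision`, `tensorboardX`, `pandas`, `overrides`, `gdown`) are the usual ones for the packages listed above, and the check covers presence only, not the minimum versions or the CUDA Toolkit.

```python
import importlib.util
import sys

# Import names of the Python packages listed in the requirements above.
REQUIRED_MODULES = ["torch", "torchvision", "tensorboardX", "pandas", "overrides", "gdown"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def python_is_supported(version_info=sys.version_info, minimum=(3, 6)):
    """Check the interpreter version against the stated minimum (Python >= 3.6)."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    print("Python version supported:", python_is_supported())
    print("Missing modules:", missing_modules(REQUIRED_MODULES))
```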

Please make sure you have CUDA Toolkit 10.0 installed. You can then set up the environment by running the following script:

./setup_env.sh

This script will perform the following operations:

- Install the required libraries.

- Download the Visual-Genome dataset.

- Download the Depth version of VG.

- Download the necessary checkpoints.

- Compile the CUDA libraries.

- Prepare the environment.

If you have already installed some of the libraries or downloaded the datasets, you can run the following scripts individually:

./data/fetch_dataset.sh # Download Visual-Genome

./data/fetch_depth_1024.sh # Download VG-Depth

./checkpoints/fetch_checkpoints.sh # Download the Checkpoints

Update the config.py file to set the dataset paths, and adjust your PYTHONPATH (e.g. export PYTHONPATH=/home/sina/Depth-VRD).

To train or evaluate the networks, run the following scripts and select between different models and hyper-parameters by passing the pre-defined arguments:

- To train the depth models separately (without the fusion layer):

./scripts/shz_models/train_depth.sh 1.1

- To train each modality separately:

./scripts/shz_models/train_individual.sh 1.1

- To train the fusion models:

./scripts/shz_models/train_fusion.sh 1.1

- To evaluate the models:

./scripts/shz_models/eval_scripts.sh 1

The training scripts run eight different random seeds, for 25 epochs each.

You can also separately download the full model (LCVD) here. Please note that this checkpoint generates results that are slightly different from the ones reported in our paper. The reason is that, for a fair comparison, we have reported the mean values from evaluations over several models.
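The difference between a single checkpoint and a reported mean can be seen in a short sketch of aggregating one metric over several seeds. The function name is hypothetical and the scores below are made-up placeholders, not results from the paper or from this repository.

```python
import statistics


def summarize_runs(scores):
    """Return (mean, sample standard deviation) of one metric over several runs."""
    mean = statistics.mean(scores)
    std = statistics.stdev(scores) if len(scores) > 1 else 0.0
    return mean, std


# Placeholder values standing in for one metric measured on four seeds.
placeholder_scores = [0.50, 0.52, 0.48, 0.50]

mean, std = summarize_runs(placeholder_scores)
print(f"mean={mean:.3f} std={std:.3f}")
# Any single run (e.g. 0.52) can differ from the mean that would be reported.
```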

This repository is created and maintained by Sahand Sharifzadeh, Sina Moayed Baharlou, and Max Berrendorf.

The skeleton of our code is built on top of the nicely organized Neural-Motifs framework (including the RGB data loading pipeline and part of the evaluation code). We have upgraded these parts to be compatible with PyTorch 1. To enable a fair comparison of models, our object detection backbone also uses the same Faster-RCNN weights as that work.