The Vodalus App Stack includes several key components and functionalities:

- Data Generation: Utilizes LLMs to generate synthetic data based on Wikipedia content. See `main.py` for implementation details.

- LLM Interaction: Manages interactions with LLMs through `llm_handler.py`, which configures and handles messaging with the LLM.

- Wikipedia Content Processing: Processes and searches Wikipedia content to find relevant articles using models loaded in `wiki.py`.

- Model Training and Fine-Tuning: Supports training and fine-tuning of MLX models with custom datasets, as detailed in the MLX_Fine-Tuning guide.

- Quantizing Models: Guides on quantizing models to GGUF format for efficient local execution, as described in the Quantize_GGUF guide.

- Interactive Notebooks: Provides Jupyter notebooks for training and fine-tuning models, such as `mlx-fine-tuning.ipynb` and `convert_to_gguf.ipynb`.

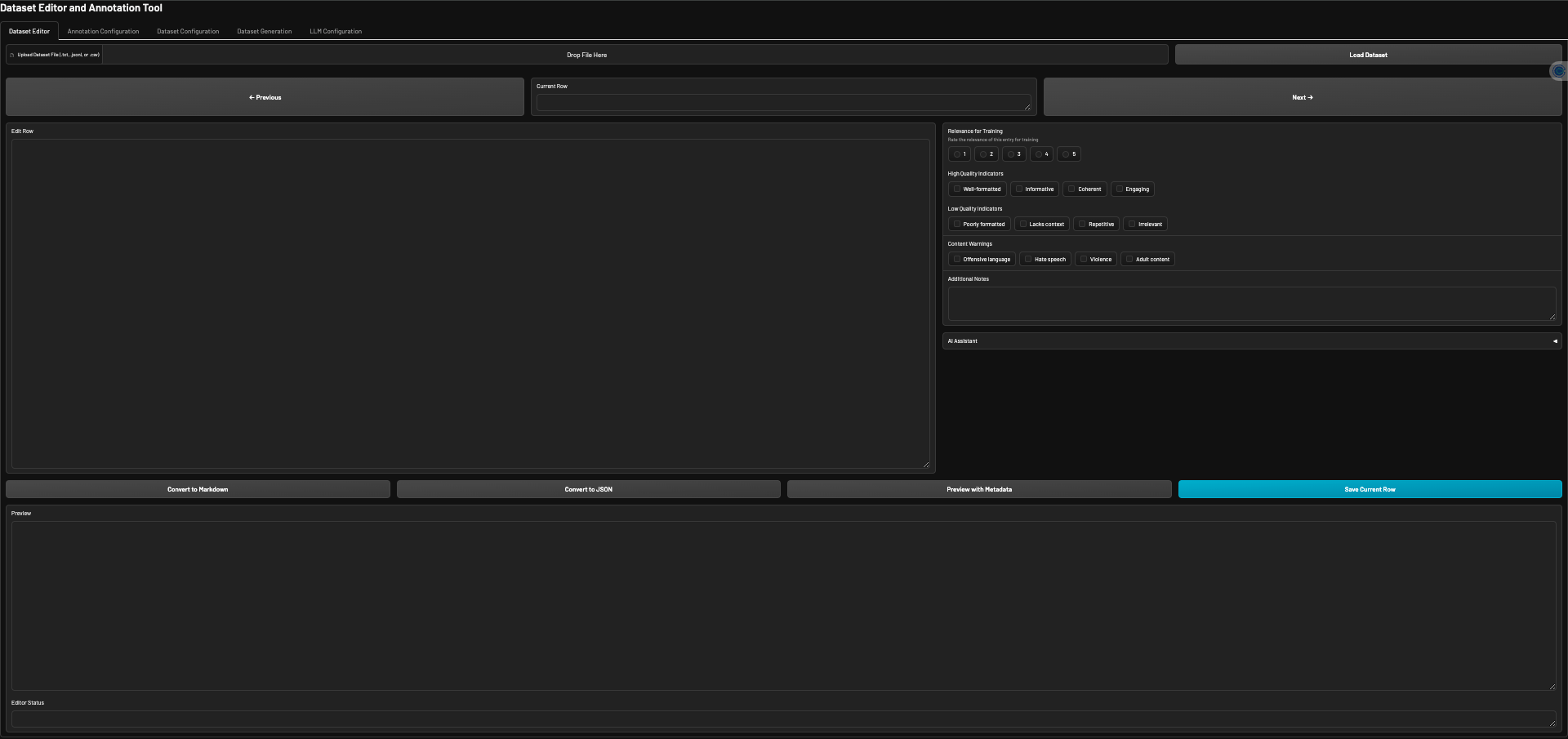

The new Gradio UI (`app.py`) provides a comprehensive interface for managing and interacting with the Vodalus App Stack:

- Dataset Editor:
  - Load, view, and edit JSONL datasets
  - Navigate through dataset entries
  - Convert between JSON and Markdown formats
  - Annotate entries with quality ratings, tags, and additional notes
  - Preview entries with metadata

- Annotation Configuration:
  - Customize quality scales, tag categories, and free-text fields
  - Save and load annotation configurations

- Dataset Configuration:
  - Edit system messages, prompts, and topics used for data generation
  - Save dataset configurations

- Dataset Generation:
  - Generate new dataset entries using configured parameters
  - Specify number of workers and generations
  - View generation status and output

- AI Assistant:
  - Chat with an AI assistant for help with annotation and quality checking
  - Choose between local and remote LLM options

- Comprehensive Documentation: Each component is accompanied by detailed guides and instructions to assist users in setup, usage, and customization.

For more detailed information on each component, refer to the respective guides and source files included in the repository.
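The Dataset Editor workflow listed above (load a JSONL file, annotate an entry, save it back) can be sketched in a few lines. This is a minimal illustration and not the code in `app.py`: the helper names and the `annotation`, `quality`, `tags`, and `notes` fields are assumptions made for the example.

```python
import json
import os
import tempfile


def load_jsonl(path):
    """Read a JSONL file into a list of dicts, skipping blank lines."""
    with open(path, "r", encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def save_jsonl(path, entries):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for entry in entries:
            f.write(json.dumps(entry, ensure_ascii=False) + "\n")


def annotate(entry, quality, tags=(), notes=""):
    """Return a copy of the entry with a hypothetical 'annotation' block added."""
    annotated = dict(entry)
    annotated["annotation"] = {"quality": quality, "tags": list(tags), "notes": notes}
    return annotated


# Round trip on a temporary file so the sketch runs without any project data.
with tempfile.TemporaryDirectory() as tmp:
    dataset_path = os.path.join(tmp, "dataset.jsonl")
    save_jsonl(dataset_path, [{"prompt": "What is MLX?", "response": "..."}])
    entries = load_jsonl(dataset_path)
    entries[0] = annotate(entries[0], quality=4, tags=["needs-review"])
    save_jsonl(dataset_path, entries)
    reloaded = load_jsonl(dataset_path)
```

Keeping annotations in a separate nested key leaves the original entry fields untouched, so they are easy to strip again before training.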

- Ensure Python is installed on your system.

- Familiarity with basic command line operations is helpful.

- Clone the repository to your local machine.

- Navigate to the project directory in your command line interface.

- Run the following commands to set up the environment:
  - Create the environment: `conda create -n vodalus -y`
  - Activate it: `conda activate vodalus`
  - Install the dependencies: `pip install -r requirements.txt`

Execute the main script to start data generation:

`python main.py`

To launch the Gradio UI instead, run:

`gradio app.py`

- Imports and Setup: Imports libraries and modules, sets the provider for the LLM.

- Data Generation (`generate_data` function): Fetches Wikipedia content, constructs prompts, and generates data using the LLM.

- Execution (`main` function): Manages the data generation process using multiple workers for efficiency.
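The split between a per-item `generate_data` function and a `main` function that fans work out to several workers can be sketched as follows. This is an illustration under assumptions, not the actual `main.py`: the function signatures, the thread pool, and the prompt wording are all hypothetical, and the stand-in `generate_data` only builds a prompt instead of fetching Wikipedia content or calling an LLM.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def generate_data(topic, system_message):
    """Stand-in for one generation. The real step would fetch Wikipedia
    content, build a prompt, and call the LLM; here we only build the prompt."""
    prompt = f"{system_message}\n\nWrite a question and answer about: {topic}"
    return {"topic": topic, "prompt": prompt}


def main(topics, system_message, num_workers=4, num_generations=8):
    """Run num_generations jobs across num_workers threads, cycling through topics."""
    results = []
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        futures = [
            pool.submit(generate_data, topics[i % len(topics)], system_message)
            for i in range(num_generations)
        ]
        # Collect results as they finish; completion order is not guaranteed.
        for future in as_completed(futures):
            results.append(future.result())
    return results


results = main(
    ["Photosynthesis", "Graph theory"],
    "You are a helpful tutor.",
    num_workers=2,
    num_generations=4,
)
```

Threads are a reasonable fit when each job mostly waits on network calls, which is why this sketch uses a thread pool; the real script may use a different mechanism.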

- OpenAI Client Configuration: Sets up the client for interacting with the LLM.

- Message Handling Functions: Includes functions to send messages to the LLM and handle the responses.
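A rough sketch of the two pieces described above, client configuration and message handling, is shown below. It assumes an OpenAI-compatible chat completions client; the helper names, the placeholder model name, and the local `base_url` in the comment are assumptions for illustration, not values taken from `llm_handler.py`. A fake client with the same attribute shape stands in for the real one so the example runs offline.

```python
from types import SimpleNamespace


def build_messages(system_message, user_prompt):
    """Assemble the chat payload: one system message, one user message."""
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_prompt},
    ]


def send_to_llm(client, model, messages):
    """Send a chat request and return the text of the first choice."""
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content


# With the real library the client would be created roughly like this
# (base_url and api_key are placeholders, not values from this project):
#   from openai import OpenAI
#   client = OpenAI(base_url="http://localhost:1234/v1", api_key="not-needed")


def _fake_create(model, messages):
    """Offline stand-in that echoes the last message back."""
    reply = SimpleNamespace(content=f"echo: {messages[-1]['content']}")
    return SimpleNamespace(choices=[SimpleNamespace(message=reply)])


fake_client = SimpleNamespace(
    chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create))
)
answer = send_to_llm(fake_client, "placeholder-model", build_messages("Be brief.", "Hello"))
```

Passing the client in as an argument keeps the message-handling function independent of whether the LLM is local or remote.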

- Model Loading: Loads necessary models for understanding and processing Wikipedia content.

- Search Function: Implements semantic search to find relevant Wikipedia articles based on a query.
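The idea behind the search function, embedding the query and the articles and then ranking by similarity, can be illustrated with a toy example. The sketch below swaps in a simple word-count embedding for the learned models that `wiki.py` loads, so it shows only the ranking step; the function names and the sample articles are assumptions for the example.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts. A real setup would use a
    sentence-embedding model here instead."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query, articles, top_k=2):
    """Return the top_k (title, score) pairs ranked by similarity to the query."""
    q = embed(query)
    scored = [(title, cosine(q, embed(text))) for title, text in articles.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


articles = {
    "Photosynthesis": "plants convert light energy into chemical energy",
    "Graph theory": "graphs model pairwise relations between objects",
    "Solar cell": "a device that converts light energy into electricity",
}
hits = search("how do plants use light energy", articles)
```

With learned embeddings the same ranking code would also match paraphrases that share no words with the query, which word counts cannot do.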

- Launch the Gradio UI by running `python app.py`.

- Use the different tabs to manage datasets, configurations, and generate new data.

- Utilize the AI Assistant for help with annotation and quality checking.

- Use the "Dataset Configuration" tab in the Gradio UI to modify topics and system messages.

- Adjust the number of workers and other parameters in the "Dataset Generation" tab of the Gradio UI.

ALTERNATE Way of Modifying Topics and System Messages

- To change the topics, edit `topics.py`.

- To modify system messages, adjust `system_messages.py`.

- Adjust the number of workers and other parameters in `params.py` to optimize performance based on your system's capabilities.
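When choosing a worker count by hand, one simple heuristic is to derive it from the number of CPU cores. The sketch below is only a suggestion: the `suggest_num_workers` helper and the `NUM_WORKERS` name are hypothetical, and the actual layout of `params.py` is not shown here.

```python
import os


def suggest_num_workers(max_workers=8, reserve=1):
    """Suggest a worker count: leave `reserve` cores free, cap at max_workers,
    and never go below 1. os.cpu_count() can return None, so fall back to 1."""
    cores = os.cpu_count() or 1
    return max(1, min(max_workers, cores - reserve))


# Hypothetical setting name; use whatever name params.py actually defines.
NUM_WORKERS = suggest_num_workers()
```

If generation mostly waits on a local or remote LLM server, the throughput of that server may be the real limit, so treat the CPU-based value as a starting point.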