EARLY PROTOTYPE, WORK IN PROGRESS 😴

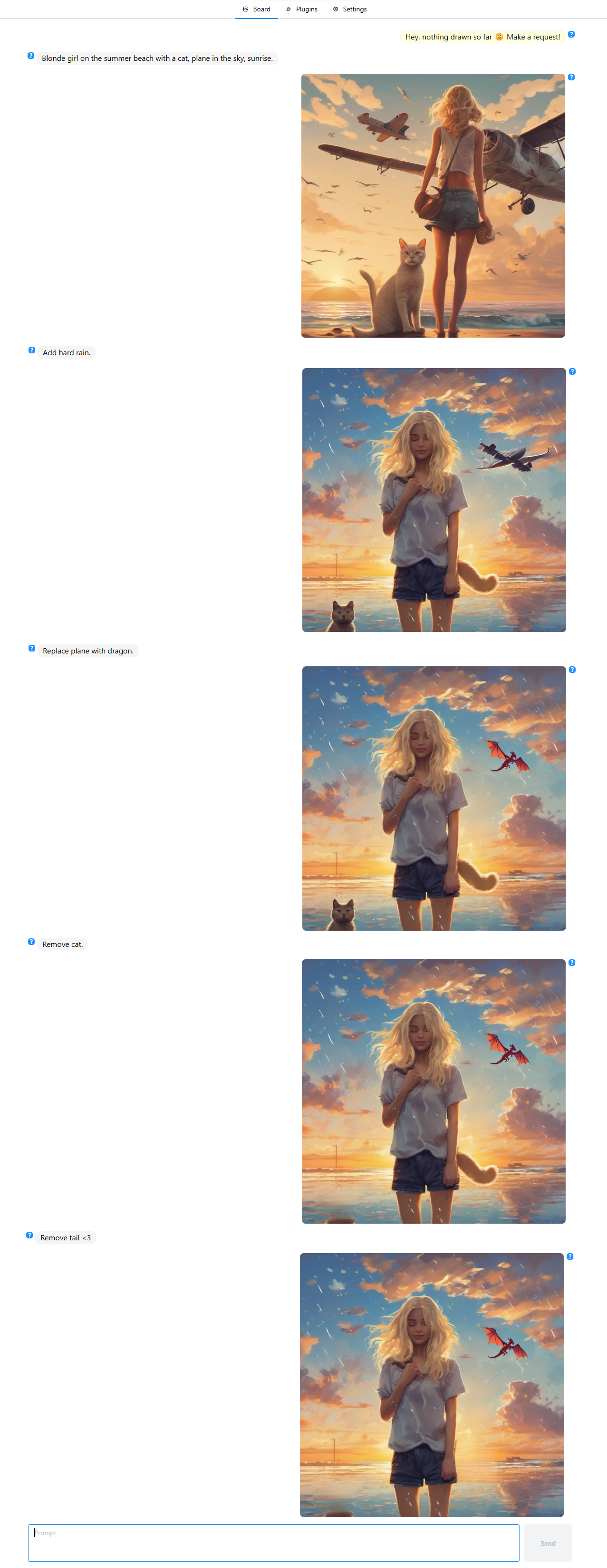

The initial idea was to replicate DALL-E 3's chat-like iterative drawing pipeline.

While Flare draws inspiration from the "Anything" projects such as Inpaint Anything, IEA, Segment Anything for Stable Diffusion WebUI, Grounded-Segment-Anything, Edit Anything and others, its core concept is different: an application for pure text-guided sequential image editing, similar to DALL-E 3, enriched with features that DALL-E 3 lacked, such as pixel-perfect inpainting and object removal.

☑️ Text-to-image

☑️ Text-guided inpainting

☑️ Text-guided object removal

⌛ Text-guided image resize

⌛ Text-guided object injection

⌛ Text-guided style transfer

⌛ Text-guided outpainting

⌛ Text-guided upscaling

⌛ Image-based inpainting

⌛ Text-guided image merge

⌛ Text-guided object editing

⌛ Text-guided composition control

⌛ Text-guided object extraction

⌛ Voice recognition

⌛ Fine-tuning LLM for enhanced prompt comprehension

You need to have Git (2.43), Python (3.10), Poetry (1.7), Node.js (21.6) installed, then:

git clone https://github.com/seruva19/flare

cd flare

Install core:

poetry install

poetry lock

Install plugins:

poetry run get-default-plugins

poetry run merge

poetry install

Install client:

npm install

npm run build

poetry run flare

Then open a browser at http://localhost:8000/

❓ What are system requirements?

👉 I'm not sure; it has only been tested on an RTX 3090. There is, however, an "Offload models after use" option in the Settings tab, and enabling it may help reduce VRAM consumption.
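To illustrate what offloading after use generally means, here is a minimal sketch. It is not Flare's actual implementation: the `loaded_on` helper is a hypothetical name, and it only assumes that the model object exposes a PyTorch-style `.to(device)` method. The idea is to keep a model on the GPU only while it is working and move it back to system RAM afterwards, trading some speed for lower peak VRAM.

```python
from contextlib import contextmanager

try:
    import torch  # optional: only used to release cached GPU memory
except ImportError:
    torch = None


@contextmanager
def loaded_on(model, device="cuda", offload_device="cpu"):
    """Hypothetical helper: keep `model` on `device` only inside the block."""
    model.to(device)
    try:
        yield model
    finally:
        # Move the weights back to system RAM so the next model can use the VRAM.
        model.to(offload_device)
        if torch is not None and torch.cuda.is_available():
            # Return cached, now-unused GPU memory blocks to the driver.
            torch.cuda.empty_cache()
```

With a real pipeline this would be used as `with loaded_on(pipe) as p: image = p(prompt)`, where `pipe` is whatever model object the stage needs. Moving weights back and forth costs time on every call, which is presumably why such behavior is an opt-in setting.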

❓ Can prompt comprehension be improved?

👉 Currently, Flare utilizes in-context learning for the vanilla Phi-2 model. While this model is quite capable, its capacity for providing concise instruction interpretation is limited. However, I am confident that additional fine-tuning with a custom instruction dataset will allow Flare to achieve a level of comprehension comparable to DALL-E 3. This is already part of my roadmap.
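As a rough illustration of in-context learning for instruction interpretation, the sketch below builds a few-shot prompt and parses the model's reply. Everything in it is an assumption made for the example: the prompt wording, the JSON command format, the action names and the `build_prompt` / `parse_command` helpers are hypothetical and are not taken from Flare's code.

```python
import json

# Hypothetical few-shot examples: user instruction -> structured edit command.
FEW_SHOT = [
    ("draw a red fox in a snowy forest",
     {"action": "generate", "prompt": "a red fox in a snowy forest"}),
    ("remove the car",
     {"action": "remove", "target": "car"}),
    ("replace the dog with a cat",
     {"action": "inpaint", "target": "dog", "prompt": "a cat"}),
]

KNOWN_ACTIONS = {"generate", "remove", "inpaint"}


def build_prompt(instruction: str) -> str:
    """Prepend solved examples so a base model can continue the pattern."""
    lines = ["Convert each instruction into a JSON command.", ""]
    for text, command in FEW_SHOT:
        lines.append(f"Instruction: {text}")
        lines.append(f"Command: {json.dumps(command)}")
        lines.append("")
    lines.append(f"Instruction: {instruction}")
    lines.append("Command:")
    return "\n".join(lines)


def parse_command(completion: str) -> dict:
    """Parse the first line of the model's completion as a JSON command."""
    text = completion.strip()
    if not text:
        raise ValueError("empty completion")
    # Base models tend to keep generating further examples; keep line one only.
    command = json.loads(text.splitlines()[0])
    if command.get("action") not in KNOWN_ACTIONS:
        raise ValueError(f"unknown action: {command.get('action')!r}")
    return command
```

The string returned by `build_prompt` would be sent to the language model, and its completion passed to `parse_command`. A fine-tuned model would, in principle, need fewer or no examples in the prompt, which is the improvement the answer above is aiming for.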

❓ Why not use 7B/8B models like Mistral/Llama etc.?

👉 I am considering this, but it might increase system requirements even more, especially since I am planning to use Stable Diffusion 3 as the primary image generator (upd. 18.06.2024: maybe I will stick to PixArt Sigma instead). And I think small models like Phi and Gemma should not be underestimated.

❓ Why not use vision models like LLaVA?

👉 While it's entirely feasible, I found it unnecessary for the prototype. I might explore this option later on. Because of Flare's fully modular design, experimenting with different pipelines would be effortless.

❓ Looks like a reinvention of TaskMatrix or InstructPix2Pix?

👉 Probably, but Flare's primary focus is on text-guided drawing that relies on open-source language models instead of ChatGPT and does not use a dedicated instruction-trained image editing model. Additionally, one of the long-term objectives is to empower users to expand its functionality through plugins written in natural language.

❓ Isn't it the same concept as DiffusionGPT?

👉 Likely, but I initiated Flare's development before discovering this project. Honestly, the idea of multistage processing itself is basic, and numerous comparable applications are likely to emerge soon, particularly with the SD3 release and projects like ELLA and EMILIE gaining traction.
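For readers unfamiliar with the multistage idea, the sketch below chains three stages for text-guided object removal: locate the object from text, turn the location into a mask, then fill the masked area. The stage split is an assumption based only on the libraries this README lists, not a description of Flare's internals, and the `RemovalPipeline` class and the stub functions are hypothetical stand-ins that operate on a toy grayscale image rather than real models.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom), right/bottom exclusive
Mask = List[List[bool]]
Image = List[List[int]]          # toy grayscale image, one int per pixel


@dataclass
class RemovalPipeline:
    """Hypothetical three-stage pipeline; each stage is a swappable callable."""
    detect: Callable[[Image, str], Box]     # text -> bounding box
    segment: Callable[[Image, Box], Mask]   # bounding box -> pixel mask
    inpaint: Callable[[Image, Mask], Image] # mask -> image with region filled

    def run(self, image: Image, target: str) -> Image:
        box = self.detect(image, target)
        mask = self.segment(image, box)
        return self.inpaint(image, mask)


def detect_stub(image: Image, target: str) -> Box:
    # Stand-in for a text-conditioned detector: always "finds" the top-left 2x2.
    return (0, 0, 2, 2)


def segment_stub(image: Image, box: Box) -> Mask:
    # Stand-in for a segmentation model: marks every pixel inside the box.
    left, top, right, bottom = box
    return [[top <= y < bottom and left <= x < right for x in range(len(row))]
            for y, row in enumerate(image)]


def inpaint_stub(image: Image, mask: Mask) -> Image:
    # Stand-in for an inpainting model: fills masked pixels with 0.
    return [[0 if m else px for px, m in zip(row, mask_row)]
            for row, mask_row in zip(image, mask)]
```

Calling `RemovalPipeline(detect_stub, segment_stub, inpaint_stub).run(image, "car")` runs the stages in order. Because each stage is just a callable, any of them can be replaced without touching the others.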

❓ Now that Omost exists, does it make sense to continue developing Flare?

👉 Yes and no. Currently Omost, too, is far from the concept I had in mind when I started Flare, but who knows? Another option worth reviewing is to integrate Omost into Flare as a backend. I haven't decided yet.

🔥 Transformers

🔥 Guidance

🔥 Diffusers

🔥 Segment Anything

🔥 Grounding DINO

🔥 LaMa

⚡ PixArt-Σ

⚡ Stable Diffusion XL

⚡ Stable Diffusion 3 Medium

⚡ Phi-2

💧 FastAPI

💧 React

💧 MantineUI

For now, it doesn't work on the free tier of Colab 😓